Abstract

Computer scientists are well-versed in dealing with data structures. The same cannot be said about their dual: codata. Even though codata is pervasive in category theory, universal algebra, and logic, the use of codata for programming has been mainly relegated to representing infinite objects and processes. Our goal is to demonstrate the benefits of codata as a general-purpose programming abstraction independent of any specific language: eager or lazy, statically or dynamically typed, and functional or object-oriented. While codata is not featured in many programming languages today, we show how codata can be easily adopted and implemented by offering simple inter-compilation techniques between data and codata. We believe codata is a common ground between the functional and object-oriented paradigms; ultimately, we hope to utilize the Curry-Howard isomorphism to further bridge the gap.

P. Downen and Z. M. Ariola—This work is supported by the National Science Foundation under grants CCF-1423617 and CCF-1719158.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Functional programming enjoys a beautiful connection to logic, known as the Curry-Howard correspondence, or proofs as programs principle [22]; results and notions about a language are translated to those about proofs, and vice-versa [17]. In addition to expressing computation as proof transformations, this connection is also fruitful for education: everybody would understand that the assumption “an x is zero” does not mean “every x is zero,” which in turn explains the subtle typing rules for polymorphism in programs. The typing rules for modules are even more cryptic, but knowing that they correspond exactly to the rules for existential quantification certainly gives us more confidence that they are correct! While not everything useful must have a Curry-Howard correspondence, we believe finding these delightful coincidences where the same idea is rediscovered many times in both logic and programming can only be beneficial [42].

One such instance involves codata. In contrast with the mystique it has as a programming construct, codata is pervasive in mathematics and logic, where it arises through the lens of duality. The most visual way to view the duality is in the categorical diagrams of sums versus products—the defining arrows go into a sum and come out of a product—and in algebras versus coalgebras [25]. In proof theory, codata has had an impact on theorem proving [5] and on the foundation of computation via polarity [29, 45]. Polarity recognizes which of two dialogic actors speaks first: the proponent (who seeks to verify or prove a fact) or the opponent (who seeks to refute the fact).

The two-sided, interactive view appears all over the study of programming languages, where data is concerned about how values are constructed and codata is concerned about how they are used [15]. Sometimes, this perspective is readily apparent, like with session types [7] which distinguish internal choice (a provider’s decision) versus external choice (a client’s decision). But other occurrences are more obscure, like in the semantics of PCF (i.e. the call-by-name \(\lambda \)-calculus with numbers and general recursion). In PCF, the result of evaluating a program must be of a ground type in order to respect the laws of functions (namely \(\eta \)) [32]. This is not due to differences between ground types versus “higher types,” but to the fact that data types are directly observable, whereas codata types are only indirectly observable via their interface.

Clearly codata has merit in theoretical pursuits; we think it has merit in practical ones as well. The main application of codata so far has been for representing infinite objects and coinductive proofs in proof assistants [1, 39]. However, we believe that codata also makes for an important general-purpose programming feature. Codata is a bridge between the functional and object-oriented paradigms; a common denominator between the two very different approaches to programming. On one hand, functional languages are typically rich in data types—as many as the programmer wants to define via

declarations—but has a paucity of codata types (usually just function types). On the other hand, object-oriented languages are rich in codata types—programmer-defined in terms of classes or interfaces—but a paucity of data types (usually just primitives like booleans and numbers). We illustrate this point with a collection of example applications that arise in both styles of programming, including common encodings, demand-driven programming, abstraction, and Hoare-style reasoning.

declarations—but has a paucity of codata types (usually just function types). On the other hand, object-oriented languages are rich in codata types—programmer-defined in terms of classes or interfaces—but a paucity of data types (usually just primitives like booleans and numbers). We illustrate this point with a collection of example applications that arise in both styles of programming, including common encodings, demand-driven programming, abstraction, and Hoare-style reasoning.

While codata types can be seen in the shadows behind many examples of programming—often hand-compiled away by the programmer—not many functional languages have native support for them. To this end, we demonstrate a pair of simple compilation techniques between a typical core functional language (with data types) and one with codata. One direction—based on the well-known visitor pattern from object-oriented programming—simultaneously shows how to extend an object-oriented language with data types (as is done by Scala) and how to compile core functional programs to a more object-oriented setting (e.g. targeting a backend like JavaScript or the JVM). The other shows how to add native codata types to functional languages by reducing them to commonly-supported data types and how to compile a “pure” object-oriented style of programming to a functional setting. Both of these techniques are macro-expansions that are not specific to any particular language, as they work with both statically and dynamically typed disciplines, and they preserve the well-typed status of programs without increasing the complexity of the types involved.

Our claim is that codata is a universal programming feature that has been thus-far missing or diminished in today’s functional programming languages. This is too bad, since codata is not just a feature invented for the convenience of programmers, but a persistent idea that has sprung up over and over from the study of mathematics, logic, and computation. We aim to demystify codata, and en route, bridge the wide gulf between the functional and object-oriented paradigms. Fortunately, it is easy for most mainstream languages to add or bring out codata today without a radical change to their implementation. But ultimately, we believe that the languages of the future should incorporate both data and codata outright. To that end, our contributions are to:

-

(Section 2) Illustrate the benefits of codata in both theory and practice: (1) a decomposition of well-known \(\lambda \)-calculus encodings by inverting the priority of construction and destruction; (2) a first-class abstraction mechanism; (3) a method of demand-driven programming; and (4) a static type system for representing Hoare-style invariants on resource use.

-

(Section 3) Provide simple transformations for compiling data to codata, and vice-versa, which are appropriate for languages with different evaluation strategies (eager or lazy) and type discipline (static or dynamic).

-

(Section 4) Demonstrate various implementations of codata for general-purpose programming in two ways: (1) an extension of Haskell with codata; and (2) a prototype language that compiles to several languages of different evaluation strategies, type disciplines, and paradigms.

2 The Many Faces of Codata

Codata can be used to solve other problems in programming besides representing infinite objects and processes like streams and servers [1, 39]. We start by presenting codata as a merger between theory and practice, whereby encodings of data types in an object-oriented style turn out to be a useful intermediate step in the usual encodings of data in the \(\lambda \)-calculus. Demand-driven programming is considered a virtue of lazy languages, but codata is a language-independent tool for capturing this programming idiom. Codata exactly captures the essence of procedural abstraction, as achieved with \(\lambda \)-abstractions and objects, with a logically founded formalism [16]. Specifying pre- and post-conditions of protocols, which is available in some object systems [14], is straightforward with indexed, recursive codata types, i.e. objects with guarded methods [40].

2.1 Church Encodings and Object-Oriented Programming

Crucial information structures, like booleans, numbers, and lists can be encoded in the untyped \(\lambda \)-calculus (a.k.a. Church encodings) or in the typed polymorphic \(\lambda \)-calculus (a.k.a. Böhm-Berarducci [9] encodings). It is quite remarkable that data structures can be simulated with just first-class, higher-order functions. The downside is that these encodings can be obtuse at first blush, and have the effect of obscuring the original program when everything is written with just \(\lambda \)s and application. For example, the \(\lambda \)-representation of the boolean value  , the first projection out of a pair, and the constant function K are all expressed as \(\lambda x{.}\lambda y{.}x\), which is not that immediately evocative of its multi-purpose nature.

, the first projection out of a pair, and the constant function K are all expressed as \(\lambda x{.}\lambda y{.}x\), which is not that immediately evocative of its multi-purpose nature.

Object-oriented programmers have also been representing data structures in terms of objects. This is especially visible in the Smalltalk lineage of languages like Scala, wherein an objective is that everything that can be an object is. As it turns out, the object-oriented features needed to perform this representation technique are exactly those of codata. That is because Church-style encodings and object-oriented representations of data all involve switching focus from the way values are built (i.e. introduced) to the way they are used (i.e. eliminated).

Consider the representation of Boolean values as an algebraic data type. There may be many ways to use a

value. However, it turns out that there is a most-general eliminator of

value. However, it turns out that there is a most-general eliminator of

the expression

the expression

. This basic construct can be used to define all the other uses for

. This basic construct can be used to define all the other uses for

. Instead of focusing on the constructors

. Instead of focusing on the constructors

and

and

let’s then focus on this most-general form of

let’s then focus on this most-general form of

elimination; this is the essence of the encodings of booleans in terms of objects. In other words, booleans can be thought of as objects that implement a single method:

elimination; this is the essence of the encodings of booleans in terms of objects. In other words, booleans can be thought of as objects that implement a single method:

. So that the expression

. So that the expression

would instead be written as (

would instead be written as (

). We then define the true and false values in terms of their reaction to

). We then define the true and false values in terms of their reaction to

:

:

Or alternatively, we can write the same definition using copatterns, popularized for use in the functional paradigm by Abel et al. [1] by generalizing the usual pattern-based definition of functions by multiple clauses, as:

This works just like equational definitions by pattern-matching in functional languages: the expression to the left of the equals sign is the same as the expression to the right (for any binding of

and

and

). Either way, the net result is that (

). Either way, the net result is that (

) is

) is

, whereas (

, whereas (

) is

) is

.

.

This covers the object-based presentation of booleans in a dynamically typed language, but how do static types come into play? In order to give a type description of the above boolean objects, we can use the following interface, analogous to a Java interface:

This declaration is dual to a data declaration in a functional language: data declarations define the types of constructors (which produce values of the data type) and codata declarations define the types of destructors (which consume values of the codata type) like

. The reason that the

. The reason that the

observation introduces its own polymorphic type

observation introduces its own polymorphic type

is because an if-then-else might return any type of result (as long as both branches agree on the type). That way, both the two objects

is because an if-then-else might return any type of result (as long as both branches agree on the type). That way, both the two objects

and

and

above are values of the codata type

above are values of the codata type

.

.

At this point, the representation of booleans as codata looks remarkably close to the encodings of booleans in the \(\lambda \)-calculus! Indeed, the only difference is that in the \(\lambda \)-calculus we “anonymize” booleans. Since they reply to only one request, that request name can be dropped. We then arrive at the familiar encodings in the polymorphic \(\lambda \)-calculus:

In addition, the invocation of the

method just becomes ordinary function application;

method just becomes ordinary function application;

of type a is written as b a x y. Otherwise, the definition and behavior of booleans as either codata types or as polymorphic functions are the same.

of type a is written as b a x y. Otherwise, the definition and behavior of booleans as either codata types or as polymorphic functions are the same.

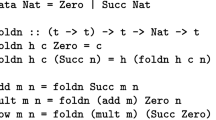

This style of inverting the definition of data types—either into specific codata types or into polymorphic functions—is also related to another concept in object-oriented programming. First, consider how a functional programmer would represent a binary

(with integer-labeled leaves) and a

(with integer-labeled leaves) and a

function that traverses a tree by converting the labels on all leaves and combining the results of sub-trees:

function that traverses a tree by converting the labels on all leaves and combining the results of sub-trees:

The above code relies on pattern-matching on values of the

data type and higher-order functions

data type and higher-order functions

and

and

for accumulating the result. Now, how might an object-oriented programmer tackle the problem of traversing a tree-like structure? The visitor pattern! With this pattern, the programmer specifies a “visitor” object which contains knowledge of what to do at every node of the tree, and tree objects must be able to accept a visitor with a method that will recursively walk down each subcomponent of the tree. In a pure style—which returns an accumulated result directly instead of using mutable state as a side channel for results—the visitor pattern for a simple binary tree interface will look like:

for accumulating the result. Now, how might an object-oriented programmer tackle the problem of traversing a tree-like structure? The visitor pattern! With this pattern, the programmer specifies a “visitor” object which contains knowledge of what to do at every node of the tree, and tree objects must be able to accept a visitor with a method that will recursively walk down each subcomponent of the tree. In a pure style—which returns an accumulated result directly instead of using mutable state as a side channel for results—the visitor pattern for a simple binary tree interface will look like:

And again, we can write this same code more elegantly, without the need to break apart the two arguments across the equal sign with a manual abstraction, using copatterns as:

Notice how the above code is just an object-oriented presentation of the following encoding of binary trees into the polymorphic \(\lambda \)-calculus:

The only essential difference between this \(\lambda \)-encoding of trees versus the \(\lambda \)-encoding of booleans above is currying: the representation of the data type \( Tree \) takes a single product \( TreeVisitor ~a\) of the necessary arguments, whereas the data type \( Bool \) takes the two necessary arguments separately. Besides this easily-converted difference of currying, the usual Böhm-Berarducci encodings shown here correspond to a pure version of the visitor pattern.

2.2 Demand-Driven Programming

In “Why functional programming matters” [23], Hughes motivates the utility of practical functional programming through its excellence in compositionality. When designing programs, one of the goals is to decompose a large problem into several manageable sub-problems, solve each sub-problem in isolation, and then compose the individual parts together into a complete solution. Unfortunately, Hughes identifies some examples of programs which resist this kind of approach.

In particular, numeric algorithms—for computing square roots, derivatives integrals—rely on an infinite sequence of approximations which converge on the true answer only in the limit of the sequence. For these numeric algorithms, the decision on when a particular approximation in the sequence is “close enough” to the real answer lies solely in the eyes of the beholder: only the observer of the answer can say when to stop improving the approximation. As such, standard imperative implementations of these numeric algorithms are expressed as a single, complex loop, which interleaves both the concerns of producing better approximations with the termination decision on when to stop. Even more complex is the branching structure of the classic minimax algorithm from artificial intelligence for searching for reasonable moves in two-player games like chess, which can have an unreasonably large (if not infinite) search space. Here, too, there is difficulty separating generation from selection, and worse there is the intermediate step of pruning out uninteresting sub-trees of the search space (known as alpha-beta pruning). As a result, a standard imperative implementation of minimax is a single, recursive function that combines all the tasks—generation, pruning, estimation, and selection—at once.

Hughes shows how both instances of failed decomposition can be addressed in functional languages through the technique of demand-driven programming. In each case, the main obstacle is that the control of how to drive the next step of the algorithm—whether to continue or not—lies with the consumer. The producer of potential approximations and game states, in contrast, should only take over when demanded by the consumer. By giving primary control to the consumer, each of these problems can be decomposed into sensible sub-tasks, and recomposed back together. Hughes uses lazy evaluation, as found in languages like Miranda and Haskell, in order to implement the demand-driven algorithms. However, the downside of relying on lazy evaluation is that it is a whole-language decision: a language is either lazy by default, like Haskell, or not, like OCaml. When working in a strict language, expressing these demand-driven algorithms with manual laziness loses much of their original elegance [33].

In contrast, a language should directly support the capability of yielding control to the consumer independently of the language being strict or lazy; analogously to what happens with lambda abstractions. An abstraction computes on-demand, why is this property relegated to this predefined type only? In fact, the concept of codata also has this property. As such, it allows us to describe demand-driven programs in an agnostic way which works just as well in Haskell as in OCaml without any additional modification. For example, we can implement Hughes’ demand-driven AI game in terms of codata instead of laziness. To represent the current game state, and all of its potential developments, we can use an arbitrarily-branching tree codata type.

The task of generating all potential future boards from the current board state produces one of these tree objects, described as follows (where

of type

of type

generates a list of possible moves):

generates a list of possible moves):

Notice that the tree might be finite, such as in the game of Tic-Tac-Toe. However, it would still be inappropriate to waste resources fully generating all moves before determining which are even worth considering. Fortunately, the fact that the responses of a codata object are only computed when demanded means that the consumer is in full control over how much of the tree is generated, just as in Hughes’ algorithm. This fact lets us write the following simplistic

function which cuts off sub-trees at a fixed depth.

function which cuts off sub-trees at a fixed depth.

The more complex alpha-beta pruning algorithm can be written as its own pass, similar to

above. Just like Hughes’ original presentation, the evaluation of the best move for the opponent is the composition of a few smaller functions:

above. Just like Hughes’ original presentation, the evaluation of the best move for the opponent is the composition of a few smaller functions:

What is the difference between this codata version of minimax and the one presented by Hughes that makes use of laziness? They both compute on-demand which makes the game efficient. However, demand-driven code written with codata can be easily ported between strict and lazy languages with only syntactic changes. In other words, codata is a general, portable, programming feature which is the key for compositionality in program design.Footnote 1

2.3 Abstraction Mechanism

In the pursuit of scalable and maintainable program design, the typical followup to composability is abstraction. The basic purpose of abstraction is to hide certain implementation details so that different parts of the code base need not be concerned with them. For example, a large program will usually be organized into several different parts or “modules,” some of which may hold general-purpose “library” code and others may be application-specific “clients” of those libraries. Successful abstractions will leverage tools of the programming language in question so that there is a clear interface between libraries and their clients, codifying which details are exposed to the client and which are kept hidden inside the library. A common such detail to hide is the concrete representation of some data type, like strings and collections. Clear abstraction barriers give freedom to both the library implementor (to change hidden details without disrupting any clients) as well as the client (to ignore details not exposed by the interface).

Reynolds [35] identified, and Cook [12] later elaborated on, two different mechanisms to achieve this abstraction: abstract data types and procedural abstraction. Abstract data types are crisply expressed by the Standard ML module system, based on existential types, which serves as a concrete practical touchstone for the notion. Procedural abstraction is pervasively used in object-oriented languages. However, due to the inherent differences among the many languages and the way they express procedural abstraction, it may not be completely clear of what the “essence” is, the way existential types are the essence of modules. What is the language-agnostic representation of procedural abstraction? Codata! The combination of observation-based interfaces, message-passing, and dynamic dispatch are exactly the tools needed for procedural abstraction. Other common object-oriented features—like inheritance, subtyping, encapsulation, and mutable state—are orthogonal to this particular abstraction goal. While they may be useful extensions to codata for accomplishing programming tasks, only pure codata itself is needed to represent abstraction.

Specifying a codata type is giving an interface—between an implementation and a client—so that instances of the type (implementations) can respond to requests (clients). In fact, method calls are the only way to interact with our objects. As usual, there is no way to “open up” a higher-order function—one example of a codata type—and inspect the way it was implemented. The same intuition applies to all other codata types. For example, Cook’s [12] procedural “set” interface can be expressed as a codata type with the following observations:

Every single object of type

will respond to these observations, which is the only way to interact with it. This abstraction barrier gives us the freedom of defining several different instances of

will respond to these observations, which is the only way to interact with it. This abstraction barrier gives us the freedom of defining several different instances of

objects that can all be freely composed with one another. One such instance of

objects that can all be freely composed with one another. One such instance of

uses a list to keep track of a hidden state of the contained elements (where

uses a list to keep track of a hidden state of the contained elements (where

checks if a particular number is an element of the given list, and the operation

checks if a particular number is an element of the given list, and the operation

is the standard functional fold):

is the standard functional fold):

But of course, many other instances of

can also be given. For example, this codata type interface also makes it possible to represent infinite sets like the set

can also be given. For example, this codata type interface also makes it possible to represent infinite sets like the set

of all even numbers which is defined in terms of the more general

of all even numbers which is defined in terms of the more general

that unions all even numbers with some other set (where the function

that unions all even numbers with some other set (where the function

checks if a number is even):

checks if a number is even):

Because of the natural abstraction mechanism provided by codata, different

implementations can interact with each other. For example, we can union a finite set and

implementations can interact with each other. For example, we can union a finite set and

together because both definitions of

together because both definitions of

know nothing of the internal structure of the other

know nothing of the internal structure of the other

. Therefore, all we can do is apply the observations provided by the

. Therefore, all we can do is apply the observations provided by the

codata type.

codata type.

While sets of numbers are fairly simplistic, there are many more practical real-world instances of the procedural abstraction provided by codata to be found in object-oriented languages. For example, databases are a good use of abstraction, where basic database queries can be represented as the observations on table objects. A simplified interface to a database table (containing rows of type

) with selection, deletion, and insertion, is given as follows:

) with selection, deletion, and insertion, is given as follows:

On one hand, specific implementations can be given for connecting to and communicating with a variety of different databases—like Postgres, MySQL, or just a simple file system—which are hidden behind this interface. On the other hand, clients can write generic operations independently of any specific database, such as copying rows from one table to another or inserting a row into a list of compatible tables:

In addition to abstracting away the details of specific databases, both

and

and

can communicate between completely different databases by just passing in the appropriate object instances, which all have the same generic type. Another use of this generality is for testing. Besides the normal instances of

can communicate between completely different databases by just passing in the appropriate object instances, which all have the same generic type. Another use of this generality is for testing. Besides the normal instances of

which perform permanent operations on actual tables, one can also implement a fictitious simulation which records changes only in temporary memory. That way, client code can be seamlessly tested by running and checking the results of simulated database operations that have no external side effects by just passing pure codata objects.

which perform permanent operations on actual tables, one can also implement a fictitious simulation which records changes only in temporary memory. That way, client code can be seamlessly tested by running and checking the results of simulated database operations that have no external side effects by just passing pure codata objects.

2.4 Representing Pre- and Post-Conditions

The extension of data types with indexes (a.k.a. generalized algebraic data types) has proven useful to statically verify a data structure’s invariant, like for red-black trees [43]. With indexed data types, the programmer can inform the static type system that a particular value of a data type satisfies some additional conditions by constraining the way in which it was constructed. Unsurprisingly, indexed codata types are dual and allow the creator of an object to constrain the way it is going to be used, thereby adding pre- and post-conditions to the observations of the object. In other words, in a language with type indexes, codata enables the programmer to express more information in its interface.

This additional expressiveness simplifies applications that rely on a type index to guard observations. Thibodeau et al. [40] give examples of such programs, including an automaton specification where its transitions correspond to an observation that changes a pre- and post-condition in its index, and a fair resource scheduler where the observation of several resources is controlled by an index tracking the number of times they have been accessed. For concreteness, let’s use an indexed codata type to specify safe protocols as in the following example from an object-oriented language with guarded methods:

This example comes from DeLine and Fähndrich [14], where they present an extension to C\(^\sharp \) constraining the pre- and post-conditions for method calls. If we have an instance of this

interface, then observing it through the above methods can return new socket objects with a different index. The index thereby governs the order in which clients are allowed to apply these methods. A socket will start with the index

interface, then observing it through the above methods can return new socket objects with a different index. The index thereby governs the order in which clients are allowed to apply these methods. A socket will start with the index

. The only way to use a

. The only way to use a

is to

is to

it, and the only way to use a

it, and the only way to use a

is to

is to

it. This forces us to follow a protocol when initializing a

it. This forces us to follow a protocol when initializing a

.

.

Intermezzo 1

This declaration puts one aspect in the hands of the programmer, though. A client can open a socket and never close it, hogging the resource. We can remedy this problem with linear types, which force us to address any loose ends before finishing the program. With linear types, it would be a type error to have a lingering

socket laying around at the end of the program, and a call to

socket laying around at the end of the program, and a call to

would use it up. Furthermore, linear types would ensure that outdated copies of

would use it up. Furthermore, linear types would ensure that outdated copies of

objects cannot be used again, which is especially appropriate for actions like

objects cannot be used again, which is especially appropriate for actions like

which is meant to transform a

which is meant to transform a

socket into a

socket into a

one, and likewise for

one, and likewise for

which transforms a

which transforms a

socket into a

socket into a

one. Even better, enhancing linear types with a more sophisticated notion of ownership—like in the Rust programming language which differentiates a permanent transfer of ownership from temporarily borrowing it—makes this resource-sensitive interface especially pleasant. Observations like

one. Even better, enhancing linear types with a more sophisticated notion of ownership—like in the Rust programming language which differentiates a permanent transfer of ownership from temporarily borrowing it—makes this resource-sensitive interface especially pleasant. Observations like

,

,

, and

, and

which are meant to fully consume the observed object would involve full ownership of the object itself to the method call and effectively replace the old object with the returned one. In contrast, observations like

which are meant to fully consume the observed object would involve full ownership of the object itself to the method call and effectively replace the old object with the returned one. In contrast, observations like

and

and

which are meant to be repeated on the same object would merely borrow the object for the duration of the action so that it could be used again.

which are meant to be repeated on the same object would merely borrow the object for the duration of the action so that it could be used again.

3 Inter-compilation of Core Calculi

We saw previously examples of using codata types to replicate well-known encodings of data types into the \(\lambda \)-calculus. Now, let’s dive in and show how data and codata types formally relate to one another. In order to demonstrate the relationship, we will consider two small languages that extend the common polymorphic \(\lambda \)-calculus:

extends \(\lambda \) with user-defined algebraic data types, and

extends \(\lambda \) with user-defined algebraic data types, and  extends \(\lambda \) with user-defined codata types. In the end, we will find that both of these foundational languages can be inter-compiled into one another. Data can be represented by codata via the visitor pattern (

extends \(\lambda \) with user-defined codata types. In the end, we will find that both of these foundational languages can be inter-compiled into one another. Data can be represented by codata via the visitor pattern ( ). Codata can be represented by data by tabulating the possible answers of objects (

). Codata can be represented by data by tabulating the possible answers of objects (

).

).

In essence, this demonstrates how to compile programs between the functional and object-oriented paradigms. The

direction shows how to extend existing functional languages (like OCaml, Haskell, or Racket) with codata objects without changing their underlying representation. Dually, the

direction shows how to extend existing functional languages (like OCaml, Haskell, or Racket) with codata objects without changing their underlying representation. Dually, the

direction shows how to compile functional programs with data types into an object-oriented target language (like JavaScript).

direction shows how to compile functional programs with data types into an object-oriented target language (like JavaScript).

Each of the encodings are macro expansions, in the sense that they leave the underlying base \(\lambda \)-calculus constructs of functions, applications, and variables unchanged (as opposed to, for example, continuation-passing style translations). They are defined to operate on untyped terms, but they also preserve typability when given well-typed terms. The naïve encodings preserve the operational semantics of the original term, according to a call-by-name semantics. We also illustrate how the encodings can be modified slightly to correctly simulate the call-by-value operational semantics of the source program. To conclude, we show how the languages and encodings can be generalized to more expressive type systems, which include features like existential types and indexed types (a.k.a. generalized algebraic data types and guarded methods).

Notation. We use both an overline \(\overline{t}\) and dots \(t_1 \dots \) to indicate a sequence of terms t (and likewise for types, variables, etc.). The arrow type  means

means  ; when n is 0, it is not a function type, i.e. just the codomain

; when n is 0, it is not a function type, i.e. just the codomain

. The application

. The application  means

means  ; when n is 0, it is not a function application, but the constant

; when n is 0, it is not a function application, but the constant  . We write a single step of an operational semantics with the arrow \(\mapsto \), and many steps (i.e. its reflexive-transitive closure) as

. We write a single step of an operational semantics with the arrow \(\mapsto \), and many steps (i.e. its reflexive-transitive closure) as  . Operational steps may occur within an evaluation context E, i.e. \(t \mapsto t'\) implies that \(E[t] \mapsto E[t']\).

. Operational steps may occur within an evaluation context E, i.e. \(t \mapsto t'\) implies that \(E[t] \mapsto E[t']\).

3.1 Syntax and Semantics

We present the syntax and semantics of the base language and the two extensions  and

and  . For the sake of simplicity, we keep the languages as minimal as possible to illustrate the main inter-compilations. Therefore,

. For the sake of simplicity, we keep the languages as minimal as possible to illustrate the main inter-compilations. Therefore,  and

and  do not contain recursion, nested (co)patterns, or indexed types. The extension with recursion is standard, and an explanation of compiling (co)patterns can be found in [11, 38, 39]. Indexed types are later discussed informally in Sect. 3.6.

do not contain recursion, nested (co)patterns, or indexed types. The extension with recursion is standard, and an explanation of compiling (co)patterns can be found in [11, 38, 39]. Indexed types are later discussed informally in Sect. 3.6.

The Base Language. We will base both our core languages of interest on a common starting point: the polymorphic \(\lambda \)-calculus as shown in Fig. 1.Footnote 2 This is the standard simply typed \(\lambda \)-calculus extended with impredicative polymorphism (a.k.a. generics). There are only three forms of terms (variables x, applications t u, and function abstractions \(\lambda x{.}e\)) and three forms of types (type variables a, function types \(\tau \rightarrow \rho \), and polymorphic types \(\forall a{.}\tau \)). We keep the type abstraction and instantiation implicit in programs—as opposed to explicit as in System F—for two reasons. First, this more accurately resembles the functional languages in which types are inferred, as opposed to mandatory annotations explicit within the syntax of programs. Second, it more clearly shows how the translations that follow do not rely on first knowing the type of terms, but apply to any untyped term. In other words, the compilation techniques are also appropriate for dynamically typed languages like Scheme and Racket.

Figure 1 reviews both the standard call-by-name and call-by-value operational semantics for the \(\lambda \)-calculus. As usual, the difference between the two is that in call-by-value, the argument of a function call is evaluated prior to substitution, whereas in call-by-name the argument is substituted first. This is implied by the different set of evaluation contexts (E) and the fact that the operational rule uses a more restricted notion of value (V) for substitutable arguments in call-by-value. Note that, there is an interplay between evaluation and typing. In a more general setting where effects are allowed, the typing rule for introducing polymorphism (i.e. the rule with \(S : \forall a{.}\tau \) in the conclusion) is only safe for substitutable terms, which imposes the well-known the value restriction for call-by-value (limiting S to values), but requires no such restriction in call-by-name where every term is a substitutable value (letting S be any term).

A Language with Data. The first extension of the \(\lambda \)-calculus is with user-defined data types, as shown in Fig. 2; it corresponds to a standard core language for statically typed functional languages. Data declarations introduce a new type constructor ( ) as well as some number of associated constructors (

) as well as some number of associated constructors ( ) that build values of that data type. For simplicity, the list of branches in a case expression are considered unordered and non-overlapping (i.e. no two branches for the same constructor within a single case expression). The types of constructors are given alongside free variables in \(\varGamma \), and the typing rule for constructors requires they be fully applied. We also assume an additional side condition to the typing rule for case expressions that the branches are exhaustive (i.e. every constructor of the data type in question is covered as a premise).

) that build values of that data type. For simplicity, the list of branches in a case expression are considered unordered and non-overlapping (i.e. no two branches for the same constructor within a single case expression). The types of constructors are given alongside free variables in \(\varGamma \), and the typing rule for constructors requires they be fully applied. We also assume an additional side condition to the typing rule for case expressions that the branches are exhaustive (i.e. every constructor of the data type in question is covered as a premise).

Figure 2 presents the extension to the operational semantics from Fig. 1, which is also standard. The new evaluation rule for data types reduces a case expression matched with an applied constructor. Note that since the branches are unordered, the one matching the constructor is chosen out of the possibilities and the parameters of the constructor are substituted in the branch’s pattern. There is also an additional form of constructed values: in call-by-name any constructor application is a value, whereas in call-by-value only constructors parameterized by other values is a value. As such, call-by-value goes on to evaluate constructor parameters in advance, as shown by the extra evaluation context. In both evaluation strategies, there is a new form of evaluation context that points out the discriminant of a case expression, since it is mandatory to determine which constructor was used before deciding the appropriate branch to take.

A Language with Codata. The second extension of the \(\lambda \)-calculus is with user-defined codata types, as shown in Fig. 3. Codata declarations in

define a new type constructor (

define a new type constructor ( ) along with some number of associated destructors (

) along with some number of associated destructors ( ) for projecting responses out of values of a codata type. The type level of

) for projecting responses out of values of a codata type. The type level of  corresponds directly to

corresponds directly to  . However, at the term level, we have codata observations of the form

. However, at the term level, we have codata observations of the form  using “dot notation”, which can be thought of as sending the message

using “dot notation”, which can be thought of as sending the message  to the object t or as a method invocation from object-oriented languages. Values of codata types are introduced in the form

to the object t or as a method invocation from object-oriented languages. Values of codata types are introduced in the form

, which lists each response this value gives to all the possible destructors of the type. As with case expressions, we take the branches to be unordered and non-overlapping for simplicity.

, which lists each response this value gives to all the possible destructors of the type. As with case expressions, we take the branches to be unordered and non-overlapping for simplicity.

Interestingly, the extension of the operational semantics with codata—the values, evaluation contexts, and reduction rules—are identical for both call-by-name and call-by-value evaluation. In either evaluation strategy, a codata object  is considered a value and the codata observation

is considered a value and the codata observation  must evaluate t no matter what to continue, leading to the same form of evaluation context

must evaluate t no matter what to continue, leading to the same form of evaluation context  . The additional evaluation rule selects and invokes the matching branch of a codata object and is the same regardless of the evaluation strategy.

. The additional evaluation rule selects and invokes the matching branch of a codata object and is the same regardless of the evaluation strategy.

Note that the reason that values of codata types are the same in any evaluation strategy is due to the fact that the branches of the object are only ever evaluated on-demand, i.e. when they are observed by a destructor, similar to the fact that the body of a function is only ever evaluated when the function is called. This is the semantic difference that separates codata types from records found in many programming languages. Records typically map a collection of labels to a collection of values, which are evaluated in advance in a call-by-value language similar to the constructed values of data types. Whereas with codata objects, labels map to behavior which is only invoked when observed.

The additional typing rules for  are also given in Fig. 3. The rule for typing

are also given in Fig. 3. The rule for typing  is analogous to a combination of type instantiation and application, when viewing

is analogous to a combination of type instantiation and application, when viewing

as a function of the given type. The rule for typing a codata object, in contrast, is similar to the rule for typing a case expression of a data type. However, in this comparison, the rule for objects is partially “upside down” in the sense that the primary type in question (

as a function of the given type. The rule for typing a codata object, in contrast, is similar to the rule for typing a case expression of a data type. However, in this comparison, the rule for objects is partially “upside down” in the sense that the primary type in question ( ) appears in the conclusion rather than as a premise. This is the reason why there is one less premise for typing codata objects than there is for typing data case expressions. As with that rule, we assume that the branches are exhaustive, so that every destructor of the codata type appears in the premise.

) appears in the conclusion rather than as a premise. This is the reason why there is one less premise for typing codata objects than there is for typing data case expressions. As with that rule, we assume that the branches are exhaustive, so that every destructor of the codata type appears in the premise.

3.2 Compiling Data to Codata: The Visitor Pattern

In Sect. 2.1, we illustrated how to convert a data type representing trees into a codata type. This encoding corresponds to a rephrasing of the object-oriented visitor pattern to avoid unnecessary side-effects. Now lets look more generally at the pattern, to see how any algebraic data type in  can be encoded in terms of codata in

can be encoded in terms of codata in

.

.

The visitor pattern has the net effect of inverting the orientation of a data declaration (wherein construction comes first) into codata declarations (wherein destruction comes first). This reorientation can be used for compiling user-defined data types in  to codata types in

to codata types in

as shown in Fig. 4. As with all of the translations we will consider, this is a macro expansion since the syntactic forms from the base \(\lambda \)-calculus are treated homomorphically (i.e.

as shown in Fig. 4. As with all of the translations we will consider, this is a macro expansion since the syntactic forms from the base \(\lambda \)-calculus are treated homomorphically (i.e.

,

,

, and

, and

). Furthermore, this translation also perfectly preserves types, since the types of terms are exactly the same after translation (i.e.

). Furthermore, this translation also perfectly preserves types, since the types of terms are exactly the same after translation (i.e.

).

).

Notice how each data type (

) gets represented by two codata types: the “visitor” (

) gets represented by two codata types: the “visitor” (

) which says what to do with values made with each constructor, and the type itself (

) which says what to do with values made with each constructor, and the type itself (

) which has one method which accepts a visitor and returns a value of type b. An object of the codata type, then, must be capable of accepting any visitor, no matter what type of result it returns. Also notice that we include no other methods in the codata type representation of

) which has one method which accepts a visitor and returns a value of type b. An object of the codata type, then, must be capable of accepting any visitor, no matter what type of result it returns. Also notice that we include no other methods in the codata type representation of

.

.

At the level of terms, first consider how the case expression of the data type is encoded. The branches of the case (contained within the curly braces) are represented as a first-class object of the visitor type: each constructor is mapped to the corresponding destructor of the same name and the variables bound in the pattern are mapped to parameters of the function returned by the object in each case. The whole case expression itself is then implemented by calling the sole method ( ) of the codata object and passing the branches of the case as the corresponding visitor object. Shifting focus to the constructors, we can now see that they are compiled as objects that invoke the corresponding destructor on any given visitor, and the terms which were parameters to the constructor are now parameters to a given visitor’s destructor. Of course, other uses of the visitor pattern might involve a codata type (

) of the codata object and passing the branches of the case as the corresponding visitor object. Shifting focus to the constructors, we can now see that they are compiled as objects that invoke the corresponding destructor on any given visitor, and the terms which were parameters to the constructor are now parameters to a given visitor’s destructor. Of course, other uses of the visitor pattern might involve a codata type (

) with more methods implementing additional functionality besides case analysis. However, we only need the one method to represent data types in

) with more methods implementing additional functionality besides case analysis. However, we only need the one method to represent data types in  because case expressions are the primitive destructor for values of data types in the language.

because case expressions are the primitive destructor for values of data types in the language.

For example, consider applying the above visitor pattern to a binary tree data type as follows:

Note how this encoding differs from the one that was given in Sect. 2.1 since the  method is non-recursive whereas the

method is non-recursive whereas the  method was recursive, in order to model a depth-first search traversal of the tree.

method was recursive, in order to model a depth-first search traversal of the tree.

Of course, other operations, like the

function, could be written in terms of case expressions and recursion as usual by an encoding with above method calls. However, it is possible to go one step further and include other primitive destructors—like recursors or iterators in the style of Gödel’s system T—by embedding them as other methods of the encoded codata type. For example, we can represent

function, could be written in terms of case expressions and recursion as usual by an encoding with above method calls. However, it is possible to go one step further and include other primitive destructors—like recursors or iterators in the style of Gödel’s system T—by embedding them as other methods of the encoded codata type. For example, we can represent

as a primitive destructor as it was in Sect. 2.1 in addition to non-recursive case analysis by adding an alternative visitor

as a primitive destructor as it was in Sect. 2.1 in addition to non-recursive case analysis by adding an alternative visitor

and one more destructor to the generated

and one more destructor to the generated

codata type like so:

codata type like so:

where the definition of

and the encoding of case expressions is the same. In other words, this compilation technique can generalize to as many primitive observations and recursion schemes as desired.

and the encoding of case expressions is the same. In other words, this compilation technique can generalize to as many primitive observations and recursion schemes as desired.

3.3 Compiling Codata to Data: Tabulation

Having seen how to compile data to codata, how can we go the other way? The reverse compilation would be useful for extending functional languages with user-defined codata types, since many functional languages are compiled to a core representation based on the \(\lambda \)-calculus with data types.

Intuitively, the declared data types in  can be thought of as “sums of products.” In contrast, the declared codata types in

can be thought of as “sums of products.” In contrast, the declared codata types in  can be thought of as “products of functions.” Since both core languages are based on the \(\lambda \)-calculus, which has higher-order functions, the main challenge is to relate the two notions of “products.” The codata sense of products are based on projections out of abstract objects, where the different parts are viewed individually and only when demanded. The data sense of products, instead, are based on tuples, in which all components are laid out in advance in a single concrete structure.

can be thought of as “products of functions.” Since both core languages are based on the \(\lambda \)-calculus, which has higher-order functions, the main challenge is to relate the two notions of “products.” The codata sense of products are based on projections out of abstract objects, where the different parts are viewed individually and only when demanded. The data sense of products, instead, are based on tuples, in which all components are laid out in advance in a single concrete structure.

One way to convert codata to data is to tabulate an object’s potential answers ahead of time into a data structure. This is analogous to the fact that a function of type

can be alternatively represented by a tuple of type

can be alternatively represented by a tuple of type

, where the first and second components are the responses of the original function to

, where the first and second components are the responses of the original function to

and

and

, respectively. This idea can be applied to

, respectively. This idea can be applied to

in general as shown in the compilation in Fig. 5.

in general as shown in the compilation in Fig. 5.

A codata declaration of

becomes a data declaration with a single constructor (

becomes a data declaration with a single constructor (

) representing a tuple containing the response for each of the original destructors of

) representing a tuple containing the response for each of the original destructors of

. At the term level, a codata abstraction is compiled by concretely tabulating each of its responses into a tuple using the

. At the term level, a codata abstraction is compiled by concretely tabulating each of its responses into a tuple using the

constructor. A destructor application returns the specific component of the constructed tuple which corresponds to that projection. Note that, since we assume that each object is exhaustive, the tabulation transformation is relatively straightforward; filling in “missing” method definitions with some error value that can be stored in the tuple at the appropriate index would be done in advance as a separate pre-processing step.

constructor. A destructor application returns the specific component of the constructed tuple which corresponds to that projection. Note that, since we assume that each object is exhaustive, the tabulation transformation is relatively straightforward; filling in “missing” method definitions with some error value that can be stored in the tuple at the appropriate index would be done in advance as a separate pre-processing step.

Also notice that there is a special case for non-observable “empty” codata types, which are all collapsed into a single pre-designated

data type. The reason for this collapse is to ensure that this compilation preserves typability: if applied to a well-typed term, the result is also well-typed. The complication arises from the fact that when faced with an empty object

data type. The reason for this collapse is to ensure that this compilation preserves typability: if applied to a well-typed term, the result is also well-typed. The complication arises from the fact that when faced with an empty object  , we have no idea which constructor to use without being given further typing information. So rather than force type checking or annotation in advance for this one degenerate case, we instead collapse them all into a single data type so that there is no need to differentiate based on the type. In contrast, the translation of non-empty objects is straightforward, since we can use the name of any one of the destructors to determine the codata type it is associated with, which then informs us of the correct constructor to use.

, we have no idea which constructor to use without being given further typing information. So rather than force type checking or annotation in advance for this one degenerate case, we instead collapse them all into a single data type so that there is no need to differentiate based on the type. In contrast, the translation of non-empty objects is straightforward, since we can use the name of any one of the destructors to determine the codata type it is associated with, which then informs us of the correct constructor to use.

3.4 Correctness

For the inter-compilations between  into

into  to be useful in practice, they should preserve the semantics of programs. For now, we focus only on the call-by-name semantics for each of the languages. With the static aspect of the semantics, this means they should preserve the typing of terms.

to be useful in practice, they should preserve the semantics of programs. For now, we focus only on the call-by-name semantics for each of the languages. With the static aspect of the semantics, this means they should preserve the typing of terms.

Proposition 1

(Type Preservation). For each of the

and

and

translations: if \(\varGamma \vdash t : \tau \) then

translations: if \(\varGamma \vdash t : \tau \) then  (in the call-by-name type system).

(in the call-by-name type system).

Proof

(Sketch). By induction on the typing derivation of \(\varGamma \vdash t : \tau \).

With the dynamic aspect of the semantics, the translations should preserve the outcome of evaluation (either converging to some value, diverging into an infinite loop, or getting stuck) for both typed and untyped terms. This works because each translation preserves the reduction steps, values, and evaluation contexts of the source calculus’ call-by-name operational semantics.

Proposition 2

(Evaluation Preservation). For each of the

and

and

translations:

translations:  if and only if

if and only if

(in the call-by-name semantics).

(in the call-by-name semantics).

Proof

(Sketch). The forward (“only if”) implication is a result of the following facts that hold for each translation in the call-by-name semantics:

-

For any redex t in the source, if

then

then  .

. -

For any value V in the source,

is a value.

is a value. -

For any evaluation context E in the source, there is an evaluation context \(E'\) in the target such that

for all t.

for all t.

The reverse (“if”) implication then follows from the fact that the call-by-name operational semantics of both source and target languages is deterministic.

3.5 Call-by-Value: Correcting the Evaluation Order

The presented inter-compilation techniques are correct for the call-by-name semantics of the calculi. But what about the call-by-value semantics? It turns out that the simple translations seen so far do not correctly preserve the call-by-value semantics of programs, but they can be easily fixed by being more careful about how they treat the values of the source and target calculi. In other words, we need to make sure that values are translated to values, and evaluation contexts to evaluation contexts. For instance, the following translation (up to renaming) does not preserve the call-by-value semantics of the source program:

The object

is a value in call-by-value, and the erroneous response to the

is a value in call-by-value, and the erroneous response to the  will only be evaluated when observed. However, the structure

will only be evaluated when observed. However, the structure  is not a value in call-by-value, because the field \( error \) must be evaluated in advance which causes an error immediately. In the other direction, we could also have

is not a value in call-by-value, because the field \( error \) must be evaluated in advance which causes an error immediately. In the other direction, we could also have

Here, the immediate error in  has become incorrectly delayed inside the value

has become incorrectly delayed inside the value  .

.

The solution to this problem is straightforward: we must manually delay computations that are lifted out of (object or \(\lambda \)) abstractions, and manually force computations before their results are hidden underneath abstractions. For the visitor pattern, the correction is to only introduce the codata object on constructed values. We can handle other constructed terms by naming their non-value components in the style of administrative-normalization like so:

Conversely, the tabulating translation  will cause the on-demand observations of the object to be converted to preemptive components of a tuple structure. To counter this change in evaluation order, a thunking technique can be employed as follows:

will cause the on-demand observations of the object to be converted to preemptive components of a tuple structure. To counter this change in evaluation order, a thunking technique can be employed as follows:

The two operations can be implemented as  and

and  as usual, but can also be implemented as more efficient memoizing operations. With all these corrections, Propositions 1 and 2 also hold for the call-by-value type system and operational semantics.

as usual, but can also be implemented as more efficient memoizing operations. With all these corrections, Propositions 1 and 2 also hold for the call-by-value type system and operational semantics.

3.6 Indexed Data and Codata Types: Type Equalities

In the world of types, we have so far only formally addressed inter-compilation between languages with simple and polymorphic types. What about the compilation of indexed data and codata types? It turns out some of the compilation techniques we have discussed so far extend to type indexes without further effort, whereas others need some extra help. In particular, the visitor-pattern-based translation

can just be applied straightforwardly to indexed data types:

can just be applied straightforwardly to indexed data types:

In this case, the notion of an indexed visitor codata type exactly corresponds to the mechanics of case expressions for GADTs. In contrast, the tabulation translation

does not correctly capture the semantics of indexed codata types, if applied naïvely.

does not correctly capture the semantics of indexed codata types, if applied naïvely.

Thankfully, there is a straightforward way of “simplifying” indexed data types to more conventional data types using some built-in support for type equalities. The idea is that a constructor with a more specific return type can be replaced with a conventional constructor that is parameterized by type equalities that prove that the normal return type must be the more specific one. The same idea can be applied to indexed codata types as well. A destructor that can only act on a more specific instance of the codata type can instead be replaced by one which works on any instance, but then immediately asks for proof that the object’s type is the more specific one before completing the observation. These two translations, of replacing type indexes with type equalities, are defined as:

This formalizes the intuition that indexed data types can be thought of as enriching constructors to carry around additional constraints that were available at their time of construction, whereas indexed codata types can be thought of as guarding methods with additional constraints that must be satisfied before an observation can be made. Two of the most basic examples of this simplification are for the type declarations which capture the notion of type equality as an indexed data or indexed codata type, which are defined and simplified like so:

With the above ability to simplify away type indexes, all of the presented compilation techniques are easily generalized to indexed data and codata types by composing them with

. For practical programming example, consider the following safe stack codata type indexed by its number of elements.

. For practical programming example, consider the following safe stack codata type indexed by its number of elements.

This stack type is safe in the sense that the  operation can only be applied to non-empty

operation can only be applied to non-empty  s. We cannot compile this to a data type via

s. We cannot compile this to a data type via

directly, because that translation does not apply to indexed codata types. However, if we first simplify the

directly, because that translation does not apply to indexed codata types. However, if we first simplify the  type via

type via

, we learn that we can replace the type of the

, we learn that we can replace the type of the  destructor with

destructor with  , whereas the

, whereas the  destructor is already simple, so it can be left alone. That way, for any object

destructor is already simple, so it can be left alone. That way, for any object  , even though a client can initiate the observation

, even though a client can initiate the observation  , it will never be completed since there is no way to choose a b and prove that

, it will never be completed since there is no way to choose a b and prove that  equals

equals  . Therefore, the net result of the combined

. Therefore, the net result of the combined

translation turns

translation turns  into the following data type, after some further simplification:

into the following data type, after some further simplification:

Notice how the constructor of this type has two fields; one for  and one for

and one for  , respectively. However, the

, respectively. However, the  operation is guarded by a proof obligation: the client can only receive the top integer and remaining stack if he/she proves that the original stack contains a non-zero number of elements.

operation is guarded by a proof obligation: the client can only receive the top integer and remaining stack if he/she proves that the original stack contains a non-zero number of elements.

4 Compilation in Practice

We have shown how data and codata are related through the use of two different core calculi. To explore how these ideas manifest in practice, we have implemented codata in a couple of settings. First, we extended Haskell with codata in order to compare the lazy and codata approaches to demand-driven programming described in Sect. 2.2.Footnote 3 Second, we have created a prototype language with indexed (co)data types to further explore the interaction between the compilation and target languages. The prototype language does not commit to a particular evaluation strategy, typing discipline, or paradigm; instead this decision is made when compiling a program to one of several backends. The supported backends include functional ones—Haskell (call-by-need, static types), OCaml (call-by-value, static types), and Racket (call-by-value, dynamic types)—as well as the object-oriented JavaScript.Footnote 4 The following issues of complex copattern matching and sharing applies to both implementations; the performance results on efficiency of memoized codata objects are tested with the Haskell extension for the comparison with conventional Haskell code.

Complex Copattern Matching. Our implementations support nested copatterns so that objects can respond to chains of multiple observations, even though

only provides flat copatterns. This extension does not enhance the language expressivity but allows more succinct programs [2]. A flattening step is needed to compile nested copatterns down to a core calculus, which has been explored in previous work by Setzer et al. [37] and Thibodeau [39] and implemented in OCaml by Regis-Gianas and Laforgue [33]. Their flattening algorithm requires copatterns to completely cover the object’s possible observations because the coverage information is used to drive flattening. This approach was refined and incorporated in a dependently typed setting by Cockx and Abel [11]. With our goal of supporting codata independently of typing discipline and coverage analysis, we have implemented the purely syntax driven approach to flattening found in [38]. For example, the

only provides flat copatterns. This extension does not enhance the language expressivity but allows more succinct programs [2]. A flattening step is needed to compile nested copatterns down to a core calculus, which has been explored in previous work by Setzer et al. [37] and Thibodeau [39] and implemented in OCaml by Regis-Gianas and Laforgue [33]. Their flattening algorithm requires copatterns to completely cover the object’s possible observations because the coverage information is used to drive flattening. This approach was refined and incorporated in a dependently typed setting by Cockx and Abel [11]. With our goal of supporting codata independently of typing discipline and coverage analysis, we have implemented the purely syntax driven approach to flattening found in [38]. For example, the

function from Sect. 2.2 expands to:

function from Sect. 2.2 expands to:

Sharing. If codata is to be used instead of laziness for demand-driven programming, then it must have the same performance characteristics, which relies on sharing the results of computations [6]. To test this, we compare the performance of calculating streams of Fibonacci numbers—the poster child for sharing—implemented with both lazy list data types and a stream codata type in Haskell extended with codata. These tests, presented in Table 1, show the speed of the codata version is always slower in terms of run time and allocations than the lazy list version, but the difference is small and the two versions scale at the same rate. These performance tests are evidence that codata shares the same information when compiled to a call-by-need language; this we get for free because call-by-need data constructors—which codata is compiled into via

—memoize their fields. In an eager setting, it is enough to use memoized versions of

—memoize their fields. In an eager setting, it is enough to use memoized versions of

and

and

, which are introduced by the call-by-value compilation described in Sect. 3.5. This sharing is confirmed by the OCaml and Racket backends of the prototype language which find the 100th Fibonacci in less than a second (a task that takes hours without sharing).

, which are introduced by the call-by-value compilation described in Sect. 3.5. This sharing is confirmed by the OCaml and Racket backends of the prototype language which find the 100th Fibonacci in less than a second (a task that takes hours without sharing).

As the object-oriented representative, the JavaScript backend is a compilation from data to codata using the visitor pattern presented in Sect. 3.2. Because codata remains codata (i.e. JavaScript objects), an optimization must be performed to ensure the same amount of sharing of codata as the other backends. The solution is to lift out the branches of a codata object, as shown in Fig. 6, where the call-by-need let-bindings can be implemented by

and

and

in strict languages as usual. It turns out that this transformation is also needed in an alternative compilation technique presented by Regis-Gianas and Laforgue [33] where codata is compiled to functions, i.e. another form of codata.

in strict languages as usual. It turns out that this transformation is also needed in an alternative compilation technique presented by Regis-Gianas and Laforgue [33] where codata is compiled to functions, i.e. another form of codata.

5 Related Work

Our work follows in spirit of Amin et al.’s [3] desire to provide a minimal theory that can model type parameterization, modules, objects and classes. Another approach to combine type parameterization and modules is also offered by 1ML [36], which is mapped to System F. Amin et al.’s work goes one step further by translating System F to a calculus that directly supports objects and classes. Our approach differs in methodology: instead of searching for a logical foundation of a pre-determined notion of objects, we let the logic guide us while exploring what objects are. Even though there is no unanimous consensus that functional and object-oriented paradigms should be combined, there have been several hybrid languages for combining both styles of programming, including Scala, the Common Lisp Object System [8], Objective ML [34], and a proposed but unimplemented object system for Haskell [30].

Arising out of the correspondence between programming languages, category theory, and universal algebras, Hagino [20] first proposed codata as an extension to ML to remedy the asymmetry created by data types. In the same way that data types represent initial F-algebras, codata types represent final F-coalgebras. These structures were implemented in the categorical programming language Charity [10]. On the logical side of the correspondence, codata arises naturally in the sequent calculus [15, 28, 44] since it provides the right setting to talk about construction of either the provider (i.e. the term) or the client (i.e. the context) side of a computation, and has roots in classical [13, 41] and linear logic [18, 19].

In session-typed languages, which also have a foundation in linear logic, external choice can be seen as a codata (product) type dual to the way internal choice corresponds to a data (sum) type. It is interesting that similar problems arise in both settings. Balzer and Pfenning [7] discuss an issue that shows up in choosing between internal and external choice; this corresponds to choosing between data and codata, known as the expression problem. They [7] also suggest using the visitor pattern to remedy having external choice (codata) without internal choice (data) as we do in Sect. 3.2. Of course, session types go beyond codata by adding a notion of temporality (via linearity) and multiple processes that communicate over channels.

To explore programming with coinductive types, Ancona and Zucca [4] and Jeannin et al. [26] extended Java and OCaml with regular cyclic structures; these have a finite representation that can be eagerly evaluated and fully stored in memory. A less restricted method of programming these structures was introduced by Abel et al. [1, 2] who popularized the idea of programming by observations, i.e. using copatterns. This line of work further developed the functionality of codata types in dependently typed languages by adding indexed codata types [40] and dependent copattern matching [11], which enabled the specification of bisimulation proofs and encodings of productive infinite objects in Agda. We build on these foundations by developing codata in practical languages.

Focusing on implementation, Regis-Gianas and Laforgue [33] added codata with a macro transformation in OCaml. As it turns out, this macro definition corresponds to one of the popular encodings of objects in the \(\lambda \)-calculus [27], where codata/objects are compiled to functions from tagged messages to method bodies. This compilation scheme requires the use of GADTs for static type checking, and is therefore only applicable to dynamically typed languages or the few statically typed languages with expressive enough type systems like Haskell, OCaml, and dependently typed languages. Another popular technique for encoding codata/objects is presented in [31], corresponding to a class-based organization of dynamic dispatch [21], and is presented in this paper. This technique compiles codata/objects to products of methods, which has the advantage of being applicable in a simply-typed setting.

6 Conclusion