Abstract

Analysis of tubular glands plays an important role in gastric cancer diagnosis, grading, and prognosis; however, gland quantification is a highly subjective task, prone to error. Objective identification of glands might help clinicians with analysis and treatment planning. The visual characteristics of such glands suggest that information from nuclei and their context would be useful to characterize them. In this paper we present a new approach for segmentation of gland nuclei based on nuclear local and contextual (neighborhood) information. A Gradient-Boosted-Regression-Trees classifier is trained to distinguish between gland-nuclei and non-gland-nuclei. Validation was carried out using a dataset containing 45702 annotated nuclei from 90 \(1024\times 1024\) fields of view extracted from gastric cancer whole slide images. A Deep Learning model was trained as a baseline. Results showed an accuracy and F-score 5.4 and 23.6 percentage points higher, respectively, with the presented framework than with the Deep Learning approach.

1 Introduction

Gastric cancer (GC) is among the most diagnosed cancers and the second most frequent cause of cancer-related death worldwide [1]. Geographically, the highest incidence of GC is in Asia, Latin America, and the Caribbean [2, 3]. In Colombia, GC is the leading cause of cancer-related death, representing 15% of all cancer deaths, with a high incidence in the Andean zone, especially in the departments of Nariño, Boyacá, and Cundinamarca. Currently, it is considered a major public health problem that has generated an expense of more than 47 million USD in five years [4].

GC comprises several kinds of lesions with different severity grades. Of these lesions, adenocarcinoma is the most common, representing more than 90% of all GC [5]. Characterization and quantification of the adenocarcinoma might establish plausible chains of events that improve understanding of the disease and reduce its mortality rates. Diagnosis is usually reached by an endoscopic biopsy of the stomach, which is processed and analyzed by pathologists who determine the degree of malignancy [6]. One of the most common approaches to identify and grade gastric adenocarcinomas is to identify glands and estimate their density. Low-grade lesions are characterized by the presence of well/moderately differentiated glands (Fig. 1-a). In high-grade lesions, glands are highly irregular and poorly differentiated (Fig. 1-b) [5, 7]. Identification of glands plays an important role not only in diagnosis but also in establishing a prognosis [7]. An accurate quantification is therefore essential for both decision making and treatment planning. Unfortunately, this process has remained highly subjective and prone to error. In this context, automatic measures may help to identify tubular glands in GC samples.

This work introduces an automatic strategy that exploits nuclear local and contextual information to identify gland nuclei in fields of view (FoVs) extracted from gastric cancer whole slide images (WSIs). The present approach starts by automatically segmenting nuclei with a watershed-based algorithm [8]. Each nucleus is then characterized by two types of features: first, its own morphological properties (size, shape, color, texture, etc.); second, the features of its neighboring nuclei within a given radius. Such features are used to train a Gradient-Boosted-Regression-Trees (GBRT) classifier to differentiate between gland-nuclei and non-gland-nuclei. Unlike other state-of-the-art methods, every feature in this approach exploits relative nuclear information, i.e., the information of any nucleus is always expressed relative to the same feature in its surrounding nuclei. This strategy is compared with a Deep Learning (DL) model that was trained to identify gland-nuclei. This DL model receives patches from WSIs as input and outputs probability maps that are thresholded. A watershed-based algorithm then segments the binary output map and splits the connected/overlapping cases to set the final candidates.

2 Methodology

2.1 Preprocessing: Nuclei Segmentation

A watershed-based algorithm [8] is applied to segment nuclei, generating a mask with the position of each nucleus. Each detected nucleus is then assigned to one of two classes, gland-nucleus or non-gland-nucleus (see Fig. 2).

2.2 Nuclear Local and Contextual Information (NLCI)

In H&E images, tubular gland nuclei are generally distinguished from other cell nuclei by their orientation, color, oval shape, eosinophilic cytoplasm, and proximity to other similar nuclei. For this reason, after the nuclei were segmented, a set of low-level features was extracted, including shape (nuclei structural area, ratio between axes, etc.), texture (Haralick, entropy, etc.), and color (mean intensity, mean red, etc.). Each nucleus was represented by this set of local features. Additionally, for each nucleus, a set of circles with incremental radii of \(k=dL\times 10, \, dL\times 20, \, dL\times 30\) pixels was placed at the nucleus center (with \(dL=20\) pixels being the average nuclear diameter), aiming to mimic a multi-scale representation. Finally, a set of regional features was computed within each circle and used to characterize each of the segmented nuclei. These features measure the neighborhood density and relative variations in color, shape, and texture.
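The multi-scale neighborhood described above can be illustrated with a minimal sketch. The radii follow the text (\(dL=20\), giving 200, 400, and 600 pixels); the function name `contextual_features`, the dictionary layout of a nucleus, and the specific definitions of density (neighbors per unit area) and relative variation (ratio to the neighborhood mean) are assumptions made for illustration only, not the exact features used in this work.

```python
import math

D_L = 20  # average nuclear diameter in pixels, as stated in the text
RADII = [D_L * 10, D_L * 20, D_L * 30]  # 200, 400 and 600 pixels


def contextual_features(nuclei, index, feature="area"):
    """For each radius, count the neighbors of one nucleus and express its
    feature relative to the neighborhood mean (illustrative definitions)."""
    cx, cy = nuclei[index]["x"], nuclei[index]["y"]
    own = nuclei[index][feature]
    result = []
    for r in RADII:
        # Feature values of all other nuclei whose centers fall inside the circle
        neighbors = [
            n[feature]
            for j, n in enumerate(nuclei)
            if j != index and math.hypot(n["x"] - cx, n["y"] - cy) <= r
        ]
        count = len(neighbors)
        density = count / (math.pi * r * r)  # neighbors per square pixel
        mean = sum(neighbors) / count if count else own
        relative = own / mean if mean else 0.0
        result.append(
            {"radius": r, "count": count, "density": density, "relative": relative}
        )
    return result


# Toy nuclei (hypothetical values): centers in pixels and an area feature
nuclei = [
    {"x": 100, "y": 100, "area": 300.0},
    {"x": 250, "y": 100, "area": 150.0},  # 150 px from the first nucleus
    {"x": 100, "y": 450, "area": 450.0},  # 350 px from the first nucleus
    {"x": 700, "y": 900, "area": 600.0},  # 1000 px from the first nucleus
]
feats = contextual_features(nuclei, 0)
for f in feats:
    print(f["radius"], f["count"], round(f["relative"], 2))
```

In this toy case the first nucleus is twice as large as its only neighbor at the smallest scale, and equal to the neighborhood mean at the two larger scales, which is the kind of relative description the contextual features aim to capture.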

A set of 57 local and contextual features was extracted from each nucleus, and the 33 most discriminating features were selected by distribution analysis and the Infinite Latent Feature Selection (ILFS) algorithm [9]. A GBRT classifier [10] was then trained to differentiate between the gland-nuclei and non-gland-nuclei classes. Specifically, we used an adapted GBRT framework [11] which emphasizes the minimization of the loss function.
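To make the boosting idea concrete, the following is a minimal pure-Python sketch of gradient boosting with regression stumps under a logistic loss. It is a didactic stand-in, not the adapted framework of [11]: the function names (`fit_stump`, `fit_gbrt`, `predict`), the two toy features, the number of rounds, and the learning rate are all assumptions chosen for illustration. Each round fits a stump to the negative gradient of the loss (the residual between the label and the predicted probability) and adds it to the running score.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fit_stump(X, residuals):
    """Least-squares regression stump: returns (feature, threshold,
    left value, right value). Assumes some feature has two distinct values."""
    best = None
    for f in range(len(X[0])):
        values = sorted(set(row[f] for row in X))
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2.0
            left = [r for row, r in zip(X, residuals) if row[f] <= t]
            right = [r for row, r in zip(X, residuals) if row[f] > t]
            lm, rm = sum(left) / len(left), sum(right) / len(right)
            sse = sum((r - lm) ** 2 for r in left) + sum((r - rm) ** 2 for r in right)
            if best is None or sse < best[0]:
                best = (sse, f, t, lm, rm)
    return best[1:]


def fit_gbrt(X, y, n_rounds=50, learning_rate=0.3):
    """Boost regression stumps on the gradient of the logistic loss."""
    p = sum(y) / len(y)
    f0 = math.log(p / (1.0 - p))  # initial score: log-odds of the positive class
    scores = [f0] * len(X)
    stumps = []
    for _ in range(n_rounds):
        # Negative gradient of the logistic loss with respect to the score
        residuals = [yi - sigmoid(s) for yi, s in zip(y, scores)]
        f, t, lm, rm = fit_stump(X, residuals)
        stumps.append((f, t, lm, rm))
        scores = [
            s + learning_rate * (lm if row[f] <= t else rm)
            for row, s in zip(X, scores)
        ]
    return f0, learning_rate, stumps


def predict(model, row):
    """Return 1 (gland-nucleus) or 0 (non-gland-nucleus)."""
    f0, lr, stumps = model
    score = f0 + sum(lr * (lm if row[f] <= t else rm) for f, t, lm, rm in stumps)
    return 1 if sigmoid(score) >= 0.5 else 0


# Toy data (hypothetical): [relative elongation, neighborhood density]
X = [[0.9, 0.8], [0.8, 0.9], [0.85, 0.7],
     [0.3, 0.2], [0.2, 0.4], [0.4, 0.1], [0.35, 0.3], [0.25, 0.15]]
y = [1, 1, 1, 0, 0, 0, 0, 0]
model = fit_gbrt(X, y)
print([predict(model, row) for row in X])
```

In practice a tested library implementation of gradient-boosted trees, applied to the 33 selected features, would be preferable to this sketch.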

2.3 Baseline

The baseline was a state-of-the-art deep learning approach, the Mask Region-based Convolutional Neural Network (Mask R-CNN). This modification of the Fast R-CNN algorithm [12] was used in the Kaggle 2018 Data Science Bowl challenge to identify a wide range of nuclei across varied conditions [13]. It uses a deep convolutional network with single-stage training and multi-scale object segmentation. Mask R-CNN outputs an object detection score and the corresponding mask [14].

The DL model was trained using a set of patches extracted from the FoVs. The positive class patches correspond to the area covered by the bounding box of each gland nucleus, while the negative class patches were taken from the background, i.e., regions with non-gland nuclei. To increase the number of training samples, different transformations (e.g., rotation and mirroring) were applied to the patches. Model training was carried out for a total of 20 epochs of 100 steps each.
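As an illustration of the augmentation step, the sketch below generates rotated and mirrored variants of a patch. The text does not state which rotation angles were used, so rotations by multiples of 90 degrees are an assumption here, as are the function names `rotate90`, `mirror`, and `augment`; the patch is represented as a plain list of rows to keep the example dependency-free.

```python
def rotate90(patch):
    """Rotate a 2-D patch (list of rows) by 90 degrees clockwise."""
    return [list(row) for row in zip(*patch[::-1])]


def mirror(patch):
    """Flip a 2-D patch horizontally (left-right)."""
    return [list(row[::-1]) for row in patch]


def augment(patch):
    """Return the four 90-degree rotations of a patch and their mirrored
    versions: eight variants in total."""
    variants = []
    current = [list(row) for row in patch]
    for _ in range(4):
        variants.append(current)
        variants.append(mirror(current))
        current = rotate90(current)
    return variants


# Toy 2x2 "patch" with distinct pixel values
patch = [[1, 2],
         [3, 4]]
variants = augment(patch)
print(len(variants))
print(variants[2])  # patch after one clockwise rotation
```

For a patch with no internal symmetry, this yields eight distinct training samples from each original patch.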

Figure 3 presents the architecture of a trained DL model for the exploratory stage. A random extraction of a Region of Interest (RoI) is performed. This RoI is projected to a convolutional network that generates a feature map. These features are introduced to the RoI pooling layer for further processing. At the last stage, fully connected layers generate the desired outputs, including the gland nuclei candidate bounding box and mask.

Fig. 3. Mask R-CNN workflow. Figure extracted and adapted from [12].

3 Experimentation

3.1 Dataset

The dataset consisted of 90 FoVs of \(1024 \times 1024\) pixels at \(40 \times \) magnification, extracted from a set of H&E WSIs taken from 5 patients who were diagnosed with GC. The WSIs were provided by the Pathology Department of Universidad Nacional de Colombia. A total of 45702 nuclei were manually annotated, of which 12150 were gland nuclei and the remaining 33552 corresponded to other structures (non-gland nuclei).

3.2 Experiments

Two experiments were carried out. The first attempted to classify nuclei as gland-nuclei or non-gland-nuclei using the NLCI approach. A Monte Carlo cross-validation method with 10 iterations was used. At each iteration, 70% of the whole set of FoVs was used to train the GBRT classifier and the remaining 30% was used to test the trained model. Finally, the performances measured over the 10 iterations were averaged.
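The validation protocol can be sketched as follows: repeated random 70/30 splits of the 90 FoVs, with the per-iteration scores averaged at the end. The function names `monte_carlo_splits` and `average_performance`, the fixed seed, and the `train_and_score` callback (a placeholder for training the classifier and measuring its performance) are assumptions introduced for illustration.

```python
import random


def monte_carlo_splits(n_items, n_iterations=10, train_fraction=0.7, seed=0):
    """Yield (train_indices, test_indices) for repeated random splits."""
    rng = random.Random(seed)
    n_train = round(n_items * train_fraction)
    indices = list(range(n_items))
    for _ in range(n_iterations):
        shuffled = indices[:]
        rng.shuffle(shuffled)
        yield shuffled[:n_train], shuffled[n_train:]


def average_performance(n_items, train_and_score, **kwargs):
    """Average the score returned by train_and_score over all splits."""
    scores = [
        train_and_score(train, test)
        for train, test in monte_carlo_splits(n_items, **kwargs)
    ]
    return sum(scores) / len(scores)


# With 90 FoVs, each iteration trains on 63 FoVs and tests on 27
splits = list(monte_carlo_splits(90))
print(len(splits), len(splits[0][0]), len(splits[0][1]))


# Hypothetical placeholder: a real callback would train the classifier on the
# training FoVs and return a metric measured on the test FoVs
def dummy_score(train, test):
    return len(test) / (len(train) + len(test))


mean_score = average_performance(90, dummy_score)
```

Unlike k-fold cross-validation, the test sets of different iterations may overlap, since each split is drawn independently.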

The second experiment aimed to identify gland-nuclei using the DL model. For this purpose, 60 FoVs were used to train the model and the remaining 30 for testing. In this case, gland-nuclei detection was assessed by counting the detected nuclei centroids that matched ground-truth nuclei; a detection was judged correct when its centroid lay within one nuclear radius of the ground truth.
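A minimal sketch of such a centroid-based assessment is given below. The text specifies only the one-nuclear-radius criterion; the greedy one-to-one matching, the function name `match_centroids`, and the default radius of 10 pixels (half of the average nuclear diameter \(dL=20\)) are assumptions made for illustration.

```python
import math


def match_centroids(detected, ground_truth, radius=10.0):
    """Greedily match each detected centroid to the closest unmatched
    ground-truth centroid within `radius`; return (TP, FP, FN)."""
    unmatched = list(ground_truth)
    true_positives = 0
    for dx, dy in detected:
        best_index, best_distance = None, radius
        for k, (gx, gy) in enumerate(unmatched):
            distance = math.hypot(dx - gx, dy - gy)
            if distance <= best_distance:
                best_index, best_distance = k, distance
        if best_index is not None:
            unmatched.pop(best_index)  # each ground-truth nucleus is used once
            true_positives += 1
    false_positives = len(detected) - true_positives
    false_negatives = len(unmatched)
    return true_positives, false_positives, false_negatives


# Toy centroids in pixels (hypothetical values)
detected = [(100, 100), (205, 198), (400, 400)]
ground_truth = [(103, 104), (200, 200), (300, 300)]
tp, fp, fn = match_centroids(detected, ground_truth)
print(tp, fp, fn)
```

Here two detections fall within 10 pixels of a ground-truth centroid, one detection has no counterpart, and one ground-truth nucleus is missed.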

3.3 Experimental Results

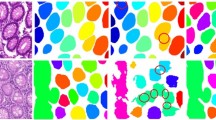

Table 1 presents different performance metrics for both assessed approaches. NLCI achieved an accuracy of 0.977 and an F-measure of 0.955, while R-CNN yielded an accuracy of 0.923 and an F-measure of 0.719. For the qualitative results, Fig. 4 shows the resulting gland nuclei segmentation from both approaches, where R-CNN generates its own mask for each gland nucleus, shown in an individual color.
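For reference, the sketch below shows how accuracy and the F-measure follow from confusion-matrix counts, and checks the differences between the two approaches using the values reported above. The function name `classification_metrics` and the toy counts are assumptions for illustration; only the four reported metric values come from the text.

```python
def classification_metrics(tp, fp, fn, tn):
    """Accuracy, precision, recall and F-measure from confusion counts."""
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f_measure = 2 * precision * recall / (precision + recall)
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f_measure": f_measure,
    }


# Toy counts (hypothetical), only to exercise the formulas
toy = classification_metrics(tp=8, fp=2, fn=2, tn=88)
print(toy)

# Differences between the reported values of NLCI and R-CNN
accuracy_gain = 0.977 - 0.923
f_measure_gain = 0.955 - 0.719
print(round(accuracy_gain, 3), round(f_measure_gain, 3))
```

The differences of 0.054 and 0.236 correspond to the 5.4 and 23.6 points quoted in the abstract.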

4 Concluding Remarks

In this article, two different approaches to automatically detect gland nuclei in gastric cancer images were presented and compared: a model based on nuclear local and contextual information and a DL model. Results demonstrate that local and contextual features are appropriate to describe the structural features of tubular gland nuclei. Although the DL model produced good results, this approach requires a powerful and expensive infrastructure, long training times, and huge quantities of annotated data. The lower precision of the DL model suggests that it takes only local information into account. Future work includes quantification of glands to establish a correlation with cancer grade and patient prognosis.

References

1. Hu, B., El Hajj, N., Sittler, S., et al.: Gastric cancer: classification, histology and application of molecular pathology. J. Gastrointest. Oncol. 3(3), 251–261 (2012)

2. Karimi, P., Islami, F., Anandasabapathy, S., Freedman, N.D., Kamangar, F.: Gastric cancer: descriptive epidemiology, risk factors, screening, and prevention. Cancer Epidemiol. Biomarkers Prev. 23(5), 700–713 (2014)

3. Ferlay, J., Soerjomataram, I., Ervik, M., et al.: Cancer incidence and mortality worldwide: IARC CancerBase no. 11. http://globocan.iarc.fr (2013). Accessed 25 June 2018

4. Ministerio de Salud y Protección Social, Instituto Nacional de Cancerología de Colombia: Plan Decenal para el Control de Cáncer en Colombia, 2012–2021 (2012)

5. Kumar, V., Abbas, A., Aster, J.: Robbins & Cotran Pathologic Basis of Disease. Robbins Pathology. Elsevier Health Sciences (2014)

6. Yoshida, H., Shimazu, T., Kiyuna, T., et al.: Automated histological classification of whole-slide images of gastric biopsy specimens. Gastric Cancer 21(2), 249–257 (2018). https://doi.org/10.1007/s10120-017-0731-8

7. Martin, I.G., Dixon, M.F., Sue-Ling, H., Axon, A.T., Johnston, D.: Goseki histological grading of gastric cancer is an important predictor of outcome. Gut 35(6), 758–763 (1994)

8. Veta, M., van Diest, P.J., Kornegoor, R., et al.: Automatic nuclei segmentation in H&E stained breast cancer histopathology images. PLoS ONE 8(7) (2013)

9. Roffo, G., Melzi, S., Castellani, U., Vinciarelli, A.: Infinite latent feature selection: a probabilistic latent graph-based ranking approach. In: International Conference on Computer Vision (ICCV) 2017, pp. 1407–1415 (2017)

10. Friedman, J.H.: Greedy function approximation: a gradient boosting machine. Ann. Stat. 29(5), 1189–1232 (2001). https://www.jstor.org/stable/2699986

11. Becker, C., Rigamonti, R., Lepetit, V., Fua, P.: Supervised feature learning for curvilinear structure segmentation. In: Mori, K., Sakuma, I., Sato, Y., Barillot, C., Navab, N. (eds.) MICCAI 2013. LNCS, vol. 8149, pp. 526–533. Springer, Heidelberg (2013). https://doi.org/10.1007/978-3-642-40811-3_66

12. Girshick, R.: Fast R-CNN. In: ICCV 2015 (2015). https://arxiv.org/abs/1504.08083

13. Kaggle: 2018 data science bowl. Booz Allen Hamilton (2018). https://www.kaggle.com/c/data-science-bowl-2018

14. He, K., Gkioxari, G., Dollár, P., Girshick, R.: Mask R-CNN. Facebook AI Research (2017). https://arxiv.org/abs/1703.06870

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Barrera, C., Corredor, G., Alfonso, S., Mosquera, A., Romero, E. (2019). An Automatic Segmentation of Gland Nuclei in Gastric Cancer Based on Local and Contextual Information. In: Lepore, N., Brieva, J., Romero, E., Racoceanu, D., Joskowicz, L. (eds) Processing and Analysis of Biomedical Information. SaMBa 2018. Lecture Notes in Computer Science(), vol 11379. Springer, Cham. https://doi.org/10.1007/978-3-030-13835-6_9

DOI: https://doi.org/10.1007/978-3-030-13835-6_9

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-13834-9

Online ISBN: 978-3-030-13835-6

eBook Packages: Computer Science (R0)