Abstract

Despite recent efforts to improve the quality of process models, we still observe a significant dissimilarity in quality between models. This paper focuses on the syntactic quality of process models and on how it is achieved. To this end, a dataset of 121 modeling sessions was investigated. By going through each of these sessions step by step, a separate ‘revision’ phase was identified for 81 of them. Next, by cutting the modeling process off at the start of the revision phase, a partial process model was exported for these modeling sessions. Finally, each partial model was compared with its corresponding final model in terms of time, effort, and the number of syntactic errors made or solved, in search of a possible trade-off between the effectiveness and efficiency of process modeling. Based on the findings, we give a provisional explanation for the difference in syntactic quality of process models.

1 Introduction

Because of the ever-increasing complexity of business processes, the demand for process models of high quality is rising. In order to be competitive, businesses need to be as productive as possible, while using their resources economically. When processes are designed, this implies that the supporting process models should be constructed efficiently, without wasting time or effort. Unfortunately, the process of creating process models is often ineffective, inefficient, or both. Therefore, this paper investigates whether the time and effort used for constructing a process model influence the quality of the resulting model.

This research goal was triggered by two observations. First, previous research concluded that different process modeling strategies exist, and that the efficiency of modeling can be influenced by applying a strategy that optimally aligns with the cognitive properties of the modeler. The effectiveness of process modeling, on the other hand, appeared to be harder to influence [1]. Second, by comparing several process models that represent the same process but were made by different modelers, a large dissimilarity in pragmatic, semantic and syntactic quality was noticed. To explain this difference, the construction processes of these models were investigated with PPMCharts. A PPMChart is a convenient visualization tool that represents the various steps used to create a process model as dots on a grid, using different colors for each type of operation and different shapes for each type of element on which the operation is performed [2]. During the inspection of these charts, it was noticed that two things happened fairly often near the end of the modeling session: the modeler executed ‘create’ operations much more slowly than at the start, and the modeler looped back through the model and made changes to parts created earlier. This raised our suspicion that some people revised their process model before handing in the result, most likely in search of mistakes.

In this light, the question can be asked whether the conceived process model benefits from such a revision phase. In other words, do these people use the changes of the revision phase to actually improve the model, or do they waste time and effort? Do they mainly correct errors or are they working on improving secondary aspects of the model? Is it possible that they introduce more errors than they solve?

In this exploratory paper, we formulate a provisional answer to the questions mentioned above. An existing dataset was used, containing the operational details of 121 modeling sessions. For each session, we tried to determine whether there was a revision phase and, if so, cut the process of process modeling into two separate phases: a first phase that was mostly used for building the model, and a second phase during which the modeler predominantly revised the model created in the first phase. For each of the 81 instances for which two phases could be distinguished, a process model was exported at two points in time: the model created during the first phase only, called the partial model, and the final model, which is the result of the modeling session as a whole, including the second phase. The properties of both phases and both models were then compared in order to collect insights related to the above questions.

The research is limited in scope in two ways. First, it focuses on one specific type of conceptual model: sequence flow process models. Moreover, the models in the dataset were restricted to only six constructs. Second, because we prioritized depth of the research over breadth, this study was narrowed to only one quality level, i.e. syntactic quality. Methodologically, it makes sense to investigate syntactic quality first, because it can be measured more accurately than semantic and pragmatic quality. On the other hand, syntactic quality may be the least relevant of the three, because many tools help to avoid syntactic mistakes completely. Nevertheless, we believe that insights from the syntax dimension may also shed light on the other two quality dimensions, because the source of cognitive mistakes is probably the same (i.e., cognitive overload due to the complexity of modeling).

This paper is structured as follows. Section 2 presents related research. In Sect. 3, the variables that were selected are presented and discussed. Section 4 explains the applied method to determine the partial models. Section 5 provides the results. Section 6 concludes the paper with a summary of the findings and a brief discussion.

2 Related Work

For the past three decades, business process modeling has been one of the important domains within the information systems research field [3]. The recent developments in research about process models can be classified into three distinct research streams. The first stream focuses on the application of process models (i.e., why are process models created). Davies et al. [4] performed this type of study in the broader research domain of conceptual modeling. The second category studies the quality of process models (i.e., what makes a process model of a high standard). In the absence of a unanimously agreed quality framework for process models [3, 5, 6], multiple publications have proposed their own version [7, 8].

Recently, a third stream originated within the process modeling research field. This sub-branch investigates the process of process modeling instead of the result of this process, the final model (i.e., how is a high-quality process model constructed). The underlying idea is that the quality of the final model largely depends on the quality of the preceding modeling process. This paper is associated with the last stream.

Over the last decades, many publications have studied the process of process modeling. Hoppenbrouwers et al. [9] laid important groundwork for future research by presenting fundamental insights into the process of creating conceptual models. Mendling [10] studied the effects of process model complexity on the probability of making errors during the process of process modeling. Pinggera et al. [11] investigated whether structuring domain knowledge could improve the modeling process of casual modelers. Haisjackl et al. [12] provide valuable insights into the modeler’s behavior towards model layout and its implications for the pragmatic quality of process models.

While some publications propose modeling techniques and tips to boost the effectiveness of process modeling [13], others suggest an approach that would improve the efficiency of the modeling process [14]. Yet other publications present modeling methods that enhance both the effectiveness and efficiency of process modeling [15, 16]. These publications resulted in general guidelines and techniques for process modeling, intended for all conceptual and process modelers. In contrast, more recent studies have begun to acknowledge the existence of different modeling styles for creating business process models [17]. Claes et al. [1] developed a differentiating modeling technique that matches the modeling strategy to the modeler’s cognitive profile, in order to enhance the modeler’s ability to create process models efficiently. Furthermore, several tools for visualizing modeling processes have been created, which have contributed significantly to the investigation of the process of process modeling [2, 18]. One of these tools, the PPMChart, was used extensively during our research to identify the two modeling phases (cf. supra).

3 Measurements

In 2015, 146 students of the Business Engineering master program at Ghent University took part in an experiment in the context of a Business Process Management course. The experiment consisted of three consecutive tasks: a series of cognitive tests, a benchmarking modeling task and an experimental modeling task. Between the second and third tasks, a subset of the participants received a treatment intended to give them an advantage over the other students when performing the third task. In this last task, all students were asked to create a process model of a mortgage request case, based on a written description of the process. A more detailed discussion of the experiment is provided by Claes et al. [1].

This experiment generated a dataset [19], containing the operational details of the 146 modeling sessions for the experimental modeling task. For 25 of these sessions, not all variables of interest could be measured adequately, rendering them useless for the research. Hence, 121 modeling sessions were left to analyze.

Due to the lack of a consensus on the definition of high-quality process modeling [3, 5, 6], it was first decided which elements to consider for characterizing a modeling process of high quality. Because of the ever-increasing need for productivity that exists today, we believe that evaluating the modeling session solely by the quality of the result does not suffice, which is why the whole process of process modeling (PPM) was studied for this paper. In order to assess the PPM quality, three quality indicators are proposed, based on the Devil’s Triangle of Project Management [20]:

-

First, time is defined as the number of seconds it takes to reach a certain point of the modeling process.

-

Second, the effort is determined by the number of operations on elements (create, delete, move, …) that are performed in the modeling tool during the process. These actions are divided into mutually exclusive subgroups, as shown in Table 1.

Table 1. Classification of modeling actions

-

Third, the syntactic quality of the resulting model consists of two measures: the number of errors made, and the number of errors solved by the modeler. The construction of a list of potential syntax errors and the subsequent detection of these violations were performed by De Bock and Claes [21], who delivered an overview of when errors are made and corrected throughout the modeling process.

All three quality indicators are continuous in nature, meaning they can be determined at every moment in the modeling process. Time and effort combined express the efficiency of the process, while syntactic correctness is an indicator of the effectiveness of process modeling.
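Because all three indicators are cumulative, each can be read off at any cut-off moment t of a session. The following is a minimal sketch. It assumes a hypothetical event log in which every operation carries a timestamp in seconds since the start, and every error detected by the procedure of [21] is logged as a timestamped ‘made’ or ‘solved’ event. The names `Operation` and `indicators_at` are illustrative and do not describe the actual format of the dataset [19].

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Operation:
    """One logged modeling operation (hypothetical record layout)."""
    seconds: float  # time since the start of the session
    kind: str       # e.g. "create", "delete", "move"


def indicators_at(ops: List[Operation],
                  error_events: List[Tuple[float, str]],
                  t: float) -> Tuple[float, int, int, int]:
    """Return (time, effort, errors made, errors solved) up to moment t.

    error_events holds (seconds, "made" | "solved") pairs; this layout is an
    assumption made for illustration only.
    """
    effort = sum(1 for op in ops if op.seconds <= t)
    made = sum(1 for s, what in error_events if s <= t and what == "made")
    solved = sum(1 for s, what in error_events if s <= t and what == "solved")
    return t, effort, made, solved


# Usage on a toy session with three operations and two error events.
ops = [Operation(5, "create"), Operation(12, "create"), Operation(30, "delete")]
errors = [(12, "made"), (30, "solved")]
print(indicators_at(ops, errors, 20))  # (20, 2, 1, 0)
```

Evaluating such a function at the end of a session would give the totals used in Sect. 5.1. Evaluating it at the cut-off moment of the partial model would give the values for that model.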

4 The Partial Model

During an initial scan of the dataset, it was noticed that near the end of a modeling process, some subjects exhibited behavior suggesting that they were inspecting the model they had created thus far, potentially in search of mistakes. It was theorized that, in some cases, a modeling session could be split into two distinct phases: a model building phase, during which the largest part of the model was created (i.e., the “partial model”), and a revision phase, mainly used for tracking down errors and trying to correct them (i.e., resulting in the “final model”). From here on, we will refer to the moment that separates the two phases as PT or Partial Time, a name derived from the partial model that is exported at that instant. Based on this definition of the modeling phases, we used the following guidelines to determine PT in all modeling sessions in a consistent way:

-

The biggest part of the model should be produced before PT. Additional ‘create’ operations past this moment are allowed but should mainly be used to replace deleted chunks of elements or add a finishing touch to the model (e.g., to close gaps between activities or to create an end event).

-

The revision phase should be primarily composed of operations that imply that the subject is inspecting the process model: moving elements around, deleting or renaming elements, extensive scrolling, etc.

The identification of PT was performed by the first author, who had no prior experience with this stream of process modeling research at the time of the identification. This was a deliberate choice, intended to prevent the decision-making process from being biased in favor of the expected results. In order to determine PT as objectively and as consistently as possible, five types were identified:

-

Type A: Near the end of the modeling process, the subject clearly tries to correct mistakes using operations from the ‘fix’ category (cf. Table 1). PT is fixed at the last ‘create’ operation before these correction attempts take place.

-

Type B: This type is similar to type A, but the second modeling phase is now identified by a notable time span between the last ‘create’ operation and the end of the modeling process. Most of this time span is used to perform ‘adjust’ actions and scrolls, operations that suggest the subject is reviewing the model in search of errors. PT is fixed at the last ‘create’ operation before this time gap.

-

Type C: The subject deletes unused elements from the model. These are elements that were created somewhere during the modeling process but that were never connected to the rest of the model. PT is fixed at the last ‘create’ operation before these loose elements are erased.

-

Type D: A specific type for subjects who use an aspect-oriented approach. This means that the subject postpones the creation of edges until (the majority of) the nodes of the model are created. Often, the subject first creates all nodes, then connects them with arrows, and typically proceeds with moving elements around to improve the readability of the model, after which they try to identify and solve errors. PT is fixed at the first of this series of ‘adjust’ actions.

-

Type E: Type E includes all models that do not fit any of the previous descriptions. Typically, these subjects do not seem to have a separate revision phase. Instead, they either make corrections throughout the whole modeling process, or they do not adjust the model at all. As a result, no partial model was exported for these observations, and they were left out of part of the analysis (cf. infra).
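In this study, PT was determined manually. The rules for types A to D can, however, be paraphrased as simple index operations on the sequence of operation types. The sketch below is a hypothetical illustration and not the procedure used by the authors. It makes two assumptions. First, the event that triggers each type has already been located: the first correction attempt for type A, the deletion of loose elements for type C, and the end of edge creation for type D. Second, it treats ‘move’ as the only ‘adjust’ operation, because the contents of Table 1 are not reproduced here.

```python
from typing import Optional, Sequence


def pt_last_create_before(kinds: Sequence[str], marker: int) -> Optional[int]:
    """Types A-C: index of the last 'create' operation before position `marker`.

    `marker` is assumed to be the index of the first correction attempt (A) or
    of the first deletion of loose elements (C). For type B, where a long gap
    follows the last 'create', passing len(kinds) returns that last 'create'.
    """
    for i in range(min(marker, len(kinds)) - 1, -1, -1):
        if kinds[i] == "create":
            return i
    return None  # no 'create' operation precedes the marker


def pt_first_adjust_from(kinds: Sequence[str], start: int,
                         adjust_kinds: frozenset = frozenset({"move"})) -> Optional[int]:
    """Type D: index of the first 'adjust' operation at or after `start`.

    `start` is assumed to be the position right after the edges were drawn;
    which operation types count as 'adjust' is also an assumption.
    """
    for i in range(max(start, 0), len(kinds)):
        if kinds[i] in adjust_kinds:
            return i
    return None  # no adjust action found


session = ["create", "create", "move", "create", "delete", "create", "delete"]
print(pt_last_create_before(session, 4))             # 3 (assuming index 4 is the first fix)
print(pt_last_create_before(session, len(session)))  # 5 (type B)
```

The hard part of the identification, deciding where the marker lies, is exactly what the authors resolved by visual inspection. The sketch only covers the mechanical last step.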

Two tools were used for the identification of PT. First, we created two PPMCharts for every observation in the dataset: one version including the ‘move’ actions, the other excluding them. Per model, we jointly analyzed its two charts and visually identified provisional time points to be considered for the choice of PT. Next, the Cheetah Experimental Platform [22] was used to inspect the modeling processes, by reviewing a step-by-step replay of each session. Combining insights offered by both tools, we were able to determine a partial model for 81 of the 121 models originally considered. The other 40 were assumed to lack a separate revision phase (i.e., type E).

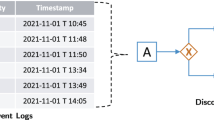

Figure 1 depicts two examples of PPMCharts [2]. On the left is a clear example of a type A model, where the arrow indicates the first ‘delete’ action (red) of a sequence, with only a few ‘create’ actions (green) in between. The chart on the right is an example of a type D model, where the subject first created the nodes (squares) and then the edges (triangles). The arrow indicates the first (very light) blue ‘move’ action of a series.

5 Investigation of the Theorized Trade-off

5.1 Analysis of the Total Modeling Process

For this part of the analysis, all 121 models from the dataset are considered. An initial data inspection revealed a disparity in the size of the models that were created: the number of elements varied between 61 and 120. To avoid biased results (the bigger a process model, the more opportunities the subject has to make mistakes), the data were normalized by expressing the number of errors as a percentage of the number of elements in the final model.
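The normalization divides each error count by the size of the final model. In the minimal sketch below, the function name and the error count of six are hypothetical; 61 and 120 are the extreme model sizes reported above.

```python
def relative_errors(errors: int, final_model_size: int) -> float:
    """Errors expressed as a percentage of the elements in the final model."""
    if final_model_size <= 0:
        raise ValueError("the final model must contain at least one element")
    return 100.0 * errors / final_model_size


# The same six errors weigh more heavily in the smallest model than in the largest.
print(round(relative_errors(6, 61), 2))  # 9.84
print(relative_errors(6, 120))           # 5.0
```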

Figure 2 shows the relationship between the total time it took a subject to create the process model, and the relative number of errors (s)he made/solved during that time. It also displays the relative number of errors remaining at the end of the process, simply defined as ‘errors made’ minus ‘errors solved’. The following correlation coefficients confirm relations that can also be inferred from Fig. 2:

-

‘Total time’ and ‘errors solved’: 0.253* (p = 0.050)

-

‘Total time’ and ‘errors made’: −0.200 (p = 0.820)

-

‘Total time’ and ‘errors remaining’: −0.183* (p = 0.044)

Very similar results are obtained when time is replaced with effort, the number of operations performed by the subject during the process. The coefficients are:

-

‘Total effort’ and ‘errors solved’: 0.349** (p = 0.000)

-

‘Total effort’ and ‘errors made’: −0.003 (p = 0.970)

-

‘Total effort’ and ‘errors remaining’: −0.229* (p = 0.012)

This similarity is not really surprising, since time and effort are positively correlated, with a coefficient of 0.457** (p = 0.000): subjects who perform more actions generally take more time.

Our interpretation of these results is as follows. Making mistakes does not per se take a lot of time, hence the absence of a significant relationship between ‘total time’ and ‘errors made’. Solving errors, on the other hand, is more time-consuming and requires extra effort. Therefore, people who use more time and operations to finish a model typically solve more problems, which is why their final model generally contains fewer syntactic errors. These observations suggest a certain trade-off between the efficiency and effectiveness of process modeling.

5.2 Comparison Between the Model Building and the Revision Phase

From this point on, we look at the modeling process as the combination of two phases: a model building phase, during which the subject creates most of the model, followed by a revision phase, during which the subject takes time to go over the model in search of mistakes. Dropping the 40 cases for which we were not able to identify two separate phases (i.e., type E) leaves 81 models for this part of the analysis.

Figure 3 contains boxplots that compare the distribution of the total modeling time of subjects for whom a revision phase was identified with that of the remaining subjects. Notwithstanding some outliers, subjects with a separate revision phase generally exhibit a longer total modeling time. This underlines once more that detecting and trying to correct errors requires additional time (and effort).

As a result of our definition of both phases, the first phase is characterized by a prevalence of ‘create’ actions, while the second phase mostly contains ‘adjust’ and ‘fix’ operations. In the next part of the analysis, we investigate whether most errors are in fact made in phase 1 and corrected in phase 2. After all, creating elements in the model building phase does not necessarily introduce mistakes, and deleting elements in the revision phase does not necessarily solve mistakes. Moreover, most people also made adjustments during the model building phase.

This time, the number of errors is normalized by the size of the model at the end of the corresponding phase, as well as by the duration of that phase, since in most cases the revision phase is significantly shorter than the model building phase. The result is a variable representing the number of errors made (or solved) per hour, divided by the number of elements in the model (i.e., the normalized ‘errors made’ and ‘errors solved’). The graphs in Fig. 4 depict the difference in the normalized number of errors made/solved between both phases (i.e., ‘errors made/solved’ in phase 2 minus ‘errors made/solved’ in phase 1). A positive value reflects a higher proportion in the revision phase than in the model building phase; a negative value indicates the opposite.
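In symbols introduced here only for illustration, let E_p be the number of errors made (or solved) in phase p, T_p its duration in hours, and N_p the number of model elements at the end of that phase. The normalized value is then E_p / (T_p · N_p), and Fig. 4 plots the phase 2 value minus the phase 1 value. The minimal sketch below uses hypothetical phase figures, and its function names are illustrative.

```python
from typing import Tuple

# A phase is described as (errors, duration in seconds, elements at end of phase).
Phase = Tuple[int, float, int]


def error_rate(errors: int, duration_s: float, elements: int) -> float:
    """Errors per hour, divided by the model size at the end of the phase.

    Raises ZeroDivisionError for a phase of zero duration or an empty model.
    """
    hours = duration_s / 3600.0
    return errors / (hours * elements)


def phase_difference(phase1: Phase, phase2: Phase) -> float:
    """Phase 2 rate minus phase 1 rate; > 0 means relatively more in revision."""
    return error_rate(*phase2) - error_rate(*phase1)


building = (4, 1800.0, 80)  # hypothetical: 4 errors in 30 min, 80 elements
revision = (1, 900.0, 80)   # hypothetical: 1 error in 15 min, 80 elements
print(error_rate(*building), error_rate(*revision))  # 0.1 0.05
print(phase_difference(building, revision))          # -0.05
```

A negative result, as here, corresponds to a subject who made relatively more errors in the model building phase.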

The graphs show that people tend to make relatively more mistakes in the first phase: this is the case for 67 of the 81 subjects in this study. The resolution of errors, on the other hand, is spread more evenly across both phases: 40 subjects solved relatively more errors in the second phase and 38 in the first phase (the remaining 3 did not fix any issues at all). Errors nevertheless seem to be resolved more efficiently in the second phase, since the positive values generally lie further from zero than the negative ones.

Two considerations are in order here:

-

To be able to solve errors, they obviously have to exist in the first place. As a result, the proportion of errors solved will by default be situated more towards the end of the modeling process than the proportion of errors made.

-

Because of the way the emergence of syntactic errors was determined, a counteracting effect could also take place for the ‘errors made’. Since it is sometimes unclear in a partial model whether a certain syntactic violation is deliberate or merely a consequence of the model not being finished yet, syntactic errors were sometimes detected (too) late in the modeling process. As a result, the proportion of ‘errors made’ may have shifted towards the end of the process.

We can also ask what would happen to the syntactic quality of the process models if the revision phase were ignored. In other words: which models generally contain fewer syntactic errors, the partial or the final models? For this purpose, it was examined how the number of errors evolved during the revision phase of each model. The result is shown in Fig. 5.

For 33 subjects, the revision phase did not change the number of errors in the model, often because they neither made nor solved errors at all. In 31 cases, syntactic quality improved during the second phase; in more than half of these cases, three or more errors were solved. The remaining 17 subjects, a noteworthy 21% of the data, created additional errors during this phase.
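These three counts also yield the shares reported in the conclusion. A quick check follows; the category labels are ours.

```python
def shares(counts: dict) -> dict:
    """Percentage of subjects per outcome, rounded to one decimal."""
    total = sum(counts.values())
    return {label: round(100 * n / total, 1) for label, n in counts.items()}


outcomes = {"unchanged": 33, "improved": 31, "deteriorated": 17}
print(sum(outcomes.values()))  # 81
print(shares(outcomes))  # {'unchanged': 40.7, 'improved': 38.3, 'deteriorated': 21.0}
```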

Unfortunately, inspecting PPMCharts and analyzing replays of modeling sessions with a detrimental revision phase did not reveal any clear causes for this deterioration of syntactic quality. Therefore, the 31 subjects with a beneficial revision phase were compared with the 17 subjects who made additional errors. For most variables, no substantial contrast between both groups was found. However, the most notable difference between the groups lies in the number of ‘create’ operations, as shown in Fig. 6.

People who created additional errors during the revision phase generally performed significantly more ‘create’ operations throughout the total modeling process. As a result, they typically also used more effort in general and created a larger process model. A possible explanation is that these subjects created unnecessary elements and thus more complex process models, which caused them to make more errors. More extensive research is required to formulate a substantiated explanation.

6 Conclusion

Using an existing dataset containing data about modeling sessions performed by students, a distinction was made between people who end their modeling process with a separate revision phase and people who do not. Investigating these sessions resulted in findings that – although they are provisional – we believe lay the foundation for interesting future research. First, it was demonstrated that subjects who take more time to create a process model generally produce a model of higher syntactic quality, mostly because the extra time was used to detect and correct mistakes. Second, it was shown that subjects tend to make more errors in the first part of the modeling process than near the end, while the opposite is true for the correction of mistakes. For this dataset, 38% of the subjects made good use of their revision phase, 41% had a revision phase without impact on syntactic quality, and 21% created additional errors. No general reason was found as to why syntactic quality deteriorated at the end of the modeling process for this last group.

As this is an exploratory study, one should be careful when generalizing these findings and should take the following three limitations into consideration. First, the use of an arbitrary dataset containing data generated by students may not be representative of all conceptual modelers. Second, the research is limited by the specific choice of process model quality indicators, thus ignoring the semantic and pragmatic aspects of model quality. Finally, although considerable effort was spent to avoid biases, the subjective nature of the identification of the revision phases may have influenced the results. This research can be extended in multiple ways. Alternative ways to identify a revision phase could be used to verify the results. The definition of model quality can be widened to include semantic and pragmatic quality. Future work should also include an extension towards a more complete specification of the BPMN language or towards other conceptual modeling languages.

References

1. Claes, J., Vanderfeesten, I., Gailly, F., et al.: The Structured Process Modeling Method (SPMM) - what is the best way for me to construct a process model? Decis. Support Syst. 100, 57–76 (2017). https://doi.org/10.1016/j.dss.2017.02.004

2. Claes, J., Vanderfeesten, I., Pinggera, J., et al.: A visual analysis of the process of process modeling. Inf. Syst. E-bus. Manag. 13, 147–190 (2015). https://doi.org/10.1007/s10257-014-0245-4

3. De Oca, I.M.-M., Snoeck, M., Reijers, H.A., et al.: A systematic literature review of studies on business process modeling quality. Inf. Softw. Technol. 58, 187–205 (2015). https://doi.org/10.1016/j.infsof.2014.07.011

4. Davies, I., Green, P., Rosemann, M., et al.: How do practitioners use conceptual modeling in practice? Data Knowl. Eng. 58, 358–380 (2006). https://doi.org/10.1016/j.datak.2005.07.007

5. Soffer, P., Kaner, M., Wand, Y.: Towards understanding the process of process modeling: theoretical and empirical considerations. In: Daniel, F., Barkaoui, K., Dustdar, S. (eds.) BPM 2011, Part I. LNBIP, vol. 99, pp. 357–369. Springer, Heidelberg (2012). https://doi.org/10.1007/978-3-642-28108-2_35

6. Recker, J.: A socio-pragmatic constructionist framework for understanding quality in process modelling. Australas. J. Inf. Syst. 14, 43–63 (2007). https://doi.org/10.3127/ajis.v14i2.23

7. Sánchez-González, L., García, F., Ruiz, F., et al.: Toward a quality framework for business process models. Int. J. Coop. Inf. Syst. 22, 1–15 (2013). https://doi.org/10.1142/S0218843013500032

8. Vanderfeesten, I., Cardoso, J., Mendling, J., et al.: Quality metrics for business process models. In: Fisher, L. (ed.) BPM and Workflow Handbook, pp. 179–190. Future Strategies (2007)

9. Hoppenbrouwers, S.J.B.A., Proper, H.A., van der Weide, T.P.: A fundamental view on the process of conceptual modeling. In: Delcambre, L., Kop, C., Mayr, H.C., Mylopoulos, J., Pastor, O. (eds.) ER 2005. LNCS, vol. 3716, pp. 128–143. Springer, Heidelberg (2005). https://doi.org/10.1007/11568322_9

10. Mendling, J.: Metrics for process models: Empirical foundations of verification, error prediction and guidelines for correctness. LNBIP, vol. 6. Springer, Heidelberg (2008). https://doi.org/10.1007/978-3-540-89224-3

11. Pinggera, J., Zugal, S., Weber, B., et al.: How the structuring of domain knowledge helps casual process modelers. In: Parsons, J., Saeki, M., Shoval, P., Woo, C., Wand, Y. (eds.) ER 2010. LNCS, vol. 6412, pp. 445–451. Springer, Heidelberg (2010). https://doi.org/10.1007/978-3-642-16373-9_33

12. Haisjackl, C., Burattin, A., Soffer, P., et al.: Visualization of the evolution of layout metrics for business process models. In: Dumas, M., Fantinato, M. (eds.) BPM 2016. LNBIP, vol. 281, pp. 449–460. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-58457-7_33

13. Silver, B.: BPMS Watch: Ten tips for effective process modeling. http://www.bpminstitute.org/resources/articles/bpms-watch-ten-tips-effective-process-modeling

14. Laue, R., Mendling, J.: Structuredness and its significance for correctness of process models. Inf. Syst. E-bus. Manag. 8, 287–307 (2010). https://doi.org/10.1007/s10257-009-0120-x

15. Xiao, L., Zheng, L.: Business process design: process comparison and integration. Inf. Syst. Front. 14, 363–374 (2012). https://doi.org/10.1007/s10796-010-9251-3

16. Li, Y., Cao, B., Xu, L., et al.: An efficient recommendation method for improving business process modeling. IEEE Trans. Ind. Inform. 10, 502–513 (2014). https://doi.org/10.1109/TII.2013.2258677

17. Pinggera, J., Soffer, P., Fahland, D., et al.: Styles in business process modeling: an exploration and a model. Softw. Syst. Model. 14, 1055–1080 (2013). https://doi.org/10.1007/s10270-013-0349-1

18. Pinggera, J., Zugal, S., Weidlich, M., et al.: Tracing the process of process modeling with modeling phase diagrams. In: Daniel, F., Barkaoui, K., Dustdar, S. (eds.) BPM 2011, Part I. LNBIP, vol. 99, pp. 370–382. Springer, Heidelberg (2012). https://doi.org/10.1007/978-3-642-28108-2_36

19. Bolle, J., De Bock, J., Claes, J.: Data for Investigating the trade-off between the effectiveness and efficiency of process modeling, Mendeley Data v1 (2018). https://doi.org/10.17632/5b8by4k244.1

20. The Project Management Hut: Devil’s Triangle of Project Management. https://pmhut.com/devils-triangle-of-project-management

21. De Bock, J., Claes, J.: The origin and evolution of syntax errors in simple sequence flow models in BPMN. In: Matulevičius, R., Dijkman, R. (eds.) CAiSE 2018. LNBIP, vol. 316, pp. 155–166. Springer, Cham (2018). https://doi.org/10.1007/978-3-319-92898-2_13

22. Pinggera, J., Zugal, S., Weber, B.: Investigating the process of process modeling with Cheetah Experimental Platform. In: Mutschler, B. et al. (eds.) Proceedings of ER-POIS 2010. CEUR-WS 6, pp. 13–18. CEUR-WS (2010)

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Bolle, J., Claes, J. (2019). Investigating the Trade-off Between the Effectiveness and Efficiency of Process Modeling. In: Daniel, F., Sheng, Q., Motahari, H. (eds) Business Process Management Workshops. BPM 2018. Lecture Notes in Business Information Processing, vol 342. Springer, Cham. https://doi.org/10.1007/978-3-030-11641-5_10

DOI: https://doi.org/10.1007/978-3-030-11641-5_10

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-11640-8

Online ISBN: 978-3-030-11641-5

eBook Packages: Computer Science, Computer Science (R0)