Abstract

- Long-term water planning is increasingly challenging, since hydrologic conditions appear to be changing from the recently observed past and water needs in many locales are high relative to existing and easily developed supplies.
- Globally coordinated policymaking to address climate change also faces large uncertainties regarding underlying conditions and possible responses, making traditional prediction-based planning approaches inadequate for the purpose.
- Methods for Decision Making under Deep Uncertainty (DMDU) can be useful for addressing long-term policy challenges associated with multifaceted, nonlinear, natural and socio-economic systems.
- This chapter presents two case studies using Robust Decision Making (RDM).
- The first describes how RDM was used as part of a seven-state collaboration to identify water management strategies to reduce vulnerabilities in the Colorado River Basin.
- The second illustrates how RDM could be used to develop robust investment strategies for the Green Climate Fund (GCF), an international institution charged with making investments supporting a global transition toward more sustainable energy systems that will reduce GHG emissions.
- Both case studies describe the development of robust strategies that are adaptive, in that they identify both near-term decisions and guidance for how these responses should change or be augmented as the future unfolds.


1 Long-Term Planning for Water Resources and Global Climate Technology Transfer

Developing water resources to meet domestic, agricultural, and industrial needs has been a prerequisite for sustainable civilization for millennia. For most of history, “water managers” have harnessed natural hydrologic systems, which are inherently highly variable, to meet generally increasing but predictable human needs. More recently, water managers have worked to mitigate the consequences of such development on ecological systems. Traditional water resources planning is based on probabilistic methods, in which future water development needs are estimated based on a single projection of future demand and estimates of available supplies that are based on the statistical properties of recorded historical conditions. Different management strategies are then evaluated and ranked, generally in terms of cost effectiveness, for providing service at a specified level of reliability. Other considerations, such as environmental attributes, are also accounted for as necessary (Loucks et al. 1981).

While tremendous achievements have been made in meeting worldwide water needs, water managers are now observing climate change effects through measurable and statistically significant changes in hydrology (Averyt et al. 2013). Moreover, promising new strategies for managing water resources are increasingly based on new technologies (e.g., desalination, demand management controls), the integration of natural or green features with the built system, and market-based solutions (Ngo et al. 2016). These novel approaches in general do not yet have the expansive data to support accurate estimates of their specific effects on water management systems or their costs. The modern water utility thus must strive to meet current needs while preparing for a wide range of plausible future conditions. For most, this means adapting the standard probabilistic engineering methods perfected in the twentieth century to (1) consider hydrologic conditions that are likely changing in unknown ways, and (2) meet societal needs that are no longer increasing at a gradual and predictable pace.

Water utilities have begun to augment traditional long-term planning approaches with DMDU methods that can account for deep uncertainty about future hydrologic conditions, plausible paths of economic development, and technological advances. These efforts, first begun as research collaborations among policy researchers and innovative planners (e.g., Groves et al. 2008, 2013), are now becoming more routine aspects of utility planning. As evidence of this uptake, the Water Utility Climate Alliance (WUCA), comprised of ten large US water utilities, now recommends DMDU methods as best practice to support long-term water resources planning (Raucher and Raucher 2015).

The global climate clearly exerts tremendous influence on the hydrologic systems under management. Over the past decade, the global community has developed a consensus that the climate is changing in response to anthropogenic activities, most significantly the combustion of carbon-based fuels and the release of carbon dioxide and other greenhouse gases into the atmosphere (IPCC 2014a). In 2016, nations around the world adopted the Paris Agreement, with a new consensus that development must be managed in a way that dramatically reduces net greenhouse gas emissions in order to stave off large and potentially disastrous changes to the climate (United Nations 2016). As part of this agreement, the Green Climate Fund (GCF) was established to steer funding toward activities that would encourage the shift from fossil fuels to more sustainable energy technologies. Governments will also need to prepare for climate changes that are unavoidable and help adapt many aspects of civilization (IPCC 2014b).

Climate mitigation and adaptation will require billions of dollars of investments in new ways to provide for society’s needs—be it energy for domestic use, industry, and transportation, or infrastructure to manage rising seas and large flood events. Technological solutions will need to play a large role in helping facilitate the needed transitions. As with water resources planning, governments will need to plan for deep uncertainty, and design and adopt strategies that effectively promote the development and global adoption of technologies for climate mitigation under a wide range of plausible futures.

This chapter presents two case studies that demonstrate how DMDU methods, in particular Robust Decision Making (RDM) (see Chap. 2), can help develop robust long-term strategies. The first case study describes how RDM was used for the 2012 Colorado River Basin Study—a landmark 50-year climate change adaptation study, helping guide the region toward implementing a robust, adaptive management strategy for the Basin (Bloom 2015; Bureau of Reclamation 2012; Groves et al. 2013). This case study highlights one of the most extensive applications of an RDM-facilitated deliberative process to a formal planning study commissioned by the US Bureau of Reclamation and seven US states. The second case study presents a more academic evaluation of how RDM can define the key vulnerabilities of global climate policies and regimes for technology transfer and then identify an adaptive, robust policy that evolves over time in response to global conditions (Molina-Perez 2016). It further illustrates how RDM can facilitate a quantitative analysis that employs simple models yet provides important insights into how to design and implement long-term policies.

2 Review of Robust Decision Making

RDM was the selected methodology for the studies described here, as the decision contexts are fraught with deep uncertainty, are highly complex, and have a rich set of alternative policies (cf. Fig. 1.3 in Chap. 1). For such contexts, an “agree on decisions” approach is particularly useful: by identifying the vulnerabilities of preliminary decisions, it links the analysis of deep uncertainty to the definition of an adaptive strategy, which is characterized by near-term low-regret actions, signposts and triggers, and decision pathways for deferred actions. For both case studies, RDM provides an analytic framework to identify vulnerabilities of current strategies in order to identify robust, adaptive strategies, that is, those that will succeed across the broad range of plausible futures.

The two case studies also illustrate how RDM can be applied in different sectors. The water management study emphasizes that RDM both addresses deep uncertainty and supports an iterative evaluation of robust, adaptive strategies designed to minimize regret across the uncertainties. In this case, it does so by selecting among numerous already existing investment proposals. The second case study describes a policy challenge different from natural resources and infrastructure management (promoting global climate technology transfer and uptake) and uses RDM as the means to discover and define policies anew. Both studies define how the strategies could adapt over time to achieve greater robustness than a static strategy or policy could, using information about the vulnerabilities of the tested strategies. This represents an important convergence between RDM and the Dynamic Adaptive Policy Pathways (DAPP) methodology described in Chaps. 4 and 9.

2.1 Summary of Robust Decision Making

As described in Chap. 2 (Box 2.1), RDM includes four key elements:

- Consider a multiplicity of plausible futures;
- Seek robust, rather than optimal strategies;
- Employ adaptive strategies to achieve robustness; and
- Use computers to facilitate human deliberation over exploration, alternatives, and trade-offs.

Figure 7.1 summarizes the steps to implement the RDM process, and Fig. 7.2 relates these steps to the DMDU framework introduced in Chap. 1. In brief, it begins with (1) a Decision Framing step, in which the key external developments (X), policy levers or strategies (L), relationships (R), and measures of performance (M) are initially defined through a participatory stakeholder process, as will be illustrated in the case studies. Analysts then develop an experimental design, which defines a large set of futures against which to evaluate one or a few initial strategies. Next, the models are used to (2) Evaluate strategies in many futures and develop a large database of simulated outcomes for each strategy across the futures. These results are then inputs to the (3) Vulnerability analysis, in which Scenario Discovery (SD) algorithms and techniques help identify the uncertain conditions that cause the strategies to perform unacceptably. Often in collaboration with stakeholders and decisionmakers, these conditions are refined to describe “Decision-Relevant Scenarios”. The next step of RDM (4) explores the inevitable trade-offs among candidate robust, adaptive strategies. This step is designed to be participatory: the analysis and interactive visualizations provide decisionmakers and stakeholders with information about the range of plausible consequences of different strategies, so that a suitably low-regret, robust strategy can be identified. The vulnerability and trade-off information is used to design more robust and adaptive strategies, and to include additional plausible futures for stress testing in (5) the New futures and strategies step. This can include hedging actions (those that help improve a strategy’s performance under the vulnerable conditions) and shaping actions (those designed to reduce the likelihood of facing the vulnerable conditions). Signposts and triggers are also defined; these are used to reframe static strategies into adaptive strategies that are implemented in response to future conditions. RDM studies will generally implement multiple iterations of the five steps in order to identify a set of candidate robust, adaptive strategies.

Fig. 7.1 RDM process (adapted from Lempert et al. (2013b))

Fig. 7.2 Mapping of RDM to DMDU steps presented in Chap. 1
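The evaluation and vulnerability steps (Steps 2 and 3) can be illustrated with a deliberately small sketch. Everything in it is an assumption made for illustration: the one-line `shortfall` function stands in for a real simulation model, and the ranges of supply and demand and the three strategies are made-up values, not figures from either case study.

```python
import random

def shortfall(extra_yield, future):
    """Toy relationship (R): unmet demand in maf/year; negative means surplus."""
    supply, demand = future
    return demand - (supply + extra_yield)

random.seed(0)
# X: an experimental design of sampled futures, each a (supply, demand) pair.
futures = [(random.uniform(11.0, 16.0), random.uniform(13.0, 16.0))
           for _ in range(500)]
# L: candidate strategies, each reduced to the extra yield it adds (maf/year).
strategies = {"current": 0.0, "moderate": 1.5, "aggressive": 3.0}

# Step 2: evaluate every strategy in every future and store the outcomes.
outcomes = {name: [shortfall(extra, f) for f in futures]
            for name, extra in strategies.items()}

# Step 3: find the futures in which a strategy performs unacceptably (M).
def vulnerable_futures(name, max_shortfall=0.0):
    return [f for f, s in zip(futures, outcomes[name]) if s > max_shortfall]

for name in strategies:
    share = len(vulnerable_futures(name)) / len(futures)
    print(f"{name}: unacceptable in {share:.0%} of futures")
```

In a real study, the list returned by `vulnerable_futures` would be passed to a Scenario Discovery algorithm that describes those futures in terms of a few uncertain conditions.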

While both case studies highlight the key elements above, they also illustrate how RDM supports the design of adaptive strategies. Fischbach et al. (2015) provide a useful summary of how RDM and other DMDU approaches can support the development of adaptive plans, ranging from those that are simply forward looking to those that include plan adjustment.

For example, a static water management strategy might specify a sequence of investments to make over the next several decades. In some futures, specific later investments might be unnecessary. In other futures, the investments may be implemented too slowly or may be insufficient. An adaptive water management strategy, by contrast, might be forward looking or include policy adjustments, consisting of simple decision rules in which investments are made only when the water supply and demand balance is modeled to be below a threshold (see Groves (2006) for an early example). The RDM process can then determine if this adaptive approach improves the performance and reduces the cost of the strategy across the wide range of futures. RDM can also structure the exploration of a very large set of alternatively specified adaptive strategies—those defining different signposts (conditions that are monitored) or triggers (the specific value(s) for the signpost(s) or conditions that trigger investments). Lastly, RDM can explore how a more “formal review and continued learning” approach (Bloom 2015; Swanson et al. 2010) to adaptive strategies might play out by modeling how decisionmakers might update their beliefs about future conditions and adjust decisions accordingly.

In the first case study, the RDM uncertainty exploration (Steps 2 and 3) is used to identify vulnerabilities, and then test different triggers and thresholds defining an adaptive plan. It further traces out different decision pathways that planners could take to revise their understanding of future vulnerabilities and act accordingly. This is done by tying the simulated implementation of different aspects of a strategy to the modeled observation of vulnerabilities. In the second case study, the RDM process identifies low-regret climate technology policy elements, and then defines specific pathways in which the policies are augmented in response to unfolding conditions.

3 Case Study 1: Using RDM to Support Long-Term Water Resources Planning for the Colorado River Basin

The Colorado River is the largest source of water in the southwestern USA, providing water and power to nearly 40 million people and water for the irrigation of more than 4.5 million acres of farmland across seven states and the lands of 22 Native American tribes. It is also vital to Mexico, where it meets both agricultural and municipal needs (Bureau of Reclamation 2012). The management system comprises 12 major dams, including Glen Canyon Dam (Lake Powell, along the Arizona/Utah border) and Hoover Dam (Lake Mead, at the border of Nevada and Arizona). Major infrastructure also transports water from the Colorado River and its tributaries to Colorado’s Eastern Slope, Central Arizona, and Southern California. The 1922 Colorado River Compact apportions 15 million acre-feet (maf) of water per year equally between the four Upper Basin States (Colorado, New Mexico, Utah, and Wyoming) and the three Lower Basin States (Arizona, California, and Nevada) (Bureau of Reclamation 1922). Adherence to the Compact is evaluated by the flow at Lee Ferry, just down-river of the Paria River confluence. A later treaty in 1944 allots 1.5 maf of water per year to Mexico, with up to 200 thousand acre-feet of additional water during surplus conditions. The US Bureau of Reclamation works closely with the seven Basin States and Mexico to manage this resource and collectively resolve disputes.

The reliability of the Colorado River Basin system is increasingly threatened by rising demand and deeply uncertain future supplies. Recent climate research suggests that future hydrologic conditions in the Colorado River Basin could be significantly different from those of the recent historical period. Furthermore, climate warming already underway and expected to continue will exacerbate periods of drought across the Southwest (Christensen and Lettenmaier 2007; Nash and Gleick 1993; Seager et al. 2007; Melillo et al. 2014). Estimates of future streamflow based on paleoclimate records and general circulation models (GCMs) suggest a wide range of future hydrologic conditions, many of which would be significantly drier than recent conditions (Bureau of Reclamation 2012).

In 2010, the seven Basin States and the US Bureau of Reclamation (Reclamation) initiated the Colorado River Basin Study (Basin Study) to evaluate the ability of the Colorado River to meet water delivery and other objectives across a range of futures (Bureau of Reclamation 2012). Concurrent with the initiation of the Basin Study, Reclamation engaged RAND to evaluate how RDM could be used to support long-term water resources planning in the Colorado River Basin. After completion of the evaluation study, Reclamation decided to use RDM to structure the Basin Study’s vulnerability and adaptation analyses (Groves et al. 2013). This model of evaluating DMDU methods through a pilot study and then adopting them to support ongoing planning is a low-threshold way for organizations to begin using DMDU approaches in their planning activities (Lempert et al. 2013a, b).

The Basin Study used RDM to identify the water management vulnerabilities with respect to a range of different objectives, including water supply reliability, hydropower production, ecosystem health, and recreation. Next, it evaluated the performance of a set of portfolios of different management actions that would be triggered as needed in response to evolving conditions. Finally, it defined the key trade-offs between water delivery reliability and the cost of water management actions included in different portfolios, and it identified low-regret options for near-term implementation.

Follow-on work by Bloom (2015) evaluated how RDM could help inform the design of an adaptive management strategy, both in terms of identifying robust adaptation through signposts and triggers and modeling how beliefs and new information can influence the decision to act. Specifically, Bloom used Bayes’ Theorem to extend the use of vulnerabilities to analyze how decisionmaker views of future conditions could evolve. The findings were then incorporated into the specification of a robust, adaptive management strategy.

3.1 Decision Framing for Colorado River Basin Analyses

A simplified XLRM chart (Table 7.1) summarizes the RDM analysis of the Basin Study and follow-on work by Bloom (2015). Both studies evaluated a wide range of future hydrologic conditions, some based on historical and paleoclimate records, others on projections from GCMs. These hydrologic conditions were combined with six demand scenarios and two operation scenarios to define 23,508 unique futures. Both studies used Reclamation’s long-term planning model—Colorado River Simulation System (CRSS)—to evaluate the system under different futures with respect to a large set of measures of performance. CRSS simulates operations at a monthly time-step from 2012 to 2060, modeling the network of rivers, demand nodes, and 12 reservoirs with unique operational rules.
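The size of the experimental design can be checked with simple arithmetic. The sketch below assumes that the futures form a full factorial crossing of hydrologic traces, demand scenarios, and operation scenarios; the text implies this but does not state it. The scenario labels are placeholders, and the number of hydrologic traces is derived from the stated total rather than quoted from the studies.

```python
from itertools import product

TOTAL_FUTURES = 23_508  # stated in the text
demand_scenarios = [f"demand_{i}" for i in range(1, 7)]  # six, placeholder labels
operation_scenarios = ["operations_1", "operations_2"]   # two, placeholder labels

# Under a full factorial design, the number of hydrologic traces is the
# total divided by the number of demand/operation combinations.
combos = len(demand_scenarios) * len(operation_scenarios)
n_hydrology, remainder = divmod(TOTAL_FUTURES, combos)

# Rebuild the design as the Cartesian product of the three dimensions.
futures = list(product(range(n_hydrology), demand_scenarios, operation_scenarios))
print(n_hydrology, remainder, len(futures))  # prints: 1959 0 23508
```

The division leaves no remainder, which is consistent with (though not proof of) a fully crossed design of 1,959 hydrologic traces.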

Both the Basin Study and Bloom focused the vulnerability analysis on two key objectives: (1) ensuring that the 10-year running average of water flow from the Upper to Lower Basin meets or exceeds 7.5 maf per year (Upper Basin reliability objective), and (2) maintaining Lake Mead’s pool elevation above 1,000 feet (Lower Basin reliability objective). Both studies then used CRSS to model how the vulnerabilities could be reduced through the implementation of alternative water management portfolios, each comprised of a range of possible water management options, including municipal and industrial conservation, agricultural conservation, wastewater reuse, and desalination.
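The two reliability objectives can be written as simple checks on simulated time series. This is a minimal sketch with hypothetical function names; it assumes annual flow values in maf and a series of Lake Mead pool elevations in feet, and it does not reproduce how CRSS reports or evaluates these measures.

```python
def upper_basin_ok(annual_flows_maf, window=10, min_avg=7.5):
    """True if every `window`-year running average is at least `min_avg` maf/year."""
    if len(annual_flows_maf) < window:
        raise ValueError("need at least one full averaging window")
    for start in range(len(annual_flows_maf) - window + 1):
        average = sum(annual_flows_maf[start:start + window]) / window
        if average < min_avg:
            return False
    return True

def lower_basin_ok(mead_elevations_ft, floor_ft=1000.0):
    """True if Lake Mead's pool elevation stays above the floor throughout."""
    return all(level > floor_ft for level in mead_elevations_ft)

# A future that starts wet and then dries fails the Upper Basin objective.
print(upper_basin_ok([8.0] * 10 + [7.0] * 10))  # prints: False
print(lower_basin_ok([1080.0, 1045.0, 1010.5]))  # prints: True
```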

3.2 Vulnerabilities of Current Colorado River Basin Management

Both the Basin Study and Bloom’s follow-on work used CRSS to simulate Basin outcomes across thousands of futures. They then used SD methods (see Chap. 2) to define key vulnerabilities. Under the current management of the system, both the Upper Basin and Lower Basin may fail to meet water delivery reliability objectives under many futures within the evaluated plausible range. Specifically, if the long-term average streamflow at Lee Ferry falls below 15 maf and an eight-year drought with average flows below 13 maf occurs, the Lower Basin is vulnerable (Fig. 7.3). The corresponding climate conditions leading to these streamflow conditions include historical periods with less than average precipitation, or those projected climate conditions (which are all at least 2 °F warmer) in which precipitation is less than 105% of the historical average (see Groves et al. 2013). The Basin Study called this vulnerability Low Historical Supply, noting that the conditions for this vulnerability had been observed in the historical record. The Basin Study also identified a vulnerability for the Upper Basin defined by streamflow characteristics present only in future projections characterized by Declining Supply. Bloom further parsed the Basin’s vulnerabilities to distinguish between Declining Supply and Severely Declining Supply conditions.
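The Lower Basin vulnerability described above combines two streamflow conditions, and a short sketch can make the rule concrete. The function name and the example traces are hypothetical; the thresholds (15 maf long-term average, 13 maf average over an eight-year drought) are those given in the text, and flows are assumed to be annual values at Lee Ferry in maf.

```python
def lower_basin_vulnerable(flows_maf, mean_threshold=15.0,
                           drought_years=8, drought_threshold=13.0):
    """Apply the two-part streamflow rule to one trace of annual flows."""
    if len(flows_maf) < drought_years:
        raise ValueError("trace is shorter than the drought window")
    long_term_mean = sum(flows_maf) / len(flows_maf)
    # Average flow of the driest consecutive `drought_years`-year period.
    driest_period = min(
        sum(flows_maf[i:i + drought_years]) / drought_years
        for i in range(len(flows_maf) - drought_years + 1)
    )
    return long_term_mean < mean_threshold and driest_period < drought_threshold

# Illustrative 49-year traces (2012 to 2060), with made-up values.
with_drought = [16.0] * 20 + [12.0] * 8 + [15.0] * 21
steady_low = [14.5] * 49
print(lower_basin_vulnerable(with_drought))  # prints: True
print(lower_basin_vulnerable(steady_low))    # prints: False
```

The second trace has a low long-term average but no sufficiently severe eight-year drought, so it does not meet both conditions.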

3.3 Design and Simulation of Adaptive Strategies

After identifying vulnerabilities, both studies developed and evaluated portfolios of individual management options that would increase the supply available to the Basin states (e.g., ocean and groundwater desalination, wastewater reuse, and watershed management), or reduce demand (e.g., agricultural and urban conservation). For the Basin Study, stakeholders used a Portfolio Development Tool (Groves et al. 2013) to define four portfolios, each including a different set of investment alternatives for implementation. Portfolio B (Reliability Focus) and Portfolio C (Environmental Performance Focus) represented two different approaches to managing future vulnerabilities. Portfolio A (Inclusive) was defined to include all alternatives in either Portfolios B or C, and Portfolio D (Common Options) was defined to include only those options in both Portfolios B and C. Options within a portfolio were prioritized based on an estimate of cost-effectiveness, defined as the average annual yield divided by total project cost.
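The relationships among the four portfolios and the prioritization rule can be sketched with sets and a sort. The option names, yields, costs, and the membership of Portfolios B and C below are invented for illustration; only the set relationships (A as the union of B and C, D as their intersection) and the cost-effectiveness definition come from the text.

```python
# Hypothetical options: (average annual yield in maf, total cost in $ billion).
options = {
    "desalination": (0.6, 6.0),
    "reuse": (0.4, 2.0),
    "municipal_conservation": (0.5, 1.0),
    "agricultural_conservation": (0.8, 2.0),
}

# Hypothetical membership of the two stakeholder-defined portfolios.
portfolio_b = {"desalination", "reuse", "municipal_conservation"}
portfolio_c = {"reuse", "municipal_conservation", "agricultural_conservation"}

portfolio_a = portfolio_b | portfolio_c  # Inclusive: options in either B or C
portfolio_d = portfolio_b & portfolio_c  # Common Options: options in both

def cost_effectiveness(name):
    """Average annual yield divided by total project cost."""
    annual_yield, total_cost = options[name]
    return annual_yield / total_cost

def prioritize(portfolio):
    """Order options from most to least cost-effective."""
    return sorted(portfolio, key=cost_effectiveness, reverse=True)

print(prioritize(portfolio_a))
print(prioritize(portfolio_d))
```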

CRSS modeled these portfolios as adaptive strategies by simulating what investment decisions a basin manager, or agent, would take under different simulated Basin conditions. The agent monitors three key signpost variables on an annual basis: Lake Mead’s pool elevation, Lake Powell’s pool elevation, and the previous five years of observed streamflow. When these conditions drop below predefined thresholds, the agent implements the next available water management action from the portfolio’s prioritized list. To illustrate how this works, Fig. 7.4 shows the effect of the same management strategy with two different trigger values in a single future. Triangles represent years in which observations cross the trigger threshold. In this future, the Aggressive Strategy begins implementing water management actions sooner than the Baseline Strategy, and Lake Mead stays above 1,000 feet. For the Basin Study strategies, a single set of signposts and triggers was used. These strategies were then evaluated across the 23,508 futures. For each simulated future, CRSS defines the unique set of investments that would be implemented per the strategy’s investments, signposts, and triggers. Under different signposts and triggers, different investments are selected for implementation.

Fig. 7.4 Example Colorado River Basin adaptive strategy from Bloom (2015)
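The agent logic can be sketched as a loop over simulated years. This is a simplified illustration, not the CRSS implementation: it monitors only one of the three signposts (Lake Mead's pool elevation), it uses invented trigger values and an invented elevation trace, and it ignores the feedback by which implemented options would raise later reservoir levels.

```python
def run_agent(mead_levels_ft, prioritized_options, trigger_ft):
    """Return {year index: option implemented} for one simulated future."""
    remaining = list(prioritized_options)
    implemented = {}
    for year, level in enumerate(mead_levels_ft):
        # Signpost observation crosses the trigger: take the next option.
        if level < trigger_ft and remaining:
            implemented[year] = remaining.pop(0)
    return implemented

levels = [1100.0, 1080.0, 1070.0, 1060.0, 1045.0, 1040.0]  # made-up trace
options = ["conservation", "reuse", "desalination"]        # already prioritized

baseline = run_agent(levels, options, trigger_ft=1050.0)    # hypothetical trigger
aggressive = run_agent(levels, options, trigger_ft=1075.0)  # hypothetical trigger
print(baseline)    # prints: {4: 'conservation', 5: 'reuse'}
print(aggressive)  # prints: {2: 'conservation', 3: 'reuse', 4: 'desalination'}
```

With the higher trigger, the same portfolio is implemented earlier and more completely in this future, which mirrors the qualitative difference between the Baseline and Aggressive strategies.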

The performance of each strategy is unique in terms of its reliability metric and cost. Figure 7.5 summarizes reliability across the Declining Supply futures, and shows the range of 50-year costs for each of the four adaptive strategies. In the figure, Portfolio A (Inclusive) consists of the widest set of options and thus has the greatest potential to prevent water delivery vulnerability. It risks, however, incurring the highest costs. It also contains actions that are controversial and may be unacceptable for some stakeholders. Portfolio D (Common Options) includes the actions that most stakeholders agree on, a subset of A. This limits costs, but also the potential to reduce vulnerabilities. Under these vulnerable conditions, Portfolios A and B lead to the fewest years with critically low Lake Mead levels. The costs, however, range from $5.5 to $7.5 billion per year. Portfolio C, which includes more environmentally focused alternatives, performs less well in terms of Lake Mead levels, but leads to a tighter range of costs, between $4.75 and $5.25 billion per year. Bloom expanded on this analysis by exploring a range of different investment signposts and triggers, specified to be more or less aggressive in order to represent stakeholders and planners with different preferences for cost and reliability. A less aggressive strategy, for example, would trigger additional investments only under more severe conditions than a more aggressive strategy.

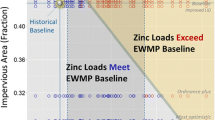

Figure 7.6 shows the trade-offs across two bookend static strategies (“Current Management” and “Implement All Actions”) and five adaptive strategies based on Portfolio A (Inclusive), but with a range of different triggers from Bloom (2015). Note that the signposts and triggers for the Baseline strategy are those used for the Basin Study portfolios. The more aggressive the triggers, the fewer futures in which objectives are missed (left side of figure). However, the range of cost to achieve those outcomes increases.

Fig. 7.6 Vulnerability/cost trade-offs across portfolios with different triggers from Bloom (2015)

3.4 Evaluating Regret of Strategies Across Futures

For this case study, we define a robust strategy as one that minimizes regret across a broad range of plausible future conditions. Regret, in this context, is defined as the additional amount of total annual supply (volume of water, in maf) that would be needed to maintain the Lake Mead level at 1,000 feet across the simulation. The more supply that would be needed to maintain the Lake Mead level, the more regret.

The Basin Study adaptive strategies do not completely eliminate regret. Some strategies still underinvest in the driest futures and overinvest in the wettest futures. This result stems from both the use of relatively simplistic triggers and the inherent inability to predict future conditions based on observations. To illustrate this, Fig. 7.7 shows the percent of futures with low regret as a function of long-term streamflow for two static strategies (“Current Management” and “Implement All Actions”) and two of the adaptive strategies shown in Fig. 7.6 (“Baseline” and “Aggressive”). The two static, non-adaptive strategies have the highest regret in either the high streamflow conditions (“Implement All Actions”) or the low streamflow conditions (“Current Management”). By contrast, the two adaptive strategies are more balanced: the “Baseline” adaptive strategy has moderate regret in the driest conditions, and the “Aggressive” strategy is low regret under wetter conditions. Overall, both adaptive strategies perform similarly.
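A summary of this kind, the share of futures with low regret as a function of long-term streamflow, can be computed by binning futures. The sketch below is only an illustration: the tolerance that separates "low" regret from higher regret, the bin width, and the sample values are all assumptions, since the text does not give them.

```python
from collections import defaultdict

def low_regret_share(futures, tolerance_maf, bin_width_maf=1.0):
    """Share of low-regret futures per streamflow bin.

    `futures` is an iterable of (long-term streamflow, regret) pairs in maf.
    """
    counts = defaultdict(lambda: [0, 0])  # bin start -> [low-regret count, total]
    for flow, regret in futures:
        bin_start = int(flow // bin_width_maf) * bin_width_maf
        counts[bin_start][0] += regret <= tolerance_maf
        counts[bin_start][1] += 1
    return {b: low / total for b, (low, total) in sorted(counts.items())}

# Made-up results for one strategy: it underinvests in one dry future.
sample = [(12.4, 1.2), (12.8, 0.1), (15.3, 0.0), (15.9, 0.05)]
print(low_regret_share(sample, tolerance_maf=0.25))  # prints: {12.0: 0.5, 15.0: 1.0}
```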

3.5 Updating Beliefs About the Future to Guide Adaptation

There is no single robust choice among the automatic adjustment adaptive strategies evaluated by Bloom (2015). Each strategy suggests some tradeoff in robustness for one range of conditions relative to another. Furthermore, strategies with different triggers reflect different levels of risk tolerance with respect to reliability and costs. Therefore, a planner’s view of the best choice will be shaped by her preference for the varying risks and perceptions about future conditions. An adaptive strategy based on formal review and continued learning would evolve not only in response to some predefined triggers but also as decisionmakers’ beliefs about future conditions evolve over time. Bloom models this through Bayesian updating (see Lindley (1972) for a review of Bayes’ Theorem).

Figure 7.8 shows how a planner with specific expectations about the likelihood of future conditions (priors) might react to new information about future conditions. The top panel (line) shows one plausible time series of decadal average streamflow measured at Lees Ferry. The bars show the running average flow from 2012 through the end of the specified decade. In this future, flows are around the long-term historical average through 2020 and then decline over the subsequent decades. The long-term average declines accordingly to about 12 maf/year—conditions that Bloom classifies as part of the Severely Declining Supply vulnerability.

The bottom panel shows how a decisionmaker might update her assessment of the type of future unfolding. In this example, her priors in 2012 were a 50% chance on the Declining Supply conditions, a 25% chance for the Severely Declining Supply conditions, and a 25% chance for all other futures. As low flows are observed over time, her beliefs adjust accordingly. While they do not adjust by 2040 all the way to the true state—Severely Declining Supply—her assessment of not being in the Declining Supply or Severely Declining Supply condition goes to zero percent. Another planner with higher priors for the Severely Declining Supply would have a higher posterior assessment of the Severely Declining Supply condition by 2040. Planners’ priors can be based on the best scientific evidence available at the time and their own understanding of the policy challenge. Because climate change is deeply uncertain, planners may be unsure about their priors, and even a single planner may wish to understand the implications of a range of prior beliefs.
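The updating process can be sketched with Bayes' Theorem applied decade by decade. The priors below are those of the example planner in the text. Everything else is an assumption made for illustration and is not taken from Bloom (2015): each type of future is given a normal likelihood for the decadal average flow, with invented means and a common standard deviation, and the observed flows are invented.

```python
import math

def normal_pdf(x, mean, sd):
    return math.exp(-0.5 * ((x - mean) / sd) ** 2) / (sd * math.sqrt(2 * math.pi))

def update(beliefs, observed_flow, scenario_means, sd=1.0):
    """One application of Bayes' Theorem: posterior is prior times likelihood, normalized."""
    unnormalized = {
        scenario: prior * normal_pdf(observed_flow, scenario_means[scenario], sd)
        for scenario, prior in beliefs.items()
    }
    total = sum(unnormalized.values())
    return {scenario: value / total for scenario, value in unnormalized.items()}

# Priors in 2012, as in the text.
beliefs = {"Declining Supply": 0.50, "Severely Declining Supply": 0.25, "Other": 0.25}
# Hypothetical mean decadal flow (maf/year) implied by each type of future.
scenario_means = {"Declining Supply": 13.5, "Severely Declining Supply": 12.0, "Other": 15.0}

# Hypothetical decadal average flows: near the historical average, then declining.
for observed in [15.0, 13.0, 12.0, 12.0]:
    beliefs = update(beliefs, observed, scenario_means)

for scenario, probability in beliefs.items():
    print(f"{scenario}: {probability:.3f}")
```

Under these assumptions, the belief in "Other" futures falls to nearly zero after the low-flow decades, while the belief in Severely Declining Supply rises only gradually, which is qualitatively similar to the behavior described above. A planner with a higher prior on Severely Declining Supply would end with a higher posterior for it.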

3.6 Robust Adaptive Strategies, and Implementation Pathways

Bloom then combined the key findings from the vulnerability analysis and the Bayesian analysis to describe a robust adaptive strategy in the form of a management strategy implementation pathway. Specifically, Bloom identified threshold values of beliefs and observations that would imply sufficient confidence that a particular vulnerability would be likely and that a corresponding management response should be taken. He then used these thresholds to suggest a rule-of-thumb guide for interpreting new information and implementing a robust adaptive strategy.

Figure 7.9 shows one example application of this information to devise a Basin-wide robust adaptive strategy that guides the investment of additional water supply yield (or demand reductions). In the figure, the horizontal axis shows each decade, and the vertical axis shows different identified vulnerabilities (ordered from less severe to more severe). Each box presents the 90th percentile of yield implemented for a low-regret strategy across all futures within the vulnerability. This information serves as recommendations for the amount of additional water supply or demand reduction that would need to be developed by each decade to meet the Basin’s goals.

Fig. 7.9 Illustrative robust adaptive strategy for Colorado River from Bloom (2015)

Figure 7.9 can be read as a decision matrix, where the dashed lines present one of many feasible implementation pathways through time. The example pathways show how Basin managers would develop new supply if the basin were on a trajectory consistent with the Below Historical Streamflow With Severe Drought vulnerability—similar to current Colorado River Basin conditions. By 2030, the Basin managers would have implemented options to increase net supply by 2.1 maf/year. The figure then highlights a 2030 decision point. At this point, conditions may suggest that the Basin is not trending as dry as Below Historical Streamflow with Severe Drought. Conditions may also suggest that system status is trending even worse. The horizontal barbell charts in the bottom of the figure show a set of conditions and beliefs derived from the Bayesian analysis that are consistent with the Severely Declining Supply scenario. If these conditions are met and beliefs held, then the Basin would need to move down a row in the figure and increase net supply to more than 3.6 maf in the 2031–2040 decade. While such a strategy would likely not be implemented automatically, the analysis presented here demonstrates the conditions to be monitored and the level of new supply that would require formal review, and the range of actions that would need to be considered at such a point.

3.7 Need for Transformative Solutions

The Basin Study analysis evaluated four adaptive strategies and explored the trade-offs among them. Based on those findings, the Basin has moved forward on developing the least-regret options included in those portfolios (Bureau of Reclamation 2015). The follow-on work by Bloom (2015) concurrently demonstrated how the Basin could take the analysis further and develop a robust adaptive strategy to guide investments over the coming decade. One important finding from both works is that there are many plausible futures in which the water management strategies considered in the Basin Study would not be sufficient to ensure acceptable outcomes. Figure 7.10 shows the optimal strategy for different probabilities of facing a Severely Declining Supply scenario (horizontal axis), Stationary or Increasing Supply (vertical axis), or all other conditions (implied third dimension). In general, if the Severely Declining Supply scenario is 30% likely, then the options evaluated in the Basin Study will likely be insufficient, and more transformative options, such as pricing, markets, and new technologies, will be needed. Additional iterations of the RDM analysis could help the Basin evaluate such options.
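To make the idea of an optimal strategy over a probability space concrete, the sketch below picks the strategy with the lowest expected regret for a given pair of probabilities. The text does not state which criterion underlies Figure 7.10, so the expected-regret rule is an assumption, and the strategy names and regret values are hypothetical.

```python
def best_strategy(regret, p_declining, p_stationary):
    """Return the strategy with minimum expected regret.

    The third probability (all other conditions) is implied by the first two.
    """
    p_other = 1.0 - p_declining - p_stationary
    if min(p_declining, p_stationary, p_other) < -1e-12:
        raise ValueError("probabilities must be non-negative and sum to at most 1")
    weights = {"declining": p_declining, "stationary": p_stationary, "other": p_other}
    expected = {
        name: sum(weights[s] * by_scenario[s] for s in weights)
        for name, by_scenario in regret.items()
    }
    return min(expected, key=expected.get)

# Hypothetical regret of each strategy in each class of futures.
regret = {
    "current options": {"declining": 10.0, "stationary": 0.0, "other": 1.0},
    "transformative options": {"declining": 1.0, "stationary": 4.0, "other": 3.0},
}

print(best_strategy(regret, p_declining=0.0, p_stationary=1.0))
print(best_strategy(regret, p_declining=0.5, p_stationary=0.0))
```

Sweeping `p_declining` and `p_stationary` over a grid and coloring each point by the returned strategy would yield a map of the same general form as the figure.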

4 Case Study 2: Using RDM to Develop Climate Mitigation Technology Diffusion Policies

The historic 2016 Paris Climate Accord had two main policy objectives (United Nations 2016): (1) maintain global temperature increases below two degrees Celsius, and (2) stabilize greenhouse gas (GHG) emissions by the end of this century. The international community also realizes that meeting these aspirations will require significant and coordinated investment to develop and disseminate technologies to decarbonize the world’s economies. Climate policies that achieve the Paris goals will likely include mechanisms to incentivize the use of renewables and energy efficiency through carbon taxes or trading schemes, as well as investments in the development and transfer to developing countries of new low-carbon technologies. These investments could take the form of price subsidies for technology or direct subsidies for research and development (R&D).

The Green Climate Fund (GCF) was designated to coordinate the global investments needed to help countries transition from fossil energy technologies (FETs) toward sustainable energy technologies (SETs). In this context, SETs include all technologies used for primary energy production that do not result in greenhouse gas emissions. Examples include photovoltaic solar panels, solar thermal energy systems, wind turbines, marine current turbines, tidal power technologies, nuclear energy technologies, geothermal energy technologies, hydropower technologies, and low-GHG-intensity biomass (see Note 2).

Technology-based climate mitigation policy is complex because of the disparity in social and technological conditions of advanced and emerging nations, the sensitivity of technological pathways to policy intervention, and the wide set of policies available for intervention. This policy context is also fraught with deep uncertainty. On the one hand, the speed and extent of future climate change remains highly uncertain. On the other hand, it is impossible to anticipate the pace and scope of future technological development. Paradoxically, these highly uncertain properties are critical to determine the strength, duration, and structure of international climate change mitigation policies and investments.

The presence of both climate and technological deep uncertainty makes it inappropriate to use standard probabilistic planning methods in this context, and instead calls for the use of DMDU methods for long-term policy design. This case study summarizes a study by Molina-Perez (2016), the first comprehensive demonstration of how RDM methods could be used to inform global climate technology policymaking. The case study shows how RDM can be used to identify robust adaptive policies (or strategies) for promoting international decarbonization despite the challenges posed by the inherent complexity and uncertainty associated with climate change and technological change. Specifically, the study helps illuminate under what conditions the GCF’s investments and climate policy can successfully enable the international diffusion of SETs and meet the two objectives of the Paris Accord.

4.1 Decision Framing for Climate Technology Policy Analysis

To summarize the analysis, Table 7.2 shows a simplified XLRM chart from the Decision Framing step. The uncertain, exogenous factors include twelve scenarios for the evolution of the climate system based on endogenously determined global GHG emission levels (“Climate Uncertainty”), and seven factors governing the development and transfer of technologies (“Technological Factors”). The study considered seven different policies in addition to a no-additional-action baseline (“Policy Levers”). The policies include a mixture of carbon taxes and subsidies for technology, research, and development. The first two policies consider no cooperation between regions, whereas the third through seventh policies consider some level of cooperation on taxes and subsidies. An integrated assessment model is used to estimate global outcome indicators (or measures of performance), including end-of-century temperatures, GHG emissions, and economic costs of the policies.

4.2 Modeling International Technological Change

Molina-Perez (2016) used an integrated assessment model specifically developed to support Exploratory Modeling and the evaluation of dynamic complexity. This Exploratory Dynamic Integrated Assessment Model (EDIAM) focuses on describing the processes of technological change across advanced and emerging nations, and how this process connects with economic growth and climate change.

The EDIAM framework depends upon three main mechanisms. The first mechanism determines the volume of R&D investments that are captured by SETs or FETs (based on principles presented in Acemoglu (2002) and Acemoglu et al. (2012)). Five forces determine the direction of R&D:

-

The “direct productivity effect”—incentivizing research in the sector with the more advanced and productive technologies

-

The “price effect”—incentivizing research in the energy sector with the higher energy prices

-

The “market size effect”—pushing R&D toward the sector with the highest market size

-

The “experience effect”—pushing innovative activity toward the sector that more rapidly reduces technological production costs

-

The “innovation propensity effect”—incentivizing R&D in the sector that more rapidly yields new technologies

The remaining two mechanisms describe how technologies evolve in each of the two economic regions (advanced and emerging). In the advanced region, entrepreneurs use existing technologies to develop new technologies, an incremental pattern of change commonly defined in the literature as “building on the shoulders of giants” (Acemoglu 2002; Arthur 2009). In the emerging region, innovative activity is focused on closing the technological gap through imitation and adaptation of foreign technologies. Across both regions, the speed and scope of development is determined by three distinct technological properties across the FET and SET sectors: R&D returns, innovation propensity, and innovation transferability. Finally, EDIAM uses the combination of the three mechanisms to endogenously determine the optimal policy response for mitigating climate change (optimal given all of the assumptions), thus making the system highly path-dependent.

4.3 Evaluating Policies Across a Wide Range of Plausible Futures

Molina-Perez used EDIAM to explore how different policies could shape technology development and transfer, and how they would reduce GHG emissions and mitigate climate change across a range of plausible futures. The study evaluated seven alternative policies across a diverse ensemble of futures that capture the complex interactions across the fossil and sustainable energy technologies. The ensemble is derived using a mixed experimental design of a full factorial sampling of 12 climate model responses (scenarios) and a 300-element Latin Hypercube sample across the seven technological factors shown in Table 7.2.
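The mixed experimental design described above can be sketched as follows: a Latin Hypercube sample over the seven technological factors is crossed with every one of the 12 climate scenarios. This is a minimal illustration using only the standard library; the scenario labels are placeholders, the sample is left on the unit hypercube because the factor ranges from Table 7.2 are not reproduced here, and the sampler is a generic one rather than the tool used in the study.

```python
import random
from itertools import product

def latin_hypercube(n_samples, n_dims, rng):
    """Return n_samples points in the unit hypercube, one per stratum in every dimension."""
    columns = []
    for _ in range(n_dims):
        # One random point inside each of n_samples equal-width strata, then shuffle
        # so that strata are paired at random across dimensions.
        column = [(i + rng.random()) / n_samples for i in range(n_samples)]
        rng.shuffle(column)
        columns.append(column)
    return [tuple(col[i] for col in columns) for i in range(n_samples)]

rng = random.Random(0)  # fixed seed so the design is reproducible
climate_scenarios = [f"climate_{k}" for k in range(1, 13)]  # placeholder labels
tech_samples = latin_hypercube(300, 7, rng)

# Full factorial crossing: every climate scenario paired with every technology sample.
futures = list(product(climate_scenarios, tech_samples))
print(len(futures))
```

Under these assumptions the ensemble contains 12 × 300 = 3,600 futures, and each policy would be simulated once per future.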

Figure 7.11 presents simulation results for the optimal policy for just three of these futures in which R&D returns are varied and all other factors are held constant. The left pane shows the global temperature anomaly over time for the best policy for three values of the relative R&D returns parameter. Only in two of the three cases is the optimal policy able to keep global temperature increases below 2 °C through 2100. The right pane shows the evolution of technological productivity for the advanced and emerging regions. As expected, higher relative R&D returns can lead to parity in technology productivity under the optimal policies and favorable exogenous conditions.

Molina-Perez also considered the role of climate uncertainty in determining the structure and effectiveness of the optimal policy response. For this study, the climate parameters of EDIAM were calibrated using the CMIP5 climate ensemble (IPCC 2014b; Taylor et al. 2012; Working Group on Coupled Modeling 2015). Each general circulation model used by the IPCC and included in the CMIP5 ensemble uses different assumptions and parameter values to describe the atmospheric changes resulting from growing anthropogenic GHG emissions and, as a result, the magnitude of the estimated changes varies greatly among different modeling groups. For example, Molina-Perez shows that for a more abrupt climate scenario, such as MIROC-ESM-CHEM, it is possible that the optimal policy would use a higher mix of carbon taxes, research subsidies, and technology subsidies than in the case of a less abrupt climate scenario (e.g., NorESM1-M).

4.4 Key Vulnerabilities of Climate Technology Policies

Following the RDM methodology, Molina-Perez used Scenario Discovery (SD) methods to isolate the key uncertainties that drive poor policy performance. Specifically, he expanded on prior SD methods (such as PRIM) by using high-dimensional stacking methods (Suzuki et al. 2006; Taylor et al. 2006; LeBlanc et al. 1990) to summarize the vulnerabilities.

In SD with dimensional stacking, the process starts by decomposing each uncertainty dimension into basic categorical levels. These transformed uncertainty dimensions are then combined into “scenario cells” that represent the basic elements of the uncertainty space. These scenario cells are then combined iteratively with other cells, yielding a final map of the uncertainty space. Following the SD approach, and in a similar way to Taylor et al. (2006), coverage and density statistics are estimated for each scenario cell such that only high-density and high-coverage cells are visualized. Finally, for each axis in the scenario map, the stacking order is determined using principal component analysis (i.e., the loadings of each principal component determine which dimensions can be stacked together). Uncertainty dimensions that can be associated with a principal component are stacked in contiguous montages.
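The first steps of this procedure (binning each uncertainty, forming scenario cells, and scoring each cell) can be sketched as below. The sketch uses the usual Scenario Discovery definitions: density is the share of futures in a cell that are of interest, and coverage is the share of all futures of interest that fall in the cell. The cut points, field names, and futures are hypothetical, and the principal-component ordering of the axes is not shown.

```python
from collections import defaultdict

def to_level(value, cut_points, labels):
    """Map a continuous value to a categorical level using ascending cut points."""
    for cut, label in zip(cut_points, labels):
        if value < cut:
            return label
    return labels[-1]

def scenario_cells(futures, binning):
    """Group futures into cells keyed by the tuple of levels of each binned dimension."""
    cells = defaultdict(list)
    for future in futures:
        key = tuple(
            to_level(future[dim], cuts, labels) for dim, (cuts, labels) in binning.items()
        )
        cells[key].append(future)
    return cells

def cell_statistics(cells, is_of_interest):
    """Compute density and coverage for every scenario cell."""
    total = sum(is_of_interest(f) for members in cells.values() for f in members)
    stats = {}
    for key, members in cells.items():
        hits = sum(is_of_interest(f) for f in members)
        stats[key] = {
            "density": hits / len(members),
            "coverage": hits / total if total else 0.0,
        }
    return stats

# Hypothetical binning and futures; "fails" marks futures that miss the target.
binning = {
    "beta": ((4.0, 5.0), ("low", "medium", "high")),
    "rd": ((1.0,), ("low", "high")),
}
futures = [
    {"beta": 3.0, "rd": 0.8, "fails": True},
    {"beta": 3.5, "rd": 0.9, "fails": False},
    {"beta": 3.0, "rd": 1.5, "fails": False},
    {"beta": 5.5, "rd": 0.7, "fails": True},
    {"beta": 5.5, "rd": 1.2, "fails": True},
]
stats = cell_statistics(scenario_cells(futures, binning), lambda f: f["fails"])
```

Filtering `stats` for cells whose density and coverage exceed chosen thresholds gives the set of cells that would be displayed in the stacked map.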

Figure 7.12, for example, shows the vulnerabilities with respect to the two-degree temperature target identified using high-dimensional stacking for the second policy (P2), which includes independent carbon taxes, and subsidies for technology and R&D. The first horizontal montage describes three climate sensitivity bins (low, medium, and high); the second-order montage describes two relative innovation propensity bins (high and low); and the third-order montage describes two relative transferability bins (high and low). The first vertical montage describes three substitution levels (high, medium, and low); and the second-order montage describes two R&D returns bins (high and low). The cells are shaded according to the relative number of futures in which the policy meets the temperature target. The figure shows that policy P2 is more effective than the others in meeting the target in the low- and medium-sensitivity climate futures. Evaluations of the vulnerabilities for the other policies show that each policy regime works better under a different area of the uncertainty space. For instance, P7, which includes GCF funding for technology and research and development, meets the temperature objective in futures with low elasticity of substitution.

The evaluations of vulnerabilities for all seven policies suggest that the implementation of an international carbon tax (homogeneous or not) can induce international decarbonization, but under many scenarios it fails to do this at a rate that meets the Paris objectives. As also shown in previous studies, the elasticity of substitution between SETs and FETs is a key factor determining the success (with respect to the Paris objectives) of the optimal policy response. However, this study also shows that technological and climate uncertainties are equally influential. In particular, the technological progress of SETs relative to FETs can affect the structure and effect of optimal environmental regulation to the same degree as climate uncertainty.

4.5 Developing a Robust Adaptive Climate Technology Policy

Molina-Perez used a two-step process to identify a robust adaptive climate policy. In the first step, he identified the policies with the least regret that also do not exceed a 10% cost threshold across all the uncertainties (see Note 3). Policy regret was defined as the difference in consumer welfare (see Note 4) between a specific policy and the best policy for a given future. Figure 7.13 depicts the lowest-regret policy that meets the cost threshold for the same uncertainty bins used to characterize the vulnerabilities. The label and the color legend indicate the corresponding least-regret policy for each scenario cell. The dark cells refer to the GCF-based policies (P3-P7), while the light cells denote the independent policy architectures (P1 and P2). Cells with no shading represent futures for which no policy has costs below the 10% cost threshold.
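The first step can be illustrated for a single future. In the sketch below, regret is the welfare gap to the best policy in that future, and only policies at or below the cost threshold are eligible. Two things are assumptions rather than details from the study: cost is expressed as a fraction so that it can be compared with 0.10, and the best policy is taken over all policies, not only the affordable ones. The welfare and cost numbers are hypothetical.

```python
def least_regret_policy(outcomes, cost_threshold=0.10):
    """Return (chosen policy or None, regret by policy) for one future.

    outcomes maps a policy name to (consumer welfare, cost as a fraction).
    """
    best_welfare = max(welfare for welfare, _ in outcomes.values())
    regret = {policy: best_welfare - welfare for policy, (welfare, _) in outcomes.items()}
    affordable = [policy for policy, (_, cost) in outcomes.items() if cost <= cost_threshold]
    if not affordable:
        return None, regret  # corresponds to an unshaded cell
    return min(affordable, key=regret.get), regret

# Hypothetical outcomes for two futures.
future_a = {"P1": (100.0, 0.02), "P2": (110.0, 0.08), "P7": (120.0, 0.15)}
future_b = {"P1": (90.0, 0.12), "P2": (95.0, 0.20)}

choice_a, regret_a = least_regret_policy(future_a)
choice_b, regret_b = least_regret_policy(future_b)
print(choice_a, regret_a)
print(choice_b, regret_b)
```

Applying the same selection to every future in a scenario cell, and then reporting the most common choice per cell, is one plausible way to arrive at a map like the one described.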

These results show that the elasticity of substitution of the technological sectors and climate sensitivity are the key drivers of this vulnerability type. However, the results also highlight the important role of some of the technological uncertainty dimensions. For instance, the figure shows that for the majority of scenario cells with low relative transferability, it is not possible to meet cost and climate targets, regardless of the other uncertainty dimensions. This exemplifies the importance that the pace of development of SETs in the emerging region has for reducing the costs of the best environmental regulation. Similarly, the R&D returns of SETs are also shown to be an important driver of vulnerability.

As with the Colorado River Basin case study, no single policy is shown to be robust across all futures. Therefore, in the second step, Molina-Perez defined transition rules to inform adaptive policies for achieving global decarbonization at a low cost. To do so, he traced commonalities among the least-regret policy identified in each of the scenario cells described in Fig. 7.13. This identifies the combination of uncertainties that signal a move from one policy option to another. A simple recursive algorithm is implemented to describe moves from P1 (independent carbon tax) to successively more comprehensive policies, including significant GCF investments. The algorithm proceeds by: (1) listing valid moves toward each individual scenario cell from external scenario cells (i.e., pathways), (2) listing the uncertainty conditions for each valid move (i.e., uncertainty bins), (3) searching for common uncertainty conditions across these moves, and (4) identifying transition nodes for the different pathways initially identified.
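Step (3) of the algorithm, the search for common uncertainty conditions, can be illustrated in isolation. The sketch below does not reproduce the recursive algorithm from the study; it only intersects the uncertainty bins of all scenario cells that share the same least-regret policy. The cell-to-policy assignments are hypothetical.

```python
def common_conditions(cell_policy):
    """For each policy, return the (dimension, level) pairs shared by every cell it wins."""
    shared = {}
    for cell, policy in cell_policy.items():
        conditions = set(cell)
        if policy in shared:
            shared[policy] &= conditions  # keep only conditions seen in all cells so far
        else:
            shared[policy] = conditions
    return shared

# Hypothetical map from scenario cell to least-regret policy.
cell_policy = {
    (("climate", "low"), ("rd_returns", "high"), ("transferability", "high")): "P2",
    (("climate", "medium"), ("rd_returns", "high"), ("transferability", "high")): "P2",
    (("climate", "low"), ("rd_returns", "high"), ("transferability", "low")): "P7",
}
rules = common_conditions(cell_policy)
for policy, conditions in sorted(rules.items()):
    print(policy, sorted(conditions))
```

In this toy example the conditions common to the P2 cells are high R&D returns and high transferability, which is the kind of shared condition that could mark a transition node.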

Figure 7.14 shows a set of pathways that represents how the global community might move from one policy to another in response to the changing conditions that were identified in the vulnerability analysis. In these pathways, independent carbon taxation in P1 is a first step, because this policy directs the economic agents’ efforts toward the sustainable energy sector, and because this policy is also necessary to fund the complementary technology policy programs in both regions (a result from EDIAM’s modeling framework). In subsequent phases, different pathways are triggered depending on the unfolding climate and technological conditions. For example, if climate sensitivity is extremely high (β > 5, abrupt climate change), then it would be necessary to implement environmental regulations that are above the 10% cost threshold. In comparison, if climate sensitivity is low (β < 4, slow climate change), then the least-regret policy depends on SETs’ R&D relative returns (γset/γfet). If SETs’ R&D returns are below FETs’ R&D returns (γset/γfet < 1, more progress in FETs), then only high-cost policies would be available. On the contrary, if SETs outperform the R&D returns of FETs, two policy alternatives are available: If SETs’ transferability is higher than FETs’ transferability (νset/νfet > 1, more rapid uptake of SETs in emerging nations), then cooperation is not needed, and the individual comprehensive policy (P2) would meet the policy objectives at a low cost. In the opposite case (νset/νfet < 1, more rapid uptake of FETs in emerging nations), some cooperation through the GCF (P7) would be required to meet the climate objectives below the 10% cost threshold. Note that these pathways consider only the low elasticity of substitution futures. Other pathways are suitable for higher elasticity of substitution futures.
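The transition rules in this paragraph can be written as a small decision function. The sketch below encodes only the thresholds stated in the text for the low elasticity of substitution futures; the function name, argument names, and return strings are illustrative, and combinations the text does not cover (for example, β between 4 and 5, or ratios exactly equal to 1) are reported as unspecified rather than guessed.

```python
def next_policy(beta, rd_ratio, transfer_ratio):
    """Suggest the step after P1 for low elasticity of substitution futures.

    beta: climate sensitivity parameter
    rd_ratio: SET R&D returns relative to FET R&D returns
    transfer_ratio: SET transferability relative to FET transferability
    """
    if beta > 5:
        return "regulation above the 10% cost threshold"
    if beta < 4:
        if rd_ratio < 1:
            return "only high-cost policies available"
        if rd_ratio > 1 and transfer_ratio > 1:
            return "P2"  # independent comprehensive policy, no cooperation needed
        if rd_ratio > 1 and transfer_ratio < 1:
            return "P7"  # some cooperation through the GCF
    return "not specified in the text"

print(next_policy(beta=3.0, rd_ratio=1.2, transfer_ratio=1.5))
print(next_policy(beta=3.0, rd_ratio=1.2, transfer_ratio=0.8))
print(next_policy(beta=5.5, rd_ratio=1.2, transfer_ratio=1.5))
```

Writing the rules this way makes the monitored quantities explicit: the climate sensitivity, the relative R&D returns, and the relative transferability are the signposts that trigger a move along a pathway.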

These pathways illustrate clearly that optimal environmental regulation in the context of climate change mitigation is not a static concept but rather a dynamic one that must adapt to changing climate and technological conditions. This analysis also provides a means to identify and evaluate appropriate policies for this dynamic environment. The analysis shows that different cooperation regimes under the GCF are best suited for different combinations of climate and technological conditions, such that it is possible to combine these different architectures into a dynamic framework for technological cooperation.

5 Reflections

The complexity and analytic requirements of RDM have been seen by many as an impediment to more widespread adoption. While there have been many applications of RDM that use simple models or are modest in the extent of exploration, the first case study on Colorado River Basin planning certainly was not simple. In fact, this application is notable because it was both extremely analytically intensive, requiring two computer clusters many months to perform all the needed simulations, and it was integrated fully into a multi-month stakeholder process.

As computing becomes more powerful and flexible through the use of high-performance computing facilities and the cloud, however, near real-time support of planning processes using RDM-style analytics becomes more tenable. To explore this possibility, RAND and Lawrence Livermore National Laboratory held a workshop in late 2014 with some of the same Colorado River Basin planners, in which a high-performance computer was used to perform over lunch the equivalent of the simulations and analyses that were done for the Basin Study over the course of an entire summer (Groves et al. 2016). Other more recent studies have also begun to use cloud computing to support the large ensemble analyses that RDM studies often require (Cervigni et al. 2015; Kalra et al. 2015; World Bank Group 2016). Connecting this near-simultaneous analytic power to interactive visualizations has the potential to transform planning processes.

These two case studies also highlight how the iterative, exploratory nature of RDM can support the development of adaptive strategies—an obvious necessity for identifying truly robust strategies in water management, international technology policymaking, and many other domains. A simple recipe might describe the approach presented here in the following way:

1. Define low-regret options for an exhaustive evaluation of automatically adjusting strategies across a wide range of futures;

2. Identify the vulnerabilities for a strategy that implements just the low-regret options;

3. Define and evaluate different signposts and triggers (which could include decisionmaker beliefs about evolving conditions) for making the needed policy adjustments if vulnerabilities appear probable; and

4. Present visualizations of pathways through the decision space to stakeholders and decisionmakers.

This approach to designing adaptive strategies represents a step toward a convergence of two DMDU methods presented in this book—RDM (Chap. 2) and Dynamic Adaptive Policy Pathways (Chap. 4). Continued merging of DMDU techniques for exploration of uncertainties and policies, and techniques and visualizations for defining policy pathways, could yield additional benefit.

Notes

1. Chapter 1 (see Fig. 1.2) uses the acronym XPROW for external forces, policies, relationships, outcomes, and weights.

2. Note that the SET group of technologies does not include carbon removal and sequestration technologies from power plant emissions, such as carbon capture and storage (CCS).

3. Note that the 10% threshold is arbitrarily chosen, and could be the basis for additional exploratory analysis.

4. The utility function of the representative consumers considers as inputs both the quality of the environment (inverse function of temperature rise) and consumption (proxy for economic performance).

References

Acemoglu, D. (2002). Directed technical change. The Review of Economic Studies, 69(4), 781–809.

Acemoglu, D., Aghion, P., Bursztyn, L., & Hemous, D. (2012). The Environment and directed technical change. American Economic Review, 102(1), 131–166.

Arthur, W. B. (2009). The Nature of Technology: What It Is and How It Evolves. Penguin UK.

Averyt, K., Meldrum, J., Caldwell, P., Sun, G., McNulty, S., Huber-Lee, A., et al. (2013). Sectoral contributions to surface water stress in the coterminous United States. Environmental Research Letters, 8, 35046.

Bloom, E. (2015). Changing midstream: providing decision support for adaptive strategies using Robust Decision Making: applications in the Colorado River Basin. Pardee RAND Graduate School. Retrieved July 13, 2018 from http://www.rand.org/pubs/rgs_dissertations/RGSD348.html.

Bureau of Reclamation. (1922). Colorado River Compact. Retrieved July 11, 2018 from https://www.usbr.gov/lc/region/g1000/pdfiles/crcompct.pdf.

Bureau of Reclamation. (2012). Colorado River Basin water supply and demand study: study report United States Bureau of Reclamation (Ed.). Retrieved July 11, 2018 from http://www.usbr.gov/lc/region/programs/crbstudy/finalreport/studyrpt.html.

Bureau of Reclamation. (2015). Colorado River Basin stakeholders moving forward to address challenges identified in the Colorado River Basin study–phase 1 report. Retrieved July 11, 2018 from https://www.usbr.gov/lc/region/programs/crbstudy/MovingForward/Phase1Report.html.

Cervigni, R., Liden, R., Neumann, J. E., & Strzepek, K. M. (2015). Enhancing the climate resilience of Africa’s infrastructure: the power and water sectors. World Bank, Washington, D.C. https://doi.org/10.1596/978-1-4648-0466-3.

Christensen, N. S., & Lettenmaier, D. P. (2007). A multimodel ensemble approach to assessment of climate change impacts on the hydrology and water resources of the Colorado River Basin. Hydrology and Earth System Sciences, 11, 1417–1434.

Fischbach, J. R., Lempert, R. J., Molina-Perez, E., Tariq, A. A., Finucane, M. L., Hoss, F. (2015). Managing water quality in the face of uncertainty. RAND Corporation, Santa Monica, CA. Retrieved March 8, 2018 from https://www.rand.org/pubs/research_reports/RR720.html.

Groves, D. G. (2006). New methods for identifying robust long-term water resources management strategies for California. Pardee RAND Graduate School, Santa Monica, CA. Retrieved July 08, 2018 from http://www.rand.org/pubs/rgs_dissertations/RGSD196.

Groves, D. G., Bloom, E., Johnson, D. R., Yates, D., Mehta, V. K. (2013). Addressing climate change in local water agency plans. RAND Corporation. Retrieved July 01, 2018 from http://www.rand.org/pubs/research_reports/RR491.html.

Groves, D. G., Davis, M., Wilkinson, R., & Lempert, R. J. (2008). Planning for climate change in the Inland Empire. Water Resources IMPACT, 10, 14–17.

Groves, D. G., Fischbach, J. R., Bloom, E., Knopman, D., & Keefe, R. (2013). Adapting to a changing Colorado River. RAND Corporation, Santa Monica, CA. Retrieved July 02, 2018 from http://www.rand.org/pubs/research_reports/RR242.html.

Groves, D. G., Lempert, R. J., May, D. W., Leek, J. R., & Syme, J. (2016). Using high-performance computing to support water resource planning: a workshop demonstration of real-time analytic facilitation for the Colorado River Basin. Santa Monica, CA. https://www.rand.org/pubs/conf_proceedings/CF339.html.

IPCC (2014a). Climate Change 2014: synthesis report. Contribution of working groups I, II and III to the fifth assessment report of the intergovernmental panel on climate change core writing team. In R. K. Pachauri & L. A. Meyer (Eds.). IPCC, Geneva, Switzerland.

IPCC (2014b). Impacts, adaptation, and vulnerability. part b: regional aspects. contribution of working group ii to the fifth assessment report of the intergovernmental panel on climate change. Barros, V. R., Field, C. B., Dokken, D. J., Mastrandrea, M. D., Mach, K. J., Bilir, T. E., Chatterjee, M., Ebi, K. L., Estrada, Y. O., Genova, R. C., Girma, B., Kissel, E. S., Levy, A. N., MacCracken, S., Mastrandrea, P. R. & White, L. L. (Eds.). Cambridge University Press, Cambridge UK, New York, NY.

Kalra, N. R., Groves, D. G., Bonzanigo, L, Perez, E. M., Ramos Taipe, C. L., Cabanillas, I. R., et al. (2015). Robust Decision-Making in the water sector: a strategy for implementing Lima’s long-term water resources master plan. Retrieved July 03, 2018 from https://openknowledge.worldbank.org/handle/10986/22861.

LeBlanc, J., Ward, M. O., & Wittels, N. (1990). Exploring n-dimensional databases. In Proceedings of the 1st conference on Visualization ’90 (pp. 230–237).

Lempert, R. J., Popper, S. W., Groves, D. G., Kalra, N., Fischbach, J. R., Bankes, S. C., et al. (2013b). Making Good Decisions Without Predictions. RAND Corporation. Retrieved July 01, 2018 from http://www.rand.org/pubs/research_briefs/RB9701.html.

Lempert, R. J., Kalra, N., Peyraud, S., Mao, Z., Tan, S. B., Cira, D., & Lotsch, A. (2013). Ensuring robust flood risk management in Ho Chi Minh City. Retrieved July 01, 2018 from http://www.rand.org/pubs/external_publications/EP50282.html.

Lindley, D. V. (1972). Bayesian statistics, a review. Society for industrial and applied mathematics (Vol. 2) https://doi.org/10.1137/1.9781611970654.

Loucks, D. P., Stedinger, J. R., Haith, D. A. (1981). Water resource systems planning and analysis. Prentice-Hall.

Melillo, J. M., Richmond, T. T., Yohe, G. W. (2014). Climate change impacts in the United States: the third national climate assessment, Vol. 52. https://doi.org/10.7930/j0z31wj2.

Molina-Perez, E. (2016). Directed international technological change and climate policy: new methods for identifying robust policies under conditions of deep uncertainty. Pardee RAND Graduate School. Retrieved July 02, 2018 from http://www.rand.org/pubs/rgs_dissertations/RGSD369.html.

Nash, L. L., & Gleick, P. H. (1993). The Colorado River Basin and climate change: the sensitivity of streamflow and water supply to variations in temperature and precipitation. Washington, D.C.: United States Environmental Protection Agency.

Ngo, H. H., Guo, W., Surampalli, R. Y., Zhang, T. C. (2016). Green technologies for sustainable water management. American Society of Civil Engineers, Reston, VA. https://doi.org/10.1061/9780784414422.

Raucher, K., & Raucher, R. (2015). Embracing uncertainty: a case study examination of how climate change is shifting water utility planning. Retrieved July 08, 2018 from https://www.csuohio.edu/urban/sites/csuohio.edu.urban/files/Climate_Change_and_Water_Utility_Planning.pdf.

Retrieved March 8, 2018 from https://openknowledge.worldbank.org/handle/10986/21875.

Seager, R., Mingfang, T., Held, I., Kushnir, V., Jian, L., Vecchi, G., et al. (2007). Model projections of an imminent transition to a more arid climate in Southwestern North America. Science, 316(5825), 1181–1184.

Suzuki, S., Stern, D., & Manzocchi, T. (2006). Using association rule mining and high-dimensional visualization to explore the impact of geological features on dynamic flow behavior. SPE annual technical conference and exhibition.

Swanson, D., Barg, S., Tyler, S., Venema, H., Tomar, S., Bhadwal, S., et al. (2010). Seven tools for creating adaptive policies. Technological Forecasting and Social Change, 77, 924–939.

Taylor, A. L., Hickey, T. J., Prinz, A. A., & Marder, E. (2006). Structure and visualization of high-dimensional conductance spaces. Journal of Neurophysiology, 96(2), 891–905.

Taylor, K. E., Stouffer, R. J., & Meehl, G. A. (2012). An overview of CMIP5 and the experiment design. Bulletin of the American Meteorological Society, 93(4), 485–498.

United Nations (2016). Paris Agreement. Paris: United Nations, pp.1-27.

Working Group on Coupled Modelling. (2015). Coupled Model Intercomparison Project 5 (CMIP5), edited by Programme, World Climate Research. Retrieved March 8, 2018 from https://pcmdi9.llnl.gov/projects/cmip5/.

World Bank Group (2016). Lesotho water security and climate change assessment. World Bank, Washington, DC. Retrieved July 10, 2018 from https://openknowledge.worldbank.org/handle/10986/24905.

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2019 The Author(s)

About this chapter

Cite this chapter

Groves, D.G., Molina-Perez, E., Bloom, E., Fischbach, J.R. (2019). Robust Decision Making (RDM): Application to Water Planning and Climate Policy. In: Marchau, V., Walker, W., Bloemen, P., Popper, S. (eds) Decision Making under Deep Uncertainty. Springer, Cham. https://doi.org/10.1007/978-3-030-05252-2_7

DOI: https://doi.org/10.1007/978-3-030-05252-2_7

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-05251-5

Online ISBN: 978-3-030-05252-2

eBook Packages: Business and Management, Business and Management (R0)