Abstract

Discriminant Locality Alignment (DLA) has been successfully applied to handwritten character recognition. In this paper, a new manifold-based subspace learning algorithm, called Two-dimensional Discriminant Locality Alignment (2DDLA), is proposed for online handwriting Tibetan character recognition (OHTCR). The proposed algorithm integrates the idea of DLA with two-dimensional feature extraction. First, the direction feature matrix and the edge feature matrix of each Tibetan character are extracted and combined to form the original feature matrix. Second, in the part optimization stage, a local patch is built for each character sample from the sample and its neighbors, and an objective function is designed to preserve local discriminant information. Third, in the whole alignment stage, the alignment trick is used to align all part optimizations into the whole optimization. The projection matrix is obtained by solving a standard eigen-decomposition problem. Finally, an SMQDF classifier is used for training and recognition. Experimental results demonstrate that 2DDLA is superior to LDA and IMLDA in terms of recognition accuracy. In addition, 2DDLA can overcome the matrix singularity problem and the small sample size problem in OHTCR.

Keywords

- Online handwriting recognition

- Tibetan character recognition

- Two-dimensional discriminant locality alignment (2DDLA)

- Subspace learning

1 Introduction

With the acceleration of Tibetan information processing, the demand for Tibetan character recognition systems is becoming more and more prominent. At present, handwriting Tibetan character recognition (HTCR) has made great progress in both research and practical application [1,2,3,4,5,6]. However, the recognition of Tibetan characters differs from handwriting recognition for other languages: it poses a special challenge due to complex character structure, a wide variety of writing styles, a large character set, and many highly similar characters. Figure 1 illustrates some samples of handwritten Tibetan characters. Unconstrained online HTCR remains an open problem, because reaching a high recognition rate is still challenging.

As shown in Fig. 1, many Tibetan characters are almost identical to other characters except for a small difference. However, this small difference can be lost during the feature extraction process, so the extraction of discriminative information is crucial for improving recognition performance. In OHTCR, dimensionality reduction is the process of transforming data from a high-dimensional space to a low-dimensional space to reveal the intrinsic structure of the data distribution. It plays a crucial role in computer vision and pattern recognition as a way of dealing with the “curse of dimensionality”. In past decades, a large number of dimensionality reduction algorithms have been proposed and studied. Among them, principal component analysis (PCA) [7] and Fisher’s linear discriminant analysis (LDA) [8] are two of the most popular linear dimensionality reduction algorithms.

PCA maximizes the mutual information between the original high-dimensional Gaussian-distributed data and the projected low-dimensional data. PCA is optimal for reconstructing Gaussian-distributed data, but it is not optimal for classification problems. LDA overcomes this shortcoming by utilizing class label information. It finds the projection directions that simultaneously maximize the trace of the between-class scatter matrix and minimize the trace of the within-class scatter matrix. However, LDA is only a suboptimal model that suffers from the class separation problem. The objective of LDA can be formulated as maximizing the sum of all pairwise distances between different classes, which overemphasizes the large distances between already well-separated classes and can confuse classes that are close in the original feature space. Li and Yuan [9] proposed a feature extraction method using two-dimensional linear discriminant analysis (2DLDA), which extracts discriminant features directly from the matrix without a vectorization procedure. It has a great advantage over the one-dimensional method in computation and processing efficiency.

Zhang et al. [10] proposed a local linear dimensionality reduction algorithm called discriminative locality alignment (DLA). DLA takes into account the locality of samples, deals with the nonlinearity of the sample distribution, and preserves the discriminability of classes as well. However, the DLA algorithm is based on the vector space, so the data must be vectorized during calculation, which destroys the spatial distribution characteristics and structure information of the data. Motivated by the stability and effectiveness of DLA in recognition performance, in this paper we combine the idea of DLA with two-dimensional feature extraction and propose a two-dimensional discriminative locality alignment (2DDLA) algorithm to improve the recognition performance in OHTCR.

The rest of the paper is organized as follows. Section 2 introduces the two-dimensional discriminant locality alignment (2DDLA) algorithm for extracting discriminative features for OHTCR and details its basic formulation. Section 3 introduces the SMQDF classifier. Section 4 presents experiments that show the effectiveness of the proposed method, and Sect. 5 gives concluding remarks.

2 Two-Dimensional Discriminative Locality Alignment

2DDLA aims to extract discriminative information from patches. To achieve this goal, one patch is first built for each sample. Each patch includes a sample, its nearest within-class samples, and its nearest between-class samples. Then an objective function is designed to preserve the local discriminative information of each patch. Finally, all the part optimizations are integrated to form a global coordinate according to the alignment trick. The projection matrix is obtained by solving a standard eigen-decomposition problem.

2.1 Part Optimization

Suppose we have a set of samples X = [X1, X2, ···, XN] from C different classes, with \( X_{i} \in R^{m \times n} \). For a given sample Xi, we select its k1 nearest neighbors from the samples of the same class as Xi and call them the same-class neighbors: \( X_{{i^{1} }} , \cdots ,X_{{i^{{k_{1} }} }} \). We also select its k2 nearest neighbors from the samples of classes different from that of Xi and call them the different-class neighbors: \( X_{{i_{1} }} , \cdots ,X_{{i_{{k_{2} }} }} \). Putting them together, we build the local patch for Xi as \( \varPi_{i} = [X_{i} ,X_{{i^{1} }} , \cdots ,X_{{i^{{k_{1} }} }} ,X_{{i_{1} }} , \cdots ,X_{{i_{{k_{2} }} }} ] \). For each patch, the corresponding output in the low-dimensional space is denoted by \( \varGamma_{i} = [Y_{i} ,Y_{{i^{1} }} , \cdots ,Y_{{i^{{k_{1} }} }} ,Y_{{i_{1} }} , \cdots ,Y_{{i_{{k_{2} }} }} ] \).
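The patch construction described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function name `build_patch`, the storage of samples as an array of shape (N, m, n), and the use of the Frobenius distance for the neighbor search are assumptions made for the example.

```python
import numpy as np

def build_patch(X, labels, i, k1, k2):
    """Return the patch index set [i, k1 same-class neighbours, k2 different-class neighbours].

    X      : array of shape (N, m, n), one feature matrix per sample (assumed layout)
    labels : array of shape (N,), class label of each sample
    """
    # Frobenius distance from sample i to every sample (assumed neighbour metric)
    dists = np.linalg.norm(X - X[i], axis=(1, 2))

    same = np.flatnonzero(labels == labels[i])
    same = same[same != i]                      # exclude the sample itself
    diff = np.flatnonzero(labels != labels[i])

    within = same[np.argsort(dists[same])[:k1]]   # k1 nearest same-class samples
    between = diff[np.argsort(dists[diff])[:k2]]  # k2 nearest different-class samples
    return np.concatenate(([i], within, between))
```

The returned index order matches the order of the columns in the patch: the sample first, then its same-class neighbors, then its different-class neighbors.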

In the low-dimensional space, we expect that distances between the given sample and its within-class samples are as small as possible, while distances between the given sample and its between-class samples are as large as possible. So we have

where ||·||F denotes the Frobenius norm for a matrix.

Since the patch formed by the local neighborhood can be regarded as approximately linear, we formulate the part discriminator by a linear manipulation as follows:

where β is a scaling factor (β∈[0,1]). The coefficient vector is defined as:
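The displayed formulas of this subsection are not reproduced in this text. As a hedged reconstruction, assuming the part optimization follows the vector-based DLA of [10] with the Euclidean norm replaced by the Frobenius norm, the within-class and between-class objectives would read

\[ \arg \min_{Y_{i}} \sum_{j = 1}^{k_{1}} \left\| Y_{i} - Y_{i^{j}} \right\|_{F}^{2} , \qquad \arg \max_{Y_{i}} \sum_{p = 1}^{k_{2}} \left\| Y_{i} - Y_{i_{p}} \right\|_{F}^{2} , \]

the combined part discriminator would be

\[ \arg \min_{Y_{i}} \left( \sum_{j = 1}^{k_{1}} \left\| Y_{i} - Y_{i^{j}} \right\|_{F}^{2} - \beta \sum_{p = 1}^{k_{2}} \left\| Y_{i} - Y_{i_{p}} \right\|_{F}^{2} \right) , \]

and the coefficient vector would collect the weights of the k1 + k2 neighbors:

\[ \omega_{i} = [ \underbrace{1, \cdots ,1}_{k_{1}} , \underbrace{ - \beta , \cdots , - \beta }_{k_{2}} ]^{T} . \]

The symbol \( \omega_{i} \) and the summation indices j and p are assumptions taken from the usual DLA notation. The equation numbers of the original are deliberately not assigned here.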

Then Eq. (3) reduces to:

where tr (·) denotes the trace operator, the operator ⊗ denotes the Kronecker product of matrix, \( F_{i} = \{ i,i^{1} , \cdots ,i^{{k_{1} }} ,i_{1} , \cdots ,i_{{k_{2} }} \} \) is the index set for the ith patch, \( e_{{k_{1} + k_{2} }} = [1, \cdots 1]^{T} \in R^{{k_{1} + k_{2} }} \), \( I_{{k_{1} + k_{2} }} \) is a (k1 + k2) × (k1 + k2) identity matrix, \( R = [- e_{{k_{1} + k_{2} }} ,I_{{k_{1} + k_{2} }} ]^{T} \), \( L = \left[ {\begin{array}{*{20}c} { - e_{{k_{1} + k_{2} }}^{T} } \\ {I_{{k_{1} + k_{2} }} } \\ \end{array} } \right] \), and

2.2 Whole Alignment

After the part optimization step, we unify the optimizations together as a whole one by assuming that the coordinate for the i’th patch \( \varGamma_{i} = [Y_{i} ,Y_{{i^{1} }} , \cdots ,Y_{{i^{{k_{1} }} }} ,Y_{{i_{1} }} , \cdots ,Y_{{i_{{k_{2} }} }} ] \) is selected from the global coordinate \( \varGamma = [Y_{1} ,Y_{2} , \cdots ,Y_{N} ] \), such that \( \varGamma_{i} = \varGamma S_{i} \), where \( S_{i} \in R^{{(N \times n) \times ((k_{1} + k_{2} + 1) \times n)}} \) is the selection matrix and an entry is defined as follows:

Then Eq. (7) can be rewritten as

By summing over all the part optimizations described as Eq. (8), we can obtain the whole alignment as

where \( L = \sum\limits_{i = 1}^{N} {S_{i} T_{i} S_{i}^{T} \in R^{N \times N} } \) is the alignment matrix.

To obtain the linear and orthogonal projection matrix W such that \( Y = W^{T} X \), Eq. (9) is transformed as follows:

The transformation matrix W that minimizes the objective function is given by the minimum eigenvalue solution to the standard eigenvalue problem,
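A minimal sketch of how such a projection could be computed is given below. It assumes that each part objective is the weighted sum of squared Frobenius distances between a sample and its patch neighbors (weight 1 for same-class neighbors, −β for different-class neighbors) and that \( Y = W^{T} X \). Under these assumptions, \( \| W^{T} D \|_{F}^{2} = tr(W^{T} D D^{T} W) \), so the sketch accumulates an m × m matrix directly instead of building selection matrices and a Kronecker-structured alignment matrix. The function name `fit_2ddla` and the neighbor search are illustrative, not the authors' implementation.

```python
import numpy as np

def fit_2ddla(X, labels, k1, k2, beta, d):
    """Sketch of a 2DDLA-style projection.

    X : (N, m, n) feature matrices, labels : (N,) class labels.
    Returns W of shape (m, d) with orthonormal columns, so that Y = W.T @ X[i].
    """
    N, m, _ = X.shape
    M = np.zeros((m, m))
    for i in range(N):
        # Patch building: nearest same-class and different-class samples
        dists = np.linalg.norm(X - X[i], axis=(1, 2))
        same = np.flatnonzero(labels == labels[i])
        same = same[same != i]
        diff = np.flatnonzero(labels != labels[i])
        within = same[np.argsort(dists[same])[:k1]]
        between = diff[np.argsort(dists[diff])[:k2]]

        # Coefficients: +1 for same-class, -beta for different-class neighbours
        w = np.concatenate((np.ones(len(within)), -beta * np.ones(len(between))))
        D = X[i] - X[np.concatenate((within, between))]   # (k1 + k2, m, n)

        # Accumulate sum_p w_p * D_p @ D_p.T
        M += np.einsum('p,pab,pcb->ac', w, D, D)

    # eigh returns eigenvalues in ascending order; keep the d smallest
    _, evecs = np.linalg.eigh((M + M.T) / 2)
    return evecs[:, :d]
```

With the dimensions used later in the experiments (60 × 12 feature matrices and d = 12), `W.T @ X[i]` would have shape 12 × 12.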

3 SMQDF

3.1 MQDF

The discriminant function of the MQDF classifier [11] is formulated as

where Y is the input feature vector, m is the number of rows of the feature matrix, μj denotes the mean vector of class ωj, and \( \lambda_{i}^{(j)} \) and \( \zeta_{i}^{(j)} \) denote the ith largest eigenvalue and the corresponding eigenvector of the covariance matrix of class ωj, respectively. k is the number of dominant principal eigenvectors kept in MQDF, and λ is a parameter set experimentally.

The classification result is obtained with the following criterion: if \( f(Y,\omega_{i} ) = \mathop { \hbox{min} }\limits_{1 \le j \le C} f(Y,\omega_{j} ) \) (C is the number of classes), then the input pattern Y is assigned to class ωi.
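The minimum-distance rule above can be sketched in code. Since the displayed formula is not reproduced in this text, the score below assumes the commonly used MQDF2 form associated with [11], in which the minor eigenvalues are replaced by a constant. The names `mqdf2_score`, `classify`, and `lam` are illustrative, and the exact function used in this paper may differ.

```python
import numpy as np

def mqdf2_score(y, mean, eigvals, eigvecs, k, lam):
    """Assumed MQDF2-style distance of vector y to one class (smaller is better).

    eigvals : eigenvalues of the class covariance, sorted in descending order
    eigvecs : matching eigenvectors as columns
    k       : number of dominant eigenvectors kept
    lam     : constant replacing the minor eigenvalues
    """
    diff = y - mean
    proj = eigvecs[:, :k].T @ diff            # projections on the k dominant axes
    residual = diff @ diff - proj @ proj      # energy outside the dominant subspace
    dim = y.shape[0]
    return (np.sum(proj ** 2 / eigvals[:k]) + residual / lam
            + np.sum(np.log(eigvals[:k])) + (dim - k) * np.log(lam))

def classify(y, class_params, k, lam):
    """Return the index of the class with the minimum discriminant value."""
    scores = [mqdf2_score(y, mu, ev, vec, k, lam) for mu, ev, vec in class_params]
    return int(np.argmin(scores))
```

Here `class_params` is a list with one `(mean, eigvals, eigvecs)` tuple per class, estimated from the training samples of that class.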

3.2 SMQDF

The MQDF classifier is widely used in character recognition. However, it applies only to feature vectors and is not appropriate for feature matrices. For this reason, the SMQDF (second modified quadratic discriminant function) classifier is derived by improving the MQDF classifier; its discriminant function is shown in formula (13). We take it as the baseline classifier.

where Y is the feature matrix and m is a positive integer. In classification, Y is assigned to the class whose f(Y,ωi) is minimum. To compensate for the estimation error of parameters on limited training samples, the minor eigenvalues are replaced with a constant λ. It can be set to a class-independent constant or a class-dependent constant. Here we set λ to be class-independent for its superior performance. λ is computed by

4 Experiment Results

4.1 Experiment Data

We evaluated the recognition performance of 2DDLA on a database of handwritten Tibetan characters collected by our group, which contains handwriting samples of 7240 characters with 5000 samples per class [12]. To reduce the computing cost, we selected only 562 frequently used characters, with 2000 samples per class for training and 500 samples per class for testing.

For character image pre-processing and feature extraction, we adopt the same methods as in [6]. Each character image is normalized to 48 × 96 pixels, and the directional features and edge features are extracted. The resulting 60 × 12 feature matrix is projected onto a 12 × 12 subspace learned by 2DDLA, and the baseline classifier SMQDF is then built on this 12 × 12 subspace.

4.2 Choice of Parameters for 2DDLA

Since the parameter setup of 2DDLA is essential for its performance, we carried out parameter optimization experiments for 2DDLA before applying it to OHTCR. The 2DDLA model has three parameters, k1, k2 and β, where k1 is the number of samples from the same class in the given patch, k2 is the number of samples from other classes in the same patch, and β is the scale parameter. To find a proper range for k1, k2 and β, we investigate the effects of the three model parameters on the recognition rate in the validation phase on our collected database.

Suppose n is the number of training samples in each class (n = 2000), N is the total number of training samples (N = 562 × 2000 = 1124000), and C is the number of classes (C = 562). Then k1 and k2 can be chosen in the ranges [1, n−1] and [0, N−n], respectively. Therefore, 1 ≤ k1 ≤ 1999, 0 ≤ k2 ≤ 1122000, and 0 ≤ β ≤ 1.

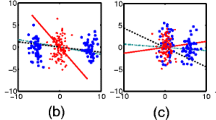

To evaluate the effects of the three model parameters, we first analyze the effect of the scale parameter β by fixing the patch-building parameters k1 and k2 to arbitrary values. For a given pair of parameters k1 and k2, we obtain the recognition rate curve with respect to β, as shown in Fig. 1. Based on the figures, we observe that the best recognition rates are obtained when β is neither too small nor too large.

Secondly, we analyze the effects of the patch-building parameters k1 and k2 by fixing the scale parameter β = 0.1. When k1 and k2 are varied simultaneously, the best recognition rate and the corresponding β can be obtained. Table 1 gives the details of the best recognition rates. Figure 2 shows that the best recognition rate is obtained with k1 = 50 and k2 = 300 in this experiment (Fig. 3).

Recognition rate with respect to β for each given pair of parameters k1 and k2. (a) k1 = 32, k2 = 100, (b) k1 = 32, k2 = 200, (c) k1 = 32, k2 = 300, (d) k1 = 50, k2 = 100, (e) k1 = 50, k2 = 200, (f) k1 = 50, k2 = 300, (g) k1 = 80, k2 = 100, (h) k1 = 80, k2 = 200, (i) k1 = 80, k2 = 300, (j) k1 = 100, k2 = 300, (k) k1 = 100, k2 = 500, (l) k1 = 100, k2 = 800, (m) k1 = 100, k2 = 990, (n) k1 = 300, k2 = 500, (o) k1 = 300, k2 = 800, (p) k1 = 300, k2 = 990, (q) k1 = 500, k2 = 800, (r) k1 = 500, k2 = 1000, (s) k1 = 500, k2 = 1200, (t) k1 = 500, k2 = 1500, (u) k1 = 800, k2 = 1500, (v) k1 = 800, k2 = 2500, (w) k1 = 800, k2 = 3800, (x) k1 = 1000, k2 = 300

Table 1 shows that the best combinations of k1, k2, and β are k1 = 50, k2 = 300, β = 0.1 and k1 = 100, k2 = 300, β = 0.3, with a corresponding accuracy of 99.38%. Considering the computing cost, we use k1 = 50, k2 = 300, and β = 0.1 in the following experiments.

4.3 Evaluation Experiments

To evaluate the performance of 2DDLA in OHTCR, we compare 2DDLA, LDA, IMLDA [13] and 2DLDA in terms of recognition rate with the SMQDF classifier [6]. The experimental results are summarized in Table 2. We can see that the proposed method obtains higher top-1 and top-10 recognition rates than the other methods.
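For reference, top-1 and top-10 recognition rates of this kind can be computed from classifier outputs as sketched below. This is an illustrative helper, not the authors' evaluation code: the name `top_k_rate` and the layout of `scores` are assumptions, and smaller discriminant values are taken to be better, in line with the minimum rule of Sect. 3.

```python
import numpy as np

def top_k_rate(scores, true_labels, k):
    """Fraction of samples whose true class is among the k best-scoring classes.

    scores      : (num_samples, num_classes) discriminant values, smaller = better
    true_labels : (num_samples,) integer class indices
    """
    ranked = np.argsort(scores, axis=1)[:, :k]             # k best classes per sample
    hits = (ranked == np.asarray(true_labels)[:, None]).any(axis=1)
    return hits.mean()
```

Calling `top_k_rate(scores, labels, 1)` and `top_k_rate(scores, labels, 10)` on the test-set scores would give the two rates.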

Table 2 shows that the recognition rates of 2DDLA are significantly higher than those of IMLDA and 2DLDA. It also shows that the discriminative information extraction performance of 2DDLA is very competitive in OHTCR.

To illustrate the effect of 2DDLA, Fig. 4 shows some samples that are misrecognized by IMLDA and 2DLDA but correctly recognized by 2DDLA.

5 Conclusion

In this paper, we present a new method called two-dimensional discriminative locality alignment (2DDLA). Compared with IMLDA and 2DLDA, the proposed method achieves a higher recognition rate. It inherits all the advantages of DLA and can overcome the matrix singularity problem and the small sample size problem in OHTCR.

References

1. Wang, W., Ding, X., Qi, K.: Study on similar character in Tibetan character recognition. J. Chin. Inf. Process. 16(4), 60–65 (2002)

2. Wang, H., Ding, X.: Multi-font printed Tibetan character recognition. J. Chin. Inf. Process. 17(6), 47–52 (2003)

3. Wang, W., Qian, J., Duojie, Z., Ma, M., Qi, K., Duo, L., et al.: A method of online handwritten Tibetan characters recognition. State Intellectual Property Office of the Peoples Republic of China (2011). ZL200910128595.8

4. Huang, H., Da, F., Hang, X.: Wavelet transform and gradient direction based feature extraction method for offline handwritten Tibetan letter recognition. J. Southeast Univ. 30(1), 27–31 (2014)

5. Ma, L.L., Wu, J.: A Tibetan component representation learning method for online handwritten Tibetan character recognition. In: Proceedings of the 14th ICFHR, pp. 317–322 (2014)

6. Wang, W., Qian, J., Wang, D., Duojie, Z.: Online handwriting recognition of Tibetan characters based on the statistical method. J. Commun. Comput. 8(2011), 188–200 (2011)

7. Jolliffe, I.T.: Principal Component Analysis. Springer, New York (1986). https://doi.org/10.1007/978-1-4757-1904-8

8. Fisher, R.A.: The use of multiple measurements in taxonomic problems. Ann. Eugen. 7, 179–188 (1936)

9. Li, M., Yuan, B.: 2D-LDA: a statistical linear discriminant analysis for image matrix. Pattern Recognit. Lett. 26(5), 527–532 (2005)

10. Zhang, T., Tao, D., Li, X., et al.: Patch alignment for dimensionality reduction. IEEE Trans. Knowl. Data Eng. 10(2), 433–439 (2009)

11. Kimura, F., Takashina, K., Tsuruoka, S., Miyake, Y.: Modified quadratic discriminant functions and its application to Chinese character recognition. IEEE Trans. Pattern Anal. Mach. Intell. 9(1), 149–153 (1987)

12. Wang, W., Lu, X., Cai, Z., Shen, W., Fu, J., Caike, Z.: Online handwritten sample generated based on component combination for Tibetan-Sanskrit. J. Chin. Inf. Process. 31(5), 64–73 (2017)

13. Qian, J., Wang, J.: A novel approach for online handwriting recognition of Tibetan characters. In: Proceedings of IMECS 2010, pp. 1–4 (2010)

Acknowledgment

This work was supported by the Fundamental Research Funds for the Central Universities of Northwest Minzu University (No. 31920170142), the Program for Leading Talent of State Ethnic Affairs Commission, the National Science Foundation (No. 61375029), and the Gansu Provincial first-class discipline program of Northwest Minzu University.

© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Cai, Z., Wang, W. (2018). Online Handwriting Tibetan Character Recognition Based on Two-Dimensional Discriminant Locality Alignment. In: Lai, JH., et al. Pattern Recognition and Computer Vision. PRCV 2018. Lecture Notes in Computer Science(), vol 11258. Springer, Cham. https://doi.org/10.1007/978-3-030-03338-5_8

DOI: https://doi.org/10.1007/978-3-030-03338-5_8

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-03337-8

Online ISBN: 978-3-030-03338-5

eBook Packages: Computer Science (R0)