Abstract

With the widespread distribution of images online, visible watermarks are extensively used for copyright protection. To verify their effectiveness, many studies have attempted to detect and remove visible watermarks, and the problem has become an active research topic. Most existing methods require prior knowledge of the watermark, which makes them inapplicable to images with unknown and diverse watermark patterns. A data-driven algorithm that works for a wide variety of watermarks is therefore more valuable in realistic applications. To address this challenging task, we propose the first general deep learning based framework for visible watermarks, which uses convolutional networks to precisely detect and remove a variety of watermarks. Specifically, general object detection methods are adopted for watermark detection, and watermark removal is implemented with an image-to-image translation model. Comprehensive empirical evaluations are conducted on a new large-scale dataset consisting of 60000 watermarked images with 80 watermark classes, and the experimental results demonstrate the feasibility of the proposed framework in practice. This research aims to raise copyright awareness as online images spread. A takeaway of this paper is that visible watermarks should be designed not only to be striking enough to declare ownership, but also to be more resistant to removal attacks.

1 Introduction

Images, as an important information carrier for e-commerce and social media, are widely used and spread rapidly. Many online images are embedded with visible watermarks to declare ownership. To avoid misusing copyrighted images, watermark detection should be performed on images before they are used. It is therefore necessary to develop a watermark detector that can automatically and accurately detect visible watermarks in images. Furthermore, visible watermarks play an important role in copyright protection. To verify their effectiveness, a number of researchers have attempted to attack them by removing the watermark from an image after detecting it. Visible watermark detection and removal has become an increasingly active research topic [1,2,3,4,5,6].

Developing robust visible watermark detection and removal methods remains challenging because visible watermarks are so diverse. Visible watermarks may consist of text, symbols, graphics, etc. This makes it difficult to extract discriminative features from unknown and diverse watermark patterns. In addition, the shape, location, transparency and size of watermarks vary widely across watermarked images, which makes it hard to estimate the watermarked area in practice.

Although researchers have extensively explored visible watermark detection and removal [1,2,3,4,5,6], these works rely on handcrafted features that depend heavily on prior knowledge. Developing a feasible approach that tackles the aforementioned challenges therefore remains an open problem. Deep convolutional networks have recently shown strong feature representation performance on computer vision problems by exploiting massive image data. Even so, deep learning methods for watermark detection and removal are still lacking, and so are large-scale watermark datasets. We therefore contribute a large-scale watermark dataset and use deep learning to generalize detection and removal to unknown and diverse watermark patterns.

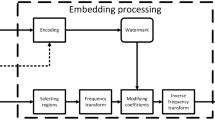

In this work, we propose a new visible watermark processing framework consisting of robust large-scale watermark detection and removal components, both built upon deep convolutional networks. In general, the trained watermark detector locates the watermarked area, which is then cropped out and passed to the removal component. More specifically, we adopt the frameworks of current state-of-the-art object detectors as the basic network for watermark detection and adapt them to detect and locate visible watermarks in images. For removal, we cast watermark removal as an image-to-image translation problem. We propose a fully convolutional architecture that effectively transforms watermarked pixels into the original unmarked pixels. Finally, the two components collaborate to perform visible watermark detection and removal automatically and consistently.

In summary, the main contributions of this work are:

(1) This is the first work that formulates visible watermark detection as an object detection problem and adapts existing detectors to automatic watermark detection. To this end, we contribute a new large-scale visible watermark dataset with dense annotations, addressing the lack of large-scale image datasets for the visible watermark detection task.

(2) We propose an integrated deep learning based framework that addresses the complete visible watermark processing problem, including detection and removal. Moreover, extensive comparison experiments are conducted to evaluate the proposed framework. The results demonstrate its effectiveness and efficiency for complex visible watermark detection and removal tasks in real-world scenarios.

2 Related Work

Watermark Detection and Removal. Existing watermark detection and removal methods can be divided into two categories: (a) single-image schemes [1,2,3] and (b) stock-image schemes, which operate on a large stock of images carrying the same type of watermark [4, 5].

Among single-image schemes, Santoyo-Garcia et al. [1] proposed decomposing a watermarked image and then distinguishing the watermarked area from the structure image. Pei and Zeng [2] used Independent Component Analysis (ICA) to recover watermarked images. These methods must extract handcrafted features from the whole watermarked image, which makes them inefficient at detecting and removing watermarks with diverse visible patterns.

Among stock-image schemes, Dekel et al. [4] proposed estimating the outline sketch and alpha matte of watermarks from a batch of images. In this setting, visible watermarks are treated as foregrounds whose attributes must be the same across images. Xu et al. [5] proposed a watermark removal technique that assumes the images to be processed have the same resolution and watermark region as the training images. Stock-based approaches can estimate the watermark outline for stock images, but they are unsuitable for real-world scenarios. There, images are very likely to be marked with unknown watermarks, and the watermark patterns may differ from image to image.

To overcome these challenges, we propose a new deep learning based framework that can effectively detect and remove watermarks with unknown patterns.

Object Detection. Because we formulate watermark detection as an object detection task, existing generic object detectors are closely related to our work. Current deep learning based object detectors can be divided into two-stage approaches [8,9,10] and one-stage approaches [11,12,13,14,15]. One-stage methods have become mainstream in object detection thanks to their high effectiveness and efficiency. For example, YOLOv2 [12] and RetinaNet [15] achieve state-of-the-art accuracy at high speed (i.e. real-time object detection).

Image Inpainting. Image inpainting is related to watermark removal. It fills in missing regions of an image and has benefited greatly from Generative Adversarial Network (GAN) based models [16, 17]. In visible watermark removal, however, the pixels in the watermarked area are not missing. Instead, they still carry background information blended with the watermark. Hence, in this work we use a generator architecture to transform watermarked pixels into unmarked pixels, which proves very effective in our experiments.

3 Methodology

In this work, we aim to automatically and precisely detect unknown and diverse visible watermarks in images and to remove them effectively and efficiently. In this section, we present our visible watermark processing framework. We first introduce a large-scale image dataset for visible watermark processing in Sect. 3.1. The pipeline itself consists of two separate modules:

(1) the watermark detection module, which is built on existing deep learning based general object detection methods and described in Sect. 3.2;

(2) the watermark removal module, detailed in Sect. 3.3.

The proposed visible watermark processing framework is illustrated in Fig. 1.

Fig. 1. The pipeline of our visible watermark processing framework. In the detection stage, the goal is to determine whether an image contains a watermark and to locate the watermarked area (the red box). We then enlarge the detected bounding box (the yellow box) and crop the watermarked patch to produce the input for watermark removal. (Color figure online)

3.1 Large-Scale Visible Watermark Dataset

At present, no watermarked image dataset is available for large-scale visible watermark detection and removal. To fill this gap, we contribute a new watermarked image dataset containing 60000 watermarked images generated from 80 watermarks, with 750 images per watermark. The original images are randomly sampled with replacement from PASCAL VOC2012 [18]: training images come from its train/val sets and test images from its test set. The 80 watermark categories cover a wide range of patterns, including English and Chinese text. They were collected from well-known e-commerce brands, websites, organizations, individuals, etc. (see Fig. 2(a)). All watermarks are converted into binary images with an alpha channel so that their opacity can be set. Furthermore, the size, location and transparency of each watermark are set randomly, so they differ from image to image. This diversity makes our dataset more general (see Fig. 2(b)).
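The compositing step can be illustrated with a short NumPy sketch. It assumes standard alpha blending, out = a·watermark + (1 − a)·image, where the per-pixel weight a is the watermark's alpha channel scaled by a random global opacity. The helper name `embed_watermark`, the nearest-neighbour resizing and the sampling ranges for scale and opacity are hypothetical choices for illustration, not the settings used to build the dataset. The function also returns the pasted box, which is the kind of annotation that can be recorded for free at generation time.

```python
import numpy as np


def embed_watermark(image, watermark_rgba, rng,
                    scale_range=(0.1, 0.4), opacity_range=(0.3, 0.8)):
    """Alpha-blend an RGBA watermark onto an RGB image (hypothetical helper).

    image: H x W x 3 float array in [0, 1].
    watermark_rgba: h x w x 4 float array in [0, 1]; channel 3 is the alpha mask.
    Returns the watermarked copy and the (x0, y0, x1, y1) box of the watermark.
    """
    H, W, _ = image.shape
    h, w, _ = watermark_rgba.shape
    # Random width as a fraction of the image width (assumed range); keep aspect ratio.
    new_w = max(1, int(W * rng.uniform(*scale_range)))
    new_h = max(1, min(H, int(round(h * new_w / w))))
    # Nearest-neighbour resize so the sketch needs no image library.
    rows = np.arange(new_h) * h // new_h
    cols = np.arange(new_w) * w // new_w
    wm = watermark_rgba[rows][:, cols]
    # Random top-left corner that keeps the watermark fully inside the image.
    y0 = int(rng.integers(0, H - new_h + 1))
    x0 = int(rng.integers(0, W - new_w + 1))
    # Per-pixel blending weight: the watermark's alpha times a random global opacity.
    a = wm[..., 3:4] * rng.uniform(*opacity_range)
    out = image.copy()
    patch = out[y0:y0 + new_h, x0:x0 + new_w]
    out[y0:y0 + new_h, x0:x0 + new_w] = a * wm[..., :3] + (1.0 - a) * patch
    return out, (x0, y0, x0 + new_w, y0 + new_h)
```

Pixels outside the returned box are left untouched, which is what makes the box a tight ground-truth annotation for detection.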

Another important distinction between our dataset and the existing small-scale watermark dataset [4] is that the watermarks in our training set are not used to construct the images in the test set. In the existing dataset, the training and test sets contain exactly the same watermarks. A detector trained on such a dataset may therefore not work well on unknown watermarks, which makes it impractical. To meet the demands of real-world watermark detection, the watermarks in our test set differ from those in the training set. The training set contains 80% of the watermark categories, and the test set contains the remaining 20%.
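The key point is that the split is made over watermark classes rather than over images. The plain-Python sketch below shows this under an assumption: the source does not say how classes are assigned to each side, so random assignment with a fixed seed is used. The helper name `split_by_watermark` is hypothetical. With 80 classes, an exact 80/20 split gives 64 training and 16 test watermarks, and with 750 images per watermark that is 48000 and 12000 images.

```python
import random


def split_by_watermark(watermark_ids, train_fraction=0.8, seed=0):
    """Split watermark classes (not images) so that test watermarks are unseen in training."""
    ids = sorted(watermark_ids)
    random.Random(seed).shuffle(ids)  # assumed: classes assigned to splits at random
    n_train = round(len(ids) * train_fraction)
    return set(ids[:n_train]), set(ids[n_train:])


train_ids, test_ids = split_by_watermark(range(80))
images_per_watermark = 750
print(len(train_ids), len(test_ids))                # 64 16
print(len(train_ids) * images_per_watermark,
      len(test_ids) * images_per_watermark)        # 48000 12000
```

Every image generated from a given watermark then inherits that watermark's split, so no test watermark is ever seen during training.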

In traditional pattern recognition tasks, object annotation is a time-consuming and tedious procedure. In our case, the location and size of the embedded watermark are saved together with the original image while each watermarked image is generated, so annotations come at no extra cost. Our large-scale visible watermark dataset thus makes it possible to develop deep learning based frameworks for visible watermark tasks.

3.2 Visible Watermark Detection

Visible watermark detection is an important topic in computer vision. It is essential for various applications, such as intellectual property protection in e-commerce, copyright declaration for business intelligence and online visual advertising. In this work, we do not simply apply an existing watermark detector at the beginning of our watermark processing framework. Instead, we develop a new and more robust one.

From a machine learning perspective, watermark detection can be viewed as a binary classification task in which cropped image patches are classified as either watermark or background. In real-world scenarios, however, images contain varied content. The pattern, content, location, size and number of watermarks in an image are also unknown. Developing a robust method to detect watermarks in images in the wild is inherently challenging and remains unsolved. In this paper, we formulate watermark detection as an object detection problem. Recent deep learning based generic object detection algorithms, e.g. Faster RCNN [10], YOLO [11, 12] and RetinaNet [15], are well suited to this problem.

Figure 1 shows the proposed deep learning based framework for watermark detection. Our model takes a watermarked image as input and considers candidate boxes of different scales and aspect ratios at every location in the image. For each candidate, it estimates the probability that the box tightly covers a watermark. Since efficiency is one of the most important criteria for watermark detection, we adopt one-stage detection methods in our watermark detection framework.
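The "candidates with different scales and aspect ratios at every location" are the anchor boxes used by anchor-based detectors such as Faster RCNN, YOLOv2 and RetinaNet. The plain-Python sketch below generates such a dense set for a single feature map. The stride, box sizes and ratios are illustrative values, not the configuration of any of these detectors, and `generate_anchors` is a hypothetical helper. A detector scores each of these boxes as watermark or background and regresses offsets from it.

```python
from itertools import product


def generate_anchors(feat_h, feat_w, stride, sizes=(32, 64, 128), ratios=(0.5, 1.0, 2.0)):
    """Candidate boxes (x0, y0, x1, y1) for every cell of a feat_h x feat_w feature map.

    Each cell gets len(sizes) * len(ratios) boxes centred on it; a ratio r gives a
    box with height / width == r and area sizes[k] ** 2.
    """
    anchors = []
    for i, j in product(range(feat_h), range(feat_w)):
        # Centre of cell (i, j) mapped back to input-image pixels.
        cx, cy = (j + 0.5) * stride, (i + 0.5) * stride
        for size, r in product(sizes, ratios):
            w, h = size / r ** 0.5, size * r ** 0.5
            anchors.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return anchors


anchors = generate_anchors(feat_h=4, feat_w=5, stride=16)
print(len(anchors))  # 4 * 5 * 9 = 180
print(anchors[1])    # (-8.0, -8.0, 24.0, 24.0): size 32, ratio 1.0, first cell
```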

Thanks to the large-scale watermark dataset proposed in this work, our watermark detector can be trained effectively. More importantly, it can detect watermarks effectively and efficiently under unknown conditions, such as watermark patterns that never appear in the training set.

3.3 Visible Watermark Removal

Once the watermarks in images are accurately detected, the detection results can be used for further image-based watermark processing, such as watermark removal and watermark recognition. In this work, we mainly investigate the former task, watermark removal, and develop an image transformation based method for it.

In image transformation, a model takes an image as input and generates a different image for a specific task. It is a popular computer vision topic. Tasks such as image denoising, super-resolution and image style transfer have made significant progress since convolutional neural networks became their foundation. Inspired by the success of deep learning based image transformation [16, 19], we propose an effective visible watermark removal system based on deep neural networks.

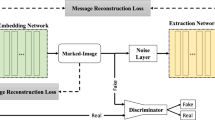

As shown in Fig. 3, the system consists of two components: the watermark removal network and the loss network. Each watermarked patch x is fed into the watermark removal network to obtain the estimated watermark-free patch \(\tilde{y}\). The L1 loss and the perceptual loss are then computed between the ground truth and the estimated patches.

The watermark removal network is trained to minimize a combination of these two losses. At test time, only a single forward pass of the watermarked patch through the watermark removal network is required.

Fig. 3. Illustration of our proposed system for visible watermark removal. We leverage the U-net architecture to transform a visible watermarked patch into a watermark-free one. The L1 loss measures the difference between the output watermark-free patch and the ground-truth watermark-free patch. The perceptual loss measures the difference between their high-level features. The total training loss of the watermark removal module therefore comprises the L1 loss and the perceptual loss. The loss network used to compute the perceptual loss is pre-trained on ImageNet for image classification and remains fixed during training.

Network Architectures. Rather than transforming a whole image pixel-to-pixel, our work focuses on a partial transformation task (i.e. transforming a specific patch of an image). More specifically, pixels inside the detected area should be restored to their unmarked state, while unmarked pixels in the watermarked image should remain unchanged. To this end, we adopt the U-net architecture [7] for our removal network. This network is mirror-symmetric, with skip connections between corresponding encoder and decoder blocks. The skip connections combine shallow features near the input with high-level features. As a result, low-level information of the input image, such as location and texture, is preserved.
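Tracing shapes through a U-Net-style network shows how the skip connections work and why they constrain the input size. The sketch below is plain Python and does only shape arithmetic, not a network. It assumes padded ("same") convolutions, 2x down-sampling and 2x up-sampling per block, four down-sampling blocks (the setting reported in Sect. 4.2) and a base width of 64 channels as in the original U-Net [7]. The helper `unet_shapes` is hypothetical.

```python
def unet_shapes(height, width, depth=4, base_channels=64):
    """Trace (channels, height, width) through a U-Net-style encoder/decoder.

    Assumes 'same'-padded convolutions, 2x down-sampling per encoder block, 2x
    up-sampling per decoder block, and channel concatenation at each skip connection.
    """
    factor = 2 ** depth
    if height % factor or width % factor:
        raise ValueError(f"height and width must be multiples of {factor}")
    skips, c, h, w = [], base_channels, height, width
    for _ in range(depth):
        skips.append((c, h, w))            # encoder features kept for the skip
        c, h, w = 2 * c, h // 2, w // 2    # down-sample: halve size, double channels
    trace = [("bottleneck", (c, h, w))]
    for skip_c, skip_h, skip_w in reversed(skips):
        h, w = 2 * h, 2 * w                # up-sample to the mirrored resolution
        assert (h, w) == (skip_h, skip_w)  # concatenation needs equal spatial size
        trace.append(("concat", (c // 2 + skip_c, h, w)))
        c = skip_c                         # convolutions reduce channels after concat
    return trace


for stage, shape in unet_shapes(128, 96):
    print(stage, shape)
```

With a 120-pixel side, the encoder would go 120 → 60 → 30 → 15 → 7, and up-sampling 7 gives 14 rather than 15. The skip concatenation would then fail, which is one reason to round patch sizes to multiples of 16 when four down-sampling blocks are used.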

Objective Function. The L1 loss penalizes the pixel-wise distance between the ground truth and the output. It has been shown to perform well at matching the output pixel values to those of the ground truth when synthesizing images [16]. We therefore adopt it in our network and denote it as \(\varvec{L}_{L1}\):

\[\varvec{L}_{L1} = \left\| y - f(x) \right\|_{1}\]

Here, x denotes an input watermarked patch detected and cropped from a watermarked image, y is the corresponding ground-truth patch without the watermark, and f(x) is the output of the U-net. The L1 loss accumulates per-pixel differences over the whole patch. It can therefore be large even when every pixel changes only slightly and the two patches look almost identical. The perceptual loss, in contrast, depends on high-level features from convolutional layers and has been shown to capture the semantic information of the source image effectively. Using it can therefore produce a more realistic output. Suppose the feature map of the \(j_{th}\) convolutional layer of the loss network has size \(C_{j}\times H_{j}\times W_{j}\). Let \(\varPhi _{j}\) denote the corresponding convolutional transformation, and let \(\tilde{y} = f(x)\) be the estimated watermark-free patch. The perceptual loss can then be expressed as:

\[\varvec{L}_{perc} = \frac{1}{C_{j}H_{j}W_{j}} \left\| \varPhi _{j}(\tilde{y}) - \varPhi _{j}(y) \right\|_{2}^{2}\]

In our work, we use the \(\mathbf {relu2\_2}\) features of VGG-16, similar to [19]. To obtain more visually pleasing watermark removal results, we combine the two loss functions. The L1 loss preserves the details of the input, and the perceptual loss preserves its perceptual information. The objective function of our removal network is therefore a weighted sum of the L1 loss and the perceptual loss, where \(\alpha \geqslant 0\) is the weight that balances the two terms.
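The two losses can be written down concretely in a short NumPy sketch. It makes two assumptions. First, `toy_features` is a hypothetical stand-in for the frozen VGG-16 relu2_2 extractor (2x2 average pooling followed by a ReLU), used so that the example runs without a deep learning framework. Second, the combined objective is written as L1 + α · perceptual. The text does not say which term α multiplies, so swap the weighting if α is meant to scale the L1 term. The function names are illustrative.

```python
import numpy as np


def l1_loss(y, y_hat):
    """||y - f(x)||_1: sum of absolute per-pixel differences."""
    return float(np.abs(y - y_hat).sum())


def perceptual_loss(phi, y, y_hat):
    """Squared L2 distance between feature maps, divided by C_j * H_j * W_j."""
    f_y, f_hat = phi(y), phi(y_hat)
    return float(((f_hat - f_y) ** 2).sum() / f_y.size)


def toy_features(img):
    """Hypothetical stand-in for VGG-16 relu2_2: 2x2 average pooling then ReLU.

    img is a C x H x W array with even H and W.
    """
    c, h, w = img.shape
    pooled = img.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))
    return np.maximum(pooled, 0.0)


def removal_loss(y, y_hat, alpha, phi=toy_features):
    # Assumption: alpha scales the perceptual term; the source leaves this open.
    return l1_loss(y, y_hat) + alpha * perceptual_loss(phi, y, y_hat)
```

Because the L1 term is a sum over all pixels while the perceptual term is normalized by the feature-map size, the two can differ by orders of magnitude. This is consistent with the very small weight reported in Sect. 4.2.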

4 Experiments

To evaluate the proposed framework, we conduct comprehensive experiments on the large-scale visible watermark dataset introduced in Sect. 3.1. Both components of the framework, the watermark detection and removal modules, are evaluated. All experiments are run on a computer cluster equipped with an NVIDIA Tesla K80 GPU with 12 GB of memory. The experimental details of the two components are described and analyzed separately. Note that existing methods cannot handle images with unknown watermark patterns and are therefore not applicable to the setting studied in this paper.

4.1 Visible Watermark Detection

Settings. We hypothesize that the proposed watermark detection framework can accommodate any recent deep learning based generic object detector. To verify this, we adopt one two-stage method, Faster RCNN [10], and two one-stage methods, YOLOv2 [12] and RetinaNet [15]. To adapt the generic object detectors to watermark detection, we set the number of classes to two (i.e. watermark and background). We then follow the training strategies of the original detectors [10, 12, 15] to train our watermark detection networks.

Since we formulate visible watermark detection as an object detection task, we follow the standard object detection evaluation protocol. The effectiveness of our visible watermark detectors is measured by Average Precision (AP) at given Intersection over Union (IoU) thresholds.
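Because all detection results are reported as AP at IoU thresholds, a plain-Python sketch of the metric may help. It implements three pieces:

- IoU between two boxes;
- single-class AP with all-point interpolation (PASCAL VOC 2010+ style);
- an AP\(_{50:95}\)-style mean over thresholds 0.50, 0.55, ..., 0.95.

The 0.05 step follows the COCO convention and is assumed here. The official COCO tool uses 101-point interpolation and other details, so its numbers can differ slightly. The function names are hypothetical.

```python
def iou(a, b):
    """Intersection over Union of two boxes given as (x0, y0, x1, y1)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(detections, ground_truth, iou_thr):
    """Single-class AP with all-point interpolation.

    detections: list of (image_id, score, box); ground_truth: {image_id: [box, ...]}.
    """
    n_gt = sum(len(boxes) for boxes in ground_truth.values())
    if n_gt == 0:
        return 0.0
    used = {img: [False] * len(boxes) for img, boxes in ground_truth.items()}
    tp_flags = []
    # Greedily match detections, highest score first, to the best-overlapping box.
    for img, _, box in sorted(detections, key=lambda d: d[1], reverse=True):
        overlaps = [iou(box, g) for g in ground_truth.get(img, [])]
        best = max(range(len(overlaps)), key=overlaps.__getitem__, default=-1)
        hit = best >= 0 and overlaps[best] >= iou_thr and not used[img][best]
        if hit:
            used[img][best] = True
        tp_flags.append(hit)
    # Precision/recall after each detection, then area under the interpolated curve.
    precisions, recalls, tp = [], [], 0
    for k, hit in enumerate(tp_flags, start=1):
        tp += hit
        precisions.append(tp / k)
        recalls.append(tp / n_gt)
    ap, prev_recall = 0.0, 0.0
    for k, r in enumerate(recalls):
        ap += (r - prev_recall) * max(precisions[k:])
        prev_recall = r
    return ap


def ap_50_95(detections, ground_truth):
    """Mean AP over IoU thresholds 0.50, 0.55, ..., 0.95."""
    thresholds = [0.5 + 0.05 * i for i in range(10)]
    return sum(average_precision(detections, ground_truth, t)
               for t in thresholds) / len(thresholds)


gt = {"a": [(0, 0, 10, 10)]}
half = [("a", 0.9, (0, 0, 10, 5))]  # IoU with the ground truth is exactly 0.5
print(ap_50_95(half, gt))           # 0.1: a hit only at the 0.50 threshold
```

The example shows why AP drops as the IoU threshold rises: a loosely placed box still counts at 0.5 but becomes a false positive at stricter thresholds.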

Results and Analysis. Figure 4 plots AP against the IoU threshold for the watermark detection models based on Faster RCNN [10], YOLOv2 [12] and RetinaNet [15]. The AP of all three models stays at around 100% when the IoU threshold is below 0.4, and the differences between them are very small. These promising results suggest that an existing object detection model fine-tuned on our visible watermark dataset can effectively detect unknown visible watermark patterns. As the IoU threshold increases, the AP curves drop dramatically. This has limited influence on our work, however, because watermark detection in real-world scenarios does not require a very precise watermark bounding box. Furthermore, the model based on the one-stage method RetinaNet clearly improves AP over Faster RCNN and YOLOv2. This suggests that the focal loss introduced in RetinaNet yields more precise detections for small, inconspicuous watermarks.
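The focal loss of RetinaNet [15] is FL(p_t) = −α_t (1 − p_t)^γ log(p_t), where p_t is the predicted probability of the true class. Its effect on a detector that sees far more background anchors than watermark anchors can be illustrated for a single anchor. The sketch below uses the default α = 0.25 and γ = 2 from that paper. The helper name is hypothetical, and real implementations apply the loss to all anchors in a batch.

```python
import math


def binary_focal_loss(p, is_watermark, alpha=0.25, gamma=2.0, eps=1e-12):
    """Focal loss FL(p_t) = -alpha_t * (1 - p_t)**gamma * log(p_t) for one anchor.

    p is the predicted probability that the anchor covers a watermark.
    """
    p_t = p if is_watermark else 1.0 - p
    alpha_t = alpha if is_watermark else 1.0 - alpha
    p_t = min(max(p_t, eps), 1.0)  # avoid log(0)
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


# An easy background anchor contributes almost nothing...
easy_bg = binary_focal_loss(0.01, is_watermark=False)
# ...while a hard, missed watermark anchor keeps a large loss.
hard_wm = binary_focal_loss(0.10, is_watermark=True)
print(f"{easy_bg:.2e} {hard_wm:.3f}")  # 7.54e-07 0.466
```

With γ = 0 and α = 0.5 the expression reduces to half the ordinary cross-entropy. The (1 − p_t)^γ factor is what suppresses the many easy background anchors, which plausibly helps with small, faint watermarks.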

Fig. 5. Visualization of detection examples on our large-scale watermark dataset with RetinaNet. The red box is predicted by our RetinaNet-based watermark detection model, and the predicted watermark confidence score is shown above it. The blue box is the ground truth, and the IoU between the ground-truth box and the predicted box is shown below it. (Color figure online)

For a more complete analysis, Table 1 lists the visible watermark detection results in terms of AP\(_{50:95}\), AP\(_{50}\) and AP\(_{75}\). AP\(_{50:95}\) is the average AP over IoU thresholds ranging from 0.5 to 0.95. These results confirm the strong performance of RetinaNet.

To further evaluate our watermark detector, Fig. 5 visualizes its detection results on some test examples from our dataset. The results show that the detector can separate watermarks of different scales, transparencies, locations and patterns from background clutter. This confirms that formulating visible watermark detection as an object detection task is feasible.

4.2 Removal

Settings. For visible watermark removal, we build our U-net with four down-sampling blocks. The input patch is cropped from the watermarked image and the ground-truth patch from the source image. Both crops follow the watermark bounding box predicted by our RetinaNet-based detection model. Both patches are centered at the center of the detected bounding box, and their size is 1.5 times that of the predicted box, to ensure that the watermark is included in the cropped patches. We further round the height and width of both patches to multiples of 16, as required by the input of the U-net. During training, we use the Adam optimizer with an initial learning rate of 2e−4 and a batch size of 1. The weight \(\alpha \) balancing the perceptual loss and the L1 loss is set to 1e−6.
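The cropping rule can be sketched in plain Python. Several details are assumptions, since the text leaves them open:

- "1.5 times larger" is read as scaling both width and height by 1.5;
- "round" is read as rounding up to the next multiple of 16, so the watermark stays inside the patch;
- the window is capped at the image size and shifted to stay within the image borders.

`removal_crop` is a hypothetical helper.

```python
import math


def removal_crop(box, img_w, img_h, enlarge=1.5, multiple=16):
    """Crop window (x0, y0, x1, y1) for the removal network around a detected box.

    Assumptions: width and height are each scaled by `enlarge`, rounded *up* to a
    multiple of `multiple`, capped at the image size, and the window is shifted
    to stay inside the image.
    """
    x0, y0, x1, y1 = box
    cx, cy = (x0 + x1) / 2, (y0 + y1) / 2
    w = math.ceil(enlarge * (x1 - x0) / multiple) * multiple
    h = math.ceil(enlarge * (y1 - y0) / multiple) * multiple
    w = min(w, img_w // multiple * multiple)
    h = min(h, img_h // multiple * multiple)
    # Centre the window on the detection, then clamp it to the image borders.
    left = min(max(int(round(cx - w / 2)), 0), img_w - w)
    top = min(max(int(round(cy - h / 2)), 0), img_h - h)
    return left, top, left + w, top + h


print(removal_crop((100, 100, 140, 120), img_w=500, img_h=375))  # (88, 94, 152, 126)
```

The same window is applied to the watermarked image and to the source image, so the input and ground-truth patches stay aligned pixel for pixel.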

To evaluate watermark removal, we adopt the same metrics as [4]: Peak Signal-to-Noise Ratio (PSNR) and Structural Dissimilarity (DSSIM). Both measure the similarity between the predicted watermark-free patch and the ground-truth one.
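Both metrics are easy to state in code. The NumPy sketch below computes PSNR in dB and DSSIM = (1 − SSIM) / 2 for images with values in [0, data_range]. As a deliberate simplification, SSIM is computed from global whole-patch statistics. The standard SSIM averages local statistics over sliding Gaussian windows, so these values will not match a reference implementation such as scikit-image. The function names are illustrative.

```python
import numpy as np


def psnr(ref, est, data_range=1.0):
    """Peak signal-to-noise ratio in dB between two arrays with values in [0, data_range]."""
    mse = np.mean((np.asarray(ref, np.float64) - np.asarray(est, np.float64)) ** 2)
    return float("inf") if mse == 0 else float(10.0 * np.log10(data_range ** 2 / mse))


def dssim_global(ref, est, data_range=1.0):
    """DSSIM = (1 - SSIM) / 2, with SSIM computed from global (whole-patch) statistics.

    Simplification: standard SSIM averages local statistics over sliding Gaussian
    windows; a single window is used here to keep the sketch short.
    """
    x = np.asarray(ref, np.float64).ravel()
    y = np.asarray(est, np.float64).ravel()
    c1, c2 = (0.01 * data_range) ** 2, (0.03 * data_range) ** 2
    mx, my = x.mean(), y.mean()
    cov = np.mean((x - mx) * (y - my))
    ssim = ((2 * mx * my + c1) * (2 * cov + c2)) / (
        (mx ** 2 + my ** 2 + c1) * (x.var() + y.var() + c2))
    return float((1.0 - ssim) / 2.0)
```

Higher PSNR and lower DSSIM both mean the restored patch is closer to the watermark-free ground truth. Averaging them over all test patches gives the kind of summary numbers reported in Table 2.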

Results and Analysis. We compute the average PSNR and DSSIM over the whole test set. Table 2 gives the PSNR and DSSIM of our model trained with different types of loss. As shown in Table 2, our removal model substantially improves both metrics over the unprocessed watermarked input. In addition, combining the L1 loss and the perceptual loss gives better results than either loss alone.

As shown in Fig. 6, our watermark removal algorithm performs well even though the watermark patterns in the first to fourth rows are quite diverse. Some watermarks consist of English words or letters, while others combine English words, logos, etc. Nevertheless, our method extracts invariant features of the watermarks and generates image patches that are almost identical to the original ones. We also report the removal results of our model trained with different losses, which differ only subtly. The results in Fig. 6 indicate that combining the L1 loss and the perceptual loss exploits the strengths of both. The combined loss wipes out visible watermarks while preserving the fine details of the source images, yielding strong reconstruction performance.

We also compare the performance of different architectures. We test the encoder-decoder architecture described in [16], which is created by severing the skip connections in the U-net. With it, we observe that the global brightness is altered and watermark residue remains in local areas. As a result, the watermarked patch is hard to restore to a state close to its watermark-free version. The outputs of the U-net architecture are more similar to the ground-truth patches, which makes it suitable for our removal task. The results in Fig. 7 show that the U-net architecture is more effective. Its skip connections let low-level information bypass the bottleneck, so the surrounding information is not corrupted.

4.3 Discussions

Our experiments show that the proposed framework can effectively handle large-scale visible watermark tasks. For watermark detection, our model based on the one-stage method RetinaNet performs very well at detecting visible watermarks. For watermark removal, the detected bounding box is expanded to be somewhat larger than the detected watermark, which alleviates the effect of partial detections. Our network then adaptively transforms the marked pixels into watermark-free ones without corrupting the other pixels. The framework therefore performs well when a small IoU threshold is used to capture as many watermarked patches as possible and these patches are then expanded and fed into the removal network.

5 Conclusion

This paper presents a new deep learning based framework for large-scale visible watermark processing. It consists of two components:

(1) watermark detection, formulated as an object detection task;

(2) watermark removal, cast as an image-to-image translation problem.

In addition, we build a large-scale visible watermark dataset for training and evaluating deep learning based frameworks for watermark detection, watermark removal and related tasks. Extensive experiments verify the feasibility of the proposed pipeline, and the results show that the framework is effective for watermark detection and removal.

References

Santoyo-Garcia, H., Fragoso-Navarro, E., Reyes-Reyes, R., et al.: An automatic visible watermark detection method using total variation. In: IWBF 2017 (2017)

Pei, S.C., Zeng, Y.C.: A novel image recovery algorithm for visible watermarked images. IEEE Trans. Inf. Forensics Secur. 1, 543–550 (2006)

Huang, C.H., Wu, J.L.: Attacking visible watermarking schemes. IEEE Trans. Multimed. 6(1), 16–30 (2004)

Dekel, T., Rubinstein, M., Liu, C., et al.: On the effectiveness of visible watermarks. In: CVPR 2017 (2017)

Xu, C., Lu, Y., Zhou, Y.: An automatic visible watermark removal technique using image inpainting algorithms. In: ICSAI 2017 (2017)

Qin, C., He, Z., Yao, H.: Visible watermark removal scheme based on reversible data hiding and image inpainting. Sig. Process.: Image Commun. 60, 160–172 (2018)

Ronneberger, O., Fischer, P., Brox, T.: U-Net: convolutional networks for biomedical image segmentation. In: MICCAI 2015 (2015)

Girshick, R., Donahue, J., Darrell, T., et al.: Rich feature hierarchies for accurate object detection and semantic segmentation. In: CVPR 2014 (2014)

Girshick, R.: Fast R-CNN. In: ICCV 2015 (2015)

Ren, S., He, K., Girshick, R., et al.: Faster R-CNN: towards real-time object detection with region proposal networks. In: NIPS 2015 (2015)

Redmon, J., Divvala, S., Girshick, R., et al.: You only look once: unified, real-time object detection. In: CVPR 2016 (2016)

Redmon, J., Farhadi, A.: YOLO9000: better, faster, stronger. In: CVPR 2017 (2017)

Redmon, J., Farhadi, A.: YOLOv3: an incremental improvement. arXiv preprint (2018)

Liu, W., et al.: SSD: single shot multibox detector. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9905, pp. 21–37. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46448-0_2

Lin, T.Y., Goyal, P., Girshick, R., et al.: Focal loss for dense object detection. In: ICCV 2017 (2017)

Isola, P., Zhu, J.Y., Zhou, T., et al.: Image-to-image translation with conditional adversarial networks. In: CVPR 2017 (2017)

Pathak, D., Krahenbuhl, P., Donahue, J., et al.: Context encoders: feature learning by inpainting. In: CVPR 2016 (2016)

Everingham, M., Eslami, S.M.A., Van Gool, L.: The pascal visual object classes challenge: a retrospective. IJCV 111(1), 98–136 (2015)

Johnson, J., Alahi, A., Fei-Fei, L.: Perceptual losses for real-time style transfer and super-resolution. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9906, pp. 694–711. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46475-6_43

Acknowledgment

Danni Cheng and Xiang Li equally contributed to this work. The authors would like to thank Dongcheng Huang and Xiaobin Chang’s valuable advice on paper writing.


Copyright information

© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Cheng, D. et al. (2018). Large-Scale Visible Watermark Detection and Removal with Deep Convolutional Networks. In: Lai, JH., et al. Pattern Recognition and Computer Vision. PRCV 2018. Lecture Notes in Computer Science(), vol 11258. Springer, Cham. https://doi.org/10.1007/978-3-030-03338-5_3

DOI: https://doi.org/10.1007/978-3-030-03338-5_3

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-03337-8

Online ISBN: 978-3-030-03338-5

eBook Packages: Computer Science, Computer Science (R0)