Abstract

In this work, we tackle the problem of crowd counting in images. We present a Convolutional Neural Network (CNN) based density estimation approach to solve this problem. Predicting a high resolution density map in one go is a challenging task. Hence, we present a two branch CNN architecture for generating high resolution density maps, where the first branch generates a low resolution density map, and the second branch incorporates the low resolution prediction and feature maps from the first branch to generate a high resolution density map. We also propose a multi-stage extension of our approach where each stage in the pipeline utilizes the predictions from all the previous stages. Empirical comparison with the previous state-of-the-art crowd counting methods shows that our method achieves the lowest mean absolute error on three challenging crowd counting benchmarks: Shanghaitech, WorldExpo’10, and UCF datasets.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Gathering of large crowds is commonplace nowadays, and estimating the size of a crowd is an important problem for different purposes ranging from journalism to public safety. Without turnstiles to provide a precise count, the media and crowd safety specialists must estimate the size of the crowd based on images and videos of the crowd. Manual visual estimation, however, is difficult and laborious for humans. Humans are good at subitizing, i.e., predicting fast and accurate counts for small number of items, but the accuracy with which humans count deteriorates as the number of items increase [7]. Furthermore, the addition of each new item beyond a few adds an extra processing time of around 250 to 300 milliseconds [17]. As a result, any crowd monitoring system that relies on humans for counting people in crowded scenes will be slow and unreliable. There is a need for an automatic computer vision algorithm that can accurately count the number of people in crowded scenes based on images and videos of the crowds.

There exist a number of computer vision algorithms for crowd counting, and the current state-of-the-art methods are based on density estimation rather than detection-then-counting. Density-estimation methods use Convolutional Neural Networks (CNNs) [8, 9] to output a map of density values, one for each pixel of the input image. The final count estimate can be obtained by summing over the predicted density map. Unlike the detection-then-counting approach (e.g., [5]), the output of the density estimation approach at each pixel is not necessarily binary. Density estimation has been proved to be more robust than the detection-then-counting approach because the former does not have to commit to binarized decisions at an early stage.

Crowd counting can be posed as a CNN-based density estimation problem, but this problem can be challenging for a single CNN due to the huge variation of density values across pixels of different images. This figure shows two images from the Shanghaitech dataset that have very different crowd densities. As can be seen, crowd count could vary from a few to a few thousand.

Estimating the crowd density per pixel is a challenging task due to the large variation of the crowd density values. As shown in Fig. 1, some images contain hundreds of people, while others have only a few. It is difficult for a single CNN to handle the entire spectrum of crowd densities. Earlier works [15, 20] have tackled this challenge by using a multi-column or a switching CNN architecture. These CNN architectures consist of three parallel CNN branches with different receptive field sizes. In such architectures, a branch with smaller receptive fields could handle the high density images well, while a branch with larger receptive fields could handle the low density images. More recently, a five-branch CNN architecture was proposed [16] where three of the branches resembled the previous multi-column CNN [20], while the remaining two branches acted as global and local context estimators. These context estimator branches were trained beforehand on the related task of classifying the image into different density categories. Some of the key takeaways from these previous approaches are: (1) using a multi-column CNN model with varying kernel sizes improves the performance of crowd density estimation; and (2) augmenting the feature set with the ones learned from a task related to density estimation, such as count range classification, improves the performance of the density estimation task.

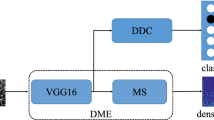

In this work, we propose iterative counting Convolutional Neural Networks (ic-CNN), a CNN-based iterative approach for crowd counting. Unlike previous approaches, where three [15, 20] or more [16] columns are needed to achieve good performance, our ic-CNN approach has a simpler architecture comprising of two columns/branches. The first branch predicts a density map at a lower resolution of \(\frac{1}{4}\) the size of the original image, and passes the predicted map and a set of convolutional features to the second branch. The second branch predicts a high resolution density map at the size of the original image. Density maps contain information about the spatial distribution of crowd in an image. Hence, the first stage map serves as an important feature for the high resolution density map prediction task. We also propose a multi-stage extension of ic-CNN where we combine multiple ic-CNNs sequentially to further improve the quality of the predicted density map. Each ic-CNN in the multi-stage pipeline provides both the low and high resolution density predictions to all subsequent stages. Figure 2 illustrates the schematic architecture for ic-CNN. ic-CNN has two branches: Low Resolution CNN (LR-CNN) and High Resolution CNN (HR-CNN). LR-CNN predicts the density map at a low resolution while HR-CNN predicts the density map at the original image resolution. The key highlights of our work are:

-

1.

We propose ic-CNN, a two-stage CNN framework for crowd density estimation and counting.

-

2.

ic-CNN achieves state of the art results on multiple crowd counting datasets. On Shanghaitech Part B dataset, ic-CNN yields \(48.3\%\) improvement in terms of mean absolute error over the previously published results [16].

-

3.

We also propose a multi-stage extension of ic-CNN, which can combine predictions from multiple ic-CNN models.

Figure shows the ic-CNN architecture which consists of two columns/branches. On the top is the Low Resolution CNN branch (LR-CNN) and at the bottom is the High Resolution CNN branch (HR-CNN). LR-CNN predicts a density map at a lower resolution (LR). It passes the predicted density map and the convolutional feature maps to HR-CNN. HR-CNN fuses its feature maps with the feature maps and predicted density map from LR-CNN, and predicts a high resolution density map (HR) at the size of the original image. LR and HR are low and high resolution prediction maps respectively.

2 Related Work

Crowd counting is an important research problem and a number of approaches have been proposed by the computer vision community. Earlier work tackled crowd counting as an object detection problem [11, 12]. Lin et al. [12] extracted Haar features for head like contours and used an SVM classifier to classify these features as the contour of a head or not. Li et al. [11] proposed a detection based approach where the input image was first segmented into foreground-background regions and a HOG feature based head-shoulder detector was used to detect each person in the crowd. These detection based methods often fail to accurately count people in extremely dense scenes. To handle images of dense crowds, some methods [2, 3] proposed to use a regression approach to avoid the harder detection problem. They instead extracted local patch level features and learned a regression function to directly estimate the total count for an input image patch. These regression approaches, however, do not fully utilize the available annotation associated with training data; they ignore the spatial density and distribution of people in training images. Several researchers [10, 14] proposed to use a density estimation approach to take advantage of the provided crowd density annotation maps of training images. Lempitsky and Zisserman [10] learned a linear mapping between the crowd images and the corresponding ground truth density maps. Pham et al. [14] learned a more robust mapping by using a random decision forest to estimate the crowd density map. These density-based methods solve some of the challenges faced by the earlier detection and regression based approaches, by avoiding the harder detection problem and also utilizing the spatial annotation and correlation. All aforementioned methods predated the deep-learning era, and they used hand crafted features for crowd counting.

More recent methods [4, 13, 15, 16, 18, 20] used CNNs to tackle crowd counting. Wang et al. [18] posed crowd counting as a regression problem, and used a CNN model to map the input crowd image to its corresponding count. Instead of predicting the overall count, Fu et al. [4] classified an image into five broad crowd density categories and used a cascade of two CNNs in a boosting like strategy where the second CNN was trained on the images misclassified by the first CNN. These methods also overlooked the benefits provided by the crowd density annotation maps.

The methods that are most related to our work are [15, 16, 20]. Zhang et al. [20] proposed a CNN-based method to predict crowd density maps. To handle the large variation in crowd densities and sizes across different images, Zhang et al. [20] proposed a multi-column CNN architecture (MCNN) with filters and receptive fields of various sizes. The CNN column with smaller receptive field and filter sizes were responsible for the denser crowd images, while the CNN columns with larger receptive fields and filter sizes were meant for the less dense crowd images. The features from the three columns were concatenated and processed by a \(1 \times 1\) convolution layer to predict the final density map. To handle the variations in density and size within an image, the authors divided each image into non-overlapping patches, and trained the MCNN architecture on these patches. Given that the number of training samples in annotated crowd counting datasets is much smaller in comparison to the datasets pertaining to image classification and segmentation tasks, training a CNN from scratch on full images might lead to overfitting. Hence, patch-based training of MCNN was essential in preventing overfitting and also improving the overall performance by serving as a data augmentation strategy. One issue with MCNN was that it fused the features form three CNN columns for predicting the density map. For a given patch, it is expected that the counting performance can be made more accurate by choosing the right CNN column that specializes in analyzing images of similar density values. Sam et al. [15] built on this idea and decoupled the three columns into separate CNNs, each focused on a subset of the training patches. To decide which CNN to assign a patch to, the authors trained a CNN-based switch classifier. However, since the ground truth label needed to train the switch classifier was unavailable, the authors resorted to a multi-stage training strategy: (1) training the three density predicting CNNs on the entire set of training patches, (2) training the switch classifier using the count from the previous stage to decide the switch labels, and (3) retraining the three CNNs using the patches assigned by the switch classifier. In a more recent work, Sindagi et al. [16] further modified the MCNN architecture by adding two more branches for estimating global and local context maps. The global/local context prediction branches were trained beforehand for the related task of classifying an image/patch into five different count categories. The classification scores were used to create a feature map of the same size as the image/patch, which served as the global/local context map. These context maps were fused with the convolutional feature maps obtained using a three branch multi-column CNN, and the resulting features were further processed by convolutional layers and a \(1\) \(\times \) \(1\) convolution layer to obtain the final density map.

3 Proposed Approach

In this section, we describe the architecture of ic-CNN, its multi-stage extension and the training strategy. ic-CNN is discussed in Sect. 3.1. The multi stage extension of ic-CNN is discussed in Sect. 3.2, and the training details are discussed in Sect. 3.3.

3.1 Iterative Counting CNN

Let \(\mathcal {D}= \{(X_1,Y_1,Z_1),\ldots {,} (X_n,Y_n,Z_n)\}\) be the training set of n (image, high resolution density map, low resolution density map) triplets, where \(X_i\) is the \(i^{th}\) image, \(Y_i\) is the corresponding crowd density map at the same resolution as the image \(X_i\), and \(Z_i\) is a low resolution version of the crowd density map. \(Y_i\) and \(Z_i\) have the same overall count. Let \(f_l\) and \(f_h\) be the mapping functions which transform the image into the low resolution and high resolution density maps, respectively. Let the parameters of the low resolution branch (LR-CNN) and high resolution branch (HR-CNN) be \(\theta _l\) and \(\theta _h\) respectively. Note that \(f_l\) depends on only \(\theta _l\), while \(f_h\) depends on both \(\theta _l\) and \(\theta _h\). Given an input image \(X_i\), the low resolution density map \(\hat{Z_i}\) can be obtained by a doing a forward pass through the LR-CNN branch:

The inputs to the high resolution branch HR-CNN are: the image \(X_i\), the features computed by the low resolution branch LR-CNN, and the low resolution prediction \(\hat{Z_i}\). HR-CNN predicts a high resolution density map of the same size as the original image:

The low resolution prediction \(\hat{Z_i}\) contains information about the spatial distribution of the crowd in the image \(X_i\). It serves as an important feature map for the high resolution prediction task. We can learn the parameters \(\theta _l\) and \(\theta _h\) by minimizing the loss function \(\mathcal {L}(\theta _l, \theta _h)\):

where \(L(\cdot , \cdot )\) denotes the loss function, and a reasonable choice is to use the squared error between the estimated and ground truth values. \( \lambda _{l}\) and \(\lambda _{h}\) are scalar hyperparameters which can be used to give more importance to one of the loss terms. Using Eqs. (1) and (2), the right hand side can be further simplified as:

At test time, given an image \(X_i\), we first obtain the low resolution output \(\hat{Z_i}\) by doing a forward pass through LR-CNN and then pass the convolutional features and the low resolution map \(\hat{Z_i}\) to HR-CNN, which will predict the high resolution map \(\hat{Y_i}\). We use the high resolution output predicted by HR-CNN as the final output of ic-CNN. The overall crowd count is obtained by summing over all the pixels in the density map \(\hat{Y_i}\).

Below we provide the architecture details for the LR-CNN and HR-CNN branches.

LR-CNN. The LR-CNN branch takes as input an image, and predicts a density map at \(\frac{1}{4}\) the size of the original image. LR-CNN has the following architecture: Conv3-64, Conv3-64, MaxPool, Conv3-128, Conv3-128, MaxPool, Conv3-256, Conv3-256, Conv3-256, Conv7-196, Conv5-96, Conv3-32, Conv1-1. Here, ConvX-Y implies a convolution layer having Y filters with \(X\times X\) kernel size. MaxPool is the max pooling layer. We use a ReLU nonlinearity after each convolutional layer.

HR-CNN. The HR-CNN branch predicts the high resolution density map at the same size as the input image. HR-CNN has the following architecture: Conv7-16, MaxPool, Conv5-24, MaxPool, Conv3-48, Conv3-48, Conv3-24, Conv7-196, Conv5-96, Upsampling-2, Conv3-32, Upsampling-2, Conv1-1. Here, Upsampling-2 is a bilinear interpolation layer which upsamples the input to twice its size.

3.2 Multi-stage Crowd Counting

A multi-stage ic-CNN is a network that combines multiple building blocks of ic-CNN described in the previous section. Each ic-CNN block inputs the low and high resolution prediction maps from all the previous blocks. Given an input image \(X_i\), the low resolution branch of the \(k^{th}\) block, represented by the function \(f_{l}^{k}\), outputs the low resolution prediction:

where \(\theta _{l}^k\) represents the parameters of LR-CNN, \(\hat{Z}_{i}^{1:k-1}\) and \(\hat{Y}_{i}^{1:k-1}\) represent the set of low and high level predictions from the first \(k-1\) blocks for the input \(X_i\). The high resolution branch of the \(k^{th}\) block, represented by the function \(f_{h}^{k}\), takes as input the image \(X_i\), the feature maps computed by the low resolution branch \(f_{l}^k\), the low resolution prediction \(\hat{Z}_{i}^k\), and the entire set of low and high resolution prediction maps from the first \(k-1\) blocks. Hence, the output of the \(k^{th}\) HR-CNN can be computed using:

Note that \(f_{l}^k\) and \(f_{h}^k\) do not depend on the parameters for the first \(k-1\) blocks, and \(\hat{Z}_{i}^{1:k-1}\) and \(\hat{Y}_{i}^{1:k-1}\) are treated as fixed inputs (i.e., the parameters of the corresponding network blocks are frozen). We can learn the parameters \(\theta _{l}^k\) and \(\theta _{h}^k\) by minimizing the loss function \(\mathcal {L}(\theta _{l}^k, \theta _{h}^k)\):

3.3 Training Details

An ic-CNN is trained by minimizing the loss function \(\mathcal {L}(\theta _l,\theta _h)\) from Eq. (3). We use the Stochastic Gradient Descent algorithm with the following hyper parameters (unless specified otherwise): learning rate \(10^{-4}\), momentum 0.9, batch size 1. We give more importance to the high resolution loss term in Eq. (3) and set \(\lambda _{l}\) and \(\lambda _{h}\) to \(10^{-2}\) and \(10^{2}\), respectively.

We train a multi-stage ic-CNN in multiple stages. In the \(k^{th}\) stage, we train the \(k^{th}\) ic-CNN block by minimizing the loss function given in Eq. (7), using the Stochastic Gradient Descent algorithm with the same hyper parameters as above. Once the training for the \(k^{th}\) stage has converged, we freeze the parameters for the \(k^{th}\) stage and proceed to the next stage.

The training data consists of crowd images and corresponding ground truth annotation files. A ground truth annotation for an image specifies the location of each person in the image with a single dot on the person. We convert this annotation into a binary map consisting of 0’s at all locations, except for the annotated points which are assigned the value of 1. We convolve this binary map with a Gaussian filter of standard deviation 5. We use the resulting density map for training the networks.

4 Experiments

We conduct experiments on three challenging datasets: Shanghaitech [20], WorldExpo’10 [19], and UCF Crowd Counting Dataset [6].

4.1 Evaluation Metrics

Following previous works for crowd counting, we use the Mean Absolute Error (MAE) and Root Mean Squared Error (RMSE) to evaluate the performance of our proposed method. If the predicted count for image i is \(\hat{C_i}\) and the ground truth count is \(C_i\), the MAE and RMSE can be computed as:

where n is the number of test images.

4.2 Experiments on the Shanghaitech Dataset

The Shanghaitech dataset [20] consists of 1198 annotated crowd images. The dataset is divided into two parts, Part-A containing 482 images and Part-B containing 716 images. Part-A is split into train and test subsets consisting of 300 and 182 images, respectively. Part-B is split into train and test subsets consisting of 400 and 316 images. Each person in a crowd image is annotated with one point close to the center of the head. In total, the dataset consists of 330,165 annotated people. Images from Part-A were collected from the Internet, while images from Part-B were collected on the busy streets of Shanghai. To avoid the risk of overfitting to the small number of training images, we trained ic-CNNs on random crops of size \(\frac{H}{3}\times \frac{W}{3}\), where H and W are the height and width of a training image. In Table 1, we compare ic-CNNs with the previous state-of-the-art approaches. ic-CNNs outperform the previous approaches in three out of four cases by a large margin. On Part-B of the Shanghaitech dataset, using the one-stage ic-CNN which has a simpler architecture than the five-branch CP-CNN [16], we improve on the previously reported state of the art results by \(48.3\%\) for MAE metric and \(46.8\%\) for the RMSE metric. On Part A of the Shanghaitech dataset, we achieve a 5.1 absolute improvement in MAE over CP-CNN. Furthermore, for Part A data, the two-stage ic-CNN results in an improvement of 1.3 MAE over the one-stage ic-CNN. We also trained a three-stage ic-CNN on Part A data, which resulted in MAE = 69.4 and RMSE = 116.0. Since adding the \(3^{rd}\) stage did not yield a significant performance gain, we did not experiment with more than three stages.

In Table 2, we analyze the effects of varying the resolution of the intermediate prediction on the overall performance. Using any resolution other than \(\frac{1}{4}\) leads to a drop in the performance.

In Table 3, we analyze the effects of varying the hyperparameter \(\lambda _{h}\) on performance of ic-CNN. We use Shanghaitech Part-A dataset for this experiment. We show the MAE of the high and low resolution branches as the scalar weight \(\lambda _{h}\) is varied. \(\lambda _{l}\) is kept fixed at \(10^{-2}\). We can see that the LR-CNN branch performs better when \(\lambda _{l}\) is comparable with \(\lambda _{h}\), and its performance degrades when \(\lambda _{h}\) is too large. The performance of HR-CNN improves as \(\lambda _{h}\) is varied from \(10^{-4}\) to \(10^2\). In the extreme case when \(\lambda _{h}\) is set to \(10^{4}\), there is a large degradation in the performance of the LR-CNN branch, which affects the performance of the HR-CNN branch. When \(\lambda _{h}\) is \(10^{4}\), the low resolution prediction task is possibly ignored, and the network solely focuses on solving the high resolution task. In such a scenario, the low resolution prediction does not contain any useful information, which affects the performance of the high resolution branch HR-CNN. We obtain the best results for the HR-CNN branch when \(\lambda _{h}\) is set to \(10^{2}\). In this case, the high resolution loss does not force the network to completely ignore the low resolution task.

In Table 4, we show the training time and the number of parameters of ic-CNN, MCNN, Switching CNN, and CP-CNN. An ic-CNN takes 10 h to train, while a Switching CNN takes around 22 h. An ic-CNN has significantly fewer parameters than a CP-CNN and a Switching CNN. We contacted the authors of MCNN and CP-CNN, but we did not get a response for the training time of these networks.

In Table 5, we analyze the importance of each of the components of our proposed ic-CNN model. We see that both the feature sharing and the feedback of the low resolution prediction are important for ic-CNN. Removing any of these two components leads to significant drop in performance.

Performance across different crowd density: We divide the 182 test images from Shanghaitech Part A into 10 groups on the basis of the crowd count. Each group except the last has 18 test images. We average the crowd count across a group to obtain the average count. GT is the ground truth, ic-CNN is prediction from the high resolution branch. For majority of the count groups, the difference between the average counts for ic-CNN and GT is small.

In Fig. 3, we analyze the performance of ic-CNN across different groups of images with varying crowd counts.

4.3 Experiments on the WorldExpo’10 Dataset

The WorldExpo’10 dataset consists of 1132 annotated video sequences captured by 108 surveillance cameras. Annotated frames from 103 cameras are used for training and the annotated frames from the remaining 5 cameras are used for testing. We trained ic-CNN networks using random crops of sizes \(\frac{H}{2}\times \frac{W}{2}\). We used the networks trained on Shanghaitech Part A for initializing the models for the experiments on the WorldExpo dataset. In Table 6, we compare ic-CNN with other state of art approaches. ic-CNN outperforms these previous approaches on three out of five cases.

4.4 Experiments on the UCF Dataset

The UCF Crowd Counting dataset [6] consists of 50 crowd images collected from the web. Each person in the dataset is annotated with a single dot annotation. The numbers of people in the images vary from 94 to 4545 with an average of 1280 people per image. The average count for the UCF dataset is much larger than the previous two datasets. Following previous works using this dataset, we perform five-fold cross validation and report the MAE and RMSE values. We trained ic-CNN networks using random crops of sizes \(\frac{H}{3}\times \frac{W}{3}\). We compare ic-CNN with previous approaches and show the results in Table 7. Since the dataset is small, adding multiple stages to ic-CNN could lead to overfitting. Hence we only use one-stage ic-CNN on the UCF dataset. ic-CNN achieves the best MAE on this dataset, outperforming CP-CNN by a large margin.

4.5 Qualitative Results

In Fig. 4, we show some qualitative results on images from the Shanghaitech Part-A dataset obtained using ic-CNN. The first three are success cases for ic-CNN, while the last two are failure cases. In the failure cases, we see that ic-CNN sometimes misclassify tree leaves as tiny people in a crowd. In Fig. 5, we show some qualitative results on images from Shanghaitech Part-B dataset.

Qualitative results, some success and failure cases. The four columns show the input image, ground truth annotation map, the low resolution prediction (LR output), and the high resolution prediction map (HR output). The total counts are shown below each density map. The first three rows are success cases for ic-CNN, while the last two are failure cases. ic-CNN sometimes misclassifies tree leaves as people.

Qualitative results on the Shanghaitech Part B dataset. The four columns show the input image, the ground truth annotation map, the low resolution prediction (LR output), and the high resolution prediction map (HR output). Underneath each density map is the total count, rounded to the nearest integer.

5 Conclusions

In this paper, we have proposed ic-CNN, a two-branch architecture for crowd counting via crowd density estimation based. We have also proposed a multi-stage pipeline comprising of multiple ic-CNNs, where each stage takes into account the predictions of all the previous stages. We performed experiments on three challenging crowd counting benchmark datasets and observed the effectiveness of our iterative approach.

References

Boominathan, L., Kruthiventi, S.S., Babu, R.V.: CrowdNet: a deep convolutional network for dense crowd counting. In: Proceedings of the ACM Multimedia Conference (2016)

Chan, A.B., Vasconcelos, N.: Bayesian Poisson regression for crowd counting. In: Proceedings of the International Conference on Computer Vision (2009)

Chen, K., Loy, C.C., Gong, S., Xiang, T.: Feature mining for localised crowd counting. In: Proceedings of the British Machine Vision Conference (2012)

Fu, M., Xu, P., Li, X., Liu, Q., Ye, M., Zhu, C.: Fast crowd density estimation with convolutional neural networks. Eng. Appl. Artif. Intell. 43, 81–88 (2015)

Hoai, M., Zisserman, A.: Talking heads: detecting humans and recognizing their interactions. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (2014)

Idrees, H., Saleemi, I., Seibert, C., Shah, M.: Multi-source multi-scale counting in extremely dense crowd images. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (2013)

Kaufman, E.L., Lord, M.W., Reese, T.W., Volkmann, J.: The discrimination of visual number. Am. J. Psychol. 62(4), 498–525 (1949)

Krizhevsky, A., Sutskever, I., Hinton, G.: ImageNet classification with deep convolutional neural networks. In: Advances in Neural Information Processing Systems (2012)

LeCun, Y., Boser, B., Denker, J.S., Henderson, D.: Backpropagation applied to handwritten zip code recognition. Neural Comput. 1(4), 541–551 (1989)

Lempitsky, V., Zisserman, A.: Learning to count objects in images. In: Advances in Neural Information Processing Systems (2010)

Li, M., Zhang, Z., Huang, K., Tan, T.: Estimating the number of people in crowded scenes by mid based foreground segmentation and head-shoulder detection. In: Proceedings of the International Conference on Pattern Recognition (2008)

Lin, S.F., Chen, J.Y., Chao, H.X.: Estimation of number of people in crowded scenes using perspective transformation. IEEE Trans. Syst. Man Cybern. Part A Syst. Hum. 31(6), 645–654 (2001)

Onoro-Rubio, D., López-Sastre, R.J.: Towards perspective-free object counting with deep learning. In: Proceedings of the European Conference on Computer Vision (2016)

Pham, V.Q., Kozakaya, T., Yamaguchi, O., Okada, R.: Count forest: Co-voting uncertain number of targets using random forest for crowd density estimation. In: Proceedings of the International Conference on Computer Vision (2015)

Sam, D.B., Surya, S., Babu, R.V.: Switching convolutional neural network for crowd counting. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (2017)

Sindagi, V.A., Patel, V.M.: Generating high-quality crowd density maps using contextual pyramid CNNs. In: Proceedings of the International Conference on Computer Vision (2017)

Trick, L.M., Pylyshyn, Z.W.: Why are small and large numbers enumerated differently? A limited-capacity preattentive stage in vision. Psychol. Rev. 101(1), 80 (1994)

Wang, C., Zhang, H., Yang, L., Liu, S., Cao, X.: Deep people counting in extremely dense crowds. In: Proceedings of the ACM Multimedia Conference (2015)

Zhang, C., Li, H., Wang, X., Yang, X.: Cross-scene crowd counting via deep convolutional neural networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (2015)

Zhang, Y., Zhou, D., Chen, S., Gao, S., Ma, Y.: Single-image crowd counting via multi-column convolutional neural network. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (2016)

Acknowledgement

This work was supported by SUNY2020 Infrastructure Transportation Security Center. The authors would like to thank Boyu Wang for participating on the discussions and experiments related to an earlier version of the proposed technique. The authors would like to thank NVIDIA for their GPU donation.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Ranjan, V., Le, H., Hoai, M. (2018). Iterative Crowd Counting. In: Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds) Computer Vision – ECCV 2018. ECCV 2018. Lecture Notes in Computer Science(), vol 11211. Springer, Cham. https://doi.org/10.1007/978-3-030-01234-2_17

Download citation

DOI: https://doi.org/10.1007/978-3-030-01234-2_17

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-01233-5

Online ISBN: 978-3-030-01234-2

eBook Packages: Computer ScienceComputer Science (R0)