Abstract

Unsupervised domain adaptation has attracted considerable attention because it facilitates learning on an unlabeled target domain by borrowing knowledge from a well-established source domain. Recent domain adaptation methods extract effective features by incorporating pseudo labels for the target domain, which helps reduce cross-domain distribution divergence. However, existing approaches treat target label optimization and domain-invariant feature learning as separate steps. To address this issue, we develop a novel Graph Adaptive Knowledge Transfer (GAKT) model that jointly optimizes target labels and domain-free features in a unified framework. Specifically, semi-supervised knowledge adaptation and label propagation on target data are coupled so that they benefit each other, and hence the marginal and conditional disparities across domains are better alleviated. Experimental evaluation on two cross-domain visual datasets demonstrates the effectiveness of our approach in facilitating unlabeled target task learning, compared with state-of-the-art domain adaptation approaches.



1 Introduction

In real-world applications, we often have access to abundant target data with limited or even no labels [1, 2], and manually annotating the data would be extremely time-consuming and expensive. Domain adaptation has shown appealing performance in handling this challenge through knowledge transfer from an external well-established source domain, which follows a different distribution from the target domain [3,4,5,6,7,8,9,10,11,12]. The mechanism of domain adaptation is to uncover the common latent factors across source and target domains, and to use them to reduce both the marginal and conditional mismatch between domains in the feature space. Following this, different domain adaptation techniques have been developed, including feature alignment and classifier adaptation (Fig. 1).

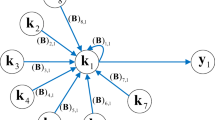

Illustration of our proposed algorithm, where the source and target domains follow different distributions in the original feature space. We jointly seek two coupled projections \(P_{s/t}\) to map the original data to a domain-invariant space. (a) A semi-supervised class-wise adaptation strategy is proposed by assigning every target data point a probabilistic label. (b) When source and target data have a smaller domain mismatch, a graph-based label propagation strategy can assign target labels more accurately.

Recent research on domain adaptation has achieved appealing performance by learning effective domain-invariant features from two different domains, such that source knowledge can be adapted to facilitate the recognition task in the target domain [3, 5, 7, 8, 10,11,12,13,14,15,16,17,18,19]. Among these efforts, Maximum Mean Discrepancy (MMD) [20] is one of the most widely used strategies to measure the distribution difference between source and target domains [3, 7, 10, 16, 21]. Later on, many domain adaptation approaches designed a revised class-wise MMD by incorporating pseudo labels of the target data. Those algorithms iteratively assign temporary labels to the target samples and then refine the class-wise domain adaptation regularizer. However, all the existing methods optimize the target labels in a step separate from domain-invariant feature learning. Thus, the two steps may fail to benefit each other effectively.

In this paper, we develop an effective Graph Adaptive Knowledge Transfer (GAKT) framework that unifies domain-invariant feature learning and target label optimization in a joint learning framework. The key idea is to jointly optimize the probabilistic class-wise adaptation term and the graph-based label propagation in a semi-supervised scheme, so that the two procedures benefit each other for effective knowledge transfer. To the best of our knowledge, this is the first work to jointly model knowledge transfer and label propagation in a unified framework. To sum up, our contributions are two-fold:

- We seek a domain-invariant feature space by designing a domain/class-wise adaptation strategy, in which both the marginal and conditional distribution gaps between source and target domains are addressed. Specifically, we develop an iterative refinement scheme to optimize the probabilistic class-wise adaptation term by involving the soft labels of target samples obtained from graph-based label propagation.

- Simultaneously, graph-based label propagation captures more of the intrinsic structure across source and target domains in the domain-free feature space, so the labeled source data can better predict the unlabeled target data through an effective cross-domain graph. Therefore, well-established source knowledge can be reused to recognize unlabeled target samples.

2 Related Work

In this part, we present the related research on domain adaptation and discuss the difference between our method and others.

Domain adaptation has been shown to be an attractive approach in many real-world applications where we have sparse or no label information for the target domain [2]. Specifically, domain adaptation attempts to enhance target learning by borrowing labeled source knowledge, which follows a different distribution from the target domain. For instance, we may take a picture with a cellphone and search the Amazon image pool to recognize the object. Generally, there is a distribution gap between the cellphone picture (low resolution and complex background) and Amazon gallery images (clear background). Hence, the core challenge becomes adapting either one domain or both domains to reduce the distribution mismatch.

Generally, domain adaptation techniques can be split into two lines based on the availability of labeled information in the target domain: semi-supervised domain adaptation and unsupervised domain adaptation. In the semi-supervised scenario [22, 23], we have access to a small amount of labeled target data, which makes domain adaptation easier. A more challenging case is unsupervised domain adaptation [3, 24], in which the target domain is totally unlabeled. Thus, unsupervised domain adaptation attracts more attention. Along this line, domain-invariant feature learning and classifier adaptation are the two main strategies for tackling unsupervised domain adaptation. Specifically, domain-invariant feature learning includes traditional subspace learning [7, 8, 13, 21, 25,26,27] and deep learning methods [5, 19, 28, 29]. Among them, subspace-based domain adaptation approaches have shown promising results by aligning two different domains in a domain-invariant low-dimensional feature space. Deep domain adaptation methods aim to build an end-to-end deep architecture that jointly mitigates the domain shift and learns a general classifier. Besides, subspace-based domain adaptation can still improve the adaptation ability over deep domain adaptation when built on effective deep features, e.g., DeCAF features.

Hence, we employ subspace learning to address marginal/conditional divergences across two different domains. Meanwhile, a cross-domain graph built on the source and target data better transfers the label information by capturing the intrinsic structure in the shared space. Specifically, label propagation [30, 31] is unified with domain-invariant feature learning to refine the class-wise adaptation term, which in turn benefits feature learning. In other words, the soft labels and their probabilities are not only needed, but also effective. This is the most significant difference compared to existing works. More interestingly, we can adapt the newly designed loss function to a deep architecture to fine-tune the network parameters in a unified deep domain adaptation framework [18, 32].

3 The Proposed Algorithm

Given a labeled source domain with \(n_s\) data points and feature dimension d from C categories: \(\{X_s,Y_s\} = \{(x _{s,1},y _{s,1}),\cdots ,(x _{s,n_s},y _{s,n_s})\}\), in which \(x _{s,i}\in \mathbb {R}^d\) is the feature vector while \(y _{s,i}\in \mathbb {R}^C\) is its corresponding one-hot label vector. Define \(X_t\) as an unlabeled target domain with \(n_t\) data points, i.e., \(X_t = \{x _{t,1},\cdots ,x _{t,n_t}\}\), in which \(x _{t,i}\in \mathbb {R}^d\). In the domain adaptation problem, the source and target domains share the same label space, and the goal is to recognize the unlabeled target samples.

Since source and target samples are distributed in different feature spaces, i.e., \(X_s \not\subseteq \mathrm {span}(X_t)\), we seek a latent common space shared across source and target domains through two coupled projections \(P_{s/t} \in \mathbb {R}^{d\times {p}}\), where p is the dimension of the low-dimensional space (\(p \ll d\)). In this way, the domain shift between source and target can be well addressed, and hence the discriminative knowledge within the well-established source can be reused to facilitate the unlabeled target classification.

3.1 Motivation

Existing transfer subspace learning approaches [3, 10, 13] iteratively predict pseudo labels of the target data through classifiers, e.g., support vector machines (SVM). Most recently, Hou et al. improved the performance by further refining the pseudo labels with label propagation after obtaining initial labels from classifiers [7]. Moreover, Yan et al. explored a weighted MMD to account for class weight bias and enhance domain adaptation performance [12]. However, they built the revised MMD by assigning each target data point only a single specific label. This could hurt the knowledge transfer, since target samples might be predicted wrongly in the beginning. Moreover, when target samples from two classes have overlapping distributions, assigning only one hard label to those samples can easily undermine the intrinsic structure within the data.

Another observation is that target label prediction improves with more iterations of model optimization, so the probability assigned to the true class of an unlabeled target sample rises over time. When target samples are predicted with inaccurate labels, they are unable to contribute to the designed class-wise adaptation term. For those reasons, we allow each target sample to be assigned to the entire label pool with different probabilities, which we refer to as a “soft label”. In other words, although the probability of the true class may be relatively low in the early stage, it can still benefit the label propagation stage. To further extract effective features, we design a probabilistic class-wise adaptation regularizer that conveys knowledge transfer by capturing the intrinsic structure of the target domain. On the other hand, label propagation becomes more effective with more discriminative domain-invariant features. Finally, these two strategies tend to trigger and benefit each other during model optimization, which could also be formulated from the unified perspective of multi-view representation [2].

3.2 Probabilistic Class-Wise Domain Adaptation

We first review the empirical Maximum Mean Discrepancy (MMD) [3], a widely used approach to alleviating marginal distribution disparity. MMD compares two distributions through the distance between the sample means of the two domains in the projected feature space, namely

in which \(x _{s/t,i/j}\) denotes the i / j-th sample of \(X_{s/t}\) while \(\mathbf 1 _{n_{s/t}}\) is an all one column vector with size of \(n_{s/t}\).
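For reference, with the notation just introduced, the empirical MMD between the projected domains can be written out as below. This is a sketch assembled from the surrounding definitions rather than a verbatim copy of Eq. (1): it assumes that the samples are stored as the columns of \(X_s \in \mathbb{R}^{d\times n_s}\) and \(X_t \in \mathbb{R}^{d\times n_t}\), and the symbol \(\mathcal{M}\) is introduced here only for illustration.

```latex
\[
\mathcal{M}(P_s, P_t)
= \Big\Vert \frac{1}{n_s}\sum_{i=1}^{n_s} P_s^\top x_{s,i}
          - \frac{1}{n_t}\sum_{j=1}^{n_t} P_t^\top x_{t,j} \Big\Vert_2^2
= \Big\Vert \frac{1}{n_s} P_s^\top X_s \mathbf{1}_{n_s}
          - \frac{1}{n_t} P_t^\top X_t \mathbf{1}_{n_t} \Big\Vert_2^2
\]
```

The second form follows because multiplying by the all-one vector sums the columns of \(X_{s/t}\).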

Such an MMD strategy in Eq. (1) is capable of reducing the disparity of the marginal distributions, but it does not address the conditional distribution divergence between the two domains. In classification problems, it is essential to reduce the conditional distribution mismatch between two different domains. When target samples are completely unlabeled, aligning the conditional distributions becomes nontrivial, even when exploring sufficient statistics of the distributions. To that end, we develop a probabilistic class-wise adaptation formula to effectively guide the intrinsic knowledge transfer. In this way, the predicted soft labels of the target samples can benefit the domain alignment even when little knowledge about them is accessible at the beginning.

Let \(F^j_t \in \mathbb {R}^{C}\) denote the probabilistic label of the j-th target data point, in which every element \(f_t^{(c,j)}\) (\(f_t^{(c,j)} \ge 0\) and \(\sum _{c=1}^Cf_t^{(c,j)}=1\)) is the probability that the j-th unlabeled target data point belongs to the c-th category. In other words, each target sample partially contributes to various classes during label prediction. For instance, a “computer” is more likely to be linked to a “monitor” than to a “mug”, because computers and monitors look more visually similar. Hence, such probabilities and linkages between different concepts pave the way for label propagation.

To promote the usage of soft labels in multiple classes and thus address the conditional distribution divergences across two domains, we bring forward the probabilistic labels to the MMD modeling and design a novel weighted class-wise adaption loss function as follows:

in which \(\Vert \cdot \Vert _\mathrm {F}\) indicates the Frobenius norm and \(n_s^c\) is the source sample size of the c-th class. \(n_t^c\) denotes the target sample size of the c-th category, which is neither an integer nor directly provided (we cannot obtain the true target sample size of each class). Thus, we approximate \(n_t^c\) by \(n_t^c = \sum _{j=1}^{n_t}f_t^{(c,j)}\). Note that \(N_{s/t} \in \mathbb {R}^{C\times {C}}\) are diagonal matrices whose c-th diagonal element is \(\frac{1}{n_{s/t}^c}\). In fact, our probabilistic class-wise adaptation term (Eq. (2)) is able to counteract the impact of class weight bias by considering prior category distributions.
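The probabilistic class-wise term described above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: samples are assumed to be the columns of `Xs` and `Xt`, `Ys` is an \(n_s\times C\) one-hot matrix, `Ft` is an \(n_t\times C\) soft-label matrix, and the function name and the `eps` guard against empty classes are hypothetical.

```python
import numpy as np

def classwise_adaptation_loss(Ps, Pt, Xs, Xt, Ys, Ft, eps=1e-12):
    """Squared Frobenius distance between projected class means.

    Xs: (d, n_s), Xt: (d, n_t), samples as columns (assumed layout).
    Ys: (n_s, C) one-hot source labels; Ft: (n_t, C) soft target labels.
    """
    ns_c = Ys.sum(axis=0)                       # source class sizes n_s^c
    nt_c = Ft.sum(axis=0)                       # soft target class sizes n_t^c = sum_j f_t^(c,j)
    Ns = np.diag(1.0 / np.maximum(ns_c, eps))   # diagonal matrix with entries 1 / n_s^c
    Nt = np.diag(1.0 / np.maximum(nt_c, eps))   # diagonal matrix with entries 1 / n_t^c
    src_means = Ps.T @ Xs @ Ys @ Ns             # (p, C) projected source class means
    tgt_means = Pt.T @ Xt @ Ft @ Nt             # (p, C) probability-weighted target class means
    return np.linalg.norm(src_means - tgt_means, "fro") ** 2
```

When the two domains coincide and the soft labels equal the true one-hot labels, the term vanishes; uniform soft labels collapse every target class mean onto the global mean, so the term is generally positive.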

The above Eqs. (1) and (2) learn two domain-specific projections individually, and we also want to mitigate the discrepancy across domains by constraining the source and target projections to be similar. Along this line, an auxiliary mapping function M was explored to link the source projection with the target one, i.e., \(\Vert P_s-MP_t\Vert _\mathrm {F}^2\) [33, 34], while Zhang et al. jointly optimized them and adopted \(\Vert P_s - P_t\Vert _\mathrm {F}^2\) to preserve the source discriminative information and the target variance [35]. However, they ignored the domain-specific parts and focused on the domain-shared projection bases. In this paper, we aim both to uncover more shared bases across source and target domains and to preserve the domain-specific bases, and thus we use the \(l_{2,1}\)-norm to constrain the two projections, i.e., \(\Vert P_s - P_t\Vert _{2,1}\). By integrating Eq. (1), Eq. (2), and projection alignment, we have the objective with constraints \(P_s^\top X_sH_sX_s^\top P_s = \mathrm {I}_p\) and \(P_t^\top X_tH_tX_t^\top P_t = \mathrm {I}_p\):

where \(\bar{Y}_s = [\mathbf 1 _{n_s}, Y_s]\), \(\bar{F}_t = [\mathbf 1 _{n_t}, F_t]\), and \(\bar{N}_{s/t} = \mathbf {diag}(\frac{1}{n_{s/t}}, N_{s/t})\). \(H_{s/t} = \mathrm {I}_{n_{s/t}} - \frac{1}{n_{s/t}}\mathbf {I}_{n_{s/t}}\) denotes the centering matrix, in which \(\mathrm {I}_{n_{s/t}}\) is the identity matrix and \(\mathbf {I}_{n_{s/t}}\) is the \(n_{s/t}\times {n_{s/t}}\) matrix of ones. As discussed in [3, 7], such a constraint helps preserve the data variance after adaptation, which brings additional discriminative ability to the learned \(P_{s/t}\).
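Two of the building blocks used in this objective, the centering matrix and the \(l_{2,1}\)-norm, are easy to check numerically. The helpers below are a small sketch with hypothetical names; the \(l_{2,1}\)-norm is taken here as the sum of the Euclidean norms of the rows, which is the convention assumed for \(\Vert P_s - P_t\Vert_{2,1}\).

```python
import numpy as np

def centering_matrix(n):
    """H = I_n - (1/n) * ones((n, n)); applying H removes the mean."""
    return np.eye(n) - np.ones((n, n)) / n

def l21_norm(A):
    """Sum of the Euclidean norms of the rows of A."""
    return np.linalg.norm(A, axis=1).sum()
```

Under this convention, a row of \(P_s - P_t\) that is exactly zero costs nothing, so the penalty favors projections that agree on many rows while still allowing a few rows to differ between domains.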

3.3 Joint Knowledge Transfer and Label Propagation

Suppose \(\mathbf {G}\) is an undirected graph defined on the mixture of the source and target with \(n = n_s+n_t\) samples and W is its corresponding weight matrix. We could model a smooth Label Propagation through the graph Laplacian regularization [30, 31, 36]:

where \(F = [F_s;F_t] \in \mathbb {R}^{n\times {C}}\) and \(L = D-W \in \mathbb {R}^{n\times {n}}\) represents the graph Laplacian [31, 36,37,38]. Here, D denotes a diagonal matrix whose diagonal entries are the column sums of W. Specifically,

where \(W_{st} = W_{ts}^\top \in \mathbb {R}^{n_s\times {n_t}}\) is a weight matrix across source and target samples.
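As a small numerical sketch of the graph regularizer, the snippet below builds the Laplacian from a symmetric weight matrix, splits it into source and target blocks, and evaluates \(\mathrm{tr}(F^\top LF)\). It assumes the standard sign convention \(L = D - W\), under which the trace equals \(\frac{1}{2}\sum_{i,j}W_{ij}\Vert F_i - F_j\Vert_2^2\) and is non-negative, and it assumes the first `n_s` rows of `W` correspond to source samples. The function names are hypothetical.

```python
import numpy as np

def laplacian_blocks(W, n_s):
    """Return L = D - W and its source/source, source/target, target/target blocks."""
    D = np.diag(W.sum(axis=0))          # degree matrix from column sums of W
    L = D - W
    return L, L[:n_s, :n_s], L[:n_s, n_s:], L[n_s:, n_s:]

def smoothness(F, L):
    """Graph smoothness tr(F^T L F) of the label matrix F (rows are samples)."""
    return np.trace(F.T @ L @ F)
```

A label matrix that is constant on every connected pair of samples gives zero smoothness cost, while strongly connected samples with different labels are penalized in proportion to their edge weight.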

Note the above graph Laplacian shares the same learning target \(F_t\), and we may merge the two learning problems and formulate the final learning objective for joint knowledge adaption:

To deal with the constraint \(F_t\mathbf 1 _{C} = \mathbf 1 _{n_t}\) efficiently, we relax the equality condition by incorporating a penalty regularizer \(\gamma \Vert F_t\mathbf 1 _{C} -\mathbf 1 _{n_t}\Vert _2^2\) into the objective formula (Eq. (5)), in which \(\gamma \) is the positive penalty parameter.

Remark: Our proposed approach combines effective domain-free feature learning and target label propagation in a unified knowledge adaptation framework, so that the two components benefit each other and improve recognition in the target domain. With domain/class-wise adaptation, the well-established source information is able to boost the target recognition. With the domain shift mitigated, an effective graph across source and target can be built so that source labels are propagated to the unlabeled target data. Meanwhile, when more accurate labels are assigned to the target data, the probabilistic class-wise adaptation term can transfer more effective knowledge across the two domains. Such an EM-like refinement facilitates the knowledge transfer.

3.4 Optimization Solution

It is easy to check that \(P_s, P_t\) and \(F_t\) in Eq. (5) cannot be jointly optimized. To address this optimization problem, we first transform it into the augmented Lagrangian function by relaxing the non-negative constraint as:

where \(\varPhi \) is the Lagrange multiplier for the constraint \(F_{t}\ge 0\). While it is difficult to jointly optimize \(F_t, P_s\) and \(P_t\), the problem is solvable for each variable when the others are fixed. Specifically, we explore an EM-like optimization scheme to update the variables. In the E-step, we fix \(P_s, P_t\) and update \(F_t\) and \(N_t\); in the M-step, we update the subspace projections \(P_s, P_t\) using the updated \(F_t, N_t\). Hence, we optimize the two sub-problems iteratively.

E-step: Label Propagation

Given two subspace projections \(P_s\) and \(P_t\), we could insert \(F_s = Y_s\) into \(\mathrm {tr}(F^\top {L}F)\) and get \(\mathrm {tr}(F_t^\top {L}_{tt}F_t+2Y_s^\top {L}_{st}F_t)\). Thus, we obtain the partial derivative of \(\mathcal {J}\) w.r.t. \(F_t\), by setting it to zero as:

Using the KKT conditions \(\varPhi \odot F_t = 0\) [39] (\(\odot \) denotes the element-wise product of two matrices), we achieve the following equations for \(F_t\):

Following [37], we obtain the updating rule:

where \(\mathcal {F}_W =\gamma F_t \mathbf 1 _{C}^\top + \lambda (W_{tt}F_t+W_{st}^\top Y_s)\) and \(\mathcal {F}_D = \gamma \mathbf 1 _{n_t} \mathbf 1 _{C}^\top +\lambda D_{tt}F_t\). Specifically, \([A]^+\) means that the negative elements of the matrix A are replaced by 0. Similarly, \([A]^-\) means that the positive elements of the matrix A are replaced by 0. Once \(F_t\) is obtained, \(N_t\) can be updated accordingly.
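For the graph term alone, the substitution \(F_s = Y_s\) used at the start of the E-step can be spelled out as below. This is a sketch that assumes a symmetric Laplacian partitioned into source and target blocks \(L_{ss}, L_{st}, L_{tt}\) with \(L_{ts} = L_{st}^\top\); it covers only \(\mathrm{tr}(F^\top LF)\), not the full objective \(\mathcal{J}\).

```latex
\[
\mathrm{tr}(F^\top L F)
= \mathrm{tr}(Y_s^\top L_{ss} Y_s)
+ 2\,\mathrm{tr}(Y_s^\top L_{st} F_t)
+ \mathrm{tr}(F_t^\top L_{tt} F_t),
\qquad
\frac{\partial\, \mathrm{tr}(F^\top L F)}{\partial F_t}
= 2\,\big(L_{tt} F_t + L_{st}^\top Y_s\big).
\]
```

The first term does not depend on \(F_t\) and can be dropped. Under the standard convention \(L = D - W\) with diagonal \(D\), we have \(L_{tt} = D_{tt} - W_{tt}\) and \(L_{st} = -W_{st}\), so the gradient becomes \(2\,(D_{tt}F_t - W_{tt}F_t - W_{st}^\top Y_s)\), which is consistent with the \(W_{tt}F_t + W_{st}^\top Y_s\) and \(D_{tt}F_t\) terms appearing in \(\mathcal{F}_W\) and \(\mathcal{F}_D\).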

M-step: Learning Subspace Projection

When \(F_t\) and \(N_t\) are optimized, we could update the subspace projection \(P = [P_s, P_t]\) with the refined class-wise adaption term. Thus,

where

G is a \(d\times {d}\) diagonal matrix with its i-th diagonal element as \(G_{ii} = \frac{1}{\Vert \mathbf {p}_i\Vert _2}\) if \(\mathbf {p}_i \ne 0\), otherwise \(G_{ii} = 0\), where \(\mathbf {p}_i\) is the i-th row vector of \(P_s-P_t\). Equation (9) can be solved as a generalized eigen-decomposition problem: \((\mathbf {T}+\alpha \mathbf {G})\rho = \eta \mathbf {S}\rho \). The vectors \(\rho _i~(i \in [0, p-1])\) are the eigenvectors associated with the p smallest eigenvalues. Thus, we obtain the updated subspace projection \(P = [\rho _0,\cdots ,\rho _{p-1}]\). After we obtain \(P_s\) and \(P_t\), we update G.
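The M-step computations described above can be sketched in NumPy as follows. This is an illustration under assumptions, not the authors' code: the matrix passed as `A` stands for an already assembled \(\mathbf{T}+\alpha\mathbf{G}\), `S` is assumed symmetric positive definite (in practice a small ridge may be needed), and both function names are hypothetical. The generalized problem is reduced to a standard symmetric one through a Cholesky factor of `S`.

```python
import numpy as np

def row_reweighting(Ps, Pt, tol=1e-12):
    """Diagonal matrix with 1 / ||p_i||_2 for each nonzero row p_i of Ps - Pt, else 0."""
    norms = np.linalg.norm(Ps - Pt, axis=1)
    g = np.zeros_like(norms)
    nonzero = norms > tol
    g[nonzero] = 1.0 / norms[nonzero]
    return np.diag(g)

def smallest_generalized_eigvecs(A, S, p):
    """Solve A rho = eta S rho (A symmetric, S positive definite).

    Returns the p smallest eigenvalues and their eigenvectors as columns.
    """
    Lc = np.linalg.cholesky(S)          # S = Lc @ Lc.T
    Linv = np.linalg.inv(Lc)
    B = Linv @ A @ Linv.T               # equivalent standard symmetric problem
    eta, V = np.linalg.eigh(B)          # eigenvalues in ascending order
    rho = Linv.T @ V[:, :p]             # map back to the original coordinates
    return eta[:p], rho
```

The returned eigenvectors satisfy \(\rho^\top \mathbf{S}\rho = \mathrm{I}_p\) by construction, which matches the form of the variance-preserving constraints on the projections.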

By alternating the E and M steps detailed above, we iteratively optimize the problem until the objective function converges. Notably, we can generally obtain a probabilistic labeling of the unlabeled target samples with two effective coupled projections. Thus, if we exploit such a label assignment strategy (Eq. (8)) to improve the projection discriminability (Eq. (9)) in an iterative fashion, we are able to alternately enhance the labeling quality and the feature learning. To initialize \(F_t\), we adopt label propagation (Eq. (4)) with L built on the original features of the source and target domains. Furthermore, we can derive the partial derivatives with respect to X, i.e., \(\frac{\partial \mathcal {J}}{\partial X}\), and then apply standard back-propagation to optimize the weights of a convolutional neural network.

3.5 Time Complexity

In this section, we analyze the model complexity for our approach. There are two main time-consuming components: (1) Non-negative \(F_t\) optimization (Step 1); (2) Subspace projection learning (Step 2).

In detail, the major time-consuming terms in the non-negative \(F_t\) optimization are the matrix multiplications in Step 1. Generally, multiplying matrices of size \(n_t\times {n_t}\) costs \(\mathcal {O}(n_t^3)\). Suppose there are l multiplication operations; then Step 1 costs \(\mathcal {O}(ln_t^3)\). Step 2 costs \(\mathcal {O}(d^3)\) for the generalized eigen-decomposition of Eq. (9) for matrices of size \(d\times {d}\), which could be reduced to \(\mathcal {O}(d^{2.376})\) through the Coppersmith-Winograd method [40]. Furthermore, we can speed up operations on large matrices through sparse matrix representations and state-of-the-art divide-and-conquer approaches. Meanwhile, we can also store intermediate computation results that are reused in every stage.

4 Experiments

In this part, we first illustrate the benchmarks as well as the experimental settings, and then present the comparative evaluations with existing domain adaptation approaches, further with some property analysis.

4.1 Datasets and Experimental Setting

Office-31+Caltech256 consists of 10 common categories from the Office-31 and Caltech-256 benchmarks, with 3 subsets (Amazon, Webcam, and DSLR) from Office-31 and one from Caltech-256. Note that Amazon and Caltech-256 images are collected online with a clear background, while Webcam and DSLR images are taken in office environments with different devices. For a fair comparison, we utilize the 4096-dim DeCAF\(^6\) feature and adopt the full-sample protocol provided by [24] in unsupervised domain adaptation.

Office+Home [18] contains 4 domains, each with 65 categories of daily objects. Specifically, Art denotes artistic depictions of objects; Clipart is a collection of clipart pictures; Product shows object images with a clear background, similar to the Amazon subset in Office-31; Real-World represents object images collected with a regular camera. We adopt deep features of the \(fc_7\) layer in the VGG-F model, pre-trained on ImageNet 2012 [18].

We mainly compare with six state-of-the-art shallow domain adaptation approaches to evaluate the effectiveness of our algorithm: Geodesic Flow Kernel (GFK) [24], Joint Distribution Adaptation (JDA) [3], Closest Common Space Learning (CCSL) [16], Label Structural Consistency (LSC) [7], Joint Geometrical and Statistical Alignment (JGSA) [35] and Probabilistic Unsupervised Domain Adaptation (PUnDA) [11]. Moreover, Label Propagation (LP) [30] is adopted as a baseline, which directly builds a graph on the original features across source and target domains. For both LP and our model, we adopt a k-nearest neighbor graph (\(k=5\) in our experiments) with heat-kernel weights [30]. We further compare to several deep domain adaptation models, i.e., DAN [32], DHN [18] and WDAN [12], to show the superiority of our model. Specifically, we adopt the VGG-F structure for these three methods for a fair comparison. Also, we cite the results reported by other publications when the experimental settings are exactly the same, or run the available source code under other settings.

In all our experiments, we set \(\lambda = 10\) and \(\alpha = 0.1\), and use a large penalty \(\gamma = 10^4\) to enforce that each soft label sums to 1. We adopt the top-1 classification accuracy on the unlabeled target samples as the evaluation metric.
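The k-nearest-neighbor graph with heat-kernel weights used in these experiments can be sketched as follows. Only \(k=5\) and the heat-kernel weighting come from the text; the row-wise sample layout, the bandwidth heuristic for `sigma`, and the symmetrization rule are assumptions made for this illustration, and the function name is hypothetical.

```python
import numpy as np

def knn_heat_kernel_graph(Z, k=5, sigma=None):
    """Z: (n, d) array with samples as rows. Returns a symmetric (n, n) weight matrix."""
    sq = np.sum(Z ** 2, axis=1)
    dist2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * Z @ Z.T, 0.0)
    np.fill_diagonal(dist2, np.inf)                 # exclude self-loops
    idx = np.argsort(dist2, axis=1)[:, :k]          # k nearest neighbours of each sample
    if sigma is None:
        # Assumed heuristic: root mean squared distance to the selected neighbours.
        sigma = np.sqrt(np.mean(np.take_along_axis(dist2, idx, axis=1)))
    W = np.zeros_like(dist2)
    rows = np.repeat(np.arange(Z.shape[0]), k)
    cols = idx.ravel()
    W[rows, cols] = np.exp(-dist2[rows, cols] / (2.0 * sigma ** 2))
    return np.maximum(W, W.T)                       # keep an edge if either endpoint selects it
```

In the joint model, `Z` would hold the projected source and target features stacked together, so that the resulting graph links samples across domains.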

4.2 Comparison Experiments

First of all, we evaluate our algorithm and other competitors with source and target as one single subset. Tables 1 and 2 list the comparison results of 12 different cases based on Office-31+Caltech-256 and Office+Home, respectively. From the performance, we notice that our proposed approach works better than other baselines across almost all the cases. Especially in two cases, our model achieves 100% accuracy. Also in several tasks, e.g., \(C\rightarrow {W}\), the performance of our proposed algorithm is 3% higher than the state-of-the-art approaches.

Secondly, we explore the evaluation on knowledge transfer with multiple sub-domains. Figure 2 lists the comparison results from different methods on various imbalanced cross-domain combinations. For x-axis in Fig. 2, either domain consists of multiple sub-domain data, and complete results of different approaches are listed. From these results, we see our approach works favorably against state-of-the-art unsupervised domain adaptation algorithms.

Discussion: LP works well in some cases where the distribution difference between the two domains is not large, e.g., \(D\rightarrow {W}\), \(W\rightarrow {D}\), \(A\rightarrow {C}\) and \(C\rightarrow {A}\). However, it cannot achieve appealing performance in some challenging tasks, e.g., \(C\rightarrow {W}\). In contrast, our approach improves over LP by 18.9% on \(C\rightarrow {W}\), which verifies its effectiveness. Another observation is that deep features pre-trained on a large-scale dataset can mitigate the domain shift to some extent, especially for different resolutions.

CCSL is designed for imbalanced domain transfer, by associating such data with the capability of keeping discriminative and structural information within and across domains. However, it is too specific and not general. From the performance, we observe that our algorithm consistently outperforms CCSL. JDA and RTML both adopt pseudo labels of the target samples from a specific classifier to refine the class-wise adaptation term. In this way, every target sample is assigned a single label, which may cause problems when the assigned labels are wrong. RTML further explores marginal denoising reconstruction, and thus achieves better results than JDA.

Besides, LSC adopts a specific classifier to initialize the pseudo labels of the target, and then refines the labels through label propagation on a cross-domain graph. However, it still uses the hard labels of the target data to build the class-wise adaptation. Most importantly, label prediction and feature learning are learned separately in JDA, RTML and LSC. Compared with these methods, we conduct joint feature learning and label propagation so that they benefit each other for more effective knowledge transfer. Compared with [7], while the two models share a similar spirit, our method concentrates on building a joint UDA learning model. The model in [7], however, designs a separate label propagation step after feature alignment, which may hinder the knowledge transfer. In addition, [7] still feeds the hard labels back to optimize feature adaptation, which strictly follows conventional semi-supervised learning. In contrast, we introduce soft labels together with a class-wise adaptation strategy that is well integrated with the label propagation framework. In other words, the soft labels and their probabilities are not only needed, but also effective. This is the most significant difference compared to existing works. From the results, we notice that our model performs better in all the cases.

Moreover, JGSA also seeks two linear projections that transform source and target data into a low-dimensional domain-invariant space in which the geometrical and distribution shift are mitigated jointly. However, it does not consider the class-wise adaptation to mitigate the conditional distribution difference. Similarly, PUnDA also seeks linear transformations per domain to project data into a shared space, which jointly reduces the domain mismatch while improving the classifier’s discriminability.

Deep domain adaptation methods simultaneously build deep architectures and conduct knowledge transfer. From our results, we notice that such a joint learning strategy benefits the performance compared with several traditional linear transfer learning models. However, our model further outperforms those deep domain adaptation models, i.e., DAN, DHN, WDAN, which indicates that the two separate steps in our pipeline can also adapt knowledge across different domains. Specifically, built on advanced deep features, our model is able to further improve the performance, which primarily stems from our probabilistic class-wise adaptation scheme that explores the intrinsic structure of the data during knowledge transfer. Moreover, traditional deep domain adaptation approaches always adopt a pre-trained model, which is similar to our case of working directly on deep features. The difference is that we only fine-tune the final layer. From our experimental results, we find that the knowledge transfer part plays a key role in successful domain adaptation, while fine-tuning the deep structure parameters only slightly influences the final performance. To verify this point, we further evaluate our model against deep domain adaptation with different architectures, i.e., GoogLeNet [41] and VGGnet-16 [42]. Our model adopts the features generated from GoogLeNet and VGG-16, whose dimensionalities are 1024 and 4096, respectively. The experimental results are provided in Fig. 3, where we observe that the proposed approach still obtains better performance than the deep domain adaptation models.

Finally, we notice that the performance of all the algorithms on Office+Home is much lower than on Office-31+Caltech256, due to the fact that there are more categories and more samples in Office+Home.

4.3 Empirical Evaluation

In this part, we present the convergence analysis, influence of parameters, and dimensionality of two coupled projections.

First of all, we verify the convergence of our proposed model. The cross-domain task \(C\rightarrow A\) on Office-31+Caltech256 is adopted for evaluation. The convergence curve is shown in Fig. 4(a), where we observe that our approach converges very well.

Secondly, we evaluate the influence of parameter \(\lambda \) and show the recognition results at various values in Fig. 4(b), in which we notice that our model generates better performance across three different cases when \(\lambda \in [1,10]\). Generally, we set \(\lambda = 10\) as default during the experiments.

Moreover, we verify the dimension property of \(P_s\) and \(P_t\). In Fig. 4(c), we obtain an initially significant increase followed by a stable recognition performance, which denotes that our model works very well even when the data are lying in a low-dimensional space. Thus, we could verify that effective projections further enhance the knowledge transferability based on the deep features.

Finally, we show that the proposed soft-label MMD is superior to the hard-label MMD. Specifically, after each update of \(F_t\), we post-process it by transforming it into a zero-one matrix. We show the results of this variant and of our proposed model on 12 cross-domain tasks (Office+Home dataset) in Fig. 5, where the soft-label version generally improves over the hard-label version by 1–2%. A comparison with existing methods also gives a rough idea of the advantage of soft labels over the “hard” ones. For example, our model uses soft-label MMD while LSC [7] uses hard-label MMD, although both use label propagation. In the reported results, our model already works better than LSC.
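The hard-label post-processing described above can be sketched as follows. This is only an illustrative sketch, not the authors' code: it assumes that \(F_t\) is stored as a NumPy array with one row per target sample and one column per class, and that the zero-one matrix is obtained by keeping the largest entry of each row. The function name `harden_soft_labels` is hypothetical.

```python
import numpy as np


def harden_soft_labels(F_t: np.ndarray) -> np.ndarray:
    """Map a soft-label matrix to a zero-one matrix.

    Assumption: rows are target samples, columns are classes, and the
    hard label of a sample is the class with the largest soft score.
    """
    hard = np.zeros_like(F_t, dtype=float)
    rows = np.arange(F_t.shape[0])
    # Put a single 1 in each row at the position of the row maximum.
    hard[rows, F_t.argmax(axis=1)] = 1.0
    return hard


# Toy soft labels for two target samples and three classes.
F_t = np.array([[0.6, 0.3, 0.1],
                [0.2, 0.5, 0.3]])
F_hard = harden_soft_labels(F_t)
```

Under this assumption, the hard-label variant discards the relative confidence among classes that the soft-label version keeps, which is the information the comparison in Fig. 5 is meant to isolate.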

Recognition accuracies (%) for domain adaptation experiments on 12 cross-domain tasks (listed in Table 2) on the Office+Home dataset.

Furthermore, we visualize the soft labels \(F_t\) to show that our model improves the label prediction through model optimization (an example is shown in Fig. 6). From the results, we notice that our approach enhances the label prediction obtained by the original LP, which means that our “soft labels” are optimized during model training. We also report how many images are wrongly classified by LP [30] but correctly classified by the proposed approach, and vice versa. Specifically, we evaluate on the Office+Home database with its 4 sets, i.e., Art (2411 samples), Clipart (4325 samples), Product (4341 samples), and Real World (4308 samples); the results for the 12 cross-domain tasks are shown in Table 3. We notice that our model wrongly classifies some images that are correctly recognized by LP, which may be because the additional domain alignment hurts the label propagation for these samples. However, our model correctly classifies significantly more samples than LP. This indicates that our joint adaptation enhances the label propagation ability across the labeled source and unlabeled target domains.
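The per-task statistics discussed above amount to counting disagreements between two sets of predictions. A minimal sketch of this counting is given below, assuming that the ground-truth labels and the predictions of LP and of the proposed model are available as integer arrays of the same length. The function name `disagreement_counts` and the toy labels are hypothetical and are not taken from Table 3.

```python
import numpy as np


def disagreement_counts(y_true, y_lp, y_ours):
    """Count samples on which exactly one of the two methods is correct."""
    y_true, y_lp, y_ours = (np.asarray(a) for a in (y_true, y_lp, y_ours))
    lp_ok = y_lp == y_true
    ours_ok = y_ours == y_true
    return {
        # Wrong under LP, correct under the proposed model.
        "lp_wrong_ours_right": int(np.sum(~lp_ok & ours_ok)),
        # Correct under LP, wrong under the proposed model.
        "lp_right_ours_wrong": int(np.sum(lp_ok & ~ours_ok)),
    }


# Toy example with five target samples (illustrative values only).
counts = disagreement_counts(
    y_true=[0, 1, 2, 1, 0],
    y_lp=[0, 2, 2, 0, 1],
    y_ours=[0, 1, 1, 1, 0],
)
```

Reporting both counts, rather than only the difference in accuracy, shows how many samples the joint adaptation gains and how many it loses relative to LP.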

5 Conclusion

In this paper, we developed a novel Graph Adaptive Knowledge Transfer framework for unsupervised domain adaptation. Specifically, we built a probabilistic class-wise adaptation term by assigning multiple labels to the target samples through graph-based label propagation. Meanwhile, two effective subspace projections were learned via the probabilistic class-wise adaptation strategy so that the intrinsic information across source and target could be preserved with the graph. In this way, accurate labels could be assigned to the target samples with label propagation. These two strategies worked in an EM-like way to improve the unlabeled target recognition. Experiments on two cross-domain visual benchmarks verified the effectiveness of the designed algorithm over other state-of-the-art domain adaptation models, including deep domain adaptation ones.

References

Patel, V.M., Gopalan, R., Li, R., Chellappa, R.: Visual domain adaptation: a survey of recent advances. IEEE Sig. Process. Mag. 32(3), 53–69 (2015)

Ding, Z., Shao, M., Fu, Y.: Robust multi-view representation: a unified perspective from multi-view learning to domain adaption. In: IJCAI, pp. 5434–5440 (2018)

Long, M., Wang, J., Ding, G., Sun, J., Yu, P.S.: Transfer feature learning with joint distribution adaptation. In: ICCV, pp. 2200–2207 (2013)

Baktashmotlagh, M., Harandi, M.T., Lovell, B.C., Salzmann, M.: Unsupervised domain adaptation by domain invariant projection. In: ICCV, pp. 769–776 (2013)

Ding, Z., Shao, M., Fu, Y.: Deep low-rank coding for transfer learning. In: IJCAI, pp. 3453–3459 (2015)

Shao, M., Ding, Z., Zhao, H., Fu, Y.: Spectral bisection tree guided deep adaptive exemplar autoencoder for unsupervised domain adaptation. In: AAAI, pp. 2023–2029 (2016)

Hou, C.A., Tsai, Y.H.H., Yeh, Y.R., Wang, Y.C.F.: Unsupervised domain adaptation with label and structural consistency. IEEE TIP 25(12), 5552–5562 (2016)

Tsai, Y.H.H., Hou, C.A., Chen, W.Y., Yeh, Y.R., Wang, Y.C.F.: Domain-constraint transfer coding for imbalanced unsupervised domain adaptation. In: AAAI, pp. 3597–3603 (2016)

Wei, P., Ke, Y., Goh, C.K.: Deep nonlinear feature coding for unsupervised domain adaptation. In: IJCAI, pp. 2189–2195 (2016)

Ding, Z., Fu, Y.: Robust transfer metric learning for image classification. IEEE TIP 26(2), 660–670 (2017)

Gholami, B., Rudovic, O., Pavlovic, V.: PUnDA: probabilistic unsupervised domain adaptation for knowledge transfer across visual categories. In: ICCV, pp. 3581–3590 (2017)

Yan, H., Ding, Y., Li, P., Wang, Q., Xu, Y., Zuo, W.: Mind the class weight bias: weighted maximum mean discrepancy for unsupervised domain adaptation. In: CVPR, pp. 2272–2281 (2017)

Li, J., Zhao, J., Lu, K.: Joint feature selection and structure preservation for domain adaptation. In: IJCAI, pp. 1697–1703 (2016)

Liu, H., Shao, M., Ding, Z., Fu, Y.: Structure-preserved unsupervised domain adaptation. IEEE TKDE (2018). https://ieeexplore.ieee.org/document/8370901/

Ding, Z., Shao, M., Fu, Y.: Latent low-rank transfer subspace learning for missing modality recognition. In: AAAI, pp. 1192–1198 (2014)

Hsu, T.M.H., Chen, W.Y., Hou, C.A., Tsai, Y.H.H., Yeh, Y.R., Wang, Y.C.F.: Unsupervised domain adaptation with imbalanced cross-domain data. In: ICCV, pp. 4121–4129 (2015)

Herath, S., Harandi, M., Porikli, F.: Learning an invariant Hilbert space for domain adaptation. In: CVPR, pp. 3956–3965 (2017)

Venkateswara, H., Eusebio, J., Chakraborty, S., Panchanathan, S.: Deep hashing network for unsupervised domain adaptation. In: CVPR, pp. 5018–5027 (2017)

Zhang, W., Ouyang, W., Li, W., Xu, D.: Collaborative and adversarial network for unsupervised domain adaptation. In: CVPR, pp. 3801–3809 (2018)

Gretton, A., Borgwardt, K.M., Rasch, M., Schölkopf, B., Smola, A.J.: A kernel method for the two-sample-problem. In: NIPS, pp. 513–520 (2007)

Li, J., Lu, K., Huang, Z., Zhu, L., Shen, H.T.: Transfer independently together: a generalized framework for domain adaptation. IEEE TCYB (2018). https://ieeexplore.ieee.org/document/8337102/

Kumar, A., Saha, A., Daume, H.: Co-regularization based semi-supervised domain adaptation. In: NIPS, pp. 478–486 (2010)

Saenko, K., Kulis, B., Fritz, M., Darrell, T.: Adapting visual category models to new domains. In: Daniilidis, K., Maragos, P., Paragios, N. (eds.) ECCV 2010. LNCS, vol. 6314, pp. 213–226. Springer, Heidelberg (2010). https://doi.org/10.1007/978-3-642-15561-1_16

Gong, B., Shi, Y., Sha, F., Grauman, K.: Geodesic flow kernel for unsupervised domain adaptation. In: CVPR, pp. 2066–2073 (2012)

Shekhar, S., Patel, V., Nguyen, H., Chellappa, R.: Generalized domain-adaptive dictionaries. In: CVPR, pp. 361–368 (2013)

Shao, M., Kit, D., Fu, Y.: Generalized transfer subspace learning through low-rank constraint. IJCV 109(1–2), 74–93 (2014)

Li, S., Song, S., Huang, G., Ding, Z., Wu, C.: Domain invariant and class discriminative feature learning for visual domain adaptation. IEEE TIP 27(9), 4260–4273 (2018)

Ding, Z., Nasrabadi, N.M., Fu, Y.: Semi-supervised deep domain adaptation via coupled neural networks. IEEE TIP 27(11), 5214–5224 (2018)

Chen, Q., Liu, Y., Wang, Z., Wassell, I., Chetty, K.: Re-weighted adversarial adaptation network for unsupervised domain adaptation. In: CVPR, pp. 7976–7985 (2018)

Zhou, D., Bousquet, O., Lal, T.N., Weston, J., Schölkopf, B.: Learning with local and global consistency. NIPS 16(16), 321–328 (2004)

Wang, L., Ding, Z., Fu, Y.: Adaptive graph guided embedding for multi-label annotation. In: IJCAI, pp. 2798–2804 (2018)

Long, M., Cao, Y., Wang, J., Jordan, M.I.: Learning transferable features with deep adaptation networks. In: ICML, pp. 97–105 (2015)

Fernando, B., Habrard, A., Sebban, M., Tuytelaars, T.: Unsupervised visual domain adaptation using subspace alignment. In: ICCV, pp. 2960–2967 (2013)

Wang, S., Ding, Z., Fu, Y.: Coupled marginalized auto-encoders for cross-domain multi-view learning. In: IJCAI, pp. 2125–2131 (2016)

Zhang, J., Li, W., Ogunbona, P.: Joint geometrical and statistical alignment for visual domain adaptation. In: CVPR, pp. 1859–1867 (2017)

Nguyen, C.H., Mamitsuka, H.: Discriminative graph embedding for label propagation. IEEE TNN 22(9), 1395–1405 (2011)

Zhao, H., Ding, Z., Fu, Y.: Multi-view clustering via deep matrix factorization. In: AAAI, pp. 2921–2927 (2017)

Ding, Z., Shao, M., Fu, Y.: Deep robust encoder through locality preserving low-rank dictionary. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9910, pp. 567–582. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46466-4_34

Kuhn, H.W.: Nonlinear programming: a historical view. In: Giorgi, G., Kjeldsen, T.H. (eds.) Traces and Emergence of Nonlinear Programming, pp. 393–414. Springer, Basel (2014). https://doi.org/10.1007/978-3-0348-0439-4_18

Coppersmith, D., Winograd, S.: Matrix multiplication via arithmetic progressions. In: ACM STOC, pp. 1–6 (1987)

Szegedy, C., et al.: Going deeper with convolutions. In: CVPR, pp. 1–9 (2015)

Simonyan, K., Zisserman, A.: Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556 (2014)

Acknowledgment

This work is supported in part by the NSF IIS award 1651902, NIJ Graduate Research Fellowship 2016-R2-CX-0013, ONR Young Investigator Award N00014-14-1-0484, and U.S. Army Research Office Young Investigator Award W911NF-14-1-0218.

© 2018 Springer Nature Switzerland AG

Cite this paper

Ding, Z., Li, S., Shao, M., Fu, Y. (2018). Graph Adaptive Knowledge Transfer for Unsupervised Domain Adaptation. In: Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds) Computer Vision – ECCV 2018. ECCV 2018. Lecture Notes in Computer Science(), vol 11206. Springer, Cham. https://doi.org/10.1007/978-3-030-01216-8_3

DOI: https://doi.org/10.1007/978-3-030-01216-8_3

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-01215-1

Online ISBN: 978-3-030-01216-8
