Abstract

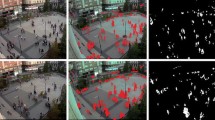

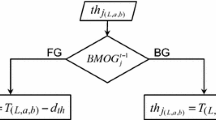

Time-Adaptive, Per-Pixel Mixtures of Gaussians (TAPPMOGs) have recently become a popular choice for robust modeling and removal of complex, changing backgrounds at the pixel level. However, TAPPMOG-based methods cannot easily be made to model dynamic backgrounds with highly complex appearance, or to adapt promptly to sudden “uninteresting” scene changes such as the repositioning of a static object or the turning on of a light, without further undermining their ability to segment foreground objects, such as people, when they remain in front of the background for extended periods. To alleviate tradeoffs such as these and, more broadly, to allow TAPPMOG segmentation results to be tailored to the specific needs of an application, we introduce a general framework for guiding pixel-level TAPPMOG evolution with feedback from “high-level” modules. Each such module can use pixel-wise maps of positive and negative feedback to impress upon the TAPPMOG some definition of foreground that is best expressed through “higher-level” primitives, such as image region properties or the semantics of objects and events. By pooling the foreground error corrections of many high-level modules into a shared, pixel-level TAPPMOG model in this way, we improve the quality of the foreground segmentation and the performance of all modules that make use of it. We show an example of using this framework with a TAPPMOG method and high-level modules that all rely on dense depth data from a stereo camera.
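The abstract describes feedback only as pixel-wise maps of positive and negative corrections. As a rough illustration of how such a map could steer a per-pixel mixture model, here is a minimal sketch that tracks one grayscale pixel with a mixture in the style of Stauffer and Grimson and applies two assumed rules. Positive feedback freezes learning, so a motionless person is not absorbed into the background. Negative feedback raises the learning rate, so a moved object or a lighting change is absorbed quickly. The class `PixelMixture`, both feedback rules, and all parameter values are hypothetical; the paper's own update rules, and its use of color and depth, are not reproduced here.

```python
import math
from dataclasses import dataclass, field


@dataclass
class PixelMixture:
    """Hypothetical single-pixel, single-channel TAPPMOG with a feedback hook."""
    k: int = 3                 # max number of Gaussian components
    alpha: float = 0.01        # ordinary learning rate
    alpha_boost: float = 0.5   # assumed faster rate applied under negative feedback
    match_sigmas: float = 2.5  # x matches a component within this many std devs
    bg_fraction: float = 0.7   # cumulative weight that defines the background set
    init_var: float = 900.0    # variance given to a newly created component
    min_var: float = 4.0       # floor that keeps variances from collapsing
    w: list = field(default_factory=list)
    mu: list = field(default_factory=list)
    var: list = field(default_factory=list)

    def _order(self):
        # Heavy, tight components (large w / sigma) are the most background-like.
        return sorted(range(len(self.w)),
                      key=lambda i: self.w[i] / math.sqrt(self.var[i]),
                      reverse=True)

    def _background_set(self):
        # Smallest prefix of the ranked components whose weights exceed bg_fraction.
        chosen, total = set(), 0.0
        for i in self._order():
            chosen.add(i)
            total += self.w[i]
            if total > self.bg_fraction:
                break
        return chosen

    def _match(self, x):
        # First component, in ranked order, lying within match_sigmas of x.
        for i in self._order():
            if abs(x - self.mu[i]) < self.match_sigmas * math.sqrt(self.var[i]):
                return i
        return None

    def step(self, x, feedback=0):
        """Label x (True = foreground), then update the model.

        feedback: +1 means a high-level module says "foreground",
        -1 means it says "background", 0 means no opinion.
        """
        m = self._match(x)
        is_fg = m is None or m not in self._background_set()
        if feedback > 0:
            # Assumed rule: never learn from pixels a module calls foreground.
            return is_fg
        a = self.alpha_boost if feedback < 0 else self.alpha
        if m is None:
            # Nothing explains x: add a component, or recycle the weakest one.
            if len(self.w) < self.k:
                self.w.append(0.0)
                self.mu.append(float(x))
                self.var.append(self.init_var)
                m = len(self.w) - 1
            else:
                m = min(range(self.k), key=lambda i: self.w[i])
                self.w[m], self.mu[m], self.var[m] = 0.0, float(x), self.init_var
        else:
            # Pull the matched Gaussian toward x (rho = a, a common simplification).
            self.mu[m] += a * (x - self.mu[m])
            d2 = (x - self.mu[m]) ** 2
            self.var[m] = max((1 - a) * self.var[m] + a * d2, self.min_var)
        # Exponential weight update, renormalised so the weights sum to 1.
        self.w = [(1 - a) * wi + (a if i == m else 0.0) for i, wi in enumerate(self.w)]
        total = sum(self.w)
        self.w = [wi / total for wi in self.w]
        return is_fg
```

For instance, after 50 frames at a steady value of 100, a new value of 200 stays foreground for as long as positive feedback is supplied, and the model is left untouched. Under negative feedback, by contrast, the second observation of 200 is already labeled background. Without any feedback, that second observation is still foreground. A frame-level system would simply hold one such model per pixel and pass in the matching entry of each module's feedback map.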




Copyright information

© 2002 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Harville, M. (2002). A Framework for High-Level Feedback to Adaptive, Per-Pixel, Mixture-of-Gaussian Background Models. In: Heyden, A., Sparr, G., Nielsen, M., Johansen, P. (eds) Computer Vision — ECCV 2002. ECCV 2002. Lecture Notes in Computer Science, vol 2352. Springer, Berlin, Heidelberg. https://doi.org/10.1007/3-540-47977-5_36


DOI: https://doi.org/10.1007/3-540-47977-5_36


Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-540-43746-8

Online ISBN: 978-3-540-47977-2

eBook Packages: Springer Book Archive