Abstract

Network traffic classification is important to many network applications. Machine learning is regarded as one of the most effective technique to classify network traffic. In this paper, we adopt the fast correlation-based filter algorithm to filter redundant attributes contained in network traffic. The attributes selected by this algorithm help to reduce the classification complexity and achieve high classification accuracy. Since the traffic attributes contain a large amount of users’ behavior information, the privacy of user may be revealed and illegally used by malicious users. So it’s demanding to classify traffic with certain segment of frames which encloses privacy-related information being protected. After classification, the results do not disclose privacy information, while may still be used for data analysis. Therefore, we propose a random perturbation algorithm based on relationship among different data attributes’ orders, which protects their privacy, thus ensures data security during classification. The experiment results demonstrate that data perturbed by our algorithm is classified with high accuracy rate and data utility.

Similar content being viewed by others

Keywords

1 Introduction

Network traffic classification has attracted research interest recently, because it largely improved efficiency of the management of networks and Quality of Services (QoS) [1]. With the development of Internet technology, new application types are emerging rapidly, especially the application based on non-standard ports and protocol encryption, which reduces the efficiency of port-based method [2] and deep payload inspection (DPI) [3]. Using machine learning (ML) methods to classify network traffic has been widely used. ML-based methods use some statistical features such as message length and duration to identify the type of flow as different application types such as WWW, MAIL, etc. ML techniques may classify new-coming network traffic efficiently and greatly improve the accuracy.

The statistical features of network traffic carry a lot of sensitive information which may represent behaviors and other critical information of users. For example, the size of the data packets sent by a user when downloading and browsing the web are different. If an attacker determines the statistical information in a stream, he may determine the user’s behavior and recognize the user at a high probability. Therefore, there are risks of privacy disclosure by applying ML-based classification. How to achieve a high classification accuracy while not disclosing users’ privacy is the key issue that we study in this paper. This problem is also known as how to tradeoff the data’s utility and security.

2 Related Work

ML-based methods for classification mainly include unsupervised and supervised learning approaches. Unsupervised approaches classify unlabeled data based on the similarities between sample data, such as K-means clustering [4], EM [5], Auto Class [6], DBSCAN [7] and so on. They are suitable for identifying new application types in dynamic environment. The classification accuracy is hard to define and classification results may not include much useful information. Supervised learning algorithms are more popular in this field. Naive Bayes proposed by Moore et al. [8].

C4.5 Decision Tree [9] and K-Nearest Neighbor (KNN) [10] are all popular supervised algorithm. SVM [11] may overcome the problem of localized optimization. Supervised classification algorithms can greatly improve the accuracy of the classification results because there have been useful prior-knowledge before classification. In this paper we focus on using supervised classification algorithms for network traffic classification.

Privacy preserving techniques are applied to protect the privacy of users when publishing data. There are mainly three methods to preserve privacy. The first method is using k-anonymity [12] to reduce the granularity of data representation, such that any given record maps onto at least k-1 other records in the data. This method is vulnerable to malicious attackers and only provide limited protection on sensitive data. Data encryption [13] is the second method for privacy protection which applies encryption methods in the data mining process. This method is more secure than the first one, but hard to be applied in many scenarios because it involves complex computation. Randomized perturbation is the third method in which noise is added for masking the values, such as differential privacy [14]. This method is easy to implement, which is less complex than data encryption. On the other hand, it provides higher security level than k-anonymity.

Therefore, in order for data classification to guarantee a high accuracy rate and preserve privacy, we propose a random perturbation algorithm based on the data attributes’ order relationship. The experimental results indicate that the perturbed data are classified with a satisfactory accuracy while privacy is preserved.

The remainder of the paper is organized as follows: Sect. 3 introduces the process of supervised classification system and describes the algorithm of attributes order adjustment in detail; Sect. 4 presents the perturbation-based network traffic privacy preserving method; Sect. 5 gives the experimental results and analysis; Sect. 6 concludes this paper.

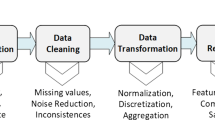

3 The Process of Supervised Classification System

Supervised classification system is consisted of four parts as shown in Fig. 1: training or test sets, attribute selection, classifier and classification results evaluation. The supervised classification system uses a partial set of attributes to identify the type of flow application, and then use the ML-based methods to construct the classifier and classify the network traffic.

3.1 Dataset Statistics

The experimental data sets proposed by Moore et al. [15] is adopted in our paper. The data sets includes 377526 network flow samples and is divided into 10 types. The statistical information of the data sets is shown in the Table 1.

3.2 Attributes Selection

Each sample in the data sets is captured from a complete TCP flow and includes 248 attributes, among which a large number of features do not contribute to flow classification and thus regarded as redundant. These features do not improve the accuracy of classification, but only increase the search complexity for classification algorithms. So we adopt a Fast Correlation-Based Filter algorithm (FCBF) [16] to filter redundant attributes contained in network traffic.

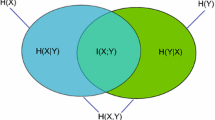

In FCBF algorithm, each attribute in the network traffic is regarded as a set of random events. We measure the correlation in order to filter the attributes with high redundancy. The main parameter adopt in FCBF is Symmetry Uncertainty (S), which is defined as

In above formula, \( H(X) \) stands for the information entropy of the variable X, which is defined as

Where \( P(x_{i} ) \) represents the probability that variable X has value \( x_{i} \).

\( IG(X|Y) \) is used to measure the closeness of the relationship between variables X and Y. It is expressed as

Where \( H(X|Y) = - \sum\limits_{{y_{j} }} {P(y_{j} )} \sum\limits_{{x_{i} }} {P(x_{i} |y_{j} )} \log_{2} (P(x_{i} |y_{j} )) \) represents the mutual information between variables X and Y.

The value of \( SU(X,Y) \) is between [0, 1]. 0 means that two variables are independent and 1 means that two variables determine each other. SU can not only measure the correlation between two attributes, but also measure the correlation between attributes and categories.

The steps of FCBF algorithm are as follows. First, calculate SU between attributes as well as between attributes and categories. Second, select a feature subset \( S^{{\prime }} \) by setting a threshold \( \delta \) of SU. We thereby select \( S^{{\prime }} \) by following steps:

-

(1)

Select the attributes according to the threshold \( \delta \), that is, select \( A_{i} \in S^{{\prime }} \), \( 1 \le i \le N \) satisfies\( SU_{i,c} \ge \delta \). \( SU_{i,c} \, \) denotes the value of \( SU \) that measures the correlation between the attribute i and the class C.

-

(2)

Remove the redundant attributes. For the attribute i and attribute j that selected by (1), if \( \, SU_{i,j} \ge SU_{i,c} \), the attribute j is regarded as a redundant attribute where the \( \, SU_{i,j} \) denotes the value of \( SU \) that measures the correlation between the attribute i and the attribute j.

Finally, the attributes in feature subset \( S^{{\prime }} \) are output in descending order.

3.3 Classifier

Supervised learning methods mainly classify the network traffic according to the marker sample data, and establish the relationship between the network flow features and the training set sample categories. Naïve Bayes, C4.5 Decision Tree, KNN, SVM are supervised learning methods. In this paper, we would use the above supervised classification algorithms to classify network traffic and compare the performance of different classification algorithms.

3.4 Classification Results Evaluation

The purpose of network traffic classification is to correctly identify the types of network applications. We use accuracy as an indicator to evaluate the quality of traffic classification methods. It is computed as below:

4 Perturbation-Based Network Traffic Privacy Preserving Method

Deep mining of network traffic will lead to leakage of users’ privacy. So we propose a perturbation-based network traffic privacy preserving method, which uses the data after perturbation of the raw data for classification. The flow chart is shown as Fig. 2.

Assume that A is the set of raw attribute data, the number of samples contained in A is n (the number of rows in the data table), that is,

Let the newly generated attribute data set be B after perturbation, the number of samples contained in B is also n, that is,

Assume that each sample contains m attributes, that is, each the n-th sample can be represented as \( X_{n} = (x_{n}^{1} ,x_{n}^{2} , \ldots ,x_{n}^{m} ) \). The value corresponding to the m-th attribute in the data table A is expressed by \( P_{m} = (x_{1}^{m} ,x_{2}^{m} , \ldots ,x_{i}^{m} , \ldots ,x_{n}^{m} ) \). Similarly, the perturbed data table B should also contain m attributes in each sample. The n-th sample of the perturbed data is expressed as \( Y_{n} = (y_{n}^{1} ,y_{n}^{2} , \ldots ,y_{n}^{m} ) \) and the corresponding value of m-th attribute in the data table B is described by \( Q_{m} = (y_{1}^{m} ,y_{2}^{m} , \ldots ,y_{i}^{m} , \ldots ,y_{n}^{m} ) \).

4.1 Count Distribution of Each Attribute

We take the first attribute \( P_{1} = (x_{1}^{1} ,x_{2}^{1} , \ldots ,x_{i}^{1} , \ldots ,x_{n}^{1} ) \) in A as an example to count the distribution. Since each attribute may include many different values, if we calculate probability for each value, the complexity of the algorithm will increase. What’s more, this method cannot be applied to each dimension data so the generality is limited. Therefore, we propose a method by dividing the values into several intervals and the expressions of the corresponding distribution functions in each interval are obtained respectively. The specific process is as follows.

-

(1)

Find the maximum value as \( \hbox{max} (P_{1} ) \) and the minimum value as \( \hbox{min} (P_{1} ) \) in \( P_{1} \).

-

(2)

Divide the data in [\( \hbox{min} (P_{1} ) \), \( \hbox{max} (P_{1} ) \)] into \( k \) segments uniformly and get every partition value respectively. Intern partition values satisfy that:

$$ \hbox{min} (P_{1} ) = s_{1} \le s_{2} \le \ldots\, s_{i} \le \ldots \le s_{{k{ + }1}} = \hbox{max} (P_{1} ) $$

Since the entire data is divided into \( k \) segments evenly, any partition value is calculated by

It is seen from the above formula that the larger \( k \) is, the better match of the calculated distribution function is obtained to the original distribution. However, this results in the increased complexity of calculation.

-

(3)

Count the number of data contained in each partition. The total number of data is denoted as \( num_{total} \). The number within \( ( - \infty ,s_{1} ] \) is denoted as \( num_{1} \) and the number in the interval \( (s_{k} ,s_{{k{ + }1}} ] \) is denoted as \( num_{k} \).

Then we can get the probability at partition value \( s_{1} \) is

The probability at any partition value \( s_{j} \) can be calculated by

-

4)

Use the linear interpolation method to get the expressions of the corresponding distribution functions in each partition respectively after calculating the probability at any partition value. Then we can get the distribution function of the original data. The distribution function \( F(x) \) of \( P_{1} \) is defined as follows.

$$ F(x) = \left\{ {\begin{array}{*{20}l} 0 \hfill & {x\,{ < }\,s_{ 1} } \hfill \\ {\frac{{\sum\limits_{1}^{i} {num_{i} } }}{{num_{total} }}} \hfill & {x = s_{i} } \hfill \\ {F(s_{i} ) + \frac{{F(s_{i + 1} ) - F(s_{i} )}}{{s_{i + 1} - s_{i} }} (x - s_{i + 1} )} \hfill & {s_{i} < x < s_{i + 1} } \hfill \\ 1 \hfill & {x > s_{k + 1} } \hfill \\ \end{array} } \right. $$

We use the above distribution function to approximate the original distribution of data. Then the inverse function is used to generate random data that is independent and identically distributed (iid) to the original data. The inverse function is to exchange the range and domain of the original distribution function. In addition, we guarantee the number of generated data in each partition is the same as the original data and conforms to a uniform distribution. Then we can get the random data of each attribute by repeating the above steps.

4.2 Restore the Relationship Based on the Order

Although the data generated by the above steps retain approximate identical distribution as the original data, the randomly generated data cause damage to utility of the data. In order to restore the correlation among the data as much as possible, we adjust the perturbed data based on their attributes’ order relationship. The following example describes the adjustment process.

Assume that two attributes of the raw data are \( P_{1} = (x_{1}^{1} ,x_{2}^{1} , \ldots ,x_{i}^{1} , \ldots ,x_{n}^{1} ) \) and \( P_{2} = (x_{1}^{2} ,x_{2}^{2} , \ldots ,x_{i}^{2} , \ldots ,x_{n}^{2} ) \). \( P_{1} ' = (x_{1}^{1'} ,x_{2}^{1'} , \ldots ,x_{i}^{1'} , \ldots ,x_{n}^{1'} ) \) is the generated sequence by above steps that has identical distribution to \( \, P_{1} \), \( P_{2} ' = (x_{1}^{2'} ,x_{2}^{2'} , \ldots ,x_{i}^{2'} , \ldots ,x_{n}^{2'} ) \) is the perturbed data from \( \, P_{2} \). We use the order relationship between \( \, P_{1} \, \) and \( \, P_{2} \, \) to adjust the perturbed data \( \, P_{1}^{{\prime }} \) and \( \, P_{2}^{{\prime }} \). Firstly, we obtain the orders in each data sequence. The goal is to keep the perturbed data as close as possible to raw data. Then we use the order relationship between \( \, P_{1} \, \) and \( \, P_{2} \, \) as a reference, the data in \( \, P_{2}^{{\prime }} \) is adjusted based on \( \, P_{1}^{{\prime }} \). If the order in \( \, P_{1} \, \) corresponds to the order in \( \, P_{2} \), the order in \( \, P_{1}^{{\prime }} \) also corresponds to the same order in \( \, P_{2}^{{\prime }} \), that is, the value in \( \, P_{2}^{{\prime }} \) should be adjusted to the corresponding position. For instance, if the smallest value in \( \, P_{1} \, \) corresponds to the largest value in \( \, P_{2} \), the largest value in \( \, P_{2}^{{\prime }} \) should adjust to the proper position to correspond the smallest value in \( \, P_{1}^{{\prime }} \). Repeat the above steps, until all the data adjustments are complete. \( Q_{1} \) and \( Q_{2} \) are the adjusted sequences of \( \, P_{1}^{{\prime }} \) and \( \, P_{2}^{{\prime }} \) respectively. So \( \, Q_{1} \, \) and \( \, Q_{2} \) are published as perturbed data for research on traffic classification.

The published data is a perturbation to the original data according to the above process. What’s more, the random perturbation algorithm based on the order relation can not only prevents data reconstruction but also maintains the correspondence of the original data thus guaranteeing the utility of data.

5 Experiment and Analysis

5.1 Attribute Selection Experiment

We apply the FCBF algorithm to filter redundant attributes in Weka environment and use the default threshold \( \delta \) to select 10-dimensional attributes. Table 2 shows the results of selecting attributes. We do not take the information about ports in the dataset into consideration so as to avoid the impact of ports.

In the experiment, the 248-dimensional attributes in the original network traffic data are compared with the 10-dimensional attribute results selected by the FCBF algorithm. The parameters in the experiment are determined by using the enumeration local search method. Naive Bayes adapts default parameters. The C-SVC algorithm is selected in SVM and the kernel function is RBF. The nonlinear mapping and the use of a step size search strategy result in a penalty factor C = 512 and a nuclear parameter \( \gamma = 0.05 \). An important parameter in the C4.5 decision tree is the significance level \( \, \alpha \) that can be used to set the range of confidence intervals and we set \( \alpha = 0.2 \). KNN has a parameter \( K \) which means the number of neighbors and we set \( K = 5 \). We use 10-fold cross-validation methods to get classification results.

It can be seen from classification results in Table 3, the classification accuracy and the stability of Naïve Bayes has been greatly improved after the FCBF algorithm. The classification accuracy of the KNN algorithm has also been improved to some extent. Although the accuracy of the C4.5 Decision tree and SVM slightly decreased, the running time of the algorithm was greatly reduced due to the reduction of the dimensions. It can be seen that the 10-dimensional attributes selected by the FCBF algorithm can obtain satisfactory classification results and are superior to the original data classification results in terms of classification efficiency. Therefore, we further analyze the 10-dimensional data in the following experiments.

5.2 Data Perturbation Experiment

We first use the first dimension attribute to classify and then increase the dimension of attributes. As the dimension of the attribute increases, the relationship between the dimension of the attribute and the classification accuracy is shown in the Fig. 3.

It is shown in Fig. 3 that the accuracy of each classification algorithm is constantly increasing with the increase of the dimensionality. The accuracy of each classification algorithm would reach more than 90%, which means that data is of high utility. By comparing several classification algorithms, it can be found that the classification accuracy of the SVM algorithm is relatively high.

Next, our proposed perturbation algorithm is applied to the data. First, the perturbation algorithm is applied to the first dimension attribute and generate a sequence that is identically distributed to it. Then the correspondence between the perturbation data and the category is performed according to the correspondence between the original first dimension attribute and the category. After that, the first dimension attribute is used as a criterion to adjust the internal position of the second attribute and then the two-dimensional attribute data are used to classify. Each dimension attribute is perturbed in this way. We repeat the experiment three times and get average in order to further verify the effectiveness of the algorithm. Figures 4 and 5 show the experimental results when we divide the data into 100 segments uniformly, that is, k = 100.

It can be seen from the Figs. 4 and 5, the classification accuracy of each algorithm increases as the dimension of the attribute increases. Compared with the results of the classification of the raw 10-dimensional attributes, it can be seen that the classification accuracy rate has slightly decreased. When the 10-dimensional attributes are all disturbed, the classification accuracy rate can still be accepted, indicating that the data still maintains high utility. In addition, the perturbation-based method is suitable because the data after perturbation can be used for various classification algorithms.

6 Conclusion

Network traffic classification is the process of identifying network applications and classifying the corresponding traffic. In this paper, we first adapt the FCBF algorithm to filter the redundant attributes of network traffic and use several supervised learning methods to classify network traffic. The experiment results show that features selected by the FCBF algorithms can maintain satisfactory accuracy and improve the efficiency of classification algorithms. Based on selected attributes, a privacy preserving algorithm using perturbation for network traffic classification is proposed in order to protect users’ privacy information in network traffic. The algorithm first generates a random sequence with the identical distribution as the original attributes, and then adjusts the data according to the order relationship of the original attributes. The experimental results indicate that the privacy preserving algorithm can effectively achieve the accuracy of classification, that is, guarantee the utility of data. On the other hand, due to the use of random perturbation method, it is difficult for an attacker to reconstruct the original data that helps to guarantee the security of data. Moreover, the perturbation algorithm is scalable to be used for various classification algorithms.

References

Guo, L., Shen, H.: Privacy-preserving internet traffic publication. In: IEEE Trustcom/BigDataSE/ISPA, pp. 884–891 (2017)

Moore, A.W., Papagiannaki, K.: Toward the accurate identification of network applications. In: Dovrolis, C. (ed.) PAM 2005. LNCS, vol. 3431, pp. 41–54. Springer, Heidelberg (2005). https://doi.org/10.1007/978-3-540-31966-5_4

Madhukar, A., Williamson, C.: A longitudinal study of P2P traffic classification. In: 14th IEEE International Symposium on Modeling, Analysis, and Simulation of Computer and Telecommunication Systems, pp. 179–188 (2006)

Kanungo, T., Mount, D.M., Netanyahu, N.S.: An efficient K-means clustering algorithm: analysis and implementation. IEEE Trans. Pattern Anal. Mach. Intell. 24, 881–892 (2002)

McGregor, A., Hall, M., Lorier, P., Brunskill, J.: Flow clustering using machine learning techniques. In: Barakat, C., Pratt, I. (eds.) PAM 2004. LNCS, vol. 3015, pp. 205–214. Springer, Heidelberg (2004). https://doi.org/10.1007/978-3-540-24668-8_21

Zander, S., Nguyen, T., Armitage, G.: Automated traffic classification and application identification using machine learning. In: IEEE Conference on Local Computer Networks, pp. 250–257 (2005)

Erman, J., Arlitt, M., Mahanti, A.: Traffic classification using clustering algorithms. In: Proceedings of the 2006 SIGCOMM Workshop on Mining Network Data, pp. 281–286 (2006)

Moore, A.W., Zuev, D.: Internet traffic classification using bayesian analysis techniques. ACM SIGMETRICS Perform. Eval. Rev. 33, 50–60 (2005)

Williams, N., Zander, S.: Evaluating machine learning algorithms for automated network application identification, Center for Advanced Internet Architectures Technical report (2006)

Li, W., Moore, A.W.: A machine learning approach for efficient traffic classification. In: 15th IEEE International Symposium on Modeling, Analysis, and Simulation of Computer and Telecommunication Systems, pp. 310–317 (2007)

Deng, H., Yang, A.M.: P2P traffic classification method based on SVM. In: Computer Engineering and Applications (2006)

Aggarwal, C.C.: On k-anonymity and the curse of dimensionality. In: Proceedings of the 31st International Conference on Very Large Data Bases, pp. 901–909 (2005)

Waters, B.: Efficient identity-based encryption without random oracles. In: Cramer, R. (ed.) EUROCRYPT 2005. LNCS, vol. 3494, pp. 114–127. Springer, Heidelberg (2005). https://doi.org/10.1007/11426639_7

Dwork, C.: Differential Privacy. In: Bugliesi, M., Preneel, B., Sassone, V., Wegener, I. (eds.) ICALP 2006, Part II. LNCS, vol. 4052, pp. 1–12. Springer, Heidelberg (2006). https://doi.org/10.1007/11787006_1

Moore, A.W., Zuev, D.: Discriminators for use in ow-based classification (2005)

Yu, L., Liu, H.: Feature selection for high-dimensional data: a fast correlation-based filter solution. In: 20th International Conference on Machine Learning, pp. 856–863 (2003)

Acknowledgement

This work was done under the support of Research Initiative Grant of Australian Research Council Discovery Projects funding DP150104871, Beijing Natural Science Foundation Grant No. 4172045 and National Science Foundation of China Grant No. 61501025.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2019 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Lu, Y., Tian, H., Shen, H., Xu, D. (2019). Privacy Preserving Classification Based on Perturbation for Network Traffic. In: Park, J., Shen, H., Sung, Y., Tian, H. (eds) Parallel and Distributed Computing, Applications and Technologies. PDCAT 2018. Communications in Computer and Information Science, vol 931. Springer, Singapore. https://doi.org/10.1007/978-981-13-5907-1_13

Download citation

DOI: https://doi.org/10.1007/978-981-13-5907-1_13

Published:

Publisher Name: Springer, Singapore

Print ISBN: 978-981-13-5906-4

Online ISBN: 978-981-13-5907-1

eBook Packages: Computer ScienceComputer Science (R0)