Abstract

With the advent of big data, interest for new data mining methods has increased dramatically. The main drawback of traditional data mining methods is the lack of comprehensibility. In this paper, the firefly algorithm was employed for standalone binary classification, where each solution is represented by two classification rules that are easy understandable by users. Implicitly, the feature selection is also performed by the algorithm. The results of experiments, conducted on three well-known datasets publicly available on web, were comparable with the results of the traditional methods in terms of accuracy and, therefore, the huge potential was exhibited by the proposed method.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Data Mining is the most complex part of the Knowledge Discovery from Data (KDD) process that is comprised of: Data selection and creation, preprocessing (i.e., data cleaning and transformation), data mining, and evaluation [9]. Typically, the data preprocessing captures the feature extraction and feature selection. The aim of the former is to derive a new, less complex dataset, while the latter to find the best subset of features from a set of features. Classification and clustering are two of the most widely studied tasks of data mining, where the classification is referred to a prediction of the class labels on the basis of test observations during the process of learning [16].

Mainly, the traditional classification methods are Decision Trees [14], Bayesian networks [6], Neural Networks [7], and Support Vector Machines [8]. Although these methods are able to find the local optimal classification models in some situations, the majority of them are not very comprehensible, and thus hard to handle by casual users. Usually, they are time consuming too. Fortunately, searching for the best classification model of all the possible candidates can be defined as an optimization problem appropriate for solving with stochastic nature-inspired population-based algorithms. Here, the quality of solutions can be evaluated according to classification accuracy and comprehensibility. The majority of these algorithms represents the classification model in terms of “If-then” rules, and are, therefore, close to human comprehension.

Stochastic nature-inspired population-based algorithms have frequently been applied to data mining in the last three decades. For instance, Srikanth et al. in [18] proposed the Genetic Algorithm (GA) for clustering and classification. In the Swarm Intelligence (SI) domain, Particle Swarm Optimization (PSO) and Ant Colony Optimization (ACO) attracted many scientists to use them for solving the problems in data mining. For instance, Sousa et al. [17] compared the implemented PSO algorithms for data mining with the GA, while Ant-Miner, developed by Parpinelly et al. [11] using ACO, was proposed to discover classification rules. The more complete surveys of using EAs in data mining can be found in [4, 5, 15], while the review of papers describing SI-based algorithms in data mining was presented in [10]. Recently, a Gravitational Search (GS) has achieved excellent results in discovering classification models, as reported by Peng et al. [13].

This paper tries to answer the question whether the stochastic nature-inspired population-based algorithms can be competitive tool for pure binary classification compared with the classical data mining methods. Here, the “pure” means that algorithms perform classification task standalone, i.e., without any interaction with traditional methods. The Firefly Algorithm (FA) [20] for binary classification was proposed, capable of discovering the classification models and evaluating their quality according to a classification accuracy. In our opinion, the main advantage of the FA against the PSO algorithm lays in the principle of FA working, because particles in this algorithm are not dependent on the global best solution as in PSO only, but also on the more attractive particles in the neighborhood. On the other hand, the model in the proposed FA consists of two classification rules, i.e., one for True Negative (TN) and the other for True Positive (TP) classification results. Prior to classification, feature selection is executed implicitly by FA.

The proposed FA was applied to three well-known datasets for binary classification that are publicly available on the web. The obtained results showed big potential in binary classification field that could also be applied for general classification.

The main goals of this paper are as follows:

-

to develop the new classification method based on real-coded FA,

-

to encode two classification rules simultaneously, and decode by the new genotype-phenotype mapping,

-

to perform the feature selection implicitly by the classification,

-

to evaluate the proposed method on some binary classification datasets.

In the remainder of the paper, the structure is as follows: Sect. 2 introduces fundamentals of the FA. In Sect. 3, the proposed classification method is described in detail. The experiments and results are presented in Sect. 4, while the paper is concluded with Sect. 5, in which directions for the future work are also outlined.

2 Fundamentals of the Firefly Algorithm

The inspiration for the Firefly Algorithm (FA) was fireflies with flashing lights that can be admired on clear summer nights. The light is a result of complex chemical reactions proceeding in a firefly‘s body and has two main purposes for the survival of these small lightning bugs: (1) To attract mating partners, and (2) To protect against predators. As follows from a theory of physics, the intensity of the firefly‘s light decreases with increasing the distance r from the light source, on the one hand, and the light is absorbed by the air as the distance from the source increases, on the other.

Both physical laws of nature are modeled in the FA developed by Yang at 2010 [20], as follows: The FA belongs to a class of SI-based algorithms, and therefore operates with a population of particles representing solutions of the problem in question. Thus, each solution is represented as a real-valued vector, in other words:

where N denotes the population size, D a dimension of the problem to be solved, and t is a generation number. Here, the elements are initialized according to the following equation:

where U(0, 1) denotes the random number drawn from uniform distribution in interval [0, 1], and \( Ub _j\) and \( Lb _j\) are the upper and lower bounds of the j-th element of the vector.

The physical laws of a firefly flashing are considered in the FA by introducing the light intensity relation, as follows:

where \(I_0\) denotes the light intensity at the source, and \(\gamma \) is a light absorption coefficient. Similar to the light intensity, the attraction between two fireflies, where the brighter is capable of attracting a potential mating partner more, is calculated according to the following equation:

where \(\beta _0\) is the attraction at \(r=0\).

The distance \(r_{i,j}^{(t)}\) between two fireflies \(\mathbf {x}_i^{(t)}\) and \(\mathbf {x}_j^{(t)}\) is expressed as an Euclidian distance, as follows:

The variation operators are implemented as a move of a definite virtual firefly i towards the more attractive firefly j according to the following equation:

which consists of three terms: The current position \(\mathbf {x}_i^{(t)}\) of the i-th firefly, the social component determining the move of the i-th firefly towards the more attractive j-th firefly, and a randomization component determining the random move of the same firefly in the search space.

Typically, the step size scaling factor \(\alpha \) is proportional to the characteristics of the problem, while the randomization factor \(\epsilon _i\) is a random number drawn from Gaussian distribution with mean zero and standard deviation one, denoted as N(0, 1). Contrarily, the uniform distribution U(0, 1) from interval [0, 1] was used in our study instead of the normal distribution.

The quality of the solution, expressed by the fitness function, is, in the FA, proportional to the light intensity as \(I(\mathbf {x}_i)\propto f(\mathbf {x}_i)\). The pseudo-code of the FA is illustrated in the Algorithm 1, from which it can be seen that this consists of the following components: (1) Representation of a solution, (2) Initialization (line 1), (3) Termination condition (line 4), (4) Move operator (line 8), (5) Evaluation function (lines 2 and 11), and (6) Ranking and finding the best solution (line 13).

3 Proposed Method

The task of the proposed stochastic nature-inspired population-based algorithm is to search for the model appropriate for binary classification of an arbitrary dataset. The model consists of two rules containing features of the dataset for predicting the True Negative and True Positive values. Thus, the learning is divided into a training phase, in which 80% of dataset instances are included, and a test phase, where we operate with the remaining 20% of the instances in the same dataset. The search for a model is defined as an optimization, where the fitness function is defined as a classification accuracy metric that is expressed mathematically as:

where TN= True Negative, TP= True Positive, FN= False Negative, and FP= False Positive.

Solutions \(\mathbf {x}_i\) for \(i=1,\ldots , N \) in the proposed algorithm are represented as real-valued vectors with elements \(x_{i,j}\in [0,1]\) for \(j=1,\ldots ,L\), representing features, where L is the length of a solution, to which the binary vector \(\mathbf {b}_{i}=\{b_{i,j}\}\) is attached with elements \(b_{i,k}\in \{0,1\}\) for \(k=1,\ldots , M \), and \( M \) is the number of features. These are obtained in the preprocessing phase, where the dataset in question is analyzed in detail. The features can be either categorical (i.e., \(b_{i,k}=0\)) or numerical (i.e., \(b_{i,k}=1\)). The former consists of attributes drawn from a discrete set of feasible values, while the latter of continuous intervals limited by their lower and upper bounds. Each feature has its own predecessor \( control \), determining its presence or absence in the specific rule.

In summary, the length of the solution L is calculated as:

where \( num\_of\_category\_attr \) denotes the number of categorical features, num_of_ numeric_attr is the number of numerical features, and one is reserved for \( threshold \) that determines if the definite feature belongs to the rule or not. Obviously, the feature belongs to the rule when the relation \( control \ge threshold \) is satisfied.

In order to transform the representation of solutions into their problem context, the genotype-phenotype mapping is needed. The genotype-phenotype mapping determines how the genotype \(\mathbf {x}_i\) of length L, calculated according to Eq. (8), is mapped into the corresponding phenotype \(\mathbf {y}_i\) for \(k=1,\ldots , M \), where the variable \( M \) denotes the number of features in a dataset.

There are two ways in which to perform the genotype-phenotype mapping, depending on the type of feature: Actually, the categorical variables demand two, and the numerical even three elements for this mapping. In general, the mapping is expressed mathematically as (Fig. 1):

for \(k=1,\ldots ,M\), where \(\left| Attr _k\right| \) denotes the size of the k-th attribute set \( Attr _k=\{a_1,\ldots ,a_{n_k}\}\), and \(D_k\) is a domain of feasible values of attributes, expressed as \(( Max _k- Min _k)\), where \( Max _k\) and \( Min _k\) represent the maximum and minimum values of the numerical feature found in the dataset.

In summary, the phenotype value \(y_{i,k}\) can obtain three values after the genotype-phenotype mapping: (1) \(-1\), If the feature is not present in the rule, (2) The attribute of the feature set, if the feature is categorical, and (3) The interval of the feasible values, if the feature is numerical.

4 Experiments and Results

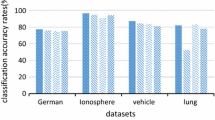

The aim of conducting experiments was twofold: (1) To evaluate the performance of the proposed method on some well-known binary datasets, and (2) To compare the obtained results with the results of some classical classification methods. In line with this, the results of the FA for binary classification were compared with the results obtained by: (1) Random Forest (RF) [3], (2) Multi-Layer Perceptron (MLP) [21], and (3) Bagging [2]. All algorithms in the experiments were applied to three well-known datasets for binary classification taken from the UCI Machine Learning Repository [1], whose characteristics are depicted in Table 1.

As can be seen from the table, binary datasets in question are relatively elementary, because the number of features are less than 10, while the number of instances does not exceed the value of 1,000.

The parameter setting of the FA during the tests is presented in Table 2, from which it can be seen that the maximum number of fitness function evaluations amounts to 5, 000. Settings of the other algorithm parameters were taken from the existing literature. This means that no specific optimization of parameter settings was performed in the study. Moreover, the proposed FA did not include any domain-specific knowledge about imposed classification problems incorporated in the sense of adaptation or hybridization.

Indeed, the proposed FA for binary classification was implemented in the Python programming language using the external NiaPy library [19]. The implementations of the remaining three methods were taken from the scikit-learn Python package [12], where default parameter settings were adopted. Let us emphasize that 25 independent runs were conducted for each method in question, where the achieved classification accuracy was collected after each run. As a result, the quality of the methods was evaluated according to the five aforementioned standard statistical measures: minimum, maximum, average, median, and standard deviation values.

The detailed results of the comparative analysis according to classification accuracy are illustrated in Table 3, where five statistical measures are analyzed according to the used algorithms and the observed datasets. Here, the best results are presented in bold case. As can be seen in the Table 3, the best results were achieved by the RF and MLP classification methods, where the RF gained the better accuracy by classifying the Pima dataset, the MLP was better at the Haberman’s, while, at the Breast’s, both mentioned methods obtained the same classification accuracy.

According to Table 4, where the percent in deviation of the results of the definite method from the best results designated by ‘\(\ddagger \)’ in the Table, are calculated, the RF and MLP classification methods exhibit the best percent in general, because they outperformed all the others even three times. The bagging achieved the best mean results by classification of the Pima dataset. Although the FA for binary classification did not achieve the best accuracy in any instance of dataset, its best, as well as mean results, were no worse than 10 percentage points of the best results, except at Breast’s, where the accuracy was close to this border value (precisely 89.49 %).

Finally, an example of classification rules generated by the proposed FA in classifying the Pima Diabetes dataset is illustrated in Table 5 that is divided into two parts: (1) Feature, and (2) Classification rules. The former consists of three fields: Sequence number, feature name and type. The latter is divided into two rules, i.e., for True Negative TN, and for True Positive TP classifications.

As can be seen from the Table, there are eight features in the dataset. Interestingly, the dataset supports only numerical attributes. These attributes are, therefore, represented as continuous domains of values. Thus, the first rule determines the combination attributes that are classified as True Negative predictions, while the second rule as True Positive predictions.

From this table, it can be concluded that this representation of rules is undoubtedly comprehensive, and, in that way, is easily understandable by the user.

5 Discussion

The huge progress in big data has caused rapid development of new data mining methods that need to satisfy two requests: (1) To process enormous volumes of data, and (2) To ensure enough processing time for their analysis. The classical data mining methods suffer from a lack of comprehensibility that disallows users to use them as effectively as possible. Mainly, the stochastic nature-inspired population-based algorithms are well-known general tools suitable for solving the hardest optimization problems. Recently, this family of algorithms has been applied to data mining field, in order to search for the best model in the model search space.

In this preliminary study, the FA was proposed for the binary classification task, with the following advantages: The FA search process searches for new solutions, not only on basis of the best global solution, but moves each particle in the search space with regard to its neighborhood consisting of the more attractive particles. Furthermore, the original FA operates with real-valued vectors, which represent the solutions of the problem in question. The genotype-phenotype mapping must be performed in order to transform the representation in the genotype space into the solution in the problem context. In our case, the mapping decodes two classification rules from each solution, where the first is dedicated for classification of TN predictions, while the second for classification of TP. Moreover, the algorithm is capable of performing the feature selection implicitly, because only the more important features must be presented in the solution. Finally, the features can be either categorical or numerical. Both types are represented as real values and, therefore, no discretization is necessary.

The proposed FA for binary classification was applied to three well-known datasets publicly available on the web. The obtained results were compared with three classical classification methods: RF, MLP, and boosting. Although the FA did not improve the results achieved by the classical methods, they showed that this has a big potential for improving its results in the future, especially due to the fact that the algorithm was used as is, i.e., no features were implemented to improve it.

In line with this, the improvement of the FA in the sense of adaptation and hybridization should be a reasonable direction for the future. However, testing the behavior of the algorithm on general classification problems could also be challenging.

References

Asuncion, A., Newman, D.: UCI machine learning repository (2007)

Breiman, L.: Bagging predictors. Mach. Learn. 24(2), 123–140 (1996)

Breiman, L.: Random forests. Mach. Learn. 45(1), 5–32 (2001)

Cantú-Paz, E.: On the use of evolutionary algorithms in data mining. In: Data Mining: A Heuristic Approach, pp. 22–46. IGI Global (2002)

Freitas, A.A.: A survey of evolutionary algorithms for data mining and knowledge discovery. In: Ghosh, A., Tsutsui, S. (eds.) Advances in Evolutionary Computing. NCS, pp. 819–845. Springer, Heidelberg (2003). https://doi.org/10.1007/978-3-642-18965-4_33

Friedman, N., Geiger, D., Goldszmidt, M.: Bayesian network classifiers. Mach. Learn. 29(2–3), 131–163 (1997)

Haykin, S.: Neural Networks: A Comprehensive Foundation, 2nd edn. Prentice Hall PTR, Upper Saddle River (1998)

Hearst, M.A.: Support vector machines. IEEE Intell. Syst. 13(4), 18–28 (1998)

Maimon, O., Rokach, L.: Introduction to soft computing for knowledge discovery and data mining. In: Maimon, O., Rokach, L. (eds.) Soft Computing for Knowledge Discovery and Data Mining, pp. 1–13. Springer, Boston (2008). https://doi.org/10.1007/978-0-387-69935-6_1

Martens, D., Baesens, B., Fawcett, T.: Editorial survey: swarm intelligence for data mining. Mach. Learn. 82(1), 1–42 (2011)

Parpinelli, R.S., Lopes, H.S., Freitas, A.A.: Data mining with an ant colony optimization algorithm. Trans. Evol. Comput. 6(4), 321–332 (2002)

Pedregosa, F., et al.: Scikit-learn: machine learning in python. J. Mach. Learn. Res. 12(Oct), 2825–2830 (2011)

Peng, L., Yang, B., Chen, Y., Abraham, A.: Data gravitation based classification. Inf. Sci. 179(6), 809–819 (2009)

Ross Quinlan, J.: Induction of decision trees. Mach. Learn. 1(1), 81–106 (1986)

Rekha, S.: A survey of evolutionary algorithms and its use in data mining application. J. Pure Appl. Math. 119(12), 13593–13600 (2018)

Ratnoo, S., Vashishtha, J., Goyal, P., Ahuja, J.: A novel fitness computation framework for nature inspired classification algorithms. Procedia Comput. Sci. 132, 208–217 (2018)

Sousa, T., Silva, A., Neves, A.: Particle swarm based data mining algorithms for classification tasks. Parallel Comput. 30(5–6), 767–783 (2004)

Srikanth, R., George, R., Warsi, N., Prabhu, D., Petry, F.E., Buckles, B.P.: A variable-length genetic algorithm for clustering and classification. Pattern Recogn. Lett. 16(8), 789–800 (1995)

Vrbančič, G., Brezočnik, L., Mlakar, U., Fister, D., Fister Jr., I.: NiaPy: python microframework for building nature-inspired algorithms. J. Open Source Softw. 3, 613 (2018)

Yang, X.-S.: Firefly algorithm, stochastic test functions and design optimisation. Int. J. Bio-Inspired Comput. 2(2), 78–84 (2010)

Zurada, J.M.: Introduction to Artificial Neural Systems, vol. 8. West Publishing Company, St. Paul (1992)

Acknowledgment

Iztok Fister Jr., Grega Vrbančič and Vili Podgorelec acknowledge the financial support from the Slovenian Research Agency (Research Core Funding No. P2-0057). Dušan Fister acknowledges the financial support from the Slovenian Research Agency (Research Core Funding No. P5-0027). Iztok Fister acknowledges the financial support from the Slovenian Research Agency (Research Core Funding No. P2-0041).

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Fister, I., Fister, I., Fister, D., Vrbančič, G., Podgorelec, V. (2020). On the Potential of the Nature-Inspired Algorithms for Pure Binary Classification. In: Krzhizhanovskaya, V.V., et al. Computational Science – ICCS 2020. ICCS 2020. Lecture Notes in Computer Science(), vol 12141. Springer, Cham. https://doi.org/10.1007/978-3-030-50426-7_2

Download citation

DOI: https://doi.org/10.1007/978-3-030-50426-7_2

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-50425-0

Online ISBN: 978-3-030-50426-7

eBook Packages: Computer ScienceComputer Science (R0)