Abstract

Floating-point arithmetics may lead to numerical errors when numbers involved in an algorithm vary strongly in their orders of magnitude. In the paper we study numerical stability of Zernike invariants computed via complex-valued integral images according to a constant-time technique from [2], suitable for object detection procedures. We indicate numerically fragile places in these computations and identify their cause, namely—binomial expansions. To reduce numerical errors we propose piecewise integral images and derive a numerically safer formula for Zernike moments. Apart from algorithmic details, we provide two object detection experiments. They confirm that the proposed approach improves accuracy of detectors based on Zernike invariants.

This work was financed by the National Science Centre, Poland. Research project no.: 2016/21/B/ST6/01495.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

The classical approach to object detection is based on sliding window scans. It is computationally expensive, involves a large number of image fragments (windows) to be analyzed, and in practice precludes the applicability of advanced methods for feature extraction. In particular, many moment functions [9], commonly applied in image recognition tasks, are often preculded from detection, as they involve inner products i.e. linear-time computations with respect to the number of pixels. Also, the deep learning approaches cannot be applied directly in detection, and require preliminary stages of prescreening or region-proposal.

There exist a few feature spaces (or descriptors) that have managed to bypass the mentioned difficulties owing to constant-time techniques discovered for them within the last two decades. Haar-like features (HFs), local binary patterns (LBPs) and HOG descriptor are state-of-the-art examples from this category [1, 4, 14] The crucial algorithmic trick that underlies these methods and allows for constant-time—O(1)—feature extraction are integral images. They are auxiliary arrays storing cumulative pixel intensities or other pixel-related expressions. Having prepared them before the actual scan, one is able to compute fast the wanted sums via so-called ‘growth’ operations. Each growth involves two additions and one subtraction using four entries of an integral image.

In our research we try to broaden the existing repertoire of constant-time techniques for feature extraction. In particular, we have managed to construct such new techniques for Fourier moments (FMs) [7] and Zernike moments (ZMs) [2]. In both cases a set of integral images is needed. For FMs, the integral images cumulate products of image function and suitable trigonometric terms and have the following general forms: \(\mathop {\sum \sum }\nolimits _{j,k} f(j,k) \cos (-2\pi (j t/\text {const}_1 \,+\, k u/\text {const}_2))\), and \(\mathop {\sum \sum }\nolimits _{j,k} f(j,k) \sin (-2\pi (j t/\text {const}_1 \,+\, k u/\text {const}_2))\), where f denotes the image function and t, u are order-related parameters. With such integral images prepared, each FM requires only 21 elementary operations (including 2 growths) to be extracted during a detection procedure. In the case of ZMs, complex-valued integral images need to be prepared, having the form:  , where

, where  stands for the imaginary unit (

stands for the imaginary unit ( ). The formula to extract a single ZM of order (p, q) is more intricate and requires roughly \(\frac{1}{24}p^3-\frac{1}{8}pq^2+\frac{1}{12}q^3\) growths, but still the calculation time is not proportional to the number of pixels.

). The formula to extract a single ZM of order (p, q) is more intricate and requires roughly \(\frac{1}{24}p^3-\frac{1}{8}pq^2+\frac{1}{12}q^3\) growths, but still the calculation time is not proportional to the number of pixels.

It should be remarked that in [2] we have flagged up, but not tackled, the problem of numerical errors that may occur when computations of ZMs are backed with integral images. ZMs are complex numbers, hence the natural data type for them is the complex type with real and imaginary parts stored in the double precision of the IEEE-754 standard for floating-point numbers (a precision of approximately 16 decimal digits). The main culprit behind possible numerical errors are binomial expansions. As we shall show the algorithm must explicitly expand two binomial expressions to benefit from integral images, which leads to numbers of different magnitudes being involved in the computations. When multiple additions on such numbers are carried out, digits of smaller-magnitude numbers can be lost.

In this paper we address the topic of numerical stability. The key new contribution are piecewise integral images. Based on them we derive a numerically safer formula for the computation of a single Zernike moment. The resulting technique introduces some computational overhead, but remains to be a constant-time technique.

2 Preliminaries

Recent literature confirms that ZMs are still being applied in many image recognition tasks e.g: human age estimation [8], electrical symbols recognition [16], traffic signs recognition [15], tumor diagnostics from magnetic resonance [13]. Yet, it is quite difficult to find examples of detection tasks applying ZMs directly. Below we describe the constant-time approach to extract ZMs within detection, together with the proposition of numerically safe computations.

2.1 Zernike Moments, Polynomials and Notation

ZMs can be defined in both polar and Cartesian coordinates as:

where:

is the imaginary unit (

is the imaginary unit ( ), and f is a mathematical or an image function defined over unit disk [2, 17]. p and q indexes, representing moment order, must be simultaneously even or odd, and \(p\geqslant |q|\).

), and f is a mathematical or an image function defined over unit disk [2, 17]. p and q indexes, representing moment order, must be simultaneously even or odd, and \(p\geqslant |q|\).

ZMs are in fact the coefficients of an expansion of function f, given in terms of Zernike polynomials \(V_{p,q}\) as the orthogonal base:Footnote 1

where  . As one can note \(V_{p,q}\) combines a standard polynomial defined over radius r and a harmonic part defined over angle \(\theta \). In applications, finite partial sums of expansion (4) are used. Suppose \(\rho \) and \(\varrho \) denote the imposed maximum orders, polynomial and harmonic, respectively, and \(\rho \geqslant \varrho \). Then, the partial sum that approximates f can be written down as:

. As one can note \(V_{p,q}\) combines a standard polynomial defined over radius r and a harmonic part defined over angle \(\theta \). In applications, finite partial sums of expansion (4) are used. Suppose \(\rho \) and \(\varrho \) denote the imposed maximum orders, polynomial and harmonic, respectively, and \(\rho \geqslant \varrho \). Then, the partial sum that approximates f can be written down as:

2.2 Invariants Under Rotation

ZMs are invariant to scale transformations, but, as such, are not invariant to rotation. Yet, they do allow to build suitable expressions with that property. Suppose \(f'\) denotes a version of function f rotated by an angle \(\alpha \), i.e. \(f'(r,\theta )=f(r,\theta +\alpha )\). It is straightforward to check, deriving from (1), that the following identity holds

where \(M'_{p,q}\) represents a moment for the rotated function \(f'\). Hence in particular, the moduli of ZMs are one type of rotational invariants, since

Apart from the moduli, one can also look at the following products of moments

with the first factor raised to a natural power. After rotation one obtains

Hence, by forcing \(n q + s = 0\) one can obtain many rotational invariants because the angle-dependent term  disappears.

disappears.

2.3 ZMs for an Image Fragment

In practical tasks it is more convenient to work with rectangular, rather than circular, image fragments. Singh and Upneja [12] proposed a workaround to this problem: a square of size \(w\times w\) in pixels (w is even) becomes inscribed in the unit disc, and zeros are “laid” over the square-disc complement. This reduces integration over the disc to integration over the square. The inscription implies that widths of pixels become \(\sqrt{2}/w\) and their areas \(2/w^2\) (a detail important for integration). By iterating over pixel indexes: \(0\, \leqslant \, j, k \, \leqslant \, w - 1\), one generates the following Cartesian coordinates within the unit disk:

In detection, it is usually sufficient to replace integration involved in \(M_{p,q}\) by a suitable summation, thereby obtaining a zeroth order approximation. In subsequent sections we use the formula below, which represents such an approximation (hat symbol) and is adjusted to have a convenient indexing for our purposes:

— namely, we have introduced the substitutions \(p:=2p+o\), \(q:=2q+o\). They play two roles: they make it clear whether a moment is even or odd via the flag \(o\in \{0,1\}\); they allow to construct integral images, since exponents \(\frac{1}{2}(p\mp s)-s\) present in (2) are now suitably reduced.

2.4 Proposition from [2]

Suppose a digital image of size \(n_x\times n_y\) is traversed by a \(w\times w\) sliding window. For clarity we discuss only a single-scale scan. The situation is sketched in Fig. 1. Let (j, k) denote global coordinates of a pixel in the image. For each window under analysis, its offset (top-left corner) will be denoted by \((j_0, k_0)\). Thus, indexes of pixels that belong to the window are: \(j_0\, \leqslant \, j \, \leqslant \, j_0{+}w{-}1\), \(k_0\, \leqslant \, k \, \leqslant \, k_0{+}w{-}1\). Alse let \((j_c,k_c)\) represent the central index of the window:

Given a global index (j, k) of a pixel, the local Cartesian coordinates corresponding to it (mapped to the unit disk) can be expressed as:

Let \(\{ii_{t,u}\}\) denote a set of complex-valued integral imagesFootnote 2:

where pairs of indexes (t, u), generating the set, belong to: \(\{(t,u):0{\, \leqslant \,} t {\, \leqslant \,} \lfloor \rho /2\rfloor ,\) \(0{\, \leqslant \,} u {\, \leqslant \,} \min \left( \rho {-}t,\lfloor (\rho {+}\varrho )/2\rfloor \right) \}\).

For any integral image we also define the growth operator over a rectangle spanning from \((j_1,k_1)\) to \((j_2,k_2)\):

with two complex-valued substractions and one addition. The main result from [2] (see there for proof) is as follows.

Proposition 1

Suppose a set of integral images \(\bigl \{ii_{t,u}\bigr \}\), defined as in (14), has been prepared prior to the detection procedure. Then, for any square window in the image, each of its Zernike moments (11) can be calculated in constant time — O(1), regardless of the number of pixels in the window, as follows:

3 Numerical Errors and Their Reduction

Floating-point additions or subtractions are dangerous operations [6, 10] because when numbers of different magnitudes are involved, the right-most digits in the mantissa of the smaller-magnitude number can be lost when widely spaced exponents are aligned to perform an operation. When ZMs are computed according to Proposition 1, such situations can arise in two places.

The connection between the definition-style ZM formula (11) and the integral images-based formula (16) are expressions (13): \(\frac{\sqrt{2}}{w}(k-k_c)\), \(\frac{\sqrt{2}}{w}(j_c-j)\). They map global coordinates to local unit discs. When the mapping formulas are plugged into \(x_k\) and \(y_j\) in (11), the following subexpression arises under summations:

Now, to benefit from integral images one has to explictly expand the two binomial expressions, distinguishing two groups of terms:  —dependent on the global pixel index, and

—dependent on the global pixel index, and  —independent of it . By doing so, coordinates of the particular window can be isolated out and formula (16) is reached. Unfortunately, this also creates two numerically fragile places. The first one are integral images themselves, defined by (14). Global pixel indexes j, k present in power terms

—independent of it . By doing so, coordinates of the particular window can be isolated out and formula (16) is reached. Unfortunately, this also creates two numerically fragile places. The first one are integral images themselves, defined by (14). Global pixel indexes j, k present in power terms  vary within: \(0 \, \leqslant \, j \leqslant n_y - 1\) and \(0 \, \leqslant \, k \leqslant n_x - 1\). Hence, for an image of size e.g. \(640 \times 480\), the summands vary in magnitude roughly from \(10^{0(t+u)}\) to \(10^{3(t+u)}\). To fix an example, suppose \(t+u=10\) (achievable e.g. when \(\rho =\varrho =10\)) and assume a roughly constant values image function. Then, the integral image \(ii_{t,u}\) has to cumulate values ranging from \(10^0\) up to \(10^{30}\). Obviously, the rounding-off errors amplify as the \(ii_{t,u}\) sum progresses towards the bottom-right image corner. The second fragile place are expressions:

vary within: \(0 \, \leqslant \, j \leqslant n_y - 1\) and \(0 \, \leqslant \, k \leqslant n_x - 1\). Hence, for an image of size e.g. \(640 \times 480\), the summands vary in magnitude roughly from \(10^{0(t+u)}\) to \(10^{3(t+u)}\). To fix an example, suppose \(t+u=10\) (achievable e.g. when \(\rho =\varrho =10\)) and assume a roughly constant values image function. Then, the integral image \(ii_{t,u}\) has to cumulate values ranging from \(10^0\) up to \(10^{30}\). Obviously, the rounding-off errors amplify as the \(ii_{t,u}\) sum progresses towards the bottom-right image corner. The second fragile place are expressions:  and

and  , involving the central index, see (16). Their products can too become very large in magnitude as computations move towards the bottom-right image corner.

, involving the central index, see (16). Their products can too become very large in magnitude as computations move towards the bottom-right image corner.

In error reports we shall observe relative errors, namely:

where \(\phi \) denotes a feature (we skip indexes for readability) computed via integral images while \(\phi ^*\) its value computed by the definition. To give the reader an initial outlook, we remark that in our C++ implementation noticeable errors start to be observed already for \(\rho {=}\varrho {=}8\) settings, but they do not yet affect significantly the detection accuracy. For \(\rho {=}\varrho {=}10\), the frequency of relevant errors is already clear they do deteriorate accuracy. For example, for the sliding window of size \(48\times 48\), about \(29.7\%\) of all features have relative errors equal at least \(25\%\). The numerically safe approach we are about to present reduces this fraction to \(0.7\%\).

3.1 Piecewise Integral Images

The technique we propose for reduction of numerical errors is based on integral images that are defined piecewise. We partition every integral image into a number of adjacent pieces, say of size \(W\times W\) (border pieces may be smaller due to remainders), where W is chosen to exceed the maximum allowed width for the sliding window. Each piece obtains its own “private” coordinate system. Informally speaking, the (j, k) indexes that are present in formula (14) become reset to (0, 0) at top-left corners of successive pieces. Similarly, the values cumulated so far in each integral image \(ii_{t,u}\) become zeroed at those points. Algorithm 1 demonstrates this construction. During detection procedure, global coordinates (j, k) can still be used in main loops to traverse the image, but once the window position gets fixed, say at \((j_0,k_0)\), then we shall recalculate that position to new coordinates \((j'_0,k'_0)\) valid for the current piece in the following manner:

Note that due to the introduced partitioning, the sliding window may cross partitioning boundaries, and reside in either: one, two or four pieces of integral images. That last situation is illustrated in Fig. 2. Therefore, in the general case, the outcomes of growth operations \(\varDelta \) for the whole window will have to be combined from four parts denoted in the figure by \(P_1\), \(P_2\), \(P_3\), \(P_4\). An important role in that context will be played by the central index \((j_c, k_c)\). In the original approach its position was calculated only once, using global coordinates and formula (12). The new technique requires that we “see” the central index differently from the point of view of each part \(P_i\). We give the suitable formulas below, treating the first part \(P_1\) as reference.

Note that the above formulation can, in particular, yield negative coordinates. More precisely, depending on the window position and the \(P_i\) part, the coordinates of the central index can range within: \(-W+w/2< j_{c,P_i}, k_{c,P_i} < 2W-w/2\).

3.2 Numerically Safe Formula for ZMs

As the starting point for the derivation we use a variant of formula (11), taking into account the general window position with global pixel indexes ranging as follows: \(j_0\, \leqslant \, j \, \leqslant \, j_0 {+} w {-} 1\), \(k_0\, \leqslant \, k \, \leqslant \, k_0 {+} w {-} 1\). We substitute \(\frac{4p{+}2o{+}2}{\pi w^2}\) into \(\gamma \).

The second pass in the derivation of (25) splits the original summation into four smaller summations over window parts lying within different pieces of integral images, see Fig. 2. We write this down for the most general case when the window crosses partitioning boundaries along both axes. We remind that three simpler cases are also possible: \(\{P_1\}\) (no crossings), \(\{P_1,P_2\}\) (only vertical boundary crossed), \(\{P_1,P_3\}\) (only horizontal boundary crossed). The parts are directly implied by: the offset \((j_0,k_0)\) of detection window, its width w and pieces size W. For strictness, we could have written a functional dependence of form \(P(j_0,k_0,w,W)\), which we skip for readability. Also in this pass we switch from global coordinates (j, k) to piecewise coordinates \((j_P,k_P)\) valid for the given part P. The connection between those coordinates can be expressed as follows

In the third pass we apply two binomial expansions. In both of them we distinguish two groups of terms:  (independent of the current pixel index) and

(independent of the current pixel index) and  (dependent on it). Lastly, we change the order of summations and apply the constant-time \(\varDelta \) operation.

(dependent on it). Lastly, we change the order of summations and apply the constant-time \(\varDelta \) operation.

The key idea behind formula (25), regarding its numerical stability, lies in the fact that it uses decreased values of indexes \(j_P\), \(k_P\), \(j_{c,P}\), \(k_{c,P}\), comparing to the original approach, while maintaining the same differences \(k_P-k_{c,P}\) and \(j_{c,P} - j_P\). Recall the initial expressions \((k-k_c)\sqrt{2}/w\) and \((j_c-j)\sqrt{2}/w\) and note that one can introduce arbitrary shifts, e.g. \(k:=k\pm \alpha \) and \(k_c:=k_c\pm \alpha \) (analogically for the pair: j, \(j_c\)) as long as differences remain intact. Later, when indexes in such a pair become separated from each other due to binomial expansions, their numerical behaviour is safer because the magnitude orders are decreased.

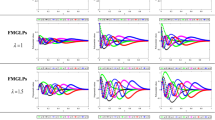

Features with high errors can be regarded as damaged, or even useless for machine learning. In Table 1 and Fig. 3 we report percentages of such features for a given image, comparying the original and the numerically safer approach. The figure shows how the percentage of features with relative errors greater than 0.25, increases as the sliding window moves towards the bottom-right image corner.

4 Experiments

In subsequent experiments we apply RealBoost (RB) learning algorithm producing ensembles of weak classifiers: shallow decision trees (RB+DT) or bins (RB+B), for details see [2, 5, 11]. Ensemble sizes are denoted by T. Two types of feature spaces are used: based solely on moduli of Zernike moments (ZMs-M), and based on extended Zernike product invariants (ZMs-E); see formulas (7), (8), respectively. The ‘-NER’ suffix stands for: numerical errors reduction. Feature counts are specified in parenthesis. Hence, e.g. ZMs-E-NER (14250) represents extended Zernike invariants involving over 14 thousand features, computed according to formula (25) based on piecewise integral images.

Experiment: “Synthetic A letters” For this experiment we have prepared a synthetic data set based on font material gathered by T.E. de Campos et al. [2, 3]. A subset representing ‘A’ letters was treated as positives (targets). In train images, only objects with limited rotations were allowed (\(\pm 45^\circ \) with respect to their upright positions). In contrast, in test images, rotations within the full range of \(360^\circ \) were allowed. Table 2 lists details of the experimental setup.

We report results in the following forms: examples of detection outcomes (Fig. 4), ROC curves over logarithmic FAR axis (Fig. 5a), test accuracy measures for selected best detectors (Table 4). Accuracy results were obtained by a batch detection procedure on 200 test images including 417 targets (within the total of \(3\,746\,383\) windows).

The results show that detectors based on features obtained by the numerically safe formula achieved higher classification quality. This can be well observed in ROCs, where solid curves related to the NER variant dominate dashed curves, and is particularly evident for higher orders \(\rho \) and \(\varrho \) (blue and red lines in Fig. 5a). These observations are also confirmed by Table 4

Experiment: “Faces” The learning material for this experiment consisted of \(3\,000\) images with faces (in upright position) looked up using the Google Images and produced a train set with \(7\,258\) face examples (positives). The testing phase was a two-stage process, consisting of preliminary and final tests. Preliminary tests were meant to generate ROC curves and make an initial comparison of detectors. For this purpose we used another set of faces (also in upright position) containing \(1\,000\) positives and \(2\,000\,000\) negatives. The final batch tests were meant to verify the rotational invariance. For this purpose we have prepared the third set, looking up faces in unnatural rotated positions (example search queries: “people lying down in circle”, “people on floor”, “face leaning to shoulder”, etc.). We stopped after finding 100 such images containing 263 faces in total (Table 3).

Zernike Invariants as Prescreeners. Preliminary tests revealed that classifiers trained on Zernike features were not accurate enough to work as standalone face detectors invariant to rotation. ROC curves (Fig. 5b) indicate that sensitivities at satisfactory levels of \({\approx } 90\%\) are coupled with false alarm rates \({\approx }\,2{\cdot } 10^{-4}\), which, though improved with respect to [2]Footnote 3, is still too high to accept. Therefore, we decided to apply obtained detectors as prescreeners invariant to rotation. Candidate windows selected via prescreeningFootnote 4 were then analyzed by angle-dependent classifiers. We prepared 16 such classifiers, each responsible for an angular section of \(22.5^\circ \), trained using \(10\,125\) HFs and RB+B algorithm. Figure 5b shows an example of ROC for an angle-dependent classifier (\(90^\circ \,{\pm }\,11.25^\circ \)). The prescreening eliminated about \(99.5\%\) of all windows for ZMs-M and \(97.5\%\) for ZMs-E.

Table 4 reports detailed accuracy results, while Fig. 6 shows detection examples. It is fair to remark that this experiment turned out to be much harder than the former one. Our best face detectors based on ZMs and invariant to rotation have the sensitivity of about \(90\%\) together with about \(7\%\) of false alarms per image, which indicates that further fine-tuning or larger training sets should be considered. As regards the comparison between standard and NER variants, as previously the ROC curves show a clear advantage of NER prescreeners (solid curved dominate their dashed counterparts). Accuracy results presented in Table 4 also show such a general tendency but are less evident. One should remember that these results pertain to combinations: ‘prescreener (ZMs) + postscreener (HFs)’. An improvement in the prescreener alone may, but does not have to, influence directly the quality of the whole system.

5 Conclusion

We have improved the numerical stability of floating-point computations for Zernike moments (ZMs) backed with piecewise integral images. We hope this proposition can pave way to more numerous detection applications involving ZMs. A possible future direction for this research could be to analyze the computational overhead introduced by the proposed approach. Such analysis can be carried out in terms of expected value of the number of needed growth operations.

Notes

- 1.

ZMs expressed by (1) arise as inner products of the approximated function and Zernike polynomials: \(M_{p,q}=\langle f, V_{p,q}\rangle \big / \Vert V_{p,q}\Vert ^2\).

- 2.

In [2] we have proved that integral images \(ii_{t,u}\) and \(ii_{u,t}\) are complex conjugates at all points, which allows for computational savings.

- 3.

In [2] the analogical false alarm rates were at the level \({\approx }\,5\cdot 10^{-3}\).

- 4.

We chose decision thresholds for prescreeners to correspond to \(99.5\%\) sensitivity.

References

Acasandrei, L., Barriga, A.: Embedded face detection application based on local binary patterns. In: 2014 IEEE International Conference on High Performance Computing and Communications (HPCC, CSS, ICESS), pp. 641–644 (2014)

Bera, A., Klęsk, P., Sychel, D.: Constant-time calculation of Zernike moments for detection with rotational invariance. IEEE Trans. Pattern Anal. Mach. Intell. 41(3), 537–551 (2019)

de Campos, T.E., et al.: Character recognition in natural images. In: Proceedings of the International Conference on Computer Vision Theory and Applications, Lisbon, Portugal, pp. 273–280 (2009)

Dalal, N., Triggs, B.: Histograms of oriented gradients for human detection. In: Conference on Computer Vision and Pattern Recognition (CVPR 2005), vol. 1, pp. 886–893. IEEE Computer Society (2005)

Friedman, J., Hastie, T., Tibshirani, R.: Additive logistic regression: a statistical view of boosting. Ann. Stat. 28(2), 337–407 (2000)

Goldberg, D.: What every computer scientist should know about floating-point arithmetic. ACM Comput. Surv. 23(1), 5–48 (1991)

Klȩsk, P.: Constant-time fourier moments for face detection—can accuracy of haar-like features be beaten? In: Rutkowski, L., Korytkowski, M., Scherer, R., Tadeusiewicz, R., Zadeh, L.A., Zurada, J.M. (eds.) ICAISC 2017. LNCS (LNAI), vol. 10245, pp. 530–543. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-59063-9_47

Malek, M.E., Azimifar, Z., Boostani, R.: Facial age estimation using Zernike moments and multi-layer perceptron. In: 22nd International Conference on Digital Signal Processing (DSP), pp. 1–5 (2017)

Mukundan, R., Ramakrishnan, K.: Moment Functions in Image Analysis - Theory and Applications. World Scientific, Singapore (1998)

Rajaraman, V.: IEEE standard for floating point numbers. Resonance 21(1), 11–30 (2016). https://doi.org/10.1007/s12045-016-0292-x

Rasolzadeh, B., et al.: Response binning: improved weak classifiers for boosting. In: IEEE Intelligent Vehicles Symposium, pp. 344–349 (2006)

Singh, C., Upneja, R.: Accurate computation of orthogonal Fourier-Mellin moments. J. Math. Imaging Vision 44(3), 411–431 (2012)

Sornam, M., Kavitha, M.S., Shalini, R.: Segmentation and classification of brain tumor using wavelet and Zernike based features on MRI. In: 2016 IEEE International Conference on Advances in Computer Applications (ICACA), pp. 166–169 (2016)

Viola, P., Jones, M.: Rapid object detection using a boosted cascade of simple features. In: Conference on Computer Vision and Pattern Recognition (CVPR 2001), pp. 511–518. IEEE (2001)

Xing, M., et al.: Traffic sign detection and recognition using color standardization and Zernike moments. In: 2016 Chinese Control and Decision Conference (CCDC), pp. 5195–5198 (2016)

Yin, Y., Meng, Z., Li, S.: Feature extraction and image recognition for the electrical symbols based on Zernike moment. In: 2017 IEEE 2nd Advanced Information Technology, Electronic and Automation Control Conference (IAEAC), pp. 1031–1035 (2017)

Zernike, F.: Beugungstheorie des Schneidenverfahrens und seiner verbesserten Form, der Phasenkontrastmethode. Physica 1(8), 668–704 (1934)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Klęsk, P., Bera, A., Sychel, D. (2020). Reduction of Numerical Errors in Zernike Invariants Computed via Complex-Valued Integral Images. In: Krzhizhanovskaya, V.V., et al. Computational Science – ICCS 2020. ICCS 2020. Lecture Notes in Computer Science(), vol 12139. Springer, Cham. https://doi.org/10.1007/978-3-030-50420-5_24

Download citation

DOI: https://doi.org/10.1007/978-3-030-50420-5_24

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-50419-9

Online ISBN: 978-3-030-50420-5

eBook Packages: Computer ScienceComputer Science (R0)