Abstract

Over the last decade, most studies have focused on assessing presence, degree of immersion and user involvement in virtual reality; few works, however, have addressed mixed reality environments. In virtual reality, the user is usually represented by a virtual body, mostly seen from an egocentric point of view. In this work, we present an approach in which users see themselves instead of a virtual character. Unlike augmented reality approaches, we extract only the user's body and his/her proxy interfaces. We conducted four experiments to measure and evaluate the feeling of presence quantitatively and qualitatively. Using questionnaires integrated into the virtual environment, we measured and compared the degree of presence under different modes and techniques of representing the user's body. We also analyzed which factors lead to a greater or lesser feeling of presence. For this study, we adapted a real bicycle as a haptic interface. Results show that there is not necessarily a better or worse type of avatar, but the avatar's appearance must match the scenario and the other elements of the virtual environment to substantially increase the participant's sense of presence.


1 Introduction

Studies on immersion and presence in Mixed Reality (MR) are not new, but the continuous evolution of the underlying technologies keeps increasing the simulation capacity of the experiences we develop. An important aspect of this advance is the ability to present content more realistically, both graphically and interactively, enabling more immersive experiences for the user [1].

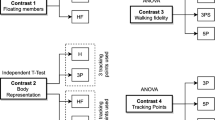

In a recent work [2], we introduced the concept of real egocentric representation: showing the user's real hands, forearms and legs, captured by the Head Mounted Display (HMD) camera, together with feedback from tangible interfaces. Here we define the concepts of real avatar and virtual avatar representation. In the real egocentric representation, the user's real body and interface proxies are shown. To do so, image processing techniques are required to extract the user's body from the background scene, and gestures and movements must be captured to become inputs to the virtual scenario. For the virtual avatar, other techniques must be used to interpret the user's actions and interfaces (e.g., traditional VR interfaces, depth cameras, tracking systems). In the previous work, we combined a real bicycle and an HMD into an MR display, using a procedural city as the background scenario, and showed that MR provides a greater sense of presence. However, that work presented only a qualitative analysis. In this work, the same bicycle system was used, but a new study was carried out to collect quantitative data systematically through four experiments: two simulating a VR mode with a virtual avatar, and two simulating an MR mode, in which the user's real body and the front part of the real bicycle are visualized inside the virtual environment. Each mode was experienced by all participants in two different scenarios, one realistic and one non-realistic. We thus sought to verify how much seeing one's own body in the interactive environment influences the sense of presence, and whether this sense is stronger or weaker than when seeing a 3D reconstruction. In this paper, the visualization of one's own real hands, forearms and part of the legs is referred to as a real avatar, while the 3D reconstruction of the hands, forearms and part of the legs is referred to as a virtual avatar.
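To make the body-extraction step concrete, the sketch below shows a minimal chroma-key mask. The setup described in [2] relies on a chroma key installation, but its key color and thresholds are not given here, so this sketch assumes a green backdrop, 8-bit RGB pixels and a simple green-dominance rule; the function names and the `dominance`/`min_green` parameters are hypothetical. A real-time version would more likely run on the GPU than in Python.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0..255


def is_backdrop(pixel: Pixel, dominance: float = 1.3, min_green: int = 80) -> bool:
    """Hypothetical green-dominance rule: the pixel belongs to the green backdrop
    if its green channel is bright enough and clearly exceeds red and blue."""
    r, g, b = pixel
    return g >= min_green and g > dominance * r and g > dominance * b


def body_mask(image: List[List[Pixel]]) -> List[List[bool]]:
    """True where the camera pixel is kept (user's body or bicycle),
    False where it is keyed out and replaced by the virtual scene."""
    return [[not is_backdrop(p) for p in row] for row in image]


def composite(camera: List[List[Pixel]], virtual: List[List[Pixel]]) -> List[List[Pixel]]:
    """Overlay the kept camera pixels on a rendered virtual frame of the same size."""
    mask = body_mask(camera)
    return [
        [cam if keep else virt for cam, virt, keep in zip(cam_row, virt_row, mask_row)]
        for cam_row, virt_row, mask_row in zip(camera, virtual, mask)
    ]


if __name__ == "__main__":
    skin, green, sky = (210, 160, 130), (40, 200, 50), (120, 170, 255)
    frame = [[skin, green], [green, skin]]
    scene = [[sky, sky], [sky, sky]]
    print(body_mask(frame))  # [[True, False], [False, True]]
    print(composite(frame, scene))
```

A fixed per-pixel threshold is the simplest choice; practical keyers usually work in a chroma space and soften mask edges to avoid green fringes around the hands.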
We also sought to identify how the type of scenario (realistic or non-realistic) influences presence depending on how the participant's avatar is represented, and to examine the effect of Plausibility by evaluating users' reactions to events during the experiments. The tests were conducted with twenty participants.

In Sect. 2, we discuss related work that influenced and led to the development of this experiment. Sect. 3 describes the methodology and data acquisition, Sect. 4 details the experiments and the results obtained, and Sect. 5 presents the conclusions drawn from the results.

2 Related Works

2.1 Presence in Mixed and Virtual Reality

Studies on VR have gained prominence in several areas of knowledge and share a fundamental characteristic: the creation of a sense of presence. According to Witmer and Singer [3], presence can be defined as a normal awareness phenomenon that requires directed attention and is based on the interaction between sensory stimulation, environmental factors that encourage involvement and enable immersion, and inherent tendencies to become involved. However, few studies have measured presence from within the virtual environment itself, which would mitigate the breaks in presence caused by interrupting the experience to measure it [4]. Other authors divide presence into objective and subjective categories [5], or into personal, social and local presence [6]. According to [7], being aware of one's surroundings is extremely important for understanding the factors of immersion and presence. Slater et al. [8, 9] argue that perceiving the environment is an action that involves the participant's whole body, so the more faithfully the system captures movements and gestures and represents them, the higher the immersion. Thus, when a VR system is described as immersive, we mean that it has the technological capability to meet the sensory needs of its participants, and immersion can be measured through the capacity of the hardware. To make the user experience immersive, with environments generated through sensory elements (usually visual, auditory and tactile stimuli) and continuous tracking of the surroundings, it is crucial to track both the real and the virtual world and keep them correctly aligned during the interaction.

In the case of MR, one of the main characteristics is the ability to move between the virtual and the real world [10]. MR environments that are constructed and enriched with real-world information sources, which can produce an extremely intense and immersive feeling, have been described as “pervasive virtuality” [11,12,13].

When a VR or MR environment presents itself in a sufficiently believable way, it can provide the sensation of “Plausibility” described in [8, 15], observed, for instance, when participants react and try to dodge rapidly approaching virtual objects. Another sensation connected with the sense of presence is the Place Illusion [16], the sensation of being in the place depicted as if it were a real experience. When a system effectively manages to replace or alter a person's sensory perception, awareness of reality is transformed into awareness of the virtually created scenario, meaning that the participant's brain can perceive the visual scene as satisfactorily realistic.

2.2 Virtual and Real Avatars

The ‘obvious’ distinction between the terms ‘real’ and ‘virtual’ has been shown to have several different aspects, depending on whether one is dealing with real or virtual objects, real or virtual images, and direct or non-direct viewing of these [10]. In this paper, a real avatar refers to the experience of viewing one's own real hand directly in front of oneself, which is quite distinct [10] from a virtual avatar, that is, viewing an image of a virtual 3D hand on the HMD, with its associated perceptual issues.

2.3 Interfaces and Interactivity in Virtual Environments

To interact with the contents of VR or MR, an interface is needed that meets the requirements of the experience and enables the feeling of presence. Traditionally, physical controllers have been used to manipulate objects in VR, while gesture controls have been used on a smaller scale because their applications are more specific. However, some authors [17] show that gesture controls provide the highest degree of immersion, allowing the user to interact with the interface more naturally (natural user interface, NUI). In MR, the user sees virtual elements and some real features, so the interaction with the content must be carefully designed: putting a standard controller in the user's hands will break the immersion and the user's presence. One possibility is to use a motion controller disguised as a “prop” whose usability and appearance match the simulated environment, allowing the user to interact physically with the controller in a more natural way. Bicycles combined with HMDs acting as haptic interfaces in VR have also been presented in several works, such as [18]. However, assessments of interactivity and of how users appropriate their actual body on the bicycle are still rare; most works focus on body perception restricted to the users' arms and hands [19, 20]. Haptic interfaces in virtual systems contribute to increasing user presence [21, 22], provided that the haptic feedback is consistent with the actions performed in the real world, in order to avoid sensory conflicts [23, 24] and a possible breakdown of immersion and presence.
This was the motivation for [2], which implemented a haptic system using a standard commercial bicycle so that the interaction in the experiment would be as close as possible to a real situation: the handlebars can be moved freely by the participant's hands, whether he/she sees a real or a virtual avatar. In addition, based on recent work [25], we developed integrated questionnaires as an alternative way of collecting data, avoiding possible breaks in presence and making the responses more consistent.

3 Methodology

The MR build and the chroma key installation were described in detail in [2]. In brief, the setup consists of a standard commercial bicycle mounted on a stand that holds it stable; an Arduino board connected to the sensors; a Hall sensor with magnets attached to the rear rim to measure the RPM of the rear wheel; and a 10 kΩ potentiometer connected to the handlebars through 3D-printed gears to capture the steering angle. The software was developed in Unity3D (2017.1.0f3) with the Leap Motion Orion (3.2.1) and SteamVR (1.2.3) plugins, using the Arduino sensor data as input to the application. The experiment runs on a Windows PC with an Intel i7 at 3.10 GHz, 8 GB of RAM and an Nvidia GTX 1050, with the HTC Vive as the Head Mounted Display.
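The two sensor readings can be turned into simulation inputs roughly as follows. This is a minimal sketch, not the code from [2]: it assumes one Hall pulse per magnet pass, the Arduino's 10-bit ADC range (0 to 1023) for the potentiometer, and hypothetical calibration constants (`MAGNETS_PER_REV`, `ADC_CENTER`, `ADC_PER_DEGREE`, `MAX_STEER_DEG`) plus an assumed wheel diameter; the gear ratio is folded into `ADC_PER_DEGREE`.

```python
import math
from typing import Sequence

# All constants below are hypothetical calibration values, not taken from [2].
MAGNETS_PER_REV = 2      # magnets on the rear rim (one Hall pulse per magnet pass)
ADC_CENTER = 512         # 10-bit ADC reading with the handlebar pointing straight ahead
ADC_PER_DEGREE = 4.0     # ADC counts per degree of handlebar rotation (absorbs the gear ratio)
MAX_STEER_DEG = 45.0     # clamp for the steering angle sent to the simulation


def wheel_rpm(pulse_times_s: Sequence[float]) -> float:
    """Estimate rear-wheel RPM from timestamps (in seconds) of consecutive Hall pulses."""
    if len(pulse_times_s) < 2:
        return 0.0  # need at least one interval between pulses
    elapsed = pulse_times_s[-1] - pulse_times_s[0]
    if elapsed <= 0:
        return 0.0
    revolutions = (len(pulse_times_s) - 1) / MAGNETS_PER_REV
    return revolutions / elapsed * 60.0


def steering_angle_deg(adc_value: int) -> float:
    """Map a 10-bit potentiometer reading (0..1023) to a clamped steering angle."""
    adc_value = max(0, min(1023, adc_value))
    angle = (adc_value - ADC_CENTER) / ADC_PER_DEGREE
    return max(-MAX_STEER_DEG, min(MAX_STEER_DEG, angle))


def speed_m_per_s(rpm: float, wheel_diameter_m: float = 0.66) -> float:
    """Linear speed for the virtual bicycle; 0.66 m (about 26 in) is an assumed wheel size."""
    return math.pi * wheel_diameter_m * rpm / 60.0


if __name__ == "__main__":
    rpm = wheel_rpm([0.0, 0.5, 1.0, 1.5, 2.0])  # 4 intervals over 2 s with 2 magnets
    print(rpm)                      # 60.0
    print(steering_angle_deg(612))  # 25.0
    print(speed_m_per_s(rpm))
```

Averaging over a window of pulses, rather than using only the last interval, smooths the speed signal at the cost of a little latency.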

In the VR mode only, the participant's hand movements were captured and transmitted to the simulation, so that the virtual avatar moved its hands and fingers faithfully to the user's actual movements. From the positions of the participant's hands, we positioned the forearms using inverse kinematics to produce realistic arm movement. Synchronizing the positions of the real and virtual bicycles allowed users to move their hands over the surface of the actual bicycle while seeing their avatar's hands do the same on the virtual bicycle. In the MR mode, this positioning was unnecessary, since the participant sees his/her own body and the actual bicycle within the simulation: the image captured by the Vive's single front-facing camera was placed inside the simulation, aligned with the user's point of view.
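Positioning the forearm from a tracked hand is a classic two-bone inverse kinematics problem. The sketch below uses the analytic law-of-cosines solution in a 2D plane; it is one common way to solve it and not necessarily the algorithm used in the experiment. The function names and segment lengths are hypothetical, and a 3D version would also need a pole (elbow-hint) direction to fix the plane of the arm.

```python
import math
from typing import Tuple


def two_bone_ik(target_x: float, target_y: float,
                upper_len: float, fore_len: float) -> Tuple[float, float, Tuple[float, float]]:
    """Analytic 2D two-bone IK with the shoulder at the origin.

    Returns (shoulder_angle, elbow_bend, elbow_position), angles in radians.
    elbow_bend is 0 for a straight arm. Unreachable targets are clamped,
    so the arm stretches straight toward a target that is too far away.
    """
    dist = math.hypot(target_x, target_y)
    # Keep the distance in the reachable range so acos() stays defined.
    dist = max(abs(upper_len - fore_len), 1e-9, min(upper_len + fore_len, dist))
    # Law of cosines: interior angle at the elbow.
    cos_elbow = (upper_len**2 + fore_len**2 - dist**2) / (2 * upper_len * fore_len)
    elbow_bend = math.pi - math.acos(max(-1.0, min(1.0, cos_elbow)))
    # Law of cosines: angle between the shoulder-target line and the upper arm.
    cos_offset = (upper_len**2 + dist**2 - fore_len**2) / (2 * upper_len * dist)
    offset = math.acos(max(-1.0, min(1.0, cos_offset)))
    shoulder_angle = math.atan2(target_y, target_x) + offset
    elbow = (upper_len * math.cos(shoulder_angle), upper_len * math.sin(shoulder_angle))
    return shoulder_angle, elbow_bend, elbow


def hand_position(shoulder_angle: float, elbow_bend: float,
                  upper_len: float, fore_len: float) -> Tuple[float, float]:
    """Forward kinematics, used to check that the solved arm reaches the target."""
    ex = upper_len * math.cos(shoulder_angle)
    ey = upper_len * math.sin(shoulder_angle)
    forearm_dir = shoulder_angle - elbow_bend  # forearm turns clockwise by the elbow bend
    return ex + fore_len * math.cos(forearm_dir), ey + fore_len * math.sin(forearm_dir)


if __name__ == "__main__":
    shoulder, bend, elbow = two_bone_ik(1.0, 0.9, 1.0, 0.8)
    print(elbow, hand_position(shoulder, bend, 1.0, 0.8))
```

The analytic solution is exact and cheap for a single shoulder-elbow-hand chain, which is why it is often preferred over iterative solvers for avatar arms.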

3.1 Scenarios

Two cities were chosen as scenarios: a non-realistic, cartoon-style city with vivid colors and a low polygon count, and a realistic city with a high polygon count and high-quality models and textures. Both cities followed the same street layout; only the appearance and distribution of the buildings and houses differed. The participant's starting point was the same in both cities, close to several corners and intersections of the virtual map, giving him/her different possibilities for exploration.

Fig. 1 shows the four resulting modes: A: Cartoon Scenario in MR, with a real avatar (the participant sees his/her own hands and the real bicycle); B: Cartoon Scenario in VR, with a virtual avatar (the participant sees an avatar's hands and a virtual bicycle); C: Realistic Scenario in MR, with the real avatar; and D: Realistic Scenario in VR, with the virtual avatar.

3.2 Participants, Stimuli, Tasks, and Measures

The experiment was performed with 20 participants: 15 male and 5 female. Ages ranged from 19 to 32 years; 50% of the participants had no experience with VR, and 70% were not familiar with cycling.

After signing the consent and image/video release forms and filling in a profile questionnaire on their experience with HMDs and their ability to ride a bicycle, participants were asked to get on the real bicycle. After the HMD was set up and they had become familiar with the handlebars, they were placed in a virtual room where a self-explanatory board presented the objectives of the experiment and explained how to answer the integrated questionnaires. Each participant then started the experiment in one of the four possible modes. The time in each mode was fixed at 2 min, during which the participant could pedal freely within the virtual scene. The order of the modes was counterbalanced with a Latin square to mitigate learning effects between environments [26]. Since the experiment comprised four distinct modes, a Latin square of order 4 was used, and the number of participants (20) is a multiple of 4.
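The text states only that a Latin square was used, not which construction. As one common option, the sketch below builds a balanced (Williams) Latin square for an even number of conditions, in which every mode appears once in each position and each mode immediately follows every other mode exactly once, and then cycles participants through its rows (five per row for 20 participants). The mode labels follow Fig. 1; the function names are hypothetical.

```python
from typing import List

# Mode labels as in Fig. 1.
MODES = ["A: Cartoon MR", "B: Cartoon VR", "C: Realistic MR", "D: Realistic VR"]


def williams_square(n: int) -> List[List[int]]:
    """Balanced (Williams) Latin square for an even number n of conditions.

    Each condition appears once per row and once per column, and each
    ordered pair of conditions appears in adjacent positions exactly once.
    """
    if n % 2:
        raise ValueError("this construction requires an even number of conditions")
    first = [0]
    for c in range(1, n):
        # Leading 0 followed by 1, n-1, 2, n-2, ...
        first.append((c + 1) // 2 if c % 2 else n - c // 2)
    return [[(x + r) % n for x in first] for r in range(n)]


def assign_orders(num_participants: int, modes: List[str]) -> List[List[str]]:
    """Cycle participants through the rows of the square."""
    square = williams_square(len(modes))
    return [[modes[i] for i in square[p % len(square)]] for p in range(num_participants)]


if __name__ == "__main__":
    for p, order in enumerate(assign_orders(4, MODES), start=1):
        print(p, order)
```

A plain cyclic Latin square would also balance positions, but the Williams design additionally balances first-order carry-over effects between consecutive modes.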

To encourage movement and exploration, we scattered collectible objects along the route. Each scenario had 20 precious gems that could be collected along the course and a hidden crystal whose position changed in each mode. Also, to improve the sense of realism, characters walked through the city and looked at the participant as he/she approached.

To measure the degree of presence, integrated questionnaires were developed and embedded in the virtual environment. Using gaze-based ray casting, the participant answered by looking at the desired option, which was confirmed after 3 s. In total, four questions were asked at the end of each mode, with responses collected on a seven-point Likert scale.
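The gaze-and-dwell answering mechanism can be sketched as a small per-frame state machine. This is an illustrative assumption, not the Unity implementation used in the study: `hit_option` stands for whichever Likert option the gaze ray currently hits (or `None`), `dt` is the frame time in seconds, and only the 3 s dwell time comes from the text. Looking at a different option, or away, restarts the timer.

```python
from typing import Optional

DWELL_SECONDS = 3.0  # confirmation time reported in the text


class DwellSelector:
    """Confirms a Likert option once the gaze ray has rested on it for `dwell` seconds."""

    def __init__(self, dwell: float = DWELL_SECONDS) -> None:
        self.dwell = dwell
        self.current: Optional[int] = None  # option currently under the gaze ray
        self.elapsed = 0.0

    def update(self, hit_option: Optional[int], dt: float) -> Optional[int]:
        """Call once per frame; returns the confirmed option (1..7) or None."""
        if hit_option != self.current:
            # Gaze moved to another option (or away): restart the timer.
            self.current = hit_option
            self.elapsed = 0.0
        if self.current is None:
            return None
        self.elapsed += dt
        if self.elapsed >= self.dwell:
            self.elapsed = 0.0
            return self.current
        return None


if __name__ == "__main__":
    selector = DwellSelector()
    results = [selector.update(5, 0.5) for _ in range(6)]
    print(results)  # [None, None, None, None, None, 5]
```

Dwell selection avoids any hand-held controller, which fits the goal of not breaking presence while the participant holds the handlebars.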

During the session, the evaluator had a real-time aerial view of the city map showing the participant's path. Through an interface on the computer, this view allowed the evaluator to trigger events in the virtual scene designed to draw attention and provoke reactions from the participant, such as an explosion at pre-established locations or a war tank firing cannonballs toward the participant. These events were implemented to analyze the “plausibility” effects of the virtual system [15]. The explosion was chosen to elicit reactions to a sudden, unexpected event, while the tank's shots served to observe the participant's reflexes when dodging a virtual object. Each event was triggered only once per mode, and the first event was triggered no earlier than one minute after the start of the current mode. The order of the two events alternated between modes. All sessions were recorded on video.

At the end of the four modes, a final “thank-you” screen was displayed. Finally, a post-experiment questionnaire asked two questions: which scenario the participant remembered in more detail, and whether he/she had been distracted for any reason during the experiment. A summary of the data collected is presented in Table 1.

4 Virtual or Real Avatars: Which Is Better?

The 7-point Likert responses were treated as ordinal data. These non-parametric data were therefore analyzed with Friedman and Mann-Whitney tests [27], using the statistical software R.
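To show what the Friedman test computes on such data, the sketch below ranks each participant's scores across the modes (tied scores share their average rank), sums the ranks per mode, and applies the tie-corrected statistic as computed by R's `friedman.test`, with a chi-square approximation on k - 1 degrees of freedom for the p-value. It is illustrative only: the per-participant data of this study are not reproduced here, and the function names are hypothetical.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks within one participant's row; ties share the average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for idx in order[i:j + 1]:
            ranks[idx] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability of the chi-square distribution for integer df."""
    if x <= 0:
        return 1.0
    if df % 2 == 0:
        term, total = 1.0, 1.0
        for i in range(1, df // 2):
            term *= (x / 2) / i
            total += term
        return math.exp(-x / 2) * total
    total = math.erfc(math.sqrt(x / 2))
    if df > 1:
        term = math.sqrt(2 * x / math.pi) * math.exp(-x / 2)
        total += term
        for i in range(1, (df - 1) // 2):
            term *= x / (2 * i + 1)
            total += term
    return total


def friedman(scores: Sequence[Sequence[float]]) -> Tuple[float, int, float]:
    """scores[i][j] is participant i's rating in mode j; returns (chi2, df, p)."""
    n, k = len(scores), len(scores[0])
    col_sums = [0.0] * k
    tie_term = 0
    for row in scores:
        for j, r in enumerate(average_ranks(row)):
            col_sums[j] += r
        tie_term += sum(t**3 - t for t in Counter(row).values())
    expected = n * (k + 1) / 2
    numerator = 12 * sum((s - expected) ** 2 for s in col_sums)
    denominator = n * k * (k + 1) - tie_term / (k - 1)
    stat = numerator / denominator
    return stat, k - 1, chi2_sf(stat, k - 1)


if __name__ == "__main__":
    stat, df, p = friedman([[1, 2, 3], [1, 2, 3], [1, 2, 3]])
    print((stat, df, round(p, 4)))  # (6.0, 2, 0.0498)
```

The Friedman test is an omnibus test over all modes; statements about a specific pair of modes normally rely on a post-hoc pairwise comparison.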

4.1 Answers Evaluation for the Sense of Presence

Figure 2 shows the responses to the first question asked at the end of each of the four modes: “How much do you feel present at the moment?”. The data indicate that more participants felt present in the Realistic MR Scenario than in the other modes. A Friedman test found a statistically significant difference (Friedman χ2 = 3.0008, p = 0.01439) only between the Realistic MR and Cartoon MR Scenarios, marked with an * in Fig. 2.

Mann-Whitney tests showed no significant difference between participants with and without experience with VR devices (p > 0.05 for all groups), nor between participants with and without cycling experience (p > 0.05 for all groups).
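The Mann-Whitney comparison between two independent groups (e.g., participants with and without VR experience) rests on the U statistic computed from pooled ranks. The minimal sketch below returns U for each group, with tied scores sharing their average rank; the p-value is left to a statistics package (R's `wilcox.test` reports U for the first group as `W`). The function names are hypothetical, and the example scores are made up.

```python
from typing import List, Sequence, Tuple


def midranks(values: Sequence[float]) -> List[float]:
    """1-based ranks over the pooled sample; tied values share the average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for idx in order[i:j + 1]:
            ranks[idx] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def mann_whitney_u(group_a: Sequence[float], group_b: Sequence[float]) -> Tuple[float, float]:
    """Return (U_a, U_b); U_a counts pairs where a > b, with ties counting 0.5."""
    ranks = midranks(list(group_a) + list(group_b))
    n_a, n_b = len(group_a), len(group_b)
    rank_sum_a = sum(ranks[:n_a])
    u_a = rank_sum_a - n_a * (n_a + 1) / 2
    return u_a, n_a * n_b - u_a


if __name__ == "__main__":
    # Made-up 7-point Likert scores for two groups of participants.
    experienced = [6, 5, 7, 4, 5]
    novices = [5, 5, 6, 3]
    print(mann_whitney_u(experienced, novices))
```

Because U only depends on ranks, it respects the ordinal nature of Likert responses, which is why it is preferred over a t-test here.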

For the question about the environment, high scores accounted for 50% or more of the responses, but no significant difference was found between the modes (Friedman χ2 = 2.115, p > 0.05). This suggests that the scenario is important for the sense of presence, given the high scores, but that this importance is independent of the scenario's visual style.

The analysis of the question about the avatar body shows no significant difference between any of the groups (Friedman χ2 = 1.2702, p > 0.05). However, compared with the previous question, there are fewer high scores (7 and 6) and more low scores (1 and 2). These results suggest that the body also contributes to presence.

The locomotion scores had the same mean (5.15) in all four modes, and again no significant difference was found between them (Friedman χ2 = 1.7201, p > 0.05). This result was expected, since locomotion was constant across the modes: the way the bicycle interacted did not change from one mode to another.

4.2 Complementary Analysis

Combining the MR interface with the Realistic Scenario produced a significantly higher sense of presence than the same interface in the Cartoon Scenario. Seeing one's own body within an interactive environment influences the sense of presence. However, this influence can be either positive or negative, as the contrasting results in the Realistic MR and Cartoon MR Scenarios show. A plausible explanation for this phenomenon is the Uncanny Valley effect [28, 29]: when a virtual or robotic character tries to replicate human appearance and behavior but resembles actual human beings imperfectly, it provokes strangely familiar feelings of eeriness and revulsion in observers.

Our results suggest that when participants see their own hands inside the virtual environment and the scenario does not match the realism of those hands (for example, the Cartoon environment), this can cause revulsion and break immersion. This would also explain why the effect does not occur in the Realistic MR Scenario, where the real avatar lies beyond the Uncanny Valley. In the Realistic VR and Cartoon VR Scenarios, which use the VR interface, the virtual hand lies before the Uncanny Valley because it does not attempt to replicate every detail of a real hand, and it was therefore well accepted by the participants, consistent with similar findings in [30, 31].

4.3 Analysis of Participant Reactions

Participant reactions to the events were verified by reviewing the video recordings of the experiment. Reactions were counted per participant and grouped by type (unexpected event or reflex event), as shown in Figs. 3 and 4, respectively.

The graph of reactions to the unexpected event (explosion) shows that, in all modes, more users reacted than did not. However, more users reacted in the Realistic MR Scenario (16) than in the other modes (13, 12, 13), so this mode also shows the largest gap between users who reacted and those who did not.

In Fig. 4, the difference between users who reacted and those who did not varies less across modes. This may be because users had already seen the tank and could subconsciously anticipate that something would happen, even without knowing exactly what. Even so, the Realistic MR Scenario again produced more reactions than the other scenarios. Because users saw their own hands, parts of their arms and legs, and even the actual bicycle, the resulting feeling of presence and plausibility may have made their dodging movements and other reactions more intense. Aiming the shots at the user likely made the event more credible, in line with the anticipation that the tank generates once the user has seen it, which plays a critical role in maintaining the Plausibility Illusion, as indicated by Sanchez-Vives and Slater [14, 15].

These differences in reactions corroborate the comparison of presence ratings in Fig. 2. They suggest that participants in the Realistic MR Scenario, both those who reacted and those who did not, were more immersed in the virtual environment than the non-reacting group in the Cartoon MR Scenario. This indicates that seeing one's own body within a realistic scenario produces a greater sense of presence than within a non-realistic one. This is further supported by the free comments participants gave in the post-test questionnaire (“Which of the four modes do you remember in more detail and why?”), in which the most frequently cited scenario was the Realistic MR Scenario (n = 9). This may reflect either the high degree of presence experienced by the participants or a possible novelty effect. There is evidence that remembering one scene in more detail than another indicates that the participant felt more present in that environment [7, 8].

5 Conclusions

In this work, we sought to determine which egocentric visualization mode is more immersive: a real image of the user or an avatar representation. This work validates preliminary results from a previous study that proposed strategies for visualizing real egocentric images. Once again, the users' comparisons of the experiments indicated that seeing their real body in the MR mode gave them better handling and balance on the haptic interface being tested. Some users appreciated this improvement because they could see their real body.

Not having to remove participants from the virtual environment for each questionnaire reduced the experiment time and avoided possible breaks in presence. The learning process was fast, and none of the participants had any difficulty, even those with little or no experience in cycling or with virtual reality devices.

The present study systematically showed that there is not necessarily a better or worse type of body representation; rather, the reproduced content (scenarios, body representations or objects) must be created and presented consistently with the environment as a whole. That is, an MR interface, a change of scenery or a different type of body is not enough on its own; the body must be appropriately combined with its surrounding elements. New devices are emerging that can function independently of a computer and scan the surrounding environment, allowing the user and the presented content to interact freely with the environment with no clear line between where the real world ends and the virtual begins. In the future, the experiments could be performed with this kind of interface.

References

1. Slater, M., Usoh, M.: Representations systems, perceptual position, and presence in immersive virtual environments. Presence: Teleoper. Virtual Environ. 2(3), 221–233 (1993)

2. Oliveira, W., Gaisbauer, W., Tizuka, M., Clua, E., Hlavacs, H.: Virtual and real body experience comparison using mixed reality cycling environment. In: Clua, E., Roque, L., Lugmayr, A., Tuomi, P. (eds.) ICEC 2018. LNCS, vol. 11112, pp. 52–63. Springer, Cham (2018). https://doi.org/10.1007/978-3-319-99426-0_5

3. Witmer, B.G., Singer, M.J.: Measuring presence in virtual environments: a presence questionnaire. Presence 7(3), 225–240 (1998)

4. Frommel, J., et al.: Integrated questionnaires: maintaining presence in game environments for self-reported data acquisition. In: Proceedings of the 2015 Annual Symposium on Computer-Human Interaction in Play (CHI PLAY 2015), pp. 359–368. ACM Press, London (2015)

5. Schloerb, D.W.: A quantitative measure of telepresence. Presence: Teleoper. Virtual Environ. 4(1), 64–80 (1995)

6. Heeter, C.: Being there: the subjective experience of presence. Presence: Teleoper. Virtual Environ. 1(2), 262–271 (1992)

7. Slater, M.: Measuring presence: a response to the Witmer and Singer presence questionnaire. Presence 8(5), 560–565 (1999)

8. Slater, M., Lotto, B., Arnold, M.M., Sánchez-Vives, M.V.: How we experience immersive virtual environments: the concept of presence and its measurement. Anuario Psicol. 40, 193–210 (2009)

9. Kilteni, K., Groten, R., Slater, M.: The sense of embodiment in virtual reality. Presence: Teleoper. Virtual Environ. 21(4), 373–387 (2012)

10. Milgram, P., Kishino, F.: A taxonomy of mixed reality visual displays. IEICE Trans. Inform. Syst. 77(12), 1321–1329 (1994)

11. Valente, L., Feijó, B., Ribeiro, A., Clua, E.: The concept of pervasive virtuality and its application in digital entertainment systems. In: Wallner, G., et al. (eds.) ICEC 2016. LNCS, vol. 9926, pp. 187–198. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46100-7_16

12. Artanim: Real Virtuality. http://artaniminteractive.com/real-virtuality. Accessed 21 Apr 2018

13. Silva, A.R., Valente, L., Clua, E., Feijó, B.: An indoor navigation system for live-action virtual reality games. In: 2015 14th Brazilian Symposium on Computer Games and Digital Entertainment (SBGames), pp. 1–10. IEEE (2015)

14. Sanchez-Vives, M.V., Slater, M.: From presence to consciousness through virtual reality. Nat. Rev. Neurosci. 6(4), 332 (2005)

15. Slater, M.: Place illusion and plausibility can lead to realistic behaviour in immersive virtual environments. Philos. Trans. Roy. Soc. B: Biol. Sci. 364(1535), 3549–3557 (2009)

16. Minsky, M.: Telepresence. Omni 2(9), 45–51 (1980)

17. Emma-Ogbangwo, C., Cope, N., Behringer, R., Fabri, M.: Enhancing user immersion and virtual presence in interactive multiuser virtual environments through the development and integration of a gesture-centric natural user interface developed from existing virtual reality technologies. In: Stephanidis, C. (ed.) HCI 2014. CCIS, vol. 434, pp. 410–414. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-07857-1_72

18. Ranky, R., Sivak, M., Lewis, J., Gade, V., Deutsch, J.E., Mavroidis, C.: VRACK - virtual reality augmented cycling kit: design and validation. In: Virtual Reality. IEEE, Waltham (2010)

19. Argelaguet, F., Hoyet, L., Trico, M., Lécuyer, A.: The role of interaction in virtual embodiment: effects of the virtual hand representation. In: Virtual Reality (VR), pp. 3–10. IEEE (2016)

20. Schwind, V., Knierim, P., Tasci, C., Franczak, P., Haas, N., Henze, N.: “These are not my hands!”: Effect of gender on the perception of avatar hands in virtual reality. In: CHI 2017 Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems, pp. 1577–1582. ACM, Denver (2017)

21. Lee, S., Kim, G.J.: Effects of haptic feedback, stereoscopy, and image resolution on performance and presence in remote navigation. Int. J. Hum.-Comput. Stud. 66(10), 701–717 (2008)

22. Sallnäs, E.-L., Rassmus-Gröhn, K., Sjöström, C.: Supporting presence in collaborative environments by haptic force feedback. ACM Trans. Comput.-Hum. Interact. (TOCHI) 7(4), 461–476 (2000)

23. Mittelstaedt, J., Wacker, J., Stelling, D.: Effects of display type and motion control on cybersickness in a virtual bike simulator. Displays 51, 43–50 (2018)

24. Carvalho, M.R.D., Costa, R.T.D., Nardi, A.E.: Simulator sickness questionnaire: tradução e adaptação transcultural. J Bras Psiquiatr 60(4), 247–252 (2011)

25. Schwind, V., Knierim, P., Haas, N., Henze, N.: Using presence questionnaires in virtual reality. In: Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, CHI 2019, pp. 360:1–360:12. ACM, New York (2019)

26. MacKenzie, I.S.: Within-subjects vs. between-subjects designs: which to use? Hum.-Comput. Interact.: Empir. Res. Perspect. 7, 2005 (2002)

27. Joshi, A., Kale, S., Chandel, S., Pal, D.: Likert scale: explored and explained. Br. J. Appl. Sci. Technol. 7(4), 396 (2015)

28. Mori, M., MacDorman, K.F., Kageki, N.: The uncanny valley [from the field]. IEEE Robot. Autom. Mag. 19(2), 98–100 (2012)

29. Schwind, V., Wolf, K., Henze, N.: Avoiding the uncanny valley in virtual character design. Interactions 25(5), 45–49 (2018)

30. Schwind, V., Knierim, P., Tasci, C., Franczak, P., Haas, N., Henze, N.: “These are not my hands!”: Effect of gender on the perception of avatar hands in virtual reality. In: Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems, pp. 1577–1582. ACM (2017)

31. Tauziet, C.: Designing for hands in VR (2016). https://medium.com/facebook-design/designing-for-hands-in-vr-61e6815add99. Accessed 20 Apr 2019

Acknowledgments

We thank the Medialab of the Institute of Computing of the Federal Fluminense University for providing the bicycle and the infrastructure required to develop the haptic system, and the participants who took part in our tests.


Copyright information

© 2019 IFIP International Federation for Information Processing

About this paper

Cite this paper

Oliveira, W., Tizuka, M., Clua, E., Trevisan, D., Salgado, L. (2019). Virtual and Real Body Representation in Mixed Reality: An Analysis of Self-presence and Immersive Environments. In: van der Spek, E., Göbel, S., Do, EL., Clua, E., Baalsrud Hauge, J. (eds) Entertainment Computing and Serious Games. ICEC-JCSG 2019. Lecture Notes in Computer Science, vol 11863. Springer, Cham. https://doi.org/10.1007/978-3-030-34644-7_4


DOI: https://doi.org/10.1007/978-3-030-34644-7_4


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-34643-0

Online ISBN: 978-3-030-34644-7

eBook Packages: Computer Science, Computer Science (R0)