Abstract

Most contemporary robots have depth sensors, and research on semantic segmentation with RGBD images has shown that depth images boost the accuracy of segmentation. Since it is time-consuming to annotate images with semantic labels per pixel, it would be ideal if we could avoid this laborious work by utilizing an existing dataset or a synthetic dataset which we can generate on our own. Robot motions are often tested in a synthetic environment, where multichannel (e.g., RGB + depth + instance boundary) images plus their pixel-level semantic labels are available. However, models trained simply on synthetic images tend to demonstrate poor performance on real images. In order to address this, we propose two approaches that can efficiently exploit multichannel inputs combined with an unsupervised domain adaptation (UDA) algorithm. One is a fusion-based approach that uses depth images as inputs. The other is a multitask learning approach that uses depth images as outputs. We demonstrated that the segmentation results were improved by using a multitask learning approach with a post-process and created a benchmark for this task.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Semantic segmentation is a fundamental task for robots to understand their surroundings in detail. Most robots have depth sensors. In fact, research on semantic segmentation with RGBD images has been conducted and has demonstrated that depth images boost the accuracy of segmentation [13]. However, semantic pixel-level labels are necessary to train semantic segmentation models in general and it is time-consuming to annotate the image per pixel. For instance, the pixel labeling of one Cityscapes image takes 1.5 h on average [6]. It would be ideal to avoid this laborious work by utilizing an existing dataset or a synthetic dataset which we could generate on our own.

Recently, the number of RGBD datasets taken in the real world has increased. In addition to the widely used 2.5D dataset such as NYUDv2 [31] and SUNRGBD [32], large-scale real 3D datasets such as Stanford2d3d [1, 2], ScanNet [7] have been generated due to the development of the 3D scanner and scalable RGB-D capture system. However, the number of real datasets is small compared to the RGB dataset.

Conversely, computer graphics technology has been developed and large-scale synthetic datasets have also been generated. For example, SUNCG [38] contains 400 K physically-based rendered images from 45 K realistic 3D indoor scenes. SceneNet [24] contains 5 million images rendered of 16,895 indoor scenes. It is also possible to purchase 3D CAD models online and create customized synthetic datasets using UnrealCV [28]. The appearance of synthetic images is a bit different from that of real ones but the synthetic datasets still look real. In fact, it is ideal if a model trained on these dataset performs well on real datasets because robot motions are often tested in a synthetic environment before being tested in a real environment.

However, such a model is known not to generalize well because of the pixel-level distribution shift [15]. In order to solve this problem, a domain adaptation technique is necessary. Although several research studies on unsupervised domain adaptation for semantic segmentation have been conducted, they use only RGB input and do not consider the utilization of a multichannel (here we mean RGB + depth images, which are now easy to obtain in both synthetic and real environments.

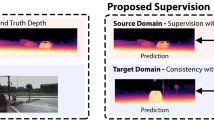

We propose two approaches that can efficiently use multichannel inputs with an UDA algorithm. One is a fusion-based approach that uses different modal images as inputs and the other is a multitask learning approach that uses only RGB images as inputs but other modal images as outputs. Fusing different modalities (RGB, depth or boundary) efficiently is known to boost the segmentation accuracy compared to a simple concatenation of inputs known as early fusion in past research. Except for early fusion, many fusion methods [5, 12, 13, 26] exist and their efficacy is task-specific, which makes us rethink their ideas. Multitask learning is also a promising approach. Multitask learning that solves related tasks such as semantic segmentation and depth estimation tasks simultaneously is known to boost each task’s performance [17, 19]. In multitask learning it is easy to add another single task, such as a boundary detection task, which can be thought to render feature maps more aware of boundaries. Boundary detection output can be utilized collaterally to refine the messy domain-adapted segmentation output.

In summary, the specific contribution of this paper includes:

-

We combine a multichannel semantic segmentation task with an unsupervised domain adaptation (UDA, see Fig. 1) task and propose two approaches (fusion-based and multitask learning)

-

We show that the multitask learning approach outperforms the simple early fusion approach according to all evaluation metrics.

-

We propose adding a boundary detection task to the multitask learning approach and use the detection result to refine the segmentation output, which improves both the qualitative and quantitative results.

2 Related Work

Here, we describe two related research themes, domain adaptation for semantic segmentation and semantic segmentation with multichannel image.

2.1 Domain Adaptation for Semantic Segmentation

When we train a classifier in one (source) domain and apply it to classify samples in a new (target) domain, the classifier is known not to generalize well in the new domain due to the domain’s difference. Many methods tackle the problem by aligning distributions of features between the source and target domain [3, 9, 23, 34]. These methods are proposed to deal with classification problem. Recently, methods for semantic segmentation have been proposed too. Hoffman et al. [15] first tackled this problem. They adopted an adversarial training framework which has a feature extractor and a discriminator (see Fig. 2a). A discriminator tries to detect whether the extracted feature correctly comes from source samples or target samples, while a feature extractor tries to generate features that deceive a discriminator in an adversarial manner. The other researches on this theme also leverage adversarial training. Zhang et al. [37] adopted curriculum learning that starts the easier task (global and super pixel label distribution of source samples matching those of target samples), then tries to solve difficult tasks (semantic segmentation). Chen et al. [4] tried to tackle the cross-city adaptation problem via adversarial training and extract static-object priors that can be obtained from the Google Street View time-machine feature.

Example of network architectures for domain adaptation [(a) domain adversarial neural network (DANN) [15] and (b) maximum classifier discrepancy (MCD) [30]]. When inputs belong to source samples which have labels, we train a feature generator and a classifier using the labels. When inputs belong to target samples which have no labels, we train a feature generator and (one discriminator in the case DANN is used or two classifiers in the case MCD is used) in an adversarial manner.

We utilize Saito et al. [30]’s method (MCD), which is shown to be effective in segmentation task. They proposed a method that uses two classifiers’ difference of output (called discrepancy) to align features between the source and target domain. They trained one feature extractor network and two different classifier networks for the same task (see Fig. 2b). Two classifiers are trained to increase the discrepancy for target samples whereas feature extractor is trained to decrease it. Details are in Sect. 3.1.

2.2 Semantic Segmentation with Multichannel (RGBD) Image

Previously, RGBD segmentation was conducted based on handcrafted features specifically designed for capturing depth as well as color features [10]. Long et al. [22] proposed a fully convolutional neural network (FCN) for semantic segmentation. FCN not only replaced the fully connected layer in classification models such as AlexNet [18] with a convolutional layer but also proposed using two methods, deconvolution and shortcut, which are now widely used in many semantic segmentation models. Long et al. also reported the segmentation scores of the NYUDv2 dataset [31], where RGB + Depth were combined in the input (we call this early fusion) and RGB + HHA in output (we call this score fusion). HHA is the three dimensional encoding of depth (Horizontal disparity, Height above ground, and the Angle of the local surface normal with the inferred gravity direction) proposed by Gupta et al. [11]. FuseNet [13] prepared an RGB and depth encoder separately then fused the two encoders in certain middle layers (see Fig. 4d). The locality sensitive deconvolutional network with Gate Fusion [5] used an affinity matrix embedded with pairwise relations between neighboring RGB-D pixels to recover sharp boundaries of FCN maps. Gate Fusion (see Fig. 4c) learns to adjust to the contributions of RGB and depth that exist in the last layer of the network. RDFNet [26] fuses two networks with multi-modal feature fusion blocks and multi-level feature refinement blocks following RefineNet [21].

The above-mentioned approaches utilize all the different modals as input, but there is also an approach that utilizes only RGB as input and the other modals as output; this is the multitask learning approach. Multitask learning is a promising approach for efficiently and effectively addressing multiple mutually-related recognition tasks and its performance is known to outperform that of the single task methods. Kendall et al. worked on three tasks (semantic and instance segmentation, and depth estimation) [17] and Kuga et al. also worked on three tasks (RGB reconstruction, semantic segmentation, and depth estimation) [19].

There are other approaches using geometric cues obtained from depth images [20, 27], but in this research, we just focus on fusion-based and multitask learning approaches, which renders our model not only applicable to geometric applications but also other modal images, such as thermal images.

3 Proposed Models

Our objective is to conduct unsupervised semantic segmentation with multichannel input. In order to realize that, the two required functionalities are:

-

Annotation free (using no labels in a target dataset)

-

Using different modalities [RGB, Depth (HHA), (Boundary)] efficiently

3.1 Annotation Free (Using No Labels in a Target Dataset)

In order to satisfy the former function, a simple solution utilizes an existing dataset or synthetic dataset which we can generate on our own. However, if there is a domain shift (a difference of appearance or label distribution) between existing training data and test data, the performance can be poor. We tackle this case because a domain shift usually exists between synthetic and real datasets. Hence, we use a domain adaptation algorithm, which leverages adversarial training and enables the model to extract domain-robust features. In order to adopt the adversarial training algorithms, we separate an end-to-end segmentation model into a feature generator and a classifier as shown in Fig. 2. To utilize MCD [30], we prepare two classifiers (\(C_1\), \(C_2\)) and train them by three steps as shown in Fig. 3.

Adversarial training steps in MCD [30].

Formulation: We have access to a labeled source RGB image \(\mathbf x ^{s}_\mathrm{RGB}\), HHA image \(\mathbf x ^{s}_\mathrm{HHA} (=\mathbf y ^{s}_{2})\), instance boundary image \(\mathbf y ^{s}_{3}\) and a corresponding semantic segmentation label \(\mathbf y ^{s}_{1}\) drawn from a set of labeled source images {\(X^{s}_\mathrm{RGB}\), \(Y^{s}_{1}\), \(Y^{s}_{2}(= X^{s}_\mathrm{HHA})\), \(Y^{s}_{3}\)}, as well as an unlabeled target image \(\mathbf x ^{t}_\mathrm{RGB},\mathbf x ^{t}_\mathrm{HHA}\) drawn from unlabeled target images {\(X^{t}_\mathrm{RGB}, Y^{t}_{2}(= X^{t}_\mathrm{HHA})\)}. We train a feature generator network G, which takes inputs \(\mathbf x ^{s}\) or \(\mathbf x ^{t}\), and classifier networks \(C_1\) and \(C_2\), which take features from G. \(C_1\) and \(C_2\) classify them into K classes per pixel, that is, they output a \((K\times |\mathbf x |)\)-dimensional vector of logits. Note that \(|\mathbf x |\) denotes the number of pixels per image. We obtain class probabilities by applying the softmax function for the vector. We use the notation \(p_{1}(\mathbf y _{1}|\mathbf x )\), \(p_{2}(\mathbf y _{1}|\mathbf x )\) to denote the \((K\times |\mathbf x |)\)-dimensional probabilistic outputs for input \(\mathbf x \) obtained by \(C_1\) and \(C_2\) respectively.

Step A. We train G, \(C_1\) and \(C_2\) to classify the source samples correctly. In order to make classifiers and generator obtain task-specific discriminative features, this step is crucial. We train the networks to minimize softmax cross entropy. The objective is as follows:

Step B. We train \(C_1\) and \(C_2\) as a discriminator with fixing G. Let \(\mathcal {L}_\mathrm{adv}(X_{t})\) be the adversarial loss that can be computed using target sample. This loss measures the discrepancy of \(C_1\) and \(C_2\). A classification loss on the source samples is also added for better performance. The same number of source and target samples were randomly chosen to update the model. The objective is as follows:

Step C. We train G to minimize the adversarial loss with fixing \(C_1\) and \(C_2\). The objective is as follows:

The target and source images feed to the training randomly and these three steps are repeated until convergence of all the parts (classifiers and generator). The order of the three steps is not important but it is important to train the classifiers and generator in an adversarial manner under the condition that they can classify source samples correctly.

However, this still outputs messy segmentation results for the indoor scene recognition task (see Fig. 5). We propose to refine this by using boundary detection output that can be gained via a multitask learning approach (Details are in Sect. 3.2).

3.2 Using Different Modalities [RGB, Depth (HHA), (Boundary)] Efficiently

In order to satisfy the latter function, we propose the two approaches below:

-

1.

Fusion-based approach that uses all different modal images as input

-

2.

Multitask learning approach that uses only RGB as input and the other modals as output.

We will describe these two approaches in detail.

Fusion-based [(a)–(d)] and multitask learning [(e), (f)] architectures. (e) model that solves the semantic segmentation and depth regression task and (f) model that solves the boundary detection task in addition to the other two tasks. In this figure, one classifier (two classifiers in the score fusion model) exists but actually two classifiers (four classifiers in the score fusion model) exist to utilize MCD as shown in Fig. 2b.

Fusion-Based Approach. If multimodal images are inputs, an appropriate fusing method is known to boost segmentation accuracy. There are many fusion methods [5, 12, 13, 26] but in this research we focus on four comparatively simple fusions that are early fusion, late fusion, score fusion, fusenet like fusion (see Fig. 4a–d), because they are not specifically designed and widely used. Early fusion just concatenates the RGB and HHA (depth) in inputs. Late fusion fuses two encoders in the middle (in this research, the middle means the one layer before the final output). When fusing, we consider two ways of fusing, addition or concatenation. Score fusion fuses two encoders in the output. When fusing, we also consider three fusing methods, addition or concatenation +1 \(\times \, 1\) convolution or gate fusion [5]. Fusenet-like fusion fuses two encoders in certain middle layers [13]. Past research [14, 33] showed that lower layers of a CNN are largely task and category agnostic but domain-specific, while higher layers are largely task and category specific but domain-agnostic. So late fusion is considered to be the best out of the four.

To incorporate these fusion-based models into MCD algorithm which we described in Sect. 3.1, we just replace \(X_\mathrm{RGB}\) with \(X_\mathrm{RGBHHA}\).

Multitask Learning Approach. Multitask learning is a promising approach for efficiently and effectively addressing multiple mutually related recognition tasks and its performance is known to outperform that of single tasks [17]. We solve semantic segmentation and depth regression tasks simultaneously. Also, a general segmentation model is known to get not sharp boundaries [5, 26]. Kendall et al. [16] showed that the points on object boundaries have high aleatoric uncertainty. Hence, we add one extra task, a boundary detection task, which can be thought to render feature maps more aware of boundaries. One feature map is used as input for the semantic segmentation and depth estimation task. In addition, two lower feature maps were also used as inputs for the boundary detector following holistically-nested edge detection (HED) [35] as shown in Fig. 4f.

This boundary detection output can be utilized to refine the segmentation output. In fact, based on the segmentation results in Fig. 5, the outputs of the domain-adapted model are messier than usual. In order to fix this, we propose post-processing the segmentation result based on boundary detection output as shown in Fig. 6. In detail, we first threshold the boundary detection output and then assign IDs to each separated region. Segmentation output (class label) in one region should be unique (one class label for one ID) and so the segmentation output is refined by voting in each separated region. However, boundary detection output is not always perfect. One region sometimes expands to the adjacent region and becomes too large. Therefore, we do not post-process a region whose area is bigger than the maximum-threshold (set to one-third of the image size). In addition, points exactly on the boundaries are not post-processed.

To incorporate these multitask learning models into MCD algorithm which we described in Sect. 3.1, we replace the semantic segmentation loss of Step A with a total multitask loss. When we compute the total loss, tuning the weight of each task is important. We adopted Kendall’s algorithm to automatically tune the weight [17] by introducing trainable homoscedastic uncertainty parameter \(\sigma _{i}\), where i denotes the task index (1: semantic segmentation, 2: depth regression, 3: boundary detection). This total loss is computed as follows;

where \(\mathcal {L}_{1}(=\mathcal {L}_{seg})\), \(\mathcal {L}_{2}\), \(\mathcal {L}_{3}\) denotes the cross entropy loss for semantic segmentation, the mean squared loss for depth (HHA) regression loss, the class-balanced cross entropy loss for boundary detection, respectively. When \(\mathcal {L}_{3}\) is not used, the model corresponds to Fig. 4e and, otherwise it corresponds to Fig. 4f. Note that only depth regression loss (\(\mathcal {L}_{2}\)) is computed on both source and target samples but segmentation and boundary detection losses are computed only on source samples because we hypothesize that semantic labels and instance boundaries only exist in source samples. \(\mathcal {L}_{1}(=\mathcal {L}_{seg})\) is computed as Eq. 2 and \(\mathcal {L}_{2}\), \(\mathcal {L}_{3}\) are computed as follows;

where f transforms input \(X_\mathrm{RGB}\) to depth (HHA) regression output, and \(|Y_{3-}|\) and \(|Y_{3+}|\) denote the edge and non-edge ground truth label sets, respectively. (Note that \(Y^{s}_{3} \in \{0,1\}\) and \(|Y_{3}| = |Y_{3+}| + |Y_{3-}|\).) \(\log p(y^{s}_{3j}=1| X_{3})\) and \(\log p(y^{s}_{3j}=0| X_{3})\) denotes the sigmoid output of predicted boundary detection on the edge and non-edge points, respectively.

4 Experiment

4.1 Setting

Implementation DetailFootnote 1: We use a dilated residual network (drn_d_38) [36] which is pre-trained on ImageNet [8], which was shown to perform well in [30]. We followed the public implementationFootnote 2 and adopted MCD [30] as an unsupervised domain adaptation method because it had good performance on domain adaptation problems from synthetic GTA [29] to real CityScapes [6]. Then, we separated drn_d_38 into a feature generator and a classifier (actually two classifiers) and trained them in an adversarial manner. In fusion-based models, the last transposed convolution layer was used as a classifier and all lower layers were used as feature generators. In multitask learning models, the feature generator is the same as fusion-based models but the classifier is composed of a bilinear upsampling layer that enlarges the feature map eight times and three convolution layers following [17]. We used Momentum SGD to optimize our model and set the momentum rate to 0.9 and the learning rate to \(1.0 \times 10^{3}\) in all experiments. The image size was resized to \(640 \times 480\) and no data augmentation methods were used. We set one epoch to consist of 5000 iterations and chose test epoch numbers based on the entropy criteria following [25].

Dataset: We used the publicly available synthetic dataset SUNCG [38] as the source domain dataset and the real dataset NYUDv2 [31] as the target domain dataset. SUNCG contains two types of RGB images, an OpenGL-based and physically-based color image. We would like to use more realistic data and therefore used the latter type. 568,793 RGB + HHA + instance boundary (only for multitask:triple) images of SUNCG and 795 RGB + HHA images of NYUDv2 train set were used for training and the NYUDv2 test set that contains 654 images was used for evaluation. During training, we randomly sampled just a single sample (setting the batch size to 1 because of the GPU memory limit) from both the images (and their labels) of the source dataset and the remaining images of the target dataset yet with no labels. Removing 6 classes (books, paper, towel, box, person, bag) that do not exist in SUNCG from the NYUDv2 40 class, 34 common classes were considered. According to the author of SUNCG, they removed person and plant in the rendered data because these two types of objects can be hardly rendered photo-realistic. Figure 7 shows the class label distribution of SUNCG and NYUDv2. The imbalanced distribution demands the application of the four evaluation metrics.

Evaluation Metrics: We report on four metrics from common semantic segmentation and scene parsing evaluations. They are pixel accuracy (pixAcc), mean accuracy (mAcc), mean intersection over union (mIoU), and frequency weighted intersection over union (fwIoU). Let k be the number of classes, \(n_{ii}\) be the number of pixels of class i predicted to belong to class j, \(t_i\) be the total number of pixels of class i in ground truth segmentation. We compute: \(\mathrm{pixAcc} = \frac{\sum _{i} n_{ii}}{\sum _{i} t_{i}} (= \frac{\sum _{i} n_{ii}}{\sum _{i}\sum _{j} n_{ij}})\), \(\mathrm{mAcc} = \frac{1}{k} \sum _{i} \frac{n_{ii}}{t_{i}}\), \(\mathrm{mIoU} = \frac{1}{k} \sum _{i} \frac{n_{ii}}{\sum _{j} (n_{ij} + n_{ji})- n_{ii}}\), \(\mathrm{fwIoU} = \frac{1}{\sum _{k} t_{k}} \sum _{i} \frac{t_{i} n_{ii}}{\sum _{j} (n_{ij} + n_{ji})- n_{ii}}\).

4.2 Results

Figure 8 and Table 1 show the qualitative and quantitative results, respectively. We can confirm the effect of domain adaptation. Adapt (Multitask:Triple+Refine) was the best according to three evaluation metrics and outperformed Adapt (RGB) and Adapt (EarlyFusion) in all the evaluation metrics. The post-process which refines the segmentation results using the boundary detection outputs could improve all the metrics and lead better qualitative results. In the fusion-based models, Adapt (LateFusion:Add) could outperform Adapt (RGB) in three evaluation metrics but the performance of most of the fusion-based models is not that different nor worse than that of Adapt (RGB). This is due to the fact that the visibility of RGB images was better than that of HHA images for almost all the classes in the dataset in addition to the fact that objects which exist far away from the camera cannot be seen in HHA images due to the depth sensor range limitation, which could have a negative effect in fusion-based approaches.

IoUs (See Table 3) RGB vs. HHA. The classes whose IoU of RGB outperformed those of HHA were Ceiling and Floor, whose visibility is better in HHA than in RGB. Conversely, the classes whose IoU of HHA outperformed those of RGB were Window, Blinds or Television. This result is reasonable from the perspective of such classes as shown in: Fig. 9.

Sample (RGB, HHA, GT from left to right) of SUNCG [38]. Window, Blinds or Television looks clear in the RGB image. However, Floor looks clear in the HHA image.

Fusion-Based vs. Multitask Learning. Multitask learning approaches, especially the Adapt (Triple+Refine) model, outperformed fusion-based approaches significantly in classes such as Bed, Picture, Toilet except for Ceiling. This indicates that multitask learning approaches work well for object classes while fusion-based approaches work well for region classes.

Boundary Detection Result. Following HED [35], we computed three evaluation metrics, fixed contour threshold (ODS), per-image best threshold (OIS) and average precision (AP) using public codeFootnote 3. We compared with handcrafted edge detection methods (Sobel, Canny, and Laplacian, whose hyper-parameter was set to default of OpenCV) that do not use ground truth. As shown in Table 2, the boundary detection output outperformed these handcrafted methods, but the adaptation was not so effective. Based on this, boundary detection is considered to be a domain agnostic task compared to semantic segmentation.

Failures. From Fig. 7 and Table 3, the IoUs of the rare classes such as BookShelf, Pillow, Mirror, Clothes, BookShel, Shower-curtain, Whiteboard, Other-furniture were zero or almost zero. Rare classes in source samples seem to be difficult to recognize. Figure 10 shows the confusion matrix of the best model, Adapt (Multitask:Triple+Refine). Floormat is mispredicted as Floor, Whiteboard is mispredicted as Wall, Picture and Blinds are mispredicted as Window, Pillow is mispredicted as Sofa. If we consider the source label distribution shown in Fig. 7, a rare class is often mispredicted as a common class whose position is the same as the rare one. The ratio of pixels that are mispredicted as Othrprop was high. This is probably because Othrprop contains various kinds of classes such as car, motorcycle, soccer goal post, gas stove as shown in Fig. 11.

5 Conclusion

We combined a multichannel semantic segmentation task with an unsupervised domain adaptation task and proposed two architectures (fusion-based and multitask learning). We demonstrated that the multitask learning approach outperforms the simple early fusion approach in all the evaluation metrics. In addition, we propose adding a boundary detection task in the multitask learning approach and using the detection result to refine the segmentation output. We qualitatively and quantitatively show this post-process is effective especially in the classes whose boundaries look clear. However, the scores of the adaptation result are still poor when compared to oracle. In future work, we would like to use a few labeled and many unlabeled target samples (semi-supervised setting) and improve the results.

References

Armeni, I., Sax, S., Zamir, A.R., Savarese, S.: Joint 2D–3D-semantic data for indoor scene understanding. arXiv:1702.01105 (2017)

Armeni, I., et al.: 3D semantic parsing of large-scale indoor spaces. In: CVPR (2016)

Bousmalis, K., Silberman, N., Dohan, D., Erhan, D., Krishnan, D.: Unsupervised pixel-level domain adaptation with generative adversarial networks. In: CVPR (2017)

Chen, Y.H., Chen, W.Y., Chen, Y.T., Tsai, B.C., Wang, Y.C.F., Sun, M.: No more discrimination: cross city adaptation of road scene segmenters. In: ICCV (2017)

Cheng, Y., Cai, R., Li, Z., Zhao, X., Huang, K.: Locality-sensitive deconvolution networks with gated fusion for RGB-D indoor semantic segmentation. In: CVPR (2017)

Cordts, M., et al.: The cityscapes dataset for semantic urban scene understanding. In: CVPR (2016)

Dai, A., Chang, A.X., Savva, M., Halber, M., Funkhouser, T., Nießner, M.: ScanNet: richly-annotated 3D reconstructions of indoor scenes. In: CVPR (2017)

Deng, J., Dong, W., Socher, R., Li, L.J., Li, K., Fei-Fei, L.: ImageNet: a large-scale hierarchical image database. In: CVPR (2009)

Ganin, Y., Lempitsky, V.: Unsupervised domain adaptation by backpropagation. In: ICML (2014)

Gupta, S., Arbelaez, P., Malik, J.: Perceptual organization and recognition of indoor scenes from RGB-D images. In: CVPR (2013)

Gupta, S., Girshick, R., Arbeláez, P., Malik, J.: Learning rich features from RGB-D images for object detection and segmentation. In: Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T. (eds.) ECCV 2014. LNCS, vol. 8695, pp. 345–360. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-10584-0_23

Ha, Q., Watanabe, K., Karasawa, T., Ushiku, Y., Harada, T.: MFNet: towards real-time semantic segmentation for autonomous vehicles with multi-spectral scenes. In: IROS (2017)

Hazirbas, C., Ma, L., Domokos, C., Cremers, D.: FuseNet: incorporating depth into semantic segmentation via fusion-based CNN architecture. In: Lai, S.-H., Lepetit, V., Nishino, K., Sato, Y. (eds.) ACCV 2016. LNCS, vol. 10111, pp. 213–228. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-54181-5_14

Hoffman, J., Gupta, S., Leong, J., Guadarrama, S., Darrell, T.: Cross-modal adaptation for RGB-D detection. In: ICRA (2016)

Hoffman, J., Wang, D., Yu, F., Darrell, T.: FCNs in the wild: pixel-level adversarial and constraint-based adaptation. arXiv:1612.02649 (2016)

Kendall, A., Gal, Y.: What uncertainties do we need in Bayesian deep learning for computer vision? In: NIPS (2017)

Kendall, A., Gal, Y., Cipolla, R.: Multi-task learning using uncertainty to weigh losses for scene geometry and semantics. In: CVPR (2018)

Krizhevsky, A., Sutskever, I., Hinton, G.E.: ImageNet classification with deep convolutional neural networks. In: NIPS (2012)

Kuga, R., Kanezaki, A., Samejima, M., Sugano, Y., Matsushita, Y.: Multi-task learning using multi-modal encoder-decoder networks with shared skip connections. In: ICCV Workshop (2017)

Lin, D., Chen, G., Cohen-Or, D., Heng, P.A., Huang, H.: Cascaded feature network for semantic segmentation of RGB-D images. In: ICCV (2017)

Lin, G., Milan, A., Shen, C., Reid, I.: RefineNet: multi-path refinement networks with identity mappings for high-resolution semantic segmentation. In: CVPR (2017)

Long, J., Shelhamer, E., Darrell, T.: Fully convolutional networks for semantic segmentation. In: CVPR (2015)

Long, M., Cao, Y., Wang, J., Jordan, M.I.: Learning transferable features with deep adaptation networks. In: ICML (2015)

McCormac, J., Handa, A., Leutenegger, S., Davison, A.J.: SceneNet RGB-D: can 5m synthetic images beat generic ImageNet pre-training on indoor segmentation? In: ICCV (2017)

Morerio, P., Cavazza, J., Murino, V.: Minimal-entropy correlation alignment for unsupervised deep domain adaptation. In: ICLR (2018)

Park, S.J., Hong, K.S., Lee, S.: RDFNet: RGB-D multi-level residual feature fusion for indoor semantic segmentation. In: ICCV (2017)

Qi, X., Liao, R., Jia, J., Fidler, S., Urtasun, R.: 3D graph neural networks for RGBD semantic segmentation. In: ICCV (2017)

Qiu, W., et al.: UnrealCV: virtual worlds for computer vision. In: ACMMM Open Source Software Competition (2017)

Richter, S.R., Vineet, V., Roth, S., Koltun, V.: Playing for data: ground truth from computer games. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9906, pp. 102–118. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46475-6_7

Saito, K., Watanabe, K., Ushiku, Y., Harada, T.: Maximum classifier discrepancy for unsupervised domain adaptation. In: CVPR (2018)

Silberman, N., Hoiem, D., Kohli, P., Fergus, R.: NYU depth dataset V2. In: ECCV (2012)

Song, S., Lichtenberg, S.P., Xiao, J.: SUN RGB-D: a RGB-D scene understanding benchmark suite. In: CVPR (2015)

Song, X., Herranz, L., Jiang, S.: Depth CNNs for RGB-D scene recognition: learning from scratch better than transferring from RGB-CNNs. In: AAAI (2017)

Tzeng, E., Hoffman, J., Saenko, K., Darrell, T.: Adversarial discriminative domain adaptation. In: CVPR (2017)

Xie, S., Tu, Z.: Holistically-nested edge detection. In: ICCV (2015)

Yu, F., Koltun, V., Funkhouser, T.: Dilated residual networks. In: CVPR (2017)

Zhang, Y., David, P., Gong, B.: Curriculum domain adaptation for semantic segmentation of urban scenes. In: ICCV (2017)

Zhang, Y., et al.: Physically-based rendering for indoor scene understanding using convolutional neural networks. In: CVPR (2017)

Acknowledgements

The work was partially funded by the ImPACT Program of the Council for Science, Technology, and Innovation (Cabinet Office, Government of Japan).

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Watanabe, K., Saito, K., Ushiku, Y., Harada, T. (2019). Multichannel Semantic Segmentation with Unsupervised Domain Adaptation. In: Leal-Taixé, L., Roth, S. (eds) Computer Vision – ECCV 2018 Workshops. ECCV 2018. Lecture Notes in Computer Science(), vol 11133. Springer, Cham. https://doi.org/10.1007/978-3-030-11021-5_37

Download citation

DOI: https://doi.org/10.1007/978-3-030-11021-5_37

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-11020-8

Online ISBN: 978-3-030-11021-5

eBook Packages: Computer ScienceComputer Science (R0)