Abstract

Automatic speech processing devices have become popular for quantifying amounts of ambient language input to children in their home environments. We assessed error rates for language input estimates for the Language ENvironment Analysis (LENA) audio processing system, asking whether error rates differed as a function of adult talkers’ gender and whether they were speaking to children or adults. Audio was sampled from within LENA recordings from 23 families with children aged 4–34 months. Human coders identified vocalizations by adults and children, counted intelligible words, and determined whether adults’ speech was addressed to children or adults. LENA’s classification accuracy was assessed by parceling audio into 100-ms frames and comparing, for each frame, human and LENA classifications. LENA correctly classified adult speech 67% of the time across families (average false negative rate: 33%). LENA’s adult word count showed a mean +47% error relative to human counts. Classification and Adult Word Count error rates were significantly affected by talkers’ gender and whether speech was addressed to a child or an adult. The largest systematic errors occurred when adult females addressed children. Results show LENA’s classifications and Adult Word Count entailed random – and sometimes large – errors across recordings, as well as systematic errors as a function of talker gender and addressee. Due to systematic and sometimes high error in estimates of amount of adult language input, relying on this metric alone may lead to invalid clinical and/or research conclusions. Further validation studies and circumspect usage of LENA are warranted.

Introduction

Language is a quintessential human behavior which children must learn through exposure to competent talkers. It is well-established that rates of speech and language skill attainment depend on the quantity of speech experienced by young children (Greenwood, Thiemann-Bourque, Walker, Buzhardt, & Gilkerson, 2011; Hart & Risley, 1995; Hoff & Naigles, 2002; Montag, Jones, & Smith, 2018; Romeo et al., 2018; Rowe, 2012; Weisleder & Fernald, 2013; Weizman & Snow, 2001). Specifically, developmental language attainment appears best predicted by the quantity of language directed to young children themselves – i.e., the amount of so-called infant-directed speech – rather than the amount of overheard or adult-directed speech (Romeo et al., 2018; Weisleder & Fernald, 2013). Quantification of the amount of language in children’s natural home environments – and ideally the amount directed to children themselves – is therefore an essential method for both basic behavioral research as well as clinical purposes. In research, accurate quantification of the amount of language in a child’s home is critical for evaluating theoretical questions about the nature of language development (e.g., Montag et al., 2018; Shneidman, Arroyo, Levine, & Goldin-Meadow, 2013; Weisleder & Fernald, 2013). Further, in clinical practice, child early interventionists use information about amounts of caregiver communication to assess the effectiveness of caregiver-centered interventions for enhancing frequency and quality of child-directed communications (Roberts & Kaiser, 2011; Vigil, Hodges, & Klee, 2005).

Technological devices for automatic speech processing have become increasingly popular methods of quantifying amounts of ambient language in children’s environments. One device widely employed by researchers and clinicians is the Language ENvironment Analysis (LENA™; LENA Research Foundation, Boulder, CO) system (Christakis et al., 2009; Ford, Baer, Xu, Yapanel, & Gray, 2008; Gilkerson, Coulter, & Richards, 2008; Gilkerson & Richards, 2008; Greenwood et al., 2011; Xu, Yapanel, & Gray, 2009c; Zimmerman et al., 2009). This system consists of an audio recorder capable of holding up to 16 hours of audio within a vest worn by a child. LENA uses offline software to generate an automated Adult Word Count and other measures that have now been widely used in numerous basic scientific and applied clinical studies and settings (Burgess, Audet, & Harjusola-Webb, 2013; Caskey, Stephens, Tucker, & Vohr, 2011, 2014; Caskey & Vohr, 2013; Johnson, Caskey, Rand, Tucker, & Vohr, 2014; Oller et al., 2010; Pae et al., 2016; Sacks et al., 2014; Soderstrom & Wittebolle, 2013; Suskind, Leffel, et al., 2016b; Thiemann-Bourque, Warren, Brady, Gilkerson, & Richards, 2014; Wang et al., 2017; Warlaumont, Richards, Gilkerson, & Oller, 2014; Warren et al., 2010; Weisleder & Fernald, 2013; Zhang et al., 2015). This includes several large-scale (e.g., city-sponsored) programs deploying LENA devices for providing data used for decision-making relative to provision of clinical and social services and evaluation of the effectiveness thereof (e.g., the city of Providence LENA effort) (Wong, Boben, & Thomas, 2018).

Yet, questions remain about LENA’s strengths – and weaknesses – as a tool for language quantification (Cristia et al., in press). The goal of this paper is to provide rigorous evaluation of potential sources of error in LENA’s automated language measures, thereby providing researchers and interventionists with high-quality information to assist judicious and circumspect deployment of LENA’s time-saving automated technology. We focused on addressing unanswered questions about the accuracy of LENA’s measure of ambient language by adult speakers, a measure known as Adult Word Count. We focus on this measure due to its widespread adoption as a means of quantifying ambient language in a child’s environment. Although LENA generates other automatic measures of language, such as conversational turn counts and/or vocalizations by the child wearing the vest, the focus of the present project was on accuracy in quantification of adult language input. Given this priority for developing the human coding system used in the present project, the methods used in this paper do not permit full evaluation of these other metrics, which is beyond the scope of present work.

To date, it remains unanswered whether the accuracy of LENA’s language quantification is high and consistent across family home environments, and whether accuracy is affected by incidental variables such as the genders of talkers, and/or whether they are addressing a child (or an adult). Achieving a high, consistent level of accuracy across these incidental variables is essential for drawing appropriate, correct interpretations about the language input provided to children within individual family environments. It would be concerning, for instance, if LENA’s technology incorrectly and systematically attributed a mother’s utterances to a child – particularly a child who was at risk for developmental disorder or delay. If these sorts of errors were attested, then invalid clinical or research inferences could readily be drawn from automatic language measures, specifically that: (a) the caregiver was vocalizing less than she actually was, and that (b) the child was vocalizing more than he actually was. Such a pattern of errors could, for instance, lead clinicians to believe that the caregiver, rather than the child, should be the focus of intervention efforts – for example, through use of caregiver-oriented therapies aimed at increasing the quantity of speech input she provides to the child. Errors in measurement of the actual amount of speech content in the environment, if large enough, could potentially obscure profoundly the true picture of behavior. Large errors of undercounting would make families who were providing a lot of language input appear as if they had provided little language input. Large errors of overcounting would make families who were providing very little language input appear as if they had provided a lot of language input. We therefore pose the following rhetorical and practical question: What is an acceptable degree (and type) of error in quantification of amount of language input in a child’s environment? 
If this error is too great, it may suggest a need to develop alternative measures of automated language quantification and/or encourage a return to greater reliance on hand-coding by human listeners.

A number of studies have provided validation data of LENA’s automatic language estimates by narrowly examining the extent to which its final Adult Word Count estimate correlates with counts generated by humans of numbers of words spoken by adults across samples of recordings (Table 1). Other studies (Ambrose, Walker, Unflat-Berry, Oleson, & Moeller, 2015; Burgess et al., 2013; Ramírez-Esparza, García-Sierra, & Kuhl, 2014) have transcribed audio from LENA recordings to calculate word counts from human transcription but did not directly compare these word counts to LENA’s Adult Word Count. These studies collectively indicate that, across large numbers of recordings, the LENA Adult Word Count tends to follow the general trend of human-counted numbers of words in recordings. However, the proportion of unexplained variance (1 − r²) in this relationship has been shown to range up to 40% (cf. Soderstrom & Wittebolle, 2013). Thus, for any given recording, there is little basis for predicting whether LENA’s Adult Word Count will be under- or over-counting actual numbers of adult spoken words, or how big the actual discrepancy will be.

However, correlation coefficients are a poor means of assessing accuracy, not to mention variability in accuracy (Busch et al., 2017). Estimates which were “fully” accurate would entail a function y = x (with slope 1 and intercept 0), indicating that for each “true” value of x, no adjustment to the slope or intercept offset is needed to obtain a value y. Correlations instead indicate the degree of scatter of values around an arbitrary line of best fit and thus do not reveal degree of measurement bias. Measurement bias might be proportional (i.e., a difference in slopes of best-fit lines from 1) or fixed (i.e., a non-zero intercept; Busch et al., 2017; Ludbrook, 1997). Therefore, evaluations of whether one method (e.g., actual human word counts) can be replaced with another (LENA’s Adult Word Count estimates) should not be based solely on correlations. Further, it is not clear that using ordinary least squares to regress results from one method (e.g., LENA’s Adult Word Count) on another (e.g., human word counts) is a valid way of comparing methods given that under such methods, a low least-squares error could be obtained while still demonstrating proportional or fixed biases (Bland & Altman, 1986; Busch et al., 2017; Ludbrook, 1997). Therefore, additional nuanced examination of accuracy is warranted.
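The distinction between correlation and bias can be illustrated with a minimal sketch. The word counts below are invented for illustration only and are not data from any study; the function names are likewise hypothetical. The automated counts are constructed with a proportional bias (slope 1.5) and a fixed bias (intercept +50) but no scatter, so the correlation is perfect even though every estimate is too high.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

def mean_difference(reference, estimate):
    """Mean signed difference (estimate minus reference)."""
    return sum(e - r for r, e in zip(reference, estimate)) / len(reference)

def mean_percent_error(reference, estimate):
    """Mean signed error as a percentage of the reference value."""
    return sum((e - r) / r * 100.0 for r, e in zip(reference, estimate)) / len(reference)

# Hypothetical human word counts for six recordings (illustrative only).
human = [100.0, 200.0, 300.0, 400.0, 500.0, 600.0]
# Hypothetical automated counts: slope 1.5, intercept +50, no scatter.
automated = [1.5 * h + 50.0 for h in human]

print("r =", pearson_r(human, automated))
print("mean difference =", mean_difference(human, automated))
print("mean % error =", mean_percent_error(human, automated))
```

In this constructed case the correlation alone would suggest perfect agreement, while the signed-error summaries reveal a consistent overcount.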

By design, accuracy of LENA’s Adult Word Count depends on its accuracy with respect to a prior step, namely accuracy in classifying audio as human communicative vocalizations. However, the literature is lacking in rigorous, peer-reviewed, independent studies examining the relationship between LENA’s degree of accuracy in classifying audio as human communicative vocalizations and accuracy in its Adult Word Counts. Indeed, LENA’s Adult Word Count is the end result of multiple, hierarchically dependent signal-processing steps for classifying audio sound sources, and errors at any stage could potentially be compounded to affect Adult Word Count accuracy. The initial steps of LENA’s algorithms involve classifying (i.e., labeling) stretches of audio of variable length as female adult speech (labeled as FAN in LENA’s ADEX software), male adult speech (MAN), key child (CHN), other child (CXN), overlapping vocalization (OLN), TV/electronic media (TVN), noise (NON), silence (SIL), or uncertain (FUZ). Next, the seven categories other than silence are divided into “near-field” or “far-field” sounds based on the energy in the acoustic signal. Next, short stretches of audio categorized as (near-field) speech or speech-like vocalizations by an adult or child that are temporally close to one another are grouped together into units called “conversational blocks”, while remaining contiguous stretches of audio classified as “far-field” (or “faint”) are reclassified as “Pause” units (on the rationale that speech in such audio may likely be unintelligible or hard to hear) (Xu, Yapanel, Gray, & Baer, 2008a; Xu, Yapanel, Gray, Gilkerson, et al., 2008b). Finally, stretches of audio classified as (near-field) male or female adult speech (MAN or FAN) are used to derive LENA’s Adult Word Count values.

The LENA Foundation has provided data on the relationship between Adult Word Count accuracy and segment classification accuracy. In a well-cited but unpublished study, Xu et al. (2009) reported an overall Pearson’s r of 0.92 between human transcription and LENA’s Adult Word Count within 1-hour samples from 70 recordings. A number of important details of their analysis are not reported, such as how temporal mismatch between human-identified vocalization events and LENA’s machine classifications was dealt with in the agreement analysis. This is a critical detail for a rigorous analysis of agreement which is necessary to be able to replicate a study, since otherwise it is not clear under what conditions a human transcription should count as having agreed with an automatic classification or not. Xu et al. further reported a difference in word count estimates (human – LENA) for two separate 12-hour recordings, one in a quiet environment and one in a noisy environment; the difference was roughly −0.4% for the former but −27.3% for the latter. This tantalizing finding suggests substantial variability in Adult Word Count accuracy may occur in the LENA system, though this remains largely unexplored.

A handful of studies have evaluated LENA’s accuracy at classifying audio, as opposed to Adult Word Count accuracy. Perhaps the most widely cited example is the non-peer-reviewed study by Xu et al. (2009; Xu, Yapanel, Gray, Gilkerson, et al., 2008b), provided by LENA and discussed above, which has been cited many times in support of the claim of reliability of LENA classification (Ambrose, VanDam, & Moeller, 2014; Caskey & Vohr, 2013; Dykstra et al., 2013; Gilkerson, Richards, & Topping, 2017a; Gilkerson, Richards, Warren, et al., 2017b; Greenwood et al., 2017; Greenwood et al., 2011; Johnson et al., 2014; Marchman, Martínez, Hurtado, Grüter, & Fernald, 2017; Ota & Austin, 2013; Ramírez-Esparza, García-Sierra, & Kuhl, 2017; Richards, Gilkerson, Xu, & Topping, 2017a; Richards, Xu, et al., 2017b; Sangwan, Hansen, Irvin, Crutchfield, & Greenwood, 2015; Thiemann-Bourque et al., 2014; VanDam, Ambrose, & Moeller, 2012; Warlaumont et al., 2010; Warlaumont et al., 2014; Xu, Gilkerson, Richards, Yapanel, & Gray, 2009a; Xu, Richards, et al., 2009b; Zhang et al., 2015). The classification accuracy data reported by Xu et al., which was re-reported in Christakis et al. (2009), Zimmerman et al. (2009), and Warren et al. (2010), was based on human coding generated for another unpublished study (Gilkerson et al., 2008). Xu et al. reported classification accuracy for LENA of 82%, 76%, and 76% for adult, child, and other segments, respectively, based on a frame size of 10 ms, as reported in Warren et al. (2010) as opposed to the original study by Xu et al. (2009). This data set has also been analyzed in great detail for the accuracy of child vocalization classification (Oller et al., 2010). However, Xu et al. (2009; p. 5) state that their algorithm for sampling the audio for use in the analysis “was designed to automatically detect high levels of speech activity between the key child and an adult”, leaving unclear whether their sampling procedure might have introduced bias into estimates of accuracy in cases when sampling did not rely solely on portions automatically determined by LENA to involve high levels of speech activity.

Groups outside of the LENA organization have also investigated classification by LENA. Ko, Seidl, Cristia, Reimchen, and Soderstrom (2016) randomly selected LENA-defined segments (50 FAN and 50 CHN) from 14 recordings (1400 total segments). Humans then manually coded these segments. LENA’s mean accuracy was 84%; however, accuracy ranged between 51% and 93% across recordings, indicating a great deal of variability in accuracy. A similar recent analysis of classification accuracy (Seidl et al., 2018) had human listeners code 1384 LENA-defined FAN and CHN segments. They found overall accuracy of 72% with confusion between FAN and CHN segments occurring 15% of the time.

VanDam and Silbert (2013, 2016) elaborated upon other classification results by determining factors in the audio that predict accuracy in LENA. They selected 30 segments each from 26 recordings that LENA had classified as FAN, MAN, or CHN. Human listeners classified these LENA-defined segments as mother, father, child, or other. Human listeners classified segments LENA identified as FAN or MAN as adult speech 80% of the time. They further found evidence that LENA’s classification relied on fundamental frequency (F0) and duration as major criteria for deciding among adult male, adult female, or child talkers. Missing from studies of LENA’s audio classification reliability, among other things, are robust assessments of LENA’s false negative rate (since many studies have focused only on stretches of audio that LENA had identified as a talker), a thorough characterization of variability in accuracy across multiple families, and identifying how classification error carries over to LENA’s Adult Word Count.

Further, none of the studies mentioned above assessed whether there are systematic biases in accuracy of LENA’s classification of audio or Adult Word Count estimates across adult talkers or situations. Given VanDam and Silbert’s (2016) finding that LENA appears to rely heavily on F0 and duration to classify a talker as a man, woman, or child, it is notable that F0 varies considerably as a function of many factors, including talker gender, speaker size, emotional state, and/or communicative intent (Bachorowski, 1999; Benders, 2013; Fernald, 1989; Pisanski et al., 2014; Pisanski & Rendall, 2011; Podesva, 2007; Porritt, Zinser, Bachorowski, & Kaplan, 2014). Situation-specific speech register could potentially affect accuracy in LENA, something especially important for clinical and research issues in child language. Adults often adopt an infant-directed (ID) speech register when speaking with young children, typically characterized by higher and more variable F0 (i.e., dynamic pitch) and slower rate (i.e., longer durations) relative to an adult-directed (AD) register, along with shifts in other kinds of acoustic cues (e.g., distributions of vowel formants; Cristia & Seidl, 2013; Kondaurova, Bergeson, & Dilley, 2012; Wieland, Burnham, Kondaurova, Bergeson, & Dilley, 2015). Therefore, the intended addressee – child or adult – can have implications for distributions of acoustic cues – especially F0 and duration – in ID vs. AD speech, potentially systematically affecting LENA performance. The gender of a talker and the addressee of a segment of speech – whether addressing a child or an adult – could in theory systematically affect accuracy of LENA’s measures. Ensuring the consistency and comparability of metrics in this widely used device is important for ensuring the soundness of theoretical claims or clinical guidance based on LENA’s output.

The present study was designed to provide important new data regarding variability and consistency in LENA’s accuracy for quantifying children’s language environments across families in English. We focused on LENA’s accuracy in a corpus of recordings composed of many families with children at risk for language disorder/delay due to hearing loss. There is a high need for accurate and valid quantification of language input to at-risk children, both for research purposes and since the data might be used to guide clinical decision-making and assess effectiveness of caregiver-centered interventions. Prior studies have shown that caregivers use acoustically similar speech behaviors when speaking to children with hearing impairment as to typical-hearing children (Wieland et al., 2015; Bergeson, Miller, & McCune, 2006), or else that caregivers may pronounce speech in a manner similar to how they speak to adults (Kuhl et al., 1997; Lam & Kitamura, 2010). Caregivers may also make minor acoustic modifications when speaking to children who have hearing impairment, many of which may make speech acoustically clearer and words more detectable (Lam & Kitamura, 2012). For example, they may use a slightly higher second formant (F2) for vowels (Wieland et al., 2015), or they may raise their pitch slightly more than they would to a typical-hearing child of the same age (Bergeson, Miller, & McCune, 2006). If LENA shows high error in a sample where children are at risk for delay and/or disorder under conditions where adults are expected to be speaking at least as – if not more – clearly than they would speak to typically developing children or adults, then this would be important information to bring to light for interventionists and behavioral researchers to consider in determining whether and when to deploy LENA technology. 
To the extent that LENA is being deployed as a technology used to detect speech vocalizations by adults (and children), it is important to show that it is capable of robust, high, accurate performance in quantifying caregiver utterances produced in cases of at-risk child populations.

We asked whether differences in classification accuracy for human vocalizations could explain differences in Adult Word Count accuracy. In our study, we selected audio from cases where LENA had and had not identified speech in order to evaluate LENA’s accuracy more thoroughly than prior studies. Finally, an important goal was to quantify how accuracy in LENA’s classifications and Adult Word Count might differ based on the gender of the talker and the addressee in ID vs. AD speech. Previewing our results, we found that LENA’s classification and Adult Word Count accuracy depended on both the gender (male vs. female) of the talker and the addressee (ID vs. AD).

Methods

The present study was conducted as part of initial phases of a larger NIH-funded project at Ohio State University and Michigan State University focused on investigating how the amount and quality of language input in a child’s environment predicts language development. This study was an initial validation test and assessment of whether LENA’s Adult Word Count was suitable as a primary dependent measure for our broader project. Specifically, we asked (1) whether error in LENA’s Adult Word Count was small and consistent across families; (2) whether this error was unbiased across and robust to conditions of interest, i.e., ID vs. AD speech; and (3) whether the amount of error was affected by extraneous factors, such as whether talkers were male vs. female. Satisfying conditions (1), (2), and (3) was a necessary precondition for using LENA’s Adult Word Count as a primary metric for planned individual differences research. The study was also designed to permit identifying systematic sources of inaccuracy or bias in LENA classification steps that might help explain downstream inaccuracies in calculation of the LENA Adult Word Count.

Participants

LENA recordings used in the present study were collected in pilot and initial stages of the larger NIH-funded project described above. Families gave permission to participate and to have their child wear a LENA system for at least one day. The research was approved by the institutional review boards at The Ohio State University and Michigan State University. The present study was based on a single day-long recording from each of a total of 23 enrolled families who had completed at least one day-long LENA recording at the time of initiation of the present study. If an enrolled family had completed more than just one LENA recording, as called for under the broader grant protocol, then the first LENA recording made was included in the present study. Within each family, one child under 3 years of age was given a LENA vest to wear for the recording (M = 1 year 8 months, SD = 8.8 months; range: 4 months to 2 years 8 months at the time of recording). (See Appendix Table 11 for details.) For four families, the target children (i.e., those wearing the LENA device) had normal hearing, for eight families, the target child had hearing aids, for two families, the target child had a cochlear implant in one ear and a hearing aid in the other, and for nine families, the target child had bilateral cochlear implants. Children with cochlear implants had 3–22 months (M = 10 months, SD = 7.54 months) of post-implantation hearing experience. The first available recording from each family where the child had hearing experience was used.

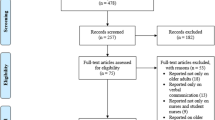

General research design and selection of audio

Our approach involved the following steps: (1) running LENA’s software on the entire day-long audio recording of family language environments; (2) sampling audio from the day-long recording; (3) enlisting human coders to (a) identify times during sampled audio when they heard speech vocalizations, and, for adults’ speech, determine whether it was child- or adult-directed, and (b) count the number of words in adult speech utterances; (4) parceling sampled audio into 100-ms frames and then, for each frame, comparing the code from humans with that from LENA; and (5) comparing human word counts and LENA’s Adult Word Count estimates.
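Step (4) can be sketched as follows. This is a minimal illustration, not the procedure used in the study: the text does not state how a frame that straddles an interval boundary was labeled, so the midpoint rule below is an assumption, and the function names, labels, and interval values are hypothetical.

```python
FRAME_MS = 100  # frame size stated in the text

def intervals_to_frames(intervals, total_ms, frame_ms=FRAME_MS, default="none"):
    """Convert labeled intervals into one label per fixed-size frame.

    intervals: list of (start_ms, end_ms, label), assumed non-overlapping.
    ASSUMPTION: a frame takes the label of the interval that contains
    its midpoint; frames covered by no interval get `default`.
    """
    n_frames = total_ms // frame_ms
    frames = [default] * n_frames
    for start, end, label in intervals:
        for i in range(n_frames):
            midpoint = i * frame_ms + frame_ms / 2
            if start <= midpoint < end:
                frames[i] = label
    return frames

def frame_agreement(frames_a, frames_b):
    """Proportion of frames on which two codings agree."""
    matches = sum(a == b for a, b in zip(frames_a, frames_b))
    return matches / len(frames_a)

# Hypothetical one-second excerpt coded by a human and by an automatic system.
human_intervals = [(0, 250, "adult"), (400, 700, "child")]
machine_intervals = [(0, 300, "adult"), (500, 800, "child")]

human_frames = intervals_to_frames(human_intervals, total_ms=1000)
machine_frames = intervals_to_frames(machine_intervals, total_ms=1000)
print(frame_agreement(human_frames, machine_frames))
```

Comparing codings frame by frame avoids having to decide when two differently timed intervals count as "the same" event, at the cost of weighting long vocalizations more heavily than short ones.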

Prior published studies of LENA’s classification accuracy have not estimated the proportion of intelligible speech which LENA inaccurately classifies as non-speech (i.e., the false negative rate). Our study thus sought to estimate a false negative rate in part by sampling pause units, i.e., portions of audio which LENA had classified as not containing near-field speech, as well as from conversational blocks, i.e., portions of audio which LENA had classified as containing near-field speech (although see Schwarz et al., 2017; Soderstrom & Wittebolle, 2013 for analysis of Adult Word Count accuracy that included audio from LENA-defined pauses). Thus, unlike prior classification studies (e.g., VanDam & Silbert, 2016), our design permitted estimation of LENA’s classification rates of true positives, true negatives, false positives, and false negatives for categories like speech vs. non-speech.
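Given one label per frame from each source, the four outcome counts and the associated rates can be tallied as in the sketch below. The labels, function names, and the ten-frame example are hypothetical; human coding is treated as the reference, which is an assumption consistent with the design described here.

```python
def binary_confusion(reference_frames, test_frames, positive="adult"):
    """Count TP, FP, FN, TN for one category, with reference as ground truth."""
    counts = {"tp": 0, "fp": 0, "fn": 0, "tn": 0}
    for ref, test in zip(reference_frames, test_frames):
        ref_pos = ref == positive
        test_pos = test == positive
        if ref_pos and test_pos:
            counts["tp"] += 1
        elif ref_pos and not test_pos:
            counts["fn"] += 1
        elif not ref_pos and test_pos:
            counts["fp"] += 1
        else:
            counts["tn"] += 1
    return counts

def rates(counts):
    """Hit rate, false negative rate, and false positive rate (None if undefined)."""
    pos = counts["tp"] + counts["fn"]
    neg = counts["fp"] + counts["tn"]
    return {
        "hit_rate": counts["tp"] / pos if pos else None,
        "false_negative_rate": counts["fn"] / pos if pos else None,
        "false_positive_rate": counts["fp"] / neg if neg else None,
    }

# Hypothetical ten frames: six contain adult speech according to the human coder.
human = ["adult"] * 6 + ["other"] * 4
machine = ["adult"] * 4 + ["other"] * 2 + ["adult"] * 1 + ["other"] * 3

counts = binary_confusion(human, machine)
print(counts, rates(counts))
```

The hit rate and false negative rate sum to 1 by construction. A false negative rate can only be estimated when audio that the automatic system labeled as non-speech is also included in the sample, which is why pause units were sampled.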

LENA’s algorithm was first deployed on the entire day-long audio recording using its offline Advanced Data Extractor (ADEX) classification software (v. 1.1.3-5r10725). From each family’s recording, we then excluded audio for which the child was asleep based on context in the audio which evidenced prolonged heavy breathing, the parents saying goodnight, and/or other contextually based cues to naps, since there was no communicative relevance for the child of any adult speech during those times. Next, we selected the first and last 30 “adult-speech” conversational blocks, i.e., those that had been classified by LENA’s ADEX classification software as involving at least one adult talker – female (FAN) or male (MAN) – as a primary participant (see Footnote 1). The selection of conversational blocks containing adult speech was motivated by the desire to use LENA’s Adult Word Count metric, which is only calculated for segments of adult speech. In total, samples of approximately 30 minutes of audio (“sampled audio”) were drawn from the beginnings and endings of each recording. These times were selected because family members were likely to be at home and engaged in routine, child-centered activities, e.g., waking up, eating morning or evening meals, and getting ready for bed. As such, this audio was deemed likely to be a fairer test of LENA’s capabilities, because it would directly assess the home environment without variability introduced by families engaging in a wide-ranging set of daily activities. Additionally, given our priority of maximizing reliable determination of when ID vs. AD speech was happening from context, sampling audio from the beginning and end of the day had the benefit of enhancing continuity of understanding situational contexts of communicative interactions, which other sampling methods might not have afforded. 
If the total duration of either the first 30 or last 30 adult speech conversational blocks was less than 10 minutes of audio length, then for whichever portion(s) – first or last – that fell below 10 minutes of audio, we included the next (or preceding, respectively) consecutive adult speech conversational block until the 10-minute audio length minimum was reached. This yielded a minimum of 20 minutes of sampled audio from adult speech conversational blocks per recording (M = 22.98 min, SD = 5.36 min, range: 20.02–44.33 min). There was considerable variability in individual conversational block durations across recordings that collectively constituted sampled audio (M = 10.65 sec, SD = 21.07 sec, median = 4.17 sec, range: 0.6–529.97 sec).
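The block-selection rule for one end of the recording can be sketched as follows. The function name and the example durations are hypothetical, and the sketch ignores details the text does not specify, such as what happens if the first and last selections would overlap in a short recording.

```python
def select_blocks(durations_sec, n_initial=30, min_total_sec=600.0):
    """Select adult-speech conversational blocks from one end of a recording.

    durations_sec: block durations in the order they should be taken
    (chronological for the start of the day; pass a reversed list for
    the end of the day).
    Starts with the first `n_initial` blocks and keeps adding consecutive
    blocks until the total duration reaches `min_total_sec` (10 minutes)
    or no blocks remain.
    Returns (number_of_blocks_selected, total_duration_sec).
    """
    count = min(n_initial, len(durations_sec))
    total = sum(durations_sec[:count])
    while total < min_total_sec and count < len(durations_sec):
        total += durations_sec[count]
        count += 1
    return count, total

# Hypothetical recording with short blocks: 30 blocks give only 7.5 minutes,
# so further consecutive blocks are added until 10 minutes is reached.
short_blocks = [15.0] * 50
print(select_blocks(short_blocks))

# Hypothetical recording with longer blocks: the first 30 already suffice.
long_blocks = [30.0] * 50
print(select_blocks(long_blocks))
```

Because the rule is applied separately to the start and the end of the day, each recording contributes at least 20 minutes of audio from conversational blocks, matching the minimum reported above.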

The sampled audio also included approximately 9 minutes of short chunks of audio from pause units (i.e., audio that LENA had identified as not containing near-field speech), which were interleaved between audio portions of adult speech conversational blocks from the beginning and end of the day that had been selected as described above. The mean duration of sampled audio from pause units was 9.31 min (SD = 0.43 min, range: 8.75–9.96 min). Sampled audio from pause units was selected by first dividing pause units that fell between selected adult conversational blocks into 5-second chunks; chunks were then randomly selected for study inclusion until 5 minutes total duration from pause units was selected at the beginning and at the end of the file. Any portions of sampled audio that incidentally overlapped with a conversational block consisting of primarily child talkers were excluded. After this exclusion, if the total duration of sampled audio from pause units was less than 4 minutes, then additional 5-second chunks of pause units were randomly included in the sample until a minimum of 4 minutes from pause units was achieved. Durations of pause intervals between the sampled conversational blocks with adult speech varied considerably (M = 31.9 sec, SD = 231.0 sec, median = 10.9 sec, range: 2.3–12062.9 sec). Across all selected recordings, sampled audio for analysis (from conversational blocks and pause units) consisted of a mean of 32.29 minutes of audio per participating family (SD = 5.42 min; range: 29.07–54.15 min). The total size of the sample was 735 minutes of total coded audio, which compares favorably with the amount of audio examined in the other studies listed in Table 1. Independent variables of primary interest for statistical hypothesis testing were (1) the gender of adult speakers identified by human listeners (male vs. female) and (2) addressee (ID vs. AD). Due to the spontaneous nature of speech, not all conditions were represented for all families.
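The chunking and random selection of pause-unit audio can be sketched as below for one end of a recording. Several details are assumptions rather than facts from the text: leftover audio shorter than 5 seconds at the end of a pause unit is dropped, selection is a simple shuffle with a fixed seed, and the later exclusion and top-up steps are omitted. All names and example values are hypothetical.

```python
import random

def chunk_pause_units(pause_units, chunk_sec=5.0):
    """Split (start_sec, end_sec) pause units into full-length chunks.

    ASSUMPTION: a remainder shorter than `chunk_sec` is discarded.
    """
    chunks = []
    for start, end in pause_units:
        t = start
        while t + chunk_sec <= end:
            chunks.append((t, t + chunk_sec))
            t += chunk_sec
    return chunks

def sample_chunks(chunks, target_sec=300.0, chunk_sec=5.0, seed=0):
    """Randomly pick chunks until `target_sec` of audio is reached.

    Returns the chosen chunks in chronological order. If fewer chunks
    are available than needed, all of them are returned.
    """
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    shuffled = list(chunks)
    rng.shuffle(shuffled)
    n_needed = int(target_sec // chunk_sec)
    return sorted(shuffled[:n_needed])

# Hypothetical pause units (in seconds) near the start of a recording.
pauses = [(0.0, 12.0), (20.0, 30.0), (100.0, 500.0)]
all_chunks = chunk_pause_units(pauses)
chosen = sample_chunks(all_chunks, target_sec=300.0)
print(len(all_chunks), len(chosen), sum(e - s for s, e in chosen))
```

Sampling fixed-length chunks at random, rather than taking whole pause units, keeps the amount of pause audio roughly constant across families even though individual pause durations vary widely.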

Coding of human communicative vocalizations by human analysts

In this study, ten trained human analysts identified entire stretches of human communicative vocalizations (i.e., speech or speech-like vocalizations by adult male, adult female, or child talkers) within sampled audio and marked the temporal starts and ends of these entire intervals on the relevant textgrid tier (see Fig. 1) in Praat (Boersma & Weenink, 2017). For stretches judged to have been adult speech, analysts indicated whether the speech was directed to a child (ID speech), to an adult (AD speech), or neither (e.g., self-directed speech, pet-directed speech) based on context. Laughs, burps, sighs, and other non-speech noises made in the throat (e.g., in surprise) were not treated as speech nor as speech-like vocalizations. Stretches of speech that were unintelligible, due to being, e.g., very faint or distant, were likewise not identified nor labeled, consistent with LENA’s goal of excluding “far-field” speech unlikely to contribute to child language development. Analysts counted all words within a contiguous stretch of speech attributed to a single adult talker and typed a number into the relevant Praat textgrid interval.

Annotation scheme used by human analysts to identify human communicative vocalizations. The top of the display shows the waveform and spectrogram. Textgrid tiers provided for the following information (top to bottom): (1) The Analyzed Intervals tier indicated sampled audio portions (given with a “y”). (2) The Conversational Blocks tier depicted starts and ends of conversational blocks (e.g., AIOCM for adult male with target child) and pause units. (3) The Segments tier depicted LENA’s segment level ADEX code from among its sound categories. Analysts also indicated starting and ending points of human communicative vocalizations as shown above for: (4) the paternal caregiver, (5) the maternal caregiver, (6) a child, (7) another adult female, or (8) another adult male. For tiers corresponding to adult speech, i.e., (4), (5), (7), and (8), analysts indicated the addressee (e.g., “T” for child or “A” for adult), and they also typed a number corresponding to the judged number of intelligible words in each adult speech interval. Finally, (9) the Excluded Vocalizations tier was used to mark speech that significantly overlapped with other noises, other speech, or speech-like vocalizations and were marked as overlap noise by LENA. Analysts determined the tier that audio should be assigned to based exclusively on their judgment of the audio recording.

Additional details of the coding procedure ensured that LENA was given “the benefit of the doubt”, e.g., concerning handling of acoustic overlap of talkers, and that minor temporal discrepancies did not count against agreement. First, recall that LENA assigns a single ADEX segment code to each successive chunk of audio. This is potentially problematic for LENA in the case of overlapping sound sources such as overlapped human vocalizations. In these cases, LENA is forced to “choose” between a single talker code (MAN, FAN, CHN, or CXN) or else a multi-talker code (OLN), which stands for “overlapped speech or noise”; during OLN intervals, LENA does not increment the Adult Word Count. Recognizing that LENA’s classification algorithms might handle such cases unreliably, coders were instructed that, in the special case that they detected overlapped speech in a portion of signal, they should consider LENA’s labels and favor a coding consistent with LENA’s interpretation. In particular, if the coder heard the portion as containing two or more overlapping talkers but LENA assigned a single talker code for that portion of signal, and the coder perceived the portion to have contained speech from a talker consistent with that single talker code, then coders were instructed to attribute that portion to the single talker which LENA had identified by marking the interval on a tier for that talker type. If, on the other hand, LENA had assigned the multi-talker OLN code to the overlapped speech, then coders were instructed to mark the portion of overlapped speech on the “Excluded Vocalizations” tier (tier 9 in Fig. 1). Conversely, if the coder heard the portion as containing two or more overlapping talkers but LENA assigned a single talker code that did not correspond to either of the talkers the coder heard, then the coder transcribed that portion on the “Excluded Vocalizations” tier.
Given that OLN codes and “Excluded Vocalizations” entailed no increment to LENA’s Adult Word Count, this handling had the effect of ensuring that speech frames identified as consisting of overlapped human vocalizations which were coded as OLN were essentially not treated in our analysis as speech (since they were not attributed to a single adult male, adult female, or child talker, consistent with LENA’s handling). Second, to further advantage LENA and speed coding, analysts copied the temporal boundaries of LENA’s ADEX codes by default to mark the starts and ends of speech events, only changing those times relative to LENA if LENA was incorrect by more than 100 ms (a value in line with prior estimates of LENA’s temporal accuracy: Ko et al., 2016). This meant that minor (< 100 ms) discrepancies that LENA may have had with the actual start or end of vocalization did not count against LENA in our agreement quantification algorithms.

Analyses of agreement between human and LENA classification

After human coders had labeled continuous stretches of human speech vocalizations as described above, our general approach to determining when LENA and human coders agreed was to: (i) divide sampled audio into short 100-ms frames; (ii) determine the human-derived category characterizing each frame (see below); (iii) determine the LENA classification code characterizing each frame; and then (iv) determine, for each frame, whether the LENA code implied a match with the human-derived category. Accuracy (and error) was then calculated as a percentage of frames showing consistency (or inconsistency) between the LENA code and the human-derived category.

(i) Divide sampled audio into short frames. Each textgrid annotating the sampled audio was first divided into a sequence of frames using MATLAB R2017b (The MathWorks website [http://www.mathworks.com]) and the mPraat toolbox (Bořil & Skarnitzl, 2016), following prior work (Atal & Rabiner, 1976; Deller, Hansen, & Proakis, 2000; Dubey, Sangwan, & Hansen, 2018a, 2018b; Ephraim & Malah, 1984; Proakis, Deller, & Hansen, 1993; Rabiner & Juang, 1993). A 100-ms frame length was chosen first based on the granularity of LENA segmentation accuracy in prior literature (for instance, Ko et al., 2016); secondly, based on the instructions to human coders regarding the granularity of their decisions when LENA segment boundaries deviated from perceived audio; and finally, based on the observation that 100 ms is one sixth the size of the smallest LENA segment (600 ms) and roughly one twelfth the size of the average segment (M = 1260 ms, SD = 760 ms), providing a meaningful resolution for sampling LENA’s classification of audio. Frames containing audio outside of sampled audio were discarded.

(ii) Determine the human-derived category characterizing each frame. Next, for each frame, we determined the human label that best characterized that frame (adult male, adult female, child, or other). This step was necessary, given the possibility that the start or end of the continuous stretch of human vocalization that a coder had identified might have started or ended part-way through the 100-ms frame; as a result, one part of the frame might have been identified by a coder as speech from one category, while the other part of the frame was identified by a coder as from another category. Therefore, the label that was assigned to the frame was the one which corresponded to the label that took up the greatest temporal extent (i.e., 50 ms or more) of the frame. For instance, if 90% of a frame’s temporal extent was identified as an adult male talker and 10% as an adult female talker, the frame was classified as an adult male speech frame. Regions coded by humans as either “paternal caregiver” or “other adult male” were treated as adult male speech, and regions coded by humans as either “maternal caregiver” or “other adult female” were treated as adult female speech (see Table 2). Frames of adult speech (either by a male or female) were characterized as an “AD” or “ID” frame if 50% or greater of the frame’s temporal extent had been annotated as adult-directed or infant-directed, respectively (or neither in the case of pet-directed or self-directed speech).

(iii) Determine the LENA classification code characterizing each frame. Next, a single label derived from LENA segment codes was assigned to each frame, corresponding to the one taking up the greatest temporal extent of the frame (i.e., 50 ms or more).

(iv) Determine, for each frame, whether the LENA classification code implied a match with the human-derived category. We computed several different analyses of agreement based on comparisons between human-derived categories implied by the human labels and LENA classification codes for frames; see Table 2. The first analysis addressed agreement about when speech vocalizations were happening and who was talking; it was based on a four-way category distinction: male adult speech, female adult speech, child vocalization, or other. The second analysis addressed agreement about whether a frame constituted some kind of speech vocalization or not; it was based on a two-way category distinction: speech vs. non-speech. Finally, the third analysis addressed agreement about when adult speech was happening or not; it was based on a two-way category distinction: adult speech vs. everything else. The third analysis was expected to be most pertinent to accuracy of LENA’s Adult Word Count, since this measure is based on the frames classified by LENA as adult speech (i.e., as MAN or FAN). Agreement (or error) was quantified as the percentage of frames classified correctly (or incorrectly), given the category implied by human annotation.
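Steps (i) through (iv) can be illustrated with a short Python sketch. It is not the MATLAB/mPraat code used in the study. The interval format, the function names, the mapping of all unlisted LENA codes to "other" (standing in for Table 2), the "SIL" filler label, and the tie-breaking behavior at exact 50/50 splits (the first label encountered wins) are assumptions made for illustration.

```python
FRAME_S = 0.1  # 100-ms frames, as in the text

# Mapping of LENA codes to analysis categories; codes not listed fall into
# "other" (an assumption standing in for the paper's Table 2).
LENA_TO_CATEGORY = {"MAN": "male", "FAN": "female", "CHN": "child", "CXN": "child"}

def frame_labels(intervals, start, end, default="other", frame_s=FRAME_S):
    """Label each frame with the category covering the largest share of it.

    intervals: list of (start_s, end_s, label); unannotated time counts as `default`.
    """
    n_frames = int(round((end - start) / frame_s))
    labels = []
    for i in range(n_frames):
        f0 = start + i * frame_s
        f1 = f0 + frame_s
        cover = {}
        for a, b, lab in intervals:
            overlap = min(b, f1) - max(a, f0)
            if overlap > 0:
                cover[lab] = cover.get(lab, 0.0) + overlap
        uncovered = max(frame_s - sum(cover.values()), 0.0)
        cover[default] = cover.get(default, 0.0) + uncovered
        labels.append(max(cover, key=cover.get))
    return labels

def accuracy_for(human, lena, category):
    """Share of human-labeled `category` frames that LENA put in the same category."""
    idx = [i for i, h in enumerate(human) if h == category]
    return sum(lena[i] == category for i in idx) / len(idx)

# Made-up half-second example: the human coder marks female speech up to 0.29 s,
# while LENA assigns FAN up to 0.2 s and OLN afterwards.
human = frame_labels([(0.0, 0.29, "female")], 0.0, 0.5)
lena_codes = frame_labels([(0.0, 0.2, "FAN"), (0.2, 0.5, "OLN")], 0.0, 0.5, default="SIL")
lena = [LENA_TO_CATEGORY.get(code, "other") for code in lena_codes]
female_accuracy = accuracy_for(human, lena, "female")
```

In this toy case three frames are human-labeled as female and LENA matches two of them, so accuracy for female adult speech frames is two thirds.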

Analyses of adult word count accuracy

Two approaches were taken to calculating error in LENA’s Adult Word Count. First, we calculated the ratio of total Adult Word Count for sampled audio from each family’s file (determined by summing Adult Word Counts in sampled audio from the ADEX file) to the total adult word count identified by humans within sampled audio. This ratio was a measure of the degree of over- or underestimation by the LENA metric for each family, where correlations between these quantities would not have revealed patterns of error as fully. Second, we assigned a fractional signed error in Adult Word Count to each frame. To calculate the fractional signed error, a fractional LENA Adult Word Count was first assigned to each 100-ms frame by identifying the Adult Word Count of the LENA segment(s) that the frame overlapped with, then multiplying by the proportion of the corresponding LENA segment duration that temporally overlapped with the frame. Next, a fractional human word count was analogously determined for each frame; this was calculated by multiplying the human adult word count of the adult speech portion that the frame overlapped with by the proportion of the duration of the speech portion that temporally overlapped with the frame. The fractional signed error for the frame was then calculated by subtracting the fractional human adult word count from the fractional LENA Adult Word Count. This fractional word count error was a dependent variable in statistical analyses testing whether categorical predictor variables (ID vs. AD, female vs. male speech, and correct vs. incorrect classification) associated with frames significantly influenced fractional signed error in Adult Word Count.
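The per-frame fractional word count described above can be sketched as follows. This is an illustrative Python version under stated assumptions, not the authors' implementation: the segment format `(start_s, end_s, word_count)` and the function names are hypothetical, and the data are made up.

```python
def fractional_counts(segments, start, end, frame_s=0.1):
    """Spread each segment's word count over frames in proportion to overlap.

    segments: list of (start_s, end_s, word_count) tuples (hypothetical format).
    """
    n_frames = int(round((end - start) / frame_s))
    counts = [0.0] * n_frames
    for a, b, words in segments:
        duration = b - a
        if duration <= 0:
            continue
        for i in range(n_frames):
            f0 = start + i * frame_s
            f1 = f0 + frame_s
            overlap = min(b, f1) - max(a, f0)
            if overlap > 0:
                counts[i] += words * overlap / duration
    return counts

def signed_errors(lena_segments, human_segments, start, end):
    """Fractional LENA count minus fractional human count, frame by frame."""
    lena = fractional_counts(lena_segments, start, end)
    human = fractional_counts(human_segments, start, end)
    return [l - h for l, h in zip(lena, human)]

# Made-up example: LENA credits 5 words to a 1-s segment; the human coder
# counted 2 words in the first half second.
errors = signed_errors([(0.0, 1.0, 5)], [(0.0, 0.5, 2)], 0.0, 1.0)
ratio = 5 / 2  # first approach: total LENA count over total human count
```

Summing the per-frame signed errors recovers the overall difference in counts (here 5 − 2 = 3 words), while the ratio expresses the same discrepancy as over- or underestimation.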

Human inter-rater reliability

Inter-rater reliability was assessed through re-coding a total of about 3.6 minutes of audio randomly selected from each of ten recordings, which were also randomly selected, including 2.4 min of audio from adult conversational blocks and 1.2 minutes from pause units drawn equally from the beginning and end of the recording. For each 100-ms frame within audio selected for the inter-rater reliability analysis (approximately 21,600 frames in total), the frame’s classification by each analyst was determined by assigning each frame to a category (male adult, female adult, child, or other) for each coder following the rule described above using the largest portion of the frame’s temporal extent. Cohen’s kappa (Carletta, 1996; Kuhl et al., 1997) was then used to determine agreement between pairs of codes. Further, a value of kappa was calculated to assess the agreement in labeling ID and AD speech within the subset of frames for which the frame had been classified as adult speech in both the original and reliability coding.
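For readers unfamiliar with the statistic, Cohen's kappa over frame labels from two coders can be computed as in the following Python sketch. The labels are made up and the function name is an assumption; this is not the analysis code used in the study.

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa: observed agreement corrected for chance agreement."""
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    # Chance agreement from each coder's marginal label frequencies.
    expected = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)
    return (observed - expected) / (1 - expected)

# Made-up frame labels from an original and a reliability coder.
coder_1 = ["female", "female", "male", "other"]
coder_2 = ["female", "female", "male", "male"]
kappa = cohens_kappa(coder_1, coder_2)
```

Here observed agreement is 0.75 and chance agreement is 0.375, giving kappa = 0.6.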

Results

Human inter-rater reliability

Our first step was to establish inter-rater reliability for coding by human analysts. Results showed high inter-rater reliability among humans for distinctions of interest. The average κ values indicate very good to outstanding agreement (Breen, Dilley, Kraemer, & Gibson, 2012; Krippendorff, 1980; Landis & Koch, 1977; Rietveld & van Hout, 1993; Syrdal & McGory, 2000). For the four-way classification of frames as adult male, adult female, child vocalization, and anything else (i.e., other), human analysts agreed with mean κ = 0.77 (SD = 0.08). For the speech vs. non-speech distinction, human analysts agreed with mean κ = 0.67 (SD = 0.12). For the adult speech vs. everything else distinction, human analysts agreed with mean κ = 0.81 (SD = 0.08). For adult speech frames, human analysts agreed on whether speech was AD or ID with mean κ = 0.90 (SD = 0.18). Further, accuracy of human word counts showed a strong correlation between the two sets of coded files, r(8) = .96, p < .001. This consistent across-the-board agreement suggests the robustness of human judgments about when speech was happening/not happening, who was talking, whether the adults were talking to a child or to an adult, and how many words the adult spoke. The remaining analyses used these human judgments as the basis of determinations of LENA’s accuracy.

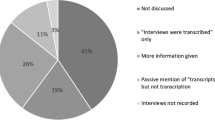

Classification accuracy achieved by LENA for identifying speech vocalizations and attributing these to the correct talkers

Throughout the following, italic font is used to indicate a frame’s classification as assigned by humans. Table 3 shows counts of frames classified by humans as female adult speech, male adult speech, child vocalizations, or other in rows; LENA’s classifications of frames are shown across the columns (Footnote 2). A chi-square test of independence showed that there was a significant relation between human classification and how LENA classified across the eight categories [χ²(21, N = 440,802), p < .001], suggesting that, as expected, LENA’s rates of classification for categories like FAN, MAN, etc. differed as a function of information captured by human classifications (e.g., acoustic properties). Importantly, while there are many on-diagonal entries (i.e., correct classifications), there are many off-diagonal entries (i.e., incorrect classifications). For example, 59% of all female adult speech frames were correctly classified by LENA as “FAN”, such that, by extension, 41% of female adult speech frames were misclassified; it is noteworthy that 12% of these misclassifications were misattributions of a female adult speech frame to a child talker (CHN or CXN). By contrast, 57% of all male adult speech frames were correctly classified as “MAN”, such that, by extension, 43% of male adult speech frames were misclassified; however, just 4% of the misclassified male adult speech frames were misattributions by LENA to a child talker (CHN or CXN). These observations preview our finding of an interaction between talker gender (male vs. female) and speech style (infant-directed vs. adult-directed), something discussed below.

Table 4 shows LENA’s classification accuracy as an overall percentage of frames correctly classified by LENA within each family’s recording, averaged across families. Human-identified female adult speech, male adult speech, child vocalization, and other frames were classified correctly by LENA at average rates of 59%, 60%, 63%, and 82%, respectively (which corresponded in turn to error rates of 41%, 40%, 37% and 18%, respectively; Footnote 3). We also conducted a simple one-sample t test to determine whether, across recordings, classification accuracy was reliably higher than the theoretically defined value of chance, assuming four analysis categories and an unbiased classification method (i.e., ¼ or 25%). LENA’s classification was statistically above this value (i.e., 25%) for frames of each of the four classification categories for all 23 families [adult female: t(22) = 15.79, p < .001; adult male (Footnote 4): t(21) = 9.25, p < .001; child vocalizations: t(22) = 18.19, p < .001; other: t(22) = 36.24, p < .001] (Footnote 5). We also used tests of proportions for each family individually to investigate whether LENA’s classification accuracy for the four classification categories was significantly above chance (25%) for that family. For one family (Family 5), classification accuracy for male adult speech was statistically at chance levels (z = −.64, p = .26); based on this sample, male adult speech for this family was more likely to have been misclassified as female adult speech (27/52 frames) or as child speech (11/52 frames) than to have been correctly classified as male adult speech (just 11/52 frames; Footnote 6).
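As a worked illustration of the per-family test of proportions, the Family 5 figures quoted above (11 of 52 male adult speech frames correct, chance = 25%) can be plugged into a one-sample z test. The Python sketch below assumes the normal approximation without continuity correction and a lower-tail p value; the text does not state which variant was used, so these are assumptions, although they reproduce the reported z and p to two decimals.

```python
import math

def proportion_z_test(successes, n, p0):
    """One-sample z test of a proportion against p0 (normal approximation).

    Returns (z, lower_tail_p), where lower_tail_p = P(Z <= z).
    """
    p_hat = successes / n
    standard_error = math.sqrt(p0 * (1 - p0) / n)
    z = (p_hat - p0) / standard_error
    lower_tail_p = 0.5 * math.erfc(-z / math.sqrt(2))
    return z, lower_tail_p

# Family 5, male adult speech: 11 of 52 frames correct vs. 25% chance.
z, p = proportion_z_test(11, 52, 0.25)
```

Under these assumptions z is about −0.64 and p about .26, in line with the values reported for this family.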

Classifications of “speech”: LENA false negative and false positive rates

Next, we assessed LENA’s accuracy at classifying speech and non-speech frames as “speech” vs. “non-speech” (cf. Table 2), which gives an index of LENA’s ability to detect human vocal activity and is an important step in all automatic speech classification systems (Kaushik, Sangwan, & Hansen, 2018). Figure 2a shows a boxplot for LENA accuracy in classifying speech frames across families. Mean accuracy for classifying frames as “speech” was 74%; this corresponded to a false negative rate (i.e., LENA misclassifying speech as “non-speech”) of 26% (SD = 7%). Classification accuracy for speech frames varied widely, from 53% to 86% across families (corresponding to 14% to 47% false negative rates). Table 5 presents error rates across families for classification analyses, and shows that all families had over 10% error rate for false negatives.

Box plots showing variability in classification accuracy as a percentage of frames across each family’s recording for LENA classifying human-labeled (a) speech and (b) non-speech or as (c) adult speech, and (d) everything else (see Table 2 for how these categories are defined). Data from individual families are shown in scatterplots for each classification. Overlaid numbers identify families’ recordings across analyses, further illustrating variability

Figure 2b shows a boxplot for LENA accuracy in classifying non-speech frames across families. Mean accuracy for “non-speech” classifications was 82%; this corresponded to a false positive rate (i.e., LENA misclassifying non-speech frames as “speech”) of 18% (SD = 8%). Classification accuracy for non-speech frames ranged from 64% to 91% (corresponding to false positive rates ranging from 9% to 36%); Table 5 shows a substantial majority (91%) of families had over 10% error rate for false positives.

The lowest bar for evaluating LENA’s classification relates to whether it performed better than chance. Classification for both speech and non-speech frames was better than chance (50%) by a significant statistical margin across families [speech: t(22) = 17.531, p < .001; non-speech: t(22) = 20.382, p < .001] (Footnote 7). Tests of proportions were also calculated for each family individually to investigate whether LENA’s classification accuracy for speech vs. non-speech was above chance for that family. Classification rates for speech vs. non-speech exceeded chance levels (50%) for all families’ recordings (α = .05).

Classifications of “adult speech”: LENA false negative and false positive rates

We next assessed LENA’s accuracy at classifying adult speech frames (i.e., frames humans identified as an adult female or adult male talker) as “adult speech” (i.e., FAN or MAN); see Table 2. Figure 2c shows a boxplot for LENA accuracy in classifying adult speech frames across families. Mean accuracy for adult speech frames was 67%, corresponding to a mean false negative rate of 33% (SD = 9%) (i.e., LENA misclassifying adult speech frames as “everything else”). Classification accuracy for adult speech varied widely across families, ranging from 45% to 82% (corresponding to false negative rates ranging from 18% to 55%). Table 5 shows that all families had over 10% error rate for false negatives.

Figure 2d shows a boxplot for LENA accuracy in classifying frames of everything else across families. Mean accuracy for classifying everything else (i.e., the complement of adult speech) was 92%, corresponding to a mean false positive rate of 8% (SD = 6%) (i.e., LENA misclassifying everything else frames as “adult speech”). Classification accuracy for everything else frames varied from 70% to 97% (corresponding to 3% to 30% false positive rates). Table 5 shows that a substantive minority (17%) of families had over 10% error rates for false positives.
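The false negative and false positive rates for the "adult speech" analysis follow directly from frame-level labels. The Python sketch below uses made-up labels and a hypothetical function name; as in the text, a frame counts as correctly detected adult speech even if LENA assigned the wrong gender.

```python
ADULT = {"male", "female"}

def adult_speech_error_rates(human, lena):
    """Return (false_negative_rate, false_positive_rate) for 'adult speech'.

    human, lena: per-frame category labels ("male", "female", "child", "other").
    """
    on_adult = [l in ADULT for h, l in zip(human, lena) if h in ADULT]
    on_rest = [l in ADULT for h, l in zip(human, lena) if h not in ADULT]
    false_negative_rate = 1 - sum(on_adult) / len(on_adult)
    false_positive_rate = sum(on_rest) / len(on_rest)
    return false_negative_rate, false_positive_rate

# Made-up frames: the second frame has the wrong gender but still counts
# as correctly detected adult speech.
human = ["female", "female", "male", "child", "other", "other"]
lena = ["female", "male", "other", "female", "other", "other"]
fn_rate, fp_rate = adult_speech_error_rates(human, lena)
```

In this toy example one of three adult speech frames is missed and one of three everything else frames is wrongly called adult speech, so both rates are one third.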

LENA’s classification accuracy was significantly better than chance (i.e., 50% for two categories) at classifying both adult speech frames [t(22) = 8.865, p < .001] and everything else [t(22) = 35.808, p < .001] (Footnote 8). Tests of proportions were calculated for each family individually to investigate whether LENA’s classification accuracy for adult speech vs. everything else was above chance for that family. This analysis revealed that for two families, LENA’s machine classifications were significantly below chance levels of accuracy for “adult speech” classification with α = .05 (Family 10: z = −5.09; Family 18: z = −5.19). In both of these cases, intelligible frames of live adult speech were frequently miscoded by LENA as noise or recorded content (including OLN, TVN, and FUZ).

This variability across families in adult speech false positive and false negative rates might be less worrisome if there was consistency in LENA’s accuracy within a family’s recording from one time point to the next. We therefore conducted a statistical test of the null hypothesis that there was consistency in LENA’s accuracy levels across our two sampling time points, i.e., no difference in LENA classification accuracy for adult speech between samples drawn from the beginning vs. the end of the day. A mixed effects model with a logit linking function was created to predict accuracy across frames (incorrect frames coded as 0, correct coded as 1; incorrect set as baseline) based on the fixed factor of Time with two levels (beginning vs. end, with beginning set as the baseline) and a random intercept for each family. This statistical test showed that the null hypothesis was not supported. Instead, LENA showed systematically lower accuracy for frames drawn from the end of the day than frames at the beginning of the day [beginning (baseline): β = 1.90, z = 29.02, p < .001, odds ≅ 6.7:1; end vs. beginning, β = −.11, z = −12.65, p < .001, odds ≅ 0.9:1]. Thus, not only is there a lack of consistency in classification accuracy/error rates for LENA across families’ recordings, but there is a lack of consistency in classification accuracy/error rates within families’ recordings as well. We return to this point in the Discussion.

Effects of talker gender (male, female) and addressee (ID vs. AD) on “adult speech” classification accuracy

The remaining analyses focused on adult speech frames only. Table 6 shows how the gender of the talker (male vs. female) as well as the addressee (ID vs. AD speech) affected patterns of LENA classification for adult speech frames (Footnote 9). Both “FAN” and “MAN” classifications result in increments to LENA’s Adult Word Count estimates, while frames classified in any other way do not. Values in the third data column, which collapses instances in which LENA classified adult speech frames as either “FAN” or “MAN”, therefore reflect correct classifications as “adult speech” of some type (even if the talker’s gender was misclassified).

The patterns in Table 6 suggest that classification accuracy of adult speech may indeed depend on both talker gender and the addressee (ID vs. AD). For instance, for female adult talkers, a higher percentage of frames was accurately classified in AD (72%) than in ID (64%), with the latter condition involving a lot of misclassifications as a child (14%). Figure 3 shows rates of correct classification of adult speech frames as “adult speech” for each family broken out as a function of Talker Gender (female, male) and Addressee (AD, ID). There is tremendous variability in how accurately adult speech was detected across families’ recordings, and this accuracy varies as a function of the gender and addressee.

To construct a statistical test of whether there were systematic effects of Talker Gender or Addressee (ID vs. AD) on accuracy of classification of adult speech frames, we constructed a mixed effects logistic regression model with a binomially distributed dependent variable of accuracy of classification as “adult speech” (Agresti, 2002; Barr, Levy, Scheepers, & Tily, 2013; Jaeger, 2008; Matuschek, Kliegl, Vasishth, Baayen, & Bates, 2017; Quené & Van den Bergh, 2008). This statistical approach shows robustness to imbalanced numbers of data points across grouping factors, as well as to missing observations (Gries, 2015). The dependent variable value for each frame was set to 0 if that frame’s LENA classification for “adult speech” was incorrect (i.e., if LENA classified the adult speech frame as anything other than FAN or MAN), and as 1 if its classification for “adult speech” was correct (i.e., if LENA classified the frame as either FAN or MAN, even if it got the gender wrong). The model (implemented in R; Bates, Mächler, Bolker, & Walker, 2015; R Development Core Team, 2015) included categorical predictor variables of Gender (female vs. male, with female set as the baseline) and Addressee (AD vs. ID, with AD set as the baseline), as well as their interaction, plus a random intercept term for the effect of each family (Footnote 10).

As shown in Table 7, statistical modeling revealed statistically significant effects of both Gender and Addressee (and a significant interaction between these) on LENA’s ability to classify adult speech frames accurately as “adult speech.” The significant effect of Addressee (p < .001) indicates better classification as “adult speech” for adult female AD speech (i.e., the baseline, M = 68%, SD = 15%; odds of correct classification ~2.4:1 [=exp(0.855)]) than for adult female ID speech (M = 63%, SD = 12%; odds of correct classification ~1.8:1 [=exp(0.855)*exp(-0.261)]). The significant effect of Gender (p < .001) indicates there was better classification for adult male AD speech (M = 76%, SD = 19%; odds of correct classification ~2.7:1 [=exp(0.855)*exp(0.129)]) than adult female AD speech (odds of 2.4:1 [=exp(0.855)]). Finally, the significant interaction between Gender and Addressee (p < .001) indicates that Addressee did not affect equally adult male and adult female speech. Rather, adult male ID speech had an odds of correct classification of about 3.3:1 [=exp(0.855)*exp(0.129)*exp(-0.261)*exp(0.477)], which was significantly more accurate classification than would be expected based on the independent effects of ID addressee on female speech and the effect of being male rather than female. This meant that adult male ID speech was classified correctly as “adult speech” almost twice as often as expected based on independent effects of being ID and male (3.3/1.8 ≅ 1.83). In summary, accuracy of classifying adult speech frames as “adult speech” was significantly affected by both the gender of the talker, and whether the speech was AD or ID.
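The odds quoted above follow from exponentiating sums of the model's log-odds coefficients. The Python sketch below reproduces that arithmetic from the coefficient values given in the text (0.855, 0.129, −0.261, 0.477); the dictionary keys and function name are illustrative assumptions, and the random intercept for family is ignored.

```python
import math

# Fixed-effect coefficients on the log-odds scale, as quoted in the text.
COEF = {"intercept": 0.855, "male": 0.129, "id": -0.261, "male:id": 0.477}

def odds_correct(male, id_speech, coef=COEF):
    """Odds of correct 'adult speech' classification for one condition.

    male, id_speech: 0/1 indicators (baseline is female, adult-directed).
    """
    log_odds = (coef["intercept"]
                + male * coef["male"]
                + id_speech * coef["id"]
                + male * id_speech * coef["male:id"])
    return math.exp(log_odds)

odds = {
    "female AD": odds_correct(0, 0),
    "female ID": odds_correct(0, 1),
    "male AD": odds_correct(1, 0),
    "male ID": odds_correct(1, 1),
}
```

Rounded to one decimal, these come out at roughly 2.4, 1.8, 2.7, and 3.3 to 1, matching the odds given in the text.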

Gender classification accuracy: Effects of addressee (ID vs. AD)

To recap, statistical tests revealed significant differences in LENA’s classification accuracy for “adult speech” as a function of talker gender and addressee. Female ID speech produced the worst classification performance, while male ID speech produced the best classification performance. Yet, these conditions were associated with notable error patterns (cf. Table 6); for instance, frames in the female ID condition were disproportionately misclassified as a child. Misclassifications as a child were far less common in the other three conditions. The male ID condition further showed an apparently disproportionate misclassification of the gender of the talker, and the female AD speech condition was also associated with a large number of gender misclassifications. Given these error patterns, we further investigated LENA’s accuracy in classifying talker gender. Figure 4 depicts LENA’s accuracy, for frames of adult speech, at correctly classifying the gender of an adult talker, broken out by the talker’s human-identified gender (male vs. female) and the addressee condition (ID vs. AD).

Effects of Talker Gender (Female, Male) and Addressee (AD, ID) on accuracy of gender classification within the subset of human-identified adult speech frames correctly classified as adult speech by LENA. Boxplots and associated scatter plots highlight mean accuracy and variability across families (indicated by numbers in the scatterplot)

Rigorous statistical testing bears out what is apparent in the figure, i.e., that error in LENA’s classification of an adult talker’s gender differed as a function of talker gender and addressee condition. The statistical analysis was done on the subset of adult speech frames which were correctly classified by LENA as “adult speech” (i.e., FAN or MAN). We constructed a mixed effects logistic regression model with a categorical, binomially distributed dependent variable in which, for each human-identified adult speech frame which LENA had classified as adult speech (FAN or MAN), the dependent variable value was coded as 1 if LENA correctly classified the gender as the same that humans had identified, and as 0 otherwise. Our model also included categorical predictor variables of (human-identified) talker gender (with female set as the baseline) and addressee (ID vs. AD; with AD set as the baseline). A random intercept term for the effect of each family was also included.

As shown in Table 8, statistical modeling revealed that gender classification for adult speech frames was significantly affected both by Gender and Addressee, and by a significant interaction between these. The significant effect of Addressee (p < .001) suggested that classifying gender for adult female ID speech was eight times better (with odds of correct classification of ~53:1 [=exp(1.851)*exp(2.126)]) than for adult female AD speech (with odds of ~6:1 [=exp(1.851)]). Further, the significant effect of Gender (p < .001) suggested that classification of gender for adult male AD speech was four times better (odds of ~27:1 [=exp(1.851)*exp(1.438)]) than for adult female AD speech (odds of ~6:1 [=exp(1.851)]). Finally, the significant interaction between Gender and Addressee (p < .001) meant that Addressee did not affect the relative accuracy of gender classification equally for adult male and adult female speech. Rather, adult male ID speech had an odds of correct gender classification of about 3.6:1 [=exp(1.851)*exp(1.438)*exp(2.126)*exp(-4.124)]. As such, LENA classified gender for adult male ID speech more poorly than any other condition; the odds of correct gender classification were about two times higher for adult female AD speech, seven times higher for adult male AD speech, and 14 times higher for adult female ID speech than in the adult male ID speech condition.
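The relative comparisons in this paragraph are ratios of condition-level odds. A short Python sketch, using only the coefficient values quoted in the text (1.851, 1.438, 2.126, −4.124), shows how the "times higher" figures relative to adult male ID speech arise; the variable and function names are assumptions for illustration, and the family-level random intercept is ignored.

```python
import math

# Coefficients on the log-odds scale, as quoted in the text.
B0, B_MALE, B_ID, B_MALE_ID = 1.851, 1.438, 2.126, -4.124

def gender_odds(male, id_speech):
    """Odds of correct gender classification (baseline: female, adult-directed)."""
    log_odds = B0 + male * B_MALE + id_speech * B_ID + male * id_speech * B_MALE_ID
    return math.exp(log_odds)

reference = gender_odds(1, 1)  # adult male ID speech, the poorest condition
ratios = {
    "female AD": gender_odds(0, 0) / reference,
    "male AD": gender_odds(1, 0) / reference,
    "female ID": gender_odds(0, 1) / reference,
}
```

The reference odds are about 3.6 to 1, and the three ratios come out near 1.75, 7.4, and 14.7, which the text reports in rounded or truncated form as two, seven, and 14 times higher.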

Accuracy in LENA’s Adult Word Count measure

Figure 5 presents the percent over- or underestimation of LENA’s Adult Word Count compared to the word count from human listeners for sampled audio. A value of 100% means LENA’s Adult Word Count perfectly agrees with human word count. The mean LENA Adult Word Count as a percentage of the human word count was M = 147%, indicating an average 47% overestimation in word count by LENA relative to human word counts. The median overestimation was 31% (the difference between the mean and the median is largely driven by three families – excluding these families generated a mean = 29% overestimation, a value in line with the median). LENA word counts ranged from 83% to 310% of human word counts (SD = 56%). Table 5 shows that 22/23 families had greater than 10% difference between LENA’s Adult Word Count and human word counts (either over- or underestimation). Surprisingly, for 7/23 (30%) of the families, the overestimation was greater than 50%. Nevertheless, in keeping with prior findings, LENA Adult Word Count and human adult word counts were correlated with one another [r(21) = .86, p < .001] (Footnote 11), meaning that both the human count and LENA count tended to rise together, in spite of the overestimation by LENA. Similarly, the ranking of participants based on LENA’s Adult Word Count and human word counts showed a significant correlation (rs(21) = .46, p = .029), suggesting that the ranked order of word counts from humans and LENA was somewhat consistent despite the large and variable errors we observed.
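The three summary quantities used here (LENA count as a percentage of the human count, the Pearson correlation, and the rank-based Spearman correlation) can be computed as in the Python sketch below. The word counts are made up for illustration and are not the study's data; the function names are assumptions.

```python
import math

def pearson(x, y):
    """Pearson product-moment correlation."""
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def average_ranks(values):
    """Ranks starting at 1, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks

def spearman(x, y):
    """Spearman correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))

# Made-up word counts for four hypothetical families.
human_words = [100, 200, 300, 400]
lena_words = [150, 260, 600, 380]
percent_of_human = [100 * l / h for l, h in zip(lena_words, human_words)]
r = pearson(human_words, lena_words)
rs = spearman(human_words, lena_words)
```

The toy data illustrate the point made in the text: counts can correlate across families even when individual families are over- or underestimated by very different amounts (here 95% to 200% of the human count).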

Box plots showing variability in error between LENA Adult Word Count and human adult word count. Values represent the percent of over- or underestimation by LENA (LENA/Human), such that the dashed line at 100% represents perfect agreement between LENA and human word counts. Values below this line represent underestimation and values above represent overestimation.
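To make the percent-of-human metric concrete, and to illustrate how a high correlation can coexist with consistent overestimation, the following Python sketch may help. The per-family counts are hypothetical values invented for illustration; they are not data from this study, and the helper names are assumptions.

```python
from statistics import mean, median

# HYPOTHETICAL per-family word counts, invented for illustration only.
human_counts = [400, 650, 900, 1200, 1500]
lena_counts = [520, 800, 1500, 1450, 2400]


def percent_of_human(lena: float, human: float) -> float:
    """LENA count as a percent of the human count (100 = perfect agreement)."""
    return 100.0 * lena / human


def pearson_r(xs, ys) -> float:
    """Pearson correlation, computed directly from its definition."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


ratios = [percent_of_human(l, h) for l, h in zip(lena_counts, human_counts)]

print("percent of human count per family:", [round(r) for r in ratios])
print("mean:", round(mean(ratios)), "median:", round(median(ratios)))
print("Pearson r:", round(pearson_r(human_counts, lena_counts), 2))
```

In this toy data set every family is overestimated, yet the two sets of counts remain strongly correlated, since correlation is insensitive to a consistent multiplicative or additive bias.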

Relationship between classification accuracy and Adult Word Count

Given that the Adult Word Count is preceded by, and depends on, the classification step, we expected that accuracy for classifying frames as “adult speech” would significantly influence the accuracy of LENA’s Adult Word Count. However, no prior published study has tested or shown such a dependency. To test this, we constructed a generalized linear model in R (using glm) to test the extent to which, across families, the percentage of correct classifications of adult speech and everything else frames (or their interaction) predicted the percentage of over- or underestimation for the LENA Adult Word Count (see above). All variables were scaled and centered. Table 9 shows the results of this statistical modeling. Accuracy of classification of everything else frames significantly predicted Adult Word Count accuracy, with a large effect size (r = −0.77) (see Footnote 12). There were no other significant effects; we return to this point in the Discussion (see Footnote 13).

Given this finding relating overall everything else classification accuracy to overall Adult Word Count error, we sought to identify how classification accuracy interacted with the additional factors of gender and addressee on a frame by frame basis. Therefore, a generalized linear mixed effect regression model was constructed to predict the continuous dependent variable of signed per-frame Adult Word Count error. The model included categorical predictor variables for each adult speech frame consisting of Talker Gender (with female as the baseline), Addressee (ID vs. AD, with AD as baseline), Classification Accuracy (incorrect vs. correct, with incorrect as baseline), and all possible interactions (see Method). The model included a random intercept-only effect term to account for clustering by family. This model was reduced through iterative elimination of nonsignificant interaction terms starting with the three-way interaction until a likelihood ratio test revealed that the next simpler model was a significantly worse fit, following current best practices for model fitting (Gries, 2016), including an assumption of convergence of the t and z distributions.
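The backward-elimination procedure described above can be sketched as a sequence of likelihood ratio tests between nested models. The Python sketch below is a simplified illustration, not the authors' R code: the log-likelihood values and term labels are hypothetical, and it assumes each step drops exactly one parameter (one degree of freedom), as is the case for an interaction among two-level factors.

```python
import math


def lrt_df1(loglik_full: float, loglik_reduced: float):
    """Likelihood ratio test for nested models differing by one parameter.

    For 1 degree of freedom the chi-square tail probability has the closed
    form erfc(sqrt(stat / 2)).
    """
    stat = max(2.0 * (loglik_full - loglik_reduced), 0.0)
    return stat, math.erfc(math.sqrt(stat / 2.0))


def backward_eliminate(start_loglik, candidate_drops, alpha=0.05):
    """Drop terms in order until removing the next one significantly hurts fit."""
    current = start_loglik
    removed = []
    for term, reduced_loglik in candidate_drops:
        _, p = lrt_df1(current, reduced_loglik)
        if p < alpha:
            break  # the simpler model fits significantly worse: keep the term
        removed.append(term)
        current = reduced_loglik
    return removed


# HYPOTHETICAL log-likelihoods for successively simpler models.
drops = [
    ("three-way interaction", -1000.4),
    ("two-way interaction A", -1001.1),
    ("two-way interaction B", -1006.3),
]
removed_terms = backward_eliminate(-1000.0, drops)
print("terms removed:", removed_terms)
```

In practice each reduced model would be refit to the data after a term is removed; the fixed list of log-likelihoods here stands in for that refitting step.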

The final model (Table 10) showed that Adult Word Count accuracy was significantly affected by Classification Accuracy, which had a large effect on the amount of per-frame signed error; there were also smaller, but still significant, effects of Talker Gender and Addressee, and significant interactions between Talker Gender and Addressee and between Classification Accuracy and Gender. A value of “0” for per-frame signed error would indicate perfect agreement in proportional word counts by humans and LENA. First, incorrectly classified AD frames engendered more negative signed error (b_female = −0.3, b_male = −0.28), that is, a greater deviation in the direction of under-counting, than incorrectly classified ID frames (b_female = −0.23, b_male = −0.24). Moreover, correctly classified frames engendered positive signed error, i.e., over-counting, of a magnitude that depended on the Talker Gender and Addressee. Correctly classified AD frames engendered positive signed error (b_female = +0.06, b_male = +0.05) which was nevertheless smaller in magnitude than the error of ID frames (b_female = +0.13, b_male = +0.09).

Taken together, these results reveal that LENA showed systematically more error in detecting and correctly classifying speech of adult females than speech of adult males. Even under conditions when LENA had accurately classified frames of adult talkers as “adult speech”, LENA was less accurate in registering and counting words of adult females than in counting words of adult males, showing systematically greater over-counting of words of adult females than words of adult males. Finally, there were significantly higher error rates for the LENA Adult Word Count when adult females were directing their utterances to children (i.e., ID condition), compared with any other condition.

Discussion

This study presented an independent assessment of reliability in classification and Adult Word Count from LENA at-home recordings. Independent assessment (i.e., analyses not funded by the LENA Foundation) is a requisite for clinicians and researchers to use this tool with confidence. The current analysis focused on accuracy of audio classification by LENA, accuracy of LENA’s Adult Word Count metric, and the implications of classification errors on Adult Word Count estimates. Our focus on these metrics was due to the developmental importance of quantity and quality of environmental speech and the importance of child-directed speech for language development (e.g., Hoff & Naigles, 2002; Huttenlocher, Haight, Bryk, Seltzer, & Lyons, 1991; Shneidman et al., 2013). LENA’s automatic analysis of Adult Word Count has become widely used to assess the quantity and quality (assessed through addressee) of speech in children’s environments (Romeo et al., 2018; Weisleder & Fernald, 2013). Given the shift towards its use, we sought to provide an independent evaluation of LENA by having human analysts (i) identify when a man, woman, or child produced a speech vocalization; (ii) indicate, for adult talkers, whether the utterance was child-directed or adult-directed; and (iii) count the number of intelligible adult words. We therefore posed the question: What amount of error is “acceptable,” for both research and clinical purposes, for ensuring standards of validity and reliability in order to justify reliance on automatic, machine-based decisions about the amount of language input in a child’s environment?

LENA showed variable – and in some cases quite large – errors in classifying audio as the correct talker (man, woman, or child). The average false negative rate for adult speech frames was 33% (range: 18–55% missed frames). For all 23 families in our sample, LENA was in error on more than 10% of intelligible adult speech frames. Classification accuracy was highest (92%) for audio that did not contain adult speech (everything else). In contrast, human-identified adult female speech was correctly identified by LENA as female speech only 59% of the time.
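The false negative rate reported above comes from comparing human and LENA labels frame by frame, using the 100-ms frames described in the abstract. A minimal Python sketch of that comparison follows; the label strings and the short label sequences are hypothetical and serve only to illustrate the calculation.

```python
FRAME_MS = 100  # frame length stated in the abstract

# HYPOTHETICAL frame labels, one per 100-ms frame, invented for illustration.
human_labels = ["adult_speech", "adult_speech", "adult_speech", "everything_else",
                "everything_else", "adult_speech", "everything_else", "adult_speech"]
lena_labels = ["adult_speech", "everything_else", "adult_speech", "everything_else",
               "adult_speech", "everything_else", "everything_else", "adult_speech"]


def adult_speech_rates(human, lena, target="adult_speech"):
    """Hit rate and false negative rate for frames humans labeled as adult speech."""
    if len(human) != len(lena):
        raise ValueError("label sequences must cover the same frames")
    hits = sum(1 for h, l in zip(human, lena) if h == target and l == target)
    misses = sum(1 for h, l in zip(human, lena) if h == target and l != target)
    if hits + misses == 0:
        raise ValueError("no human-labeled adult speech frames")
    fnr = misses / (hits + misses)
    return {"hit_rate": 1.0 - fnr, "false_negative_rate": fnr}


rates = adult_speech_rates(human_labels, lena_labels)
print(f"{len(human_labels) * FRAME_MS} ms of audio, "
      f"false negative rate = {rates['false_negative_rate']:.0%}")
```

Note that frames LENA labels as adult speech when humans did not (false positives) do not enter the false negative rate; they are captured separately by the accuracy for everything else frames.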

Further, both human-identified gender (male vs. female) and addressee (ID vs. AD) significantly affected the accuracy of LENA’s audio classification. Overall, LENA was significantly better at classifying frames of adult speech as “adult speech” for male voices than for female voices. LENA showed especially high error in classifying adult female speech in the ID condition, in which LENA disproportionately classified frames as a child talker (and thus not as adult speech). Even when LENA accurately identifies audio as adult speech, gender and addressee still affect accurate classification of talker gender. Within correctly classified adult speech frames we found that accuracy for ID speech was high for women but low for men, whereas AD speech accuracy was consistent across genders. Thus, even in cases when LENA accurately identifies the amount of adult speech, variability due to addressee and gender may lead to attribution of adult word count to the incorrect gender. Given typical goals of many research studies and clinical situations to attribute input to the correct talker or talker group, ascribing that input to the wrong talker(s) could lead to incorrect conclusions by researchers or clinicians about who was providing input to the child, and how much input they provided. As one example, if words produced by a male caregiver (say, a single father) were misattributed to a female caregiver, this could lead a clinician to doubt the father’s reports about how much input he was providing to a child, which could in turn lead to needless, ineffectual interventions aiming to increase the amount of input the father provided to the child.

We also showed that these systematic classification errors significantly impacted the accuracy of LENA’s Adult Word Count. On average, LENA overestimated Adult Word Counts by 47% (ranging from undercounting words by 17% to over-counting words by 208%). The correlation observed between human word counts and LENA’s Adult Word Count (r = .86) was well within the range of values reported for prior studies (see Table 1), and the Spearman correlation (rs = .46) showed similar rankings between LENA and human coders. However, the variability in error that we identified for Adult Word Count estimates is concerning, and these significant correlations obscure the problematic overestimation by LENA we observed, highlighting the inadequacy of correlations for assessing reliability.

This is the first paper to have identified the speaker gender and intended addressee as variables that directly affect accuracy of segment classification and Adult Word Count. The fact that our study included both of these dependent measures, while also measuring the false negative (“miss”) rate, allowed us to evaluate how these variables were related to one another, thus going substantially beyond prior work which has examined only subsets of these variables (e.g., Bergelson, Casillas, Soderstrom, Seidl, Warlaumont, & Amatuni, 2019; VanDam & Silbert, 2016). Gender and addressee both interacted with classification accuracy to predict word count error. Interestingly, the relative amount of error across ID vs. AD conditions depended on whether frames had been correctly classified as adult speech. In particular, when human-identified adult speech frames were missed by LENA, the Adult Word Count showed greater error (i.e., more undercounting) when frames were AD compared with when they were ID. However, when adult speech frames were correctly classified, the Adult Word Count showed greater error (i.e., more overcounting) when frames were ID compared with when they were AD. Further, frames of male adult speech generated significantly less error in Adult Word Count than frames of female adult speech for three out of four conditions; only inaccurately classified ID frames showed less error for female than male speech. The patterns we identified suggest that LENA misattributes or misses adult words as a function of the talker’s gender and speech style in part due to systematic errors in classification, and this is especially problematic for ID speech from adult female speakers.

Therefore, a main finding was that adult females talking in ID register were particularly likely to have their speech “missed” (i.e., LENA failed to detect it) for purposes of Adult Word Count; such speech was disproportionately attributed to children. LENA very rarely misattributed the gender of female adult talkers who were addressing children (ID speech). In other words, when female ID speech was accurately identified to be from an adult (as opposed to mistakenly attributed to a child), this adult speech was assigned to the correct gender (“female”) with high accuracy. Adult male speech showed a generally opposite pattern – better detection accuracy but worse gender classification. That is, adult male speech was much more readily detected as “adult speech” (and tended to be more faithfully reflected in Adult Word Counts), but gender classification was quite poor, with male ID speech misattributed to females 14 times more often than the reverse (female adult ID speech being attributed to a male adult).

Across all results of classification and Adult Word Count accuracy, we see striking variability between families. Some of the variability across families in the accuracy with which adults’ speech was classified as “adult speech” depended upon the gender and addressee of the speaker. Speakers of a given gender differ in their typical fundamental frequency ranges; for instance, the distribution of mean F0 values for adult female speakers – even in AD register – ranges from statistically quite low and overlapping with higher-pitched males, to statistically quite high and overlapping with the typical F0 values of children (Hanson, 1997; Hanson & Chuang, 1999; Iseli, Shue, & Alwan, 2006). Classification of speech given this variability is further complicated by variable usage of ID and/or AD registers between speakers. Given prior research suggesting a dependency of LENA’s classification accuracy on F0 (VanDam & Silbert, 2016), we speculate that female talkers who naturally have lower F0 may have produced speech which was better detected than female talkers with higher F0.

Varying degrees of competing environmental noise sources presumably also account for some of the variability in classification error, although this is speculative. Classification errors where TV or young siblings are misclassified as adult speech could significantly alter Adult Word Count accuracy, a concern that may underlie our finding that the rate of correct classification of everything else frames in the “adult speech” analysis significantly predicted Adult Word Count error. In keeping with this idea, we observed that for two of the families with more than 100% overestimation in LENA’s Adult Word Count – relative to the human word count – the error seemed to have been driven by misclassification of TV, while in the third case, it appeared to be due to misclassification of sibling speech as adult speech. Overestimation by LENA has been observed in prior studies due to TV (Xu, Yapanel, et al., 2009c), and during activities in the home (Burgess et al., 2013; or in Table 2 of Soderstrom & Wittebolle, 2013). Additional work will be needed to determine if LENA generates accurate language estimates in particular auditory environments (e.g., quiet settings with little reverberation).