Abstract

Recent evidence has shown that practice recognizing certain objects hurts memories of objects from the same category, a phenomenon called recognition-induced forgetting. In all previous studies of this effect, the objects have been related by semantic category (e.g., instances of vases). However, the relationship between objects in many real-world visual situations stresses temporal grouping rather than semantic relations (e.g., a weapon and getaway car at a crime scene), and temporal grouping is thought to cluster items in models of long-term memory. The goal of the present study was to determine whether temporally grouped objects suffer recognition-induced forgetting. To this end, we implemented a modified recognition-induced forgetting paradigm in which the objects were temporally clustered at study. Across four experiments, we found that recognition-induced forgetting occurred only when the temporally clustered objects were also semantically related. We conclude by discussing how these findings relate to real-world vision and inform models of memory.

Accessing information in long-term memory has negative side effects, such as the forgetting of related information also stored in memory. The understanding of these negative side effects is clearly relevant in applied settings like the judicial system, in which erroneous eyewitness identification remains one of the leading causes of wrongful convictions (Benforado, 2015). This provides a practical call for a more comprehensive understanding of the consequences of accessing visual long-term memories.

One illustration of the negative consequences of accessing visual objects in long-term memory is recognition-induced forgetting (Maxcey & Woodman, 2014). This paradigm involves recognizing an object stored in long-term memory and results in impaired memory for semantically related objects from the same category (e.g., other vases, other lamps, other chairs). This negative consequence of accessing memories exists in children (Maxcey & Bostic, 2015), young adults (Maxcey & Woodman, 2014), and older adults (Maxcey, Bostic, & Maldonado, 2016). All studies of recognition-induced forgetting thus far (Maxcey, 2016; Maxcey & Bostic, 2015; Maxcey et al., 2016; Maxcey & Woodman, 2014) have used semantically related objects to find the induced forgetting of related objects. However, the relationship between objects in many real-world visual recognition tasks may be temporal (i.e., clustered by co-occurring in time) rather than semantic (i.e., clustered by categorical relatedness). For example, an eyewitness to a crime may need to remember the bank robber, getaway car, and gun. These items are from different semantic categories (e.g., face, car, gun), but they were all encountered at the same point in time (e.g., at the time of the bank robbery). The goal of the present study was to determine whether temporally grouped objects suffer recognition-induced forgetting, as do semantically grouped objects.

There are reasons to expect that recognition-induced forgetting also operates across temporally grouped objects. A large body of work has described evidence for both temporal and spatial groupings in episodic memory (Howard & Kahana, 2002; Kahana, Howard, & Polyn, 2008; Kraus et al., 2015). The activation of an object in long-term memory appears to result in the activation of associated, context-based long-term memory representations (Hirsh, 1974; Miyashita, 1993). This can be measured in neural activity in the medial temporal lobe and sensory cortex (Kok, Jehee, & de Lange, 2012; Schapiro, Kustner, & Turk-Browne, 2012; Turk-Browne, Simon, & Sederberg, 2012). The brain then uses this predictive context-based information to facilitate performance (Olson & Chun, 2001) or to prune memory representations of objects that are predicted but then fail to appear (Kim, Lewis-Peacock, Norman, & Turk-Browne, 2014).

On the other hand, there are also reasons to expect that recognition-induced forgetting does not spread among items that are temporally grouped. First, all studies of recognition-induced forgetting have grouped objects by semantic category (e.g., practicing vases induces the forgetting of other vases). Currently there is no evidence that recognition-induced forgetting operates over any other type of grouping (e.g., temporal). Second, several different families of dual-process models of recognition memory posit that discriminations based on familiarity do not operate over temporal groupings (Brown & Aggleton, 2001; Yonelinas, 2002), but instead are based on semantic memory (Nyberg, Cabeza, & Tulving, 1996; Tulving, 1982, 1985; Tulving & Markowitsch, 1998; Tulving & Schacter, 1990; Wheeler, Stuss, & Tulving, 1997). If recognition-induced forgetting operates over familiarity discriminations, then temporally grouped objects should not suffer from induced forgetting following recognition practice.

In summary, whether or not this type of forgetting occurs with temporally grouped items is not only important in the real world, but will provide leverage regarding the type of memory mechanisms at play in this forgetting effect. Here we tested whether practice recognizing one item in a temporally related pair (e.g., the pink umbrella from a “pink umbrella + orange vase” pair) induced the forgetting of the other (i.e., related) item from the pair (e.g., the orange vase).

General procedure

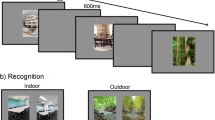

All four experiments in this study followed the same general procedure. The sequence of experimental events is shown in Fig. 1 (see the Appendix, Figs. 7, 8, and 9 for an example of the full stimulus set for one participant in Exps. 1–3, and Figs. 10, 11, and 12 for an example of the full stimulus set for one participant in Exp. 4).

Fig. 1 General method for Experiments 1–3. The experiment began with a study phase during which participants were presented with pairs of objects. The pairs consisted of either two randomly paired objects (known as a temporal-only pair) or two objects from the same semantic category (known as a temporal-plus-semantic pair). In the recognition practice phase, participants reported which object in a two-alternative forced choice task they remembered from the study phase. The object was either from a temporal-only pair or a temporal-plus-semantic pair. Finally, in the test phase, participants completed an old–new recognition judgment in response to sequentially presented objects. Old objects in the test phase had originally belonged to either a temporal-only pair or a temporal-plus-semantic pair. Novel test lures were drawn from categories that had either been presented in a temporal-only pair or a temporal-plus-semantic pair.

The experiment began with the study phase, in which pairs of objects were presented on the screen for 5,000 ms each, interleaved with 500-ms fixation crosses. Participants were instructed to remember the pairs of objects for a later memory test. In Experiments 1–3, the critical manipulation was the relationship between these paired objects. First, half of the pairs of objects (14 trials) were related to one another only in that they were presented at the same point in time on the screen. These pairs were called temporal-only pairs. For example, an umbrella and a vase presented on the screen together would be a temporal-only pair. Second, the remaining 14 pairs of objects were related to one another in that they were presented at the same point in time and drawn from the same semantic category. These pairs were called temporal-plus-semantic pairs. In the present study, we used the term semantic to refer to membership in the same object category (e.g., mugs, lamps, chairs). For example, two mugs that were presented on the screen at the same time would be a temporal-plus-semantic pair. After 28 trials of the study phase (see the Appendix, Fig. 7), participants completed a 5-min visual distractor task of searching for the protagonist in Where’s Waldo books.

Next, in the practice phase, participants were shown two objects on the screen and instructed to indicate which object they had seen earlier in the experiment. One of the objects was from a pair in the study phase, and the other was a novel lure from the same semantic category (e.g., a red ball as the practiced object and a yellow ball as a novel lure). Responses to the two-alternative forced choice recognition task were made with the right index finger pressing the leftmost button on the response box if the object on the left was familiar, and the second button from the left with the right middle finger if the object on the right was familiar. Half of the pairs from the study phase had an object that would be practiced during the practice phase. Thus, only half of all pairs (14/28) had an object that was practiced. Of the 14 pairs that had an object practiced, seven were temporal-only pairs, and the remainder were temporal-plus-semantic pairs. The 14 practiced objects were practiced twice, on two different trials, against a different novel practice lure on each trial (see the Appendix, Fig. 8). After the 28 trials of the practice block, the participants completed another 5-min visual distractor task of searching for Waldo.

Finally, in the test phase, one object was presented on the screen at a time, and participants were asked to make an old–new recognition judgment. If the object was old (previously seen in the experiment), participants were instructed to respond “old” by pressing the leftmost key on the response box with their right index finger. If the object was new (never before seen in the experiment), participants were instructed to respond “new” by pressing the second button from the left on the response box with their right middle finger.

The test phase included 84 trials, with half (42) presenting new objects and half (42) old objects. The old objects fell into three general classes: (1) 14 practiced objects: objects that were shown during the study phase and then practiced twice during the practice phase; (2) 14 related objects: objects that were not practiced, but the other object from its pair was practiced; and (3) 14 baseline objects: objects that were from categories that had never been practiced. The assignment of specific objects to these three classes was counterbalanced. Each of these three general classes of objects was further divided on the basis of the type of pair to which they had belonged. Specifically, each class of 14 objects consisted of seven objects from a temporal-only pair and seven objects from a temporal-plus-semantic pair. Again, the temporal-only pairs consisted of two objects presented together during the study phase that were not semantically related (e.g., vase and umbrella), whereas temporal-plus-semantic pairs consisted of two objects presented together during the study phase that were also semantically related (e.g., two different coffee mugs). The 42 new objects were drawn from the same semantic categories as the 42 old objects (see the Appendix, Fig. 9).
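The composition of the test phase described above can be checked with a short tally. The following is only an illustrative sketch: the labels and the `build_test_design` helper are hypothetical names introduced here, not part of the original experimental materials.

```python
from collections import Counter
from itertools import product

CLASSES = ("practiced", "related", "baseline")
PAIR_TYPES = ("temporal-only", "temporal-plus-semantic")
OBJECTS_PER_CELL = 7  # seven objects per class x pair-type cell, per the text


def build_test_design():
    """Return one (status, object_class, pair_type) tuple per test trial."""
    old = [
        ("old", cls, pair)
        for cls, pair in product(CLASSES, PAIR_TYPES)
        for _ in range(OBJECTS_PER_CELL)
    ]
    # Each old object is matched by one new lure from the same semantic
    # category; lures have no practiced/related/baseline status of their own.
    new = [("new", None, pair) for _, _, pair in old]
    return old + new


design = build_test_design()
status_counts = Counter(status for status, _, _ in design)
class_counts = Counter(cls for status, cls, _ in design if status == "old")
print(len(design), status_counts["old"], status_counts["new"])
print(dict(class_counts))
```

Under these assumptions the tally gives 84 trials in total, 42 old and 42 new, with 14 old objects in each of the three classes.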

Experiment 1

Method

Participants

Twenty-six healthy young adults (mean age 19.5 years) participated for course credit. The participants reported normal color vision and normal or corrected-to-normal visual acuity. Informed consent was obtained prior to the beginning of the experiment, and all procedures were approved by the Institutional Review Board.

Stimuli and procedure

Participants were comfortably seated at a viewing distance of 80 cm, controlled by a forehead rest. The stimuli were drawn from public domain images downloaded from Google Images (http://images.google.com), viewed on a white background, each subtending 4.85° × 4.85° of visual angle. We followed exactly the procedure outlined above in the General Procedure section.

Data analysis

The primary dependent variable for our recognition data was hit rate (i.e., hits for practiced, related, and baseline objects). To examine the conditions under which recognition-induced forgetting occurs, we implemented preplanned t tests between the hit rates for baseline and related objects. We found the same pattern of results using A' (Snodgrass, Levy-Berger, & Haydon, 1985). See Table 1 for the A' and $B''_D$ values. To provide a way of quantifying the support for the null or alternative hypothesis, we calculated scaled JZS Bayes factors for all t tests (as specified in Rouder, Speckman, Sun, Morey, & Iverson, 2009), as well as Cohen’s d measure of effect size for all significant t tests.
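For readers unfamiliar with these nonparametric measures, the sketch below computes A' and $B''_D$ from a hit rate and a false alarm rate. It assumes the commonly used Pollack–Norman/Grier form of A' and Donaldson's form of $B''_D$; the text does not state which exact formulas were applied, and the function names and example rates are illustrative only.

```python
def a_prime(hit_rate: float, fa_rate: float) -> float:
    """Nonparametric sensitivity A' (0.5 = chance, 1.0 = perfect)."""
    h, f = hit_rate, fa_rate
    if h == f:
        return 0.5
    if h > f:
        return 0.5 + ((h - f) * (1 + h - f)) / (4 * h * (1 - f))
    # Below-chance case (more false alarms than hits): mirrored formula.
    return 0.5 - ((f - h) * (1 + f - h)) / (4 * f * (1 - h))


def b_double_prime_d(hit_rate: float, fa_rate: float) -> float:
    """Nonparametric bias B''_D (-1 = liberal, 0 = neutral, +1 = conservative)."""
    h, f = hit_rate, fa_rate
    miss_cr = (1 - h) * (1 - f)
    hit_fa = h * f
    if miss_cr + hit_fa == 0:
        return float("nan")  # undefined when both products are zero
    return (miss_cr - hit_fa) / (miss_cr + hit_fa)


# Illustrative rates only (assumed values, not taken from Table 1).
example_a = a_prime(0.79, 0.1246)
example_b = b_double_prime_d(0.79, 0.1246)
print(round(example_a, 3), round(example_b, 3))
```

Because hit rate alone does not separate sensitivity from response bias, measures of this kind are a useful check that a hit-rate difference is not driven by a shift in willingness to respond "old."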

Results

We first confirmed that participants had successfully practiced objects during the recognition practice phase. The average performance during the recognition practice phase was 90%. We further examined performance during the recognition practice phase by comparing memory for objects from temporal-only pairs (90%) to memory for objects from temporal-plus-semantic pairs (90%) and found no difference in recognition practice performance, t(25) = 1.00, p = .327, JZS Bayes factor = 4.83 in favor of the null hypothesis, meaning that the null hypothesis was approximately five times more likely than the alternative hypothesis. Therefore, any differences found herein between these two conditions cannot be explained by performance during the recognition practice phase.

To examine the relationship between the two conditions (temporal-only and temporal-plus-semantic), we submitted the data to a 2 × 2 repeated measures analysis of variance (ANOVA) with the factors Relatedness (temporal-only and temporal-plus-semantic) and Memory Change (forgetting, calculated as baseline – related; remembering, calculated as practiced – baseline). We found main effects of relatedness, F(1, 25) = 9.470, p = .005, $\eta_p^2$ = .275, and memory change, F(1, 25) = 12.704, p = .002, $\eta_p^2$ = .337, but no interaction, F(1, 25) = 0.692, p = .413, $\eta_p^2$ = .027. The average false alarm rate was 12.46%.
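The two memory change scores and the reported effect sizes follow from simple arithmetic, sketched below. The helper names are hypothetical. The hit rates passed in are the Experiment 1 group means for temporal-plus-semantic objects, used here only for illustration, since the actual analysis would compute the scores per participant. The effect size uses the standard identity for partial eta squared given F and its degrees of freedom.

```python
def memory_change(practiced: float, related: float, baseline: float) -> dict:
    """Forgetting and remembering scores as defined for the ANOVA factor."""
    return {
        "forgetting": baseline - related,     # positive = related objects impaired
        "remembering": practiced - baseline,  # positive = practiced objects improved
    }


def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Experiment 1 group means for temporal-plus-semantic objects (illustrative use).
scores = memory_change(practiced=0.98, related=0.71, baseline=0.79)
print({name: round(value, 2) for name, value in scores.items()})

# Main effects and interaction reported for the 2 x 2 ANOVA in Experiment 1.
for f_value in (9.470, 12.704, 0.692):
    print(round(partial_eta_squared(f_value, 1, 25), 3))
```

Recomputing the effect size from F in this way is a quick consistency check on reported ANOVA results.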

Temporal-plus-semantic objects

Because in this experiment we used a novel manipulation of temporal groupings, we next confirmed that we could find the typical benefit for practiced objects and impairment for related objects with the temporal-plus-semantic objects. Figure 2a shows a significant effect of objects tested, F(2, 50) = 27.83, p < .001, $\eta_p^2$ = .527, driven by improved recognition of practiced objects (98%) relative to baseline objects (79%) [t(25) = 4.77, p < .001, d = 1.35, scaled JZS Bayes factor = 379.6 in favor of the alternative hypothesis, meaning that the alternative hypothesis is approximately 380 times more likely than the null], and impaired recognition of related objects (71%) relative to baseline objects (79%) [t(25) = 2.11, p = .045, d = 0.34, scaled JZS Bayes factor = 1.37 in relatively weak favor of the alternative hypothesis], demonstrating the standard recognition-induced forgetting effect (Maxcey & Woodman, 2014), but in this modified paradigm.

Fig. 2 Hit rates across the three classes of objects, to the “old” memory test objects in the test phase of Experiment 1: (a) Temporal-plus-semantic objects. (b) Temporal-only objects. In this and all subsequent data figures, the x-axis intersects the y-axis at the hit rate for baseline objects, and error bars represent 95% confidence intervals as described by Cousineau (2005), with Morey’s correction applied (Morey, 2008).
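The within-subject error bars mentioned in the caption can be sketched as follows. This is a minimal illustration of the Cousineau normalization with Morey's correction, not the authors' analysis script. The function name and the toy hit rates are hypothetical, and the critical t value is passed in by hand (for example, about 2.060 for 25 degrees of freedom) to keep the example free of external libraries.

```python
from math import sqrt
from statistics import mean, stdev


def cousineau_morey_half_widths(data, t_crit):
    """Half-widths of within-subject CIs, one per condition.

    data: list of per-participant lists, one value per condition.
    t_crit: two-tailed critical t for n_participants - 1 degrees of freedom.
    """
    n_cond = len(data[0])
    grand_mean = mean(value for row in data for value in row)
    # Cousineau (2005): remove each participant's own mean, add the grand mean.
    normalized = [[value - mean(row) + grand_mean for value in row] for row in data]
    # Morey (2008): rescale to undo the bias introduced by the normalization.
    correction = sqrt(n_cond / (n_cond - 1))
    half_widths = []
    for j in range(n_cond):
        column = [row[j] for row in normalized]
        sem = stdev(column) / sqrt(len(column))
        half_widths.append(t_crit * sem * correction)
    return half_widths


# Toy hit rates (practiced, related, baseline) for four hypothetical participants.
toy = [
    [0.95, 0.70, 0.80],
    [1.00, 0.65, 0.75],
    [0.90, 0.75, 0.85],
    [1.00, 0.60, 0.70],
]
print(cousineau_morey_half_widths(toy, t_crit=3.182))  # t critical for df = 3
```

The normalization removes between-participant differences in overall accuracy, so the intervals reflect only the variability relevant to comparisons among the practiced, related, and baseline conditions.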

Temporal-only objects

Having established that recognition-induced forgetting can occur for semantically related objects that are temporally grouped, we next examined memory for objects that were temporally but not semantically grouped. Figure 2b shows the significant effect of objects tested, F(2, 50) = 17.22, p < .001, $\eta_p^2$ = .408, driven by improved test phase recognition of practiced objects (99%) relative to baseline objects (81%) [t(25) = 5.33, p < .001, d = 1.51, scaled JZS Bayes factor = 1,409.6 in favor of the alternative hypothesis], but not test phase recognition of related objects (84%) relative to baseline objects (81%) [t(25) = 0.61, p = .458, scaled JZS Bayes factor = 4.07 in favor of the null hypothesis, meaning the null hypothesis is approximately four times more likely than the alternative].

Discussion

In Experiment 1, recognition-induced forgetting occurred for temporal-plus-semantic objects, but not for objects that were temporally but not semantically grouped. Indeed, for temporal-only objects the difference ran numerically in the opposite direction: Memory was higher for related objects than for baseline objects.

Experiment 2

The objects in Experiment 1 were randomly paired, and participants were given no instruction to remember them together. As a result, we may have failed to find evidence of recognition-induced forgetting for temporal-only object pairs due to the absence of explicit instructions to remember the paired objects together. In Experiment 2, we tested the possibility that recognition-induced forgetting would occur for temporally grouped objects if participants were given explicit instructions to remember the object pairs as if they were being encountered together in the same room.

By some conventions, a JZS Bayes factor below 3 (as we found in Exp. 1, in favor of the alternative hypothesis for recognition-induced forgetting of temporal-plus-semantic objects) is considered weak evidence of an effect. Therefore, we also ran Experiment 2 to confirm that recognition-induced forgetting did indeed occur for semantically related objects.

Method

Participants

A new group of 26 individuals from the same subpopulation participated (mean age = 19.6 years).

Procedure

The procedure was identical to that of Experiment 1, with only the following change. Participants were instructed to remember each pair of objects as if they were being encountered together in the same dorm room. Participants were told that every time the screen changed and two new objects appeared, they were seeing objects encountered in a different person’s dorm room.

Results

We first confirmed that participants successfully practiced the objects during the recognition practice phase. The average performance during the recognition practice phase was 91%. We further examined performance during the recognition practice phase by comparing memory for objects from temporal-only pairs (91%) to memory for objects from temporal-plus-semantic pairs (90%) and found no difference in recognition practice performance, t(25) = 1.02, p = .317, scaled JZS Bayes factor = 3.02 in favor of the null hypothesis. Therefore, any differences found herein between these two conditions cannot be explained by performance during the recognition practice phase.

We found a main effect of memory change, F(1, 25) = 20.336, p < .001, $\eta_p^2$ = .449, but not one of relatedness, F(1, 25) = 0.440, p = .513, $\eta_p^2$ = .017, and a significant interaction, F(1, 25) = 9.015, p = .006, $\eta_p^2$ = .265. The average false alarm rate was 12.46%.

Temporal-plus-semantic objects

Figure 3a shows the significant effect of objects tested, F(2, 50) = 35.70, p < .001, $\eta_p^2$ = .678, driven by improved recognition of practiced objects (97%) relative to baseline objects (75%) [t(25) = 6.88, p < .001, d = 1.62, JZS Bayes factor = 50,086.04 in favor of the alternative] and impaired recognition of related objects (66%) relative to baseline objects (75%) [t(25) = 2.86, p = .008, d = 0.46, JZS Bayes factor = 5.45 in favor of the alternative]. Having established that recognition-induced forgetting did occur for semantically related objects that are temporally grouped, we next examined memory for objects that were temporally but not semantically grouped.

Fig. 3 Experiment 2 hit rates across the three classes of objects, to the “old” memory test objects in the test phase: (a) Temporal-plus-semantic objects. (b) Temporal-only objects.

Temporal-only objects

Figure 3b shows that the significant effect of objects tested, F(2, 50) = 31.31, p < .001, $\eta_p^2$ = .556, was driven by improved test phase recognition of practiced objects (97%) relative to baseline objects (65%) [t(25) = 8.33, p < .001, d = 2.06, JZS Bayes factor = 1,165,993 in favor of the alternative], but not test phase recognition of related objects (69%) relative to baseline objects (65%) [t(25) = 0.735, p = .469, JZS Bayes factor = 3.77 in favor of the null].

Discussion

The results of Experiment 2 continue to indicate that recognition-induced forgetting occurs for semantically, but not temporally, grouped objects, with a numerical difference in the opposite direction.

Experiment 3

It is possible that participants did not show recognition-induced forgetting of temporal-only groupings in Experiments 1 and 2 because they were unable to remember the temporal groupings from the study phase. Specifically, if participants were not remembering the object pairs from the study phase, that would explain why we did not get recognition-induced forgetting of temporal-only pairs. Indeed, evidence of recognition-induced forgetting for temporal-plus-semantic pairs could simply be due to the semantic relationship and not to the temporal relationship. To rule out this alternative explanation, we added a fourth phase in Experiment 3 to test memory for the originally studied pairs at the end of the experiment. If participants had above-chance memory for these originally studied pairs, then the lack of recognition-induced forgetting for temporally grouped objects could not be due to a failure to remember the study phase pairs throughout the experiment.

Method

Participants

The participants were 44 new healthy young adults (mean age of 23.4 years). Experiment 3 included more participants because it had fewer trials in the novel analysis of memory for pairs introduced in this experiment.

Procedure

The procedure was identical to that of Experiment 2, with the following changes. After the third phase of the experiment, participants were presented with a fourth phase in which their memory for the original pairs was tested. The pairs memory test phase included 28 trials. Each trial presented a pair of objects. Half of the trials presented pairs that had originally been studied pairs from the study phase. For these pairs, the participant should correctly respond “yes” that they had seen that pair in the study phase. The remaining trials in the memory test phase were modified such that one of the objects was from an originally studied pair and the other object was a novel object from the same category as the original object it replaced. For example, if an originally studied temporal-only pair consisted of a yellow backpack and a pink coatrack, a novel pairing would be a pink coatrack and a green backpack. If an originally studied temporal-plus-semantic pair consisted of a red bowtie and a blue bowtie, a novel pairing would be a blue bowtie and a green bowtie. These novel pairs warranted a “no” response from participants, because they were not originally studied pairs. The specific 14 pairs that were changed were counterbalanced across participants. Half of the trials in this phase tested memory for temporal-only pairs, whereas the remaining trials consisted of temporal-plus-semantic pairs.

Results

We first confirmed that participants successfully practiced objects during the recognition practice phase. The average performance during the recognition practice phase was 94%. We further examined performance during the recognition practice phase by comparing memory for objects from temporal-only pairs (95%) to memory for objects from temporal-plus-semantic pairs (93%) and found no difference in recognition practice performance, t(43) = 1.27, p = .210, JZS Bayes factor = 2.89 in favor of the null hypothesis. Therefore, any differences found herein between these two conditions cannot be explained by performance during the recognition practice phase.

We found main effects of memory change, F(1, 43) = 8.885, p = .005, $\eta_p^2$ = .171, and relatedness, F(1, 43) = 16.338, p < .001, $\eta_p^2$ = .275, as well as a significant interaction, F(1, 43) = 17.652, p < .001, $\eta_p^2$ = .291. The average false alarm rate was 14.23%.

Temporal-plus-semantic objects

We first sought to confirm that we could replicate Experiments 1 and 2 in Experiment 3. Figure 4a shows the significant effect of objects tested, F(2, 86) = 98.17, p < .001, $\eta_p^2$ = .695, driven by improved recognition of practiced objects (97%) relative to baseline objects (76%) [t(43) = 6.93, p < .001, d = 1.39, scaled JZS Bayes factor = 805,633.8 in favor of the alternative hypothesis], and impaired recognition of related objects (56%) relative to baseline objects (76%) [t(43) = 6.51, p < .001, d = 1.07, scaled JZS Bayes factor = 214,076.2 in favor of the alternative hypothesis].

Fig. 4 Experiment 3 hit rates across the three classes of objects, to the “old” memory test objects in the test phase: (a) Temporal-plus-semantic objects. (b) Temporal-only objects.

Temporal-only objects

Figure 4b shows that the significant effect of objects tested, F(2, 86) = 42.95, p < .001, $\eta_p^2$ = .500, was driven by improved recognition of practiced objects (98%) relative to baseline objects (68%) [t(43) = 7.74, p < .001, d = 1.66, scaled JZS Bayes factor = 10,252,237 in favor of the alternative hypothesis], but not by test phase recognition of related objects (69%) relative to baseline objects (68%) [t(43) = 0.75, p = .748, scaled JZS Bayes factor = 4.70 in favor of the null hypothesis]. Thus, we replicated the pattern of results from Experiments 1 and 2.

Memory for pairs

We found that responses to old pairs in the fourth phase of the experiment averaged 76%, indicating that participants had memory significantly above 50% chance for the temporal groupings shown at the beginning of the experiment during the study phase [t(43) = 16.09, p < .001, d = 3.43, scaled JZS Bayes factor = $5.716049 \times 10^{16}$ in favor of the alternative hypothesis].

Recall that recognition-induced forgetting occurred for temporal-plus-semantic pairs but not for temporal-only pairs. The induced forgetting of temporal-plus-semantic pairs suggests that memory should be lower for those pairs than for temporal-only pairs, which did not suffer recognition-induced forgetting. Indeed, memory for temporal-only object pairs (84%) was reliably higher than memory for temporal-plus-semantic pairs (69%) [t(43) = 6.99, p < .001, d = 1.19, scaled JZS Bayes factor = 973,422.2 in favor of the alternative hypothesis], consistent with the evidence from Experiments 1 and 2 that recognition practice differentially influenced the two types of pairs, inducing the forgetting of temporal-plus-semantic pairs but not of temporal-only pairs.

Discussion

The results of Experiment 3 ruled out the possibility that participants did not remember the pairs from the study phase and continued to indicate that recognition-induced forgetting occurs for semantically, but not for temporally, grouped objects. It is possible that, by the time memory for pairs was tested in this fourth phase, memory for the pairs had been contaminated by the practice and study phases. However, any interference with representations of these pairs would only have served to decrease memory for them, and memory for pairs from the study phase was still above chance when tested during the fourth phase. Therefore, Experiment 3 ruled out the alternative explanation that recognition-induced forgetting did not occur because the temporal groupings were not remembered.

Experiment 4

The objects used in Experiments 1–3 had been randomly paired objects (e.g., a glove and a vase). These randomly paired objects lacked the contextual cues typical of real-world scenes, such as that a toaster and a blender co-occur in a kitchen. In Experiment 4, we presented pairs of objects that would be found together in a location (e.g., kitchen), to strengthen the temporal grouping of the studied pairs. These represented object pairs that are weakly semantically related, since they were not from the same category of objects, but from related categories. This was our attempt to give temporal grouping a semantic boost to once again try to observe forgetting between the objects.

Method

Participants

The participants were 26 new, healthy young adults (mean age of 24.1 years).

Stimuli and procedure

Experiment 4 was identical to Experiment 2, with the following changes, shown in Fig. 5. The study phase consisted of 24 trials. Each trial consisted of two objects that belonged to a scene (e.g., tractor and chicken). Thus, all of the objects were temporal-only pairs. There were no temporal-plus-semantic pairs in this experiment. The name of the scene appeared in text at the top of the screen (e.g., “farm”). Participants were encouraged to remember the two objects as if they had encountered them in the scene named on the screen.

Fig. 5 In Experiment 4, the pairs presented during the study phase were grouped by scene. The scene name was presented above the pair. All objects in Experiment 4 were temporal-only related (e.g., tractor and chicken). There were no temporal-plus-semantic pairs (i.e., none of the pairs consisted of two objects from the same category, such as two tractors or two chickens). During the recognition practice phase, participants were presented with two objects that would be plausible to find in the named scene (e.g., both a tractor and a bale of straw would plausibly be found in a farm scene) and asked which object they remembered studying in the farm scene. At test, participants were sequentially presented with objects and the name of a scene in which an object was likely to be found. Participants made old–new recognition judgments regarding whether they had indeed previously seen the presented object in the named scene.

The practice phase consisted of 24 trials. During the practice phase, two objects and a scene name were again presented. One of the two objects was the practiced object (e.g., tractor), and the other was a novel practice lure that also could have been found in the scene (e.g., straw bale). Finally, the test phase consisted of 96 trials. During each test trial, the name of the scene where the object was likely to have been found was presented on the screen, and participants were required to respond whether they had encountered that specific object previously in the experiment in the named scene. Half of the test trials presented objects from the study phase, with the remaining half presenting new objects that could have also been found in the named scene. The 48 objects from the original study phase were divided into 24 baseline objects, 12 related objects, and 12 practiced objects.

Results

Figure 6 shows the significant effect of objects tested, F(2, 50) = 31.46, p < .001, $\eta_p^2$ = .557, driven by improved recognition of practiced objects (97%) relative to baseline objects (79%) [t(25) = 8.21, p < .001, d = 1.86, scaled JZS Bayes factor = 906,447.2 in favor of the alternative hypothesis], but not test phase recognition of related objects (81%) relative to baseline objects (79%) [t(25) = 0.79, p = .436, scaled JZS Bayes factor = 3.63 in favor of the null hypothesis]. The average false alarm rate was 5.93%.

Fig. 6 Hit rates of the responses to the “old” memory test objects in the test phase of Experiment 4.

Discussion

In Experiment 4, all of the objects were temporally grouped and drawn from related object categories, but not drawn from the same category of objects (e.g., vases, umbrellas, or butterflies). Participants were encouraged to remember the two objects as if they had encountered the objects together in the scene named on the screen. Under these circumstances, temporally grouped objects did not suffer from recognition-induced forgetting, replicating and extending the same pattern of results from Experiments 1–3.

In all recognition-induced forgetting studies to date, the categories of objects have been semantic categories like vases, apples, and backpacks (Maxcey, 2016; Maxcey & Bostic, 2015; Maxcey et al., 2016; Maxcey & Woodman, 2014). These categories have been constructed such that simply looking at the images would activate the shared category membership. Specifically, pictures of a black vase, a flowered vase, and a metal vase would all activate the semantic category “vase,” without requiring that a category label be presented on the screen simultaneously with the object image. When we presented two objects that did not clearly activate the same semantic category (e.g., a cow and a tractor), we had no prior evidence to suggest that these images would activate a shared semantic category that would give rise to recognition-induced forgetting in the same way that temporal-plus-semantic pairs had in the previous experiments. Future work will further elucidate the types of categorical groupings that give rise to recognition-induced forgetting.

General discussion

Here we tested the hypothesis that recognition-induced forgetting occurs for temporally related objects. In Experiments 1–3, objects were either related in a temporal-only manner, meaning that they co-occurred at the same time on the screen but were not drawn from the same semantic category (e.g., a lamp and a vase), or the objects were related in a temporal-plus-semantic manner, in that they co-occurred on the screen at the same time and belonged to the same semantic category (e.g., two vases). We found that, although temporal-plus-semantic objects did suffer recognition-induced forgetting, temporal-only objects were immune to such forgetting.

Experiment 3 ruled out the potential alternative explanation that recognition-induced forgetting did not occur for temporally grouped objects because participants did not remember the temporal groupings from the study phase. In Experiment 4, the objects were grouped by membership in a scene. Specifically, two objects were presented as consistent elements of a larger named scene, such as the circus, doctor’s office, or kitchen. Even with the schematic representations of scenes guiding temporal grouping, the temporally grouped objects in Experiment 4 (e.g., a blender and spatula from a kitchen scene) did not suffer from recognition-induced forgetting. There are four major implications of our findings. We will discuss each of these in turn below.

Can forgetting occur over newly learned associations?

Recent evidence has shown that, despite forming memory representations quickly, visual long-term memory is limited in its ability to form and maintain associations correctly over time (Lew, Pashler, & Vul, 2016). These results may be taken to suggest that we did not find recognition-induced forgetting for temporal-only pairs in the present study because the pairs had been learned in the laboratory less than an hour earlier. However, the results of Experiment 3 demonstrated that participants did remember the temporal-only pairs from the study phase throughout the experiment. In fact, memory for temporal-only pairs was superior to memory for temporal-plus-semantic pairs following the test phase of the experiment.

Mechanisms underlying recognition-induced forgetting

When the present results are viewed through the lens of the dual-process framework for recognition memory, it would appear that recognition-induced forgetting is driven by familiarity signals (e.g., I recognize that woman but I do not remember a specific episode during which I encountered her). Consistent with the present results, this type of memory does not support associative memory representations for pairs of items (Yonelinas, 2002).

Evidence from both human and animal studies has suggested that perirhinal and adjacent visual cortex are the neural substrates of the familiarity branch of recognition memory (for a review, see Brown & Aggleton, 2001). If this dual-process model of recognition memory is correct, then the present results predict that recognition-induced forgetting operates over representations in the perirhinal and adjacent visual cortex rather than in the hippocampus, because temporally paired objects are immune to recognition-induced forgetting. Future work could test this prediction. Such an account would fit with a number of the other known features of perirhinal cortex, such as its being robustly activated by picture stimuli and supporting the representation of object properties as well as of semantic and conceptual properties (Davachi, 2006; Köhler, Danckert, Gati, & Menon, 2005; Pihlajamäki et al., 2003; Pihlajamäki et al., 2004; Taylor, Moss, Stamatakis, & Tyler, 2006).

Does recognition-induced forgetting operate over episodic or semantic memory?

Our evidence that recognition-induced forgetting does not operate over temporally grouped objects may suggest that this forgetting effect does not involve episodic memory representations, but rather semantic memory representations. Indeed, Tulving (1982, 1985; see also Nyberg et al., 1996; Tulving & Markowitsch, 1998; Tulving & Schacter, 1990; Wheeler et al., 1997) suggested that episodic memory drives recollection and that semantic memory supports familiarity. Given that the present results align with the familiarity branch of dual-process models of recognition memory, as discussed above, these models suggest that recognition-induced forgetting operates over semantic memory representations rather than episodic memory representations, although further research will be needed.

Are retrieval- and recognition-induced forgetting the same phenomenon?

Retrieval-induced forgetting is a similar access-based memory phenomenon, with the major methodological distinction that in this case researchers examine forgetting of verbal stimuli following retrieval practice (Anderson, Bjork, & Bjork, 1994). In the typical retrieval-induced forgetting study, participants are given a list of category–exemplar word pairs to remember. Then a subset of those category–exemplar pairs must be retrieved during word stem completion tasks. For example, participants must retrieve the word “banana” from the study phase pair “FRUIT–banana” in order to complete the word stem “FRUIT–ba____” (see Murayama, Miyatsu, Buchli, & Storm, 2014, for an excellent review of the retrieval-induced forgetting literature).

In the original article on recognition-induced forgetting, we discussed the difficulty of implementing a retrieval task using visual stimuli that lends itself to quantitative performance measures (Maxcey & Woodman, 2014). Specifically, the closest visual task that implements retrieval of the sort in the word stem completion tasks used in retrieval-induced forgetting studies would be instructing participants to complete a line drawing when given a few initial lines. Such a laboratory task certainly lacks ecological validity, as compared to the many circumstances under which recognition of visual objects is required during real-world vision.

Given the early stage of investigation of recognition-induced forgetting, it has been difficult to specify the relationship between recognition- and retrieval-induced forgetting. Until now, we have only found similarities between the two forgetting phenomena (Maxcey, 2016). However, the present study illustrates a clear distinction between the two phenomena. Specifically, the original studies of retrieval-induced forgetting examined memory for words, which were inherently organized in semantic memory. Indeed, retrieval-induced forgetting has been shown in semantic memory (Johnson & Anderson, 2004). However, retrieval-induced forgetting studies have typically been interpreted as involving episodic retrieval (Anderson, 2003; Levy & Anderson, 2002), and this type of forgetting has been shown to occur for associative information stored in episodic memory, such as location and perceptual groupings (Ciranni & Shimamura, 1999; Gómez-Ariza, Fernandez, & Bajo, 2012). The present finding that recognition-induced forgetting does not operate over temporally paired objects suggests that episodic memories are immune to recognition-induced forgetting. Rather, the present findings suggest that recognition-induced forgetting operates among representations in semantic long-term memory. Thus, these seemingly similar forgetting phenomena appear to involve distinct memory representations.

Conclusion

The present study suggests that recognition-induced forgetting does not occur in the eyewitness testimony situation described earlier, in which a witness to a crime recognizes a bank robber and consequently forgets the getaway car, because the face and car are drawn from different semantic categories. However, the present study does support the notion that recognition-induced forgetting would occur in the event of an eyewitness recognizing one of two weapons from the scene of a crime, or one of two cars fleeing the crime scene. This is because those objects belong to the same semantic category.

Notes

1. Recognition-induced forgetting seems closely related to another access-based forgetting phenomenon, retrieval-induced forgetting, as we discuss in the General Discussion (see also Maxcey, 2016). Here it is important to note that in the present study we examined the forgetting of pictures in visual long-term memory as a function of recognition practice. Retrieval-induced forgetting typically examines the forgetting of words from long-term memory following retrieval practice.

References

Anderson, M. C. (2003). Rethinking interference theory: Executive control and the mechanisms of forgetting. Journal of Memory and Language, 49, 415–445. doi:10.1016/j.jml.2003.08.006

Anderson, M. C., Bjork, R. A., & Bjork, E. L. (1994). Remembering can cause forgetting: Retrieval dynamics in long-term memory. Journal of Experimental Psychology: Learning, Memory, and Cognition, 20, 1063–1087. doi:10.1037/0278-7393.20.5.1063

Benforado, A. (2015). Unfair: The new science of criminal justice. New York, NY: Crown.

Brown, M. W., & Aggleton, J. P. (2001). Recognition memory: What are the roles of the perirhinal cortex and hippocampus? Nature Reviews Neuroscience, 2, 51–61.

Ciranni, M. A., & Shimamura, A. P. (1999). Retrieval-induced forgetting in episodic memory. Journal of Experimental Psychology: Learning, Memory, and Cognition, 25, 1403–1414. doi:10.1037/0278-7393.25.6.1403

Cousineau, D. (2005). Confidence intervals in within-subject designs: A simpler solution to Loftus and Masson's method. Tutorials in Quantitative Methods for Psychology, 1(1), 42–45.

Davachi, L. (2006). Item, context and relational episodic encoding in humans. Current Opinion in Neurobiology, 16, 693–700.

Gómez-Ariza, C. J., Fernandez, A., & Bajo, M. T. (2012). Incidental retrieval-induced forgetting of location information. Psychonomic Bulletin & Review, 19, 483–489.

Hirsh, R. (1974). The hippocampus and contextual retrieval of information from memory: A theory. Behavioral Biology, 12, 421–444.

Howard, M. W., & Kahana, M. J. (2002). A distributed representation of temporal context. Journal of Mathematical Psychology, 46, 269–299. doi:10.1006/jmps.2001.1388

Johnson, S. K., & Anderson, M. C. (2004). The role of inhibitory control in forgetting semantic knowledge. Psychological Science, 15, 448–453.

Kahana, M. J., Howard, M. W., & Polyn, S. M. (2008). Associative retrieval processes in episodic memory. In J. Byrne & H. L. Roediger III (Eds.), Learning and memory: Vol. 2. Cognitive psychology of memory (pp. 467–490). Amsterdam, The Netherlands: Elsevier.

Kim, G., Lewis-Peacock, J. A., Norman, K. A., & Turk-Browne, N. B. (2014). Pruning of memories by context-based prediction error. Proceedings of the National Academy of Sciences, 111, 8997–9002.

Köhler, S., Danckert, S., Gati, J. S., & Menon, R. S. (2005). Novelty responses to relational and non-relational information in the hippocampus and the parahippocampal region: A comparison on the basis of event-related fMRI. Hippocampus, 15, 763–774.

Kok, P., Jehee, J. F., & de Lange, F. P. (2012). Less is more: Expectation sharpens representations in the primary visual cortex. Neuron, 75, 265–270.

Kraus, B. J., Brandon, M. P., Robinson, R. J., Connerney, M. A., Hasselmo, M. E., & Eichenbaum, H. (2015). During running in place, grid cells integrate elapsed time and distance run. Neuron, 88, 578–589. doi:10.1016/j.neuron.2015.09.031

Levy, B. J., & Anderson, M. C. (2002). Inhibitory processes and the control of memory retrieval. Trends in Cognitive Sciences, 6, 299–305. doi:10.1016/S1364-6613(02)01923-X

Lew, T. F., Pashler, H. E., & Vul, E. (2016). Fragile associations coexist with robust memories for precise details in long-term memory. Journal of Experimental Psychology: Learning, Memory, and Cognition, 42, 379–393. doi:10.1037/xlm0000178

Maxcey, A. M. (2016). Recognition-induced forgetting is not due to category-based set size. Attention, Perception, & Psychophysics, 78, 187–197. doi:10.3758/s13414-015-1007-1

Maxcey, A. M., & Bostic, J. (2015). Activating learned exemplars in children impairs memory for related exemplars in visual long-term memory. Visual Cognition, 23, 643–658. doi:10.1080/13506285.2015.1064052

Maxcey, A. M., & Woodman, G. F. (2014). Forgetting induced by recognition of visual images. Visual Cognition, 22, 789–808. doi:10.1080/13506285.2014.917134

Maxcey, A. M., Bostic, J., & Maldonado, T. (2016). Recognition practice results in a generalizable skill in older adults: Decreased intrusion errors to novel objects belonging to practiced categories. Applied Cognitive Psychology, 30, 643–649. doi:10.1002/acp.3236

Miyashita, Y. (1993). Inferior temporal cortex: Where visual perception meets memory. Annual Review of Neuroscience, 16, 245–263.

Morey, R. D. (2008). Confidence intervals from normalized data: A correction to Cousineau. Tutorials in Quantitative Methods for Psychology, 4(2), 61–64.

Murayama, K., Miyatsu, T., Buchli, D., & Storm, B. C. (2014). Forgetting as a consequence of retrieval: A meta-analytic review of retrieval-induced forgetting. Psychological Bulletin, 140, 1383–1409. doi:10.1037/a0037505

Nyberg, L., Cabeza, R., & Tulving, E. (1996). PET studies of encoding and retrieval: The HERA model. Psychonomic Bulletin & Review, 3, 135–148.

Olson, I. R., & Chun, M. M. (2001). Temporal contextual cueing of visual attention. Journal of Experimental Psychology: Learning, Memory, and Cognition, 27, 1299–1313. doi:10.1037/0278-7393.27.5.1299

Pihlajamäki, M., Tanila, H., Hänninen, T., Könönen, M., Mikkonen, M., Jalkanen, V., … Soininen, H. (2003). Encoding of novel picture pairs activates the perirhinal cortex: An fMRI study. Hippocampus, 13, 67–80.

Pihlajamäki, M., Tanila, H., Könönen, M., Hänninen, T., Hämäläinen, A., Soininen, H., & Aronen, H. J. (2004). Visual presentation of novel objects and new spatial arrangements of objects differentially activates the medial temporal lobe subareas in humans. European Journal of Neuroscience, 19, 1939–1949.

Rouder, J. N., Speckman, P. L., Sun, D., Morey, R. D., & Iverson, G. (2009). Bayesian t tests for accepting and rejecting the null hypothesis. Psychonomic Bulletin & Review, 16, 225–237. doi:10.3758/PBR.16.2.225

Schapiro, A. C., Kustner, L. V., & Turk-Browne, N. B. (2012). Shaping of object representations in the human medial temporal lobe based on temporal regularities. Current Biology, 22, 1622–1627.

Snodgrass, J. G., Levy-Berger, G., & Haydon, M. (1985). Human experimental psychology. Oxford, UK: Oxford University Press.

Taylor, K. I., Moss, H. E., Stamatakis, E. A., & Tyler, L. K. (2006). Binding crossmodal object features in perirhinal cortex. Proceedings of the National Academy of Sciences, 103, 8239–8244.

Tulving, E. (1982). Synergistic ecphory in recall and recognition. Canadian Journal of Psychology, 36, 130.

Tulving, E. (1985). Memory and consciousness. Canadian Psychology, 26, 1–12. doi:10.1037/h0080017

Tulving, E., & Markowitsch, H. J. (1998). Episodic and declarative memory: Role of the hippocampus. Hippocampus, 8, 198–204. doi:10.1002/(SICI)1098-1063(1998)8:3<198::AID-HIPO2>3.0.CO;2-G

Tulving, E., & Schacter, D. L. (1990). Priming and human memory systems. Science, 247, 301–306. doi:10.1126/science.2296719

Turk-Browne, N. B., Simon, M. G., & Sederberg, P. B. (2012). Scene representations in parahippocampal cortex depend on temporal context. Journal of Neuroscience, 32, 7202–7207.

Wheeler, M. A., Stuss, D. T., & Tulving, E. (1997). Toward a theory of episodic memory: The frontal lobes and autonoetic consciousness. Psychological Bulletin, 121, 331.

Yonelinas, A. P. (2002). The nature of recollection and familiarity: A review of 30 years of research. Journal of Memory and Language, 46, 441–517. doi:10.1006/jmla.2002.2864

Author note

E. S. was supported by NIH grant 4T34GM007663. Geoffrey F. Woodman provided constructive comments on a previous version of the manuscript. We thank the following individuals for their useful online calculators: Jeffrey Rouder for his scaled JZS Bayes Factor calculator as well as valuable feedback on the appropriate use of the JZS Bayes factor, and Lee Becker for his online Cohen’s d calculator.

Appendix

Experiment 4: Study phase pairs comprised the following paired objects and were presented along with the verbal label indicating the scene to which the objects belonged. Note that during the experiment, the scene name was presented above the paired objects.

Experiment 4: Recognition practice phase pairs comprised the following paired objects. In this figure, the old object is always on the left, for demonstration purposes. The location of the old object (left vs. right) was counterbalanced in the actual experiment.

Experiment 4: Test phase objects comprised the following objects and scene labels, presented sequentially.

Cite this article

Maxcey, A.M., Glenn, H. & Stansberry, E. Recognition-induced forgetting does not occur for temporally grouped objects unless they are semantically related. Psychon Bull Rev 25, 1087–1103 (2018). https://doi.org/10.3758/s13423-017-1302-z

DOI: https://doi.org/10.3758/s13423-017-1302-z