Abstract

We investigated whether musical competence was associated with the perception of foreign-language phonemes. The sample comprised adult native speakers of English who varied in music training. The measures included tests of general cognitive abilities, melody and rhythm perception, and the perception of consonantal contrasts that were phonemic in Zulu but not in English. Music training was associated positively with performance on the tests of melody and rhythm perception, but not with performance on the phoneme-perception task. In other words, we found no evidence for transfer of music training to foreign-language speech perception. Rhythm perception was not associated with the perception of Zulu clicks, but such an association was evident when the phonemes sounded more similar to English consonants. Moreover, this association persisted after controlling for general cognitive abilities and music training. By contrast, there was no association between melody perception and phoneme perception. The findings are consistent with proposals that music and speech perception rely on similar mechanisms of auditory temporal processing, and that this overlap is independent of general cognitive functioning. They provide no support, however, for the idea that music training improves speech perception.

Music and speech comprise sounds that unfold over time. The two domains may draw on separate (Peretz & Coltheart, 2003) or overlapping (e.g., Patel, 2011) mental resources. Here, we examined whether music skills predict phonological perception in a foreign language, asking whether (1) speech perception is associated with musical competence, (2) observed associations are better attributed to music training or perceptual abilities, and (3) such associations are independent of general cognitive abilities.

Music perception and speech perception

Theories of overlap in temporal processing for music and speech (Goswami, 2012; Tallal & Gaab, 2006) imply that rhythm abilities are especially likely to correlate with speech processing. In line with this view, rhythm abilities predict phonological processing in typically developing children (Carr, White-Schwoch, Tierney, Strait, & Kraus, 2014; Moritz, Yampolsky, Papadelis, Thomson, & Wolf, 2013), adolescents (Tierney & Kraus, 2013), and adults (Grube, Cooper, & Griffiths, 2013). Such associations also extend to syntax and reading abilities (Gordon et al., 2015; Grube et al., 2013; Tierney & Kraus, 2013). For children with reading impairments, rhythm abilities are below normal (Overy, Nicolson, Fawcett, & Clarke, 2003) and correlated with their phonological and reading abilities (Huss, Verney, Fosker, Mead, & Goswami, 2011). Moreover, interventions that focus on rhythm and temporal processing improve their phonological skills (Flaugnacco et al., 2015; Thomson, Leong, & Goswami, 2013).

Among adults, however, the picture is more complicated. For one, melody and rhythm perception are themselves correlated (.5 < r < .7; Bhatara et al., 2015; Wallentin et al., 2010). In studies of non-native language (L2) abilities, researchers have reported that L2 experience predicts rhythm but not melody perception (Bhatara, Yeung, & Nazzi, 2015), that melody perception is correlated positively with L2 pronunciation (Posedel, Emery, Souza, & Fountain, 2012), and that better melody and rhythm abilities predict better L2 phonological abilities (Kempe, Bublitz, & Brooks, 2015; Slevc & Miyake, 2006). Moreover, for typically developing children, melody perception predicts phonological processing (or reading ability) as well as or better than rhythm perception (Anvari, Trainor, Woodside, & Levy, 2002; Grube, Kumar, Cooper, Turton, & Griffiths, 2012), and associations between rhythm perception and phonological processing can disappear when IQ is held constant (Gordon et al., 2015). It is an open question, then, whether associations with speech perception are stronger for rhythm than for melody perception.

Music training and speech perception

Music training is associated with speech perception, higher-level language abilities (e.g., reading), and general cognitive abilities (Schellenberg & Weiss, 2013). Indeed, music training is associated with L1 phonological perception (Zuk et al., 2013) and reading abilities (Corrigall & Trainor, 2011), and with L2 fluency (Swaminathan & Gopinath, 2013; Yang, Ma, Gong, Hu, & Yao, 2014). Longitudinal interventions with random assignment indicate that music training may actually cause improvement in children’s speech perception (Degé & Schwarzer, 2011; Flaugnacco et al., 2015; François, Chobert, Besson, & Schön, 2013; Moreno et al., 2009; Thomson et al., 2013).

Nevertheless, associations between music training and speech perception are not always replicable (Boebinger et al., 2015; Ruggles, Freyman, & Oxenham, 2014). Moreover, intervention studies have adopted intensive (daily) training that focused primarily on listening skills rather than on playing an instrument (Degé & Schwarzer, 2011), programs that included training in speech rhythm in addition to music rhythm (Thomson et al., 2013), or tasks that were biased in favor of the music group (François et al., 2013). Correlational studies typically involve more conventional music lessons, but preexisting musical, cognitive, and motivational factors make the direction of causation unclear (Corrigall & Schellenberg, 2015; Corrigall, Schellenberg, & Misura, 2013).

The present study

We sought to determine whether non-native speech perception is associated with music training, and whether it is more closely associated with melody or rhythm perception. Examination of music-perception abilities and music training allowed us to ask whether observed associations were better explained by music-perception abilities with training held constant, or, conversely, by music training with music-perception abilities held constant.

One view holds that music lessons enhance speech skills by training the ability to decode meaning from sound (Kraus & Chandrasekaran, 2010). Because listeners perceive speech sounds from an unfamiliar language using the phonological framework of their native language (e.g., Best, McRoberts, & Goodell, 2001; Werker & Tees, 1984), our stimulus set included tokens that varied in resemblance to Canadian-English phonology (Best et al., 2001; Best, McRoberts, & Sithole, 1988). At the extreme, we tested participants’ ability to discriminate clicks in Zulu. In other conditions, Zulu contrasts were foreign sounding but more easily assimilated to English categories. If there is an association between music and speech, musical competence may be important only when stimuli sound like speech.

Although musical competence predicts speech and language abilities, it is also correlated with visuospatial skills and general cognitive abilities (Schellenberg & Weiss, 2013). Indeed, intelligence and memory are predicted by music training and by basic music-perception skills. Thus, we also tested whether associations between music and speech are a by-product of individual differences in general cognitive functioning.

Method

Participants

The participants were 151 undergraduates who were native speakers of English (87 female, 13 left-handed, mean age 18.4 years, SD = 1.0) and received all of their formal education in English. None reported a history of hearing problems or exposure to an African language. They had an average of 4.9 years of private or school music lessons. For those who reported learning more than one instrument (or voice), duration of training was summed across instruments. Because the distribution was skewed positively (SD = 6.8 years, median = 2), duration of training was square-root transformed for statistical analyses.
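The effect of the square-root transformation on a positively skewed training distribution can be illustrated with a short sketch. The years of lessons below are hypothetical toy values chosen only to mimic a right-skewed distribution (they are not the study's data), and `skewness` is an illustrative helper computing the population-form Fisher-Pearson coefficient.

```python
import numpy as np

def skewness(x):
    """Population-form Fisher-Pearson skewness coefficient (g1)."""
    x = np.asarray(x, dtype=float)
    d = x - x.mean()
    return np.mean(d**3) / np.mean(d**2) ** 1.5

# Hypothetical years of lessons, already summed across instruments (toy values)
years = np.array([0, 0, 0, 1, 2, 2, 3, 4, 6, 10, 15, 24])
sqrt_years = np.sqrt(years)  # square-root transform; zeros stay zero

print(f"skew before: {skewness(years):.2f}, after: {skewness(sqrt_years):.2f}")
```

The square root compresses long training histories more than short ones, so it pulls in the long right tail while preserving rank order; unlike a log transform, it needs no offset for participants with zero years of lessons.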

Measures

Socioeconomic status

Participants provided information about their family income and their mother's and father's education, as in previous research (e.g., Schellenberg, 2006). Because the three SES variables were intercorrelated, ps < .001, we extracted their first principal component for use in the statistical analyses. This latent variable correlated highly with each original variable, rs > .7, and accounted for 61.8% of the variance.
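Extracting a single SES composite can be sketched with NumPy. The three input columns are simulated (a hypothetical latent SES plus noise), not the study's data, and `first_principal_component` is an illustrative helper. The study does not state whether the component was computed from the correlation or the covariance matrix, so working with z-scores (i.e., the correlation matrix) is an assumption.

```python
import numpy as np

def first_principal_component(X):
    """Return unit-variance scores, loadings, and variance share of the first PC.

    X: (n_participants, n_variables), e.g., income and each parent's education.
    """
    Z = (X - X.mean(axis=0)) / X.std(axis=0)      # z-scores (ddof=0)
    R = Z.T @ Z / len(Z)                          # correlation matrix
    eigvals, eigvecs = np.linalg.eigh(R)          # eigenvalues in ascending order
    lam, v = eigvals[-1], eigvecs[:, -1]          # largest component
    if v.sum() < 0:                               # eigenvector sign is arbitrary
        v = -v
    scores = Z @ v / np.sqrt(lam)                 # standardized component scores
    loadings = v * np.sqrt(lam)                   # correlations with each variable
    return scores, loadings, lam / X.shape[1]

# Simulated (hypothetical) data: three indicators of one latent SES variable
rng = np.random.default_rng(0)
ses = rng.normal(size=151)
X = np.column_stack([ses + rng.normal(scale=0.8, size=151) for _ in range(3)])
scores, loadings, share = first_principal_component(X)
print(np.round(loadings, 2), f"{share:.1%}")
```

The loadings equal the correlations between the component scores and each original variable (the quantity summarized above as rs > .7), and `share` is the proportion of variance the component accounts for.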

General cognitive abilities

The forward and backward portions of the Digit Span test were used to measure short-term and working memory, respectively. Nonverbal intelligence was measured with the 12-item version of Raven's Advanced Progressive Matrices (APM; Bors & Stokes, 1998).

Music-perception skills

The Musical Ear Test (MET; Wallentin, Nielsen, Friis-Olivarius, Vuust, & Vuust, 2010) provided two measures of musical competence: melody perception and rhythm perception. Each trial involved two short auditory sequences that were identical on half of the trials and different on the other half. Participants judged whether the two sequences were identical.

Speech perception

A Zulu, minimal-pairs, consonant-matching task had four conditions that varied in difficulty depending on similarity to English consonants (Best et al., 1988, 2001). Condition 1 (the easiest) contrasted a voiceless and a voiced lateral fricative (/ɬ/-/ɮ/), which native-English listeners assimilate to different English phonemes (/θ s ʃ/ vs. /ð z ʒ/). Condition 2 contrasted voiceless aspirated and ejective (glottalized) velar stops (/kʰ/-/kʼ/), which are typically assimilated to a single English consonant (/k/), although one phoneme sounds like a better approximation. Condition 3 comprised plosive and implosive voiced bilabial stops (/b/-/ɓ/), both of which are assimilated to a single English consonant (/b/) but sound different. Condition 4 (the most difficult) contrasted voiceless unaspirated apical clicks and lateral clicks, which cannot be assimilated to any English consonant. All tokens were consonant-vowel syllables with contrasting consonants but the same vowel within a condition (/ɛ/, /a/, /u/, and /a/ in Conditions 1–4, respectively). All contrasts differed in both temporal and pitch cues, such that associations with either melody or rhythm perception were plausible. The two click syllables, however, differed less in overall duration (286 vs. 293 ms on average) than the pairs in the other conditions (310 vs. 345 ms, 285 vs. 264 ms, and 261 vs. 294 ms, for Conditions 1–3, respectively). More detailed acoustic information is provided in the Supplementary Materials.
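The difference in duration cues across conditions can be made explicit with a short calculation on the mean durations quoted above. Expressing each gap relative to the pair's mean duration is an illustrative choice, not an analysis reported in the study.

```python
# Mean syllable durations (ms) for the two tokens in each contrast, as reported above
durations = {
    "Condition 1": (310, 345),
    "Condition 2": (285, 264),
    "Condition 3": (261, 294),
    "Condition 4 (clicks)": (286, 293),
}

def duration_gaps(pairs):
    """Absolute duration difference (ms) within each contrast pair."""
    return {label: abs(a - b) for label, (a, b) in pairs.items()}

gaps = duration_gaps(durations)
for label, gap in gaps.items():
    mean_dur = sum(durations[label]) / 2
    print(f"{label}: {gap} ms ({gap / mean_dur:.1%} of the pair mean)")
```

The click pair differs by only 7 ms, compared with 21 to 35 ms for the other three contrasts.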

In an AXB discrimination task, A (presented first) and B (presented last) were contrasting speech tokens with different consonants. X (presented between A and B) was always a non-identical token from the same category as A (on half of the trials) or B (on the other half). Participants decided whether A or B sounded more like X, such that the task required both phoneme discrimination and matching. Assignment of the two phonemic categories to A or B was counterbalanced. Within each trial, the onset-to-onset interval was fixed at 1 s. The test comprised eight blocks of 40 trials (two blocks and 80 trials per condition), with the order of blocks and trials randomized separately for each participant.
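The AXB design can be sketched as a per-participant trial generator. This is a hypothetical illustration, not the authors' experiment software: the token IDs, the assumption of three recordings per phoneme category, the helper names, and the use of Python's `random` module are all assumptions, and stimulus timing (the fixed 1-s onset-to-onset interval) is left to the presentation software. Each condition crosses which category appears in position A with whether X matches A or B, so every cell receives 20 of the 80 trials.

```python
import random
from dataclasses import dataclass

@dataclass(frozen=True)
class Trial:
    condition: int
    a: str       # first token
    x: str       # middle token, never identical to A or B
    b: str       # last token
    answer: str  # "A" or "B": the flanker from X's category

def condition_trials(condition, cat1, cat2, rng, n_trials=80):
    """Counterbalanced AXB trials for one contrast (each category needs >= 2 tokens)."""
    cells = [(swap, answer) for swap in (False, True) for answer in ("A", "B")]
    trials = []
    for i in range(n_trials):
        swap, answer = cells[i % len(cells)]            # equal trials per cell
        cat_a, cat_b = (cat2, cat1) if swap else (cat1, cat2)
        a, b = rng.choice(cat_a), rng.choice(cat_b)
        pool, flanker = (cat_a, a) if answer == "A" else (cat_b, b)
        x = rng.choice([t for t in pool if t != flanker])  # non-identical token
        trials.append(Trial(condition, a, x, b, answer))
    return trials

def make_session(contrasts, seed, block_size=40):
    """Two blocks per contrast; trial and block order randomized per participant."""
    rng = random.Random(seed)
    blocks = []
    for condition, (cat1, cat2) in contrasts.items():
        trials = condition_trials(condition, cat1, cat2, rng)
        rng.shuffle(trials)
        blocks += [trials[i:i + block_size] for i in range(0, len(trials), block_size)]
    rng.shuffle(blocks)
    return blocks

# Hypothetical token IDs: three recordings per phoneme category in each condition
contrasts = {
    c: ([f"c{c}_p1_t{k}" for k in range(3)], [f"c{c}_p2_t{k}" for k in range(3)])
    for c in range(1, 5)
}
session = make_session(contrasts, seed=42)
print(len(session), [len(block) for block in session])
```

Seeding a separate `random.Random` instance per participant makes each participant's order reproducible without touching global random state.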

Procedure

Participants were tested individually in a sound-attenuating booth. They completed the digit-span test, the speech-perception test, and a questionnaire that asked for background information (history of music training, demographics). After a short break, they completed the APM and the MET. The speech-perception test and the MET were administered on an iMac, with stimuli presented over headphones. The testing session took up to 90 min.

Results

Performance was above chance levels in each of the four speech conditions, ps < .001. A one-way repeated-measures analysis of variance confirmed that performance differed across conditions, F(3, 450) = 767.24, p < .001, partial η² = .836, with better performance in Condition 1 than 2, in Condition 2 than 3, and in Condition 3 than 4, ps < .001.
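As a consistency check, partial η² can be recovered from the reported F ratio and its degrees of freedom alone, since F = (SS_effect/df1)/(SS_error/df2) and partial η² = SS_effect/(SS_effect + SS_error). The helper name is illustrative; the degrees of freedom follow from 4 conditions and 151 participants.

```python
def partial_eta_squared(f, df_effect, df_error):
    """Partial eta squared from an F ratio: F*df1 / (F*df1 + df2)."""
    return f * df_effect / (f * df_effect + df_error)

# One-way repeated-measures design: 4 conditions, 151 participants
df_effect = 4 - 1               # 3
df_error = (4 - 1) * (151 - 1)  # 450
print(round(partial_eta_squared(767.24, df_effect, df_error), 3))  # 0.836
```

Because a sphericity correction such as Greenhouse-Geisser multiplies both degrees of freedom by the same ε, the factor cancels in this ratio and leaves partial η² unchanged.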

Although performance declined, as predicted, when the stimuli became less similar to English phonemes, Mauchly's test revealed a marked violation of sphericity, p < .001, indicating that the pairwise correlations between conditions varied considerably. To reduce redundancy in the results that follow, we conducted a principal components analysis with varimax rotation. A two-component solution accounted for two-thirds (66.45%) of the original variance. Conditions 1, 2, and 3 loaded onto the first component (rs ≥ .68), whereas Condition 4 was almost perfectly correlated with the second component (r = .93). Thus, the first (speech-like) component reflected perception of speech-like aspects of the Zulu tokens, and the second (non-speech-like) component reflected perception of non-speech-like aspects. Factor scores were used in subsequent analyses. Results from the original conditions are provided in the Supplementary Materials.
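A principal components analysis with varimax rotation can be sketched in NumPy on simulated data. The data-generating model (Conditions 1 to 3 driven by one shared source, Condition 4 by another), the use of Kaiser row normalization (a common default in statistical packages), and the computation of factor scores as standardized, rotated component scores are assumptions; the study does not report these details. Helper names are illustrative.

```python
import numpy as np

def varimax(loadings, max_iter=100, tol=1e-8):
    """Varimax rotation with Kaiser row normalization (standard SVD iteration).

    Returns the rotated loadings and the orthogonal rotation matrix.
    """
    p, k = loadings.shape
    h = np.sqrt((loadings**2).sum(axis=1, keepdims=True))  # sqrt of communalities
    A = loadings / h                                       # Kaiser normalization
    rot, obj = np.eye(k), 0.0
    for _ in range(max_iter):
        L = A @ rot
        grad = A.T @ (L**3 - L * (L**2).sum(axis=0) / p)
        u, s, vt = np.linalg.svd(grad)
        rot = u @ vt
        if obj > 0 and s.sum() < obj * (1 + tol):          # criterion has converged
            break
        obj = s.sum()
    return loadings @ rot, rot

def pca_varimax(X, n_components=2):
    """PCA on the correlation matrix, varimax-rotated: loadings, scores, variance share."""
    Z = (X - X.mean(axis=0)) / X.std(axis=0)
    R = Z.T @ Z / len(Z)
    eigvals, eigvecs = np.linalg.eigh(R)
    idx = np.argsort(eigvals)[::-1][:n_components]         # largest components first
    lam, V = eigvals[idx], eigvecs[:, idx]
    rotated, rot = varimax(V * np.sqrt(lam))
    scores = (Z @ V / np.sqrt(lam)) @ rot                  # unit-variance rotated scores
    return rotated, scores, lam.sum() / X.shape[1]

# Simulated (hypothetical) accuracies for four conditions and 151 participants
rng = np.random.default_rng(1)
shared, clicks = rng.normal(size=(2, 151))
X = np.column_stack(
    [shared + rng.normal(scale=0.7, size=151) for _ in range(3)]
    + [clicks + rng.normal(scale=0.3, size=151)]
)
rotated, scores, explained = pca_varimax(X)
print(np.round(rotated, 2), f"{explained:.1%}")
```

Because the rotation is orthogonal, it redistributes variance between the two components without changing the total explained, and each rotated loading equals the correlation between a condition and a component score, which is how values such as r = .93 can be read.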

Preliminary analyses revealed that SES was not associated with any other variable, so it was not considered further. Among the cognitive variables (short-term memory, working memory, nonverbal intelligence), working memory was correlated positively with short-term memory, r = .47, and with nonverbal intelligence, r = .28, ps < .001. To identify possible confounding variables in the main analyses, we computed pairwise correlations between the cognitive variables and each of the music variables (music training, melody perception, rhythm perception) and phoneme-perception variables (speech-like, non-speech-like; see Table 1). Duration of music training was correlated positively with working memory. Melody perception was correlated positively with short-term memory, whereas rhythm perception was correlated positively with both short-term and working memory. The phoneme-perception variables were not associated with any of the cognitive variables.

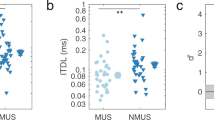

The main analyses examined associations between the speech and music variables (see Table 2). As expected, melody perception and rhythm perception were associated positively, and both were correlated positively with music training. There were no simple or partial associations between music training and non-native phoneme discrimination. Of the music-perception measures, rhythm perception was the only significant predictor of phoneme discrimination, and only for the speech-like component. Rhythm perception remained associated with the speech-like component after we held short-term memory and working memory constant, pr (partial correlation) = .25, p = .002. When we also controlled for music training and nonverbal intelligence, the partial association between rhythm perception and the speech-like component was similar in magnitude, pr = .26, p = .001.
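Partial correlations like those reported above can be computed by residualization: regress both variables on the control variables and correlate the residuals. The sketch uses simulated data and illustrative helper names; the study does not report its software. `partial_t` converts a partial correlation to a t statistic with n - 2 - k degrees of freedom (k covariates), which can then be referred to a t distribution for a p value.

```python
import numpy as np

def partial_corr(x, y, covariates):
    """Correlation between x and y after removing linear effects of the covariates.

    Both variables are regressed (OLS, with intercept) on the covariates,
    and the residuals are correlated.
    """
    C = np.column_stack([np.ones(len(x)), covariates])
    rx = x - C @ np.linalg.lstsq(C, x, rcond=None)[0]
    ry = y - C @ np.linalg.lstsq(C, y, rcond=None)[0]
    return np.corrcoef(rx, ry)[0, 1]

def partial_t(r, n, k):
    """t statistic and df for testing a partial correlation with k covariates."""
    df = n - 2 - k
    return r * np.sqrt(df / (1 - r**2)), df

# Simulated (hypothetical) data: memory influences both rhythm and speech scores
rng = np.random.default_rng(2)
memory = rng.normal(size=151)
rhythm = 0.5 * memory + rng.normal(size=151)
speech = 0.3 * memory + 0.3 * rhythm + rng.normal(size=151)
print(round(partial_corr(rhythm, speech, memory), 3))

# Reported values: pr = .25 with 2 covariates, pr = .26 with 4 covariates, n = 151
for r, k in [(0.25, 2), (0.26, 4)]:
    t, df = partial_t(r, 151, k)
    print(f"pr = {r}: t({df}) = {t:.2f}")
```

Residualization generalizes directly to several covariates (pass a 2-D array), which matches the analysis with short-term memory, working memory, music training, and nonverbal intelligence held constant.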

To ensure that the null results with music training were not an artifact of the way it was coded, we coded training in three additional ways. The results did not change (see the Supplementary Materials).

Discussion

In a sample of adult native speakers of English, we examined whether musical expertise predicted speech perception in a foreign language. Rhythm perception predicted phoneme-discrimination performance in Zulu, and this association remained significant even after controlling for general cognition and music training. The association was limited, however, to phonemes that resembled tokens from English phonology. Rhythm perception did not predict the ability to discriminate Zulu clicks, and there were no associations between non-native speech perception and melody perception or music training.

Although rhythm perception and melody perception were correlated in the present study, our findings are consistent with proposals of a special relation between music and language (Kraus & Chandrasekaran, 2010; Patel, 2011) that stems primarily from shared temporal processing (Goswami, 2012; Tallal & Gaab, 2006). Nevertheless, the correlation with rhythm but not with melody could also stem from the fact that temporal distinctions (e.g., overall duration) were greater for the speech-like than for the non-speech-like contrasts. The association could also be a consequence of native-language background. Because pitch does not determine lexical meaning in English, native speakers may attend preferentially to temporal cues. Native speakers of tone languages, however, may be more inclined to attend to pitch cues in non-native speech, possibly giving rise to an association between melody perception and non-native phoneme perception. A similar argument applies to the finding that rhythm perception did not predict the perception of Zulu clicks. If musical competence is especially relevant for acoustic cues that are perceived to be communicative or meaningful (Kraus & Chandrasekaran, 2010), its lack of association with click perception may arise because Zulu clicks do not sound meaningful to native English listeners. For native speakers of click languages (Zulu or otherwise), however, musical competence could be associated with non-native click perception. More generally, native-language background may moderate the association between music and non-native speech perception by influencing which speech sounds have communicative relevance.

There was no association between music training and speech perception, a finding consistent with some previous reports (Boebinger et al., 2015; Ruggles et al., 2014) but contrary to results reported by researchers who administered tests of phonology (e.g., Zuk et al., 2013), speech segmentation (François et al., 2013), the perception of pitch and intonation in speech (Besson, Schön, Moreno, Santos, & Magne, 2007), and speech perception in suboptimal conditions (e.g., Strait & Kraus, 2011; Swaminathan et al., 2015). Although researchers frequently interpret significant correlations between music training and speech perception as evidence for training effects or plasticity (e.g., Kraus & Chandrasekaran, 2010; Skoe & Kraus, 2012; Strait & Kraus, 2011), such associations could be a consequence of preexisting individual differences (Schellenberg, 2015). Findings from twin studies confirm that genetic factors play a role in music perception, the propensity to engage in musical activities, and musical accomplishment (for a review, see Hambrick, Ullén, & Mosing, 2016). In short, nature influences musical competence and the likelihood of taking music lessons, and lessons would, in turn, further influence musical competence: a gene-environment interaction (Hambrick et al., 2016; Schellenberg, 2015). In the present study, rhythm perception, but not music training, was associated with speech perception, and rhythm perception predicted speech perception with training held constant. In other words, natural abilities were a better predictor of speech perception than music training. If music training influences speech perception, the effect appears to be small (or non-existent), and potentially a consequence of preexisting differences in music perception (i.e., aptitude).

Unequivocal evidence for training effects comes only from experiments with random assignment, which eliminate processes that promote musical participation in the first place and make it impossible to examine interactions between genes and the environment. Correlational and quasi-experimental studies, by contrast, provide ecologically valid snapshots but leave training effects undifferentiated from preexisting differences. Future research could diminish this problem by including a more comprehensive suite of hypothesized preexisting factors. In the present investigation, we measured music perception and music training. With training held constant, performance on the music-perception test was a better estimate of natural musical abilities. With music perception held constant, training was a purer measure of learning music, which could in principle promote speech and language skills, although personality, cognitive, and demographic variables are also implicated in the choice to take music lessons (Corrigall et al., 2013). In any event, the present findings indicate that associations between language and music processes are likely to be oversimplified when researchers assume that music training causes improvements in speech perception.

References

Anvari, S. H., Trainor, L. J., Woodside, J., & Levy, B. A. (2002). Relations among musical skills, phonological processing, and early reading ability in preschool children. Journal of Experimental Child Psychology, 83, 111–130. doi:10.1016/S0022-0965(02)00124-8

Besson, M., Schön, D., Moreno, S., Santos, A., & Magne, C. (2007). Influence of musical expertise and musical training on pitch processing in music and language. Restorative Neurology and Neuroscience, 25, 399–410.

Best, C. T., McRoberts, G. W., & Goodell, E. (2001). Discrimination of non-native consonant contrasts varying in perceptual assimilation to the listener’s native phonological system. Journal of the Acoustical Society of America, 109, 775–794.

Best, C. T., McRoberts, G. W., & Sithole, N. M. (1988). Examination of perceptual reorganization for non-native speech contrasts: Zulu click discrimination by English-speaking adults and infants. Journal of Experimental Psychology: Human Perception and Performance, 14, 345–360.

Bhatara, A., Yeung, H. H., & Nazzi, T. (2015). Foreign language learning in French speakers is associated with rhythm perception, but not with melody perception. Journal of Experimental Psychology: Human Perception and Performance, 41, 277–282. doi:10.1037/a0038736

Boebinger, D., Evans, S., Rosen, S., Lima, C. F., Manly, T., & Scott, S. K. (2015). Musicians and non-musicians are equally adept at perceiving masked speech. Journal of the Acoustical Society of America, 137, 378–387. doi:10.1121/1.4904537

Bors, D. A., & Stokes, T. L. (1998). Raven’s advanced progressive matrices: Norms for first-year university students and the development of a short form. Educational and Psychological Measurement, 58, 382–398. doi:10.1177/0013164498058003002

Carr, K. W., White-Schwoch, T., Tierney, A. T., Strait, D. L., & Kraus, N. (2014). Beat synchronization predicts neural speech encoding and reading readiness in preschoolers. Proceedings of the National Academy of Sciences, 111, 14559–14564. doi:10.1073/pnas.1406219111

Corrigall, K. A., & Schellenberg, E. G. (2015). Predicting who takes music lessons: Parent and child characteristics. Frontiers in Psychology, 6, 282. doi:10.3389/fpsyg.2015.00282

Corrigall, K. A., Schellenberg, E. G., & Misura, N. M. (2013). Music training, cognition, and personality. Frontiers in Psychology, 4, 222. doi:10.3389/fpsyg.2013.00222

Corrigall, K. A., & Trainor, L. J. (2011). Associations between length of music training and reading skills in children. Music Perception, 29, 147–155. doi:10.1525/mp.2011.29.2.147

Degé, F., & Schwarzer, G. (2011). The effect of a music program on phonological awareness in preschoolers. Frontiers in Psychology, 2, 124. doi:10.3389/fpsyg.2011.00124

Flaugnacco, E., Lopez, L., Terribili, C., Montico, M., Zoia, S., & Schön, D. (2015). Music training increases phonological awareness and reading skills in developmental dyslexia: A randomized control trial. PLoS ONE, 10(9), e0138715. doi:10.1371/journal.pone.0138715

François, C., Chobert, J., Besson, M., & Schön, D. (2013). Music training for the development of speech segmentation. Cerebral Cortex, 23, 2038–2043. doi:10.1093/cercor/bhs180

Gordon, R. L., Shivers, C. M., Wieland, E. A., Kotz, S. A., Yoder, P. J., & McAuley, J. D. (2015). Musical rhythm discrimination explains individual differences in grammar skills in children. Developmental Science, 18, 635–644. doi:10.1111/desc.12230

Goswami, U. (2012). Language, music, and children’s brains: A rhythmic timing perspective on language and music as cognitive systems. In P. Rebuschat, M. Rohrmeier, J. A. Hawkins, & I. Cross (Eds.), Language and music as cognitive systems (pp. 292–301). Oxford: Oxford University Press.

Grube, M., Cooper, F. E., & Griffiths, T. D. (2013). Auditory temporal-regularity processing correlates with language and literacy skill in early adulthood. Cognitive Neuroscience, 4, 225–230. doi:10.1080/17588928.2013.825236

Grube, M., Kumar, S., Cooper, F. E., Turton, S., & Griffiths, T. D. (2012). Auditory sequence analysis and phonological skill. Proceedings of the Royal Society of London B: Biological Sciences, 279, 4496–4504. doi:10.1098/rspb.2012.1817

Hambrick, D. Z., Ullén, F., & Mosing, M. (2016). Is innate talent a myth? Scientific American.

Huss, M., Verney, J. P., Fosker, T., Mead, N., & Goswami, U. (2011). Music, rhythm, rise time perception and developmental dyslexia: Perception of musical meter predicts reading and phonology. Cortex, 47, 674–689. doi:10.1016/j.cortex.2010.07.010

Kempe, V., Bublitz, D., & Brooks, P. J. (2015). Musical ability and non‐native speech‐sound processing are linked through sensitivity to pitch and spectral information. British Journal of Psychology, 106, 349–366. doi:10.1111/bjop.12092

Kraus, N., & Chandrasekaran, B. (2010). Music training for the development of auditory skills. Nature Reviews Neuroscience, 11, 599–605. doi:10.1038/nrn2882

Moreno, S., Marques, C., Santos, A., Santos, M., Castro, S. L., & Besson, M. (2009). Musical training influences linguistic abilities in 8-year-old children: More evidence for brain plasticity. Cerebral Cortex, 19, 712–723. doi:10.1093/cercor/bhn120

Moritz, C., Yampolsky, S., Papadelis, G., Thomson, J., & Wolf, M. (2013). Links between early rhythm skills, musical training, and phonological awareness. Reading and Writing, 26, 739–769. doi:10.1007/s11145-012-9389-0

Overy, K., Nicolson, R. I., Fawcett, A. J., & Clarke, E. F. (2003). Dyslexia and music: Measuring musical timing skills. Dyslexia, 9, 18–36. doi:10.1002/dys.233

Patel, A. D. (2011). Why would musical training benefit the neural encoding of speech? The OPERA hypothesis. Frontiers in Psychology, 2, 142. doi:10.3389/fpsyg.2011.00142

Peretz, I., & Coltheart, M. (2003). Modularity of music processing. Nature Neuroscience, 6, 688–691. doi:10.1038/nn1083

Posedel, J., Emery, L., Souza, B., & Fountain, C. (2012). Pitch perception, working memory, and second language phonological production. Psychology of Music, 40, 508–517. doi:10.1177/0305735611415145

Ruggles, D. R., Freyman, R. L., & Oxenham, A. J. (2014). Influence of musical training on understanding voiced and whispered speech in noise. PLoS ONE, 9(1), e86980. doi:10.1371/journal.pone.0086980

Schellenberg, E. G. (2006). Long-term positive associations between music lessons and IQ. Journal of Educational Psychology, 98, 457–468. doi:10.1037/0022-0663.98.2.457

Schellenberg, E. G. (2015). Music training and speech perception: A gene–environment interaction. Annals of the New York Academy of Sciences, 1337, 170–177. doi:10.1111/nyas.12627

Schellenberg, E. G., & Weiss, M. W. (2013). Music and cognitive abilities. In D. Deutsch (Ed.), The psychology of music (3rd ed., pp. 499–550). Amsterdam: Elsevier.

Skoe, E., & Kraus, N. (2012). A little goes a long way: How the adult brain is shaped by musical training in childhood. Journal of Neuroscience, 32, 11507–11510. doi:10.1523/JNEUROSCI.1949-12.2012

Slevc, L. R., & Miyake, A. (2006). Individual differences in second-language proficiency: Does musical ability matter? Psychological Science, 17, 675–681. doi:10.1111/j.1467-9280.2006.01765.x

Strait, D. L., & Kraus, N. (2011). Can you hear me now? Musical training shapes functional brain networks for selective auditory attention and hearing speech in noise. Frontiers in Psychology, 2, 113. doi:10.3389/fpsyg.2011.00113

Swaminathan, J., Mason, C. R., Streeter, T. M., Best, V., Kidd, G., Jr., & Patel, A. D. (2015). Musical training, individual differences and the cocktail party problem. Scientific Reports, 5, 11628. doi:10.1038/srep11628

Swaminathan, S., & Gopinath, J. K. (2013). Music training and second-language English comprehension and vocabulary skills in Indian children. Psychological Studies, 58, 164–170. doi:10.1007/s12646-013-0180-3

Tallal, P., & Gaab, N. (2006). Dynamic auditory processing, musical experience and language development. Trends in Neurosciences, 29, 382–390. doi:10.1016/j.tins.2006.06.003

Thomson, J. M., Leong, V., & Goswami, U. (2013). Auditory processing interventions and developmental dyslexia: A comparison of phonemic and rhythmic approaches. Reading and Writing, 26, 139–161. doi:10.1007/s11145-012-9359-6

Tierney, A. T., & Kraus, N. (2013). The ability to tap to a beat relates to cognitive, linguistic, and perceptual skills. Brain and Language, 124, 225–231. doi:10.1016/j.bandl.2012.12.014

Wallentin, M., Nielsen, A. H., Friis-Olivarius, M., Vuust, C., & Vuust, P. (2010). The musical ear test, a new reliable test for measuring musical competence. Learning and Individual Differences, 20, 188–196. doi:10.1016/j.lindif.2010.02.004

Werker, J. F., & Tees, R. C. (1984). Phonemic and phonetic factors in adult cross-language speech perception. Journal of the Acoustical Society of America, 75, 1866–1878.

Yang, H., Ma, W., Gong, D., Hu, J., & Yao, D. (2014). A longitudinal study on children’s music training experience and academic development. Scientific Reports, 4, 5854. doi:10.1038/srep05854

Zuk, J., Ozernov-Palchik, O., Kim, H., Lakshminarayanan, K., Gabrieli, J. D., Tallal, P., & Gaab, N. (2013). Enhanced syllable discrimination thresholds in musicians. PLoS ONE, 8(12), e80546. doi:10.1371/journal.pone.0080546

Acknowledgements

We thank Catherine Best for providing us with Zulu speech tokens and Sarah Selvadurai for assistance with data collection. Funded by the Natural Sciences and Engineering Research Council of Canada.

Electronic supplementary material

ESM 1

(PDF 80.1 kb)

Cite this article

Swaminathan, S., Schellenberg, E.G. Musical competence and phoneme perception in a foreign language. Psychon Bull Rev 24, 1929–1934 (2017). https://doi.org/10.3758/s13423-017-1244-5

DOI: https://doi.org/10.3758/s13423-017-1244-5