Abstract

People often indicate a higher price for an object when they own it (i.e., as sellers) than when they do not (i.e., as buyers)—a phenomenon known as the endowment effect. We develop a cognitive modeling approach to formalize, disentangle, and compare alternative psychological accounts (e.g., loss aversion, loss attention, strategic misrepresentation) of such buyer-seller differences in pricing decisions of monetary lotteries. To also be able to test possible buyer-seller differences in memory and learning, we study pricing decisions from experience, obtained with the sampling paradigm, where people learn about a lottery’s payoff distribution from sequential sampling. We first formalize different accounts as models within three computational frameworks (reinforcement learning, instance-based learning theory, and cumulative prospect theory), and then fit the models to empirical selling and buying prices. In Study 1 (a reanalysis of published data with hypothetical decisions), models assuming buyer-seller differences in response bias (implementing a strategic-misrepresentation account) performed best; models assuming buyer-seller differences in choice sensitivity or memory (implementing a loss-attention account) generally fared worst. In a new experiment involving incentivized decisions (Study 2), models assuming buyer-seller differences in both outcome sensitivity (as proposed by a loss-aversion account) and response bias performed best. In both Study 1 and 2, the models implemented in cumulative prospect theory performed best. Model recovery studies validated our cognitive modeling approach, showing that the models can be distinguished rather well. In summary, our analysis supports a loss-aversion account of the endowment effect, but also reveals a substantial contribution of simple response bias.

Similar content being viewed by others

The minimum price at which people are willing to accept to sell an object they own is often higher than the maximum price they are willing to pay for the same object when they do not own it. This systematic difference between buying and selling prices—which can also occur within the same person when taking different perspectives—is known as the endowment effect (Kahneman, Knetsch, & Thaler, 1990, 1991; Thaler, 1980; see also Birnbaum & Stegner, 1979). The endowment effect has attracted considerable attention because it contradicts a central tenet of economic theory: According to the Coase theorem, the value of an object should be independent of its initial ownership (Kahneman et al., 1990).

There have been several proposals as to which cognitive mechanisms give rise to the endowment effect, or how buyers’ and sellers’ cognitive mechanisms might differ generally.Footnote 1 For instance, there may be asymmetries between buyers and sellers in terms of the order in which they search for positive and negative aspects of an object (e.g., G. J. Johnson & Busemeyer, 2005; E. J. Johnson, Häubl, & Keinan, 2007; Pachur & Scheibehenne, 2012; see also Morewedge & Giblin, 2015). Most proposals, however, have focused on potential buyer–seller differences in how the information acquired is subsequently processed. The arguably most prominent account of this type is loss aversion (Kahneman et al., 1990), which posits that buyers and sellers differ in their sensitivity to the magnitude of an object’s potential consequences (see below for details). Further, there is evidence in valuations of risky options that buyers and sellers differ in probability weighting (e.g., Brenner, Griffin, & Koehler, 2012), and some authors have highlighted the role of strategic misrepresentation (e.g., Heifetz & Segev, 2004; Isoni, Loomes, & Sugden, 2011; Plott & Zeiler, 2005, 2007). Finally, Yechiam and Hochman (2013a) have recently proposed a loss-attention account, according to which buyers and sellers may differ in the amount of attention they invest in a task. The latter suggests that there might also be buyer–seller differences in learning and memory, which could explain why selling prices are often closer to a normative benchmark than buying prices (Yechiam, Abofol, & Pachur, in press; Yechiam, Ashby, & Pachur, in press).

Although empirical support has been garnered for each of these proposals, it is currently unclear how they fare against each other, or how well their predictions can actually be told apart. Here, we propose a cognitive modeling approach to formalize, disentangle, and directly compare alternative accounts of buyer–seller differences in pricing decisions of monetary lotteries. Cognitive modeling is becoming increasingly popular in cognitive science (Busemeyer & Diederich, 2010; Lewandowsky & Farrell, 2010), but we know of no comprehensive and quantitative model comparison of the mechanisms proposed to underlie buying and selling prices.

To be able to test the interesting possibility suggested by Yechiam and Hochman’s (2013a) loss-attention account that buyers and sellers differ in learning and memory processes, we investigate buying and selling prices as decisions from experience (e.g., Hertwig & Erev, 2009). Specifically, we use the sampling paradigm, in which people are asked to evaluate monetary lotteries whose payoff distributions are initially unknown, but one can freely sample from them by clicking on a button that realizes a draw from the respective distribution.Footnote 2 The magnitude and relative frequency of the experienced outcomes can then be used to construct a subjective valuation. If, as proposed by Yechiam and Hochman (2013a), sellers pay more attention to the task than buyers do, they may differ in the learning process—a possibility that has not been tested previously. The sampling paradigm—and decisions from experience in general—are increasingly employed to examine risky choice, in particular because it lays open the underlying search and learning processes (e.g., Newell & Camilleri, 2011; Hertwig, 2015; Hills & Hertwig, 2010; Lejarraga, Hertwig, & Gonzalez, 2012; Rakow, Demes, & Newell, 2008; Rakow & Newell, 2010). Decisions from experience are also increasingly being used to study pricing decisions for individual objects (e.g., Ashby & Rakow, 2014; Golan & Ert, 2015).

For greater generality of our model comparison and because none of the currently existing formal frameworks can accommodate all of the accounts of buyer–seller differences mentioned above, we implemented the accounts in three computational frameworks: reinforcement learning (e.g., Sutton & Barto, 1998; Yechiam & Busemeyer, 2005), instance-based learning theory (Gonzalez & Dutt, 2011), and cumulative prospect theory (Tversky & Kahneman, 1992). One important difference between the frameworks is that whereas reinforcement learning models and instance-based learning theory can also model how an option’s attractiveness is sequentially learned from experience, cumulative prospect theory has been originally developed for situations in which information about the outcomes and probabilities of lotteries are summarized to participants. This is not problematic here, however, because—as in other applications of cumulative prospect theory to decisions from experience (e.g., Abdellaoui, L’Haridon, & Paraschiv, 2011; Jarvstad, Hahn, Rushton, & Warren, 2013; Kellen, Pachur, & Hertwig, 2016; Lejarraga, Pachur, Frey, & Hertwig, 2016; Ungemach, Chater, & Stewart, 2009)—we only model the valuation at the end of the sampling process. Nevertheless, it should be noted that when applying cumulative prospect theory to decisions from experience, we assume that people have an accurate sense of the relative frequencies of the outcomes they experience (which are then used as probabilities in the modeling); this seems a plausible assumption (e.g., Hasher & Chromiak, 1977; Underwood, Zimmerman, & Freund, 1971; Ungemach et al., 2009). The comparison of reinforcement learning and instance-based learning theory with cumulative prospect theory allows us to test whether processes specific to experiential learning (e.g., recency, memory decay) are necessary to capture buyer–seller discrepancies in pricing decisions from experience. Overall, our quantitative model comparison provides insights into which minimal assumptions about buyer–seller differences in mechanisms are necessary to capture the endowment effect in the context of monetary lotteries.

Morewedge and Giblin (2015) recently proposed an attribute sampling bias theory, which attempts to integrate various manifestations of buyer–seller differences in valuation. In a nutshell, it is argued that ownership influences the accessibility of frame-consistent attributes by biasing search, attention, and recollection akin to confirmatory hypothesis testing. Our cognitive modeling approach complements Morewedge and Giblin’s proposal. Specifically, the focus of our article is to model information processing subsequent to information search, whereas Morewedge and Giblin are more concerned with processes that include information search.

In what follows, we first describe the candidate accounts of buyer–seller discrepancies tested in more detail; we then formalize these accounts as model variants within the three computational frameworks. In Study 1, we test the models by reanalyzing empirical data available from an experiment by Pachur and Scheibehenne (2012), in which participants made hypothetical pricing decisions in an experiential sampling paradigm. In Study 2, we draw on data from a new experiment, in which participants’ pricing decisions were incentivized. Finally, we present model recovery analyses that examine how well the best performing models in Studies 1 and 2 can be told apart. We conclude by discussing implications of the proposed cognitive modeling approach and the findings obtained for research on the endowment effect and valuation from experience.

As noted above, we examine the endowment effect in the context of monetary lotteries. Although the seminal investigations on the endowment effect (e.g., Kahneman et al., 1990; Thaler, 1980) involved the valuation of consumer items such as mugs and pens, many subsequent studies have turned to monetary lotteries and found a robust endowment effect there as well (e.g., Birnbaum, Coffey, Mellers, & Weiss, 1992; Birnbaum & Zimmerman, 1998; Brenner et al., 2012; Casey, 1995; Isoni et al., 2011; Kachelmeier & Shehata, 1992; Peters, Slovic, & Gregory, 2003; for a meta-analysis, see Yechiam, Ashby, & Pachur, in press). In contrast to consumer items, an advantage of lotteries is that their attributes can be quantified precisely (which is important for the modeling) and that they also allow to test for buyer–seller differences in risk attitude. Yet, the precise nature of lotteries, whose outcomes can be evaluated and retrieved rather easily, might reduce the influence of subjective factors in the valuation. This could explain why the endowment effect for monetary lotteries, while substantial, seems to be smaller than for public, safety, or private goods (Horowitz & McConnell, 2002).

Accounts of buyer–seller differences in valuation

From several accounts (e.g., loss aversion, loss attention, strategic misrepresentation) of discrepancies between buying and selling prices, in the following we derive specific hypotheses on how buyers and sellers might differ in various aspects of the cognitive processing (e.g., outcome sensitivity, choice sensitivity, learning, probability sensitivity, response bias) when they price monetary lotteries. We first provide a conceptual introduction to the various accounts; we then formalize the derived mechanisms using the computational frameworks of reinforcement learning, instance-based learning theory, and cumulative prospect theory. Figure 1 provides a summary overview of the accounts, in which differences between buyers and sellers in cognitive mechanism are hypothesized by the accounts and the computational frameworks in which we implement these hypotheses.

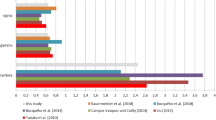

Overview of different theoretical accounts of buyer–seller differences in valuation, the differences in cognitive mechanism between buyers and sellers that can be derived from the accounts, and in which computational frameworks we implemented the hypothesized cognitive mechanisms. RL = reinforcement learning; CPT = cumulative prospect theory; IBL = instance-based learning theory

Loss aversion

In their original studies on the endowment effect, Kahneman and colleagues (1990) proposed that “[t]his effect is a manifestation of ‘loss aversion,’ the generalization that losses are weighted substantially more than objectively commensurate gains in the evaluation of prospects and trades” (pp. 1327–1328; see also Thaler, 1980). In other words, it is assumed that being endowed with an object shifts the reference point such that having the object is the status quo and selling it is viewed as a potential loss. As losses have an aversive effect that the decision maker strives to avoid, this affects the processing of outcome information.

Loss aversion is accommodated in the value function, which translates objective outcomes into subjective values (Kahneman & Tversky, 1979). Specifically, the function has a steeper curvature for losses than for gains, meaning that increases in outcomes have a greater impact on subjective values in the loss domain than in the gain domain. The decision maker’s outcome sensitivity is consequently higher for losses than for gains. According to the loss-aversion account of buyer–seller differences in pricing, sellers should have higher outcome sensitivity than buyers.

Loss attention

Yechiam and Hochman (2013a) argued for a different view of the effect of losses: Rather than (exclusively) triggering avoidance, losses are proposed to “increase the overall attention allocated to the situation” (pp. 213–214), which can lead to better performance. In support of their attentional model, Yechiam and Hochman (2013b) reported a study in which the option with the higher expected value was chosen more frequently when it also had a chance of leading to a (small) loss (e.g., win $200 with a chance of 50%, otherwise lose $1) than when not (e.g., win $200 with a chance of 50%, otherwise win $1). The authors proposed that an attentional effect might also contribute to buyer–seller differences in pricing decisions (Yechiam & Hochman, 2013a, p. 502): If sellers frame the possible outcomes of the object as losses (Kahneman et al., 1990), they may invest more attention in the task and thus show better performance. Consistent with this prediction, Yechiam, Abofol, and Pachur (in press) found that selling prices for lotteries are often more closely aligned with the lotteries’ expected values (i.e., their normative prices) than are buying prices (see also Manson & Levy, 2015; Yechiam, Ashby, & Pachur, in press). How might increased attention affect the cognitive processing specifically? Here we consider two aspects: (a) increased choice sensitivity and (b) better learning and memory. Although it may not be immediately apparent how better processing (of sellers) can also lead to systematically higher valuations (i.e., the endowment effect), later in this article (see General Discussion and Appendix F) we show that because of an asymmetric regression effect, under risk seeking (i.e., a convex utility function) higher choice sensitivity (expected for sellers) can indeed lead to systematically higher valuations than lower choice sensitivity (expected for buyers); further, it is shown that when attractive outcomes have a relatively high probability, also both higher learning rate and better memory of sellers can lead to an endowment effect.

Choice sensitivity

Yechiam and Hochman (2013a) proposed that the enhanced task attention triggered by the possibility of a loss increases the decision-maker’s sensitivity to their subjective valuation of an option when the valuation is translated into a response. As a consequence, the decision maker would be more consistent in choosing a response according to their subjective valuation and pick the corresponding response more deterministically. Ceteris paribus, and unless the valuation itself is highly inaccurate, the response will be a more accurate reflection of the experiences made by the decision maker (rather than reflecting noise) and thus lead to better performance. This sensitivity is referred to as choice sensitivity. Fitting a reinforcement learning model with a choice sensitivity parameter to experience-based decisions, Yechiam and Hochman (2013b) indeed obtained a higher estimate for this parameter in a condition with losses than in a condition with gains. In the context of pricing decisions by buyers and sellers (where decision makers choose among alternative pricing responses), choice sensitivity might thus be higher for the latter than for the former.

Learning and memory

Beyond higher choice sensitivity, enhanced attention when faced with potential losses may affect cognitive processing more generally. In the context of a learning process, losses may influence how strongly the decision maker adjusts the expected reward of an option upon receiving feedback. In support of this possibility, Yechiam and Hochman (2013b) found faster improvement and a higher learning rate parameter (in addition to higher choice sensitivity) in a condition with losses than in a condition with gains. If sellers frame the outcomes of a traded option as losses (increasing their attention to the task), they might thus have a higher learning rate than buyers.

An attentional effect of loss attention during learning might also lead sellers to encode the experienced outcomes more deeply, affecting how quickly the memory traces of those outcomes are forgotten. As a consequence, sellers might show lower memory decay than buyers. Further, increased attention by sellers could also lead to less noise during the retrieval of experienced outcomes. Implementing buyer–seller differences in these mechanisms in reinforcement learning models and instance-based learning theory, we can formalize and test the possibilities.

Probability weighting

Some studies have suggested that buyers and sellers differ in how they respond to probability information. For instance, Brenner and colleagues (2012; see also Blondel & Levy-Garboua, 2008) found larger overweighting of small-probability events by sellers than by buyers. Casey (1995) fitted probability weighting functions on the individual level to buying and selling prices for monetary lotteries, and similarly obtained more strongly curved functions for sellers than for buyers. (When the functions were fitted to responses on the aggregate level, there were no buyer–seller differences.)

Strategic misrepresentation

Finally, it has been proposed that buyer–seller discrepancies in pricing might result, at least in part, from strategic misrepresentation of their actual valuations. For instance, Plott and Zeiler (2005) proposed that “the use of the word ‘sell’ can automatically call forth a margin above the minimum that an individual might accept in exchange for a good” (p. 537). The idea is that people might overgeneralize the use of a “sell high, buy low” strategy (or “strategic heuristic”), that might be beneficial during negotiation in naturalistic trading contexts, to situations in the lab (Korobkin, 2003; see also Knez, Smith, & Williams, 1985). From this perspective, the endowment effect arises from a tendency of seller and buyers to inflate or deflate, respectively, their actual valuation of the object. One straightforward way to formalize such distortion is in terms of a simple additive response bias. Birnbaum and Stegner (1979) found that a model that captured buyer–seller differences in terms of a bias parameter “provided a good account of this change of weight across points of view” (i.e., buyer vs. seller; p. 71). Response bias parameters have often been included in formal psychological models (e.g., Green & Swets, 1966; Ratcliff, 1978) and are important for disentangling effects of processing factors from those of strategic influences (e.g., Jones, Moore, Shub, & Amitay, 2015). In the context of the endowment effect, the role of strategic effects has mainly been addressed experimentally, but to our knowledge there have been no attempts to actually measure the size of response bias and compare its contribution to other possible mechanisms of buyer–seller differences.

Overview of the models

We implemented the candidate mechanisms derived from the accounts as formal, mathematical models within three prominent computational frameworks: reinforcement learning, instance-based learning theory, and cumulative prospect theory (see Fig. 1). Models of reinforcement learning have proven highly successful in modeling experience-based decision making (e.g., Busemeyer & Myung, 1992; Steingroever, Wetzels, & Wagenmakers, 2014; Yechiam & Busemeyer, 2005). Instance-based learning theory (Gonzalez & Dutt, 2011) models decisions from experience based on processes of memory retrieval and decay. Cumulative prospect theory (Tversky & Kahneman, 1992) is arguably the most prominent account of decision making under risk and has also been used to describe experience-based decision making (Abdellaoui et al., 2011; Glöckner, Hilbig, Henninger, & Fiedler, 2016; Jarvstad et al., 2013; Jessup, Bishara, & Busemeyer, 2008; Kellen et al., 2016; Lejarraga et al., 2016; Ungemach et al., 2009). It allows us to study probability weighting (i.e., how probability information is represented in decisions), which is an important concept in the context of risky decision making (e.g., Wakker, 2010) and, as mentioned above, has been proposed as contributing to buyer–seller differences in valuation.

For each of these computational frameworks, we formulate different model variants, each of which implements one (or combinations) of the candidate mechanisms. In each model variant, one (or several) of the parameters is estimated separately for sellers (i.e., willing to accept condition; WTA) and buyers (i.e., willing to pay condition; WTP), while the other parameters are estimated for buyers and sellers together. This approach allows us to evaluate how well the model variants—and thus the mechanisms of buyer–seller differences in pricing decisions that they implement—are able to capture empirical data.

Reinforcement learning

The general notion underlying reinforcement learning is that an expectancy of an option is gradually formed through repeated feedback. The expectancy represents the future reward to be expected from the option and is repeatedly updated as a function of experience (for an overview, see Gureckis & Love, 2015). This learning process is formalized in terms of three psychological components: a utility function, an updating rule, and a choice (or action-selection) rule (cf. Steingroever, Wetzels, & Wagenmakers, 2013a; Yechiam & Busemeyer, 2005).

Utility function

This function translates the objective value of an experienced outcome, x, into a subjective value or utility, u. As commonly assumed in models of risky decision making, we used a power utility function,

where α (0 < α) governs the curvature of the utility function; larger values of α indicate greater sensitivity to differences in the outcomes. When α = 1, the subjective utilities reflect the objective values linearly; with 0 < α < 1 the utility function is concave (for x > 0; convex for x < 0), indicating a reduced sensitivity to differences in the objective magnitudes. Here, we formally implement the prediction that sellers have higher outcome sensitivity than buyers (as proposed by the loss-aversion account) via the exponent parameter in the utility function. Accordingly, α should be higher for sellers than for buyers, αWTA > αWTP.

Note that differences in outcome sensitivity, which mean differences in the steepness of the curvature of the utility function, can be formalized in several ways. An alternative to allowing for differences in the exponent of the power function in Equation 1 would be to formalize a steeper utility function for losses by u(x) = –λ(–x α), with λ > 1 (Tversky & Kahneman, 1992). Both this and the approach adopted in Equation 1 similarly affect the steepness of the utility function, though in slightly different ways. An additional analysis, in which buyer–seller differences in outcome sensitivity were implemented by estimating a common α for buyers and sellers and estimating a λ for the seller condition (with λ > 1 indicating higher outcome sensitivity for sellers), showed a worse model fit than when estimating α separately for buyers and sellers (these analyses also clearly indicated that a nonlinear, concave utility function is necessary to capture the data).

Updating rule

After a subjective utility of the experienced outcome has been formed, the expectancy of the option is updated. Several updating rules have been proposed in the literature; none has emerged as being generally superior (e.g., Ahn, Busemeyer, Wagenmakers, & Stout, 2008; Steingroever et al., 2013a, 2013b; Yechiam & Busemeyer, 2005). We therefore considered three updating rules that have received support in studies on experience-based decision making. According to the delta rule (e.g., Busemeyer & Myung, 1992; Fridberg et al., 2010; Yechiam & Busemeyer, 2008), the expectancy of choosing the option at trial t (that is here: after t samples have been drawn) is determined by adjusting the expectancy at trial t – 1 based on the discrepancy between this previous expectancy and the utility of the outcome experienced at trial t:

where ϕ [0 ≤ ϕ ≤ 1] represents a learning rate parameter governing how strongly the expectancy is adjusted. If sellers learn faster than buyers (e.g., because they invest more cognitive resources in the task), one might expect a higher value for the former than for the latter, that is, ϕWTA > ϕWTP.

An alternative updating process is formulated by the value-updating rule (Ashby & Rakow, 2014; Hertwig, Barron, Weber, & Erev, 2006), according to which the expectancy of the option at trial t is determined as

As in the delta rule, the parameter ϕ [0 ≤ ϕ] governs the impact of previous experiences. With ϕ < 1 the rule yields recency (i.e., stronger impact of more recent experiences); with ϕ > 1 it yields primacy (i.e., stronger impact of less recent experiences). If, as proposed by Yechiam and Hochman (2013a), sellers pay more attention to the task than buyers, they might manifest lower recency, that is, ϕWTA should be closer to 1 than ϕWTP is.

Third, we tested the natural-mean rule, which has been found to provide a good description of decisions from experience (Ashby & Rakow, 2014; Hertwig & Pleskac, 2008; Wulff & Pachur, 2016). In contrast to the value-updating rule, the natural-mean rule weights all experiences equally and thus simply represents the average across all experienced outcomes of a lottery:

Note that the natural-mean rule is nested under the value-updating rule, with ϕ = 1. It thus can also serve to assess whether the added model flexibility achieved by having a parameter that allows past experiences to be differentially weighted is warranted. Note that as the natural-mean rule has no free parameter, it does not assume that sellers and buyers differ in the updating process.

Choice rule

Finally, a rule is required that translates the expectancy of a lottery into a pricing response. Acknowledging that decision making is probabilistic (e.g., Mosteller & Nogee, 1951), a popular approach to derive a response probability is a ratio-of-strength rule, according to which the response is a probabilistic function of the relative strength of a given response R i relative to all J possible responses (e.g., Luce, 1959):

where s i is the strength for response R i. In this article, the models are applied to a task where participants provided prices for each lottery by choosing one of 11 values on a response scale representing the range of the lottery’s possible outcomes divided into 10 equally spaced intervals (see Fig. 2). Applied to this setting, the response (or choice) rule determines the probability that scale value R i is chosen among the J scale values at trial t, given the lottery’s expectancy. The probability is a function of the distance between the scale value (e.g., 53 CHF) and the expectancy of the lottery,

The distance index, D i,t , at trial t follows an exponential decay function of the absolute difference between the scale value R i and the expectancy (see Shepard, 1958):

The parameter θ determines how strongly the predicted probability of choosing the respective scale value decreases with increasing difference between the expectancy and the scale value. The parameter θ thus effectively controls how sensitive the choice probability is to this difference (with higher values indicating higher sensitivity).Footnote 3 According to Yechiam and Hochman’s (2013a) loss-attention account, the choice sensitivity parameter should be higher for sellers than for buyers (i.e., θWTA > θWTP).

The β parameter in Equation 6 represents response bias (implementing the notion of strategic misrepresentation by way of a “buy low, sell high” strategy), an additive constant that increases or decreases (depending on the sign of β) the expectancy of a given lottery when it is translated into a choice of one of the scale values. Assuming that sellers inflate their subjective valuations of the lottery and buyers deflate their subjective valuations (e.g., Plott & Zeiler, 2005), the bias parameter should be higher for the former than for the latter (i.e., βWTA > βWTP).

Additional model variants tested

Because the buyer–seller discrepancies may be driven by more than one mechanism, we also tested model variants in which multiple parameters were estimated separately for the buyer and seller conditions. For instance, we tested a model variant allowing for separate parameters for buyers and sellers both in outcome sensitivity (i.e., αWTA and αWTP) and learning rate (i.e., ϕWTA and ϕWTP). Of these model variants, only those that allowed buyers and sellers to vary in terms of both outcome sensitivity (α) and response bias (β) achieved competitive model performance. We therefore report the results of only these models. To test whether the increased model flexibility achieved by allowing for a nonlinear utility function (see Equation 1) was matched by a corresponding increase in predictive power, we also tested model variants in which the utility function was restricted to be linear (i.e., α = 1; e.g., Busemeyer & Townsend, 1993). The performance of these variants was clearly inferior, and they were therefore not considered further.

The combination of the utility function and choice rule with the three updating rules resulted in three types of reinforcement learning models, which we refer to (based on the underlying updating rule) as the delta model, the value-updating model, and the natural-mean model. Table 1 summarizes the five variants of the delta model, the five variants of the value-updating model (VUM), and the four variants of the natural-mean model (NM) tested. These models assume buyer–seller differences in learning/memory (DELTAphi, VUMphi), outcome sensitivity (DELTAalpha, VUMalpha, NMalpha), choice sensitivity (DELTAtheta, VUMtheta, NMtheta), response bias (DELTAbeta, VUMbeta, NMbeta), or in both outcome sensitivity and response bias (DELTAalphabeta, VUMalphabeta, NMalphabeta).

Instance-based learning theory

An alternative framework for modeling decisions from experience was recently proposed by Gonzalez and Dutt (2011). In analyses of aggregate choice data, instance-based learning theory (IBL) outperformed reinforcement learning models (and other models) in describing decisions from experience. In IBL, the subjective valuation of a lottery is a function of instances stored in memory, which represent the experienced outcomes of the lottery. A valuation is constructed by “blending” (i.e., integrating) the instances; the blended value, B, at trial t depends on the outcomes experienced for the lottery as well as the probability of retrieving the corresponding instances from memory, and is defined as

where p k(t) is the retrieval probability of outcome x k at trial t.Footnote 4 The retrieval probability is in turn a function of the activation of the outcome in memory relative to the activation of all N other experienced outcomes of that lottery,

where τ is random noise defined as

σ is a free noise parameter, with higher values indicating more noise in the extent to which the retrieval follows the outcome’s activation strength. The activation of outcome x k at trial t is a function of the recency and frequency of retrieving relevant instances from memory, and is determined as follows:

The right-hand part of the equation represents Gaussian noise, with ρk,t being a random draw from a uniform distribution U(0, 1). d is a free decay parameter. With higher values of d, the decay of the instance’s activation is faster, and it is harder for the model to retrieve instances of outcomes that occurred many trials ago.

As for the reinforcement learning models, the predicted choice probabilities for the J values on the response scale (yielding a pricing decision) were determined on the basis of the difference between IBL’s valuation of a lottery (i.e., the blended value; see Equation 7) and each scale value (see Equation 6). Although IBL chooses deterministically, we followed the procedure proposed by Gonzalez and colleagues (e.g., Gonzalez & Dutt, 2011; Lejarraga, Dutt, & Gonzalez, 2012) to derive choice probabilities for the different scale values (given a particular set of parameter values). Specifically, we simulated 5,000 draws of random noise (see Equation 10) and determined for each scale value its distance to the resulting blended value of the lottery. At each draw, the scale value with the smallest distance was chosen deterministically as a pricing response for the lottery. Because the blended value varied due to the random noise component, this approach yielded a probability distribution across the different values on the response scale.

As shown in Table 1, we tested three model variants of IBL to implement different mechanisms of buyer–seller differences. The first model variant, IBLd, allows for differences between buyers and sellers in the decay parameter d. To the extent that sellers pay more attention during the task than buyers (as proposed by the loss-attention account) and thus encode experienced outcomes better, decay might be lower for sellers than for buyers (i.e., d WTA < d WTP). The second variant, IBLsigma, allows for differences in the noise parameter σ, governing the noise in the retrieval of instances of experienced outcomes. If sellers pay more attention than buyers, noise might be larger for the latter (i.e., σWTA < σWTP). Finally, IBLbeta implements the notion that sellers and buyers have different response biases, βWTA and βWTP, formalized as added or subtracted constants as in Equation 6—but with E(t) substituted with B(t)—that is, when the difference between the blended value and each scale value is calculated.

Cumulative prospect theory

Finally, we implemented candidate mechanisms of buyer–seller differences within the framework of cumulative prospect theory (CPT; Tversky & Kahneman, 1992). CPT has the same power function transforming objective outcomes into subjective values as the reinforcement learning model (see Equation 1); the loss-aversion account therefore predicts that the outcome sensitivity parameter is larger in the seller condition than in the buyer condition (i.e., αWTA > αWTP).

In addition, CPT assumes a probability weighting function that transforms the probability of an outcome into a subjective decision weight. For the present application of CPT, we assume that the decision maker extracts a probability for each outcome from the individually experienced relative frequency of the outcome and we use these experienced probabilities (which, due to sampling error, might deviate from the “objective” probabilities of the outcomes; see Appendix A) to model the valuations and estimate the probability weighting function. The decision weights in CPT result from a rank-dependent transformation of the (experienced) probabilities of the outcomes. With outcomes 0 < x 1 ≤ . . . < x m and corresponding probabilities p 1 . . . p m , the weights are defined as follows:

with w being the weighting function. For positive outcomes, the decision weight for an outcome represents the marginal contribution of the outcome’s probability to the total probability of obtaining a better outcome. The weighting function has an inverse S-shaped curvature, corresponding to an overweighting of low probability outcomes and underweighting of high probability outcomes. We used a two-parameter weighting function originally proposed by Goldstein and Einhorn (1987) (additional analyses showed that this functional form outperformed various other forms that have been proposed in the literature; for an overview see Stott, 2006). It separates the curvature of the probability weighting function from its elevation and is defined as follows:

with γ (>0) governing the curvature of the weighting function. Values of γ < 1 indicate a stronger inverse S-shaped curvature and lower sensitivity to probabilities (in the modeling analyses below, we also allow values of γ > 1, yielding an S-shaped curvature). The parameter δ (>0) governs the elevation of the weighting function and can be interpreted as the attractiveness of gambling and thus also as an indicator of a person’s risk attitude, with (in the gain domain) higher values on δ indicating higher risk seeking (see Qiu & Steiger, 2011).

By estimating the γ and δ parameters separately for buyers and sellers, we can test the possibility that the two trading perspectives are associated with differences in probability weighting. The results of Brenner and colleagues (2012) suggest that sellers might show lower probability sensitivity than buyers (i.e., γWTA < γWTP). Sellers could also be more risk seeking, as indicated by a higher elevation of the probability weighting function (i.e., δWTA > δWTP; see Brenner et al., 2012; Blondel & Lévy-Garboua, 2008).

According to CPT, the overall subjective valuation V of a lottery is given by:

where v(x k) is the value function representing the subjective value of outcome x k of the lottery. We used the same power function as in the reinforcement learning framework (see Equation 1). As for the reinforcement models, the predicted choice probability for each value on the response scale was derived from the subjective valuation. That is, the choice probability is a function of the absolute difference between the scale value and the predicted subjective value—see Equations 5b and 6; E(t) in Equation 6 is substituted with V. Table 1 summarizes the six model variants of CPT tested, allowing for buyer–seller differences in outcome sensitivity (CPTalpha), probability sensitivity (CPTgamma), elevation (CPTdelta), choice sensitivity (CPTtheta), response bias (CPTbeta), or in both outcome sensitivity and response bias (CPTalphabeta).

To compare the candidate mechanisms for buyer–seller differences in pricing decisions from experience, we next fitted the variants of the reinforcement learning models, IBL, and CPT to the experimental data obtained by Pachur and Scheibehenne (2012; experience condition) and evaluated their performance in capturing the data, taking into account the differences in model complexity between the different model variants.

Study 1: How well do the models capture buyer–seller discrepancies?

In the experiment by Pachur and Scheibehenne (2012), participants (N = 76) provided valuations for 30 monetary lotteries from both a seller perspective (WTA condition) and a buyer perspective (WTP condition; see Appendix A for a complete list of the lotteries). In the WTA condition, participants were asked to imagine that they owned the right to play a lottery and to indicate the minimum amount of money they would accept to sell that right. To indicate a price, participants chose one of 11 values on a response scale, spanning the range of the lottery’s possible outcomes in 10 equally spaced intervals (see Fig. 2). In the WTP condition, participants were asked to imagine that they had the chance to buy the right to play a lottery and to indicate the maximum amount they would be willing to pay. The two conditions were presented as separate blocks, the order of which was counterbalanced across participants and within each condition, and the 30 lotteries were presented in random order. Participants were not told in advance that the two blocks contained the same lotteries. At each trial, a lottery was presented as an initially unknown payoff distribution. Participants learned about its possible outcomes by clicking a button on the computer screen to make a random draw from the underlying payoff distribution. They could draw as many samples as they wanted (but had to draw at least one) before stating a buying or selling price (the mean sample size per lottery was 23.1, SD = 13.5). The valuations of the lotteries were hypothetical. Figure 3 (left panel) shows the average selling and buying prices obtained by Pachur and Scheibehenne (2012) for each lottery as a function of the lottery’s expected value. As can be seen, the selling prices were consistently higher than the buying prices. Moreover, the selling prices were more closely aligned with the lotteries’ expected values. How do the different formal models reflect these differences between buyers and sellers?

Parameter estimation and model evaluation

On the basis of each participant’s sampled outcomes and pricing responses, we estimated the model parameters using a maximum likelihood approach (see Appendix B).Footnote 5 (This also means that CPT’s value and weighting functions were estimated based on the experienced outcomes and probabilities.) Given that the buyer and seller perspectives were manipulated using a within-subjects design, we fitted all models on the level of the individual participant. The parameter estimation was based on a combination of a grid search and subsequent optimization using the simplex method (Nelder & Mead, 1965), with the 20 best fitting value combinations emerging from the grid search as starting points for simplex.Footnote 6 Given that the lotteries differed considerably in both the magnitudes and the range of their outcomes (see Appendix A), the modeling was based on the pricing responses and sampled outcomes mapped on the 11-point response scale for each lottery. For instance, the outcome “50 CHF” in lottery 20 (Appendix A) was mapped as “8.11” on the 11-point scale. Using absolute values would lead to lotteries with a larger outcome range contributing more to the (mis)fit of the model than lotteries with a lower outcome range. Additional analyses also showed that using absolute values led to a considerably worse fit of the models.

To evaluate the performance of the models while taking into account differences in model complexity, we computed for each model, separately for each participant, the Akaike information criterion (AIC; e.g., Akaike, 1973; Burnham & Anderson, 2002), which penalizes models with more free parameters, as well as the AIC model weights (e.g., Wagenmakers & Farrell, 2004). A more detailed description can be found in Appendix C. We also calculated the Bayesian information criterion (BIC; Burnham & Anderson, 2002; Schwarz, 1978) and the BIC model weights, which are reported in Appendix D. Because our model recovery analysis (see below) showed that BIC could lead to a substantial misclassification of the underlying mechanism, however, our model comparison focuses on the AIC values.

Results

Model performance.

Which of the tested model variants strikes the best balance between model complexity and model fit in accounting for the data? Table 2 shows that the best performing model overall (in terms of having the lowest AIC summed across all participants) is CPTbeta, the variant of cumulative prospect theory assuming buyer–seller differences in response bias, closely followed by CPTalphabeta, the variant that additionally allows for differences in outcome sensitivity. CPTbeta receives the strongest support also on the individual level, with the highest average AIC weight and the highest number of participants for whom the model showed the best fit (although here it is closely followed by CPTdelta, the variant allowing for differences in the elevation of the probability weighting function). Interestingly, these results thus show that a successful description of pricing decisions from experience does not necessarily require the assumption of a learning or forgetting mechanism—which is part of the variants of the reinforcement learning model and instance-based learning theory. With the exception of VUMalphabeta and NM alphabeta, none of these models showed competitive performance. Nevertheless, it is of note that within each model class, it is consistently the models assuming buyer–seller differences in response bias, often in combination with buyer–seller differences in outcome sensitivity, that perform best.

As a test of absolute model fit, Fig. 4 (left panel) plots the predictions of CPTbeta against the observed data. The figure shows the average (across participants) predicted valuation responses (based on the best fitting parameters) as a function of the average observed buying and selling prices, separately for each of the 30 lotteries (expressed on the 11-point response scale). The predicted responses were determined by summing across the response categories, each weighted by the predicted choice probability (see Equation 5b). As can be seen, the model does a good job of capturing the data (on average), as indicated by the fact that the predictions line up rather closely around the diagonal. Note that the endowment effect is reflected by the pattern that selling prices (black dots) are generally higher than buying prices (gray triangles). Figure 4 further shows that the model variant assuming buyer–seller differences in response bias (CPTbeta) reproduces the data somewhat better than the one assuming differences in outcome sensitivity (CPTalpha; middle panel). Finally, the rightmost panel shows that the model assuming differences in choice sensitivity (CPTtheta) yields a worse fit (note that random choice would be indicated by a horizontal line).

Empirical selling prices (WTA) and buying prices (WTP) plotted against model predictions (based on the best-fitting parameters) in Study 1. Shown are the average (across participants) prices and model predictions for each lottery, separately for the WTA (black dots) and WTP (gray triangles) condition

Parameter estimates.

The median estimated parameters of the tested model variants are reported in Table 3 (Table 11 in Appendix D reports the proportions of participants for whom the parameter value was higher for the seller, WTA, than for the buyer, WTP, conditions). Of particular interest are the estimates for those parameters that were estimated separately for the WTA and WTP conditions. According to the two best performing models, CPTbeta and CPTalphabeta, there are buyer–seller differences on response bias such that sellers inflate and buyers deflate the subjective valuation derived from the experienced outcomes. In addition, CPTalphabeta indicates that, consistent with a loss-aversion account, sellers have a higher outcome sensitivity than buyers.

Summary

Application of the formal models to empirical data reported by Pachur and Scheibehenne (2012) yielded two important insights: First, model variants of cumulative prospect theory generally achieved the best performance. This suggests that a successful description of pricing decisions from experience may not necessarily require the assumption of learning or memory mechanisms. Second—across computational frameworks and across aggregate and individual levels—models assuming buyer–seller differences in response bias seemed to strike the best balance between model fit and parsimony, though they were closely followed by the variant additionally allowing for differences in outcome sensitivity. Models implementing loss attention obtained the least support.

Study 2: Modeling incentivized buying and selling prices

According to the best performing model in Study 1, buyers and sellers hardly differed in their subjective value of experienced outcomes. This finding is inconsistent with the prominent loss-aversion account of the endowment effect, which predicts that sellers have higher outcome sensitivity than buyers (Kahneman et al., 1991). Instead, our results indicate that buyers and sellers differ in how they translate their subjective valuation into a behavioral response. This seems to support Plott and Zeiler’s (2005) argument that discrepancies in buying and selling prices may often reflect strategic misrepresentations, with people inappropriately using a “buy low, sell high heuristic” rather than stating their actual subjective valuations (for a critical discussion, see Isoni et al., 2011). However, in Study 1, where the pricing decisions were not incentivized, such deviations did not have actual monetary consequences; this may have amplified strategic misrepresentation and clouded buyer–seller differences in information processing (e.g., outcome sensitivity). To test this conjecture, we conducted a replication of Study 1, but using the Becker-DeGroot-Marschak (BDM) procedure (Becker, DeGroot, & Marschak, 1964) to incentivize participants to reveal their actual valuations of the lotteries. Would response biases recede under these conditions?

Method

Participants.

Eighty students (43 female; M = 23.7 years, SD = 3.5) participated in the experiment, which was conducted at the Max Planck Institute for Human Development in Berlin, Germany. Participants received €5 compensation plus an additional performance-contingent payment (determined based on the BDM method) ranging from –€0.03 to €4.25 (M = €0.67, SD = 1.22).

Material, design, and procedure.

We used the same 30 lotteries, design, and procedure as in Study 1, except that responses were incentivized with the BDM method (see Appendix E for the detailed instructions). Participants took between 20 and 35 minutes to complete the experiment.

Results

Replicating Pachur and Scheibehenne (2012), the number of draws participants took before making a pricing decision did not differ between the WTA and WTP conditions, Ms = 26.1 (SD = 12.9) versus 25.8 (SD = 11.0), t(79) = 0.31, p = .76. Figure 3 (right panel) shows the average selling and buying prices, expressed on the 11-point response scale and separately for the 30 lotteries (the pricing decisions expressed on the monetary scales are reported in Appendix A). As can be seen, selling prices substantially exceeded the buying prices, Ms = 6.14 (SD =1.47) versus 4.53 (SD = 1.55), t(79) = 9.67, p = .001, indicating that the endowment effect also emerged under incentive compatibility. As Fig. 3 shows, the discrepancy between selling and buying prices was slightly smaller than in Study 1, with an effect size (point-biserial correlation; Rosenthal, Rosnow, & Rubin, 2000) of r = .74, relative to r = .85 in Study 1.Footnote 7

We used the same procedure as in Study 1 to fit the models to the data. How well did the models perform? As shown in Table 4, NMalphabeta, CPTalphabeta, and VUMalphabeta, which allow for buyer–seller differences in both outcome sensitivity and response bias, now performed best, closely followed by CPTbeta, which only allows for buyer–seller differences in response bias. CPTalphabeta also performed best on the individual level, with the highest average AIC weight and the highest number of participants for whom the model showed the best fit. Given that, as shown in Table 5, the parameter estimates of NMalphabeta and CPTalphabeta indicated no (or very small) systematic differences between buyers and sellers in outcome sensitivity (e.g., for NMalphabeta the median estimates were αWTA = .893 and αWTP = .866, respectively) but a substantial difference in response bias, the contribution of buyer–seller differences in response bias may nevertheless be larger than the one in outcome sensitivity. These results suggest that the endowment effect may be driven by multiple mechanisms, and the cognitive modeling approach applied here seems to be able to disentangle and quantify them.

How did the best fitting parameter estimates capture the buying and selling prices incentivized using the BDM procedure? Tables 5 (see also Table 11 in Appendix D) shows that the pattern was similar to the one in Study 1: With the exception of the models allowing for buyer–seller differences in choice sensitivity (i.e., DELTAtheta, VUMtheta, NMtheta, and CPTtheta) and the variants of instance-based learning theory allowing for differences in decay and retrieval noise (i.e., IBLd and IBLsigma), all models indicated systematic differences between the WTA and WTP conditions (in contrast to Study 1, however, there was no significant difference in the parameters for IBLsigma). Together, these results indicate that the parameter estimates obtained are robust: Study 2 was conducted in a different lab and the monetary outcomes were shown in a different currency than in Study 1.

Do incentives reduce response bias?

As mentioned above, the BDM procedure has been proposed to minimize strategic misrepresentation (e.g., Kahneman et al., 1991; Plott & Zeiler, 2005). To test whether our cognitive modeling approach indicates support for this specific effect of the BDM procedure in the present study, we compared the sizes of buyer–seller differences with and without BDM (i.e., Study 2 vs. Study 1) on the parameters of different models. For instance, consider CPTalphabeta, which was among the best performing models in Studies 1 and 2 and which provides estimates for buyer–seller differences in both outcome sensitivity and response bias. Consistent with the hypothesis that the BDM procedure specifically affects response bias, a comparison of the reduction in buyer–seller differences (i.e., αWTA vs. αWTP, and βWTA vs. βWTP) in Study 2 compared to Study 1 showed that the effect size of the reduction (though small in an absolute sense) was considerably larger on the response bias parameter β (point-biserial correlation r = 0.082) than on the outcome sensitivity parameter α (r = 0.004). To the extent that the BDM procedure reduces strategic misrepresentation, our cognitive modeling approach thus makes it possible to track and quantify this specific effect.

Summary and discussion

The results of Study 2 show that under conditions of incentive compatibility there is some evidence that buyer–seller differences in outcome sensitivity contribute to the discrepancy between buying and selling prices, as predicted by the loss-aversion account. Still, even under incentive compatibility, there seems to be an influence of strategic misrepresentation, as models assuming buyer–seller differences in both response bias and in outcome sensitivity performed best.

Model recovery: Can the models be discriminated?

The modeling analyses in Studies 1 and 2 showed that more than one of the candidate models can, in principle, accommodate the discrepancies in buying and selling prices. One might therefore suspect that the models are to some extent able to mimic each other. Can our approach actually discriminate between the tested implementations of the candidate accounts of buyer–seller differences? Further, are there differences in flexibility between the models? For instance, modeling the discrepancy between buying and selling prices via the exponent of the utility/value function (see Equation 1) or via a nonlinear probability weighting function (see Equation 12) may be more flexible than modeling it via an additive constant (see Equation 6).

To address these questions, we conducted two model recovery studies in which we first generated several data sets for several models; in each set one of the models was the generating mechanism (using a particular parameter setting). Then we fitted this model as well as the other models to each set. The crucial question was how frequently the data-generating model would emerge as the one with the best fit. Given that cumulative prospect theory emerged as the best fitting computational framework across Studies 1 and 2, we focused on this framework. In our first model recovery study, we included CPTbeta, CPTalpha, and CPTdelta, as these variants performed relatively well (see Tables 2 and 4) and allow for a clear-cut comparison of the distinct mechanisms under investigation. We thus examine to what extent buyer–seller differences in response bias, outcome sensitivity, and probability weighting can actually be told apart.

Our second model recovery analysis compared CPTbeta and CPTalphabeta, which were consistently among the best performing models in Studies 1 and 2 (see Tables 2 and 4). To what extent can a model that assumes buyer–seller differences only on response bias be discriminated from a model that additionally assumes differences on outcome sensitivity—in particular given the empirical finding that the differences in the estimated outcome sensitivity was rather small relative to the differences in response bias (see Tables 3 and 5)? Further, as CPTbeta and CPTalphabeta differ in model complexity, is our model evaluation procedure suitable for distinguishing them?

Method

We used the same procedure to generate the data and subsequently evaluate the models in both model recovery studies.

Data generation.

We simulated decision makers who provided buying and selling prices for each of the 30 lotteries used in Studies 1 and 2. This was done for each of the tested models (i.e., CPTbeta, CPTalpha, and CPTdelta in the first model recovery study and CPTbeta and CPTalphabeta in the second model recovery study), using the respective set of median best fitting parameters obtained in Study 1 (see Table 3). As input for the models, we used the sampled outcomes of each of the 76 participants in Study 1 (note that depending on the sampled outcomes, the same set of parameter values can yield different predictions). Based on the choice probabilities that the respective model predicted for each of the 11 values on the response scale for a given lottery, a pricing response was drawn probabilistically. This was repeated 10 times, yielding for each generating model a total of 76 × 10 = 760 simulated decision makers (each with 30 selling and 30 buying prices) in each set. There were three such sets in the first recovery analysis and two in the second recovery analysis.

Model classification

Each of the models was fitted to each set of simulated pricing decisions using the same estimation procedure as in Studies 1 and 2. We then determined for each simulated decision maker which of the tested models performed best. This was done using both AIC and BIC (see Appendix C). A model was said to be accurately recovered if it showed, overall, the best performance (in terms of the percentage of simulated decision makers for whom the model showed the best fit) for the data set that it had generated.

Results

Figures 5a and b show for both model recovery analyses that the parameter values used to generate each set were recovered with high accuracy by the respective model. This demonstrates that the model parameters can be identified rather well (although some CPT parameters are intercorrelated to some extent; Scheibehenne & Pachur, 2015). For the first model recovery study, Table 6 shows the percentage of cases (out of the 760 simulated decision makers in each set) in which each of the three tested models (CPTbeta, CPTalpha, and CPTdelta) performed best, separately for the sets generated by each model. The results using AIC for model classification are shown in the upper part of Table 6; the results using BIC, in the lower part. The percentages in the diagonal (shown in bold) represent correct model recoveries (i.e., where the mechanism that generated the data also provided the best account when fitted to the data).

a Density plots showing the distribution of the estimated model parameters in the first model recovery analysis in the data sets that were generated by these models. Dotted lines indicate the parameter values that were used to generate the data. b Density plots showing the distribution of the estimated parameters of the two models of the second model recovery analysis in the data sets that were generated by these models. Dotted lines indicate the parameter values that were used to generate the data

As can be seen from the table, the three models can be distinguished rather well, and this holds for both AIC and BIC. For instance, in the set of buying and selling prices generated by CPTalpha, this model achieved the lowest (i.e., best) AIC in 88.8% of cases, whereas CPTbeta and CPTdelta achieved the best performance in only 7.9% and 3.3% of cases, respectively. These results indicate that our modeling approach was well suited to distinguish whether the discrepancy between buying and selling prices was driven by differences in response bias, outcome sensitivity, or probability weighting. Finally, given that CPTalpha was recovered more frequently when CPTbeta was the generating mechanism than vice versa (both for AIC and BIC), modeling discrepancies between buying and selling prices via the exponent of the value function may be more flexible than via an additive constant. Based on these results, it seems unlikely that the good performance of CPTbeta in Studies 1 and 2 was due to a higher model complexity that was not taken into account by AIC (which only considers the number of free parameters).

The results for the second model recovery study, with CPTbeta and CPTalphabeta, are shown in Table 7. Note that using BIC CPTbeta is generally the most frequently recovered model, even in the data set generated by CPTalphabeta. Using AIC, by contrast, the model that generated the data is also the best performing one in the majority of cases. For this reason, our conclusions on relative model performance in Studies 1 and 2 are based on AIC rather than on BIC. The results using AIC demonstrate that CPTbeta and CPTalphabeta, despite their overlap in the underlying mechanism, can be discriminated with our model comparison approach. Nevertheless, model recovery was clearly more accurate for CPTbeta than for CPTalphabeta, and in cases where CPTalphabeta had generated the data, CPTbeta showed the best performance on AIC in 42.6% of the cases. It is therefore possible that in our model comparisons of Studies 1 and 2 the support for CPTalphabeta is underestimated.

General discussion

Past research has offered various accounts of the psychological mechanisms that might underlie buyer–seller differences in pricing decisions. To date, however, there have been few attempts to test, disentangle, and directly compare these accounts. In this article, we developed and applied a formal, quantitative cognitive modeling approach to empirical pricing decisions of monetary lotteries obtained by means of an experiential sampling paradigm. This paradigm also allowed us to examine buyer–seller differences in learning—a possibility suggested by the loss-attention account (Yechiam & Hochman, 2013a). To formalize the candidate accounts, we considered three computational frameworks that have been used to model decisions from experience—reinforcement learning, instance-based learning theory, and cumulative prospect theory—and implemented the different mechanisms derived from the accounts as model variants within these computational frameworks.

The first important insight from a quantitative model comparison was that there are considerable differences in the models’ ability to capture the data. Within each of the three computational frameworks, model variants assuming buyer–seller differences in response bias were consistently among those showing the best balance between model complexity and model fit. Evidence for an additional contribution of buyer–seller differences in outcome sensitivity also emerged, but only when the pricing decisions were incentivized (Study 2).

Second, across the three computational frameworks in which we implemented the candidate accounts of buyer–seller differences, the model variants of cumulative prospect theory performed best, even taking into account their higher model complexity (using AIC). This finding suggests that buyer–seller differences in valuations from experience can be accurately captured without considering cognitive mechanisms that are specific to sequentially learned information—such as learning and memory effects. Note that this conclusion also follows when comparing the models based on BIC, which punishes more heavily for model complexity. Although here variants of a reinforcement learning model perform best (see Appendix D), it is those of the natural-mean model, which assumes, as cumulative prospect theory, no distortion through learning and memory (e.g., recency). Thus, we are confident that the lack of support for memory and learning processes in our data is not due to model flexibility issues; nevertheless, future comparisons of computational models of the endowment effect should also employ alternative methods, such as out-of-sample prediction (e.g., Busemeyer & Wang, 2000) or Bayesian methods (e.g., Lee & Wagenmakers, 2013; Pachur, Suter, & Hertwig, 2017). The lack of evidence for memory and learning mechanisms is consistent with previous comparisons of learning models and cumulative prospect theory (Frey, Mata, & Hertwig, 2015; Glöckner et al., 2016), whereas in Erev et al. (2010) the latter was outperformed by learning models.

Finally, in model recovery studies focusing on the best performing models from Studies 1 and 2, we demonstrate that with our cognitive modeling approach these formal implementations of buyer–seller differences can be discriminated rather well. Overall, our results suggest that to capture the endowment effect in the context of lotteries, models need to allow, at the very minimum, for buyer–seller differences in outcome sensitivity and response bias. In the following, we discuss implications of our modeling approach and findings.

The role of response bias in the endowment effect

In light of the many proposals of how cognitive processing of buyers and sellers might differ, it may seem surprising that we find, in addition to some effect of differences in outcome sensitivity, evidence for a robust and substantial contribution of strategic misrepresentation. In the past, it has been suggested that the use of a “buy low, sell high” strategy—which we formalized in terms of response-bias models—resulted from either insufficient incentivization, misconception, or implicit influences, where “calls for selling behavior might trigger an instinctive reaction (e.g., sell high and buy low)” (Plott & Zeiler, 2005, p. 537). Our finding of evidence for models with buyer–seller differences in response bias even under conditions of incentive compatibility suggests that lack of understanding of the BDM procedure or implicit, automatic factors, which are less under the decision-maker’s control, play at least some role. Although the BDM procedure is a commonly used method for encouraging people to state their actual subjective valuations, there is some evidence that participants do not always fully understand it (e.g., Cason & Plott, 2014; James, 2007; Safra, Segal, & Spivak, 1990). Despite due efforts to clearly explain the procedure to participants, we cannot rule out that in Study 2 some participants did not completely understand it. However, our realization of the BDM procedure is consistent with how buying and selling prices were collected in many previous studies; our data should thus be comparable to and instructive for those studies.

Probability weighting in valuations from experience

In previous analyses of decisions from experience, one key topic has been the pattern of probability weighting suggested by people’s decisions (see Hertwig et al., 2004). The estimated weighting function parameters in the best performing variants of cumulative prospect theory (see Tables 3 and 5) yield an inverse S-shaped curvature (indicated by γ < 1), and thus overweighting of rare events and some reduced sensitivity to differences in the probabilities people experienced. This result is consistent with Kellen et al.’s (2016) systematic analysis of probability weighting in decisions from experience (see also Glöckner et al., 2016).

Does this contradict the conclusions of Hertwig et al. (2004) that in decisions from experience people choose as if they underweight rare events? Note that the conclusions by Hertwig et al. are based on a very different definition of over- and underweighting than those following from our (and Kellen et al.’s, 2016) estimated probability weighting function. The probability weighting function we estimated from people’s buying and selling prices is based on the probabilities that people actually experienced. Hertwig et al.’s diagnosis of underweighting, by contrast, referred to the “latent” probabilities (i.e., the objective probabilities underlying the payoff distributions) of the events; based on the latter definition, people often had not experienced (or had underexperienced) rare events in their (small) samples. Hertwig et al.’s underweighting argument and the present finding of overweighting are thus not in contradiction. In fact, Kellen et al. (2016) replicated the choice pattern on the basis of which Hertwig et al. concluded that rare events are underweighted, while at the same time estimating a probability weighting function (based on the experienced probabilities) from people’s choices that indicates overweighting of the experienced probabilities.

Loss attention and the endowment effect in valuations from experience

The models implementing a loss-attention account, in terms of buyer–seller differences in either choice sensitivity or learning and memory, did not perform well (relative to the other models) in capturing people’s buying and selling prices. This finding might raise the question of whether differences in choice sensitivity or memory and learning processes can lead to systematically higher selling prices than buying prices—the typical endowment effect—in the first place. For instance, lower choice sensitivity should lead to responses becoming more unsystematic and thus, ceteris paribus, to a regression toward the mean of the response scale. So is a loss-attention account of the endowment effect a nonstarter?

Additional analyses, reported in Appendix F, suggest otherwise. It is shown that buyer–seller differences in choice sensitivity can lead to the pattern of higher selling than buying prices as long as the utility function is nonlinear, and the regression effect thus asymmetric around the mean of the response scale; with a linear utility function the regression effect is symmetric and differences in choice sensitivity cannot lead to the endowment effect. Moreover, the pattern of higher choice sensitivity for sellers than for buyers (i.e., the prediction of the loss-attention account) leads to the endowment effect only when decision makers are risk seeking (i.e., have a convex utility/value function); when decision makers are risk averse (i.e., have a concave utility/value function), higher choice sensitivity for buyers than for sellers is required for the endowment effect to occur. The analyses reported in Appendix F also elaborate the conditions under which the account implemented in IBL can give rise to the pattern of higher selling than buying prices, specifically, that it depends on the skewness of the probability distribution of the lottery’s outcomes. These insights qualify the ability of Yechiam and Hochman’s (2013a) loss-attention account to generate the endowment effect in principle. It should be kept in mind, however, that the implementations of this account showed a rather poor model fit for the present data.

Overall, the models implementing a loss-attention account within IBL performed worst. What might be reasons for this result? One possibility is suggested by the analysis mentioned above (reported in detail in Appendix F), showing that IBL can produce the endowment effect only for lotteries with a particular structure. Given the empirical robustness of the endowment effect (e.g., Yechiam, Ashby, & Pachur, in press), it is likely that this limitation severely restricts the explanatory power of IBL to account for differences in buying and selling prices. Second, a more general limitation of IBL might be that in contrast to the other modeling frameworks, IBL makes the assumption that the choice rule itself is deterministic and that probabilistic choice is exclusively due to noise during memory retrieval (see Equations 8 and 10). It is possible that this specific assumption gives IBL a disadvantage. Additional, exploratory analyses showed that IBL equipped with a probabilistic ratio-of-strength choice rule (Luce, 1959) performed considerably better (though still worse than the model variants of the other computational frameworks).

Applications of the cognitive modeling approach

The cognitive modeling approach outlined in this article can be used to complement other, experimental work on the psychological underpinnings of buyer–seller differences in pricing decisions. Various factors that can influence the size of the endowment effect have been identified, such as ownership, attachment, experience with trading, or affect (e.g., Loewenstein & Issacharoff, 1994; Morewedge et al., 2009; Peters et al., 2003; Plott & Zeiler, 2005; Strahilevitz & Loewenstein, 1998; for an overview, see Ericson & Fuster, 2014; Morewedge & Giblin, 2015). The cognitive modeling approach could be applied to investigate to what extent, for instance, manipulation of the duration of ownership (e.g., Strahilevitz & Loewenstein, 1998) impacts outcome sensitivity, response bias, or both.

Further, the cognitive modeling approach can be applied to study individual differences in the endowment effect. For instance, there is some evidence that the effect is larger in older than in younger adults (Gächter, Johnson, & Herrmann, 2010; but see Kovalchik, Camerer, Grether, Plott, & Allman, 2005), but the cognitive mechanisms underlying such potential age differences are not yet understood. Fitting computational models (e.g., variants of cumulative prospect theory) to the selling and buying prices of older and younger adults would make it possible to compare the age groups in terms of the estimated parameter profiles in general, but also in terms of which buyer–seller difference in the estimated parameters best accounts for age differences. The relative performance of candidate models might even differ between age groups.

Finally, by decomposing selling and buying prices into various latent cognitive mechanisms, the cognitive modeling approach could be used to correlate measures of these mechanisms with other individual-difference measures, such as cognitive capacity, physiological measures, and neuroimaging data (Forstmann, Wagenmakers, Eichele, Brown, & Serences, 2011).

Conclusion

For a long time, research on buyer–seller differences in pricing decisions has been primarily concerned with testing the reality of the endowment effect and it sensitivity to various psychological influences, such as ownership, expectations, or affect. By contrast, relatively little attention has been paid to the distinction and comparison of candidate psychological mechanisms mediating buyer–seller differences in the processing of acquired information. We proposed a cognitive modeling approach to formalize and contrast various existing accounts of how the cognitive mechanisms of buyers and sellers might differ. An analysis using our approach suggests that multiple mechanisms contribute to buyer–seller differences in pricing and can be disentangled by our cognitive modeling approach. The results to some extent support the loss-aversion account, but they also reveal a substantial contribution of strategic misrepresentation, even when decisions are incentivized. A minimal assumption required to model buying and selling prices of lotteries it is thus that there are buyer–seller differences in outcome sensitivity and response bias. We found no evidence that sellers show more accurate learning or response selection processes than buyers due to loss attention.

Notes

Note that this issue is orthogonal to the question of which aspects of being endowed—such as ownership, expectations, or attachment to the object—influence the size or the existence of the endowment effect (e.g., Morewedge, Shu, Gilbert, & Wilson, 2009; Strahilevitz & Loewenstein, 1998; for an overview, see Ericson & Fuster, 2014). As elaborated in the General Discussion, the cognitive modeling approach proposed here complements this work, hinting at the possible cognitive mechanisms mediating the impact of these aspects.

Several experimental paradigms exist in the literature on decisions from experience (for an overview, see Hertwig & Erev, 2009). Alternatives to the sampling paradigm are the partial-feedback and the full-feedback paradigms, in which each sample represents a consequential choice (i.e., exploration and exploitation collapse) and participants receive feedback about the chosen option and both options, respectively.

In additional analyses, we tested all models using a version of the choice rule in which the θ parameter in Equation 6 was set to 1 (see Nosofsky & Zaki, 2002) and a free choice-sensitivity parameter was instead included in Equation 5b (as in the softmax choice rule; Sutton & Barto, 1998). In this version, model fit was considerably inferior; however, neither the qualitative pattern of the model performance nor the parameter estimates were affected by how choice sensitivity was formalized.

In contrast to reinforcement models and cumulative prospect theory (see below), in IBL it is not assumed that the experienced outcomes are submitted to a nonlinear transformation. Future research might test IBL assuming a nonlinear transformation of outcomes and, potentially, also of the probabilities (as in cumulative prospect theory; see Equation 12).

Additional analyses fitting the models to minimize the mean squared deviation between the predicted and empirical pricings (which then, however, could not include a choice sensitivity parameter and thus did not allow us to test all predictions of the loss-attention account) led to qualitatively very similar results.