Abstract

Auditory verbal hallucinations (AVH) are reported in approximately 70% of psychotic patients, but they also may occur in approximately 10% of the healthy general population. AVH have been related to altered processing of vocal emotions at both sensory and higher-order processing stages in psychotic patients. However, it remains to be clarified whether individuals with high hallucination proneness (HP) exhibit a similar pattern of alterations. We investigated the impact of HP on vocal emotional perception and specified whether manipulations of acoustic cues, such as sound intensity and duration, related to salience changes, affect the time course of voice processing reflected in event-related potentials (ERP) of the electroencephalogram. Participants varying in HP performed a task involving the categorization of emotional nonverbal vocalizations (neutral, anger, and amusement) differing in duration and intensity. ERP results demonstrated interactive effects of HP, valence, and acoustic cues on both early (N1, P2) and late (Late Positive Potential [LPP]) processing stages. Higher HP was associated with decreased N1 and increased P2 amplitudes in response to louder and longer neutral (vs. positive) vocalizations, as well as with a larger LPP to louder and longer negative (vs. neutral) vocalizations. These findings suggest that HP is associated with changes in the processing of vocal emotions that might be related to altered salience of acoustic representations of emotions. Consistent with prior studies with psychotic patients, these findings show that altered perception of vocal emotions may additionally contribute to the experience of hallucinations in nonclinical samples.

Introduction

Auditory verbal hallucinations (AVH), the experience of hearing a voice in the absence of corresponding acoustic stimulation, represent the most common type of hallucinations in schizophrenia, with an estimated prevalence of 70% (Johns et al., 2004). However, they also occur in approximately 10% of persons without a clinical diagnosis (Maijer, Begemann, Palmen, Leucht, & Sommer, 2018). Studying the experience of hearing voices in nonclinical individuals may provide critical insights into the neural and cognitive mechanisms underpinning AVH, with the advantage of avoiding the potential confounds associated with medication and hospitalization in psychotic patients (Badcock & Hugdahl, 2012).

Similarities in the cognitive (Brébion et al., 2016; Larøi, van der Linden, & Marczewski, 2004a) and neural (Diederen et al., 2012; Linden et al., 2010) mechanisms underpinning AVH in psychotic and nonclinical participants have been identified, supporting the notion of a psychosis continuum, i.e., that psychotic-like experiences are distributed in the general population along a continuum of severity (Baumeister, Sedgwick, Howes, & Peters, 2017; van Os, Hanssen, Bijl, & Ravelli, 2000; van Os, Linscott, Myin-Germeys, Delespaul, & Krabbendam, 2009). For instance, both psychotic and nonclinical persons reporting AVH are more likely than healthy controls to misattribute inner speech to an external source (Brébion et al., 2016; Larøi, van der Linden, & Marczewski, 2004a). Phenomenological similarities were also noted, namely the perceived location (inside the head), loudness (less intense than one’s own voice), and source (external) of AVH (Daalman et al., 2011). However, compared with psychotic patients with AVH, nonclinical voice hearers tend to perceive their hallucinatory experiences as more controllable (Choong, Hunter, & Woodruff, 2007; Daalman et al., 2011; de Leede-Smith & Barkus, 2013), as well as less frequent (Daalman et al., 2011; Larøi, 2012; Larøi & van der Linden, 2005). AVH also may differ in verbal content, which tends to be more negative in psychotic patients (e.g., commands or criticisms about what the patient is doing; Larøi et al., 2012; Nayani & David, 1996) than in nonclinical voice hearers (e.g., hearing a voice calling a person’s name when no one is there; de Leede-Smith & Barkus, 2013).
From a dimensional perspective of psychotic-like experiences (van Os, Hanssen, Bijl, & Vollebergh, 2001; van Os & Linscott, 2012), the experience of AVH in the general population without need for clinical care has been typically examined by testing: 1) individuals with AVH proneness who experience brief and infrequent AVH that do not affect their daily functioning; and 2) nonclinical voice-hearers who experience frequent AVH of longer duration, often associated with other psychotic-like and mood symptoms (Johns et al., 2014). While the first subgroup is typically assessed using general hallucination-proneness measures (e.g., the Launay-Slade Hallucination Scale [LSHS]; Bradbury, Stirling, Cavill, & Parker, 2009; Morrison, Wells, & Nothard, 2002), screening interviews targeting the specific experience of hearing voices often are used to identify the second subgroup (Sommer et al., 2010).

Voices (including hallucinated voices) carry not only verbal information but also critical nonverbal information about the speaker, such as their identity and emotional state (Belin, Bestelmeyer, Latinus, & Watson, 2011). Neuroimaging evidence suggests that these three types of vocal information are processed in partially dissociated cortical regions (Belin et al., 2011; Belin, Fecteau, & Bédard, 2004). In schizophrenia patients reporting positive symptoms, behavioral and brain changes were found in the processing of speech information (Kuperberg, West, Lakshmanan, & Goff, 2008), voice identity (Alba-Ferrara, Weis, Damjanovic, Rowett, & Hausmann, 2012; Pinheiro, Rezaii, Rauber, & Niznikiewicz, 2016b), and vocal emotions (Alba-Ferrara, Fernyhough, Weis, Mitchell, & Hausmann, 2012a; Giannitelli et al., 2015; Pinheiro et al., 2013, 2014; Rossell & Boundy, 2005; Weisgerber et al., 2015). Specifically, schizophrenia patients with AVH were found to be less accurate in the recognition of negative relative to positive vocalizations (Rossell & Boundy, 2005) and showed reduced activation of the amygdala and hippocampus when listening to cries compared with laughs (Kang et al., 2009).

Vocal emotional perception in AVH along the psychosis continuum

Alterations in the processing of vocal emotions are recognized as an important feature of schizophrenia (Bozikas et al., 2006; Edwards, Pattison, Jackson, & Wales, 2001; Hooker & Park, 2002; Leitman et al., 2007; Pinheiro et al., 2013, 2014), being observed before illness onset (Addington et al., 2012; Amminger, Schäfer, Klier, Schlögelhofer, Mossaheb, Thompson, & Nelson, 2012; Amminger, Schäfer, Papageorgiou, Klier, Schlögelhofer, Mossaheb, & McGorry, 2012) and aggravated in patients with AVH (Alba-Ferrara, Fernyhough, et al., 2012a; Rossell & Boundy, 2005; Shea et al., 2007). Compared with nonhallucinating patients, psychotic patients reporting AVH were found to be less accurate at recognizing emotional prosodic cues (Alba-Ferrara, Fernyhough, et al., 2012a; Shea et al., 2007) and less accurate in decoding emotions in nonverbal vocalizations (Rossell & Boundy, 2005). Nonetheless, no significant differences were observed between patients with versus without AVH in the recognition of emotions from speech prosody (Rossell & Boundy, 2005). It is possible that semantic processing deficits (Rossell & Boundy, 2005) have masked symptom-specific differences in vocal emotional processing. Together, these findings suggest that schizophrenia patients who are less able to recognize emotions from both speech prosody and nonverbal vocalizations are more likely to experience AVH. Deficits in vocal emotional processing could contribute to the inner speech misattribution that is thought to subserve AVH (Alba-Ferrara, Fernyhough, et al., 2012a). Note, however, that only a few studies examined whether and how the occurrence of positive-like symptoms (i.e., hallucinations and/or delusions) in nonclinical individuals affects the processing of vocal emotions (Addington et al., 2012; Amminger et al., 2012a, 2012b; Pinheiro, Farinha-Fernandes, Roberto, & Kotz, 2019). The results of these behavioral studies have been mixed, with some reporting altered (Addington et al., 2012; Amminger et al., 2012a, 2012b) and others preserved (Pinheiro et al., 2019) vocal emotional recognition.

Vocal emotional perception: insights from event-related potentials

Studies probing event-related potentials (ERP) of the electroencephalogram (EEG) support the notion that the processing of vocal emotions involves three distinct, but interactive, stages (Paulmann & Kotz, 2008a, 2008b; Schirmer & Kotz, 2006). After the sensory processing of the voice signal (reflected in the N1), the detection of its emotional salience takes place (reflected in the P2). Higher-order processes, such as the cognitive evaluation of the emotional significance of the voice, are typically reflected in later components, such as the Late Positive Potential ([LPP]; Thierry & Roberts, 2007). An early differentiation between neutral and emotional vocal cues is reflected in N1 and P2 amplitude modulations (Liu et al., 2012; Paulmann, Bleichner, & Kotz, 2013; Sauter & Eimer, 2009). Decreased N1 (Liu et al., 2012) and enhanced P2 (Sauter & Eimer, 2009) amplitudes for emotional relative to neutral vocal sounds have been reported, suggesting that the emotional content of a stimulus facilitates acoustic sensory processing and salience detection, respectively. Furthermore, emotional vocal cues tend to elicit larger LPP amplitudes than neutral voices (Pell et al., 2015; Pinheiro et al., 2016a), reflecting enhanced sustained attention towards the processing of emotionally relevant information (Hajcak, MacNamara, Foti, Ferri, & Keil, 2013; Schupp et al., 2000).

Vocal emotions are expressed through the combination of different types of acoustic features, such as fundamental frequency (F0) or pitch, intensity, and duration (Schirmer & Kotz, 2006). Of note, acoustic changes may contribute to the enhanced salience of emotional voices. Using an oddball task, Schirmer, Simpson, and Escoffier (2007) demonstrated an amplitude increase of the mismatch negativity ([MMN] – an index of automatic deviance detection – Näätänen, Pakarinen, Rinne, & Takegata, 2004) and P3a (an index of attention orienting – Duncan et al., 2009) in response to vocal emotions characterized by high relative to low intensity, indicating that loud emotional voices were acoustically more salient. Moreover, irrespective of their intensity, vocal emotional sounds were more easily detected and captured more attention than nonvocal sounds, which confirms the primacy of social sounds in the auditory system (Schirmer et al., 2007).

Stimulus duration also was found to modulate ERP signatures of vocal emotional processing. For instance, Chang, Zhang, Zhang, and Sun (2018) observed decreased N1 amplitude in response to vocal emotions of short and long (vs. medium) duration, as well as increased P2 amplitude in response to vocal emotions of short (vs. medium and long) duration. Furthermore, the N1 was decreased in response to vocalizations expressing anger, sadness, and surprise relative to happiness in the short duration condition, whereas the P2 was increased in response to happy and angry relative to surprised voices, especially when their duration was shorter (Chang et al., 2018). These findings indicate that sound duration also may affect the sensory processing and the automatically perceived salience of vocal emotions, which may be facilitated for vocal cues of short duration (Chang et al., 2018).

Altered bottom-up and top-down processing of vocal emotions has been consistently reported in schizophrenia patients with AVH (Alba-Ferrara, Fernyhough, et al., 2012a; Leitman et al., 2005; Pinheiro et al., 2013, 2014; Rossell & Boundy, 2005; Shea et al., 2007). Consistent with the hypothesized psychosis continuum, it is likely that high hallucination proneness (HP) is associated with changes in the processing of vocal emotions at both early sensory (N1 and P2) and higher-order cognitive (LPP) stages. To the best of our knowledge, no study has examined how HP affects the time course of vocal emotional processing reflected in distinct ERP indices. Nevertheless, some behavioral studies probing vocal emotional processing in individuals at genetic (Tucker, Farhall, Thomas, Groot, & Rossell, 2013) and clinical (Addington et al., 2012; Amminger et al., 2012b; Amminger et al., 2012a) high-risk of converting to psychosis and reporting positive-like symptoms (hallucinations and/or delusions) revealed similar alterations to those observed in schizophrenia patients with AVH. Specifically, Tucker et al. (2013) demonstrated that first-degree relatives of schizophrenia patients with AVH made significantly more errors in the discrimination of intensity and duration of pure tones compared to healthy controls. Furthermore, the discrimination of pure tones in these participants was associated with vocal emotional recognition accuracy: the number of errors in intensity and pitch discrimination was negatively correlated with recognition accuracy of vocal emotions (Tucker et al., 2013). Auditory processing deficits in relatives of schizophrenia patients with AVH, which were associated with reduced processing speed of vocal emotional cues, also were found to predict AVH proneness: the more prominent the auditory processing deficits were, the more likely nonclinical AVH were to occur (Tucker et al., 2013). 
Reduced accuracy in vocal emotional recognition also was reported in nonclinical participants experiencing psychotic-like symptoms in three additional behavioral studies (Addington et al., 2012; Amminger et al., 2012a, 2012b). Using words with emotional semantic content, van’t Wout and colleagues (2004) showed that the frequency of hallucinations in nonclinical individuals with high HP was positively associated with the time needed to process neutral (target) words when preceded by positive or negative emotional (prime) words. Hence, it is crucial to clarify whether AVH proneness is related to changes in the perception of vocal emotions and whether these putative changes are similar to those observed in schizophrenia patients. As ERPs are examined before a behavioral response is made, they afford excellent temporal resolution of the sensory and cognitive processes under study.

Current Study and Hypotheses

We probed whether the processing of emotional vocal cues is altered as a function of increased HP and whether these potential changes are associated with specific acoustic cues of vocal emotions, namely intensity and duration. Manipulations of these cues may signal changes in the acoustic saliency of vocal emotions (Schirmer et al., 2007). Nonverbal vocalizations were selected to avoid potential biases related to the concurrent processing of semantic information and because they represent more primitive expressions of emotions compared with speech prosody (Pell et al., 2015). ERP data were expected to provide insights into three processing stages of vocal emotional processing (N1, P2, and LPP).

Consistent with continuum models of psychosis (Baumeister et al., 2017; van Os et al., 2000; van Os et al., 2009), increased HP was expected to be associated with alterations in both early (N1, P2) and late (LPP) stages of vocal emotional processing. The hypothesized association between HP and altered perception of vocal emotions was further expected to be modulated by stimulus valence and acoustic parameters that were shown to increase emotional arousal (increased stimulus intensity – Schirmer et al., 2007) and facilitate emotion decoding (increased stimulus duration – Castiajo & Pinheiro, 2019).

Specifically, we hypothesized that increased HP would be associated with larger P2 amplitudes to positive vocalizations. This hypothesis was grounded in previous evidence showing selective changes in salience detection of positive vocal cues in psychotic patients (Pinheiro et al., 2013, 2014). We also hypothesized that high HP would be associated with increased LPP amplitude to negative vocalizations, irrespective of acoustic-specific changes. This hypothesis considered previous studies with schizophrenia patients revealing that AVH are associated with enhanced sustained attention to negative voices (Alba-Ferrara, Fernyhough, et al., 2012a).

Finally, considering that altered vocal emotional processing in psychotic patients with AVH has been specifically related to changes in duration discrimination (Fisher et al., 2011; Fisher, Labelle, & Knott, 2008), we expected that an increased HP would be associated with more pronounced N1 and P2 alterations (i.e., increased and decreased amplitude, respectively) when stimulus duration is manipulated.

Method

Participants

In the first stage of the study, a large sample of college students from different Portuguese universities (N = 354) was enrolled in a study designed to adapt the Launay-Slade Hallucination Scale-Revised (LSHS – Larøi & van der Linden, 2005) for the Portuguese population (Castiajo & Pinheiro, 2017). The LSHS Portuguese version includes 16 items that tap into distinct forms of hallucinations (auditory, visual, olfactory, tactile, hypnagogic, and hypnopompic). The overall score ranges from 0 to 64; higher scores indicate higher HP. This scale has been widely used to probe nonclinical hallucinatory experiences (Larøi, Marczewski, & van der Linden, 2004b; Larøi & van der Linden, 2005; Morrison et al., 2002; Morrison, Wells, & Nothard, 2000; Waters, Badcock, & Maybery, 2003). The Portuguese version of the LSHS has shown adequate psychometric properties (Castiajo & Pinheiro, 2017).

In the second stage, and after being screened via phone to ensure eligibility, 45 participants who initially took part in the LSHS validation study were recruited for an ERP experiment on the basis of their total LSHS scores. Additional inclusion criteria were: 1) right-handedness (Oldfield, 1971); 2) European Portuguese as first language; 3) no hearing or vision impairment; 4) no history of neurological illness; 5) no history of drug or alcohol abuse in the past year (APA, 2000); and 6) no current use of medication for medical disorders that would impact EEG morphology. All participants were screened for psychopathological symptoms and for schizotypal traits with the Brief Symptom Inventory (BSI – Derogatis & Spencer, 1982; Portuguese version – Canavarro, 1999) and the Schizotypal Personality Questionnaire (SPQ – Raine, 1991; Portuguese version – Santos, 2011), respectively.

Of the 45 eligible participants, 10 subjects declined to participate due to scheduling reasons and 2 subjects had to be excluded due to EEG artifacts. The final sample comprised 33 participants varying in their LSHS total scores (M = 22.15, SD = 11.70, range 4-47 points; Table 1) who met the inclusion criteria and completed the clinical and ERP assessments (mean age = 25.27, SD = 5.87 years, age range 18-42 years; mean education level = 15.21, SD = 2.39 years, education range 12-21 years; 25 females). Table 2 shows the prevalence, frequency of occurrence, perceived degree of control, and emotional content of the hallucinatory experiences for each type of hallucination measured by the LSHS.

In this sample, the LSHS total score was positively correlated with the SPQ total score (r = 0.665, p < 0.001), and with the BSI positive symptom distress index (r = 0.453, p = 0.008), ensuring good convergent validity with other self-reported clinical measures. Participants who reported AVH were further screened with the Psychotic Symptom Rating Scale (PSYRATS – Haddock, McCarron, Tarrier, & Faragher, 1999; Portuguese version – Telles-Correia et al., 2017) to better understand the phenomenological characteristics of their experiences. Voice-hearing experiences were predominantly described as not unpleasant and not distressful. Participants provided written informed consent and received vouchers or course credit for their participation. The experiment was approved by a local Ethics Committee (University of Minho, Braga, Portugal).

Stimuli

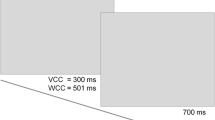

Thirty nonverbal vocalizations (15 from female and 15 from male speakers) expressing anger (growls; n = 10), amusement (laughter; n = 10), and neutral content (n = 10) were selected from the Montreal Affective Voices battery (MAV, Belin, Fillion-Bilodeau, & Gosselin, 2008). The selected MAV stimuli were acoustically manipulated. First, their duration was equalized to 700 milliseconds (ms) (Castiajo & Pinheiro, 2019). Then, a shorter (500 ms) version of each type of vocalization was created. To test whether the manipulated vocal samples still conveyed the intended emotions, they were first judged by a sample of participants who did not take part in the EEG experiment (N = 52; mean age = 23.42, SD = 7.80 years, age range 18-49 years; 27 females – see Castiajo & Pinheiro, 2019). The overall mean recognition accuracy (proportion of correct responses) for the three types of vocalizations was 0.80 in the 500-ms condition (anger – 0.53; amusement – 0.97; neutral – 0.91), and 0.84 in the 700-ms condition (anger – 0.62; amusement – 0.98; neutral – 0.92). The MAV vocal samples were also manipulated in terms of intensity (55 vs. 75 dB). Therefore, ten exemplars of each type of vocalization in all four acoustic conditions (short-soft, short-loud, long-soft, long-loud) were used as stimuli in the current experiment (see Supplementary Material for examples). The manipulation of stimulus duration and intensity was performed with Praat software (Boersma & Weenink, 2005, www.praat.org).

Procedure

Participants were seated comfortably at a distance of 100 cm from a computer screen in a sound- and light-attenuating chamber, with a keyboard in front of them. The experimental session included three blocks, each comprising ten random presentations of each type of vocalization in four acoustic conditions (3 types of vocalizations × 10 speakers × 4 acoustic conditions = 120 total trials per block). Stimuli were presented binaurally through Sennheiser CX 300-II headphones. Presentation software (Version 18.3, Neurobehavioral Systems, Inc., Berkeley, CA, www.neurobs.com) was used to control stimulus presentation and timing, as well as to record each participant’s responses.
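The trial arithmetic of this design can be sketched in a few lines of Python. This is only an illustration of the factorial structure described above: the speaker identifiers, the per-block shuffling scheme, and the random seeds are assumptions and are not taken from the Presentation script used in the study.

```python
from itertools import product
import random

# Factor levels as described in the Stimuli and Procedure sections.
VALENCES = ["neutral", "anger", "amusement"]
EXEMPLARS = list(range(1, 11))   # ten exemplars per vocalization type (IDs are hypothetical)
DURATIONS_MS = [500, 700]        # short vs. long
INTENSITIES_DB = [55, 75]        # soft vs. loud


def build_block(seed):
    """Return one block: every valence x exemplar x duration x intensity cell once, shuffled."""
    trials = [
        {"valence": v, "exemplar": e, "duration_ms": d, "intensity_db": i}
        for v, e, d, i in product(VALENCES, EXEMPLARS, DURATIONS_MS, INTENSITIES_DB)
    ]
    random.Random(seed).shuffle(trials)  # assumed randomization scheme
    return trials


blocks = [build_block(seed) for seed in range(3)]  # three blocks per session
trials_per_block = len(blocks[0])
total_trials = sum(len(block) for block in blocks)
trials_per_cell = total_trials // (len(VALENCES) * len(DURATIONS_MS) * len(INTENSITIES_DB))
```

Under these assumptions each block holds 120 trials, the session holds 360 trials, and each of the 12 valence-by-acoustic-condition cells contributes 30 trials to the ERP averages before artifact rejection.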

Figure 1 illustrates the design of an experimental trial. Participants were asked to categorize each vocalization according to its emotional quality by pressing one of three keys (negative, neutral, positive). During each block, a break was provided after 60 trials, and no feedback was provided. The experimental session lasted approximately 40 minutes.

EEG data acquisition and analysis

The EEG was recorded with a 64-channel BioSemi Active Two System (http://www.biosemi.com/products.htm) at a sampling rate of 512 Hz. Reference electrodes were placed on the left and right mastoids. In addition, eye blinks and movements were monitored through two electrodes placed on both left and right temples (horizontal electrooculogram [HEOG]) and one below the left eye (vertical electrooculogram [VEOG]). The offset of all electrodes was kept below 30 mV.

EEG data were analyzed using Brain Vision Analyzer 2.0.4 software (www.brainproducts.com). The signal was filtered offline with a 0.1 to 30 Hz 2nd order Butterworth bandpass filter, and then referenced to the average of the left and right mastoids. Individual ERP epochs were created for each vocalization type in each condition, with a −150 ms pre-stimulus baseline and 700 ms post-stimulus duration. After applying a baseline correction from −150 to 0 ms, eye blinks were corrected using the method of Gratton et al. (Gratton, Coles, & Donchin, 1983). Single trial epochs containing excessive motor artifacts (±100 μV criterion) were not included in the ERP averages. For each participant, ERP averages included at least 70% of the trials per condition. The number of discarded epochs did not differ between conditions (p > 0.05).
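The epoch-level steps in this paragraph (baseline correction over the −150 to 0 ms interval, rejection with a ±100 μV criterion, averaging of the remaining epochs) can be illustrated with a minimal single-channel sketch. It is not the Brain Vision Analyzer pipeline: filtering, re-referencing, and the Gratton et al. blink correction are omitted, and the function names and toy data are hypothetical.

```python
SAMPLING_RATE_HZ = 512
EPOCH_START_MS = -150
EPOCH_END_MS = 700
REJECT_UV = 100.0  # absolute amplitude criterion in microvolts


def ms_to_index(t_ms):
    """Convert a latency relative to stimulus onset into a sample index within the epoch."""
    return round((t_ms - EPOCH_START_MS) * SAMPLING_RATE_HZ / 1000)


def baseline_correct(epoch):
    """Subtract the mean of the pre-stimulus samples (-150 to 0 ms) from every sample."""
    n_baseline = ms_to_index(0)
    baseline = sum(epoch[:n_baseline]) / n_baseline
    return [v - baseline for v in epoch]


def is_clean(epoch):
    """True if no sample exceeds the +/-100 microvolt criterion."""
    return all(abs(v) <= REJECT_UV for v in epoch)


def average_clean_epochs(epochs):
    """Baseline-correct, drop artifact epochs, and return (ERP average, proportion kept)."""
    corrected = [baseline_correct(e) for e in epochs]
    kept = [e for e in corrected if is_clean(e)]
    if not kept:
        raise ValueError("all epochs were rejected")
    erp = [sum(samples) / len(kept) for samples in zip(*kept)]
    return erp, len(kept) / len(epochs)


# Toy data: one epoch with a constant 5 microvolt offset, one with a large artifact.
n_samples = ms_to_index(EPOCH_END_MS) + 1
offset_epoch = [5.0] * n_samples
artifact_epoch = [0.0] * n_samples
artifact_epoch[200] = 250.0
erp, proportion_kept = average_clean_epochs([offset_epoch, artifact_epoch])
```

In the toy example the constant offset is removed by the baseline correction and the artifact epoch is discarded, so half of the epochs are kept. A per-condition check such as `proportion_kept >= 0.70` would correspond to the inclusion criterion reported above.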

After a careful visual inspection of grand average waveforms, three ERP components were selected for statistical analyses: N1, P2, and LPP. The amplitude of each component was measured as the mean voltage in the following latency windows, following prior studies (Pell et al., 2015; Pinheiro et al., 2014, 2016a, 2017c): 100-200 ms (N1), 200-300 ms (P2), and 500-700 ms (LPP). Mean amplitudes for each component of interest were measured at four regions-of-interest (ROIs): ROI1 (left fronto-central): F1, F3, FC1, FC3; ROI2 (right fronto-central): F2, F4, FC2, FC4; ROI3 (left centro-parietal): C1, C3, CP1, CP3; ROI4 (right centro-parietal): C2, C4, CP2, CP4.
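As an illustration of the mean-amplitude measure, the sketch below averages the voltage within a latency window for each electrode of an ROI and then averages across electrodes. The latency windows and electrode groupings are those listed above; the dictionary keys, the function names, and the toy ERP are assumptions, and treating both window endpoints as inclusive is a choice made for the sketch.

```python
SAMPLING_RATE_HZ = 512
EPOCH_START_MS = -150
EPOCH_END_MS = 700

WINDOWS_MS = {"N1": (100, 200), "P2": (200, 300), "LPP": (500, 700)}
ROIS = {
    "left_fronto_central": ["F1", "F3", "FC1", "FC3"],
    "right_fronto_central": ["F2", "F4", "FC2", "FC4"],
    "left_centro_parietal": ["C1", "C3", "CP1", "CP3"],
    "right_centro_parietal": ["C2", "C4", "CP2", "CP4"],
}


def window_slice(start_ms, end_ms):
    """Sample slice covering start_ms..end_ms (both endpoints included)."""
    first = round((start_ms - EPOCH_START_MS) * SAMPLING_RATE_HZ / 1000)
    last = round((end_ms - EPOCH_START_MS) * SAMPLING_RATE_HZ / 1000)
    return slice(first, last + 1)


def mean_amplitude(erp_by_channel, component, roi):
    """Mean voltage in the component window, averaged over the electrodes of the ROI."""
    window = window_slice(*WINDOWS_MS[component])
    per_channel = []
    for channel in ROIS[roi]:
        samples = erp_by_channel[channel][window]
        per_channel.append(sum(samples) / len(samples))
    return sum(per_channel) / len(per_channel)


# Toy ERP: -2 microvolts throughout the N1 window, 0 elsewhere, on every ROI3 electrode.
n_samples = round((EPOCH_END_MS - EPOCH_START_MS) * SAMPLING_RATE_HZ / 1000) + 1
n1_window = window_slice(*WINDOWS_MS["N1"])
toy_erp = {}
for channel in ROIS["left_centro_parietal"]:
    waveform = [0.0] * n_samples
    for i in range(n1_window.start, n1_window.stop):
        waveform[i] = -2.0
    toy_erp[channel] = waveform

n1_amp = mean_amplitude(toy_erp, "N1", "left_centro_parietal")
lpp_amp = mean_amplitude(toy_erp, "LPP", "left_centro_parietal")
```

Because the N1 and P2 windows share the 200 ms boundary, an inclusive implementation like this one counts that sample in both windows; a half-open convention would avoid the overlap.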

Statistical analyses

ERP and accuracy data were separately analyzed with linear mixed-effects models using the lme4 (Bates, Maechler, Bolker, & Walker, 2014) and lmerTest (Kuznetsova, Brockhoff, & Christensen, 2017) packages in the R environment (R 3.4.3, GUI 1.70). HP was calculated as the sum of the 16 LSHS items (i.e., LSHS total score). Rather than splitting participants into high and low HP subgroups, a continuous variable (LSHS total scores) was used in the statistical model to avoid issues associated with dichotomization, including loss of statistical power and biased estimates (Altman & Royston, 2006; Selvin, 1987). Mixed-effects models were chosen because they have benefits over traditional statistical methods (i.e., analysis of variance). Specifically, they consider both fixed and random effects (participants’ intra-individual variability) and reduce the potential occurrence of spurious effects (Jaeger, 2008). Owing to their robustness and efficient estimates, mixed-effects models have been proposed as alternatives to traditional methods for statistically analyzing ERP data using a repeated-measures design (Baayen, Davidson, & Bates, 2008; Bagiella, Sloan, & Heitjan, 2000). Because the best procedure to correct for multiple testing in mixed-effects modeling remains to be determined (Joo, Hormozdiari, Han, & Eskin, 2016), and since the current study is driven by a priori hypotheses (Streiner & Norman, 2011), no adjustment for multiple comparisons was used. Notwithstanding, we provide confidence intervals, which have been proposed as an alternative to traditional methods to correct for multiple comparisons (Nakagawa, 2004; Thompson, 2002). Only statistically significant findings (p < 0.05) are reported.

ERP data

The hypothesis that HP would affect the processing of vocal emotions at early and later stages was tested for each ERP component with three distinct mixed-effects models, including amplitude as outcome, participants as random effects, and HP (LSHS total score), intensity (loud [75 dB], soft [55 dB]), valence (neutral, positive, negative), duration (long [700 ms], short [500 ms]), and ROI (left fronto-central, right fronto-central, left centro-parietal, right centro-parietal) as fixed effects.
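One way to write down a model of this kind is given below. It is a sketch rather than the fitted specification: a by-participant random intercept is assumed, and the exact set of interaction terms is not stated in the text (the Results report interactions up to HP × valence × intensity × duration). The symbols are introduced here for illustration only.

```latex
% A_{ij}: mean amplitude of one component for participant i in design cell j
%         (one combination of intensity, valence, duration, and ROI)
% x_{ij}: coded fixed effects (HP_i, intensity, valence, duration, ROI, and their interactions)
% u_i:    assumed by-participant random intercept
\begin{aligned}
A_{ij} &= \beta_0 + \mathbf{x}_{ij}^{\top}\boldsymbol{\beta} + u_i + \varepsilon_{ij},\\
u_i &\sim \mathcal{N}(0, \sigma_u^{2}), \qquad
\varepsilon_{ij} \sim \mathcal{N}(0, \sigma^{2}).
\end{aligned}
```

If each participant contributes one mean amplitude per cell, the design yields 33 participants × 2 intensities × 3 valences × 2 durations × 4 ROIs = 1584 observations per component.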

Behavioral data

To specify the effects of HP on the recognition accuracy of vocal emotions (proportion of correct responses), a linear mixed-effects model was tested. Specifically, recognition accuracy was included as outcome, participants as random effects and HP (LSHS total score), intensity (loud [75 dB], soft [55 dB]), valence (neutral, positive, negative), and duration (long [700 ms], short [500 ms]) as fixed effects.

Results

ERP data

Figure 2 illustrates grand average waveforms as a function of emotion and manipulations of intensity and duration. Mean amplitudes for each emotion type and acoustic condition are presented in Figure 3. Scatterplots in Figure 4 show the mean N1, P2, and LPP amplitudes for each participant as a function of HP.

Figure 2. Grand average waveforms contrasting neutral, negative, and positive vocalizations under intensity and duration manipulations at C3 and C4 electrodes. Topographic maps show the spatial distribution of the N1, P2, and LPP effects in the total sample (N = 33). Neu = neutral; Neg = negative; Pos = positive; LPP = Late Positive Potential

Figure 3. Amplitude differences between neutral and emotional vocalizations in each acoustic condition based on duration and intensity manipulations. Bars represent mean amplitudes over left centro-parietal electrodes (ROI3) in the case of N1 and LPP, and over right centro-parietal electrodes (ROI4) in the case of P2. Standard error (SE) of the means is represented in error bars. Amp = amplitude

Figure 4. Mean amplitude at centro-parietal electrodes as a function of hallucination proneness across emotion types (neutral, negative, positive) and acoustic manipulations (shorter/softer: 500 ms – 55 dB; shorter/louder: 500 ms – 75 dB; longer/softer: 700 ms – 55 dB; longer/louder: 700 ms – 75 dB). HP = hallucination proneness; Amp = amplitude

N1

N1 amplitude was modulated by stimulus intensity and valence, but not by stimulus duration (p > 0.05). The N1 was decreased in response to loud compared with soft vocalizations (β = 1.915, SE = 0.398, t(1551) = 4.801, p < 0.001, 95% confidence interval [CI]: [1.132, 2.697]), as well as in response to both negative (β = 0.847, SE = 0.398, t(1551) = 2.126, p = 0.033, 95% CI: [0.065, 1.630]) and positive (β = 1.403, SE = 0.398, t(1551) = 3.519, p < 0.001, 95% CI: [0.621, 2.185]) compared with neutral vocalizations. Intensity interacted with valence: loud vocalizations elicited a less negative N1 response when they had a neutral compared with positive quality (β = −2.311, SE = 0.564, t(1551) = −4.098, p < 0.001, 95% CI: [−3.418, −1.205]).
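The relation between the reported estimates, standard errors, t values, and confidence intervals can be checked with a simple Wald-type calculation. The sketch below uses the loud versus soft N1 effect reported above and assumes a normal approximation (critical value 1.96); the model output itself was presumably computed from unrounded values, so small discrepancies in the third decimal are expected. The function name is hypothetical.

```python
def wald_summary(beta, se, critical_value=1.96):
    """Approximate t statistic and 95% confidence interval from an estimate and its SE."""
    return {
        "t": beta / se,
        "ci_low": beta - critical_value * se,
        "ci_high": beta + critical_value * se,
    }


# Loud vs. soft vocalizations, N1 amplitude (values as reported in the text).
loud_vs_soft = wald_summary(beta=1.915, se=0.398)
```

With these inputs the approximation gives a t value near 4.81 and an interval of roughly [1.13, 2.70], in line with the reported t(1551) = 4.801 and 95% CI [1.132, 2.697].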

HP interacted with valence, intensity, and duration: an increase in HP was associated with a decreased N1 in response to neutral compared to positive vocalizations in the high intensity (loud) and long duration conditions (β = −0.081, SE = 0.031, t(1551) = −2.556, p = 0.011, 95% CI: [−0.143, −0.018]).

P2

P2 amplitude was modulated by valence and intensity: the P2 was increased (i.e., more positive) in response to positive compared to neutral vocalizations (β = 1.185, SE = 0.461, t(1551) = 2.569, p = 0.010, 95% CI: [0.280, 2.090]) and to loud compared to soft vocalizations (β = 2.132, SE = 0.461, t(1551) = 4.621, p < 0.001, 95% CI: [1.227, 3.037]). Valence interacted with intensity and duration: an increase in P2 amplitude was observed in response to positive compared with neutral vocalizations in the high intensity and long duration conditions (β = 2.202, SE = 0.922, t(1551) = 2.387, p = 0.017, 95% CI: [0.392, 4.012]).

HP interacted with valence, intensity, and duration: an increase in HP was associated with a less positive P2 for positive compared with neutral vocalizations when they were acoustically more salient, i.e., in the high intensity and long duration conditions (β = −0.117, SE = 0.036, t(1551) = −3.193, p = 0.001, 95% CI: [−0.189, −0.045]).

LPP

LPP amplitude was modulated by valence and intensity: the LPP was increased (i.e., more positive) in response to negative compared to neutral vocalizations (β = 2.778, SE = 0.645, t(1551) = 3.318, p < 0.001, 95% CI: [1.513, 4.044]), and in response to loud compared to soft vocalizations (β = 2.140, SE = 0.645, t(1551) = 3.318, p < 0.001, 95% CI: [0.875, 3.405]). Intensity interacted with valence and duration: the LPP was increased in response to loud negative vocalizations with a longer duration compared to neutral vocalizations (β = 3.980, SE = 1.290, t(1551) = 3.085, p = 0.002, 95% CI: [1.450, 6.511]).

HP modulated the interaction between duration and valence: higher HP was associated with an increased LPP for negative compared with neutral vocalizations when they had a longer duration (β = 0.097, SE = 0.036, t(1551) = 2.675, p = 0.008, 95% CI: [0.025, 0.168]). HP also modulated the interaction between intensity and valence: higher HP was associated with an increased LPP for loud negative compared to neutral vocalizations (β = 0.074, SE = 0.036, t(1551) = 2.033, p = 0.042, 95% CI: [0.002, 0.145]).

Behavioral data

Recognition accuracy was modulated by stimulus valence: negative vocalizations were less accurately recognized than neutral vocalizations (β = −0.087, SE = 0.033, t(363) = −2.595, p = 0.010, 95% CI: [−0.154, −0.021]; Table 3). Neither stimulus intensity and duration nor individual differences in HP affected recognition accuracy (p > 0.05).

Discussion

Alterations in vocal emotional perception were found to be associated with AVH in psychotic patients (Alba-Ferrara, Fernyhough, et al., 2012a; Rossell & Boundy, 2005; Shea et al., 2007). However, whether similar changes are observed in nonclinical participants with high HP remained to be clarified. Using ERP, the current study demonstrates that both early and later stages of vocal emotional processing are affected by HP. Furthermore, it provides preliminary evidence for a link between abnormal perception of vocal emotions and hallucination proneness, consistent with the hypothesis of a psychosis continuum (Baumeister et al., 2017; van Os et al., 2000; van Os et al., 2009).

Sensory processing of vocal emotions (N1) as a function of hallucination proneness

The auditory N1 indexes the sensory processing of the stimulus (Näätänen & Picton, 1987) and the allocation of resources to form and maintain a sensory memory trace of the eliciting stimulus (Obleser & Kotz, 2011). In good agreement with previous evidence (Liu et al., 2012), the current study revealed that positive (amusement) and negative (anger) vocalizations elicited a decreased N1 response compared to neutral vocalizations, suggesting that auditory sensory information is more easily processed when it has an emotional quality (Jessen & Kotz, 2011; Paulmann, Jessen, & Kotz, 2009). Studies using pure tones have shown that an increase in sound intensity (>70 dB) results in decreased N1 amplitude. Our results support this finding: higher sound intensity was associated with a less negative N1 amplitude, irrespective of valence. Beyond this main effect, we found a decreased N1 response to neutral relative to positive vocalizations in the high intensity condition, which indicates that valence modulates the effects of sound intensity on the N1, plausibly via arousal and its effects on attention (Lithari et al., 2010). Listeners tend to associate an increase in stimulus intensity with an increase in emotional arousal: for example, sound intensity correlates with distance and can signal whether danger is approaching (Schirmer et al., 2007). Arousal and attention effects were found to be reflected in an increased N1 response (Coull, 1998). The current finding may indicate that the sensory processing of loud emotional (positive) vocalizations is facilitated relative to the sensory processing of loud neutral vocalizations, possibly due to increased automatic attention. No significant changes in N1 amplitude were observed in response to duration manipulations, suggesting that the N1 was mainly modulated by intensity changes.

Consistent with our hypothesis, the N1 also was affected by HP. An increase in HP was associated with a decreased N1 amplitude in response to neutral relative to positive vocalizations, but only in the high intensity and long duration conditions, i.e., when sounds were physically more salient. In other words, more salient acoustic information was needed to normalize the N1 response to positive vocalizations as a function of increased HP. Similarly, alterations in the sensory processing of vocal emotions have been reported in psychotic patients with AVH (Fisher et al., 2008; Fisher et al., 2011).

Detection of the emotional salience of the voice (P2) as a function of hallucination proneness

In agreement with previous evidence (Liu et al., 2012; Sauter & Eimer, 2009), we observed a more positive P2 response to emotional relative to neutral vocalizations. This effect occurred specifically for positive vocalizations, supporting its socially relevant role (Pell et al., 2015; Pinheiro, Barros, Dias, & Kotz, 2017a; Pinheiro, Barros, Vasconcelos, Obermeier, & Kotz, 2017b). The P2 amplitude increase to positive vocalizations was further enhanced when the voice was acoustically more salient, i.e., high intensity and long duration conditions. This may have contributed to facilitated emotional salience detection.

We also observed that an increased HP resulted in a reverse P2 effect: loud and long positive vocalizations led to a decreased P2 amplitude relative to loud and long neutral vocalizations. This finding suggests that HP is associated with altered emotional salience detection in voices, particularly of positive vocal cues. Positive vocalizations could elicit decreased attention, which might affect how emotional salience is automatically perceived. Previous studies with psychotic patients demonstrated selective changes in salience detection from positive vocal cues (i.e., increased P2 amplitude to happy prosody – Pinheiro et al., 2013, 2014). The current findings suggest that the early stages of vocal emotional processing might be more affected during the perception of positive vocalizations also in nonclinical persons with high HP.

Cognitive evaluation of the emotional significance of the voice (LPP) as a function of hallucination proneness

Consistent with previous studies (Pell et al., 2015), we found that the LPP amplitude was increased for negative (angry) compared to neutral vocalizations, irrespective of stimulus intensity and duration. In healthy participants, the increased sustained attention to angry voices, reflected in larger LPP amplitudes, has been shown to indicate the preferential processing of potentially threatening cues (Frühholz & Grandjean, 2012). The current finding reveals that negative vocalizations were associated with enhanced sustained attention and required increased elaborative processing relative to neutral vocalizations, corroborating its adaptive function (Pell et al., 2015).

HP also affected the cognitive evaluation of the emotional significance of the voice. Typically, emotional vocalizations elicit an increased LPP amplitude compared to neutral vocal cues (Pell et al., 2015; Pinheiro et al., 2016a). In the current study, we observed that listeners with high HP benefited from cues that were acoustically more salient: the LPP was enhanced for negative relative to neutral vocalizations in the high intensity and long duration conditions. Studies with schizophrenia patients with AVH have shown enhanced sustained attention to negative vocal cues even when the acoustic properties of the voice were not manipulated (Alba-Ferrara, Fernyhough, et al., 2012a). However, the current study reveals that a negativity bias in voice perception (reflected in an increased LPP for negative relative to neutral voices) in participants with high HP is only observed when voices are acoustically more salient. Given that HP did not modulate the recognition accuracy of negative vocalizations, which achieved high recognition accuracy in all four acoustic conditions (Table 3), this pattern of findings suggests interactive effects of HP and salience rather than the effects of lower recognizability of negative cues.

Overall, our findings indicate that alterations in vocal emotional processing in high HP may be primarily driven by altered salience of acoustic representations of emotions. The aberrant salience hypothesis of psychosis (Kapur, 2003) postulates that psychotic symptoms are associated with altered dopaminergic transmission that leads to abnormal salience assignment to stimuli in the world. The current study indicates that high hallucination proneness in the general population also may be associated with changes in how salience is detected and assigned to emotional voices. Longitudinal investigations of nonclinical persons with high HP are necessary to examine whether and how changes in vocal emotional perception may predict transition to psychosis.

Limitations

The interpretation of the current findings should consider the relatively small sample size and convenience sampling approach. Evidence has shown little to no degree of bias in the estimation of fixed effects with mixed-effects modeling, even when the sample size is small (Clarke & Wheaton, 2007; Maas & Hox, 2005). Notwithstanding, the current findings (especially those concerning interactions between factors) should be considered exploratory and replicated in future studies with larger samples.

Conclusions

Our findings suggest that the sensory processing (N1), salience detection (P2), and cognitive evaluation of the emotional significance of vocalizations (LPP), taking place before a behavioral response is made, are affected by high HP. However, individual differences in HP did not modulate recognition accuracy of emotional vocalizations. Hence, electrophysiological measures may represent a more sensitive measure of the effects of high HP on the perception of vocal emotions. Importantly, the effects of high HP were modulated by the acoustic properties of emotional vocalizations, specifically intensity and duration, which were found to change the acoustic saliency of sounds. Additionally, these effects were valence-specific: whereas changes in the processing of positive vocalizations were enhanced in early stages (N1 and P2), changes in the processing of negative vocalizations were more pronounced in later cognitive stages (LPP). Similarities in ERP changes underlying the processing of vocal emotions in nonclinical persons with high HP and psychotic patients (Pinheiro et al., 2013, 2014) support the hypothesis of a psychosis continuum (Baumeister et al., 2017; van Os et al., 2000; van Os et al., 2009). Changes in voice perception mechanisms may be a core feature of hallucination proneness (Pinheiro, Schwartze, & Kotz, 2018).

Notes

The hypotheses of the current study were tested with mixed-effects models. Because the best procedure to determine sample size when using mixed-effects modeling remains to be specified (Maas & Hox, 2005; McNeish & Stapleton, 2016), sample size was determined based on previous studies probing the effects of HP measured with the LSHS (e.g., N = 20 – Bentall & Slade, 1985; N = 32 – Pinheiro et al., 2018; N = 40 – van't Wout, Aleman, Kessels, Larøi, & Kahn, 2004; N = 42 – Vercammen & Aleman, 2010).

In an exploratory analysis, we probed whether the ERP responses were modulated by the emotional content of AVH assessed with the PSYRATS. These effects were tested in the nine participants who more frequently reported hearing voices based on the LSHS items specifically tapping into AVH (i.e., “I have been troubled by hearing voices in my head.” “In the past, I have had the experience of hearing a person’s voice and then found that no one was there.” “I often hear a voice speaking my thoughts aloud.”) and follow-up questions (“It occurs to me very rarely. It occurs to me very often.”). The N1, P2, and LPP responses to vocal emotions showed no effects of the emotional content of AVH (p > 0.05).

References

Addington, J., Piskulic, D., Perkins, D., Woods, S. W., Liu, L., & Penn, D. L. (2012). Affect recognition in people at clinical high risk of psychosis. Schizophrenia Research, 140(1-3), 87-92. https://doi.org/10.1016/j.schres.2012.06.012

Alba-Ferrara, L., Fernyhough, C., Weis, S., Mitchell, R. L., & Hausmann, M. (2012a). Contributions of emotional prosody comprehension deficits to the formation of auditory verbal hallucinations in schizophrenia. Clinical Psychology Review, 32(4), 244-250. https://doi.org/10.1016/j.cpr.2012.02.003

Alba-Ferrara, L., Weis, S., Damjanovic, L., Rowett, M., & Hausmann, M. (2012b). Voice identity recognition failure in patients with schizophrenia. The Journal of Nervous and Mental Disease, 200(9), 784-790. https://doi.org/10.1097/NMD.0b013e318266f835

Altman, D. G., & Royston, P. (2006). The cost of dichotomising continuous variables. BMJ (Clinical research ed.), 332(7549), 1080. https://doi.org/10.1136/bmj.332.7549.1080

American Psychiatric Association (2000). Diagnostic and statistical manual of mental disorders: DSM-IV-TR (4th ed.). Washington, DC: American Psychiatric Association.

Amminger, G. P., Schäfer, M. R., Klier, C. M., Schlögelhofer, M., Mossaheb, N., Thompson, A., ... Nelson, B. (2012a). Facial and vocal affect perception in people at ultra-high risk of psychosis, first-episode schizophrenia and healthy controls. Early Intervention in Psychiatry, 6(4), 450-454. https://doi.org/10.1111/j.1751-7893.2012.00362.x

Amminger, G. P., Schäfer, M. R., Papageorgiou, K., Klier, C. M., Schlögelhofer, M., Mossaheb, N., … McGorry, P. D. (2012b). Emotion recognition in individuals at clinical high-risk for schizophrenia. Schizophrenia Bulletin, 38(5), 1030-1039. https://doi.org/10.1093/schbul/sbr015

Badcock, J. C., & Hugdahl, K. (2012). Cognitive mechanisms of auditory verbal hallucinations in psychotic and non-psychotic groups. Neuroscience and Biobehavioral Reviews, 36(1), 431-438. https://doi.org/10.1016/j.neubiorev.2011.07.010

Baayen, R. H., Davidson, D. J., & Bates, D. M. (2008). Mixed-effects modeling with crossed random effects for subjects and items. Journal of Memory and Language, 59(4), 390-412. https://doi.org/10.1016/j.jml.2007.12.005

Bagiella, E., Sloan, R. P., & Heitjan, D. F. (2000). Mixed-effects models in psychophysiology. Psychophysiology, 37, 13-20. https://doi.org/10.1017/S0048577200980648

Bates, D., Maechler, M., Bolker, B., & Walker, S. (2014). lme4: Linear mixed-effects models using Eigen and S4. R package version, 1(7), 1-23.

Baumeister, D., Sedgwick, O., Howes, O., & Peters, E. (2017). Auditory verbal hallucinations and continuum models of psychosis: A systematic review of the healthy voice-hearer literature. Clinical Psychology Review, 51, 125-141. https://doi.org/10.1016/j.cpr.2016.10.010

Belin, P., Bestelmeyer, P. E. G., Latinus, M., & Watson, R. (2011). Understanding Voice Perception. British Journal of Psychology, 102(4), 711-725. https://doi.org/10.1111/j.2044-8295.2011.02041.x

Belin, P., Fecteau, S., & Bédard, C. (2004). Thinking the voice: Neural correlates of voice perception. Trends in Cognitive Sciences, 8(3), 129-135. https://doi.org/10.1016/j.tics.2004.01.008

Belin, P., Fillion-Bilodeau, S., & Gosselin, F. (2008). The Montreal Affective Voices: A validated set of nonverbal affect bursts for research on auditory affective processing. Behavior Research Methods, 40(2), 531-539. https://doi.org/10.3758/BRM.40.2.531

Bentall, R. P., & Slade, P. D. (1985). Reality testing and auditory hallucinations: A signal detection analysis. British Journal of Clinical Psychology, 24(3), 159-169. https://doi.org/10.1111/j.2044-8260.1985.tb01331.x

Boersma, P., & Weenink, D. (2005). Praat: Doing phonetics by computer. 2009. Computer program available at http://www.praat.org.

Bozikas, V. P., Kosmidis, M. H., Anezoulaki, D., Giannakou, M., Andreou, C., & Karavatos, A. (2006). Impaired Perception of Affective Prosody in Schizophrenia. Journal of Neuropsychiatry and Clinical Neurosciences, 18(1), 81-85. https://doi.org/10.1176/jnp.18.1.81

Bradbury, D. A., Stirling, J., Cavill, J., & Parker, A. (2009). Psychosis-like experiences in the general population: An exploratory factor analysis. Personality and Individual Differences, 46(7), 729-734. https://doi.org/10.1016/j.paid.2009.01.035

Brébion, G., Stephan-Otto, C., Ochoa, S., Roca, M., Nieto, L., & Usall, J. (2016). Impaired self-monitoring of inner speech in schizophrenia patients with verbal hallucinations and in non-clinical individuals prone to hallucinations. Frontiers in Psychology, 7, 1381. https://doi.org/10.3389/fpsyg.2016.01381

Canavarro, M. C. (1999). Inventário de Sintomas Psicopatológicos – B.S.I. In M. R. Simões, M. M. Gonçalves, & L. S. Almeida (Eds.), Testes e provas psicológicas em Portugal (Vol. 2, pp. 95-109). Braga: APPORT/SHO.

Castiajo, P., & Pinheiro, A. P. (2019). Decoding emotions from nonverbal vocalizations: How much voice signal is enough?. Motivation and Emotion, 43, 803-813. https://doi.org/10.1007/s11031-019-09783-9

Castiajo, P., & Pinheiro, A. P. (2017). On “hearing” voices and “seeing” things: Probing hallucination predisposition in a Portuguese nonclinical sample with the Launay-Slade Hallucination Scale-Revised. Frontiers in Psychology, 8(1138), 1-17. https://doi.org/10.3389/fpsyg.2017.01138

Chang, J., Zhang, X., Zhang, Q., & Sun, Y. (2018). Investigating duration effects of emotional speech stimuli in a tonal language by using event-related potentials. IEEE Access, 6, 13541-13554. https://doi.org/10.1109/ACCESS.2018.2813358

Choong, C., Hunter, M. D., & Woodruff, P. W. R. (2007). Auditory hallucinations in those populations that do not suffer from schizophrenia. Current Psychiatry Reports, 9(3), 206-212. https://doi.org/10.1007/s11920-007-0020-z

Clarke, P., & Wheaton, B. (2007). Addressing data sparseness in contextual population research: Using cluster analysis to create synthetic neighborhoods. Sociological Methods & Research, 35(3), 311-351.

Coull, J. T. (1998). Neural correlates of attention and arousal: Insights from electrophysiology, functional neuroimaging and psychopharmacology. Progress in Neurobiology, 55(4), 343-361. https://doi.org/10.1016/S0301-0082(98)00011-2

Daalman, K., Boks, M. P. M., Diederen, K. M. J., de Weijer, A. D., Blom, J. D., Kahn, R. S., & Sommer, I. E. C. (2011). The same or different? A phenomenological comparison of auditory verbal hallucinations in healthy and psychotic individuals. Journal of Clinical Psychiatry, 72(3), 320-325.

de Leede-Smith, S., & Barkus, E. (2013). A comprehensive review of auditory verbal hallucinations: Lifetime prevalence, correlates and mechanisms in healthy and clinical individuals. Frontiers in Human Neuroscience, 7, 367. https://doi.org/10.3389/fnhum.2013.00367

Derogatis, L. R., & Spencer, P. (1982). Brief Symptom Inventory (BSI). Clinical Psychometric Research.

Diederen, K. M. J., Daalman, K., De Weijer, A. D., Neggers, S. F. W., Van Gastel, W., Blom, J. D., … Sommer, I. E. C. (2012). Auditory hallucinations elicit similar brain activation in psychotic and nonpsychotic individuals. Schizophrenia Bulletin, 38(5), 1074-1082. https://doi.org/10.1093/schbul/sbr033

Duncan, C. C., Barry, R. J., Connolly, J. F., Fischer, C., Michie, P. T., Näätänen, R., ... & Van Petten, C. (2009). Event-related potentials in clinical research: Guidelines for eliciting, recording, and quantifying mismatch negativity, P300, and N400. Clinical Neurophysiology, 120(11), 1883-1908. https://doi.org/10.1016/j.clinph.2009.07.045

Edwards, J., Pattison, P. E., Jackson, H. J., & Wales, R. J. (2001). Facial affect and affective prosody recognition in first-episode schizophrenia. Schizophrenia Research, 48(2-3), 235-253. https://doi.org/10.1016/S0920-9964(00)00099-2

Fisher, D. J., Grant, B., Smith, D. M., Borracci, G., Labelle, A., & Knott, V. J. (2011). Effects of auditory hallucinations on the mismatch negativity (MMN) in schizophrenia as measured by a modified ‘optimal’ multi-feature paradigm. International Journal of Psychophysiology, 81(3), 245-251. https://doi.org/10.1016/j.ijpsycho.2011.06.018

Fisher, D. J., Labelle, A., & Knott, V. J. (2008). The right profile: mismatch negativity in schizophrenia with and without auditory hallucinations as measured by a multi-feature paradigm. Clinical Neurophysiology, 119(4), 909-921. https://doi.org/10.1016/j.clinph.2007.12.005

Frühholz, S., & Grandjean, D. (2012). Towards a fronto-temporal neural network for the decoding of angry vocal expressions. Neuroimage, 62(3), 1658-1666. https://doi.org/10.1016/j.neuroimage.2012.06.015

Giannitelli, M., Xavier, J., François, A., Bodeau, N., Laurent, C., Cohen, D., & Chaby, L. (2015). Facial, vocal and cross-modal emotion processing in early-onset schizophrenia spectrum disorders. Schizophrenia Research, 168(1), 252-259. https://doi.org/10.1016/j.schres.2015.07.039

Gratton, G., Coles, M. G., & Donchin, E. (1983). A new method for off-line removal of ocular artifact. Electroencephalography and Clinical Neurophysiology, 55(4), 468-484.

Haddock, G., McCarron, J., Tarrier, N., & Faragher, E. B. (1999). Scales to measure dimensions of hallucinations and delusions: the psychotic symptom rating scales (PSYRATS). Psychological Medicine, 29(4), 879-889. https://doi.org/10.1017/S0033291799008661

Hajcak, G., MacNamara, A., Foti, D., Ferri, J., & Keil, A. (2013). The dynamic allocation of attention to emotion: Simultaneous and independent evidence from the late positive potential and steady state visual evoked potentials. Biological Psychology, 92(3), 447-455. https://doi.org/10.1016/j.biopsycho.2011.11.012

Hooker, C., & Park, S. (2002). Emotion processing and its relationship to social functioning in schizophrenia patients. Psychiatry Research, 112(1), 41-50. https://doi.org/10.1016/S0165-1781(02)00177-4

Jaeger, T. F. (2008). Categorical data analysis: Away from ANOVAs (transformation or not) and towards logit mixed models. Journal of Memory and Language, 59(4), 434-446. https://doi.org/10.1016/j.jml.2007.11.007

Jessen, S., & Kotz, S. A. (2011). The temporal dynamics of processing emotions from vocal, facial, and bodily expressions. Neuroimage, 58(2), 665-674. https://doi.org/10.1016/j.neuroimage.2011.06.035

Joo, J. W. J., Hormozdiari, F., Han, B., & Eskin, E. (2016). Multiple testing correction in linear mixed models. Genome Biology, 17(1), 1-18. https://doi.org/10.1186/s13059-016-0903-6

Johns, L. C., Cannon, M., Singleton, N., Murray, R. M., Farrell, M., Brugha, T., ... Meltzer, H. (2004). Prevalence and correlates of self-reported psychotic symptoms in the British population. The British Journal of Psychiatry, 185(4), 298-305. https://doi.org/10.1192/bjp.185.4.298

Johns, L. C., Kompus, K., Connell, M., Humpston, C., Lincoln, T. M., Longden, E., ... & Fernyhough, C. (2014). Auditory verbal hallucinations in persons with and without a need for care. Schizophrenia Bulletin, 40(4), S255-S264. https://doi.org/10.1093/schbul/sbu005

Kang, J. I., Kim, J. J., Seok, J. H., Chun, J. W., Lee, S. K., & Park, H. J. (2009). Abnormal brain response during the auditory emotional processing in schizophrenic patients with chronic auditory hallucinations. Schizophrenia Research, 107(1), 83-91. https://doi.org/10.1016/j.schres.2008.08.019

Kapur, S. (2003). Psychosis as a state of aberrant salience: a framework linking biology, phenomenology, and pharmacology in schizophrenia. American Journal of Psychiatry, 160(1), 13-23. https://doi.org/10.1176/appi.ajp.160.1.13

Kuperberg, G. R., West, W. C., Lakshmanan, B. M., & Goff, D. (2008). Functional magnetic resonance imaging reveals neuroanatomical dissociations during semantic integration in schizophrenia. Biological Psychiatry, 64(5), 407-418. https://doi.org/10.1016/j.biopsych.2008.03.018

Kuznetsova, A., Brockhoff, P. B., & Christensen, R. H. B. (2017). lmerTest package: Tests in linear mixed effects models. Journal of Statistical Software, 82(13). https://doi.org/10.18637/jss.v082.i13

Larøi, F. (2012). How do auditory verbal hallucinations in patients differ from those in non-patients?. Frontiers in Human Neuroscience, 6, 1-9. https://doi.org/10.3389/fnhum.2012.00025

Larøi, F., Sommer, I. E., Blom, J. D., Fernyhough, C., Ffytche, D. H., Hugdahl, K., … Waters, F. (2012). The characteristic features of auditory verbal hallucinations in clinical and nonclinical groups: State-of-the-art overview and future directions. Schizophrenia Bulletin, 38(4), 724-733. https://doi.org/10.1093/schbul/sbs061

Larøi, F., & van der Linden, M. (2005). Nonclinical Participants' Reports of Hallucinatory Experiences. Canadian Journal of Behavioural Science/Revue Canadienne des Sciences du Comportement, 37(1), 33-43. https://doi.org/10.1037/h0087243

Larøi, F., van der Linden, M., & Marczewski, P. (2004a). The effects of emotional salience, cognitive effort and meta-cognitive beliefs on a reality monitoring task in hallucination-prone subjects. British Journal of Clinical Psychology, 43(3), 221-233. https://doi.org/10.1348/0144665031752970

Larøi, F., Marczewski, P., & van der Linden, M. (2004b). Further evidence of the multidimensionality of hallucinatory predisposition: Factor structure of a modified version of the Launay-Slade Hallucinations Scale in a normal sample. European Psychiatry, 19(1), 15-20. https://doi.org/10.1016/S0924-9338(03)00028-2

Leitman, D. I., Foxe, J. J., Butler, P. D., Saperstein, A., Revheim, N., & Javitt, D. C. (2005). Sensory contributions to impaired prosodic processing in schizophrenia. Biological Psychiatry, 58(1), 56-61. https://doi.org/10.1016/j.biopsych.2005.02.034

Leitman, D. I., Hoptman, M. J., Foxe, J. J., Saccente, E., Wylie, G. R., Nierenberg, J., … Javitt, D. C. (2007). The neural substrates of impaired prosodic detection in schizophrenia and its sensorial antecedents. American Journal of Psychiatry, 164(3), 474-482.

Linden, D. E., Thornton, K., Kuswanto, C. N., Johnston, S. J., van de Ven, V., & Jackson, M. C. (2010). The brain’s voices: Comparing nonclinical auditory hallucinations and imagery. Cerebral Cortex, 21(2), 330-337. https://doi.org/10.1093/cercor/bhq097

Lithari, C., Frantzidis, C. A., Papadelis, C., Vivas, A. B., Klados, M. A., Kourtidou-Papadeli, C., ... Bamidis, P. D. (2010). Are females more responsive to emotional stimuli? A neurophysiological study across arousal and valence dimensions. Brain Topography, 23(1), 27-40. https://doi.org/10.1007/s10548-009-0130-5

Liu, T., Pinheiro, A. P., Deng, G., Nestor, P. G., McCarley, R. W., & Niznikiewicz, M. A. (2012). Electrophysiological insights into processing nonverbal emotional vocalizations. NeuroReport, 23(2), 108-112. https://doi.org/10.1097/WNR.0b013e32834ea757

Maas, C. J., & Hox, J. J. (2005). Sufficient sample sizes for multilevel modeling. Methodology, 1(3), 86-92. https://doi.org/10.1027/1614-2241.1.3.86

Maijer, K., Begemann, M. J., Palmen, S. J., Leucht, S., & Sommer, I. E. (2018). Auditory hallucinations across the lifespan: A systematic review and meta-analysis. Psychological Medicine, 48(6), 879-888. https://doi.org/10.1017/S0033291717002367

McNeish, D. M., & Stapleton, L. M. (2016). The effect of small sample size on two-level model estimates: A review and illustration. Educational Psychology Review, 28(2), 295-314. https://doi.org/10.1007/s10648-014-9287-x

Morrison, A. P., Wells, A., & Nothard, S. (2000). Cognitive factors in predisposition to auditory and visual hallucinations. British Journal of Clinical Psychology, 39(1), 67-78. https://doi.org/10.1348/014466500163112

Morrison, A. P., Wells, A., & Nothard, S. (2002). Cognitive and emotional predictors of predisposition to hallucinations in non-patients. British Journal of Clinical Psychology, 41(3), 259-270. https://doi.org/10.1348/014466502760379127

Näätänen, R., Pakarinen, S., Rinne, T., & Takegata, R. (2004). The mismatch negativity (MMN): Towards the optimal paradigm. Clinical Neurophysiology, 115, 140-144. https://doi.org/10.1016/j.clinph.2003.04.001

Näätänen, R., & Picton, T. (1987). The N1 wave of the human electric and magnetic response to sound: a review and an analysis of the component structure. Psychophysiology, 24(4), 375-425. https://doi.org/10.1111/j.1469-8986.1987.tb00311.x

Nakagawa, S. (2004). A farewell to Bonferroni: The problems of low statistical power and publication bias. Behavioral Ecology, 15(6), 1044-1045. https://doi.org/10.1093/beheco/arh107

Nayani, T. H., & David, A. S. (1996). The auditory hallucination: A phenomenological survey. Psychological Medicine, 26(1), 177-189. https://doi.org/10.1017/S003329170003381X

Obleser, J., & Kotz, S. A. (2011). Multiple brain signatures of integration in the comprehension of degraded speech. Neuroimage, 55(2), 713-723. https://doi.org/10.1016/j.neuroimage.2010.12.020

Oldfield, R. C. (1971). The assessment and analysis of handedness: The Edinburgh Inventory. Neuropsychologia, 9(1), 97-113.

Paulmann, S., Bleichner, M., & Kotz, S. A. (2013). Valence, arousal, and task effects in emotional prosody processing. Frontiers in Psychology, 4, 345. https://doi.org/10.3389/fpsyg.2013.00345

Paulmann, S., Jessen, S., & Kotz, S. A. (2009). Investigating the multimodal nature of human communication: Insights from ERPs. Journal of Psychophysiology, 23(2), 63-76. https://doi.org/10.1027/0269-8803.23.2.63

Paulmann, S., & Kotz, S. A. (2008a). An ERP investigation on the temporal dynamics of emotional prosody and emotional semantics in pseudo-and lexical-sentence context. Brain and Language, 105(1), 59-69. https://doi.org/10.1016/j.bandl.2007.11.005

Paulmann, S., & Kotz, S. A. (2008b). Early emotional prosody perception based on different speaker voices. Neuroreport, 19(2), 209-213. https://doi.org/10.1097/WNR.0b013e3282f454db

Pell, M. D., Rothermich, K., Liu, P., Paulmann, S., Sethi, S., & Rigoulot, S. (2015). Preferential decoding of emotion from human non-linguistic vocalizations versus speech prosody. Biological Psychology, 111, 14-25. https://doi.org/10.1016/j.biopsycho.2015.08.008

Pinheiro, A. P., Barros, C., Dias, M., & Kotz, S. A. (2017a). Laughter catches attention!. Biological Psychology, 130, 11-21. https://doi.org/10.1016/j.biopsycho.2017.09.012

Pinheiro, A. P., Barros, C., Vasconcelos, M., Obermeier, C., & Kotz, S. A. (2017b). Is laughter a better vocal change detector than a growl?. Cortex, 92, 233-248. https://doi.org/10.1016/j.cortex.2017.03.018

Pinheiro, A. P., del Re, E., Mezin, J., Nestor, P. G., Rauber, A., McCarley, R. W., … Niznikiewicz, M. A. (2013). Sensory-based and higher-order operations contribute to abnormal emotional prosody processing in schizophrenia: An electrophysiological investigation. Psychological Medicine, 43(3), 603-618. https://doi.org/10.1017/S003329171200133X

Pinheiro, A. P., Farinha-Fernandes, A., Roberto, M. S., & Kotz, S. A. (2019). Self-voice perception and its relationship with hallucination predisposition. Cognitive Neuropsychiatry, 1-19. https://doi.org/10.1080/13546805.2019.1621159

Pinheiro, A. P., Rezaii, N., Nestor, P. G., Rauber, A., Spencer, K. M., & Niznikiewicz, M. (2016a). Did you or I say pretty, rude or brief? An ERP study of the effects of speaker’s identity on emotional word processing. Brain and Language, 153, 38-49. https://doi.org/10.1016/j.bandl.2015.12.003

Pinheiro, A. P., Rezaii, N., Rauber, A., Liu, T., Nestor, P. G., McCarley, R. W., … Niznikiewicz, M. A. (2014). Abnormalities in the processing of emotional prosody from single words in schizophrenia. Schizophrenia Research, 152(1), 235-241. https://doi.org/10.1016/j.schres.2013.10.042

Pinheiro, A. P., Rezaii, N., Rauber, A., Nestor, P. G., Spencer, K. M., & Niznikiewicz, M. (2017c). Emotional self–other voice processing in schizophrenia and its relationship with hallucinations: ERP evidence. Psychophysiology, 54(9), 1252-1265. https://doi.org/10.1111/psyp.12880

Pinheiro, A. P., Rezaii, N., Rauber, A., & Niznikiewicz, M. (2016b). Is this my voice or yours? The role of emotion and acoustic quality in self-other voice discrimination in schizophrenia. Cognitive Neuropsychiatry, 21(4), 335-353. https://doi.org/10.1080/13546805.2016.1208611

Pinheiro, A. P., Schwartze, M., & Kotz, S. A. (2018). Voice-selective prediction alterations in nonclinical voice hearers. Scientific Reports, 8(1), 1-10. https://doi.org/10.1038/s41598-018-32614-9

Raine, A. (1991). The SPQ: A scale for the assessment of schizotypal personality based on DSM-III-R criteria. Schizophrenia Bulletin, 17(4), 555-567. https://doi.org/10.1093/schbul/17.4.555

Rossell, S. L., & Boundy, C. L. (2005). Are auditory-verbal hallucinations associated with auditory affective processing deficits?. Schizophrenia Research, 78(1), 95-106. https://doi.org/10.1016/j.schres.2005.06.002

Santos, F. (2011). A Dimensionalidade dos Fenómenos Esquizotípicos: Validação e Adaptação do Schizotypal Personality Questionnaire (Unpublished master’s thesis). F.P.C.E University of Coimbra, Portugal.

Sauter, D. A., & Eimer, M. (2009). Rapid detection of emotion from human vocalizations. Journal of Cognitive Neuroscience, 22(3), 474-481. https://doi.org/10.1162/jocn.2009.21215

Schirmer, A., & Kotz, S. A. (2006). Beyond the right hemisphere: Brain mechanisms mediating vocal emotional processing. Trends in Cognitive Sciences, 10(1), 24-30. https://doi.org/10.1016/j.tics.2005.11.009

Schirmer, A., Simpson, E., & Escoffier, N. (2007). Listen up! Processing of intensity change differs for vocal and nonvocal sounds. Brain Research, 1176, 103-112. https://doi.org/10.1016/j.brainres.2007.08.008

Schupp, H. T., Cuthbert, B. N., Bradley, M. M., Cacioppo, J. T., Ito, T., & Lang, P. J. (2000). Affective picture processing: The late positive potential is modulated by motivational relevance. Psychophysiology, 37(2), 257-261. https://doi.org/10.1111/1469-8986.3720257

Selvin, S. (1987). Two issues concerning the analysis of grouped data. European Journal of Epidemiology, 3(3), 284-287.

Shea, T. L., Sergejew, A. A., Burnham, D., Jones, C., Rossell, S. L., Copolov, D. L., & Egan, G. F. (2007). Emotional prosodic processing in auditory hallucinations. Schizophrenia Research, 90(1-3), 214-220. https://doi.org/10.1016/j.schres.2006.09.021

Sommer, I. E., Daalman, K., Rietkerk, T., Diederen, K. M., Bakker, S., Wijkstra, J., & Boks, M. P. (2010). Healthy individuals with auditory verbal hallucinations; who are they? Psychiatric assessments of a selected sample of 103 subjects. Schizophrenia Bulletin, 36(3), 633-641. https://doi.org/10.1093/schbul/sbn130

Streiner, D. L., & Norman, G. R. (2011). Correction for multiple testing: Is there a resolution? Chest, 140(1), 16-18. https://doi.org/10.1378/chest.11-0523

Telles-Correia, D., Barbosa-Rocha, N., Gama-Marques, J., Moreira, A. L., Alves-Moreira, C., Saraiva, S., ... Haddock, G. (2017). Validation of the Portuguese version of the Psychotic Symptom Rating Scales (PSYRATS). Actas Espanolas de Psiquiatria, 45(2), 56-61.

Thierry, G., & Roberts, M. V. (2007). Event-related potential study of attention capture by affective sounds. Neuroreport, 18(3), 245-248. https://doi.org/10.1097/WNR.0b013e328011dc95

Thompson, B. (2002). What future quantitative social science research could look like: Confidence intervals for effect sizes. Educational Researcher, 31(3), 25-32.

Tucker, R., Farhall, J., Thomas, N., Groot, C., & Rossell, S. L. (2013). An examination of auditory processing and affective prosody in relatives of patients with auditory hallucinations. Frontiers in Human Neuroscience, 7, 1-11. https://doi.org/10.3389/fnhum.2013.00531

Waters, F. A., Badcock, J. C., & Maybery, M. T. (2003). Revision of the factor structure of the Launay–Slade Hallucination Scale (LSHS-R). Personality and Individual Differences, 35(6), 1351-1357. https://doi.org/10.1016/S0191-8869(02)00354-9

Weisgerber, A., Vermeulen, N., Peretz, I., Samson, S., Philippot, P., Maurage, P., ... de Longueville, X. (2015). Facial, vocal and musical emotion recognition is altered in paranoid schizophrenic patients. Psychiatry Research, 229(1-2), 188-193. https://doi.org/10.1016/j.psychres.2015.07.042

van Os, J., Hanssen, M., Bijl, R. V., & Ravelli, A. (2000). Strauss (1969) revisited: A psychosis continuum in the general population? Schizophrenia Research, 45(1), 11-20. https://doi.org/10.1016/S0920-9964(99)00224-8

van Os, J., Hanssen, M., Bijl, R. V., & Vollebergh, W. (2001). Prevalence of psychotic disorder and community level of psychotic symptoms: An urban-rural comparison. Archives of General Psychiatry, 58(7), 663-668.

van Os, J., & Linscott, R. J. (2012). Introduction: The extended psychosis phenotype relationship with schizophrenia and with ultrahigh risk status for psychosis. Schizophrenia Bulletin, 38(2), 227-230. https://doi.org/10.1093/schbul/sbr188

van Os, J., Linscott, R. J., Myin-Germeys, I., Delespaul, P., & Krabbendam, L. (2009). A systematic review and meta-analysis of the psychosis continuum: Evidence for a psychosis proneness–persistence–impairment model of psychotic disorder. Psychological Medicine, 39, 179-195. https://doi.org/10.1017/S0033291708003814

van't Wout, M., Aleman, A., Kessels, R. P., Larøi, F., & Kahn, R. S. (2004). Emotional processing in a non-clinical psychosis-prone sample. Schizophrenia Research, 68(2), 271-281. https://doi.org/10.1016/j.schres.2003.09.006

Vercammen, A., & Aleman, A. (2010). Semantic expectations can induce false perceptions in hallucination-prone individuals. Schizophrenia Bulletin, 36(1), 151-156. https://doi.org/10.1093/schbul/sbn063

Acknowledgments

The authors are grateful to all participants who took part in this study.

Funding

This work was supported by a Doctoral Grant SFRH/BD/92772/2013 awarded to PC, and by Grants IF/00334/2012, PTDC/MHN-PCN/3606/2012, and PTDC/MHC-PCN/0101/2014 awarded to APP. These Grants were funded by the Science and Technology Foundation (Fundação para a Ciência e a Tecnologia - FCT, Portugal) and FEDER (European Regional Development Fund) through the European programs QREN (National Strategic Reference Framework) and COMPETE (Operational Programme “Thematic Factors of Competitiveness”).

Author information

Contributions

AP conceived and designed the study. PC collected, analyzed, and interpreted the data and drafted the manuscript. AP supervised all steps of the study and critically revised the manuscript.

Ethics declarations

Conflict of interest

No potential conflict of interest was reported by the authors.

Additional information

Open practices statements

None of the data reported here is available, and none of the experiments was preregistered. The stimuli used (vocalizations) are presented as supplementary material.

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Castiajo, P., Pinheiro, A.P. Acoustic salience in emotional voice perception and its relationship with hallucination proneness. Cogn Affect Behav Neurosci 21, 412–425 (2021). https://doi.org/10.3758/s13415-021-00864-2

DOI: https://doi.org/10.3758/s13415-021-00864-2