Abstract

Research into the capacity of audiovisual integration has previously assessed whether capacity is strictly limited to a single item, or whether it can exceed one item under certain environmental conditions. More recently, investigations have turned to examining the effects of various stimulus factors on capacity. Across two experiments, we looked at a number of factors that were expected to play a modulatory role on capacity. Experiment 1 deployed a manipulation of illusory polygons, revealing an increase in audiovisual capacity, even in an absence of visual connections. This demonstrates that exceeding the capacity of 1 does not only represent a functional increase in the binding of a singular, complex visual object, but that it can also represent binding of multiple simpler objects. Findings also support the hypothesis that capacity modulates quantitatively, but not qualitatively, with respect to speed of presentation. Experiment 2 examined the effects of different sound types (sine tones or white noise) and of different spatial visual field sizes on the capacity of audiovisual integration. The results indicate that capacity is maximized when stimuli are presented in a smaller circle (7.5°) if alongside a sine tone, and when presented in a larger circle (18.5°) alongside white noise. These results suggest that audiovisual integration capacity is dependent on the combination of sound type and visual spatial field size. The combination of these results reveal additional phenomenological features of the capacity of audiovisual integration, and provides impetus for further research into applications of the findings.

Similar content being viewed by others

Introduction

The capacity limits of various information processing elements have been, and continue to be, important topics of study in perception and cognition. Early work in this field includes the classic work of Miller (1956) who established the “magic number” 7 for the capacity of working memory, as well as Cowan’s (2001) work on visual working-memory capacity. More recently, attention has turned to considering the capacity of audiovisual integration, with two major theoretical perspectives suggesting different capacities: either the capacity of audiovisual integration is strictly limited to a single item (Van der Burg, Awh, & Olivers, 2013), or it is flexible, can exceed one, and modulates based on unimodal and multimodal stimulus factors (Wilbiks & Dyson, 2016, 2018). While an ecological argument can be made for the limit of one item, it also seems unlikely that capacity should be strictly limited in this way, when there are situations in which binding more than one object could be adaptive (e.g., binding candidates; Wilbiks & Dyson, 2013). Most importantly, a great deal of inter-individual variation in data from both research groups named above seems to indicate that capacity is indeed a flexible quantity, which varies based on stimulus demands and individual differences. The current research addresses variation in capacity as a function of differing auditory and visual components being presented to participants.

Multisensory integration plays an important role in the way we perceive and interact with the world. The capacity to attend to a single stimulus in our noisy environment can be challenging, but can be facilitated by the presentation of a simultaneous (or near-simultaneous) tone (Bertelson, Pavani, Ladavas, Vroomen, & de Gelder, 2000; Van der Burg, Olivers, Bronkhorst, Theeuwes, 2008; Vroomen & de Gelder, 2000). In Van der Burg et al.’s (2008) study of the “pip -and-pop” effect, visual targets were presented among an array of similar distractor stimuli, in cases where it is normally very difficult to locate the target. They found that presenting an irrelevant, spatially uninformative auditory event can drastically decrease the time needed for participants to complete this type of serial search task. These results demonstrated the possible involvement of stimulus-driven multisensory integration on attentional selection using a difficult visual search task. Van der Burg et al. (2008) also showed that presentation of a visual warning signal provided no facilitation in the pip-and-pop task, which indicates that the integration of an auditory tone with a specific visual presentation leads it to “pop out” of the display and allows further processing of that particular visual presentation to be prioritized. Furthering the contribution of these results, Van der Burg, Cass, Olivers, Theeuwes, and Alais (2010) showed that the observed facilitatory effect was not due to general alerting, nor was it due to top-down cueing of the visual change. Instead, they proposed that the temporal information provided by the auditory signal is integrated with the visual signal, generating a more prominent emergent feature that automatically draws attention. Van der Burg et al. (2010) therefore proposed that the binding of synchronized audiovisual signals occurs rapidly, automatically, and effortlessly, with the auditory signal attaching to the visual signal relatively early in the perceptual process. As a result, the visual target becomes more salient within its dynamic, cluttered environment.

Fujisaki, Koene, Arnold, Johnston, and Nishida (2006) ascertained that a certain degree of temporal synchrony must be in place for a causal relationship to be present. They found that identifying a visual stimulus that changed at the same time as an auditory stimulus became more difficult for participants as the number of unsynchronized visual distractors increased. The amount of time needed by participants increased commensurate with the number of distractors. Additionally, they found that the same visual distractors did not affect target identification if the target location was pre-cued. Fujisaki et al. (2006) interpreted their participants’ performances on this serial search task as a suggestion that the analysis of audiovisual temporal events is not innate and automatic, but instead that it necessitates effortful cognitive analysis. Auditory facilitation of visual target discrimination can therefore be understood as being linked to late-stage cognitive processes. Kösem and van Wassenhove (2012) furthered these findings by studying the extent to which temporal regularities affect the detection and identification of events across sensory modalities, finding that visual stimuli are better processed when they are accompanied by a tone sequence at a regular rhythm. Further, Kawachi, Grove, and Sakurai (2014) suggest that the human perceptual system can resolve ambiguity of multiple objects in motion through a cross-modal interaction using a single auditory cue. Their results reveal how a brief tone can modulate the perception of visual events when they occur very briefly after the auditory cue has been presented (Kawachi et al., 2014). However, these findings leave us with further questions, and it remains unclear how synchronous audiovisual stimuli are detected in temporally cluttered audiovisual environments.

The study of audiovisual integration is closely related to the study of visual working memory, in that it stands to reason that maintenance of multiple visual stimuli in working memory is required in order to subsequently integrate those visual stimuli with an auditory stimulus (e.g., Van der Burg et al., 2013). Much of the early work into visual working memory capacity was conducted by Cowan (2001), who showed that there is a limit of between three and five objects, and this finding is in alignment with that of other researchers (e.g., Luck & Vogel, 1997; Pylyshyn, 2001). In later work, Cowan (2010) theorized that the reason for the limited capacity of visual working memory is a product of both retroactive interference and of a temporal limitation on neural firing, as each memory item needs to be maintained every 100 ms. In our earlier work (Wilbiks & Dyson, 2016), we examined neural correlates of audiovisual integration capacity, and found that at the fastest speeds of presentation (150 ms stimulus-onset asynchrony (SOA)) the incoming sensory information was so degraded that audiovisual integration capacity could not reliably exceed one item. This maps clearly onto Cowan’s (2001, 2010) findings, and reinforces the apparent relationship between audiovisual integration capacity and visual working-memory capacity.

In considering the qualitative nature of visual working-memory span, Vogel, Woodman, and Luck (2001), concluded that four items was the maximum capacity of visual working memory, and stated that complexity of the objects being shown had no influence on the capacity. However, later studies conducted by Alvarez and Cavanagh (2004) and Eng, Chen, and Jiang (2005) suggested otherwise, both showing evidence that the greater informational load a visual stimulus holds, the fewer items of that category can be held in visual working memory. Awh, Barton, and Vogel (2007) examined whether complexity is strictly bound to a specific number of ‘slots’ or if it can also be quantified in terms of complexity of objects. Their findings suggested that working memory does indeed hold a fixed number of objects, regardless of their complexity. The findings of Alvarez and Cavanagh (2004) and Awh et al. (2007) are in theoretical opposition to one another. While Awh et al. (2007) conceptualize a discrete number of slots in working memory, regardless of stimulus complexity, Alvarez and Cavanagh (2004) assert that trying to remember more complex stimuli would necessarily lead to a reduction in the numerical capacity of working memory. The current research is an attempt to examine these two alternatives in an audiovisual integration task, and will shed light on the ability of individuals to integrate multiple visual stimuli that are separate from one another with a tone.

One technique that has been shown to be useful in processing information for storage in visual working memory is the use of chunking (Miller, 1956; Gobet, Lane, Croker, Cheng, Jones, Oliver, & Pine, 2001). While chunking has traditionally been invoked as a memory technique, recent research has also shown that it can be used to increase the number of individual items that can be perceived. Sargent, Dopkins, Philbeck, and Chickha (2010) found that participants were better at identifying targets scattered throughout a room if those targets were in close physical proximity to one another. This finding is supported by work on perceptual grouping of stimuli through both being in a common region (e.g., in a delineated space), but also in close proximity to one another (Botta, Lupianez, & Sanabria, 2013). They found that visuo-spatial cues were most effective when the targets that were being cued were grouped by both common region and proximity. Based on these findings, it would seem to be important to investigate another set of stimulus factors that has been suggested to affect audiovisual integration, which includes the size of the field of vision in which stimuli are presented. Laberge and Brown (1986) used a flanker control method to restrict the location and size of initial attentional focus, and found that a faster response was given to a target stimulus at a specific retinal location when the range of expectation was larger. Castiello and Umilta (1989) observed eye-movement in assessing the spatial extent of attentional selection. They demonstrated that attentional focus could be adjusted so that it would cover different-sized areas of the visual field, that an increase in one’s area of attentional focus leads to a reduction in processing efficiency, and that there was a gradual drop-off in processing efficiency around the attentional focus. In a visual-cueing study, Botta, Santangelo, Raffone, Lupianez, and Belardinelli (2010) found that the distance between visual targets was an important factor in the effectiveness of cues, suggesting that when exogenous (rather than endogenous) cues were used, distance was the most important factor in cueing. Further investigations using a similar paradigm showed that audiovisual integration of bimodal cues provided a stronger cueing effect than unimodal auditory or visual cues alone (Botta, Santangelo, Raffone, Sanabria, Lupianez, & Belardinelli, 2011). The combination of these findings suggests that the distance between targets (in this study, operationalized by the effective diameter of the display) is an important factor to consider in examining the capacity of audiovisual integration.

Weinbach and Henik (2012) proposed spatial attention to be important to consider when observing the interaction between congruency and alerting. They hypothesized that alerting signals expand the focus of visuospatial attention, thus increasing the accessibility of events in the spatial surrounding of the target stimulus. This study provides evidence regarding the role of alerting signals in increasing congruency effects when the relevant and irrelevant dimensions are spatially separated (Weinbach & Henik, 2012). Follow-up studies revealed that target salience also plays an important role in the effectiveness of alerting signals – specifically, that if target salience is significantly greater than distractor salience, visual cueing becomes more effective (Weinbach & Henik, 2014). Furthering Weinbach and Henik’s (2012, 2014) results, Seibold (2018) hypothesized that the presentation of an auditory alerting signal before a visual target increases the interference from the closest distractors, and that this increase may be correlated to the expansion of the size of the spatial focus of attention. However, her findings could not provide supporting evidence for an influence of alerting signals on the size of the attentional focus. Rather, they were more consistent with nonspatial accounts that showed the alerting effect to have an influence on perceptual processing. This in turn led to a larger congruency effect in response selection (Seibold, 2018).

Early research into the capacity of audiovisual integration (Van der Burg et al., 2013) found that capacity is highly inflexible, and limited to less than one item. More recently (Wilbiks & Dyson, 2016, 2018), we showed that it is possible for capacity to exceed one item, and that it is a largely variable quantity when stimuli are changed. In the research by both Van der Burg et al. (2013) and Wilbiks and Dyson (2016), a visual-only condition was employed to ensure that the phenomenon being observed was indeed an effect of audiovisual integration, and could not be explained through simple cueing. In both these cases (Van der Burg et al., 2013, Experiment 1d; Wilbiks & Dyson, 2016, Experiment 5), there was no significant benefit to performance from a visual cue. In order to further ensure that audiovisual integration serves as a stronger perceptual boost (cf. Van der Burg et al., 2008) than visual cueing, we included visual-only conditions in Experiments 1b and 2b. The main experiments in the current research address two remaining questions related to the effects of stimuli on modulation of capacity. In Experiment 1a, we further considered the findings of Wilbiks and Dyson’s (2018) Experiment 2, examining whether the perceptual chunking of stimuli can occur even when physical connections are not present. In doing so, we disambiguate the relationship between number of stimuli and stimulus complexity, in the same way as has been done previously in research on visual working memory (cf. Alvarez & Cavanagh, 2004; Awh et al., 2007). Experiment 2a addresses additional issues of stimulus factors on capacity by manipulating the circumference of the display and the type of auditory stimuli being used. In combination, these experiments provide additional insight into the fundamental elements present in establishing the capacity of audiovisual integration.

Experiment 1a

The findings of Wilbiks and Dyson (2016) showed that, contrary to Van der Burg et al. (2013), it is possible for capacity to exceed one item, with estimates ranging as high as 1.7 items at a 700 ms SOA. The estimation of capacity involves a curve-fitting process that is analogous to that of Cowan’s (2001) K. This procedure is discussed at length in the work of Van der Burg et al. (2013), but to summarize it here, it involves fitting the proportion correct data for each combination of SOA, number of visual locations changing, and any other stimulus parameters to a least-squares model of idealized curves. This model assumes that if an individual’s numerical capacity to integrate visual information with a tone is equal to or greater than the number of visual locations that are changing (e.g., capacity = 2, number of dots changing = 1), performance on those trials should be perfect (i.e., p = 1). However, if capacity is less than the number of visual locations changing (e.g., capacity = 2, number of dots changing = 3), then performance can be modeled as a function of capacity (K) and the number of dots that were changing (n), based on the following equation: p = K/2n + .5. Through this process, we established an estimate of capacity, but we “lost” the factor of number of locations, as the raw proportion correct data is subsumed in the fitted curves. That is why the data analysis in this paper comprises comparisons of capacity for each combination of SOA and other factors, but not for the number of locations changing.

Having previously established the malleability of audiovisual integration capacity, Wilbiks and Dyson (2018) examined the ability of participants to perceptually chunk changing dot locations into a complex object (i.e., polygons). The initial research into audiovisual integration capacity (Van der Burg et al., 2013; Wilbiks & Dyson, 2016) involved the presentation of a number of dots that changed polarity rapidly from black to white (or vice versa). One of those polarity changes was accompanied by a tone, with participants asked to note which dot locations changed polarity in synchrony with the tone. The participants were then asked to respond to a specific, probed dot location, indicating whether the dot at that location changed (or did not change) at the presentation including the tone. Wilbiks and Dyson (2018) examined chunking by including lines connecting each dot that was changing polarity on a given dot presentation. This meant that on trials with two dots changing, a single line was presented. In the case of three dots changing, a triangle was presented, connecting each of the three dot locations. In doing so, participants would be able to integrate a single polygon with the tone, rather than integrating three individual dot locations, and the data showed that connecting changing dots with lines (or polygons) led to a functional increase in the capacity of audiovisual integration. However, this may be due to binding a tone with a single, more complex object (cf. Alvarez & Cavanagh, 2004) rather than binding a greater number of stimuli. The question then arises as to whether this functional increase in capacity represents a true numerical increase in capacity. That is to say, participants may not be binding two items (dots) but rather binding the orientation of a single, more complex object (line). Kanizsa (1976) demonstrated that we can induce perception of shapes that are not physically present by presenting illusory contoured shapes through “cut-out” sections of larger shapes. By implementing a similar paradigm, we induce participants to chunk numerous changing locations without those locations being physically connected. By employing illusory contoured polygons, we are no longer providing physical connections, but rather suggesting to participants that locations should be chunked together. It is also important to take into consideration the corpus of findings from the study of perceptual grouping (e.g., Botta et al., 2013; Sargent et al., 2010). These findings suggest capacity should be maximized when stimuli are both in common space (close to each other) and when they are able to be grouped through delineation. In Wilbiks and Dyson (2018), we physically grouped visual locations together through clearly visible lines, meaning that both common space and delineation was contributing to grouping. In the current study, there is not a distinct delineation to draw the objects together, while they are still presented in a common region. We expect participants to exhibit greater estimates of audiovisual integration capacity in illusory contour conditions than in non-contour conditions, which would demonstrate a true increase in numerical capacity, in support of the findings of Awh et al. (2007), as well as suggesting that in audiovisual integration, common region is a sufficient condition for grouping to occur, while delineation is not necessary.

In order to address an additional outstanding question, SOA will be set from 100 ms to 600 ms at 100-ms increments. Previous criticisms of this paradigm have suggested that with increasing SOA, there is a qualitative shift in the processes occurring – to wit, that at higher SOAs, we are no longer seeing true audiovisual integration. Through this manipulation, we expect to find that capacity estimates increase in a linear manner as a function of SOA, which suggests that capacity changes from fast to slow SOAs represent a quantitative, but not qualitative, increase in capacity.

Method

Participants

The participants for Experiment 1a were drawn from introductory psychology courses at Mount Allison University after being recruited through an undergraduate research participation pool. As an incentive, participants were awarded partial course credit for their final grade in their course. All recruitment and experimental practices were approved by the Mount Allison University Research Ethics Board.

We initially tested 42 participants. Informed, written consent was received from each of the participants prior to the experiment. Before data analysis, we calculated a 95% confidence interval around 50% (chance responding), as was done by Wilbiks and Dyson (2016, 2018). Any participants who fell within this confidence interval throughout all four blocks were then removed from the data set. This resulted in 26 of the 42 participants being considered for the data analysis, with a mean age of 19.8 years (SD = 1.2), of which 18 participants identified as female, eight as male, and three reported being left-handed. While we acknowledge that removal of 38% of the individuals tested is not ideal, we also wished to maintain consistency with our data management practices used in earlier research projects. The reason so many participants failed to complete the task correctly in this case is likely a combination of the difficulty of the task, along with the fact that our sample was drawn completely from undergraduate student populations. This may have been further exacerbated by the fact that our testing for this experiment took place in the final few weeks of the semester, where students’ attention may be more focused on preparation for exams than on experimental performance (cf. Nicholls, Loveless, Thomas, Loetscher, & Churches, 2015). To maintain transparency, we include information about analysis of the full data set in the results sections of each experiment, noting that the pattern of results remains the same, and that all but one significant finding remains when the full data set is used.

Materials

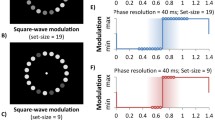

Visual stimuli were presented on a Dell 2407WFP monitor at a screen resolution of 1,440 × 900 and a refresh rate of 60 Hz, using an Optiplex 755 Dell PC running Windows XP Professional (Service Pack 3) at a viewing distance of approximately 57 cm. Auditory stimuli were presented binaurally via Logitech ML 235 headphones. Stimulus presentation was controlled by Presentation (NBS) version 20.1, build 12.04.17, and behavioral responding was recorded with a Dell L100 keyboard. Eight dots (1.5° in diameter) could be displayed in one of two colors: black (0, 0, 0) or white (255, 255, 255) against a mid-gray background (128, 128, 128). The main phase of the experiment consisted of the simultaneous display of eight dots along an implied circle (13o in diameter), the center of which was marked by a 0.15o fixation dot. A single, smaller probe dot was overlaid on a target dot at the end of each trial and was red (255, 0, 0) with a diameter of 1°. In the polygon-present condition, each change of dot polarity was accompanied by lines connecting the dots that were changing (e.g., if three dots changed, a triangle was formed). However, the lines connecting the dots were the same color as the background (128, 128, 128), and as such were only visible in the area that they overlapped the dots. Figure 1 provides a schematic representation of the visual stimuli that were presented to participants in the two experiments. The auditory stimulus used was a 60 ms long, 400-Hz tone with 5-ms linear on-set and offset ramps, presented at an intensity of approximately 74 dB(C), which was created using SoundEdit 16 (MacroMedia).

Trial schematic for Experiment 1 and Experiment 2. In Experiment 1 (top panel), 50% of trials included “illusory polygons”’ as shown in the figure, and 50% did not. In Experiment 2 (bottom panel), 50% of trials had a smaller diameter circle (7.5°; bottom line) and 50% had a larger diameter circle (18.5°; top line). These circle diameters were orthogonally contrasted with auditory stimuli consisting of either a sine tone or a burst of white noise.

Procedure

Forty-eight individual conditions of stimulus were created by orthogonally varying the presence of illusory polygons (absent, present), SOA of visual stimuli (100 ms, 200 ms, 300 ms, 400 ms, 500 ms, 600 ms), and the number of visual stimuli that changed on each alternation (1, 2, 3, 4). These 48 conditions were each presented once to create an experimental block. Each participant completed one randomized practice block of 16 trials, and eight experimental blocks, for a total of 384 experimental trials. Trial order was randomized in practice and in experimental trials. Each trial began with a fixation point displayed in the center of the screen for 500 ms. The sets of black and white dots were generated independently for each trial, and there was no restriction on which dot(s) could change color at each alternation, nor was there a restriction on how many dots could be white or black at any one time. An initial set of dots was presented, followed by nine additional dot presentations (for a total of ten presentations), with each set displayed for the amount of time specified as the SOA for that trial. The critical presentation was the penultimate (ninth) frame. On this presentation, the onset of the dots was accompanied by an auditory tone. Following a final (tenth) presentation, a 1,000-ms retention interval occurred during which only the fixation point was displayed on the screen. During the recall phase, the tenth array of dots was displayed again, with the same locations black and white as when it was first presented, along with an overlay of a red probe dot on one of the eight dots. Participants were asked to respond to whether the dot at the probe location had changed or not on the critical display (i.e., the change from the eighth to the ninth display) by pressing the number 1 on the number pad if that dot did not change, and by pressing the number 2 on the number pad if that dot did change. The probe had a validity of 50%, and the location for invalid trial probes was randomly determined. No feedback was provided, and the subsequent trial began shortly after a response was entered.

Results

Data were collapsed across validity conditions in the same manner as employed by Van der Burg et al. (2013) and Wilbiks and Dyson (2016, 2018). The proportion correct for each combination of conditions and for each participant was fitted to a model that was analogous to Cowan’s (2001) K, wherein if n ≤ K, then p = 1, and when n > K, then p = K/2n + .5 (where n represents the number of visual elements changing (1–4), p is the proportion likelihood of correct responding, and K is an estimate of the capacity of audiovisual binding). The fitting procedure involves using K as the free parameter, and optimizing this value to minimize root-mean-square error between the raw proportion of correct responses for each number of locations changing and the ideal model. As the fitting procedure uses the values for each number of locations changing, along with the proportion of correct responses for each condition, capacity estimates (K) are obtained for each combination of polygon and SOA, with no further consideration of number of locations changing or proportion correct responding.

An initial analysis was conducted by means of a 2 (Polygon: present, absent) × 6 (SOA: 100, 200, 300, 400, 500, 600 ms) repeated-measures ANOVA. Full results of the ANOVA are displayed in Table 1,Footnote 1 with pertinent measures of statistical significance and effect sizes summarized in this section. A significant main effect of polygon was found (p = .047, ηp2 = .149), indicating that the presence of illusory connections yields a greater audiovisual integration capacity than no contours. A main effect of SOA was also significant (p < .001, ηp2 = .476), with an increase in capacity appearing with incrementally slower SOAs. A significant interaction between polygon and SOA (p < .001, ηp2 = .163) was found (means and standard errors are displayed in Fig. 2), which was further probed with a Tukey’s HSD test (p < .05). This test revealed that, while numerical capacity was greater for illusory polygon conditions at four of six data points, significant facilitation was only manifest at the 600-ms SOA.

In previous research on this topic, there has been some disagreement between our research group and others as to whether the processes underlying audiovisual integration change qualitatively from slower to faster speeds of presentation, or whether the processes remain the same, and capacity increases quantitatively as a function of slowing speed of presentation. In order to inform this argument, a trend analysis was conducted on the data, with the working hypothesis that if capacity increases in a linear manner, this is evidence for a quantitative increase, but if capacity increases in a non-linear way, it is more likely that a qualitative change occurs at a specific speed of presentation. Results of this analysis revealed that for the no-polygon condition, the data for the six SOAs were a strong, significant fit for a linear trend (F (1,25) = 30.229, MSE = .354, p < .001) in the absence of a quadratic trend (F (1,25) = 2.749, MSE = .303, p = .110) or any higher order trends (Fig. 2).Footnote 2 The analysis on the data with polygons had a similar pattern, showing that the data were a significant fit for a linear trend (F (1,25) = 33.448, MSE = .918, p < .001) in the absence of a quadratic trend (F (1,25) = 0.756, MSE = .283, p = .393) or any higher order trends. These findings suggest that the increase in capacity with increasing SOA is a result of a quantitative difference between conditions, rather than a qualitative difference.

Finally, in order to answer questions about whether capacity under each combination of stimulus parameters is less than or greater than one (as originally asked by Van der Burg et al., 2013), capacity estimates were compared to the norm of 1 through a series of single sample t-tests. Full results are given in Table 2. Capacity was found to be significantly less than 1 for 100ms and 200 ms regardless of presence of polygons. It was only significantly greater than 1 for 500 ms and 600 ms in the presence of polygons. This pattern of results recapitulates what has previously been shown, that capacity exceeds one only at higher SOAs, and when other facilitative factors are employed.

Discussion

The purpose of Experiment 1a was to further investigate the flexibility of audiovisual integration capacity. While previous research in this series focused on whether the capacity of audiovisual integration could exceed one item, the focus has now shifted to the consideration of the flexibility of capacity as a function of speed of presentation and presence of illusory polygons. As such, it was not entirely unexpected that capacity would fall shy of one item in many cases, given the relatively short SOAs employed – a phenomenon that is likely due to an inability to reliably process incoming sensory information, as we previously showed both behaviorally and electrophysiologically (Wilbiks & Dyson, 2016). Only six of the 12 conditions tested showed a capacity numerically greater than one, and only two of those – 500 ms and 600 ms with polygons – were significantly greater.

In this case, rather than comparing capacity estimates to a fixed norm of one item, we sought to examine whether illusory polygons (such as those employed by Kanizsa, 1976) can offer a perceptual chunking effect in the absence of physically connected visual stimuli. Results show that the inclusion of illusory polygons provides facilitation overall, which is evidence in favor of capacity being numerically increased by perceptual chunking. This is analogous with the findings of Awh et al. (2007) in the field of visual working memory, as we demonstrate an increased capacity through perceptual chunking (Gobet et al., 2001), even when there are no physical connections between those locations. Additionally, linear trend analysis provides further evidence that capacity increases as a function of speed of presentation in a quantitative, rather than qualitative manner. We also note that there appears to be some flattening of the capacity function at 500- and 600-ms SOAs when no polygons were present. While this function overall still fits a linear trend, it is appropriate to comment on possible reasons for this unexpected flattening. One potential reason for this observation is that in the absence of illusory polygons, participants were unable to integrate much more than one item on average. The flattening occurs at a value slightly greater than 1, suggesting that while capacity increases as a function of SOA, it levels off once it exceeds one item. This is not in line with our previous results (e.g., Wilbiks & Dyson, 2016), but may be occurring due to greater relative difficulty of the task when polygons are not present as compared to the conditions in which participants are presented with polygons to aid with chunking. When chunking is possible, capacity can continue to increase as a function of SOA up to the maximum SOA tested (600 ms), where capacity is approaching a value of two items.

Experiment 1a provides evidence that audiovisual capacity is facilitated by illusory polygons, and that capacity progressively increases with decreasing presentation speed, in an apparently quantitative relationship. This provides an increase in our understanding of the effects of stimulus factors on audiovisual integration capacity. In order to further extend what is known about stimulus influence on audiovisual integration capacity, Experiment 2a considers the influence of tone type and display diameter on capacity.

Experiment 1b

Previous research on the pip-and-pop effect (Van der Burg et al., 2008) has shown that the presentation of a transient, spatially uninformative tone increases performance on a visual search task. In examining the capacity of audiovisual integration, previous work has often employed a visual-only condition to ensure that the phenomenon being observed was indeed an effect of audiovisual integration, and could not be explained through simple cueing (Van der Burg et al., 2013, Experiment 1d; Wilbiks & Dyson, 2016, Experiment 5). In both these cases, there was no significant benefit to performance from a visual cue. However, in order to ensure that the effects observed in the current research are also occurring due to true audiovisual integration, a visual-only version of the experiment was conducted.

Method

Stimulus parameters were identical to the polygon present trials of Experiment 1a, with the following exceptions. On half of the trials, rather than presenting a tone on the critical trial, two concentric green annuli (RGB: 0,128,0) were presented, positioned 1° inside and 1° outside the dots. This visual cue (also employed by Van der Burg et al., 2013, and Wilbiks & Dyson, 2016) was intended to serve as a cue that does not interfere with the perception of the dots, but also remains spatially uninformative. In order to manage the number of stimulus presentations that participants had to attend to, the number of SOAs being employed was reduced from six to three (200, 400, and 600 ms).

Seventeen participants from the University of New Brunswick Saint John were tested for this study, with one participant’s data removed based on the same criteria as in Experiment 1a. The participants had an average age of 19.3 (SD = 1.3) years, with four males, 12 females, and one left-handed and 15 right-handed individuals. None of the participants had taken part in any previous studies in this project.

Results and discussion

All data fitting procedures were identical to Experiment 1a. Capacity estimates were entered into a within-subjects ANOVA with factors of cue type (2: auditory, visual) and SOA (3: 200 ms, 400 ms, 600 ms). Full results of the ANOVA are given in Table 3, with significant results discussed further here. There was a significant effect of SOA (p = .002, ηp2 = .330), and post hoc analysis using Tukey’s HSD (p < .05) revealed that capacity estimates were significantly greater at 600 ms compared to 200 ms SOA (see Fig. 3). There was no significant main effect of cue type, nor any significant interaction. As such, we can conclude that in this experiment, a visual cue is able to provide the information required for participants to do the task. However, while the effect of cue type was not significant, the numerical data do still suggest superiority for audiovisual as compared to visuo-visual integration.

This experiment found that a visual cue can be employed to promote perception in polygon-present conditions. A possible reason for this finding is that the presence of polygons promotes focus on visual stimuli beyond the changing of polarity of dots. Alternatively, it is possible that perceptual chunking (cf. Gobet et al., 2001) is boosting visual perception ahead of integration, such that a single more complex visual stimulus is being perceived. In any case, this finding is at odds with what has been observed in previous research, although it is also still the case that visual cueing is numerically less effective than audiovisual integration. In order to further investigate this finding, future research could focus more on visual presentations themselves, by examining whether participants are able to perceive not just which dot locations are changing on the critical trial, but also whether participants can identify the full display that was presented to them on that trial as compared to other trials.Footnote 3 This would elucidate whether visual cueing is truly as effective as audiovisual integration when polygons are present, or whether the visual cue is just “good enough” for the current manipulation.

Experiment 2a

Wilbiks and Dyson (2018) showed that external perceptual factors as well as internal recalibration due to training modulate the capacity of audiovisual integration capacity. Experiment 1a found that these perceptual factors include illusory polygons, which only suggested to a participant that stimuli should be chunked, while not actually connecting them.

In considering the type of auditory stimulus used in audio-visual integration experiments, there is some debate as to the relative efficacy of using tones of a specific frequency (e.g., sine tone), or using a wider variety of frequencies (e.g., white noise). The observation of positive effects of white noise on perception in other modalities has usually exploited the stochastic resonance property of the noise, which has been shown to boost sensory sensitivity. For example, research has shown that presenting auditory white noise allows individuals to perceive sub-threshold visual stimuli (Usher & Feingold, 2000; Manjarrez, Mendez, Martinez, Flores, & Mirasso, 2007). In looking further into this phenomenon, Gleiss and Kayser (2014) identified a potential mechanism for this facilitation, showing that continuously presented white noise boosted neural oscillations in the occipital cortex, in turn promoting detection of visual stimuli. While the majority of research on effects of white noise on visual perception has examined it as a constantly presented stimulus, Seitz, Kim, and Shams (2006) found that white noise (in addition to sine tones) facilitates learning of visual sequences. Additional findings in this field suggest that white noise bursts can facilitate performance through an audiovisual integration task (Van der Stoep, Van der Stigchel, Nijboer, & Van der Smagt, 2016), and this finding suggests we should be able to employ a white-noise burst in the present research as well. While white noise and – much more often – sine tones have both been used in audiovisual integration tasks, there is a relative dearth of evidence with regard to the interaction between sound type and visual stimuli in audiovisual integration, and as such Experiment 2a examines the effect of different sound types on audiovisual integration capacity.

To further contribute to findings regarding the effects of auditory stimuli on visuospatial attention (Seibold, 2018; Weinbach & Henik, 2012, 2014), we systematically quantified a difference in visual stimuli by comparing the difference between a smaller field of vision and a larger field of vision. Additionally, we produced a difference in auditory stimuli by comparing the difference between a sine tone and white noise. In doing so, we compared four different stimulus combinations based on our assumptions. We expected that the presentation of a sine tone during the critical trial would promote binding to locations that changed polarity, whereas the presentation of white noise during the critical trial would not be as effective. We also expected that the presentation of visual stimuli in a smaller circle will show a greater capacity of audiovisual integration than in cases where visual stimuli form a larger circle, as individuals naturally tend to have narrower attentional fields of vision (Laberge & Brown, 1986), and because being in closer proximity to one another should lead to more optimal perceptual grouping (Botta et al., 2013), and therefore to a greater capacity of integration. To present a potential counterargument, Seibold (2018) found that while an alerting tone can lead to a reduction in reaction times, this improvement is not due to an expansion of the field of vision. However, Seibold’s work was based on an alerting tone that preceded the critical stimulus presentation, while the current research involves a simultaneous tone being presented to increase performance through audiovisual integration. We hypothesized that the capacity of audiovisual integration would be greater for visual stimuli presented within a narrower field of vision than those presented in a wider field (based on the findings of Laberge & Brown, 1986). We also expected that a sine tone would promote binding more than a presentation of white noise (based on the findings of Seibold, 2018). We did not make any specific predictions regarding the interaction between the sound type and display diameter.

Method

Participants

Thirty-five students from Mount Allison University participated in this study. Data from 26 participants were analyzed, as nine had to be excluded from the dataset due to failure to complete the task according to instructions, or due to their response rates nearing chance as in Experiment 1. The final sample consisted of four males and 22 females, with a mean age was 19.19 years (SD = 1.44). Participants were enrolled in a first-year undergraduate psychology class at Mount Allison University and were compensated with class credit.

Materials and procedure

Experimental parameters were identical to Experiment 1, with the following exceptions. The diameter of the imaginary circle upon which dots were presented included a “small” diameter (7.5°) and a “large” diameter (18.5°). Figure 1 provides a schematic of the visual stimuli that were employed. The tones presented were either a 60-ms, 400-Hz tone (as in Experiment 1) or a 60-ms burst of white noise, both of which were presented with 5-ms on- and off-ramps. Additionally, the number of SOAs was reduced from six to three, including 200, 400, and 600 ms.

Results

Data fitting was conducted in the same way as in Experiment 1a, which yielded an estimate of audiovisual integration capacity (K) for each combination of circle diameter, sound type, and SOA. Means and standard errors are displayed in Fig. 4. We conducted a 2 × 2 × 3 ANOVA, comparing two sound types (sine tone, white noise), two circle diameters (7.5°, 18.5°), and three stimulus-onset asynchronies (200, 400, and 600 ms). Full resultsFootnote 4 of the ANOVA are shown in Table 4, with a summary of pertinent measures of significance and effect size in this section. A main effect of SOA was found (p < .001, ηp2 = .678). Tukey HSD post hoc tests (p < .05) revealed significant differences between each SOA: 200 ms (M = .577, SE = .032), 400 ms (M = 1.083, SE = .063), and 600 ms (M = 1.484, SE = .086). Contrary to our hypotheses, we found no main effect of sound type (p =.887, ηp2 = .001) or circle diameter (p = .522, ηp2 = .017).Unexpectedly, a significant interaction was found between sound type and circle diameter (p = .039, ηp2 = .160). The means and standard errors for this interaction are displayed in Fig. 5, and reveal that capacity estimates were maximized for large diameter circles when white noise was presented, and for small diameter circles when a tone was presented.

As in Experiment 1a, a series of single-sample t-tests was used to compare capacity estimates to the norm of 1 (full results are given in Table 5). It was found that capacity was significantly less than 1 at 200 ms, regardless of sound type and circle circumference. Capacity was not significantly different from 1 at 400 ms regardless of other stimulus parameters. At 600 ms SOA, capacity was significantly greater than 1 at all sound type/circumference combinations other than the combination of white noise and a small circle. While these findings provide more information regarding capacity in comparison to the normal value of one, we feel the more interesting findings in this experiment come from the earlier ANOVA results.

Discussion

In Experiment 2a, we focused on different factors that might facilitate audiovisual processing in the hope of furthering understanding of the nature of audiovisual integration. We hypothesized that the presentation of a sine tone during the critical trial would promote binding to locations of stimuli, whereas the presentation of white noise during the critical trial would not be as effective. We also expected that the presentation of visual stimuli in a smaller circle would show results of greater capacity in audiovisual integration than in cases where visual stimuli form a larger circle. Thus, the capacity of audiovisual integration was hypothesized to be greatest during trials with visual stimuli in a smaller field of vision, where the auditory cue is a sine tone. Although no significant main effects were found between the use of a sine tone and a white noise burst, or between a larger visual spatial field and a smaller one, the general finding of a slower SOA being beneficial to audiovisual integration capacity (Wilbiks & Dyson, 2016, 2018) was reaffirmed. This study successfully replicated the notion of a significant relationship between the number of visual stimuli that can be bound with a tone and SOA.

The suggestion that the capacity of audiovisual integration is affected by different sound types and visual spatial fields is supported in the current research, but not in the precise way that we had hypothesized. While we had expected a smaller circle and a sine tone to promote increases in capacity both independently and in combination, we only observed an increase in capacity when presented with the combination of a sine tone and a smaller circle (7.5°), or with white noise and a larger circle (18.5°). While this was not what we expected, it does support Weinbach and Henik’s (2012) proposition regarding the importance of spatial attention in the interaction between congruency and alerting. They found that alerting signals expand the focus of visuospatial attention, and thus increase the accessibility of events in the spatial surrounding of the target stimulus, although our finding is more nuanced than theirs. In this case, employing a sine tone – with its focused single-frequency sound – promoted increased capacity of audiovisual integration when the visual locations were located in closer proximity to one another. Conversely, the wide-ranging frequencies included in a burst of white noise promoted a capacity when visual locations were spread further apart from one another. While further research is necessary to confirm this finding, it does present the possibility that an individual’s field of visual focus may be affected by the type of sound that is presented, rather than simply by the fact that some sound was presented to them.

It is also important to consider a potential alternate explanation of the mechanism behind the widening effect of the white noise: that it may be occurring due to an increase in arousal stemming from the alerting noise, rather than a boost in audiovisual integration. We believe that our finding is a result of audiovisual integration for two major reasons. First, previous work we have conducted (Wilbiks & Dyson, 2018) shows that cross-modal correspondences between pitch and brightness significantly modulate audiovisual integration capacity. The presence of this cross-modal effect would only be plausible if there was integration between the auditory and visual stimuli. As the current research employs the same general paradigm as was used in Wilbiks and Dyson (2018), it follows that integration is occurring here as well. Additional evidence comes from an argument presented by Botta et al. (2011), who argued (and demonstrated) that increasing stimulus salience (as indexed by visual thickness) is not sufficient to boost cueing in the same way as does audiovisual integration. As such, we believe our explanation of audiovisual integration to be the interpretation of the data with the most support.

Although this finding was unexpected, and therefore requires further confirmatory study and consideration of specific stimulus parameters, it does have wide-ranging implications in fields such as the design of alert systems where the optimal correspondence between auditory and visual signals could be a matter of life and death. For example, in a situation in which one wants to draw attention to a singular visual stimulus (e.g., a looming projectile), it would be most advantageous to use a focused, single-frequency auditory alerting stimulus. Conversely, when attempting to generally increase an individual’s alertness (e.g., paying attention to all objects visible whilst driving), it would be better to use a burst of white noise.

Experiment 2b

As was done with Experiment 1, a visual-only version of this task was conducted and compared to audiovisual conditions, in order to ensure that audiovisual integration is occurring on this task, and that it is superior to visual cueing.

Method

Stimulus parameters were identical to Experiment 2a, with the following exceptions. On half of trials, the same visual cue was employed as in Experiment 1b, while on the other half of trials, white noise auditory cues were employed. Seventeen participants took part in this study, with one participant’s data removed based on the same criteria as in earlier studies. The final sample had an average age of 26.7 years (SD = 1.1), with five males, 11 females, and one left-handed and 15 right-handed individuals.

Results and discussion

Capacity estimates were derived in the same manner as in previous experiments, and were subjected to a within-subjects ANOVA with factors of cue type (2: auditory, visual), circle circumference (2: 7.5°, 18.5°), and SOA (3: 200, 400, 600 ms). Full results are shown in Table 6, with significant findings discussed here. There was a main effect of cue type (p = .005, ηp2 = .415), which showed that capacity estimates were greater on auditory trials than visual trials, and a significant main effect of SOA (p < .001, ηp2 = .402). These effects were subsumed by a significant cue type × SOA interaction (p = .033, ηp2 = .204). Means for this interaction are displayed in Fig. 6, and post hoc analysis using Tukey’s HSD (p < .05) revealed that auditory cues led to greater capacity estimates than visual cues at each individual SOA. Additionally, there was no significant modulation with increasing SOA in the visuo-visual trials, while there was a significant increase in audiovisual trials.

In this case, the results of the experiment followed our expected pattern of results, demonstrating that audiovisual integration is significantly stronger than visuo-visual cueing. The lack of modulation as a function of SOA in the visuo-visual version suggests that, in this case, the visual cue is not sufficient to lead to the “pop out” (cf. Van der Burg et al., 2008) of the critical visual presentation, regardless of the difficulty of the perceptual task being presented. This suggests that, at least in Experiment 2, we are observing significant facilitation effect being caused by audiovisual integration.

General discussion

Across two main experiments (and 2 additional manipulations), we have elucidated the effects of further stimulus factors on the dynamic capacity of audiovisual integration. Experiment 1a showed that capacity is increased by implied connections (Kanizsa, 1976) between specified visual stimulus locations, suggesting that perceptual chunking (Gobet et al., 2001; Sargent et al., 2010) can occur without explicitly connected stimuli. Experiment 2a explored the interaction between the field of focus required to attend to changing dots and the type of auditory stimulus employed, finding that capacity was maximized when large diameters were paired with white noise (rather than sine tones) and when small diameters were paired with sine tones (rather than white noise).

In considering the findings in the context of previous research, it is apparent that there is a similarity in the phenomenology of audiovisual integration capacity and visual working memory. While Awh et al. (2007) and Alvarez and Cavanagh (2004) presented conflicting views on the nature of the capacity of visual working memory, we are presenting a model of the capacity of audiovisual integration that is in alignment with Awh et al. (2007). The findings of Experiment 1a (along with findings from Wilbiks & Dyson, 2018) show that the capacity of audiovisual integration is greater when participants are presented with connections between changing visual stimuli, and that this is true both when those connections are visible lines and when they are implied lines based on “cut-outs” in the stimuli. Just as Awh et al. (2007) showed that increasing complexity of stimuli has no effect on the numerical capacity of visual working memory, we have shown that creating more complex stimuli (i.e., polygons rather than dots) does not reduce the numerical capacity – in fact, it increases the functional capacity of audiovisual integration. We have also shown that perceptual chunking (Gobet et al., 2001; Sargent et al., 2010) can be successfully employed when the chunks are either explicitly or implicitly presented to participants. This leads to a natural question for further study, which is to examine whether participants are able to chunk strictly through endogenous allocation of attention. In both of our studies, the chunking employed was driven through stimulus factors (although there was a difference between whether connections were physically present, or implied) – but would it be possible for a participant to be instructed to use chunking by telling them to “try to perceive the dots as a shape,” and to do so with no additional visual information present?

An additional factor to consider is the ability of participants to bind information within a certain spatial proximity. Botta et al. (2010, 2011) showed that in an exogenous cueing paradigm, distance between target stimuli was the most important factor in the effectiveness of cueing. The current research is not a cueing paradigm per se, as the auditory stimulus is presented simultaneously with the target visual stimuli. However, we can use Botta’s (2010; 2011) research as an analogue for our potential findings, understanding that it is also likely the case that greater spatial proximity between visual stimuli would lead to a greater ability to bind visual stimuli to one another (and, therefore, to integrate more visual stimuli with the auditory stimulus). In Experiment 1, this means that moving stimuli further apart from each other would have led to a decrease in capacity. In Experiment 2a, one would have expected this to lead to a significant increase in capacity for smaller diameter displays as compared to larger ones. However, there was no main effect of circle diameter in Experiment 2a, leading us to believe that there are more complex factors at play, as has been addressed in the earlier discussion.

Ecologically speaking, it seems more efficient to have a strict one-to-one mapping of audiovisual integration, as in most natural situations a single visual stimulus and a single auditory stimulus would connect to one another (Olivers, Awh, & Van der Burg, 2016; Van der Burg et al., 2013). However, there are certainly exceptions to this rule with regard to natural situations – for example, a stream passing over a series of rocks produces a single ensemble sound (a “babbling” brook), while one can identify a number of visual events (water deflected by numerous rocks) that produce that sound. There is also evidence in the feature-binding literature showing that links between multiple stimuli can be formed through serial connections between those stimuli (e.g., stimulus A bound to B, which is also bound to C, etc.; Hommel, 1998, 2004; Hommel & Colazato, 2004). Given these situations, the previous findings from Wilbiks and Dyson (2016, 2018) and the present findings, it is now possible to consider the importance of an audiovisual integration system that has a capacity greater than one. We previously argued that in a situation where the visual component of a perceived sound is ambiguous, the most adaptive response is to bind as many binding candidates as possible (Wilbiks & Dyson, 2013). Having done so, one can subsequently seek out post hoc information that can disambiguate the situation. For example, if you hear a roar and are in a room with a lion, a tiger, and a bear, it is useful to take note of the locations of each potential predator and monitor them each for further sounds or signs of aggression before taking action. As such, it is advantageous to have the largest capacity possible, in order to take account of as many threatening stimuli as possible.

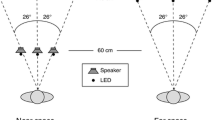

Overall, the findings further confirm that audiovisual integration capacity is a flexible quantity (cf. Wilbiks & Dyson, 2018), and that it modulates based on stimulus factors of various kinds, both unimodal auditory or visual, as well as cross-modal. Future research in this field should seek to identify the source of the great deal of variation between individual participant capacity measures. It is possible that this variation could be occurring due to some underlying perceptual and/or cognitive abilities, and research examining this could seek to form a predictive model of audiovisual integration capacity. It would also be of interest, moving forward, to employ more ecologically valid stimuli, as it has been shown that experiments using artificial tones do not generalize to natural sounds (Schutz & Gillard, 2018; Schutz & Vaisberg, 2014). For example, Experiment 2 used a pure tone (single frequency) and white noise (many frequencies), but it would be of additional interest to use intermediate types of tones, such as instrumental tones and other ecologically valid stimuli that might promote integration in a visual display of intermediate size. To ascertain how performance on these idealized, laboratory-based tasks translate into the real world, we could also employ concrete stimuli such as images and sounds of animals, and could also present them in real three-dimensional space, rather than on a computer monitor and headphones. To that end, Van Wanrooij, Bremen, and Opstal (2010) conducted a study wherein participants were placed in the center of a dark room where several LED lights and speakers surrounded them. The participants were then asked to orient a head-fixed laser pointer towards one randomly selected LED that was paired with an auditory tone from the same source. Throughout the experiment, the auditory tone was not always spatially aligned with the LED. The results showed that individuals who were always given spatially aligned audio and visual sources performed better over those who pseudorandomly had spatially unaligned auditory and visual sources. Additionally, it was found that those who always had unaligned audio and visual sources performed better than the pseudorandomly assigned condition.

An additional extension of this field of research would be to examine individual differences in capacity as a function of other perceptual and attentional factors. Research to this point has revealed a large degree of interindividual difference in capacity data, and it is possible that this variation is in some part due to other underlying abilities. Further to this end, there has been research in both unimodal (e.g., Baum, Stevenson, & Wallace, 2015; Deruelle et al., 2006; Mottron, Dawson, Soulieres, Hubert & Burack, 2006) and multimodal (e.g., Stevenson, Zemtsov & Wallace, 2012) perception showing that some differences that exist can be accounted for by presence of traits related to autism spectrum disorder (ASD; Baron-Cohen, Wheelwright, Skinner, Martin & Clubley, 2001). While this has not been examined directly in relation to audiovisual integration capacity, it is certainly worthy of study, in terms of both clinical and sub-clinical levels of ASD. If a significant relationship is found, it may be possible to employ an audiovisual integration capacity task as an early diagnostic system for autism, as the sensory and perceptual differences in autism can in many cases be observed earlier than traditional diagnostic systems can be employed. This is of utmost importance in the field of ASD, as earlier diagnosis of ASD has been associated with better treatment outcomes in the long term (Fernell, Eriksson, & Gillberg, 2013). An additional clinical application of this research would be in studying individuals diagnosed with major depressive disorder (MDD). Previous research has found that individuals with MDD experienced a decline in spatial suppression that enhanced motion perception for typically suppressed stimuli (Golomb, McDavitt, Ruf, Chen, Saricicek, Maloney, Hu, Chun, & Bhagwagar, 2009). If it is the case that depressed individuals may be better at tracking moving objects than their neurotypical peers, we may also expect to find differences in their audiovisual integration capacity, which could further be employed in studying symptomatology and assessment methods for MDD.

Through a series of experiments, we have further demonstrated the function of audiovisual integration using dots and tones (and, in Experiment 2, white noise). While future studies as discussed above would extend this field of study and build towards application of this fundamental research to both real-world scenarios and clinical applications, the current research has furthered the understanding of audiovisual integration capacity, and shown that the match between the size of the display and the sound stimulus used, as well as presence of implied connections have facilitative effects on capacity estimates.

Open Practices Statement

The data for all experiments are available at: https://osf.io/sfcyd/

Notes

The pattern of results using the full data set (N = 42) was similar to that of the trimmed data set (N = 26), with a significant main effect of SOA (p < .001, ηp2 = .617), along with a significant polygon × SOA interaction (p = .001, ηp2 = .098). One difference between the full data and trimmed data set is that the main effect of polygon crossed the standard threshold of significance (p = .064, ηp2 = .087), although the pattern of results did not change.

The data as a function of SOA only, along with the linear trend analysis, were reported in Wilbiks and Dyson (2018) in order to satisfy a reviewer’s query. However, the remainder of the data analysis in Experiment 1, and the entirety of Experiment 2 have not been reported elsewhere.

We thank a reviewer for this recommendation for further study.

The pattern of results using the full data set (N = 35) was similar to that of the trimmed data set (N = 26), with a significant main effect of SOA (p < .001, ηp2 = .622), along with a significant sound type × circle diameter interaction (p = .049, ηp2 = .131).

References

Alvarez, G. A., Cavanagh, P. (2004). The capacity of visual short-term memory is set both by visual information load and by number of objects. Psychological Science, 15, 2. doi:https://doi.org/10.1111/j.0963-7214.2004.01502006.x

Awh, E., Barton, B., & Vogel, E. K. (2007). Visual working memory represents a fixed number of items regardless of complexity. Psychological Science, 18, 7. doi:https://doi.org/10.1111/j.1467-9280.2007.01949.x

Baron-Cohen, S., Wheelwright, S., Skinner, R., Martin, J., & Clubley, E. (2001). The autism-spectrum quotient (AQ) : Evidence from asperger syndrome/high-functioning autism, males and females, scientists and mathematicians. Journal of Autism and Developmental Disorders, 31(1), 5-17.

Baum, S. H., Stevenson, R. A., & Wallace, M. T. (2015). Behavioral, perceptual, and neural alterations in sensory and multisensory function in autism spectrum disorder. Progress in Neurobiology, 134, 140-160.

Bertelson, P., Pavani, F., Ladavas, E., Vroomen, J., & de Gelder, B. (2000). Ventriloquism in patients with unilateral visual neglect. Neuropsychologia, 38(12), 1634-1642. doi:https://doi.org/10.1016/s0028-3932(00)00067-1

Botta, F., Lupianez, J., & Sanabria, D. (2013). Visual unimodal grouping mediates auditory attentional bias in visuo-spatial working memory. Acta Psychologica, 144, 104-111. doi: https://doi.org/10.1016/j.actapsy.2013.05.010

Botta, F., Santangelo, V., Raffone, A., Lupianez, J., & Belardinelli, M. O. (2010). Exogenous and endogenous spatial attention effects on visuospatial working memory. The Quarterly Journal of Experimental Psychology, 63(8), 1590-1602. doi: https://doi.org/10.1080/17470210903443836

Botta, F., Santangelo, V., Raffone, A., Sanabria, D., Lupianez, J., & Belardinelli, M. O. (2011). Multisensory integration affects visuo-spatial working memory. Journal of Experimental Psychology: Human Perception and Performance, 37(4), 1099-1109. doi: https://doi.org/10.1037/a0023513

Castiello, U., & Umilta, C. (1989). Size of the attentional focus and efficiency of processing. Acta Psychologica, 73(3), 195-209. doi:https://doi.org/10.1016/00016918(90)90022-8

Cowan, N. (2001). The magical number 4 in short-term memory: a reconsideration of mental storage capacity. Behavioral Brain Science, 24, 87-114. doi:https://doi.org/10.1017/S0140525X01003922

Cowan, N. (2010). The magical mystery four: How is working memory capacity limited, and why? Current Directions in Psychological Science, 19(1), 51-57.

Deruelle, C., Rondan, C., Gepner, B., & Fagot, J. (2006). Processing of compound stimuli by children with autism and Asperger syndrome. International Journal of Psychology, 41(2), 97-106.

Eng, H. Y., Chen, D., & Jiang, Y. (2005). Visual working memory for simple and complex visual stimuli. Psychonomic Bulletin & Review, 12, 1127-1133. doi:https://doi.org/10.3758/BF03206454

Fernell, E., Eriksson, M. A., & Gillberg, C. (2013). Early diagnosis of autism and impact on prognosis: a narrative review. Clinical Epidemiology, 5, 33-43.

Fujisaki, W., Koene, A., Arnold, D., Johnston, A., & Nishida, S. (2006). Visual search for a target changing in synchrony with an auditory signal. Proceedings of the Royal Society B: Biological Sciences, 273(1588), 865-874. doi:https://doi.org/10.1098/rspb.2005.3327

Gleiss, S., & Kayser, C. (2014). Acoustic noise improves visual perception and modulates occipital oscillatory states. Journal of Cognitive Neuroscience, 26(4), 699-711. doi: https://doi.org/10.1162/jocn_a_00524

Gobet, F., Lane, P. C. R., Croker, S., Cheng, P. C.-H., Jones, G., Oliver, I., & Pine, J. M. (2001) Chunking mechanisms in human learning. Trends in Cognitive Sciences, 5236–243. doi:https://doi.org/10.1016/S1364-6613(00)01662-4

Golomb, J. D., McDavitt, J. R., Ruf, B. M., Chen, J. I., Saricicek, A., Maloney, K. H., ... & Bhagwagar, Z. (2009). Enhanced visual motion perception in major depressive disorder. Journal of Neuroscience, 29(28), 9072-9077.

Hommel, B. (1998). Event files: Evidence for automatic integration of stimulus-response episodes. Visual Cognition, 5, 183-216.

Hommel, B. (2004). Event files: Feature binding in and across perception and action. Trends in Cognitive Sciences, 8, 494-500.

Hommel, B. & Colzato, L. (2004). Visual attention and the temporal dynamics of feature integration. Visual Cognition, 11, 483-521.

Kanizsa, G. (1976). Subjective contours. Scientific American, 23(4), 48-52. doi:https://doi.org/10.1038/scientificamerican0476-48

Kawachi, Y., Grove, P. M., & Sakurai, K. (2014). A single auditory tone alters the perception of multiple visual events. Journal of Vision, 14(8), 16-16. doi:https://doi.org/10.1167/14.8.16

Kösem, A., & van Wassenhove, V. (2012). Temporal Structure in Audiovisual Sensory Selection. PLoS ONE, 7(7), 1-11. doi:https://doi.org/10.1371/journal.pone.0040936

Laberge, D., & Brown, V. (1986). Variations in size of the visual field in which targets are presented: An attentional range effect. Perception & Psychophysics, 40(3), 188-200. doi:https://doi.org/10.3758/bf03203016

Luck, S. J., & Vogel, E. K. (1997). The capacity of visual working memory for features and conjunctions. Nature, 390, 279-81. doi:https://doi.org/10.1038/36846

Manjarrez, E., Mendez, I., Martinez, L., Flores, A., & Mirasso, C. R. (2007). Effects of auditory noise on the psychophysical detection of visual signals: Cross-modal stochastic resonance. Neuroscience Letters, 415, 231-236. doi: https://doi.org/10.1016/j/neulet.2007.01.030

Miller, G. A. (1956). The magical number seven, plus or minus two some limits on our capacity for processing information. Psychological Review, 101, 343-352. doi:https://doi.org/10.1037/h0043158

Mottron, L., Dawson, M., Soulieres, I., Hubert, B., & Burack, J. (2006). Enhanced perceptual functioning in autism: an update, and eight principles of autistic perception. Journal of Autism and Developmental Disorders, 36(1), 27-43.

Nicholls, M. E. R., Loveless, K. M., Thomas, N. A., Loetscher, T., & Churches, T. (2015). Some participants may be better than others: Sustained attention and motivation are higher early in the semester. The Quarterly Journal of Experimental Psychology, 68(1), 10-18.

Olivers, C. N. L., Awh, E., & Van der Burg, E. (2016). The capacity to detect synchronous audiovisual events is severely limited: Evidence from mixture modelling. Journal of Experimental Psychology: Human Perception and Performance, 42, 2115-2124.

Pylyshyn, Z. W. (2001). Visual indexes, preconceptual objects, and situated vision. Cognition, 80, 127-58. doi:https://doi.org/10.1016/S0010-0277(00)00156-6

Sargent, J., Dopkins, S., Philbeck, J., & Chickha, D. (2010). Chunking in spatial memory. Journal of Experimental Psychology: Learning, Memory, and Cognition, 36, 576-589. doi: https://doi.org/10.1037/a0017528

Schutz, M., & Gillard, J. (2018). Generalizing audio-visual integration: what kinds of stimuli have we been using? Paper presented at the meeting of the International Multisensory Research Forum, Toronto, ON, Canada.

Schutz, M., & Vaisberg, J. M. (2014). Surveying the temporal structure of sounds used in Music Perception. Music Perception, 31(3), 288-296.

Seibold, V. C. (2018). Do alerting signals increase the size of the attentional focus? Attention, Perception, & Psychophysics, 80(2), 402-425. doi:https://doi.org/10.3758/s13414-017-1451-1

Seitz, A. R., Kim, R., & Shams, L. (2006). Sound facilitates visual learning. Current Biology, 16(14), 1422-1427.

Stevenson, R. A., Zemtsov, R. K., & Wallace, M. T. (2012). Individual differences in the multisensory temporal binding window predict susceptibility to audiovisual illusions. Journal of Experimental Psychology: Human Perception and Performance, 38(6), 1517-1529.

Usher, M., & Feingold, M. (2000). Stochastic resonance in the speed of memory retrieval. Biological Cybernetics, 83, 11-16.

Van der Burg, E., Awh, E., & Olivers, C. N. (2013). The capacity of audiovisual integration is limited to one item. Psychological Science, 24(3), 345-351. doi:https://doi.org/10.1177/0956797612452865

Van der Burg, E., Cass, J., Olivers, C., Theeuwes, J., & Alais, D. (2010). Efficient visual search from synchronized auditory signals requires transient audiovisual events. Journal of Vision, 10(7), 855-855. doi:https://doi.org/10.1167/10.7.855

Van der Burg, E., Olivers, C. N., Bronkhorst, A. W., & Theeuwes, J. (2008). Pip and pop: Nonspatial auditory signals improve spatial visual search. Journal of Experimental Psychology: Human Perception and Performance, 34(5), 1053-1065. doi:https://doi.org/10.1037/0096-1523.34.5.1053

Van der Stoep, N., Van der Stigchel, S., Nijboer, T. C. W., & Van der Smagt, M. J. (2016). Audiovisual integration in near and far space: effects of changes in distance and stimulus effectiveness. Experimental Brain Research, 234, 1175-1188. doi: https://doi.org/10.1007/s00221-015-4248-2

Van Wanrooij, M. M., Bremen, P., & Opstal, J. A. (2010) Acquired prior knowledge modulates audiovisual integration. European Journal of Neuroscience, 31, 1763-71. doi:https://doi.org/10.1111/j.1460-9568.2010.07198.x.

Vogel, E. K., Woodman, G. F., & Luck, S. J. (2001). Storage of features, conjunctions, and objects in visual working memory. Psychonomic Bulletin & Review, 27, 92-114. doi:https://doi.org/10.1037//0096-1523.27.1.92

Vroomen, J., & de Gelder, B. (2000). Sound enhances visual perception: Cross-modal effects of auditory organization on vision. Journal of Experimental Psychology: Human Perception and Performance, 26(5), 1583-1590. doi:https://doi.org/10.1037//0096-1523.26.5.1583

Weinbach, N., & Henik, A. (2012). The relationship between alertness and executive control. Journal of Experimental Psychology: Human Perception and Performance, 38(6), 1530-1540. doi:https://doi.org/10.1037/a0027875

Weinbach, N., & Henik, A. (2014). Alerting enhances attentional bias for salient stimuli; evidence from a global/local processing task. Cognition, 133, 414-419.

Wilbiks, J. M. P., & Dyson, B. J. (2013). Effects of temporal asynchrony and stimulus magnitude on competitive audio-visual binding. Attention, Perception, & Psychophysics, 75(8), 1883-1891.

Wilbiks, J. M. P., & Dyson, B. J. (2016). The dynamics and neural correlates of audio-visual integration capacity as determined by temporal unpredictability, proactive interference, and SOA. PLOS One, 11(12). doi:https://doi.org/10.1371/journal.pone.0168304

Wilbiks, J. M. P., & Dyson, B. J. (2018). The contribution of perceptual factors and training on varying audiovisual integration capacity. Journal of Experimental Psychology: Human Perception and Performance, 44(6), 871-884.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Wilbiks, J.M.P., Pavilanis, A.D.S. & Rioux, D.M. Audiovisual integration capacity modulates as a function of illusory visual contours, visual display circumference, and sound type. Atten Percept Psychophys 82, 1971–1986 (2020). https://doi.org/10.3758/s13414-019-01882-6

Published:

Issue Date:

DOI: https://doi.org/10.3758/s13414-019-01882-6