Abstract

In this paper we study the stochastic Galerkin approximation for the linear transport equation with random inputs and diffusive scaling. We first establish uniform (in the Knudsen number) stability results in the random space for the transport equation with uncertain scattering coefficients and then prove the uniform spectral convergence (and consequently the sharp stochastic asymptotic-preserving property) of the stochastic Galerkin method. A micro–macro decomposition-based fully discrete scheme is adopted for the problem and proved to have a uniform stability. Numerical experiments are conducted to demonstrate the stability and asymptotic properties of the method.

Similar content being viewed by others

1 Background

We consider the linear transport equation in one-dimensional slab geometry:

This equation arises in neutron transport, radiative transfer, etc., that describes particles (for example neutrons) transport in a background media (for example nuclei), in which f(t, x, v, z) is the density distribution of particles at time \(t\ge 0\), position \(x \in (0,1)\). \(v=\Omega \cdot e_x = \cos \theta \in [-1, 1]\) where \(\theta \) is the angle between the moving direction and x-axis. \(\sigma (x,z)\), \(\sigma ^a(x,z)\) are total and absorption cross section, respectively. S(x, z) is the source term. \(\varepsilon \) is the dimensionless Knudsen number, the ratio between particle mean free path and the characteristic length (such as the length of the domain). The Dirichlet boundary conditions are given in the incoming direction by

while the initial condition is given by

We are interested in the problem that contains uncertainty in the collision cross section, source, initial or boundary data. The uncertainty is characterized by the random variable \(z\in {\mathbb {R}}^d\) with probability density function \(\omega (z)\). Thus in our problem \(f, \sigma , \sigma ^a\) and S all depend on z.

In recent years, there have been extensive activities to study partial differential equations or engineering problems with uncertainties. Many numerical methods have been introduced. In this article, we are interested in the polynomial chaos (originally introduced in Wiener’s work [26])-based stochastic Galerkin method which has been shown to be competitive in many applications, see [4, 27, 28]. The stochastic Galerkin method has been used for linear transport equation with uncertain coefficients [25]. Here we are interested in the problem that contains both uncertainty and multiscale. The latter is characterized by the Knudsen number \(\varepsilon \), which, in the so-called optically thin region (\(\varepsilon \ll 1\)), due to high scattering rate of particles, leads the linear transport equation to a diffusion equation, known as the diffusion limit [1, 3, 18]. For the past decades, developing asymptotic-preserving (AP) schemes for (deterministic) linear transport equation with diffusive scaling has seen many activities, see for examples [5, 7,8,9, 17, 19, 20, 22]. Only recently AP scheme for linear transport equation with both uncertainty and diffusive scaling was introduced in [15] (in the framework of stochastic Galerkin method, coined as s-AP method). See more related recent works along this line in [6, 12, 13]. A scheme is s-AP if the stochastic Galerkin method for the linear transport equation, as \(\varepsilon \rightarrow 0\), becomes a stochastic Galerkin method for the limiting diffusion equation. It was realized in [15] that the deterministic AP framework can be easily adopted to study linear transport equations with uncertain coefficients. Moreover, as shown in [6, 12], kinetic equations, linear or nonlinear, could preserve the regularity in random space of the initial data at later time, which naturally leads to spectral accuracy of the stochastic Galerkin method.

When \(\varepsilon \ll 1\), however, the energy estimates and consequently the convergence rates given in [6, 12] depend on the reciprocal of \(\varepsilon \), which implies that one needs the degree of the polynomials used in the stochastic Galerkin method to grow as \(\varepsilon \) decreases. In fact, this is typical of a standard numerical method for problems that contain small or multiple scales. While AP schemes can be used with numerical parameters independent of \(\varepsilon \), to prove this rigorously is not so easy and has been done only in a few occasions [5, 14]. A standard approach to prove a uniform convergence is to use the diffusion limit, as was done first in [5] in the deterministic case and then in [11] for the uncertain transport equation. See also the review article [7]. However such approaches might not give the sharp convergence rate.

In this paper, we provide a sharp error estimate for the stochastic Galerkin method for problem (1). This requires a sharp (\(\varepsilon \)-independent) energy estimate on high-order derivatives in the random space for f, as well as \([f]-f\) where [f] is the velocity average of f defined in (5) which is shown to be bounded even if \(\varepsilon \rightarrow 0\). Then the uniform in \(\varepsilon \) spectral convergence naturally follows, without using the diffusion limit.

The s-AP scheme in [15] uses the AP framework of [8] that relies on the even- and odd-parity formulation of the transport equation. In this paper, we use the micro–macro decomposition-based approach (see [22]) to develop a fully discrete s-AP method. The advantage of this approach is that it allows us to prove a uniform (in \(\varepsilon \)) stability condition, as was done in the deterministic counterpart in [23]. In fact, we will show that one can easily adopt the proof of [23] for the s-AP scheme.

The paper is organized as follows. In Sect. 2 we summarize the diffusion limit of the linear transport equation. The generalized polynomial chaos-based stochastic Galerkin method for the problem is introduced in Sect. 3 and shown formally to be s-AP. The uniform in \(\varepsilon \) regularity of the stochastic Galerkin scheme is proven in Sect. 4, which leads to a uniform spectral convergence proof. The micro–macro decomposition-based fully discrete scheme is given in Sect. 5, and its uniform stability is established in Sect. 6. Numerical experiments are carried out in Sect. 7. The paper is concluded in Sect. 8.

2 The diffusion limit

Denote

as the average of velocity-dependent function \(\phi \). For each random realization z, there exists a positive function \(\phi (v)>0\), the so-called absolute equilibrium state that satisfies \([ \phi ]=1, [ v\phi (v)]=0\), (from Perron–Frobenius theorem, cf. [1]).

Define in the Hilbert space \(L^2\big ((-1,1);\;\phi ^{-1}\,\mathrm {d}v\big )\) the inner product and norm

The linear operator \({{{\mathcal {L}}}}\) satisfies the following properties [1]:

-

\([ {{{\mathcal {L}}}} f] =0\), for every \(f\in L^2([-1,1])\);

-

The null space of f is \({{{\mathcal {N}}}} ({{{\mathcal {L}}}}) = \text{ Span } \{\,\phi \mid \phi =[ \phi ]\,\}\);

-

The range of f is \({{{\mathcal {R}}}} ({{{\mathcal {L}}}}) = {{{\mathcal {N}}}} ({{{\mathcal {L}}}})^{\bot }=\{\,f\mid [f]=0\, \} ;\)

-

\({{{\mathcal {L}}}}\) is nonpositive self-adjoint in \(L^2((-1,1); \phi ^{-1}\,\mathrm {d}v)\), i.e. , there is a positive constant \(s_m\) such that

$$\begin{aligned} \langle f, {{{\mathcal {L}}}} f\rangle _{\phi } \le - 2 s_m \Vert f\Vert ^2_{\phi },\quad \forall \, f\in {{{\mathcal {N}}}} ({{{\mathcal {L}}}})^{\bot }; \end{aligned}$$(7) -

\({{{\mathcal {L}}}}\) admits a pseudo-inverse, denoted by \({{{\mathcal {L}}}}^{-1}\), from \({{{\mathcal {R}}}} ({{{\mathcal {L}}}})\) to \({{{\mathcal {R}}}} ({{{\mathcal {L}}}})\).

Let \(\rho = [ f]\). For each fixed z, the classical diffusion limit theory of linear transport equation [1, 3, 18] gives that, as \(\varepsilon \rightarrow 0, \rho \) converges to the following random diffusion equation:

where the diffusion coefficient

The micro–macro decomposition, a useful tool for the study of the Boltzmann equation and its fluid dynamics limit [24], and for the design of asymptotic-preserving numerical schemes for kinetic equations [2, 16, 22], takes the form

where \([g]=0\). Introducing (10) into (1), one gets its micro–macro form:

Diffusion limit (8) can be easily seen now. When \(\varepsilon \rightarrow 0\), (11b) gives

which, when plugged into (11a), gives diffusion equation (8)–(9).

3 The gPC-stochastic Galerkin approximation

We assume the complete orthogonal polynomial basis in the Hilbert space \(H({\mathbb {R}}^d; \omega (z)\,\mathrm {d}z)\) corresponding to the weight \(\omega (z)\) is \(\{\phi _i(z), i=0,1, \ldots ,\}\), where \(\phi _i(z)\) is a polynomial of degree i and satisfies

Here \(\phi _0(z)=1\), and \(\delta _{i j}\) is the Kronecker delta function. The inner product and norm in this space are, respectively,

Since the solution \(f(t,\cdot ,\cdot ,\cdot )\) is defined in \(L^2\big ( (0,1)\times (-1,1)\times {\mathbb {R}}^d; \omega (z)\,\mathrm {d}x\,\mathrm {d}v\,\mathrm {d}z\big )\), one has the generalized polynomial chaos (gPC) expansion

The mean and variance of f can be obtained from the expansion coefficients as

The idea of the stochastic Galerkin (SG) approximation [4, 28] is to truncate the above infinite series by

from which one can extract the mean and variance of \(f_M\) from the expansion coefficients as

Furthermore, we define

for \(0\le i,j \le M\). Let \( \text{ Id } \) be the \((M+1)\times (M+1)\) identity matrix. \(\Sigma , \Sigma ^a\) are symmetric positive-definite matrices satisfying [27]

If one applies the gPC ansatz (13) into transport equation (1), and conduct the Galerkin projection, one obtains [15, 25]:

where \({\hat{S}}\) is defined similarly as (13).

We now use the micro–macro decomposition

where \({{\hat{\rho }}}=[{\hat{f}}]\) and \([g]=0\), in (14) to get

with initial data

that satisfy

It is easy to see that system (16) formally has the diffusion limit as \(\varepsilon \rightarrow 0\):

where

Thus the gPC approximation is s-AP in the sense of in [15].

One can easily derive the following energy estimate for system (16)

On the other hand, the direct gPC approximation of the random diffusion equation (8)–(9) is:

where \(K_d = (\kappa _{ij}), \kappa _{i,j}= \langle \phi _i, \kappa \phi _j \rangle _\omega \).

4 The regularity in the random space and a uniform spectral convergence analysis of gPC-SG method

In this section, we assume \(\sigma ^a=S=0\) for clarity and periodic boundary condition

for simplicity. We prove that, under some suitable assumptions on \(\sigma (z)\), the solution to the linear transport equation with random inputs preserves the regularity in the random space of the initial data uniformly in \(\varepsilon \). Then based on the regularity result, we conduct the spectral convergence analysis and error estimates for the gPC-SG method and will also obtain error bounds uniformly in \(\varepsilon \).

4.1 Notations

We first recall the Hilbert space of the random variable introduced in Sect. 3,

equipped with the inner product and norm defined in (12). We also define the kth-order differential operator with respect to z as

and the Sobolev norm in H as

Finally, we introduce norms in space and velocity as follows:

where \(Q=[0,1]\times [-1,1]\) denotes the domain in the phase space. For simplicity, we will suppress the dependence of t and just use \(\Vert f\Vert _\Gamma , \Vert f\Vert _{\Gamma ^k}\) in the following proof.

4.2 Regularity in the random space

We will study the regularity of f with respect to the random variable z. To this aim, we first prove the following lemma. For simplicity, we state and prove the following lemma only for one-dimensional case. Proof for high-dimensional case is identical except the change of coefficient.

Lemma 4.1

Assume \(\sigma (z)\ge \sigma _{\mathrm {min}}>0\), then for any integer k and \(\sigma \in W^{k,\infty }, g\in H^k\) we have

Proof

Since

we have

By Young’s inequality

and Cauchy–Schwarz inequality

Combining (27), (28) and (29), one obtains

This completes the proof of Lemma 4.1. \(\square \)

Now we are ready to prove the following regularity result.

Theorem 4.1

(Uniform regularity) Assume

If for some integer \(m\ge 0\),

then the solution f to linear transport equation (1)–(2), with \(\sigma ^a = S = 0\) and periodic boundary condition (19), satisfies,

where \(C_\sigma , C_0\) and C are constants independent of \(\varepsilon \).

Proof

For \(\sigma ^a = S = 0\), the kth (\(0\le k\le m\))-order formal differentiation of (1) with respect to z is,

where \([ \cdot ]\) is the average operator defined in (5). Multiplying \(D^k f\) to both sides of (33) and integrating on \(Q=[0,1]\times [-1,1]\), one gets

Integration by parts yields

where we have used periodic boundary condition (19). Notice that

combining with (34) one obtains

Energy estimate We will establish the following energy estimate by using mathematical induction with respect to k: for any \(k\ge 0\), there exist k constants \(c_{kj}>0, j=0,\dots , k-1\) such that

When \(k=0\), (37) becomes

which satisfies (38).

Assume that for any \(k\le p\) where \(p\in {{\mathbb {N}}}\), (38) holds. Adding all these inequalities together we get

which is equivalent to

where

When \(k=p+1\), (37) reads

According to Lemma 4.1 with \(g = D^{p+1}([f] - f)\) and the assumption \(\Vert D^k\sigma (z)\Vert _{L^\infty }\le C_\sigma \), the right-hand side satisfies the estimate

where \(C'_{p+1} = (p+1)4^{p+1}\). Now we have the estimate

Adding this equation (45) with (41) multiplied by \(C_\sigma ^2 C'_{p+1}/ \sigma _{\mathrm {min}}^2\) gives,

where

This shows that (38) still holds for \(k= p+1\). By mathematical induction, (38) holds for all integer \(k\in {{\mathbb {N}}}\).

Finally, according to (38), we have

which yields

where C is clearly independent of \(\varepsilon \). This completes the proof of the theorem. \(\square \)

Theorem 4.1 shows the derivatives of the solution with respect to z can be bounded by the derivatives of initial data. In particular, the \(\Vert D^k f\Vert _{\Gamma }\) bound is independent of \(\varepsilon \)! This is crucial for our later proof that our scheme is s-AP. However, this estimate alone is not sufficient to guarantee that the whole gPC-SG method has a spectral convergence uniform in \(\varepsilon \) (since there is \(O(1/\varepsilon ^2)\) coefficient in front of the projection error, such we need \(O(\varepsilon ^2)\) estimation of \([ f] - f\) to cancel this coefficient). To this aim, we first provide the following lemma.

Lemma 4.2

Assume for some integer \(m \ge 0\),

Then holds:

Proof

First note that \(\partial _xf\) satisfies the same equation as f itself,

Thus according to Theorem 4.1 and our assumption (50),

with C independent of \(\varepsilon \). Then by Young’s inequality,

where \(C_1 = C^2\) is a constant. This completes the proof. \(\square \)

Now we are ready to prove the following theorem.

Theorem 4.2

(\(\varepsilon ^2\)-estimate on \([ \varvec{f}]-\varvec{f}\)) With all the assumptions in Theorem 4.1 and Lemma 4.2, for a given time \(T>0\), the following regularity result of \([ f] - f\) holds:

for any \(t\in (0,T]\) and \(0\le k\le m,\), where \(C'\) and C are constants independent of \(\varepsilon \).

Proof

First notice that \([ f]\) satisfies

so \([ f]-f\) satisfies the following equation:

As the proof in Theorem 4.1, differentiating this equation k times with respect to z, multiplying by \(D^k([ f]-f)\) and integrating on Q, one obtains

Notice that

and using Lemma 4.2, we have

For the second part by Lemma 4.1,

So we get the following estimate,

To prove the theorem we use mathematical induction. When \(k=0\) (62) turns to

By Grönwall’s inequality,

which satisfies (55).

Assume for any \(k\le p\) where \(p\in {{\mathbb {N}}}\), (55) holds. This implies

So when \(k=p+1\) by (62),

which means

Again, the Grönwall’s inequality yields

where \(C_{p+1}\) is a constant independent of \(\varepsilon \). So by mathematical induction, we complete the proof of the theorem. \(\square \)

Remark 4.1

We remark that all the above lemma and theorems are proved for \(z\in {{\mathbb {R}}}\) and \(\sigma \) depending only on z. However, our conclusions and techniques are not limited to these cases. For \(z\in {{\mathbb {R}}}^d\), it is straightforward to prove and for \(\sigma (x,z)\) also a function of x, we only need to modify the proof of Lemma 4.2 by using the same technique as in the proof of Theorem 4.1.

4.3 A spectral convergence uniformly in \(\varvec{\varepsilon }\)

Let f be the solution to linear transport equation (1)–(2). We define the Mth-order projection operator

The error arisen from the gPC-SG can be split into two parts \(r_N\) and \(e_N\),

where \(r_M = f - {\mathcal {P}}_M f\) is the truncation error, and \(e_M={\mathcal {P}}_M f - f_M\) is the projection error.

For the truncation error \(r_M\), we have the following lemma

Lemma 4.3

(Truncation error). Under all the assumptions in Theorems 4.1 and 4.2, we have for \(t\in (0,T]\) and any integer \(k=0,\ldots ,m\),

Moreover,

where \(C_1\) and \(C_2\) are independent of \(\varepsilon \).

Proof

By the standard error estimate for orthogonal polynomial approximations and Theorem 4.1, for \(0\le t\le T\),

with C independent of M.

In the same way, according to Theorem 4.2,

which completes the proof \(\square \)

It remains to estimate \(e_M\). To this aim, we first notice \(f_M\) satisfying

On the other hand, by doing the Mth-order projection directly on original linear transport equation we get

Now we can give the following estimate of the projection error \(e_M\),

Lemma 4.4

Under all the assumptions in Theorems 4.1 and 4.2, we have for \(t\in (0,T]\) and any integer \(k=0,\ldots ,m\),

where C(T) is a constant independent of \(\varepsilon \).

Proof

We use basically the same energy estimate as before: multiply (76) by \(e_M\) and integrate on Q, notice that

then one gets

Notice the projection operator \({\mathcal {P}}_M\) is a self-joint operator

and

thus

where for the last two inequalities we have used Young’s inequality and Lemma 4.3. Then by a integral over t we get

since \(e^0_M = {\mathcal {P}}_M f_0 - f^0_M = 0\) we complete the proof of this lemma. \(\square \)

Finally, we are now ready to state the main convergence theorem:

Theorem 4.3

(Uniform convergence in \(\varvec{\varepsilon }\)) Assume

If for some integer \(m\ge 0\),

Then the error of the whole gPC-SG method is

where C(T) is a constant independent of \(\varepsilon \).

Proof

From Lemmas 4.3 and 4.4, one has

which completes the proof. \(\square \)

Remark 4.2

Theorem 4.3 gives a uniformly in \(\varepsilon \) spectral convergence rate; thus, one can choose M independent of \(\varepsilon \), a very strong s-AP property. If the scattering is anisotropic, namely \(\sigma \) depends on \(\nu \), then one usually obtains a convergence rate that requires \(M\gg \varepsilon \) (see for example [12]). In such cases the proof of s-AP property is much harder, and one usually needs to use the diffusion limit, see [5] in the case of deterministic case and [11] in the random case.

5 The Full discretization

As pointed out in [15], by using the gPC-SG formulation, one obtains a vector version of the original deterministic transport equation. This enables one to use the deterministic AP scheme. In this paper, we adopt the AP scheme developed in [22] for gPC-SG system (16).

One of the most important and challenge problems for linear transport equation is the treatment of boundary conditions; here, we refer to the early work by Jin and Levermore [10] and more recent work by Lemou and Méhats [21] for their study of AP property and numerical treatment of physical boundary conditions.

We take a uniform grid \(x_i = ih, i = 0, 1, \ldots N\), where \(h=1/N\) is the grid size, and time steps \(t^n=n \Delta t\). \(\rho ^n_{i}\) is the approximation of \(\rho \) at the grid point \((x_i, t^n)\), while \(g^{n+1}_{i+\frac{1}{2}}\) is defined at a staggered grid \(x_{i+1/2} = (i+1/2)h, i = 0, \ldots N-1\).

The fully discrete scheme for gPC system (11) is

It has the formal diffusion limit when \(\varepsilon \rightarrow 0\) as can be easily checked, which is

where \(K =\frac{1}{3} \Sigma ^{-1}\). This is the fully discrete scheme for (17). Thus the scheme is stochastically AP as defined in [15].

We also notice that \([ \hat{g}^n_{i+\frac{1}{2}}]=0\) for every n which will be used later.

6 The uniform stability

One important property for an AP scheme is to have a stability condition independent of \(\varepsilon \), so one can take \(\Delta t \gg O(\varepsilon )\) when \(\varepsilon \) becomes small. In this section we prove such a result. The proof basically follows that of [23] for the deterministic problem.

For clarity in this section we assume \(\sigma ^a =S=0\). The main theoretical result about the stability is the following theorem:

Theorem 6.1

Denote

If \(\Delta t\) satisfies the following CFL condition

then the sequences \({\hat{\rho }}^n\) and \(\hat{g}^n\) defined by scheme (85) satisfy the energy estimate

for every n, and hence scheme (85) is stable.

Remark 6.1

Since the right-hand side of (87) has a lower bound when \(\varepsilon \rightarrow 0\) (and the lower bound being that of the stability condition of discrete diffusion equation (86)), the scheme is asymptotically stable and \(\Delta t\) remains finite even if \(\varepsilon \rightarrow 0\).

6.1 Notations and useful lemma

We give some useful notations for norms and inner products that are used in our analysis. For every grid function \(\mu =(\mu _i)_{i=0}^{N-1}\) define:

For every velocity-dependent grid function \(v\in [-1,1]\mapsto \phi (v)=(\phi _{i+\frac{1}{2}}(v))_{i=0}^{N-1}\), define:

If \(\phi \) and \(\psi \) are two velocity-dependent grid functions, their inner product is defined as:

Now we give some notations for the finite difference operators that are used in scheme (85). For every grid function \(\phi =(\phi _{i+\frac{1}{2}})_{i\in {{\mathbb {Z}}}}\), we define the following one-sided difference operators:

We also define the following centered difference operators:

Finally, for every grid function \(\mu =(\mu _{i})_{i\in {{\mathbb {Z}}}}\), define the following centered operator:

We first recall some basic facts. For every grid functions \(\phi =(\phi _{i+\frac{1}{2}})_{i=0}^{N-1}, \psi =(\psi _{i+\frac{1}{2}})_{i=0}^{N-1}\), and \(\mu =(\mu _{i})_{i=0}^{N-1}\), one has (see [23]):

6.2 Energy estimates

Now we provide the details of the energy estimate. The proof is similar to that for deterministic problem in [23].

First, multiplying (85a) and (85b) by \({{\hat{\rho }}}^{n+1}\) and \(\varepsilon ^2 {\hat{g}}^{n+1}\), respectively. With the assumption that \(\sigma _i^a=0, {\hat{S}}_i=0\), and using the fact that \(\Sigma \ge \sigma _{\text {min}} Id\), one has

Combining the second term on the left hand side and the last term on the right-hand side, one gets

Using the Young’s inequality,

This gives

We take the following decomposition

where

Using the Young’s inequality,

This leads to

Since \(|v| \le 1\),

These imply

Note

where \((a)_+=\max (0, a)\) denotes the positive part of a. Applying this in (6.2) then gives

This means that we have the final energy estimate

if \(\Delta t\) is such that

Since \(\sigma _{\text {min}} > 0\), an equivalent condition is \(\left( \dfrac{3\Delta t}{4}- \varepsilon \dfrac{\Delta x}{2}\right) \dfrac{4}{\Delta x^2}\le \sigma _{\text {min}}\), which gives the sufficient condition

This completes the proof of Theorem 6.1.

7 Numerical examples

In this section, we present several numerical examples to illustrate the effectiveness of our method.

We consider the linear transport equation with random coefficient \(\sigma (z)\):

with initial condition:

and the boundary conditions are:

7.1 Example 1

First we consider a random coefficient with one-dimensional random parameter:

The limiting random diffusion equation for kinetic equation (101) is

with initial condition and boundary conditions:

The analytical solution for (102) with the given initial and boundary conditions is

When \(\varepsilon \) is small, we use this as the reference solution, as it is accurate with an error of \(O(\varepsilon ^2)\). Hereafter we set \(\varepsilon =10^{-8}\). For large \(\varepsilon \) or in the case we cannot get an analytic solution, we will use the collocation method (see [27]) with the same time and spatial discretization to micro–macro system (11) as a comparison in the following examples. For more details about the collocation method, especially the s-AP property, we refer to the discussion in the work of Jin and Liu [12]. In addition, the standard 30-points Gauss–Legendre quadrature set is used for the velocity space to compute \(\rho \) in the following example.

To examine the accuracy, we use two error norms: the differences in the mean solutions and in the corresponding standard deviation, with \(\ell ^2\) norm in x:

where \(u^h,u\) are the numerical solutions and the reference solutions, respectively.

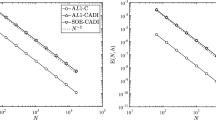

In Fig. 1, we plot the errors in mean and standard deviation of the gPC numerical solutions at \(t = 0.01\) with different gPC orders. Three sets of results are included: solutions with \(\Delta x = 0.04\) (squares), \(\Delta x = 0.02\) (circles), \(\Delta x = 0.01\) (stars). We always use \(\Delta t = 0.0002/3\). One observes that the errors become smaller with finer mesh. One can see that the solutions decay rapidly in N and then saturate where spatial discretization error dominates. It is then obvious that the errors due to gPC expansion can be neglected at order \(M = 4\) even for \(\varepsilon = 10^{-8}\). The solution profiles of the mean and standard deviation are shown on the left and right of Fig. 2, respectively.

We also plot the profiles of the mean and standard deviation of the flux vf in Fig. 3. Here we observe good agreement among the gPC-Galerkin method, stochastic collocation method with 20 Gauss–Legendre quadrature points and analytical solution (103).

Example 1. The mean (left) and standard deviation (right) of \(\rho \) at \(\varepsilon =10^{-8}\), obtained by the gPC Galerkin at order \(M=4\) (circles), the stochastic collocation method (crosses) and limiting analytical solution (103)

Example 1. Differences in the mean (solid line) and standard deviation (dash line) of \(\rho \) with respect to \(\varepsilon ^2\), between limiting analytical solution (103) and the 4th-order gPC solution with \(\Delta x = 0.04\) (squares), \(\Delta x = 0.02\) (circles) and \(\Delta x = 0.01\) (stars)

In Fig. 4, we examine the difference between the solution \(t = 0.01\) obtained by the 4th-order gPC method with \(\Delta x = 0.01, \Delta t = \Delta x^2/12\) and limiting analytical solution (103). As expected, we observe the differences become smaller as \(\varepsilon \) is smaller in a quadratic fashion, before the numerical errors become dominant.

7.2 Example 2: mixing regime

In this test, we still set \(\sigma = 2 + z\). We consider \(\varepsilon >0\) depending on the space variable in a wide range of mixing scales:

which varies smoothly from \(10^{-3}\) to O(1) as shown in Fig. 5. This tests the ability of the scheme for problems with mixing regimes or its uniform convergence in \(\varepsilon \).

In order to keep the conservation of the mass, the proper linear transport equation should be in the following form,

then micro–macro decomposition (11) changes to

We can find only the last term changed. For limiting equation (8), it also need to be changed to

where we assume that

exists. For the corresponding numerical scheme we only need to replace the last term

by

in (85b).

The initial data are

with

The reference solution is obtained using collocation method with 30 points. The parameters are set up as the following: the mesh size is \(\Delta x = 0.01\), and the corresponding t direction mesh size is \(\Delta t = \Delta x^2/3\). And we use the 5th-order gPC-Galerkin method to evolve the equation to different time \(t=0.005, t=0.01, t=0.05, t=0.1\). For the v integral, we use Legendre quadrature of 30 points.

Figure 6 shows the \(\ell ^2\) error of the mean and standard deviation with respect to the gPC order. We also see the fast (spectral) convergence of the method.

7.3 Example 3: random initial data

We then add randomness on the initial data (\(\sigma =2+z\) still random).

where f(x, v, 0) is the same as in (111). This time we set \(\Delta x=0.01\) and \(\Delta t = \Delta x^2/12\) and final time \(T=0.01\). First we test in the fluid limit regime \(\varepsilon =10^{-8}\) as shown in Fig. 7.

Then we test in \(\varepsilon =1\) which is shown in Fig. 8.

One can see a good agreement between the gPC-SG solutions and the solutions by the collocation method.

7.4 Example 4: random boundary data

For the next example, we then add randomness on the boundary conditions:

We also test when \(\varepsilon =10^{-8}\) and \(\varepsilon =10\) as shown in Figs. 9 and 10, again, good agreements are observed between the gPC-SG solutions and the solutions by the collocation method.

7.5 Example 5: 2D random space

Finally, model the random input as a random field, in the following form:

where we set \(\sigma =4\) and \(z_1, z_2\) are both uniformly distributed in \((-1,1)\). The mean and standard deviation of the solution \(\rho \) at \(t=0.01\) obtained by the 5th-order gPC Galerkin with \(\Delta x = 0.025, \Delta t = 0.0002/3\) are shown in Fig. 11. We then use the high-order stochastic collocation method over 40\(\times \)40 Gauss–Legendre quadrature points to compute the reference mean and standard deviation of the solutions. In Fig. 12, we show the errors of the mean (solid lines) and standard deviation (dash lines) of \(\rho \) with respect to the order of gPC expansion. The fast spectral convergence of the errors can be clearly seen.

8 Conclusions

In this paper we establish the uniform spectral accuracy in terms of the Knudsen number, which consequently allows us to justify the stochastic asymptotic-preserving property of the stochastic Galerkin method for the linear transport equation with random scattering coefficients. For the micro–macro decomposition-based fully discrete scheme we also prove a uniform stability result. These are the first uniform accuracy and stability results for the underlying problem.

It is expected that our uniform stability proof is useful for more general kinetic or transport equations, which is the subject of our future study.

Acknowledgements

Research was supported by NSF Grants DMS-1522184, DMS-1514826 and the NSF RNMS: KI-Net (DMS-1107291 and DMS-1107444). Shi Jin was also supported by NSFC Grant No. 91330203 and by the Office of the Vice Chancellor for Research and Graduate Education at the University of Wisconsin-Madison with funding from the Wisconsin Alumni Research Foundation.

References

Bardos, C., Santos, R., Sentis, R.: Diffusion approximation and computation of the critical size. Trans. Amer. Math. Soc. 284(2), 617–649 (1984)

Bennoune, M., Lemou, M., Mieussens, L.: Uniformly stable numerical schemes for the Boltzmann equation preserving the compressible Navier-Stokes asymptotics. J. Comput. Phys. 227(8), 3781–3803 (2008)

Bensoussan, A., Lions, J.-L., Papanicolaou, G.: Asymptotic Analysis for Periodic Structures, Volume 5 of Studies in Mathematics and its Applications. North-Holland Publishing Co., Amsterdam (1978)

Ghanem, R.G., Spanos, P.: Stochastic Finite Elements: A Spectral Approach. Springer, Berlin (1991)

Golse, F., Jin, S., Levermore, C.D.: The convergence of numerical transfer schemes in diffusive regimes. I. Discrete-ordinate method. SIAM J. Numer. Anal. 36(5), 1333–1369 (1999)

Hu, J., Jin, S.: A stochastic Galerkin method for the Boltzmann equation with uncertainty. J. Comput. Phys. 315, 150–168 (2016)

Jin, S.: Asymptotic preserving (AP) schemes for multiscale kinetic and hyperbolic equations: a review. Rivista di Matematica della Universita di Parma 3, 177–216 (2012)

Jin, S., Pareschi, L., Toscani, G.: Uniformly accurate diffusive relaxation schemes for multiscale transport equations. SIAM J. Numer. Anal. 38(3), 913–936 (2000)

Jin, S.: Efficient asymptotic-preserving (AP) schemes for some multiscale kinetic equations. SIAM J. Sci. Comput. 21(2), 441–454 (1999). (electronic)

Jin, S., Levermore, D.: The discrete-ordinate method in diffusive regimes. Transp. Theory Stat. Phys. 20(5–6), 413–439 (1991)

Jin, S., Li, Q., Wang, L.: The uniform convergence of generalized polynomial chaos based methods for multiscale transport equation with random scattering. preprint

Jin, S., Liu, L.: An asymptotic-preserving stochastic Galerkin method for the semiconductor Boltzmann equation with random inputs and diffusive scalings. SIAM Multiscale Model. Simul. 15, 157–183 (2017)

Jin, S., Hanqing, L.: An asymptotic-preserving stochastic Galerkin method for the radiative heat transfer equations with random inputs and diffusive scalings. J. Comput. Phys. 334, 182–206 (2017)

Jin, S., Tang, M., Han, H.: On a uniformly second order numerical method for the one-dimensional discrete-ordinate transport equation and its diffusion limit with interface. Netw. Heterog. Media 4, 35–65 (2009)

Jin, S., Xiu, D., Zhu, X.: Asymptotic-preserving methods for hyperbolic and transport equations with random inputs and diffusive scalings. J. Comput. Phys. 289, 35–52 (2015)

Klar, A., Schmeiser, C.: Numerical passage from radiative heat transfer to nonlinear diffusion models. Math. Models Methods Appl. Sci. 11(5), 749–767 (2001)

Klar, A.: An asymptotic-induced scheme for nonstationary transport equations in the diffusive limit. SIAM J. Numer. Anal. 35(3), 1073–1094 (1998). (electronic)

Larsen, E.W., Keller, J.B.: Asymptotic solution of neutron transport problems for small mean free paths. J. Math. Phys. 15, 75–81 (1974)

Larsen, E.W., Morel, J.E.: Asymptotic solutions of numerical transport problems in optically thick, diffusive regimes. II. J. Comput. Phys. 83(1), 212–236 (1989)

Larsen, E.W., Morel, J.E., Miller Jr., W.F.: Asymptotic solutions of numerical transport problems in optically thick, diffusive regimes. J. Comput. Phys. 69(2), 283–324 (1987)

Lemou, M., Méhats, F.: Micro-macro schemes for kinetic equations including boundary layers. SIAM J. Sci. Comput. 34(6), 734–760 (2012)

Lemou, M., Mieussens, L.: A new asymptotic preserving scheme based on micro-macro formulation for linear kinetic equations in the diffusion limit. SIAM J. Sci. Comput. 31(1), 334–368 (2008)

Liu, J.-G., Mieussens, L.: Analysis of an asymptotic preserving scheme for linear kinetic equations in the diffusion limit. SIAM J. Numer. Anal. 48(4), 1474–1491 (2010)

Liu, T.-P., Shih-Hsien, Y.: Boltzmann equation: micro-macro decompositions and positivity of shock profiles. Commun. Math. Phys. 246(1), 133–179 (2004)

Prinja, A.K., Fichtl, E.D., Warsa, J.S.: Stochastic methods for uncertainty quantification in radiation transport. In: International Conference on Mathematics, Computational Methods & Reactor Physics (M&C 2009), Saratoga Springs, New York, May 3-7, 2009 (2009)

Wiener, N.: The homogeneous chaos. Amer. J. Math. 60, 897–936 (1938)

Xiu, D.: Numerical Methods for Stochastic Computations. Princeton University Press, Princeton (2010)

Xiu, D., Karniadakis, G.E.: The Wiener–Askey polynomial chaos for stochastic differential equations. SIAM J. Sci. Comput. 24(2), 619–644 (2002)

Author information

Authors and Affiliations

Corresponding author

Additional information

In honor of the 70th birthday of Professor Björn Engquist

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Jin, S., Liu, JG. & Ma, Z. Uniform spectral convergence of the stochastic Galerkin method for the linear transport equations with random inputs in diffusive regime and a micro–macro decomposition-based asymptotic-preserving method. Res Math Sci 4, 15 (2017). https://doi.org/10.1186/s40687-017-0105-1

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s40687-017-0105-1