Abstract

The evaluation and selection of inappropriate open source software in learning management system (OSS-LMS) packages adversely affect the business processes and functions of an organization. Thus, comprehensive insights into the evaluation and selection of OSS-LMS packages are presented in this paper on the basis of three directions. First, available OSS-LMSs are ascertained from published papers. Second, the criteria for evaluating OSS-LMS packages are specified according to two aspects: the criteria are identified and established, followed by a crossover between them to highlight the gaps between the evaluation criteria for OSS-LMS packages and the selection problems. Third, the abilities of selection methods that appear fit to solve the problems of OSS-LMS packages based on the multi-criteria evaluation and selection problem are discussed to select the best OSS-LMS packages. Results indicate the following: (1) a list of active OSS-LMS packages; (2) the gaps in the evaluation criteria used for LMS and other problems (consisting of main groups with sub-criteria); (3) use of multi-attribute or multi-criteria decision-making (MADM/MCDM) techniques in the framework of the evaluation and selection of the OSS in education as recommended solutions.

Background

A learning management system (LMS) is a web-based software application used to organize, implement, and evaluate education. LMS packages provide online learning material, evaluation, and collaborative learning environment. A number of LMSs, such as ATutor, Claroline, and Moodle, have been produced with an open source software license. These free-licensed LMSs are extremely popular for e-learning (Awang and Darus 2011). Open source software (OSS) is software without a license fee and includes its computer program source code. OSS is a means of addressing the rising costs of campus-wide software applications while developing a learner-centered environment (van Rooij 2012; Williams van Rooij 2011).

The number of available OSS in LMSs (OSS-LMSs) online is continuously growing and gaining considerable prominence. This repertoire of open source options is important for any future planner interested in adopting a learning system to evaluate and select existent applications. In response to growing demands, software firms have been producing a variety of software packages that can be customized and tailored to meet the specific requirements of an organization (Jadhav and Sonar 2011; Cavus 2011). The evaluation and selection of inappropriate OSS-LMS packages adversely affect the business processes and functions of the organization. The task of OSS-LMS package evaluation and selection has become increasingly complex because of (1) the difficulty of selecting appropriate software for business needs given the large number of OSS-LMS packages available on the market, (2) the lack of experience and technical knowledge of the decision maker, and (3) the ongoing development in the field of information technology (Lin et al. 2007; Jadhav and Sonar 2009a, b; Cavus 2010).

The task of OSS-LMS evaluation and selection is often assigned under schedule pressure, and evaluators may not have time or experience to plan the evaluation and selection in detail. Therefore, evaluators may not use the most appropriate framework for evaluating and selecting OSS-LMS packages (Jadhav and Sonar 2011). Evaluating and selecting an OSS-LMS package that meets the specific requirements of an organization are complicated and time-consuming decision-making processes (Jadhav and Sonar 2009a, b). Therefore, researchers have been investigating an improved means of evaluating and selecting OSS-LMS packages.

The comprehensive insights into the evaluation and selection of OSS-LMS packages in this paper are based on three directions: available OSS-LMSs from published papers are investigated; the criteria for evaluating OSS-LMS packages are specified; the abilities of the selection methods that appear fit to solve the problem of OSS-LMS packages based on multi-criteria evaluation and selection problem are discussed to select the best OSS-LMS packages. This paper is organized as follows. “Research method” section investigates the method for evaluating and selecting OSS-LMS packages. Section “Conclusions” and 4 discuss the limitations and research contributions of the study, respectively. Section 5 concludes.

Research method

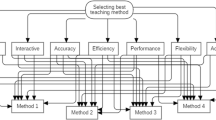

This study is based on the current and active OSS in the education field and presents a list of active OSS-LMS packages, the evaluation criteria with their descriptions to evaluate OSS-LMS packages, and the multi-attribute or multi-criteria decision-making (MADM/MCDM) techniques that are used as recommended solutions to select the best OSS-LMS packages. This study aims to (a) provide a summary of available OSS-LMS reviews and (b) bridge any gaps in technical literature regarding the evaluation and selection of OSS-LMSs by using MADM/MCDM. The conceptual framework (Fig. 1) offers an overview of the research design.

The range of our research in terms of the evaluation and selection of OSS-LMSs based on MADM/MCDM techniques applies only to the LMS packages detected in the search engine databases we used. The review of technical literature was conducted in early 2014 by using three electronic databases: Elsevier’s ScienceDirect, IEEE Xplore, and Web of Science. The search query included the keywords “evaluation learning management system,” “evaluation and selection learning management system,” “e-learning system,” “open source,” and “open source software.” In the process, the title, abstract, conclusion, and methodology were also reviewed to filter the papers by using the scope and inclusion criteria.

This section analyzes the information gathered from the selected published papers. It consists of three parts: investigation of the availability of OSS-LMS packages; classification of the evaluation criteria related to OSS-LMSs; and recommended solutions for the software selection problem based on MADM/MCDM techniques.

Available OSS-LMS packages

We surveyed papers that featured open source LMSs. After analyzing the scope of these papers, 55 studies were selected. From the review, we created an initial taxonomy of 5 categories. We used 4 papers for the adoption of OSS-LMS, 27 papers for the evaluation process, 12 papers for system-based reports, 8 papers for the utilization of an OSS-LMS, and 4 papers for the simple mention of OSS-LMSs (Abdullateef et al. 2015).

This survey aims to identify systems known to decision makers who intend to adopt such systems in their educational institutes. The following table lists OSS-LMSs and the papers in which they are cited.

Table 1 presents the frequency of references. The Moodle system is the most popular OSS-LMS because it is cited in over 40 papers. The Sakai system is the second most popular according to the number of mentions. The Dot LRN, Claroline, and ATutor systems are fairly equal in the number of references. The Dokeos, Online Learning and Training (OLAT), and LON-CAPA systems are not as popular as the Moodle system and have 9 or fewer references each. The WeBWork, Spaghetti Learning, and Bodington systems are mentioned in only 2 papers. The least cited systems are Totara LMS, Open Source University Support System (OpenUSS), Online Platform for Academic Learning (OPAL), LearnSquare, LogiCampus, Ganesha LMS, eFront, Chamilo, Canvas, and Bazaar LMS. The Moodle system can be deduced as the most studied system because it has the highest number of references (Table 1).

Table 2 lists the summaries of OSS-LMS packages. The list consists of 23 OSS-LMS packages, along with their respective websites, and a brief description by the vendor to help administrators or decision makers who intend to evaluate and select an OSS-LMS.

Evaluation criteria of OSS-LMS packages

Software packages are evaluated to determine if they are suited to functional, non-functional, and user requirements. By comparing a well-prepared list of criteria, along with a number of realistic analyses, the evaluator can decide if the software is appropriate for the customer (Radwan et al. 2014). According to our study of technical literature, we selected the method for evaluating software on the basis of the following steps:

1. Determine the availability of OSS-LMS packages from a list of possibly suitable software (Blanc and Korn 1992; Jadhav and Sonar 2009a, b; Cavus 2011; Graf and List 2005).

2. Specify the evaluation criteria for OSS-LMS packages (Jadhav and Sonar 2011; Cavus 2011).

The available OSS-LMS packages are presented in the previous section. The specification of the criteria for software evaluation is explained in detail in the following sections.

Specification of the evaluation criteria for OSS-LMS packages

The specification of evaluation criteria for OSS-LMS packages is divided into three main parts, namely, identified evaluation criteria, established evaluation criteria, and crossover between the identified and established evaluation criteria.

Identified evaluation criteria for OSS-LMS packages

The evaluation-process category comprises the 27 papers selected when we surveyed the OSS-LMS packages. A total of 16 papers evaluated the learning process, whereas 11 papers evaluated the LMS. We selected the 11 papers, as well as 1 paper from the Google Scholar database that also evaluated OSS-LMS, to obtain the evaluation criteria for OSS-LMS. These studies are described in Table 3. The table offers a brief description of the evaluation criteria and of the LMS used for the evaluation process.

Established evaluation criteria for OSS-LMS packages

From our research of technical literature, we combined and classified a collection of criteria suitable for OSS-LMS evaluation. We also defined the meaning of each evaluation criterion. The criteria are categorized into several groups such as functionality, reliability, usability, efficiency, maintainability, and portability. These criteria have been featured in several studies (Franch and Carvallo 2002, 2003; Morisio and Tsoukias 1997; Oh et al. 2003; Ossadnik and Lange 1999; Rincon et al. 2005; Welzel and Hausen 1993; Stamelos et al. 2000; Jadhav and Sonar 2009a, b). Among the ISO/IEC standards related to software quality, ISO/IEC 9126-1 specifically provides a quality model definition, which is used as a framework for software evaluation (Jadhav and Sonar 2009a, b). The rest of the criteria evaluate e-learning standards, security, privacy, vendor criteria, and learner’s communication environment. The efficiency of an LMS is evaluated by the sub-criteria of the usability group. The following subsections explain the criteria groups with their sub-criteria.

Functionality group

Functionality is the ability of the software to provide functions that meet the user’s requirements when using the software under specific conditions (Bevan 1999). Functionality is used to measure the level in which an LMS satisfies the functional requirements of an organization (Jadhav and Sonar 2011). The functional group includes several criteria: course development, activity tracking, and assessment. Course development is a web interface for organizing the course’s materials. Activity tracking is important for students. Hence, we focused on the criteria that cover the students’ progress: analysis of current data, time analysis, and sign-in data. Assessment is the possibility for the tutor to test the student through various means (Arh and Blazic 2007). Table 4 presents the criteria with their sub-criteria, along with a description and availability of each sub-criterion.

Reliability group

Reliability is the ability of the software package to run consistently without crashing under specific conditions (Jadhav and Sonar 2011). Reliability is used to assess the level of fault tolerance for the software package. Furthermore, reliability can be measured by monitoring the number of failures in a given period of execution for a specific task (Bevan 1999). Table 5 depicts the reliability group, its several sub-criteria, and the description and presence of the procedure.

Usability group

Usability establishes how efficient, convenient, and easy a system is for learning (Kiah et al. 2014). The usability group and sub-criterion descriptions are indicated in Table 6 along with the presence of procedure.

Maintainability

Maintainability is the ability of the software to be modified. Modifications may include corrections, improvements, or adaptation of the software to changes in the environment, requirement, and functional specifications (Bevan 1999). Maintainability metrics are difficult to measure in a limited experimental setting; they require long-term real-world evaluation. Therefore, we will not consider the maintainability in our study (Kiah et al. 2014).

Portability group

Portability is the capability of software to be transferred from one environment to another (Bevan 1999; Jadhav and Sonar 2011). Table 7 lists the portability criteria and their sub-criteria along with their definitions and the presence of the procedure.

E-learning standards group

E-learning standards evaluate learning resources and provide descriptions of the learners’ profiles. E-learning standards are generally developed within the system to ensure interoperability, reusability, and portability, specifically for learning resources (Arh and Blazic 2007). Table 8 indicates the e-learning standards criteria, including several sub-criteria, the description of each sub-criterion, and the procedures.

Security and privacy group

An overall evaluation of the security of LMSs is beyond the scope of this research. Evaluating security requires extensive analysis in several aspects; however, we have obtained certain important criteria regarding security and privacy from Arh and Blazic (2007) and Jadhav and Sonar (2011). We have used the security and privacy criteria to establish the ability of a system to safeguard personal data and communication from attacks and danger on a user’s computer, as well as the user’s level of permission (Arh and Blazic 2007). Table 9 depicts the security and privacy criteria, the sub-criteria, and the presence of the procedure.

Vendor criteria

Vendor criteria are utilized to evaluate the vendor capabilities of software packages. The vendor criteria are important for selecting software because they offer guides for establishing, operating, and customizing software packages (Jadhav and Sonar 2009a, b, 2011; Lee et al. 2013). Table 10 depicts the vendor criteria with a description of each sub-criterion and the presence of the procedure.

Learner’s communication group

To ensure continuous communication between teachers and students, LMSs require communication tools that use the latest technology. We use learner’s communication criteria to evaluate continuous communication and interaction (Arh and Blazic 2007). Learner’s communication has two types, namely, synchronous communication and asynchronous communication (Arh and Blazic 2007). Table 11 describes the learner’s communication criteria, their sub-criteria, and the presence of the procedure.

Crossover between identified and established evaluation criteria for OSS-LMS packages

To determine the gap between the identified and established evaluation criteria, a crossover between the two is required. Table 12 uses x to indicate the criteria used in the papers. For each group of criteria, the percentage of papers that featured that group is provided.

Table 12 indicates a gap in the OSS-LMS evaluation criteria. The existing software evaluation criteria are insufficient, and new overall quality criteria need to be established to evaluate the OSS-LMS packages. The group criteria are insufficient. If we expand the analysis to calculate the criteria by using the percentage from the papers for each group, we find that the proportion of functional group criteria used is 41.8 %, which does not qualify the applicability of the functional group evaluation to a programmed LMS. The same issue is also present in the other groups. The reliability group has 13.3 %, the usability group has 6.6 %, the portability group has 7.5 %, the e-learning standards group has 33.3 %, the security and privacy group has 34 %, the vendor group has 6.2 %, and the learner’s communication environment group has 42.5 %.

On the basis of these issues, we deduce that no group has completed the evaluation criteria compared with an established list. In our view, the problem of these percentages can be interpreted in two ways: first, the applicability of this type of criteria in the evaluation process is insufficient; second, this criterion does not meet international software engineering evaluation standards.

Another problem emerged when the software was evaluated by using several criteria (including functionality, reliability, usability, portability, e-learning standards support, security and privacy, vendor criteria, and learner’s communication environment). Each piece of software has several attributes, and each decision maker has different weights for these attributes. Thus, selecting the suitable software to use is difficult. On one hand, users who aim to use one kind of software may prioritize functionality, usability, and user support rather than other features, whereas users who intend to develop this software in actual education environments would probably target different attributes. On the other hand, LMS package selection (in particular, OSS) is an MADM/MCDM problem where each type of software is considered an available alternative for the decision maker. In other words, the MADM/MCDM problem refers to making preference decisions over the available alternatives that are characterized by multiple and usually conflicting attributes (Zaidan et al. 2014). The process of selecting the OSS-LMS packages involves the simultaneous consideration of multiple attributes to rank the available alternatives and select the best one. Thus, the selection process of the OSS-LMS packages can be considered a multi-criteria decision-making problem. Additional details of the fundamental terms of software selection based on multi-criteria analysis will be provided in the following section.

Recommended solution techniques based on MADM/MCDM

The useful techniques for dealing with MADM/MCDM problems in the real world are defined as recommended solutions in a collective method to help decision makers organize the problems to be solved and conduct the analysis, comparisons, and ranking of the alternatives or multiple platforms. Accordingly, the selection of a suitable alternative is described in previous literature (Jadhav and Sonar 2009a, b); MADM/MCDM methods seem to be suitable for solving the problem of OSS-LMS package selection. The goals of MADM/MCDM are as follows: (1) help decision makers choose the best alternative, (2) categorize the viable alternatives among a set of available alternatives, and (3) rank the alternatives in decreasing order of performance (Zaidan et al. 2015; Jadhav and Sonar 2009a, b). Each platform has its own multiple criteria that depend on a matrix with several names: the payoff matrix, evaluation table matrix (ETM), or decision matrix (DM) (Whaiduzzaman et al. 2014). In any MADM/MCDM ranking, the fundamental terms need to be defined, including the DM or the ETM, the LMSs, and their criteria (Al-Safwani et al. 2014). An ETM that consists of m LMSs and n criteria must be created. With the intersection of each LMS and criterion given as \(x_{ij}\), we obtain the matrix \((x_{ij})_{m \times n}\):

\[
\begin{array}{c|cccc}
 & C_{1} & C_{2} & \cdots & C_{n} \\ \hline
LMS_{1} & x_{11} & x_{12} & \cdots & x_{1n} \\
LMS_{2} & x_{21} & x_{22} & \cdots & x_{2n} \\
\vdots & \vdots & \vdots & \ddots & \vdots \\
LMS_{m} & x_{m1} & x_{m2} & \cdots & x_{mn}
\end{array}
\]

where \(LMS_{1} , LMS_{2} , \ldots , LMS_{m}\) are possible alternatives that decision makers must score (e.g., the Moodle platform); \(C_{1} , C_{2} , \ldots , C_{n}\) are the criteria for measuring each LMS’s performance (e.g., functionality criteria, reliability criteria, usability, etc.). Finally, \(x_{ij}\) is the rating of alternative \(LMS_{i}\) with respect to criterion \(C_{j}\). Certain processes need to be conducted to rank alternatives, such as normalization, maximization indicator, adding the weights, and other processes, depending on the method.
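The processing of an ETM can be illustrated with a minimal sketch. It assumes vector normalization and a simple weighted sum, which is only one of the possible ranking procedures; the ratings, the weights, and the function name `weighted_scores` are hypothetical and are not taken from the reviewed papers.

```python
import numpy as np

def weighted_scores(matrix, weights):
    """Score alternatives by vector normalization followed by a weighted sum.

    Assumes every criterion is a benefit criterion (larger is better).
    """
    x = np.asarray(matrix, dtype=float)      # rows: alternatives, columns: criteria
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()                          # make the weights sum to 1
    norms = np.sqrt((x ** 2).sum(axis=0))    # Euclidean norm of each criterion column
    normalized = x / norms                   # removes the effect of different scales
    return normalized @ w                    # one aggregate score per alternative

# Hypothetical ratings of three LMSs on three criteria.
etm = [[7, 8, 6],
       [9, 6, 7],
       [6, 7, 9]]
weights = [0.5, 0.3, 0.2]

scores = weighted_scores(etm, weights)
ranking = np.argsort(-scores)                # indices of alternatives, best first
print(scores, ranking)
```

Normalizing before weighting matters when criteria are measured on very different scales; without it, a criterion with large raw values would dominate the aggregate score regardless of its weight.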

For example: Suppose D is the DM to rank the performance of alternative Ai (i = {1, 2, 3 and 4}) on the basis of Cj (j = {1, 2, 3, 4, 5 and 6}). Table 13 is an example of a multi-criteria problem reported in (Hwang and Yoon 1981).

The data in the chart is difficult to evaluate because of the large numbers of c2 and c3. See Fig. 2.

Fig. 2 Graphic presentation of the example in Table 13

Selecting the best software process from the software on offer is an important aspect of managing an information system. The selection process can be considered a MADM/MCDM problem that can address different and inconsistent criteria to select between predetermined decision alternatives (Oztaysi 2014). We will divide this section into two subsections. The first subsection describes the current selection methods applied for LMS selection. The second examines recent studies related to MCDM techniques applied for other applications, as shown in Fig. 3.

Selection techniques/tools applied on LMS

MADM/MCDM is an effective approach for addressing various types of decision-making problems. In the field of education, some papers employ MADM/MCDM techniques and tools to evaluate and select the best LMS. These techniques and tools include the decision expert shell (DEX shell) system (Arh and Blazic 2007; Pipan et al. 2007), easy way to evaluate LMS (EW-LMS) (Cavus 2011), and analytic hierarchy process (Srđević et al. 2012). Table 14 presents a brief description of these references, as well as the MADM/MCDM techniques and tools used for selecting the best LMS.

DEX shell system

In this section, we look at the DEX shell system used by Arh and Blazic (2007) and Pipan et al. (2007) for scoring, ranking, and selecting the best LMS. DEX is developed as an interactive expert system shell that offers tools to create and verify a knowledge base, evaluate choices, and explain the final results. The structure of the knowledge base and evaluation procedures closely match the multi-criteria decision-making paradigm; however, the system considers the consistency of the decision-making process and the weighted sum of the criteria is achieved with limited theoretical justification (Srđević et al. 2012).

EW-LMS

Cavus (2011) developed the EW-LMS. It is a web-based system that can be used easily on the Internet anywhere and anytime. It is designed as a decision support system that uses a smart algorithm derived from artificial intelligence concepts with fuzzy values. The system adopts fuzzy logic values to assign the weight of each criterion and utilizes the linear weighted attribute model to select the best alternative. However, the technique used to assign the criterion weights is inaccurate because the user assigns the weights arbitrarily by using fuzzy logic values (Jadhav and Sonar 2009a, b).

LMS selection based on AHP

Srđević et al. (2012) presented an evaluation method for selecting the most appropriate LMS. The authors propose a breakdown of complex criteria into easily comprehended sub-criteria through a method called analytic hierarchy process (AHP). AHP ranks alternative software when the features are considered and modified and deletes unsuitable software from the evaluation process (Zaidan et al. 2014; Jadhav and Sonar 2009a, b).

AHP was devised by Saaty in 1980. AHP has become a commonly used and widely distributed technique for MCDM. AHP allows the use of both qualitative and quantitative criteria at the same time. It also allows the utilization of independent variables and compares attributes in a hierarchical structure.

In the tree structure, the hierarchy begins with the goal at the top. The lower levels correspond to the criteria, sub-criteria, and so on. In this hierarchical tree, the process starts from the leaf nodes and progresses up to the top level. Each output level represents the hierarchy that corresponds to the weight or influence of different branches originating from that level. Finally, the different branches are compared to select the most appropriate alternative on the basis of the attributes (Whaiduzzaman et al. 2014; San Cristóbal 2011; Zaidan et al. 2014; Oztaysi 2014; Srđević et al. 2012; Jadhav and Sonar 2011; Ngai and Chan 2005; Krylovas et al. 2014).

- Step 1: Pairwise comparison between criteria;

- Step 2: Raising the attained matrix to an arbitrarily large power;

- Step 3: Normalizing the row sums of the raised matrix using the following equation:

  \[ w_{i} = \frac{\sum_{j=1}^{n} a_{ij}^{\prime}}{\sum_{i=1}^{n} \sum_{j=1}^{n} a_{ij}^{\prime}} \]

  where \(w_{i}\) is the weight of the ith criterion, n is the number of criteria, and \(a_{ij}^{\prime}\) is the element of the raised matrix that corresponds to the ith and jth criteria;

- Step 4: Rating the alternatives in terms of the criteria;

- Step 5: Synthesizing the vectors from the last two steps to obtain the final priority vectors for the alternatives.
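The AHP steps above can be sketched as follows. This is a minimal illustration, not the procedure of any reviewed paper: the pairwise comparison matrix, the alternative ratings, the exponent 16 standing in for "an arbitrarily large power", and the function names are all assumptions.

```python
import numpy as np

def ahp_weights(pairwise, power=16):
    """Steps 2 and 3: raise the pairwise matrix to a large power, then
    normalize its row sums to obtain the criteria weights."""
    a = np.asarray(pairwise, dtype=float)
    raised = np.linalg.matrix_power(a, power)
    row_sums = raised.sum(axis=1)
    return row_sums / row_sums.sum()

def synthesize(criteria_weights, alternative_ratings):
    """Step 5: combine criteria weights with the per-criterion ratings.

    alternative_ratings[i][j] is the priority of alternative i under criterion j.
    """
    return np.asarray(alternative_ratings, dtype=float) @ np.asarray(criteria_weights)

# Step 1: hypothetical, perfectly consistent pairwise comparisons of three criteria
# (criterion 1 is twice as important as criterion 2 and six times criterion 3).
pairwise = [[1,     2,     6],
            [1 / 2, 1,     3],
            [1 / 6, 1 / 3, 1]]
criteria_weights = ahp_weights(pairwise)

# Step 4: hypothetical ratings of two alternatives under each criterion
# (each column sums to 1).
ratings = [[0.7, 0.4, 0.5],
           [0.3, 0.6, 0.5]]
priorities = synthesize(criteria_weights, ratings)
print(criteria_weights, priorities)
```

For a perfectly consistent matrix such as this one, the normalized row sums reproduce the underlying weights exactly; for inconsistent judgments the power iteration converges toward the principal eigenvector instead.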

Selection techniques applied for other applications

Decision-making theories have been applied successfully in different fields over the past few decades. The variety and diversity of MADM/MCDM applications have helped decision makers. MADM/MCDM can allow the application of multiple conflicting criteria. One main objective of this study is to introduce a critical assessment of available MADM/MCDM approaches and describe how these approaches are used in OSS-LMS selection (Wang et al. 2013). We selected recent studies related to decision-making selection techniques, which are listed as follows according to Refs. (Wang et al. 2013; Jadhav and Sonar 2011; Triantaphyllou 2000; Triantaphyllou et al. 1998; Al-Safwani et al. 2014).

Analytic network process (ANP)

ANP is defined as a mathematical theory that can handle all types of dependencies systematically. It can be used in numerous fields. ANP was developed by Saaty (Wu and Lee 2007), and includes a multi-criteria decision-making method that compares different alternatives to select the best alternative.

The ANP technique allows the addition of extra relevant criteria, either tangible or intangible, to the existing ones, thus significantly influencing the decision-making process. Furthermore, ANP considers interdependencies for different levels of set criteria. Finally, ANP permits quantitative and qualitative feature analysis, thus making ANP a preferred technique in many real-world situations (Yazgan et al. 2009). ANP is composed of four major steps:

- Step 1: Model construction and problem structuring

- Step 2: Pairwise comparison matrices and priority vectors

- Step 3: Super matrix formation

- Step 4: Selection of the best alternatives
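Steps 3 and 4 are commonly carried out by raising a weighted supermatrix to successive powers until it stabilizes. The following is a rough sketch under that assumption; the 3 × 3 matrix is a hypothetical column-stochastic supermatrix rather than a full ANP model, and `limit_supermatrix` is an illustrative name.

```python
import numpy as np

def limit_supermatrix(weighted, tol=1e-9, max_iter=1000):
    """Multiply a column-stochastic supermatrix by itself until it converges."""
    w = np.asarray(weighted, dtype=float)
    if not np.allclose(w.sum(axis=0), 1.0):
        raise ValueError("weighted supermatrix must be column-stochastic")
    current = w
    for _ in range(max_iter):
        nxt = current @ w
        if np.max(np.abs(nxt - current)) < tol:
            return nxt
        current = nxt
    raise RuntimeError("supermatrix did not converge")

# Hypothetical weighted supermatrix: each column holds the priority vector
# of the elements with respect to one controlling element.
supermatrix = [[0.2, 0.5, 0.3],
               [0.5, 0.1, 0.4],
               [0.3, 0.4, 0.3]]

limit = limit_supermatrix(supermatrix)
priorities = limit[:, 0]               # all columns agree once the limit is reached
best = int(np.argmax(priorities))      # index of the element with the highest priority
print(priorities, best)
```

Convergence to identical columns is guaranteed here because every entry of the example matrix is positive; supermatrices with zero blocks may cycle and need additional treatment.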

Elimination and choice expressing reality

Roy and his colleagues at the SEMA consultancy company developed Elimination and Choice Expressing Reality (ELECTRE) in the mid-1960s. Since then, several variations of the method have been coined, such as ELECTRE I, ELECTRE II, ELECTRE III, ELECTRE IV, ELECTRE IS, and ELECTRE TRI (ELECTRE Tree). All of these variations consist of two sets of parameters: veto thresholds and importance coefficients (Mohammadshahi 2013; Whaiduzzaman et al. 2014).

The method is classified as an outranking MCDM method. Compared with previous methods, this approach is computationally complex because the simplest method of ELECTRE was reported to involve up to 10 steps. The mechanics of the method allow it to compare alternatives to determine their outranking relationships. The relationships are utilized to define and/or eliminate the alternatives subdued by others, thus subsequently reducing the number of available alternatives.

Another feature of ELECTRE is its ability to handle both qualitative and quantitative criteria. This provides a basis for a complete order of different options. The preferred alternatives are weighed against dependence on concordance indices, and their thresholds allow the drafting of graphs that can be later used to obtain the ranking of alternatives (Rehman et al. 2012; San Cristóbal 2011; Whaiduzzaman et al. 2014).

The intuitionistic fuzzy (IF) ELECTRE method includes eight steps (Wu and Chen 2009):

Step 1: Determine the DM

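\(\mu_{ij}\) is the degree of membership of the ith alternative with respect to the jth attribute, \(\nu_{ij}\) is the degree of non-membership of the ith alternative with respect to the jth attribute, and \(\pi_{ij}\) is the intuitionistic index of the ith alternative with respect to the jth attribute. M is an intuitionistic fuzzy DM whose entry for the ith alternative and the jth attribute is the triple \((\mu_{ij}, \nu_{ij}, \pi_{ij})\), where \(\pi_{ij} = 1 - \mu_{ij} - \nu_{ij}\).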

In the DM M, we have m alternatives (from A1 to Am) and n attributes (from x1 to xn). The subjective importance of attributes, W, is given by the decision maker(s).

Step 2: Determine the concordance and discordance sets

The method uses the concept of IFS relation to identify (determine) the concordance and discordance set.

The strong concordance set \(C_{kl}\) of \(A_{k}\) and \(A_{l}\) is composed of all criteria where \(A_{k}\) is preferred to \(A_{l}\). In other words, the strong concordance set \(C_{kl}\) can be formulated as

The moderate concordance set \(C_{kl}^{\prime}\) is defined as

The weak concordance set \(C_{kl}^{\prime\prime}\) is defined as

The strong discordance set \(D_{kl}\) is composed of all criteria where \(A_{k}\) is not preferred to \(A_{l}\). The strong discordance set \(D_{kl}\) can be formulated as

The moderate discordance set \(D_{kl}^{\prime}\) is defined as

The weak discordance set \(D_{kl}^{\prime\prime}\) is defined as

The decision maker(s) provides the weight in different sets.
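The six sets of Step 2 can be written out explicitly. The block below is a sketch of one formulation commonly given in the intuitionistic fuzzy ELECTRE literature; the exact inequalities are an assumption here and should be checked against Wu and Chen (2009). The symbols \(\mu\), \(\nu\), and \(\pi\) are those of Step 1, and j indexes the attributes.

```latex
\begin{align*}
C_{kl} &= \{\, j \mid \mu_{kj} \ge \mu_{lj},\ \nu_{kj} < \nu_{lj},\ \pi_{kj} < \pi_{lj} \,\} && \text{(strong concordance)} \\
C_{kl}^{\prime} &= \{\, j \mid \mu_{kj} \ge \mu_{lj},\ \nu_{kj} < \nu_{lj},\ \pi_{kj} \ge \pi_{lj} \,\} && \text{(moderate concordance)} \\
C_{kl}^{\prime\prime} &= \{\, j \mid \mu_{kj} \ge \mu_{lj},\ \nu_{kj} \ge \nu_{lj} \,\} && \text{(weak concordance)} \\
D_{kl} &= \{\, j \mid \mu_{kj} < \mu_{lj},\ \nu_{kj} \ge \nu_{lj},\ \pi_{kj} \ge \pi_{lj} \,\} && \text{(strong discordance)} \\
D_{kl}^{\prime} &= \{\, j \mid \mu_{kj} < \mu_{lj},\ \nu_{kj} \ge \nu_{lj},\ \pi_{kj} < \pi_{lj} \,\} && \text{(moderate discordance)} \\
D_{kl}^{\prime\prime} &= \{\, j \mid \mu_{kj} < \mu_{lj},\ \nu_{kj} < \nu_{lj} \,\} && \text{(weak discordance)}
\end{align*}
```

Under this formulation, every attribute j falls into exactly one of the six sets for a given pair \((A_{k}, A_{l})\), because the conditions on \(\mu\), \(\nu\), and \(\pi\) partition all possible cases.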

Step 3: Calculate the concordance matrix

The relative value of the concordance sets is measured by using the concordance index. The concordance index is equal to the sum of the weights associated with these criteria and relations that are contained in the concordance sets.

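Thus, the concordance index \(c_{kl}\) between \(A_{k}\) and \(A_{l}\) is defined as follows:

\[ c_{kl} = w_{C} \sum_{j \in C_{kl}} w_{j} + w_{C'} \sum_{j \in C_{kl}^{\prime}} w_{j} + w_{C''} \sum_{j \in C_{kl}^{\prime\prime}} w_{j} \]

where \(w_{C}\), \(w_{C'}\), and \(w_{C''}\) are the weights of the different sets defined in Step 2, and \(w_{j}\) is the weight of the attributes identified in Step 1.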

Step 4: Calculate the discordance matrix

The discordance index \(d_{kl}\) is defined as follows:

where \(w_{D}^{*}\) is equal to \(w_{D}\), \(w_{D'}\), or \(w_{D''}\), depending on the type of discordance set defined in Step 2.

Step 5: Determine the concordance dominance matrix

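This matrix can be calculated by adopting a threshold value for the concordance index. \(A_{k}\) can only dominate \(A_{l}\) if its corresponding concordance index \(c_{kl}\) is at least a certain threshold value \(\bar{c}\), i.e., \(c_{kl} \ge \bar{c}\), and

\[ \bar{c} = \sum_{k=1,\, k \ne l}^{m} \; \sum_{l=1,\, l \ne k}^{m} \frac{c_{kl}}{m(m-1)} \]

On the basis of the threshold value, a Boolean matrix F can be constructed, the elements of which are defined as

\[ f_{kl} = \begin{cases} 1, & \text{if } c_{kl} \ge \bar{c} \\ 0, & \text{if } c_{kl} < \bar{c} \end{cases} \]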

Each element of “1” on matrix F represents a dominance of one alternative with respect to another.

Step 6: Determine the discordance dominance matrix

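This matrix is constructed analogously to the F matrix on the basis of a threshold value \(\bar{d}\) for the discordance indices. The elements \(g_{kl}\) of the discordance dominance matrix G are calculated as follows:

\[ g_{kl} = \begin{cases} 1, & \text{if } d_{kl} \le \bar{d} \\ 0, & \text{if } d_{kl} > \bar{d} \end{cases} \]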

The unit elements in the G matrix also represent the dominance relationships between any two alternatives.

Step 7: Determine the aggregate dominance matrix

This step involves the calculation of the intersection of the concordance dominance matrix F and discordance dominance matrix G. The resulting matrix, which is called the aggregate dominance matrix E, is defined by using its typical elements \(e_{kl}\) as follows:

\[ e_{kl} = f_{kl} \cdot g_{kl} \]

Step 8: Eliminate the less favorable alternatives

The aggregate dominance matrix E provides the partial-preference ordering of the alternatives. If \(e_{kl} = 1\), \(A_{k}\) is preferred to \(A_{l}\) for both the concordance and discordance criteria. However, \(A_{k}\) still has the chance of being dominated by other alternatives. Hence, the condition that \(A_{k}\) is not dominated under ELECTRE is as follows:

\[ e_{kl} = 1 \ \text{for at least one } l,\ l = 1, 2, \ldots, m,\ k \ne l; \qquad e_{ik} = 0 \ \text{for all } i,\ i = 1, 2, \ldots, m,\ i \ne k,\ i \ne l \]

This condition appears difficult to apply. However, the dominated alternatives can be easily identified in the E matrix. If any column of the E matrix has at least one element equal to 1, this column is “ELECTREcally” dominated by the corresponding row(s). Hence, we simply eliminate any column(s) with an element equal to 1.
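Steps 5 to 8 can be sketched in a few lines once the concordance and discordance matrices are available. The sketch below makes two assumptions that should be verified against Wu and Chen (2009): both thresholds are taken as the average of the off-diagonal indices, and an entry of G equals 1 when the discordance index does not exceed its threshold. The input matrices are hypothetical.

```python
import numpy as np

def electre_dominance(concordance, discordance):
    """Build F, G, and E from index matrices and list the non-dominated alternatives."""
    c = np.asarray(concordance, dtype=float)
    d = np.asarray(discordance, dtype=float)
    m = c.shape[0]
    off_diag = ~np.eye(m, dtype=bool)        # an alternative is never compared with itself
    c_bar = c[off_diag].mean()               # assumed threshold: average concordance index
    d_bar = d[off_diag].mean()               # assumed threshold: average discordance index
    f = (c >= c_bar) & off_diag              # Step 5: concordance dominance matrix F
    g = (d <= d_bar) & off_diag              # Step 6: discordance dominance matrix G
    e = f & g                                # Step 7: aggregate dominance matrix E
    dominated = e.any(axis=0)                # Step 8: a 1 in column l means A_l is dominated
    return e.astype(int), np.flatnonzero(~dominated)

# Hypothetical concordance and discordance indices for three alternatives.
concordance = [[0.0, 0.8, 0.6],
               [0.3, 0.0, 0.7],
               [0.4, 0.2, 0.0]]
discordance = [[0.0, 0.2, 0.3],
               [0.9, 0.0, 0.4],
               [1.0, 0.8, 0.0]]

e, kept = electre_dominance(concordance, discordance)
print(e)
print(kept)
```

In this example the first alternative dominates the other two and the second dominates the third, so only the first alternative survives the elimination.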

Fuzzy

Fuzzy theory was introduced by Zadeh in 1965. It is an extensive theory for handling the uncertainty inherent in human judgment. The theory can also address the doubts associated with the available data and information in multiple criteria decision making.

An MCDM model based on fuzzy theory can be used to evaluate a pool of options and choose the alternative that best matches the criteria set by the decision maker. Linguistic values represented by fuzzy numbers are used to rate the alternatives and to weight the importance of the criteria. The ratings are then compared against the weighted values, and the crisp truth values of Boolean logic are replaced with intervals in the decision-making process (Alabool and Mahmood 2013; Whaiduzzaman et al. 2014).

Let X be the universe of discourse, with \(X = \{x_{1}, x_{2}, \ldots, x_{n}\}\). A fuzzy set \(A^{*}\) of X is a set of ordered pairs \(\{(x_{1}, \mu_{A^{*}}(x_{1})), (x_{2}, \mu_{A^{*}}(x_{2})), \ldots, (x_{n}, \mu_{A^{*}}(x_{n}))\}\), where \(\mu_{A^{*}}: X \to [0,1]\) is the membership function of \(A^{*}\) and \(\mu_{A^{*}}(x_{i})\) is the membership degree of \(x_{i}\) in \(A^{*}\).

A fuzzy number is a fuzzy subset of the universe of discourse X that is both convex and normal. Triangular, trapezoidal, and bell-shaped fuzzy numbers are common types, each with its own membership function. This study adopts the triangular type. A triangular fuzzy number is represented by three points \((p_{1}, p_{2}, p_{3})\) with \(p_{1} < p_{2} < p_{3}\). The membership function \(\mu_{A^{*}}\) of the fuzzy number \(A^{*}\) is:
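As an illustration, the standard piecewise-linear membership function of a triangular fuzzy number can be sketched as follows. The sketch assumes the usual textbook definition: the membership degree rises linearly from 0 at \(p_{1}\) to 1 at \(p_{2}\), falls linearly back to 0 at \(p_{3}\), and is 0 elsewhere. The function name `triangular_membership` is hypothetical.

```python
def triangular_membership(x, p1, p2, p3):
    """Membership degree of x in the triangular fuzzy number (p1, p2, p3).

    Assumes the standard textbook definition with p1 < p2 < p3.
    """
    if not (p1 < p2 < p3):
        raise ValueError("expected p1 < p2 < p3")
    if x <= p1 or x >= p3:
        return 0.0                      # outside the support
    if x <= p2:
        return (x - p1) / (p2 - p1)     # rising edge
    return (p3 - x) / (p3 - p2)         # falling edge
```

For example, with the fuzzy number (0, 5, 10), the value 5 has full membership, whereas 2.5 and 7.5 each have a membership degree of 0.5.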

Technique for order of preferences by similarity to ideal solution

The Technique for Order of Preferences by Similarity to Ideal Solution (TOPSIS) ranks alternatives by a preference index based on their similarity to the ideal solution. The approach thus seeks the solution that is as close as possible to the ideal one and, at the same time, as far as possible from the anti-ideal solution.

A DM is first needed for this technique. The matrix is normalized by using vector normalization, and the ideal and anti-ideal solutions are then identified within the normalized DM. The technique was introduced by Hwang and Yoon in 1981. It chooses the alternative with the shortest distance from the ideal solution and the longest distance from the anti-ideal solution. This technique is adopted to select a solution from a finite set of options.

Ideally, the optimal solution has the shortest distance from the ideal solution and the farthest possible distance from the anti-ideal solution at the same time. The aggregating function of TOPSIS combines the distance to the ideal solution, which should be as small as possible, with the distance to the anti-ideal solution, which should be as large as possible; however, a reference point must be set near the ideal solution (ur Rehman et al. 2012; San Cristóbal 2011; Whaiduzzaman et al. 2014; Oztaysi 2014; Cui-yun et al. 2009). The TOPSIS method includes the following steps:

Step 1: Construct the normalized DM

This process transforms the various attribute dimensions into non-dimensional attributes, which allows a comparison across attributes. The matrix \(\left( {{\text{x}}_{\text{ij}} } \right)_{\text{m*n}}\) is normalized to the matrix \({\text{R}} = \left( {{\text{r}}_{\text{ij}} } \right)_{\text{m*n}}\) by using the normalization method:

This process will result in a new Matrix R:
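For reference, the vector normalization commonly used in TOPSIS (Hwang and Yoon 1981), which matches the description above, and the resulting matrix can be written as follows. This is the standard textbook form, given here as an assumption about the intended equations:

\[ r_{ij} = \frac{x_{ij}}{\sqrt{\sum_{k = 1}^{m} x_{kj}^{2}}}, \quad i = 1, \ldots, m; \; j = 1, \ldots, n \]

\[ R = \begin{pmatrix} r_{11} & r_{12} & \cdots & r_{1n} \\ r_{21} & r_{22} & \cdots & r_{2n} \\ \vdots & \vdots & \ddots & \vdots \\ r_{m1} & r_{m2} & \cdots & r_{mn} \end{pmatrix} \]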

Step 2: Construct the weighted normalized DM

In this process, a set of weights \(w = w_{1} , w_{2} , w_{3 } , \cdots ,w_{j} , \cdots , w_{n}\) supplied by the decision maker is applied to the normalized DM. The resulting matrix is calculated by multiplying each column of the normalized DM (R) by its associated weight \(w_{j}\). Note that the weights sum to one, that is, \(\sum_{j = 1}^{n} w_{j} = 1\).

This process will result in a new Matrix V:
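In the standard formulation, which the description above appears to follow, each element of V is the product of a normalized value and the weight of its column:

\[ v_{ij} = w_{j} \, r_{ij}, \quad i = 1, \ldots, m; \; j = 1, \ldots, n \]

\[ V = \begin{pmatrix} w_{1} r_{11} & w_{2} r_{12} & \cdots & w_{n} r_{1n} \\ w_{1} r_{21} & w_{2} r_{22} & \cdots & w_{n} r_{2n} \\ \vdots & \vdots & \ddots & \vdots \\ w_{1} r_{m1} & w_{2} r_{m2} & \cdots & w_{n} r_{mn} \end{pmatrix} \]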

Step 3: Determining the ideal and negative ideal solutions

In this process, two artificial alternatives A * (ideal alternative) and A − (negative ideal alternative) are defined as follows:

J is a subset of \(\left\{ {j = 1,2, \ldots ,n} \right\}\) that contains the benefit attributes, whereas \(J^{-}\) is the complement of J, also denoted \(J^{c}\), which contains the cost attributes.
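In the standard formulation, with J and \(J^{-}\) taken as sets of attribute (column) indices of V, the two artificial alternatives can be written as follows. The component symbols \(v_{j}^{*}\) and \(v_{j}^{-}\) are introduced here for illustration:

\[ A^{*} = \left\{ \left( \max_{i} v_{ij} \mid j \in J \right), \left( \min_{i} v_{ij} \mid j \in J^{-} \right) \right\} = \left\{ v_{1}^{*}, v_{2}^{*}, \ldots, v_{n}^{*} \right\} \]

\[ A^{-} = \left\{ \left( \min_{i} v_{ij} \mid j \in J \right), \left( \max_{i} v_{ij} \mid j \in J^{-} \right) \right\} = \left\{ v_{1}^{-}, v_{2}^{-}, \ldots, v_{n}^{-} \right\} \]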

Step 4: Separation measurement calculation based on the Euclidean distance

In the process, the separation measurement is conducted by calculating the distance between each alternative in V and ideal vector A * by using the Euclidean distance, which is expressed as follows:

Similarly, the separation measurement for each alternative in V from the negative ideal A − is given by the following:
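In the standard formulation, the two Euclidean separation measures take the following form, where \(v_{j}^{*}\) and \(v_{j}^{-}\) denote the jth components of \(A^{*}\) and \(A^{-}\), respectively (symbols introduced here for illustration):

\[ S_{i^{*}} = \sqrt{\sum_{j = 1}^{n} \left( v_{ij} - v_{j}^{*} \right)^{2}}, \quad S_{i^{-}} = \sqrt{\sum_{j = 1}^{n} \left( v_{ij} - v_{j}^{-} \right)^{2}}, \quad i = 1, \ldots, m \]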

At the end of Step 4, two values, namely, \(S_{{i^{*} }}\) and \(S_{{i^{ - } }}\), have been computed for each alternative. These values represent the distances between each alternative and the two reference alternatives (the ideal and the negative ideal).

Step 5: Closeness to the ideal solution calculation

In the process, the closeness of A i to ideal solution A * is defined as follows:
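In the standard formulation, the relative closeness is the share of the total separation that is attributable to the distance from the negative ideal:

\[ C_{i^{*}} = \frac{S_{i^{-}}}{S_{i^{*}} + S_{i^{-}}}, \quad 0 \le C_{i^{*}} \le 1, \quad i = 1, \ldots, m \]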

Evidently, \(C_{{i^{*} }} = 1\) if and only if \(A_{i} = A^{*}\). Similarly, \(C_{{i^{*} }} = 0\) if and only if \(A_{i} = A^{-}\).

Step 6: Ranking the alternative according to the closeness to the ideal solution

The set of alternatives \(A_{i}\) can now be ranked in descending order of \(C_{{i^{*} }}\). The alternative with the highest value has the highest performance.
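The six steps above can be sketched in Python as follows. This is a minimal sketch of the standard TOPSIS procedure, not the authors' implementation: the function name `topsis`, the `benefit` flag vector, and the example data are hypothetical, and the weights are rescaled defensively so that they sum to one.

```python
import numpy as np

def topsis(X, weights, benefit):
    """Rank alternatives (rows of X) by closeness to the ideal solution.

    X: m x n decision matrix; weights: n criterion weights;
    benefit: n booleans, True for benefit and False for cost attributes.
    """
    X = np.asarray(X, dtype=float)
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()                                   # enforce sum(w) == 1
    benefit = np.asarray(benefit, dtype=bool)

    R = X / np.sqrt((X ** 2).sum(axis=0))             # Step 1: vector normalization
    V = R * w                                         # Step 2: weight each column
    ideal = np.where(benefit, V.max(axis=0), V.min(axis=0))   # Step 3: A*
    anti = np.where(benefit, V.min(axis=0), V.max(axis=0))    # Step 3: A-
    s_star = np.sqrt(((V - ideal) ** 2).sum(axis=1))  # Step 4: distance to A*
    s_minus = np.sqrt(((V - anti) ** 2).sum(axis=1))  # Step 4: distance to A-
    closeness = s_minus / (s_star + s_minus)          # Step 5
    ranking = np.argsort(-closeness)                  # Step 6: descending order
    return closeness, ranking

# Made-up example: two benefit criteria and one cost criterion.
X = [[9, 9, 100], [7, 7, 200], [5, 5, 300]]
closeness, ranking = topsis(X, [0.4, 0.3, 0.3], [True, True, False])
```

In this example, the first alternative is best on every criterion and therefore coincides with the ideal alternative, and the third coincides with the negative ideal. The sketch does not guard against the degenerate case in which all alternatives are identical, where both separation measures are zero.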

VIKOR

The compromise ranking method, which is also known as VIKOR, is an effective technique for decision making with more than one criterion. The acronym is derived from “Vise Kriterijumska Optimizacija I Kompromisno Resenje.” The multi-criteria ranking index is developed on the basis of a measure of closeness to the ideal solution (usually in the form of distance). This technique was introduced by Opricovic in 2004 to optimize the evaluation of dynamic and complicated processes through compromise. The technique uses linear normalization; however, the normalized values do not depend on criterion evaluation alone. VIKOR also uses an aggregate function to balance the distances from the ideal solution and from its opposite. This helps the decision maker choose from a set of conflicting solutions (Alabool and Mahmood 2013; Whaiduzzaman et al. 2014; San Cristóbal 2011).

The VIKOR steps are as follows:

Step 1: Calculate \(x_{i}^{*}\) and \(x_{i}^{-}\)

where \(x_{ij}\) is the value of the ith criterion function for alternative \(x_{j}\).

Step 2: Compute the values of \(S_{j}\) and \(R_{j}\)

where \(S_{j}\) and \(R_{j}\) denote the utility measure and regret measure for alternative \(x_{j}\). Furthermore, \(w_{i}\) is the weight of each criterion.

Step 3: Compute the values of \(S^{*}\) and \(R^{*}\)

Step 4: Determine the value of \(Q_{j}\) for \(j = 1,2, \ldots ,m\) and rank the alternatives by the values of \(Q_{j}\)

where v is the weight to maximize group utility and (1 − v) is the weight of the individual regret. Usually, v = 0.5; when v > 0.5, the index \(Q_{j}\) will tend to show majority agreement. When v < 0.5, the index \(Q_{j}\) will indicate a dominantly negative attitude.
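The VIKOR steps can be sketched in Python as follows. This is a minimal sketch of the commonly cited formulation, not the authors' implementation: the function name `vikor`, the `benefit` flag vector, and the example data are hypothetical. Unlike the notation above, the matrix here stores alternatives in rows and criteria in columns. The sketch only computes the ranking index and omits the acceptable-advantage and stability checks that some formulations apply before a compromise solution is proposed.

```python
import numpy as np

def vikor(F, weights, benefit, v=0.5):
    """Compute S, R, Q and the ranking (smallest Q first).

    F: m x n matrix (rows = alternatives, columns = criteria);
    weights: n criterion weights; benefit: n booleans (False = cost).
    """
    F = np.asarray(F, dtype=float)
    w = np.asarray(weights, dtype=float)
    benefit = np.asarray(benefit, dtype=bool)

    best = np.where(benefit, F.max(axis=0), F.min(axis=0))    # Step 1: best values
    worst = np.where(benefit, F.min(axis=0), F.max(axis=0))   # Step 1: worst values
    gap = w * (best - F) / (best - worst)   # weighted normalized distance to best

    S = gap.sum(axis=1)                     # Step 2: utility measure
    R = gap.max(axis=1)                     # Step 2: regret measure
    S_best, S_worst = S.min(), S.max()      # Step 3
    R_best, R_worst = R.min(), R.max()
    Q = (v * (S - S_best) / (S_worst - S_best)
         + (1 - v) * (R - R_best) / (R_worst - R_best))       # Step 4
    ranking = np.argsort(Q)                 # a smaller Q is better
    return S, R, Q, ranking

# Made-up example: two benefit criteria and one cost criterion.
F = [[9, 9, 100], [7, 7, 200], [5, 5, 300]]
S, R, Q, ranking = vikor(F, [0.4, 0.3, 0.3], [True, True, False])
```

In this example, the first alternative is best on every criterion and obtains a Q value of 0, whereas the third is worst on every criterion and obtains a Q value of 1. The sketch assumes that the best and worst values differ for every criterion and that the alternatives do not all share the same S or R value; otherwise, a division by zero occurs.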

Weighted scoring method

The weighted scoring method (WSM) is a technique used to evaluate and select software packages. Ease of use is the main advantage of this technique. Suppose that m alternatives A1, A2,…, Am are evaluated on n criteria C1, C2,…, Cn.

The alternatives are fully characterized by the DM Sij. Suppose that the weights W1, W2,…, Wn are the importance values of the criteria. The most suitable alternative has the highest score. The final score for alternative Ai is calculated by using the following equation (Jadhav and Sonar 2009a, 2011):

where Wj is the importance value of the jth criterion and Sij is the score that measures how well alternative Ai performs on criterion Cj.
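As the definitions above indicate, the final score of alternative Ai is the weighted sum of its criterion scores, that is, the sum of Wj × Sij over all n criteria. A minimal Python sketch follows; the function name `weighted_scores` and the example scores and weights are hypothetical.

```python
def weighted_scores(scores, weights):
    """Return the WSM score of each alternative.

    scores[i][j] is S_ij, the score of alternative A_i on criterion C_j;
    weights[j] is W_j, the importance value of criterion C_j.
    """
    for row in scores:
        if len(row) != len(weights):
            raise ValueError("each alternative needs one score per criterion")
    return [sum(w * s for w, s in zip(weights, row)) for row in scores]

# Made-up example: three alternatives scored on three criteria.
scores = [[3, 4, 2], [5, 2, 4], [4, 4, 4]]
weights = [0.5, 0.3, 0.2]
totals = weighted_scores(scores, weights)
best = max(range(len(totals)), key=totals.__getitem__)  # index of the top score
```

With these illustrative numbers, the third alternative has the highest weighted score and would be selected.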

According to Zaidan et al. (2015), Jadhav and Sonar (2011), Triantaphyllou and Lin (1996), and Whaiduzzaman et al. (2014), the characteristics of the above MCDM techniques can be summarized as follows. The WSM technique is easy to use and understand. However, the weights of the attributes are assigned arbitrarily; thus, the task becomes difficult when the number of criteria is high. In Silva et al. (2013) and Jadhav and Sonar (2009a, b), the AHP approach was utilized for software selection because it is a flexible and powerful tool for handling both qualitative and quantitative multi-criteria problems. Furthermore, AHP procedures are applicable to individual and group decision making. However, AHP is time consuming because the mathematical calculations and the number of pairwise comparisons increase with the number of alternatives and criteria. Another problem is that decision makers need to re-evaluate the alternatives when the number of criteria or alternatives changes. Moreover, the ranking depends on the alternatives considered for evaluation; thus, adding or deleting alternatives can change the final rank (rank reversal problem).

The ELECTRE technique can handle both qualitative and quantitative criteria. This technique provides a basis for a complete order of different options. The VIKOR technique uses linear normalization. However, the values are not dependent on just criterion evaluation but also on an aggregate function to balance the distance between both the ideal solution and its opposite. TOPSIS is functionally associated with the problems of discrete alternatives. It is one of the most practical techniques for solving real-world problems. The relative advantage of TOPSIS is its ability to identify the best alternative quickly. The major weakness of TOPSIS is that it does not provide weight elicitation and consistency checking for judgments. From this viewpoint, TOPSIS alleviates the requirement of paired comparisons, and the capacity limitation may not significantly dominate the process. Hence, this method would be suitable for cases with a large number of criteria and alternatives, particularly for objective or quantitative data.

In a fuzzy-based approach, decision makers can use linguistic terms to evaluate alternatives, which improves the decision-making procedure by accommodating the vagueness and ambiguity in human decision making. However, computing the fuzzy appropriateness index values and the ranking values for all alternatives is difficult.

This study has limitations that stem from its reliance on a review of the technical literature. First, the work in this paper applies only to the OSS-LMSs found through database searches. The list was compiled in January 2014 by using several databases, including ScienceDirect, IEEE Xplore, and Web of Science. The keywords used in the search included “open source software”/“learning management system” or “open source software”/“e-learning system” among others. The list of included software is not comprehensive but represents the active and popular projects at the time of the study, which supports a manageable and valid software sample. Second, in an open source world, considerable change could be expected in the span of one-and-a-half years, including the rise and fall of projects. Moreover, more studies are required to identify the current evaluation criteria because many OSS-LMSs may be updated and/or added over the coming years.

The contributions of this paper are as follows:

- Outlined a sample of selected, active OSS-LMS packages in education, with a brief description of each.

- Specified the criteria for evaluating OSS-LMS packages on the basis of two aspects: the criteria were identified and established, followed by a crossover between them to highlight the gaps in the evaluation criteria used for OSS-LMS packages and selection problems.

- Discussed the ability of MADM/MCDM methods, as a recommended future solution, to solve the multi-criteria evaluation and selection problem for OSS-LMS packages and to select the best OSS-LMS packages.

Conclusions

Several aspects related to OSS-LMS evaluation and selection were explored and investigated. In this paper, comprehensive insights are discussed on the basis of three directions. First, available OSS-LMSs are ascertained from published papers. Second, the criteria for evaluating OSS-LMS packages are specified on the basis of two aspects: the criteria are identified and established, followed by a crossover between them to highlight the gaps in the evaluation criteria used for OSS-LMS packages and selection problems. Third, the ability of selection methods that are appropriate for the multi-criteria evaluation and selection problem of OSS-LMS packages is discussed with a view to selecting the best OSS-LMS packages. The outcomes of these directions include a list of 23 active OSS-LMSs. The open issues and challenges for evaluation and selection are highlighted. Other research directions include coverage and MADM/MCDM techniques related to the recommended solutions, which can be discussed on the basis of researchers’ opinions of the problem design and the adoption of each technique. This research direction is significant because it will help administrators and decision makers in the field of education to select the most suitable open source LMS for their needs.

References

Abdullateef BN, Elias NF, Mohamed H, Zaidan AA, Zaidan BB (2015) Study on open source learning management systems: a survey, profile, and taxonomy. J Theor Appl Inf Technol 82(1):93–105

Ahmad M, Bakar JAA, Yahya NI, Yusof N, Zulkifli AN (2011a) Effect of demographic factors on knowledge creation processes in learning management system among postgraduate students. In: Open systems (ICOS), 2011 IEEE conference on, 25–28 Sept 2011, pp 47–52. doi:10.1109/ICOS.2011.6079250

Ahmad M, Husain A, Zulkifli AN, Mohamed NFF, Wahab SZA, Saman AM, Yaakub AR (2011b) An investigation of knowledge creation process in the LearningZone learning management system amongst postgraduate students. In: Advanced information management and service (ICIPM), 2011 7th international conference on, Nov 29 2011–Dec 1 2011, pp 54–58

Ai-Lun W, Shun-Jyh W, Shu-Ling L (2011) Grey relational analysis of students’ behavior in LMS. In: Machine learning and cybernetics (ICMLC), 2011 international conference on, 10–13 July 2011, pp 597–602. doi:10.1109/ICMLC.2011.6016794

Alabool HM, Mahmood AK (2013) Trust-based service selection in public cloud computing using fuzzy modified VIKOR method. Aust J Basic Appl Sci 7(9):211–220

Al-Ajlan A, Zedan H, Soc IC (2008) Why Moodle. In: 12th IEEE international workshop on future trends of distributed computing systems, Proceedings. International workshop on future trends of distributed computing systems, pp 58–64. doi:10.1109/ftdcs.2008.22

Alier M, Casañ M, Piguillem J (2010) Moodle 2.0: shifting from a learning toolkit to a open learning platform. In: Lytras M, Ordonez De Pablos P, Avison D et al (eds) Technology enhanced learning. Quality of teaching and educational reform, vol 73. Communications in computer and information science. Springer, Berlin, pp 1–10. doi:10.1007/978-3-642-13166-0_1

Alier M, Mayol E, Casañ MJ, Piguillem J, Merriman JW, Conde MÁ, García-Peñalvo FJ, Tebben W, Severance C (2012) Clustering projects for eLearning interoperability. J Univers Comput Sci 18(1):106–122

Al-Safwani N, Hassan S, Katuk N (2014) A multiple attribute decision making for improving information security control assessment. Int J Comput Appl 89(3):19–24

Andreou AS, Tziakouris M (2007) A quality framework for developing and evaluating original software components. Inf Softw Technol 49(2):122–141. doi:10.1016/j.infsof.2006.03.007

Arh T, Blazic BJ (2007) A multi-attribute decision support model for learning management systems evaluation. In: Digital society, 2007. ICDS ‘07. First international conference on the, 2–6 Jan 2007, pp 11–11. doi:10.1109/ICDS.2007.1

Arnold S, Fisler J (2010) OLAT: the swiss open source learning management system. In: e-education, e-business, e-management, and e-learning, 2010. IC4E ‘10. International conference on, 22–24 Jan 2010, pp 632–636. doi:10.1109/IC4E.2010.76

Awang NB, Darus MYB (2011) Evaluation of an open source learning management system: claroline. Proc Soc Behav Sci 67:416–426. doi:10.1016/j.sbspro.2012.11.346

Aydin CC, Tirkes G (2010) Open source learning management systems in e-learning and Moodle. In: Education engineering (EDUCON), 2010 IEEE, 14–16 April 2010, pp 593–600. doi:10.1109/EDUCON.2010.5492522

Bevan N (1999) Quality in use: meeting user needs for quality. J Syst Softw 49(1):89–96. doi:10.1016/S0164-1212(99)00070-9

Blanc LAL, Korn WM (1992) A structured approach to the evaluation and selection of CASE tools. Paper presented at the Proceedings of the 1992 ACM/SIGAPP symposium on applied computing: technological challenges of the 1990’s, Kansas City, Missouri, USA

Bohanec M, Rajkovič V (1990) DEX: an expert system shell for decision support. Sistemica 1(1):145–157

Bri D, Coll H, García M, Lloret J, Mauri J, Zaharim A, Kolyshkin A, Hatziprokopiou M, Lazakidou A, Kalogiannakis M (2008) Analysis and comparative of virtual learning environments. In: WSEAS international conference. Proceedings. Mathematics and computers in science and engineering, vol 5. WSEAS

Broisin J, Vidal P, Meire M, Duval E (2005) Bridging the gap between learning management systems and learning object repositories: exploiting learning context information. In: Telecommunications, 2005. Advanced industrial conference on telecommunications/service assurance with partial and intermittent resources conference/e-learning on telecommunications workshop. aict/sapir/elete 2005. Proceedings, 17–20 July 2005, pp 478–483. doi:10.1109/AICT.2005.33

Caminero AC, Hernandez R, Ros S, Robles-Gomez A, Tobarra L, Ieee (2013) Choosing the right LMS: a performance evaluation of three open-source LMS. In: 2013 IEEE global engineering education conference. IEEE global engineering education conference. IEEE, New York, pp 287–294

Capiluppi A, Baravalle A, Heap N (2010) Engaging without over-powering: a case study of a FLOSS project. In: Ågerfalk P, Boldyreff C, González-Barahona J, Madey G, Noll J (eds) Open source software: New Horizons, vol 319. IFIP advances in information and communication technology. Springer, Berlin, pp 29–41. doi:10.1007/978-3-642-13244-5_3

Cavus N (2010) The evaluation of learning management systems using an artificial intelligence fuzzy logic algorithm. Adv Eng Softw 41(2):248–254. doi:10.1016/j.advengsoft.2009.07.009

Cavus N (2011) The application of a multi-attribute decision-making algorithm to learning management systems evaluation. Br J Educ Technol 42(1):19–30. doi:10.1111/j.1467-8535.2009.01033.x

Chu LF, Erlendson MJ, Sun JS, Clemenson AM, Martin P, Eng RL (2012) Information technology and its role in anaesthesia training and continuing medical education. Best Pract Res Clin Anaesthesiol 26(1):33–53. doi:10.1016/j.bpa.2012.02.002

Conde MA, García F, Rodríguez-Conde MJ, Alier M, García-Holgado A (2014) Perceived openness of learning management systems by students and teachers in education and technology courses. Comput Hum Behav 31:517–526. doi:10.1016/j.chb.2013.05.023

Corrado M, De Vito L, Ramos H, Saliga J (2012) Hardware and software platform for ADCWAN remote laboratory. Measurement 45(4):795–807. doi:10.1016/j.measurement.2011.12.003

Cui-yun M, Qiang M, Zhi-qiang M (2009) A new method for information system selection. In: Future information technology and management engineering, 2009. FITME ‘09. Second international conference on, 13–14 Dec 2009, pp 65–68. doi:10.1109/FITME.2009.22

Dehnavi MK, Fard NP (2011) Presenting a multimodal biometric model for tracking the students in virtual classes. Proc Soc Behav Sci 15:3456–3462. doi:10.1016/j.sbspro.2011.04.318

del Blanco A, Torrente J, Serrano A, Martinez-Ortiz I, Fernandez-Manjon B (2012) Deploying and debugging educational games using e-learning standards. In: Global engineering education conference (EDUCON), 2012 IEEE, 17–20 April 2012, pp 1–7. doi:10.1109/EDUCON.2012.6201186

Erguzen A, Erel S, Uzun I, Bilge HS, Unver HM (2012) KUZEM LMS: a new learning management system for online education. Energy Educ Sci Technol Pt B 4(3):1865–1878

Franch X, Carvallo JP (2002) A quality-model-based approach for describing and evaluating software packages. In: Requirements engineering, 2002. Proceedings. IEEE Joint international conference on, 2002, pp 104–111. doi:10.1109/ICRE.2002.1048512

Franch X, Carvallo JP (2003) Using quality models in software package selection. Softw IEEE 20(1):34–41. doi:10.1109/MS.2003.1159027

Gil R, Sancristobal E, Martin S, Diaz G, Castro M, Peire J, Nishihara A (2008) New learning services: customized and secured evaluation. In: Internet and web applications and services, 2008. ICIW ‘08. Third international conference on, 8–13 June 2008, pp 457–462. doi:10.1109/ICIW.2008.47

Gotel O, Scharff C, Wildenberg A, Bousso M, Bunthoeurn C, Des P, Kulkarni V, Na Ayudhya SP, Sarr C, Sunetnanta T (2008) Global perceptions on the use of WeBWorK as an online tutor for computer science. In: Frontiers in education conference, 2008. FIE 2008. 38th annual, 22–25 Oct 2008, pp T4B-5–T4B-10. doi:10.1109/FIE.2008.4720331

Goyal E, Purohit S (2010) Study the applicability of Moodle in management education. In: Technology for education (T4E), 2010 International conference on, 1–3 July 2010, pp 202–205. doi:10.1109/T4E.2010.5550094

Graf S, List B (2005) An evaluation of open source e-learning platforms stressing adaptation issues. In: Advanced learning technologies, 2005. ICALT 2005. Fifth IEEE international conference on, 5–8 July 2005, pp 163–165. doi:10.1109/ICALT.2005.54

Hailong M, Jing L, Jun H (2009) Notice of retraction: applied research on atutor. In: E-learning, e-business, enterprise information systems, and e-government, 2009. EEEE ‘09. International conference on, 5–6 Dec 2009, pp 107–110. doi:10.1109/EEEE.2009.19

Hao L, Qingtang L, Qiao W (2009) The research on permission management of the open-source Sakai platform. In: Industrial and information systems, 2009. IIS ‘09. International conference on, 24–25 April 2009, pp 66–69. doi:10.1109/IIS.2009.37

Hargis J, El Kadi M, Ryan J, Butler J, Aston P (2012) Facilitating teaching and learning: From proprietary to community source. In: Information technology based higher education and training (ITHET), 2012 International conference on, 21–23 June 2012, pp 1–8. doi:10.1109/ITHET.2012.6246067

Heller A, Englisch N, Schneider S, Hardt W (2012) Efficient course creation with templates in the OPAL learning management system. In: Interdisciplinary engineering design education conference (IEDEC), 2012 2nd, 19–19 March 2012, pp 56–59. doi:10.1109/IEDEC.2012.6186923

Heradio R, Fernández-Amorós D, Cabrerizo FJ, Herrera-Viedma E (2012) A review of quality evaluation of digital libraries based on users’ perceptions. J Inf Sci 38(3):269–283

Hwang CL, Yoon KP (1981) Multiple attribute decision making: methods and applications. Springer, New York

ISO/IEC9241-11 (1998) ISO/IEC, 9241-11 ergonomic requirements for office work with visual display terminals (VDT)s—part 11 guidance on usability

Itmazi JA, Megías MG (2005) Survey: comparison and evaluation studies of learning content management systems (unpublished)

Jadhav A, Sonar R (2009a) Analytic hierarchy process (AHP), Weighted scoring method (WSM), and hybrid knowledge based system (HKBS) for software selection: a comparative study. In: Emerging trends in engineering and technology (ICETET), 2009 2nd international conference on, 16–18 Dec 2009, pp 991–997. doi:10.1109/ICETET.2009.33

Jadhav AS, Sonar RM (2009b) Evaluating and selecting software packages: a review. Inf Softw Technol 51(3):555–563. doi:10.1016/j.infsof.2008.09.003

Jadhav AS, Sonar RM (2011) Framework for evaluation and selection of the software packages: a hybrid knowledge based system approach. J Syst Softw 84(8):1394–1407. doi:10.1016/j.jss.2011.03.034

Jianxia C, Qianqian L, Lin CYK, Huapeng C, Chunzhi W (2011) Application of innovative technologies on the e-learning system. In: Computer science & education (ICCSE), 2011 6th international conference on, 3–5 Aug 2011, pp 1033–1036. doi:10.1109/ICCSE.2011.6028812

Kavcic A (2011) Implementing content packaging standards. In: EUROCON-international conference on computer as a tool (EUROCON), 2011 IEEE. IEEE, pp 1–4

Keats DW, Beebe MA (2004) Addressing digital divide issues in a partially online masters programme in Africa: the NetTel@Africa experience. In: Advanced learning technologies, 2004. Proceedings. IEEE international conference on, 30 Aug–1 Sept 2004, pp 953–957. doi:10.1109/ICALT.2004.1357728

Kiah MLM, Haiqi A, Zaidan BB, Zaidan AA (2014) Open source EMR software: profiling, insights and hands-on analysis. Computer Methods Progr Biomed. doi:10.1016/j.cmpb.2014.07.002

Kokensparger B, Brooks D (2013) Using audio introductions to improve programming and oral skills in CS0 students. J Comput Sci Coll 29(1):68–74

Krylovas A, Zavadskas EK, Kosareva N, Dadelo S (2014) New KEMIRA method for determining criteria priority and weights in solving MCDM problem. Int J Inf Technol Decis Mak 13(06):1119–1133. doi:10.1142/S0219622014500825

Leba M, Ionica AC, Edelhauser E (2013) QFD—method for elearning systems evaluation. Proc Soc Behav Sci 83:357–361. doi:10.1016/j.sbspro.2013.06.070

Lee Y-C, Tang N-H, Sugumaran V (2013) Open source CRM software selection using the analytic hierarchy process. Inf Syst Manag 31(1):2–20. doi:10.1080/10580530.2013.854020

Ligus J, Zolotova I, Ligusova J, Karch P (2012) Cybernetic education centre: monitoring and control of learner’s e-learning study in the field of cybernetics and automation by coloured petri nets model. In: Information technology based higher education and training (ITHET), 2012 International conference on, 21–23 June 2012, pp 1–8. doi:10.1109/ITHET.2012.6246060

Lin H-Y, Hsu P-Y, Sheen G-J (2007) A fuzzy-based decision-making procedure for data warehouse system selection. Expert Syst Appl 32(3):939–953

Mekpiroon O, Tummarattananont P, Pravalpruk B, Buasroung N, Apitiwongmanit N (2008) LearnSquare: Thai open-source learning management system. In: Electrical engineering/electronics, computer, telecommunications and information technology, 2008. ECTI-CON 2008. 5th International conference on, 14–17 May 2008, pp 185–188. doi:10.1109/ECTICON.2008.4600403

Mohammadshahi Y (2013) A state-of-art survey on TQM applications using MCDM techniques. Decis Sci Lett 2(3):125–134

Mohd Bekri R, Ruhizan MY, Norazah MN, Faizal Amin Nur Y, Tajul Ashikin H (2013) Development of Malaysia skills certificate E-portfolio: a conceptual framework. Proc Soc Behav Sci 103:323–329. doi:10.1016/j.sbspro.2013.10.340

Morisio M, Tsoukias A (1997) IusWare: a methodology for the evaluation and selection of software products. Software engineering IEE Proceedings [see also software, IEE proceedings] 144 (3):162–174. doi:10.1049/ip-sen:19971350

Muhammad A, Iftikhar A, Ubaid S, Enriquez M (2011) A weighted usability measure for e-learning systems. J Am Sci 7(2):670–686

Nagi K, Suesawaluk P (2008) Research analysis of Moodle reports to gauge the level of interactivity in elearning courses at Assumption University, Thailand. In: Computer and communication engineering, 2008. ICCCE 2008. International conference on, 13–15 May 2008, pp 772–776. doi:10.1109/ICCCE.2008.4580710

Ngai EWT, Chan EWC (2005) Evaluation of knowledge management tools using AHP. Expert Syst Appl 29(4):889–899. doi:10.1016/j.eswa.2005.06.025

Oh K, Lee N, Rhew S (2003) A selection process of COTS components based on the quality of software in a special attention to internet. In: Chung C-W, Kim C-K, Kim W, Ling T-W, Song K-H (eds) Web and communication technologies and internet-related social issues—HSI 2003, vol 2713. Lecture notes in computer science, vol 2713. Springer, Berlin, pp 626–631. doi:10.1007/3-540-45036-X_66

Ossadnik W, Lange O (1999) AHP-based evaluation of AHP-software. Eur J Oper Res 118(3):578–588. doi:10.1016/S0377-2217(98)00321-X

Oztaysi B (2014) A decision model for information technology selection using AHP integrated TOPSIS-Grey: The case of content management systems. Knowledge-Based Syst 70:44–54

Pecheanu E, Stefanescu D, Dumitriu L, Segal C (2011) Methods to evaluate open source learning platforms. In: Global engineering education conference (EDUCON), 2011 IEEE, 4–6 April 2011, pp 1152–1161. doi:10.1109/EDUCON.2011.5773292

Pesquera A, Morales R, Pastor R, Ros S, Hernandez R, Sancristobal E, Castro M (2011) dotLAB: integrating remote labs in dotLRN. In: Global engineering education conference (EDUCON), 2011 IEEE, 4–6 April 2011, pp 111–117. doi:10.1109/EDUCON.2011.5773123

Pipan M, Arh T, Blazic BJ (2007) Evaluation and selection of the most applicable Learning Management System. In: Proceedings of the 7th WSEAS international conference on applied informatics and communications. World Scientific and Engineering Acad and Soc, Athens

Pishva D, Nishantha GGD, Dang HA (2010) A survey on how Blackboard is assisting educational institutions around the world and the future trends. In: Advanced communication technology (ICACT), 2010 The 12th international conference on, 7–10 Feb 2010, pp 1539–1543

Radwan NM, Senousy MB, Alaa El Din M (2014) Current trends and challenges of developing and evaluating learning management systems. Int J e-Education e-Business e-Management e-Learning 4(5):361

Rincon G, Alvarez M, Perez M, Hernandez S (2005) A discrete-event simulation and continuous software evaluation on a systemic quality model: an oil industry case. Inf Manag 42(8):1051–1066. doi:10.1016/j.im.2004.04.007

Rodríguez Ribón J, García Villalba L, Miguel Moro T, T-H Kim (2013) Solving technological isolation to build virtual learning communities. Multimed Tools Appl. doi:10.1007/s11042-013-1542-5

Romero C, López M-I, Luna J-M, Ventura S (2013) Predicting students’ final performance from participation in on-line discussion forums. Comput Educ 68:458–472. doi:10.1016/j.compedu.2013.06.009

Ros S, Hernandez R, Robles-Gomez A, Caminero AC, Tobarra L, Ruiz ES (2013) Open service-oriented platforms for personal learning environments. Internet Comput IEEE 17(4):26–31. doi:10.1109/MIC.2013.73

Rossi PG, Carletti S (2011) MAPIT: a pedagogical-relational ITS. Proc Comput Sci 3:820–826. doi:10.1016/j.procs.2010.12.135

San Cristóbal JR (2011) Multi-criteria decision-making in the selection of a renewable energy project in Spain: the Vikor method. Renew Energy 36(2):498–502. doi:10.1016/j.renene.2010.07.031

Sarrab M, Rehman OMH (2014) Empirical study of open source software selection for adoption, based on software quality characteristics. Adv Eng Softw 69:1–11. doi:10.1016/j.advengsoft.2013.12.001

Schober A, Keller L (2012) Impact factors for learner motivation in Blended Learning environments. In: Interactive collaborative learning (ICL), 2012 15th international conference on, 26–28 Sept 2012, pp 1–5. doi:10.1109/ICL.2012.6402063

Seffah A, Donyaee M, Kline RB, Padda HK (2006) Usability measurement and metrics: a consolidated model. Softw Qual J 14(2):159–178

Silva JP, Goncalves JJ, Fernandes J, Cunha MM (2013) Criteria for ERP selection using an AHP approach. In: Information systems and technologies (CISTI), 2013 8th Iberian conference on, 2013. IEEE, pp 1–6

Skellas AI, Ioannidis GS (2011) Web-design for learning primary school science using LMSs: evaluating specially designed task-oriented design using young schoolchildren. In: Interactive collaborative learning (ICL), 2011 14th international conference on, 21–23 Sept 2011, pp 313–318. doi:10.1109/ICL.2011.6059597

Srđević B, Pipan M, Srđević Z, Arh T (2012) AHP supported evaluation of LMS quality. In: International workshop on the interplay between user experience (UX) Evaluation and system development (I-UxSED 2012), p 52

Stamelos I, Vlahavas I, Refanidis I, Tsoukiàs A (2000) Knowledge based evaluation of software systems: a case study. Inf Softw Technol 42(5):333–345. doi:10.1016/S0950-5849(99)00093-2

Stoltenkamp J, Kasuto OA (2011) E-Learning change management and communication strategies within a HEI in a developing country: institutional organisational cultural change at the University of the Western Cape. Educ Inf Technol 16(1):41–54

Sung WJ, Kim JH, Sung-Yul R (2007) A quality model for open source software selection. In: Advanced language processing and web information technology, 2007. ALPIT 2007. Sixth international conference on, 22–24 Aug 2007, pp 515–519. doi:10.1109/ALPIT.2007.81

Tawfik M, Cristobal ES, Pesquera A, Gil R, Martin S, Diaz G, Peire J, Castro M, Pastor R, Ros S, Hernandez R (2012) Shareable educational architectures for remote laboratories. In: Technologies applied to electronics teaching (TAEE), 2012, 13–15 June 2012, pp 122–127. doi:10.1109/TAEE.2012.6235420

Tawfik M, Sancristobal E, Martin S, Diaz G, Peire J, Castro M (2013) Expanding the boundaries of the classroom: implementation of remote laboratories for industrial electronics disciplines. Ind Electron Mag IEEE 7(1):41–49. doi:10.1109/MIE.2012.2206872

Terbuc M (2006) Use of free/open source software in e-education. In: Power electronics and motion control conference, 2006. EPE-PEMC 2006. 12th international, Aug 30 2006–Sept 1 2006, pp 1737–1742. doi:10.1109/EPEPEMC.2006.4778656

Tick A (2009) The MILES Military English learning system pilot project. In: Computational cybernetics, 2009. ICCC 2009. IEEE international conference on, 26–29 Jan 2009, pp 57–62. doi:10.1109/ICCCYB.2009.5393930

Triantaphyllou E (2000) Multi-criteria decision making methods. In: Multi-criteria decision making methods: a comparative study. Springer, Dordrecht, pp 5–21

Triantaphyllou E, Lin C-T (1996) Development and evaluation of five fuzzy multiattribute decision-making methods. Int J Approx Reason 14(4):281–310. doi:10.1016/0888-613X(95)00119-2

Triantaphyllou E, Shu B, Sanchez SN, Ray T (1998) Multi-criteria decision making: an operations research approach. Encycl Electr Electron Eng 15:175–186

UR Rehman Z, Hussain OK, Hussain FK (2012) IAAS cloud selection using MCDM methods. In: e-Business engineering (ICEBE), 2012 IEEE ninth international conference on, 9–11 Sept 2012, pp 246–251. doi:10.1109/ICEBE.2012.47

van Rooij SW (2012) Open-source learning management systems: a predictive model for higher education. J Comput Assist Learn 28(2):114–125. doi:10.1111/j.1365-2729.2011.00422.x

Wang X-F, Wang J-Q, Deng S-Y (2013) A method to dynamic stochastic multicriteria decision making with log-normally distributed random variables. Sci World J 2013:8. doi:10.1155/2013/202085

Wannous M, Nakano H (2010) Supporting the delivery of learning-contents with laboratory activities in Sakai: work-in-progress report. In: Education engineering (EDUCON), 2010 IEEE, 14–16 April 2010, pp 165–170. doi:10.1109/EDUCON.2010.5492582

Weinbrenner S, Hoppe HU, Leal L, Montenegro M, Vargas W, Maldonado L (2010) Supporting cognitive competence development in virtual classrooms—personal learning management and evaluation using pedagogical agents. In: Advanced learning technologies (ICALT), 2010 IEEE 10th international conference on, 5–7 July 2010, pp 573–577. doi:10.1109/ICALT.2010.163

Welzel D, Hausen H-L (1993) A five step method for metric-based software evaluation—effective software metrication with respect to quality standards. Microprocess Microprogram 39(2–5):273–276. doi:10.1016/0165-6074(93)90104-S

Wen T-S, Lin HC (2007) The study of e-learning for geographic information curriculum in higher education. In: Proceedings of 6th international conference on applied computer science, Hangzhou, China, published by World Scientific and Engineering Academy and Society, pp 626–621

Whaiduzzaman M, Gani A, Anuar NB, Shiraz M, Haque MN, Haque IT (2014) Cloud service selection using multicriteria decision analysis. Sci World J 2014:459375

Williams van Rooij S (2011) Higher education sub-cultures and open source adoption. Comput Educ 57(1):1171–1183. doi:10.1016/j.compedu.2011.01.006

Wu M-C, Chen T-Y (2009) The ELECTRE multicriteria analysis approach based on intuitionistic fuzzy sets. In: Fuzzy systems, 2009. FUZZ-IEEE 2009. IEEE international conference on, 2009. IEEE, pp 1383–1388

Wu W-W, Lee Y-T (2007) Selecting knowledge management strategies by using the analytic network process. Expert Syst Appl 32(3):841–847. doi:10.1016/j.eswa.2006.01.029

Nielsen J (1994) Usability engineering. Elsevier, Amsterdam

Yazgan HR, Boran S, Goztepe K (2009) An ERP software selection process with using artificial neural network based on analytic network process approach. Expert Syst Appl 36(5):9214–9222. doi:10.1016/j.eswa.2008.12.022

Zaidan AA, Zaidan BB, Al-Haiqi A, Kiah MLM, Muzamel H, Abdulnabi M (2014) Evaluation and selection of open-source EMR software packages based on integrated AHP and TOPSIS. J Biomed Inform. doi:10.1016/j.jbi.2014.11.012

Zaidan AA, Zaidan BB, Hussain M, Haiqi A, Mat Kiah ML, Abdulnabi M (2015) Multi-criteria analysis for OS-EMR software selection problem: a comparative study. Decis Support Syst 78:15–27. doi:10.1016/j.dss.2015.07.002

Authors’ contributions

This project was originally proposed by BNA, Dr. NFE, Dr. HM, Dr. AAZ, and BBZ as a plan to review the evaluation and selection of the best open source learning management system packages. BNA was assigned to the project as part of a research grant funded by the Malaysia Ministry of Education under the Exploratory Research Grant Scheme (ERGS), project code ERGS/1/2013/ICT01/UKM/02/3. The work followed this plan: it reviewed the list of active open source learning management systems; identified and established evaluation criteria for open source learning management systems (consisting of main groups with sub-criteria); highlighted the methods, based on multi-criteria decision making, for selecting the best software; and provided insight into the issues and challenges of open source learning management systems that were consistently stated as barriers to adopting and selecting OSS in education. BNA produced the final results presented in this paper. The analysis and findings were checked by Dr. HM, AAZ, and BBZ, and the final paper was reviewed by Dr. NFE. All authors read and approved the final manuscript.

Acknowledgements

This research was funded by the Malaysia Ministry of Education under the Exploratory Research Grant Scheme (ERGS), project code ERGS/1/2013/ICT01/UKM/02/3. The authors take responsibility for the contents.

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Abdullateef, B.N., Elias, N.F., Mohamed, H. et al. An evaluation and selection problems of OSS-LMS packages. SpringerPlus 5, 248 (2016). https://doi.org/10.1186/s40064-016-1828-y

DOI: https://doi.org/10.1186/s40064-016-1828-y