Abstract

Background

Sepsis is a life-threatening clinical condition that happens when the patient’s body has an excessive reaction to an infection, and should be treated within one hour. Because of this urgency, doctors and physicians often do not have enough time to perform laboratory tests and analyses to help them forecast the consequences of the sepsis episode. In this context, machine learning can provide a fast computational prediction of sepsis severity, patient survival, and sequential organ failure by just analyzing the electronic health records of the patients. Machine learning can also be employed to understand, in a fast and non-invasive way, which features in the medical records are more predictive of sepsis severity, of patient survival, and of sequential organ failure.

Dataset and methods

In this study, we analyzed a dataset of electronic health records of 364 patients collected between 2014 and 2016. The medical record of each patient has 29 clinical features, and includes a binary value for survival, a binary value for septic shock, and a numerical value for the sequential organ failure assessment (SOFA) score. We used each of these three factors separately as an independent target, and employed several machine learning methods to predict it (binary classifiers for survival and septic shock, and regression analysis for the SOFA score). Afterwards, we used a data mining approach to identify the most important dataset features in relation to each of the three targets separately, and compared these results with the results achieved through a standard biostatistics approach.

Results and conclusions

Our results showed that machine learning can be employed efficiently to predict septic shock, SOFA score, and survival of patients diagnosed with sepsis, from their electronic health records data. Regarding clinical feature ranking, our results showed that Random Forests feature selection identified several unexpected symptoms and clinical components as relevant for septic shock, SOFA score, and survival. These discoveries can help doctors and physicians in understanding and predicting septic shock. We made the analyzed dataset and our developed software code publicly available online.

Background

Sepsis is a dangerous clinical condition that happens when the body over-reacts to an infection, and its mortality is strictly related to sepsis severity. The more severe the sepsis, the higher the risk for the patient.

Predicting the severity of a sepsis episode and whether a patient will survive it are urgent tasks, because of the riskiness of this condition. A severe sepsis episode is called septic shock. Septic shock requires the prompt use of vasopressors, and must be treated immediately to improve the survival chances of the patient [1].

In addition to sepsis severity and survival prediction, another important task for doctors and physicians is to anticipate the sequential organ failure that the patient may experience as a consequence of the sepsis episode. To diagnose the level of organ failure happening in the body, the biomedical community takes advantage of the sequential organ failure assessment (SOFA) score [1], which is based upon six different scores (respiratory, cardiovascular, hepatic, coagulation, renal and neurological systems) [1].

In this context, machine learning and artificial intelligence applied to electronic health records (EHRs) of patients diagnosed with sepsis can provide cheap, fast, non-invasive and effective methods that are able to predict the aforementioned targets (septic shock, survival, and SOFA score), and to detect the most predictive symptoms and risk factors from the features available in the electronic health records. Scientists, in fact, already took advantage of machine learning for survival or diagnosis prediction and for clinical feature ranking several times in the past [2], for example to analyze datasets of patients having heart failure [3, 4], mesothelioma [5], neuroblastoma [6–8], and breast cancer [9].

Several researchers applied computational intelligence algorithms to medical records of patients diagnosed with sepsis, too, especially for clinical decision-making purposes.

Gultepe and colleagues [10] applied machine learning to the EHRs of 741 adults diagnosed with sepsis at the University of California Davis Health System (California, USA) to predict lactate levels and mortality risk of the patients. Tsoukalas et al. [11] employed several pattern recognition algorithms to analyze medical record data of 1,492 patients diagnosed with sepsis at the same health centre. Their data-derived antibiotic administration policies improved the conditions of patients. Taylor and colleagues [12] analyzed medical records of a cohort of approximately 260 thousand individuals from three hospitals in the USA. They used machine learning to predict in-hospital mortality of patients diagnosed with sepsis, and to show the superior results of machine learning over traditional univariate biostatistics techniques. Horng et al. [13] applied computational intelligence techniques to medical records of 230,936 patient visits containing heterogeneous data: free text, vital signs, and demographic information. The dataset was collected at the Beth Israel Deaconess Medical Center (BIDMC) of Boston (Massachusetts, USA). Shimabukuro and colleagues [14] applied machine learning techniques to clinical records of 142 patients with severe sepsis from University of California San Francisco Medical Center (California, USA) to predict the in-hospital length of stay and mortality rate. Burdick et al. [15] used several computational intelligence methods on medical records of 2,296 sepsis-related patients, provided by Cabell Huntington Hospital (Huntington, West Virginia, USA). Their goal was to predict patients’ mortality and in-hospital length of stay. Calvert and colleagues [16] merged several datasets of clinical records of sepsis-related patients to create a large cohort of approximately 500 thousand individuals. Then they used machine learning to forecast how likely high-risk patients are to have a sepsis episode. Barton et al. [17], lastly, re-analyzed two datasets previously exploited [13, 14] to predict sepsis up to 48 hours in advance.

Scientists employed machine learning for the prediction of sepsis in infants in the neonatal intensive care unit (NICU), as well. In 2014, Mani and colleagues [18] applied nine machine learning methods to the data of 299 infants admitted to the neonatal intensive care unit in the Monroe Carell Junior Children’s Hospital at Vanderbilt (Nashville, Tennessee, USA). Barton et al. [19] took advantage of data mining classifiers to analyze the EHRs of 11,127 neonatal patients collected at the University of California San Francisco Medical Center (California, USA). More recently, Masino and his team [20] applied computational intelligence classifiers to the data of infants admitted at the neonatal intensive care unit of the Children’s Hospital of Philadelphia (Pennsylvania, USA).

To recap, four studies applied machine learning to minimal electronic health records to diagnose sepsis or predict survival of patients [10, 11, 21, 22], while six other studies applied it to complete electronic health records for the same goals [12–14, 16, 17, 19]. The study of Burdick and colleagues [15] even reported an observed decrease in mortality at the hospital where the computational intelligence methods were applied to recognize sepsis. Only two articles, additionally, add a feature ranking phase to the binary classification: Mani et al. [18] identified hematocrit or packed cell volume, chorioamnionitis, and respiratory rate as the most predictive variables, while Masino and coauthors [20] highlighted central venous line, mean arterial pressure, respiratory rate difference, and systolic blood pressure.

Our present study fits in the latter category: we use several machine learning methods not only to predict survival, SOFA score, and septic shock, but also to detect the most relevant and predictive variables from the electronic health records. Moreover, we also perform a feature ranking through traditional biostatistics rates, and compare the results obtained with these two different approaches. Differently from all the studies mentioned earlier, we do not focus only on predicting survival and diagnosing sepsis, but we also make computational predictions on the SOFA score, which means predicting how many organs will fail, and how severely, because of the septic episode.

Regarding scientific challenges and competitions, in 2019 PhysioNet [23, 24], an online platform for physiologic data sharing, launched an online scientific challenge for the prediction of early sepsis in medical records [25].

On the business side, the San Francisco bay area startup company Dascena Inc. recently released InSight, a machine learning tool able to computationally predict sepsis in EHR data [26]. Desautels et al. [21] applied InSight to predict sepsis in the medical records of the Multiparameter Intelligent Monitoring in Intensive Care (MIMIC)-III dataset [27].

In the present study, we analyzed a dataset of electronic health records of patients diagnosed with sepsis [28]: each patient profile has 29 clinical features, including a binary value for survival, a binary value for septic shock, and a numerical value for the sequential organ failure assessment (SOFA) score. We separately used each of these three features as an independent target, and employed several machine learning methods to predict it with high accuracy and precision. Afterwards, we employed machine learning to detect the most important features of the dataset for the three targets separately, and compared its results with the results obtained through traditional biostatistics univariate techniques.

Dataset

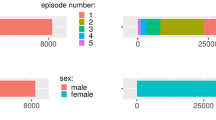

The original dataset contains electronic health records (EHRs) of 29 features for 364 patients, and was first analyzed by Yunus and colleagues to investigate the role of procalcitonin in sepsis [29]. These 364 patients with sepsis diagnosis entered the general medical ward and intensive care unit between September 2014 and December 2016 at the Methodist Medical Center and Proctor Hospital (today called UnityPoint Health – Methodist ∣ Proctor) in Peoria, Illinois, USA [29]. The group of patients includes 189 men and 175 women, aged 20–86 years old [29, 30].

Each patient stayed at the hospital for a period between 1 and 48 days, and her/his dataset profile represents the corresponding clinical record at the moment of discharge or death. Since the maximum observation window was 48 days, we consider our binary predictions in reference to the same time frame.

The dataset collectors defined septic shock “as a condition that requires the use of vasopressors in order to maintain a mean arterial pressure (MAP) of 65 mm Hg or above, and a persistent lactate greater than 2 mmol/L in spite of adequate fluid resuscitation” [29, 30].

We report the quantitative characteristics of the dataset (number and percentage of individuals for each binary feature condition; median and mean for each numeric or category feature) in Table 1, and the interpretation details (meaning, measurement unit, and value range in the dataset) in Table 2. More information about the analyzed dataset can be found in the original dataset curators’ publication [29, 30].

We derived the survival feature from the outcome feature of the original dataset (Supplementary information) [31]. The extent of the infection feature can have 3 values that represent bacteremia, focal infection, or both. The urine output 24 hours feature can have 3 values that represent >500 mL, [200,500] mL, or <200 mL.

Regarding the dataset imbalance, considering septic shock as the target, there are 297 individuals without septic shock (having value 0 for the vasopressors feature), corresponding to 81.59% of the total size, and 67 individuals with septic shock (having value 1 for the vasopressors feature), corresponding to 18.41% of the total size.

When we consider survival as the target, instead, we observe 48 deceased patients (class 0, corresponding to 13.19% of all the individuals) and 316 survived patients (class 1, corresponding to 86.81% of all the individuals).

The dataset with septic shock as target is therefore negatively imbalanced, and the dataset with survival as target is positively imbalanced.
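The percentages above follow directly from the class counts. As a minimal check, written in Python purely for illustration (the study's own pipeline is in R), the counts reported in this section can be turned back into percentages; the dictionary layout and function name are our own choices.

```python
# Class counts as reported in the Dataset section (364 patients in total).
TOTAL = 364
counts = {
    "septic shock": {"negative (0)": 297, "positive (1)": 67},
    "survival": {"negative (0)": 48, "positive (1)": 316},
}


def class_percentages(class_counts, total):
    """Return the percentage of each class, rounded to two decimals."""
    return {label: round(100 * n / total, 2) for label, n in class_counts.items()}


for target, class_counts in counts.items():
    # Every target must account for all the patients.
    assert sum(class_counts.values()) == TOTAL
    majority = max(class_counts, key=class_counts.get)
    print(target, class_percentages(class_counts, TOTAL), "majority:", majority)
```

The majority class is the negative one for septic shock and the positive one for survival, which is what "negatively" and "positively" imbalanced refer to here.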

Methods

We implemented our computational pipeline in the open-license, free R programming language, using common machine learning packages (randomForest, caret, e1071, keras, ROSE, DMwR, mltools, DescTools). We also released all our code scripts publicly online (“Availability of data and materials”).

As described in subsection S7.2, we can recap the computation pipeline of the analysis with the following steps:

1. construction of the dataset (“Dataset” section);

2. definition of the three tasks:

   a. the binary classification problem of predicting septic shock (vasopressors);

   b. the regression problem of predicting SOFA score;

   c. the binary classification problem of predicting survival;

   based on a subset of the available variables selected as input variables (Table 2);

3. for each of these three tasks (septic shock, survival, and SOFA score) and for each of the algorithms (DT, RF, SVM (linear), SVM (kernel), NN, NB, k-NN, LR, and DL, noting that NB and LR can be used just for classification problems), we built a model using the MS strategy (“Methods” section), where we set the number of folds k=10. During the MS, we searched the hyper-parameters using the following ranges:

   a. DT: \(\mathcal {H} = \{ d \} \in \{ 2, \, 4, \, 6, \, 8, \, 10, \, 12, \, 14 \}\);

   b. RF: we set nt=1000 since increasing it does not increase the accuracy;

   c. SVM (linear): \(\mathcal {H} = \{ C \} \in \mathcal {R}\);

   d. NB: we used kernel density estimation, with no Laplace correction and no adjustment (nb algorithm of the R library caret);

   e. k-NN: \(\mathcal {H} = \{ k \} \in \{ 1,3,5,11 \}\);

   f. LR: \(\mathcal {H} = \{ \lambda \} \in \mathcal {R}\);

   g. DL: \(\mathcal {H} = \{ l_{1}, l_{2}, l_{3}, wd \} \in \{ 2,4,8,16,32 \} \times \{ 2,4,8,16,32 \} \times \{ 2,4,8,16,32 \} \times \{.001,.01,.1,1 \} \);

   h. SVM (kernel): \(\mathcal {H} = \{ C, \gamma \} \in \mathcal {R} \times \mathcal {R}\);

   i. NN: \(\mathcal {H} = \{ n_{h}, \, p_{d}, \, p_{b}, \, r_{l}, \, \rho, \, r_{d} \} \in \{ 5, \, 10, \, 20, \, 40, \, 80, \, 160 \} \times \{ 0, \, 0.001, \, 0.01, \, 0.1 \} \times \{ 0.1, \, 1 \} \times \{ 0.001,0.01,0.1,1 \} \times \{ 0.9,0.09 \} \times \{ 0.001,0.01,0.1,1 \}\) and as activation function we used the rectified linear unit (ReLU) [32];

   where \(\mathcal {R} = \{ 0.0001, \, 0.0005, \, 0.001, \, 0.005, \, 0.01, \, 0.05, \, 0.1, \, 0.5, \, 1, \, 5, \, 10, \, 50 \}\);

4. for each of the constructed models, we reported the results using the EE strategy and the previously introduced metrics (“Methods” section), together with the standard deviation, where we set nr=100;

5. for each of the tasks, we reported the ranking of the features selected by the two feature ranking procedures (MDI and MDA, “Methods” section), together with the mode of the ranking position, where we set pFR=0.7 and nFR=100, and aggregated through Borda’s method [33].

We report and discuss the results in the next sections.
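To give a sense of the size of the model selection search, the hyper-parameter ranges and the k=10 folds listed in the steps above can be enumerated as follows. This is only a sketch in Python (the actual pipeline is in R): the dictionary keys mirror the symbols in the text, while the function names and the contiguous, unshuffled fold split are our own simplifying assumptions and not necessarily what the original code does.

```python
from itertools import product

# Shared candidate set R, copied from the pipeline description.
R = [0.0001, 0.0005, 0.001, 0.005, 0.01, 0.05, 0.1, 0.5, 1, 5, 10, 50]
LAYER = [2, 4, 8, 16, 32]
RATES = [0.001, 0.01, 0.1, 1]

# Hyper-parameter grids, copied from the pipeline description.
grids = {
    "DT": {"d": [2, 4, 6, 8, 10, 12, 14]},
    "SVM (linear)": {"C": R},
    "SVM (kernel)": {"C": R, "gamma": R},
    "k-NN": {"k": [1, 3, 5, 11]},
    "LR": {"lambda": R},
    "DL": {"l1": LAYER, "l2": LAYER, "l3": LAYER, "wd": RATES},
    "NN": {"n_h": [5, 10, 20, 40, 80, 160], "p_d": [0, 0.001, 0.01, 0.1],
           "p_b": [0.1, 1], "r_l": RATES, "rho": [0.9, 0.09], "r_d": RATES},
}


def configurations(grid):
    """List every hyper-parameter combination of one grid as a dict."""
    names = list(grid)
    return [dict(zip(names, values))
            for values in product(*(grid[name] for name in names))]


def k_fold_indices(n_samples, k):
    """Split range(n_samples) into k contiguous folds of near-equal size.

    Assumption: no shuffling or stratification, to keep the sketch short.
    """
    base, extra = divmod(n_samples, k)
    folds, start = [], 0
    for i in range(k):
        size = base + (1 if i < extra else 0)
        folds.append(list(range(start, start + size)))
        start += size
    return folds


sizes = {name: len(configurations(grid)) for name, grid in grids.items()}
folds = k_fold_indices(364, 10)
print(sizes)
print([len(fold) for fold in folds])
```

Each configuration is evaluated once per fold, so the cost of the search grows with the product of the grid size and k; this is why the NN and DL grids dominate the running time.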

Results

In this section we show the results of applying the classification and regression methods (“Methods” section) on the described dataset (“Dataset” section).

Target predictions

In this section, we describe the results obtained for the binary prediction of septic shock, for the SOFA score regression estimation, and for the binary prediction of survival in the ICU. For the two binary classifications (septic shock prediction and survival prediction), we used τ=0.5 as cut-off threshold for the confusion matrices. We chose this value because it corresponds to the value 0 for the Matthews correlation coefficient (MCC) [34], which means the predicted value is not better than random prediction.

We focused on and ordered our results by the scores of the MCC, because this rate provides a high score only if the classifier was able to correctly predict the majority of the positive data instances and the majority of the negative data instances, regardless of the dataset imbalance [35, 36].

In the interest of providing fuller information, we also reported the values of ROC AUCs [37] and PR AUCs [38], which are computed considering all the possible confusion matrix thresholds.
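Unlike the confusion matrix rates, the ROC AUC does not depend on a single cut-off. One equivalent way to compute it, sketched below in Python for illustration, is as the fraction of positive-negative pairs in which the positive instance receives the higher score, with ties counted as one half. The function name and the toy scores are hypothetical and are not taken from our results.

```python
def roc_auc(y_true, y_score):
    """ROC AUC as the probability that a positive outranks a negative."""
    positives = [s for t, s in zip(y_true, y_score) if t == 1]
    negatives = [s for t, s in zip(y_true, y_score) if t == 0]
    if not positives or not negatives:
        raise ValueError("both classes are needed to compute the ROC AUC")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a win
    return wins / (len(positives) * len(negatives))


# Hypothetical labels and predicted scores.
y_true = [0, 0, 1, 1]
y_score = [0.1, 0.4, 0.35, 0.8]
print(roc_auc(y_true, y_score))
```

Here three of the four positive-negative pairs are ordered correctly, so the ROC AUC is 0.75; a classifier that gives every instance the same score obtains 0.5.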

Septic shock prediction

We report the performance of the learned models for the septic shock (vasopressors) prediction with the different methods evaluated with the different metrics in Table 3, ranked by the MCC.

Our methods were able to obtain high prediction results and showed the ability of machine learning to predict septic shock (positive data instances), but showed low ability to identify patients without septic shock (negative data instances). In particular, Random Forests and the Multi-Layer Perceptron Neural Network outperformed the other methods (Table 3), by achieving average MCC equal to +0.32 and +0.31, respectively. All the classifiers obtained high scores for the true positive rate, accuracy, and F1 score, but achieved low scores on the true negative rates (Table 3). Decision Tree, kernel SVM, Logistic Regression, Deep Learning, and Naive Bayes were the only methods which predicted correctly most of the negative instances, by achieving average specificity equal to 0.50.

Regarding ROC AUC, it is interesting to notice that the standard deviations for all the methods are high (from 0.20 to 0.31; Table 3).

To check the predictive efficiency of the algorithms in making positive calls, we reported the positive predictive value (PPV, or precision). From a clinical perspective, the PPV represents the likelihood that patients with a positive screening test truly have septic shock [39]. The PPV results show that Random Forests achieved the top performance among the methods tried, but was unable to correctly make the majority of the positive calls (PPV=0.47 in Table 3). This result means that, for each patient predicted to have septic shock, we cannot be sure that she/he will actually have a septic shock: there is an average top probability of 47% that she/he will have it, which leaves large room for uncertainty.

From a clinical perspective, the negative predictive value (NPV) represents the probability that a patient who got a negative screening test will truly not suffer from a septic shock [39]. Regarding this ratio of correct negative predictions, all the methods achieved good results, with Logistic Regression outperforming the other ones (NPV=0.90 in Table 3). This result means that, for each patient predicted not to have septic shock, we can be 90% confident that he/she will not have septic shock, which leaves small room for uncertainty.
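The two predictive values are simple ratios over the confusion matrix. The sketch below, in Python for illustration, computes them for a hypothetical confusion matrix whose counts we chose only so that the ratios resemble the values discussed above; the counts are not taken from Table 3.

```python
def ppv(tp, fp):
    """Positive predictive value: share of positive calls that are correct."""
    return tp / (tp + fp) if (tp + fp) else float("nan")


def npv(tn, fn):
    """Negative predictive value: share of negative calls that are correct."""
    return tn / (tn + fn) if (tn + fn) else float("nan")


# Hypothetical test-set counts, chosen for illustration only.
tp, fp, tn, fn = 8, 9, 45, 5

print("PPV:", round(ppv(tp, fp), 2))  # 8 correct out of 17 positive calls
print("NPV:", round(npv(tn, fn), 2))  # 45 correct out of 50 negative calls
```

With these counts, fewer than half of the positive calls are correct while nine negative calls out of ten are, which is the asymmetry described in the text.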

SOFA score prediction

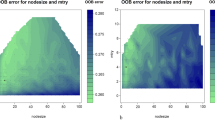

We report the performance of the learned models for the SOFA score prediction with the different methods evaluated with the different metrics in Table 4, ordered by the coefficient of determination R2, and the SOFA score scatterplot of the actual and predicted values of the test sets in Fig. 1. We used R2 for the method sorting because this rate incorporates the SOFA score distribution.

Our results show that machine learning can predict SOFA score with low error rates (Table 4). Differently from the septic shock prediction, here the Deep Learning model was the top method, outperforming the other methods in R2 and MAE. The linear SVM, the Multi-Layer Perceptron, the kernel SVM, and Random Forests obtained similar results, close to those of the top method for this task. It is interesting to notice that linear SVM was the top method when its predictions were measured through RMSE and MSE, but not in the other cases.
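Different regression metrics can rank the same models differently, because squared-error metrics penalize a few large errors more than many small ones. The Python sketch below illustrates this with two hypothetical sets of predictions on hypothetical SOFA scores; none of these numbers come from Table 4, and the function name is our own.

```python
from math import sqrt


def regression_metrics(y_true, y_pred):
    """Return MSE, RMSE, MAE and R2 for paired actual and predicted values."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    ss_res = sum(e * e for e in errors)
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    mse = ss_res / n
    return {
        "MSE": mse,
        "RMSE": sqrt(mse),
        "MAE": sum(abs(e) for e in errors) / n,
        "R2": 1 - ss_res / ss_tot,
    }


# Hypothetical SOFA scores and predictions from two hypothetical models.
actual = [2, 4, 6, 8]
model_a = [3, 5, 7, 9]      # every prediction is off by one point
model_b = [2, 4, 6, 10.5]   # three exact predictions, one large miss

metrics_a = regression_metrics(actual, model_a)
metrics_b = regression_metrics(actual, model_b)
print(metrics_a)
print(metrics_b)
```

In this toy case the second model has the lower MAE, while the first one has the lower MSE and RMSE and the higher R2, so the choice of the sorting metric matters.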

Survival prediction

We report the performance of the learned models for the survival prediction with the different methods evaluated with the different metrics in Table 5, ranked by the MCC.

Our results show that it is possible to use machine learning to predict the survival of sepsis patients, with high accuracy (Table 5). In this case, the MLP neural network outperformed the other classifiers by obtaining higher scores for MCC, F1 score, and true positive rate. All the methods obtained high results on the true negative rates, but only the MLP neural network and Random Forests were able to predict most of the positive data instances, obtaining average sensitivity equal to 0.75 and 0.58, respectively.

Regarding correct positive predictions (PPV), all the methods were able to correctly make positive predictions (Table 5), while they obtained low results for the ratio of correct negative predictions (NPV).

Contrary to what happened previously for septic shock (“Septic shock prediction” section), here we can be confident that the patients predicted to survive will actually survive (top PPV=0.94 for MLP). However, the low NPV values state that the probability of death of a patient predicted as “non survival” is just 0.31 on average for the best method (Naive Bayes), making our predictions less trustworthy in this case.

Feature rankings

In this section, we present the feature ranking results for the three targets (septic shock, SOFA score, and survival), obtained through Random Forests and through traditional univariate biostatistics approaches.
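The Random Forests rankings reported in this section are aggregations of many individual rankings through Borda's method (“Methods” section). A minimal Python sketch of a Borda count is given below for illustration. The three input rankings are hypothetical, the feature names are used only as labels, and the alphabetical tie-break is our own assumption.

```python
def borda_aggregate(rankings):
    """Aggregate rankings (best item first) with a Borda count.

    An item in position p of a ranking of n items receives n - 1 - p points;
    the items are then sorted by their total points.
    """
    items = rankings[0]
    n = len(items)
    points = {item: 0 for item in items}
    for ranking in rankings:
        if set(ranking) != set(items):
            raise ValueError("all rankings must contain the same items")
        for position, item in enumerate(ranking):
            points[item] += n - 1 - position
    # Highest total first; ties are broken alphabetically (assumption).
    return sorted(points, key=lambda item: (-points[item], item)), points


# Hypothetical rankings, for example from three repetitions of a procedure.
rankings = [
    ["creatinine", "Glasgow coma scale", "mean arterial pressure", "procalcitonin"],
    ["Glasgow coma scale", "creatinine", "procalcitonin", "mean arterial pressure"],
    ["creatinine", "mean arterial pressure", "Glasgow coma scale", "procalcitonin"],
]

aggregated, points = borda_aggregate(rankings)
print(aggregated)
print(points)
```

A feature that is consistently near the top accumulates more points than one that is first in a single ranking and low in the others, which makes the aggregated ranking less sensitive to a single repetition.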

For complete information, we reported the feature rankings measured through Random Forests as barcharts in the Supplementary Information (Figure S3, Figure S4, and Figure S2).

Septic shock feature ranking

We reported the feature ranking for the septic shock obtained by the two feature selections performed through Random Forests (Methods) in Table 6, and the feature rankings obtained through traditional biostatistics coefficients (Pearson correlation coefficient, Student’s t-test, p-values) in Table 7.

Random Forests identified creatinine, Glasgow coma scale, mean arterial pressure, and initial procalcitonin as the most important features to identify septic shock (Table 6); these features also reached top positions in the traditional univariate biostatistics rankings (Table 7). The Student’s t-tests and p-values identified age as the most important feature, which instead obtained the 10th position for the Pearson correlation coefficient (Table 7) and the 14th position for the Random Forests ranking (Table 6).

Overall, with the significant exception of age, the Random Forests ranking and the traditional univariate biostatistics rankings showed similar positions for the feature importance, confirming also the importance of the Glasgow coma scale value and the blood creatinine levels to recognize patients having septic shock.
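A univariate ranking such as the one based on the Pearson correlation coefficient scores each feature against the target in isolation. The Python sketch below illustrates the idea on hypothetical toy data with placeholder feature names; sorting by the absolute value of the coefficient is our own assumption about how such a ranking can be built.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


# Hypothetical binary target and hypothetical features.
target = [0, 0, 0, 1, 1, 1]
features = {
    "feature_a": [1, 2, 3, 4, 5, 6],  # increases with the target
    "feature_b": [6, 5, 4, 3, 2, 1],  # decreases with the target
    "feature_c": [1, 2, 1, 2, 1, 2],  # weakly related to the target
}

coefficients = {name: pearson(values, target) for name, values in features.items()}
# Rank by strength of association, regardless of sign; ties by name.
ranking = sorted(coefficients, key=lambda name: (-abs(coefficients[name]), name))
print(coefficients)
print(ranking)
```

Because each feature is scored on its own, this kind of ranking cannot capture interactions between features, which is one reason it can disagree with a multivariate ranking such as the Random Forests one.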

SOFA score feature ranking

We reported the feature ranking for SOFA score obtained by the two feature selections performed through Random Forests (Methods) in Table 8, and the feature rankings obtained through traditional biostatistics coefficients (Pearson correlation coefficient, Student’s t-test, p-values) in Table 9.

Random Forests selected Glasgow coma scale, creatinine, and platelets as the most important features for SOFA score (Table 8). While all the biostatistics rates recognized Glasgow coma scale and platelets as relevant features too (Table 9), the Student’s t-test and the p-values ranked creatinine as the 22nd most important feature.

Similar to septic shock, the biostatistics techniques ranked age as a top feature, while Random Forests put it in the 11th position of its ranking. All the other features obtained similar rank positions in all the rankings.
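One of the two Random Forests procedures, the mean decrease in accuracy (MDA), measures how much the predictive performance drops when the values of one feature are randomly permuted. The Python sketch below shows this idea in a simplified form: it uses a hand-written stand-in model instead of a Random Forest and permutes the features on a single toy dataset, whereas Random Forests implementations typically use the out-of-bag samples of each tree. All names and data are hypothetical.

```python
import random


def accuracy(model, rows, labels):
    """Fraction of rows for which the model returns the true label."""
    return sum(model(row) == label for row, label in zip(rows, labels)) / len(rows)


def permutation_importance(model, rows, labels, n_repeats=50, seed=0):
    """Mean accuracy drop observed after shuffling each feature column."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in rows]
            rng.shuffle(column)
            # Rebuild the rows with only column j replaced by its shuffle.
            permuted = [row[:j] + [value] + row[j + 1:]
                        for row, value in zip(rows, column)]
            drops.append(baseline - accuracy(model, permuted, labels))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical data: feature 0 determines the label, feature 1 is noise.
rows = [[float(i), float(i % 3)] for i in range(20)]
labels = [1 if row[0] >= 10 else 0 for row in rows]


def toy_model(row):
    """Stand-in for a fitted classifier: it thresholds feature 0 only."""
    return 1 if row[0] >= 10 else 0


importances = permutation_importance(toy_model, rows, labels)
print(importances)
```

Shuffling the noise feature leaves the accuracy unchanged, so its importance is exactly zero, while shuffling the informative feature causes a large drop. Features are then ranked by these drops.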

Survival feature ranking

We reported the feature ranking for survival obtained by the two feature selections performed through Random Forests (Methods) in Table 10, and the feature rankings obtained through traditional biostatistics coefficients (Pearson correlation coefficient, Student’s t-test, p-values) in Table 11.

The feature ranking results obtained for the survival target showed the largest divergence between Random Forests and traditional biostatistics methods among the three target feature rankings.

Random Forests identified platelets as the most important feature (Table 10), which obtained a top position also in the Pearson correlation coefficient ranking, but not in the ranking of the Student’s t-test and the ranking of the p-values (Table 11). Random Forests then selected creatinine and respiration (PaO2) as the most relevant features for survival, but these features were ranked in low positions by the traditional biostatistics techniques (Table 11).

Another difference regarded chronic kidney disease (CKD) without dialysis. While the Student’s t-test, p-values, and PCC ranked this feature in mid-high positions (7th, 7th, and 11th position, respectively) (Table 11), Random Forests considered CKD without dialysis as the second least important feature (Table 10).

All the ranking methods, in this case, ranked age as a top feature.

Discussion

Our results showed that machine learning can be employed efficiently to predict septic shock, SOFA score, and survival of patients diagnosed with sepsis, from their electronic health records data. In particular, Random Forests was the top method in correctly classifying septic shock patients, even if no method achieved good prediction performance in correctly identifying patients without septic shock (“Septic shock prediction” section). The Deep Learning model outperformed the other methods in the SOFA score regression (“SOFA score prediction” section). Regarding the survival prediction, the Multi-Layer Perceptron Neural Network achieved the top prediction score among all the classifiers (“Survival prediction” section).

This difference in the top performing methods might be due to the different kinds and different ratios of the dataset targets (negatively imbalanced for septic shock, regression for SOFA score, and positively imbalanced for survival, “Dataset” section), and the different data processing made by each algorithm.

Regarding feature ranking, Random Forests feature selection identified several unexpected symptoms and clinical components as relevant for septic shock, SOFA score, and survival.

For septic shock, Random Forests selected creatinine as a top feature, differently from the traditional univariate biostatistics approaches (“Septic shock feature ranking” section). Recent scientific discoveries confirm this trend: the level of creatinine in the blood is often used as a biomarker for sepsis [42], especially in presence of a serious kidney injury [43].

Random Forests also ranked initial procalcitonin (PCT) as a top feature, confirming the relationship between this protein and septic shock found by Yunus and colleagues [29].

About the SOFA score prediction, the ranking positions of the Random Forests feature selection were consistent with the ranking positions of the traditional univariate biostatistics analysis. Also in this case, Random Forests ranked initial procalcitonin (PCT) as a mid-top feature, confirming the weak positive relationship between this protein and the SOFA score found by Yunus and colleagues [29].

On the contrary, for survival, Random Forests labeled as important several features that were not ranked in top positions by the Student’s t-test, p-values, and Pearson correlation coefficient rankings. Differently from the univariate biostatistics analysis, Random Forests, in fact, identified creatinine and respiration (PaO2) as top components in the classification of survived sepsis patients versus deceased sepsis patients. Kang et al. [44] recently confirmed the strong association between serum creatinine level and mortality. Regarding respiration (PaO2), Santana and colleagues [45] recently showed how the SaO2/FiO2 ratio (a rate strongly correlated to the PaO2/FiO2 ratio) is associated with mortality from sepsis. This aspect suggests the need for additional studies and analyses in this direction.

Additionally, the Random Forests feature ranking differed from the biostatistics rankings in the last ranking positions. Random Forests, in fact, considered having chronic kidney disease (CKD) without dialysis as a scarcely important component for survival, while the traditional biostatistics rates ranked that element in top positions. Maizel and colleagues [46] confirmed our finding in 2013 by stating: “Non-dialysis CKD appears to be an independent risk factor for death after septic shock in ICU patients” [46].

Conclusions

Sepsis is still a widespread lethal condition nowadays, and the identification of its severity can require a lot of effort. In this context, machine learning can provide effective tools to speed up the prediction of an upcoming septic shock, the prediction of the sequential organ failure, and the prediction of survival or mortality of the patient by processing large datasets in a few minutes.

In this manuscript, we presented a computational system for the prediction of these three aspects, the feature ranking of their clinical features, and the interpretation of the results we obtained. Our system consists of classifiers able to read the electronic health records of the patients diagnosed with sepsis, and to computationally predict the three targets for each of them (septic shock, SOFA score, survival) in a few minutes. Additionally, our computational intelligence system can predict the most important input features of the electronic health records of each of the three targets, again in a few minutes. We then compared the feature ranking results obtained through machine learning with the feature rankings obtained with traditional univariate biostatistics coefficients. The machine learning feature rankings highlighted the importance of some features that traditional biostatistics failed to underline. We found confirmation of the importance of these factors in the biomedical literature, which suggests the need of additional investigation on these aspects for the future.

Our discoveries can have strong implications on biomedical research and clinical practice.

First, medical doctors and clinicians can take advantage of our methods to predict survival, septic shock, and SOFA scores from any available electronic health record having the same variables as the dataset used in this study. This prediction can help doctors understand the chances of survival and the risk of septic shock for each patient, and how many organs are at risk of failing because of the septic episode. Doctors could use this information to decide the following steps of the therapy.

Additionally, the results of the machine learning feature ranking suggest additional, more thorough investigations on some factors of the electronic health records that would have gone unnoticed otherwise: creatinine for septic shock, procalcitonin for SOFA score, and respiration (PaO2) for survival. We believe these discoveries could orient the scientific debate regarding sepsis, and encourage medical doctors to pay more attention to these three variables in the clinical records.

Regarding limitations, we have to report that our machine learning classifiers were unable to efficiently predict patients without septic shock among the dataset, and therefore obtained low true negative rates. We believe this drawback is due to the imbalance of the dataset, that contains 81.59% positive data instances (patients with septic shock), and only 18.41% negative data instances (patients without septic shock). In the future, we aim at exploring several over-sampling techniques to deal with this data imbalance problem [47].
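As an indication of what the simplest over-sampling technique looks like, the Python sketch below performs random over-sampling: it resamples the minority class with replacement until both classes have the same size. It is only an illustration on a hypothetical label vector with an 80/20 split, not the technique we plan to adopt, and the function name is our own. In a cross-validation setting, over-sampling would normally be applied to the training folds only, so that duplicated instances do not leak into the test fold.

```python
import random


def random_oversample(labels, seed=0):
    """Return sample indices in which every class matches the largest class."""
    rng = random.Random(seed)
    by_class = {}
    for index, label in enumerate(labels):
        by_class.setdefault(label, []).append(index)
    target_size = max(len(indices) for indices in by_class.values())
    resampled = []
    for indices in by_class.values():
        resampled.extend(indices)
        # Draw the missing samples with replacement from the same class.
        resampled.extend(rng.choices(indices, k=target_size - len(indices)))
    return resampled


# Hypothetical imbalanced labels: 80 majority and 20 minority instances.
labels = ["majority"] * 80 + ["minority"] * 20
indices = random_oversample(labels)
balanced = [labels[i] for i in indices]
print(balanced.count("majority"), balanced.count("minority"))
```

Every original instance is kept, and only the minority class gains duplicated instances.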

Another limitation of our study was the use of a single dataset: an alternative dataset on which to confirm our findings would make our results more robust. We looked for alternative datasets with the same clinical features to use as validation cohorts, but unfortunately could not find any. Because of this issue and of the small size of our dataset (364 patients), we cannot confirm that our approach is generalizable to other cohorts.

In the future, we plan to employ alternative methods for feature ranking, to compare their results with the results we obtained through Random Forests. We also plan to employ similarity measures to analyze the semantic similarity between patients [48].
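One possible way to compare two feature rankings is a rank correlation coefficient. The sketch below computes Spearman's rho for two rankings without ties, using the formula rho = 1 - 6 * sum(d^2) / (n * (n^2 - 1)), where d is the difference between the positions of a feature in the two rankings. This choice of measure is an assumption made for illustration, not a method stated in this study, and the two rankings are invented examples that only reuse feature names mentioned above.

```python
def spearman_rho(ranking_a, ranking_b):
    """Spearman rank correlation between two rankings of the same
    features (most important first, no ties)."""
    if len(set(ranking_a)) != len(ranking_a) or set(ranking_a) != set(ranking_b):
        raise ValueError("rankings must contain the same features, each once")
    n = len(ranking_a)
    if n < 2:
        raise ValueError("at least two features are required")

    # Position of every feature in the second ranking.
    position_b = {feature: i for i, feature in enumerate(ranking_b)}

    # Sum of squared differences between the positions in the two rankings.
    d_squared = sum((i - position_b[feature]) ** 2
                    for i, feature in enumerate(ranking_a))
    return 1 - 6 * d_squared / (n * (n * n - 1))


# Hypothetical rankings, for illustration only (not results of this study).
random_forests_ranking = ["creatinine", "procalcitonin", "PaO2", "age"]
alternative_ranking = ["procalcitonin", "creatinine", "PaO2", "age"]

rho = spearman_rho(random_forests_ranking, alternative_ranking)
print(round(rho, 3))
```

A value close to +1 indicates that the two methods order the features almost identically, while a value close to -1 indicates opposite orderings.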

Abbreviations

- AUC: Area under the curve
- BIDMC: Beth Israel Deaconess Medical Center
- CAD: Coronary artery disease
- CHF: Congestive heart failure
- CKD: Chronic kidney disease
- COPD: Chronic obstructive pulmonary disease
- DM: Diabetes mellitus
- DT: Decision tree
- EE: Error estimation
- EHRs: Electronic health records
- FiO2: Respiration
- FR: Feature ranking
- HTN: Hypertension
- Inc.: Incorporation
- MAP: Mean arterial pressure
- MCC: Matthews correlation coefficient
- MDA: Mean decrease in accuracy
- MDI: Mean decrease in impurity
- MIMIC-III: Multiparameter intelligent monitoring in intensive care III
- MLP: Multilayer perceptron
- MS: Model selection
- NPV: Negative predictive value
- NICU: Neonatal intensive care unit
- NN: Neural network
- PaO2: Respiration
- PCC: Pearson correlation coefficient
- PCT: Procalcitonin
- PE: Pulmonary embolism
- PPV: Positive predictive value
- R2: Coefficient of determination
- SOFA: Sequential organ failure assessment
- SVM: Support vector machine

References

Singer M, Deutschman CS, Seymour CW, Shankar-Hari M, Annane D, Bauer M, Bellomo R, Bernard GR, Chiche J-D, Coopersmith CM, Hotchkiss RS, Levy MM, Marshall JC, Martin GS, Opal SM, Rubenfeld GD, van der Poll T, Vincent J-L, Angus DC. The third international consensus definitions for sepsis and septic shock (Sepsis-3). J Am Med Assoc (JAMA). 2016; 315(8):801–10.

Kononenko I. Machine learning for medical diagnosis: history, state of the art and perspective. Artif Intell Med. 2001; 23(1):89–109.

Chicco D, Jurman G. Machine learning can predict survival of patients with heart failure from serum creatinine and ejection fraction alone. BMC Med Informat Decis Mak. 2020; 20(16):1–16.

Shin S, Austin PC, Ross HJ, Abdel-Qadir H, Freitas C, Tomlinson G, Chicco D, Mahendiran M, Lawler PR, Billia F, Gramolini A, Epelman S, Wang B, Lee DS. Machine learning vs. conventional statistical models for predicting heart failure readmission and mortality. ESC Heart Fail. 2020:1–10.

Chicco D, Rovelli C. Computational prediction of diagnosis and feature selection on mesothelioma patient health records. PLoS ONE. 2019; 14(1):0208737.

Cangelosi D, Pelassa S, Morini M, Conte M, Bosco MC, Eva A, Sementa AR, Varesio L. Artificial neural network classifier predicts neuroblastoma patients’ outcome. BMC Bioinformatics. 2016; 17(12):347.

Maggio V, Chierici M, Jurman G, Furlanello C. Distillation of the clinical algorithm improves prognosis by multi-task deep learning in high-risk neuroblastoma. PLoS ONE. 2018; 13(12):0208924.

Melaiu O, Chierici M, Lucarini V, Jurman G, Conti LA, Vito RD, Boldrini R, Cifaldi L, Castellano A, Furlanello C, Barnaba V, Locatelli F, Fruci D. Cellular and gene signatures of tumor-infiltrating dendritic cells and natural-killer cells predict prognosis of neuroblastoma. Nat Commun. 2020; 11(5992):1–15.

Patrício M, Pereira J, Crisóstomo J, Matafome P, Gomes M, Seiça R, Caramelo F. Using Resistin, glucose, age and BMI to predict the presence of breast cancer. BMC Cancer. 2018; 18(1):29.

Gultepe E, Green JP, Nguyen H, Adams J, Albertson T, Tagkopoulos I. From vital signs to clinical outcomes for patients with sepsis: a machine learning basis for a clinical decision support system. J Am Med Inform Assoc. 2013; 21(2):315–25.

Tsoukalas A, Albertson T, Tagkopoulos I. From data to optimal decision making: a data-driven, probabilistic machine learning approach to decision support for patients with sepsis. JMIR Med Inform. 2015; 3(1):11.

Taylor RA, Pare JR, Venkatesh AK, Mowafi H, Melnick ER, Fleischman W, Hall MK. Prediction of in-hospital mortality in emergency department patients with sepsis: a local big data–driven, machine learning approach. Acad Emerg Med. 2016; 23(3):269–78.

Horng S, Sontag DA, Halpern Y, Jernite Y, Shapiro NI, Nathanson LA. Creating an automated trigger for sepsis clinical decision support at emergency department triage using machine learning. PLoS ONE. 2017; 12(4):0174708.

Shimabukuro DW, Barton CW, Feldman MD, Mataraso SJ, Das R. Effect of a machine learning-based severe sepsis prediction algorithm on patient survival and hospital length of stay: a randomised clinical trial. BMJ Open Respir Res. 2017; 4(1):000234.

Burdick H, Pino E, Gabel-Comeau D, Gu C, Huang H, Lynn-Palevsky A, Das R. Evaluating a sepsis prediction machine learning algorithm in the emergency department and intensive care unit: a before and after comparative study. bioRxiv. 2018; 224014:1–13.

Calvert J, Saber N, Hoffman J, Das R. Machine-learning-based laboratory developed test for the diagnosis of sepsis in high-risk patients. Diagnostics. 2019; 9(1):20.

Barton C, Chettipally U, Zhou Y, Jiang Z, Lynn-Palevsky A, Le S, Calvert J, Das R. Evaluation of a machine learning algorithm for up to 48-hour advance prediction of sepsis using six vital signs. Comput Biol Med. 2019; 109:79–84.

Mani S, Ozdas A, Aliferis C, Varol HA, Chen Q, Carnevale R, Chen Y, Romano-Keeler J, Nian H, Weitkamp J-H. Medical decision support using machine learning for early detection of late-onset neonatal sepsis. J Am Med Inform Assoc. 2014; 21(2):326–36.

Barton C, Desautels T, Hoffman J, Mao Q, Jay M, Calvert J, Das R. Predicting pediatric severe sepsis with machine learning techniques. In: American Journal of Respiratory and Critical Care Medicine. New York: American Thoracic Society: 2018. p. A4282–A4282.

Masino AJ, Harris MC, Forsyth D, Ostapenko S, Srinivasan L, Bonafide CP, Balamuth F, Schmatz M, Grundmeier RW. Machine learning models for early sepsis recognition in the neonatal intensive care unit using readily available electronic health record data. PLoS ONE. 2019; 14(2):0212665.

Desautels T, Calvert J, Hoffman J, Jay M, Kerem Y, Shieh L, Shimabukuro D, Chettipally U, Feldman MD, Barton C, Wales DJ, Das R. Prediction of sepsis in the intensive care unit with minimal electronic health record data: a machine learning approach. J Med Internet Res. 2016; 4(3):28.

Chicco D, Jurman G. Survival prediction of patients with sepsis from age, sex, and septic episode number alone. Sci Rep. 2020; 10(1):1–12.

Moody GB, Mark RG, Goldberger AL. PhysioNet: a web-based resource for the study of physiologic signals. IEEE Eng Med Biol Mag. 2001; 20(3):70–5.

PhysioNet. PhysioNet, the research resource for the physiologic signals. https://www.physionet.org. URL visited on 19th May 2019.

PhysioNet. Early prediction of sepsis from clinical data: the PhysioNet/Computing in Cardiology Challenge 2019. https://physionet.org/challenge/2019/. URL visited on 19th May 2019.

Dascena Inc. InSight by Dascena. https://www.dascena.com/insight. URL visited on 19th May 2019.

Johnson AE, Pollard TJ, Shen L, Li-wei HL, Feng M, Ghassemi M, Moody B, Szolovits P, Celi LA, Mark RG. MIMIC-III, a freely accessible critical care database. Sci Data. 2016; 3:160035.

Ahmad T, Munir A, Bhatti SH, Aftab M, Raza MA. Survival analysis of heart failure patients: a case study. PLoS ONE. 2017; 12(7):0181001.

Yunus I, Fasih A, Wang Y. The use of procalcitonin in the determination of severity of sepsis, patient outcomes and infection characteristics. PLoS ONE. 2018; 13(11):0206527.

Yunus I, Fasih A, Wang Y. The use of procalcitonin in the determination of severity of sepsis, patient outcomes and infection characteristics. S2 Table – Interpretation key. https://doi.org/10.1371/journal.pone.0206527.s002. URL visited on 7th February 2019.

Yunus I, Fasih A, Wang Y. The use of procalcitonin in the determination of severity of sepsis, patient outcomes and infection characteristics. S1 Table – Data collection sheet. https://doi.org/10.1371/journal.pone.0206527.s001. URL visited on 7th February 2019.

Goodfellow I, Bengio Y, Courville A. Deep Learning. Cambridge, Massachusetts, USA: MIT Press; 2016.

Lansdowne ZF, Woodward BS. Applying the Borda ranking method. Air Force J Logist. 1996; 20(2):27–9.

Matthews BW. Comparison of the predicted and observed secondary structure of T4 phage lysozyme. Biochim Biophys Acta (BBA) – Mol Basis Dis. 1975; 405(2):442–51.

Chicco D, Jurman G. The advantages of the Matthews correlation coefficient (MCC) over F1 score and accuracy in binary classification evaluation. BMC Genomics. 2020; 21(1):1–13.

Chicco D. Ten quick tips for machine learning in computational biology. BioData Min. 2017; 10(35):1–17.

Chicco D, Masseroli M. A discrete optimization approach for SVD best truncation choice based on ROC curves. In: Proceedings of IEEE BIBE 2013 – the 13th IEEE International Conference on BioInformatics and BioEngineering. Chania: IEEE: 2013. p. 1–4.

Ozenne B, Subtil F, Maucort-Boulch D. The precision–recall curve overcame the optimism of the receiver operating characteristic curve in rare diseases. J Clin Epidemiol. 2015; 68(8):855–9.

LaMorte WW. Screening for disease: positive and negative predictive value. 2016. http://sphweb.bumc.bu.edu/otlt/MPH-Modules/EP/EP713_Screening/EP713_Screening5.html. URL visited on 3rd February 2020.

Onwuegbuzie AJ, Daniel LG. Uses and misuses of the correlation coefficient. Res Sch. 1999; 9:73–90.

Haynes W. Student’s t-test. Encycl Syst Biol. 2013:2023–5.

Legrand M, Kellum JA. Serum creatinine in the critically ill patient with sepsis. J Am Med Assoc (JAMA). 2018; 320(22):2369–70.

Leelahavanichkul A, Souza ACP, Street JM, Hsu V, Tsuji T, Doi K, Li L, Hu X, Zhou H, Kumar P, et al. Comparison of serum creatinine and serum cystatin C as biomarkers to detect sepsis-induced acute kidney injury and to predict mortality in CD-1 mice. Am J Physiol Ren Physiol. 2014; 307(8):939–48.

Kang HR, Lee SN, Cho YJ, Jeon JS, Noh H, Han DC, Park S, Kwon SH. A decrease in serum creatinine after ICU admission is associated with increased mortality. PLoS ONE. 2017; 12(8):0183156.

Santana AR, de Sousa JL, Amorim FF, Menezes BM, Araújo FVB, Soares FB, de Carvalho Santos LC, de Araújo MPB, Rocha PHG, Júnior PNF. SaO2/FiO2 ratio as risk stratification for patients with sepsis. Crit Care. 2013; 17(4):1–59.

Maizel J, Deransy R, Dehedin B, Secq E, Zogheib E, Lewandowski E, Tribouilloy C, Massy ZA, Choukroun G, Slama M. Impact of non-dialysis chronic kidney disease on survival in patients with septic shock. BMC Nephrology. 2013; 14(1):77.

Pes B. Learning from high-dimensional biomedical datasets: the issue of class imbalance. IEEE Access. 2020; 8:13527–40.

Chicco D, Masseroli M. Software suite for gene and protein annotation prediction and similarity search. IEEE/ACM Trans Comput Biol Bioinforma. 2015; 12(4):837–43.

Vapnik VN. Statistical Learning Theory. New York, New York, USA: Wiley; 1998.

Aggarwal CC. Data Mining: the Textbook. Heidelberg, Germany: Springer; 2015.

Donders ART, Van Der Heijden GJ, Stijnen T, Moons KG. A gentle introduction to imputation of missing values. J Clin Epidemiol. 2006; 59(10):1087–91.

Haixiang G, Yijing L, Shang J, Mingyun G, Yuanyue H, Bing G. Learning from class-imbalanced data: review of methods and applications. Expert Syst Appl. 2017; 73:220–39.

Oneto L. Model selection and error estimation without the agonizing pain. Wiley Interdiscip Rev Data Min Knowl Discov. 2018; 8(4):1252.

Guyon I, Elisseeff A. An introduction to variable and feature selection. J Mach Learn Res. 2003; 3:1157–82.

Shalev-Shwartz S, Ben-David S. Understanding Machine Learning: From Theory To Algorithms. Cambridge, England, United Kingdom: Cambridge University Press; 2014.

Rokach L, Maimon OZ, Vol. 69. Data Mining with Decision Trees: Theory and Applications. Singapore: World Scientific; 2008.

Breiman L. Random forests. Mach Learn. 2001; 45(1):5–32.

Shawe-Taylor J, Cristianini N. Kernel Methods for Pattern Analysis. Cambridge, England, United Kingdom: Cambridge University Press; 2004.

Scholkopf B. The kernel trick for distances. In: Advances in Neural Information Processing Systems: 2001. p. 301–307.

Keerthi SS, Lin C-J. Asymptotic behaviors of support vector machines with Gaussian kernel. Neural Comput. 2003; 15(7):1667–89.

Bishop CM. Neural Networks for Pattern Recognition. Oxford, England, United Kingdom: Oxford University Press; 1995.

Rosenblatt F. The perceptron: a probabilistic model for information storage and organization in the brain. Psychol Rev. 1958; 65(6):386.

Rumelhart DE, Hinton GE, Williams RJ. Learning representations by back-propagating errors. Cogn Model. 1988; 5(3):1.

Cybenko G. Approximation by superpositions of a sigmoidal function. Math Control Signals Syst. 1989; 2(4):303–14.

Rish I. An empirical study of the naive Bayes classifier. In: Proceedings of IJCAI 2001 – the 2001 International Joint Conference on Artificial Intelligence, Workshop on Empirical Methods in Artificial Intelligence: 2001. p. 41–46.

Cover T, Hart P. Nearest neighbor pattern classification. IEEE Trans Inf Theory. 1967; 13(1):21–27.

Hosmer Jr DW, Lemeshow S, Sturdivant RX, Vol. 398. Applied Logistic Regression. New York: John Wiley & Sons; 2013.

LeCun Y, Bengio Y, Hinton G. Deep learning. Nature. 2015; 521(7553):436–44.

Kerr KF. Comments on the analysis of unbalanced microarray data. Bioinformatics. 2009; 25(16):2035–41.

Laza R, Pavón R, Reboiro-Jato M, Fdez-Riverola F. Evaluating the effect of unbalanced data in biomedical document classification. J Integr Bioinforma. 2011; 8(3):105–17.

Han K, Kim K-Z, Park T. Unbalanced sample size effect on the genome-wide population differentiation studies. In: Proceedings of BIBMW 2010 – the 2010 IEEE International Conference on Bioinformatics and Biomedicine Workshops. Hong Kong: IEEE: 2010. p. 347–52.

He H, Garcia EA. Learning from imbalanced data. IEEE Trans Knowl Data Eng. 2008; 21(9):1263–84.

Kohavi R. A study of cross-validation and bootstrap for accuracy estimation and model selection. In: Proceedings of IJCAI 1995 – the International Joint Conference on Artificial Intelligence. Montreal, Quebec, Canada: IJCAI: 1995. p. 1137–45.

Saeys Y, Abeel T, Van de Peer Y. Robust feature selection using ensemble feature selection techniques. In: Proceedings of ECML PKDD 2008 – the 2008 Joint European Conference on Machine Learning and Knowledge Discovery in Databases. Springer: 2008. p. 313–25.

Genuer R, Poggi J-M, Tuleau-Malot C. Variable selection using random forests. Pattern Recognit Lett. 2010; 31(14):2225–36.

Qi Y. Random forest for bioinformatics. In: Ensemble Machine Learning. Boston, Massachusetts, USA: Springer: 2012. p. 1–18.

Díaz-Uriarte R, De Andres SA. Gene selection and classification of microarray data using random forest. BMC Bioinformatics. 2006; 7(1):3.

Good P. Permutation Tests: a Practical Guide to Resampling Methods for Testing Hypotheses. Heidelberg, Germany: Springer; 2013.

Calle ML, Urrea V. Letter to the editor: stability of random forest importance measures. Brief Bioinform. 2010; 12(1):86–9.

Kursa MB. Robustness of Random Forest-based gene selection methods. BMC Bioinformatics. 2014; 15(1):8.

Wang H, Yang F, Luo Z. An experimental study of the intrinsic stability of random forest variable importance measures. BMC Bioinformatics. 2016; 17(1):60.

Sculley D. Rank aggregation for similar items. In: Proceedings of the 2007 SIAM International Conference on Data Mining. Santa Fe, New Mexico: Society for Industrial and Applied Mathematics (SIAM): 2007. p. 587–92.

Owen D. The power of Student’s t-test. J Am Stat Assoc. 1965; 60(309):320–33.

Benesty J, Chen J, Huang Y, Cohen I. Pearson correlation coefficient. In: Noise Reduction in Speech Processing. Heidelberg, Germany: Springer: 2009. p. 1–4.

Acknowledgments

The authors thank Julia Lin (University of Toronto) for her help in the English proof-reading of this manuscript.

Funding

Not applicable.

Author information

Contributions

DC retrieved the dataset, conceived the study, performed the biostatistics analysis, compared the results of the biostatistics feature rankings and the machine learning feature rankings, wrote the corresponding sections of the manuscript, and revised the final version of the manuscript. LO performed the machine learning classification, regression, and feature ranking, wrote the corresponding sections of the manuscript, revised the final version of the manuscript, and provided the funding for the publication costs. Both authors revised and approved the final manuscript version.

Ethics declarations

Ethics approval and consent to participate

The original study containing the dataset analyzed in this manuscript was approved by the Institutional Review Board of the University of Illinois, College of Medicine at Peoria, Peoria, Illinois, USA [29].

Consent for publication

Not applicable.

Competing interests

The authors declare they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1

Supplementary information containing details regarding data engineering, algorithms, and metrics employed in the analysis.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Chicco, D., Oneto, L. Data analytics and clinical feature ranking of medical records of patients with sepsis. BioData Mining 14, 12 (2021). https://doi.org/10.1186/s13040-021-00235-0

DOI: https://doi.org/10.1186/s13040-021-00235-0