Abstract

The links between increased participation in Physical Activity (PA) and improvements in health are well established. As this body of evidence has grown, so too has the search for measures of PA with high levels of methodological effectiveness (i.e. validity, reliability and responsiveness to change). The aim of this “review of reviews” was to provide a comprehensive overview of the methodological effectiveness of currently employed measures of PA, to aid researchers in their selection of an appropriate tool. A total of 63 review articles were included in this review, and the original articles cited by these reviews were included in order to extract detailed information on methodological effectiveness.

Self-report measures of PA have been most frequently examined for methodological effectiveness, with highly variable findings identified across a broad range of behaviours. The evidence-base for the methodological effectiveness of objective monitors, particularly accelerometers/activity monitors, is increasing, with lower levels of variability observed for validity and reliability when compared to subjective measures. Unfortunately, responsiveness to change across all measures and behaviours remains under-researched, with limited information available.

Other criteria beyond methodological effectiveness often influence tool selection, including cost and feasibility. However, researchers must be aware of the methodological effectiveness of any measure selected for use when examining PA. Although no “perfect” tool for the examination of PA in adults exists, it is suggested that researchers aim to incorporate appropriate objective measures, specific to the behaviours of interest, when examining PA in free-living environments.

Background

Physical inactivity is the fourth leading cause of death worldwide [1]. Despite this, PA levels of adults across developed nations remain low and the promotion of regular participation in PA is a key public health priority [2]. Population level PA surveillance relies upon having tools to accurately measure activity across all population sub-groups. In addition to surveillance, it is essential that valid, reliable and sensitive measures of PA are available to practitioners, researchers and clinicians in order to examine the effectiveness of interventions and public health initiatives. The accurate measurement of PA in adults has relevance not only for refining our understanding of PA-related disorders [3], but also for defining the dose-response relationship between the volume, duration, intensity and pattern of PA and the associated health benefits.

A number of methods are available for the assessment of PA [4]. When selecting a measurement technique, researchers and practitioners need to consider not only feasibility and practicality of the measure, but also the methodological effectiveness, such as the validity, reliability and sensitivity. Validity refers to the degree to which a test measures what it is intended to measure, and is most often investigated by comparing the observed PA variables determined by the proposed measure with another comparable measure [5]. Criterion validity is when a measure is validated against the ‘gold standard’ measure. Good agreement between the proposed method and the gold standard provides some assurance that the results are an accurate reflection of PA behaviour. Other frequently examined forms of validity are concurrent validity (when two measures that give a result that is supposed to be equal are compared) and construct validity (when two measures that are in the same construct are compared). Reliability refers to the degree to which a test can produce consistent results on different occasions, when there is no evidence of change, while sensitivity is the ability of the test to detect changes over time [5].

In addition to methodological effectiveness, other factors need to be considered when selecting a method for assessing PA and interpreting the findings derived from these methods. Feasibility often drives the selection of the study measures. Some measures are more feasible than others depending on the setting, number of participants and cost. For example, the use of activity monitors to estimate PA may be less feasible in epidemiological studies where large numbers of individuals are being assessed. Reactivity may mean that the act of measuring PA may change a person’s behaviour: for example, being observed for direct observation [6] or wearing an activity monitor may cause the participant to alter their habitual PA behaviour [7]. When using self-report measures, social desirability may result in over-reporting of PA among participants keen to comply with the intervention aims [8]. These factors require careful consideration when selecting methods for assessing PA.

Although methods for the measurement of PA have been extensively examined, reviews to date have focused on specific categories of methods (e.g. self-report questionnaires [9,10,11]), specific techniques (e.g. Doubly Labelled Water (DLW) [12], smart phone technology [13], motion sensors and heart rate monitors (HRM) [14], pedometers [15]) or a comparison of two or more methods [16]. Some reviews looked exclusively at specific PA behaviours (e.g. walking) [17] or focused solely on validity and/or reliability issues [18,19,20]. Other reviews have concentrated on methods for assessing PA in population subgroups (e.g. individuals with obesity [21] or older adults) [22,23,24,25,26,27,28,29,30]. Due to the level of variability in how information on measurement properties has been presented, and due to the wide range of different measures examined in existing reviews, it is extraordinarily difficult for researchers to compare and contrast measures of PA in adult populations.

The purpose of this article is to review existing reviews (a review of reviews) that have examined the methodological effectiveness of measures of PA. To aid in the comparison of measurement properties between different PA measures, original papers referred to within each review article were sourced, and additional analysis of these references was completed to enable better comparison and interpretation of findings. This review of reviews (as it will be referred to for the remainder of this article) is intended to provide a concise summary of PA measurement in adults. This work was completed as a component of the European DEDIPAC (DEterminants of DIet and Physical ACtivity) collaboration.

Methods

Literature search and search strategy

A systematic search of the electronic databases PubMed, ISI Web of Science, CINAHL, PsycINFO, SPORTDiscus and EMBASE took place in April 2014. The search strategy was developed by two of the authors from examining existing literature reviews, whereby common terminology utilised by published systematic reviews of specific methodologies or narrative reviews of all methodologies was included [4, 5, 31,32,33,34,35]. The developed search strategy was reviewed and agreed on by all members of the review team. The electronic databases were searched for the terms “Physical Activity” AND “Review OR Meta-Analysis” AND “Self-report” OR “Logs” OR “Diaries” OR “Questionnaire” OR “Recall” OR “Objective” OR “Acceleromet*” OR “Activity Monitor*” OR “Motion Sensor*” OR “Pedom*” OR “Heart Rate Monitor*” OR “Direct Observation” AND “Valid*” OR “Reliab*” OR “Reproducib*” OR “Sensitiv*” OR “Responsiv*”. The search terms and criteria were tailored for each specific electronic database to ensure consistency of systematic searching. Only articles published in peer-reviewed journals in the English language were included in this review.
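The search string above combines four concept groups with AND, with alternatives inside each group joined by OR. The following is a minimal sketch of how such a string could be assembled programmatically. The explicit parenthesised grouping is an assumption about the intended operator precedence, the names `SEARCH_GROUPS`, `or_group` and `build_query` are hypothetical, and the syntax would still need tailoring to each database.

```python
# Concept groups taken from the search terms listed in the text.
SEARCH_GROUPS = [
    ["Physical Activity"],
    ["Review", "Meta-Analysis"],
    ["Self-report", "Logs", "Diaries", "Questionnaire", "Recall", "Objective",
     "Acceleromet*", "Activity Monitor*", "Motion Sensor*", "Pedom*",
     "Heart Rate Monitor*", "Direct Observation"],
    ["Valid*", "Reliab*", "Reproducib*", "Sensitiv*", "Responsiv*"],
]


def or_group(terms):
    """Join the alternatives of one concept with OR, wrapped in parentheses."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


def build_query(groups):
    """Join the concept groups with AND (assumed precedence, see lead-in)."""
    return " AND ".join(or_group(group) for group in groups)


query = build_query(SEARCH_GROUPS)
print(query)
```

Making the grouping explicit avoids relying on each database's default handling of mixed AND/OR operators.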

Eligibility for inclusion

Although DLW is suggested as the gold standard measure of energy expenditure [36], it has not been included in the search strategy, as its feasibility for use in population surveillance research is limited due to its high cost and participant invasiveness [34]. Due to similar limitations, indirect calorimetry has also not been included in this search strategy. However, reviews that discuss studies which have examined the validity of PA measures against DLW and indirect calorimetry were included. The term Global Positioning System (GPS) was not included as it was felt that the limitations associated with GPS used alone [37] deemed it an inappropriate measure of PA for population surveillance in its current form.

Review articles that focused solely on the methodological effectiveness of measures of PA in clinical populations and in children/adolescents were not included in this review. Reviews identified in this study which described the methodological effectiveness of measures of PA in both adults and youths were included, but only the adult data were extracted and included.

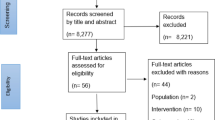

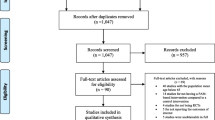

Article selection

A single reviewer screened all article titles, with only articles that were clearly unrelated to the review of reviews removed at this level. Two independent reviewers examined the article abstracts. Results were collated and reported to a third reviewer, who made the final decision in the case of conflicting results. The full texts of included articles were reviewed by two reviewers using the same protocol for handling conflicting results. Reference lists of identified articles were reviewed to ensure that no relevant articles were overlooked. The collated list of accepted reviews was examined by three leading PA measurement experts, who identified key reviews they felt were not included. The full screening protocol was repeated for all supplementary articles identified (Fig. 1).

Quality assessment

The methodological quality of the systematic reviews was evaluated using the Assessment of Multiple Systematic Reviews (AMSTAR) quality assessment tool [38]. No similar quality assessment tool exists for narrative reviews. The AMSTAR protocol was applied to each article by two researchers with any conflicting results resolved by a third reviewer.

Data extraction

Initially, the full text and the reference list of each review article meeting the inclusion criteria were screened by a single reviewer for all references to methodological effectiveness, and each methods paper was sourced and screened, with all relevant data extracted. The extracted data included general information about the article, the specific measure of PA examined and the demographic characteristics, including the sample population age, size and gender.

Finally, all relevant information relating to properties of methodological effectiveness (i.e. reliability, validity and sensitivity) was recorded. This included the key methodological details of the study and all relevant statistics used to examine measures of methodological effectiveness.

Data synthesis

Data synthesis was conducted separately for each of the PA measurement methods, including general recommendations of the method and its effectiveness indicators. Validity data extracted from the methods papers are presented as mean percentage difference (MPD) in modified forest plots. Similar to Prince and colleagues (2008), where possible, the MPD was extracted or calculated from the original articles as ((Comparison Measure - Criterion Measure)/Criterion Measure) × 100 [16]. Data points positioned around the 0 mark suggest high levels of validity compared to the reference measure. Data points positioned to the left of the 0 mark suggest an underestimation of the variable in comparison to the reference measure. Data points positioned to the right of the 0 mark suggest an overestimation of the variable in comparison to the reference measure. The further away from the 0 mark the point is positioned, the greater the under/overestimation. Data points 250% greater than or less than the reference measure were capped at 250%, and are marked with an asterisk. Due to the lack of reporting of variance results, and the use of differing and incompatible measurement units, confidence intervals are not reported.
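As an illustration of the MPD calculation and the 250% plotting cap described above, a minimal sketch follows. The function names and the example energy expenditure values are hypothetical and are not drawn from any of the reviewed studies.

```python
CAP = 250.0  # plotting cap in percent, as described in the text


def mean_percentage_difference(comparison, criterion):
    """MPD = ((comparison - criterion) / criterion) * 100."""
    if criterion == 0:
        raise ValueError("criterion measure must be non-zero")
    return (comparison - criterion) / criterion * 100.0


def cap_for_plot(mpd, cap=CAP):
    """Clip an MPD to +/- cap; the flag marks points that would carry an asterisk."""
    capped = max(-cap, min(cap, mpd))
    return capped, capped != mpd


# Hypothetical values: self-report 2500 kcal/day vs. criterion 2000 kcal/day.
over = mean_percentage_difference(2500, 2000)   # positive: overestimation
under = mean_percentage_difference(1500, 2000)  # negative: underestimation
extreme = cap_for_plot(mean_percentage_difference(10000, 2000))
print(over, under, extreme)
```

A positive MPD plots to the right of the 0 mark (overestimation) and a negative MPD to the left (underestimation).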

Results

Study selection

The literature search produced 260 potentially relevant abstracts for screening, of which 58 were included in the review following abstract and full text review. After consultation with three international PA experts, and from bibliography review, a further 5 articles were identified for inclusion, providing a total of 63 articles for data extraction (Fig. 1) [4,5,6,7, 9,10,11, 13,14,15,16,17,18,19, 21,22,23,24,25,26,27,28,29,30,31,32,33,34,35, 39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70,71,72].

Quality assessment

For this article, reviews were categorised as either “Narrative Reviews” or “Systematic Reviews”. A systematic review was defined as a review which described a search strategy for identification of relevant literature. Of the 63 articles, 41 were categorised as narrative reviews, while 22 were identified as systematic reviews. Findings of the AMSTAR quality assessment and review are described in Table 1. The mean AMSTAR score across the 22 articles was 5.4 (out of a possible score of 11), with three articles achieving a score of 3, four articles scoring 4, six articles scoring 5, four articles scoring 6, two articles scoring 7, two articles scoring 8 and one article achieving a score of 9 (Table 1). Based on AMSTAR categorisation, three reviews were considered low quality, 16 reviews were of medium quality and three reviews were considered high quality. The predominant measures examined/discussed in the identified review articles were activity monitors (n=44; 70%), self-report measures (n=28; 44%), pedometers (n=23; 37%) and HRM (n=18; 29%). Other measures included combined physiologic and motion sensors, multi-physiologic measures, multiphasic devices and foot pressure sensors. These measures were incorporated under the combined sensors heading.
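The summary figures in this paragraph can be reproduced from the reported counts. The short check below is a sketch using only the numbers stated in the text; the variable names are illustrative.

```python
# AMSTAR score -> number of systematic reviews, as reported in the text.
amstar_counts = {3: 3, 4: 4, 5: 6, 6: 4, 7: 2, 8: 2, 9: 1}

n_reviews = sum(amstar_counts.values())
mean_score = sum(score * n for score, n in amstar_counts.items()) / n_reviews

# Number of the 63 included reviews discussing each measure, as reported.
TOTAL_REVIEWS = 63
measure_counts = {
    "activity monitors": 44,
    "self-report": 28,
    "pedometers": 23,
    "HRM": 18,
}
measure_pct = {
    name: round(100 * n / TOTAL_REVIEWS) for name, n in measure_counts.items()
}

print(n_reviews, round(mean_score, 1), measure_pct)
```

The percentages sum to more than 100% because many reviews discussed more than one type of measure.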

Data extraction

Self-report measures

Validity

Criterion validity: A total of 35 articles examined the criterion validity of self-reported measures by comparison to DLW determined energy expenditure [73,74,75,76,77,78,79,80,81,82,83,84,85,86,87,88,89,90,91,92,93,94,95,96,97,98,99,100,101,102,103,104,105,106,107]. Self-reported measures of PA, which included 7 day recall questionnaires, past year recall questionnaires, typical week questionnaires and PA logs/diaries, were validated against 8-15 days of DLW measurement (Additional file 1: Table S1).

The mean values for self-reported and criterion determined PA energy expenditure were available for the calculation of MPD in 27 articles [73,74,75,76,77,78,79,80,81,82,83,84,85,86,87, 91,92,93, 95, 97, 99, 100, 102, 104,105,106,107]. Energy expenditure was calculated from a range of behaviours, including leisure time PA, work based PA and PA frequency. The MPD between self-reported PA energy expenditure (time spent in PA, normally converted to energy expenditure using a compendium of PA) and DLW determined energy expenditure is presented in Fig. 2. The MPDs observed in studies that examined the validity of PA diaries ranged from -12.9% to 20.8%. MPDs for self-reported PA energy expenditure recalled from the previous 7 days (or typical week) were larger, ranging from -59.5% to 62.1%. MPDs from self-reported PA energy expenditure for the previous month compared to DLW determined energy expenditure ranged from -13.3% to 11.4%, while the difference between self-reported PA from the previous twelve months and DLW determined energy expenditure ranged from -77.6% to 112.5%.

Concurrent validity: A total of 89 articles reported on concurrent validity of self-reported measures [75, 80, 83, 84, 97, 102, 108,109,110,111,112,113,114,115,116,117,118,119,120,121,122,123,124,125,126,127,128,129,130,131,132,133,134,135,136,137,138,139,140,141,142,143,144,145,146,147,148,149,150,151,152,153,154,155,156,157,158,159,160,161,162,163,164,165,166,167,168,169,170,171,172,173,174,175,176,177,178,179,180,181,182,183,184,185,186,187,188,189,190]. Articles were collated based on the types of referent measures used (Additional file 1: Table S2). The MPD between self-reported energy expenditure and energy expenditure from PA log/diaries for 12 studies ranged from -67.6% to 23.8% (Additional file 1: figure S2a) [80, 108, 110, 111, 128, 145, 152, 157, 159, 160, 169, 175]. These findings suggest that self-report underestimates energy expenditure compared to activity logs/diaries. Seven studies compared self-reported time spent in specific activity intensities with PA intensities from logs/diaries (Additional file 1: figure S2a) [109, 120, 121, 146, 152, 182, 187]. A wider MPD range (-69.0% to 438.5%) was evident, with the greatest differences occurring for moderate intensity physical activity (MPA) and vigorous intensity physical activity (VPA) [109, 120, 121].

Eight studies compared two different self-reported measures of PA energy expenditure [80, 83, 97, 152, 158, 162, 175, 190], and 6 studies compared two different self-reported measures of time spent in PA [112, 135, 136, 146, 152, 153, 158] (Additional file 1: figure S2b). Additional file 1: figure S2c presents 15 studies that compared self-reported PA energy expenditure with PA energy expenditure from activity monitors [80, 132, 142,143,144, 150, 159, 168, 170, 172, 174, 178, 183, 185, 191]. The MPD ranged from -74.7% to 82.8%, with self-reported measures tending to overestimate energy expenditure.

Self-reported time spent in light intensity physical activity (LIPA) (n=6) [75, 119, 131, 146, 179, 189], MPA (n=17) [75, 115, 119, 130, 131, 133, 134, 139,140,141, 146, 147, 161, 163, 176, 177, 187, 189] and moderate-to-vigorous intensity physical activity (MVPA) (n=7) [115, 116, 127, 145, 149, 153, 179, 192] was validated against activity monitors that mainly employed count-to-activity thresholds to determine PA intensity (Additional file 1: figure S2d), with the MPD for LIPA ranging from -70.1% to 129.2%, MPA ranging from -78.9% to 1007.6% and MVPA ranging from -34.9% to 217.1%. Self-reported VPA was also validated against activity monitors [75, 115, 119, 130, 131, 133, 134, 140, 141, 146, 147, 161, 163, 177, 187, 189], with all studies identifying an overestimation of self-reported VPA (Additional file 1: figure S2e).

The concurrent validity of additional self-reported variables, including total PA [163, 181, 184, 193], frequency of MVPA [149], active time [151, 161], time standing [192] and time stepping [192] were also compared to activity monitor determined variables (Additional file 1: figure S2e).

The MPD between self-reported energy expenditure and both pedometer and HRM determined energy expenditure [80, 102, 123, 142, 194], and between self-reported time spent in PA intensities and HRM determined time spent in PA intensities [118, 129, 146, 154, 174, 195], are presented in Additional file 1: figure S2f. Self-reported energy expenditure overestimated pedometer determined energy expenditure (range=17.1% to 86.5%). Self-reported measures notably overestimated time spent in PA intensities when compared to HRM. Although self-reported energy expenditure underestimated HRM determined energy expenditure, this underestimation was small compared to other measures (-17.7% to -1.3%).

Reliability

Intra-instrument reliability: One article examined the intra-instrument reliability of a self-reported measure of PA [196]. A self-reported instrument examining the previous 14 days of PA was administered [196]. After 3 days, the instrument examined the PA of the same 14 day period. The findings identified high levels of intra-instrument reliability for total activity (ICC=0.90; 95% CI=0.86-0.93), MPA (ICC=0.77; 95% CI=0.69-0.84), VPA (ICC=0.90; 95% CI=0.86-0.93), walking (ICC=0.89; 95% CI=0.85-0.93) and energy expenditure (ICC=0.86; 95% CI=0.80-0.90) (Additional file 1: Table S3).

Test-retest reliability: The test-retest reliability of self-reported measures was examined in 116 studies [75, 77, 83, 110, 116, 117, 122, 125,126,127, 129, 131, 132, 135, 137, 140, 144, 145, 147, 149, 152, 153, 155, 157, 159, 161, 162, 167,168,169, 172, 175, 176, 178,179,180,181, 184, 187, 188, 190, 191, 196,197,198,199,200,201,202,203,204,205,206,207,208,209,210,211,212,213,214,215,216,217,218,219,220,221,222,223,224,225,226,227,228,229,230,231,232,233,234,235,236,237,238,239,240,241,242,243,244,245,246,247,248,249,250,251,252,253,254,255,256,257,258,259,260,261,262,263,264,265,266,267,268,269]. Due to the wide test-retest periods, articles were allocated to one of 5 periods, ≤1 week (Additional file 1: Table S4a), >1 - <4 weeks (Additional file 1: Table S4b), >4 - <8 weeks (Additional file 1: Table S4c), >8 weeks - <1 year (Additional file 1: Table S4d) and >1 year (Additional file 1: Table S4e). Test-retest statistics employed were extracted and are presented in Table 2. An overview of all identified studies examining the test-retest reliability of PA/energy expenditure measured by self-report, along with all test-retest statistics is provided in Additional file 1: Table S4a-e.

Sensitivity: Two studies examined the sensitivity of self-reported measures to detect change in PA behaviours over time [256, 270]. Both studies identified small to moderate effect sizes for specific PA behaviours over a six month period in older adults (Additional file 1: Table S5).

Activity monitors

Validity

Criterion validity: Fifty-eight articles examined the criterion validity of activity monitor determined PA variables [73, 77, 80, 96, 105, 119, 271,272,273,274,275,276,277,278,279,280,281,282,283,284,285,286,287,288,289,290,291,292,293,294,295,296,297,298,299,300,301,302,303,304,305,306,307,308,309,310,311,312,313,314,315,316,317,318,319,320,321,322,323]. The majority of articles compared activity monitor determined energy expenditure with DLW [73, 77, 80, 96, 105, 274, 275, 277, 278, 280, 281, 285, 292, 293, 295, 296, 300, 303,304,305, 311, 313, 317, 323], while activity monitor determined steps [119, 271, 283, 287, 289, 298, 299, 306, 307, 314, 315, 318], distance travelled [282] and activity type [272, 273, 276, 279, 284, 286, 288, 290, 291, 294, 297, 301, 302, 308,309,310, 312, 316, 319,320,321,322] were also compared to direct observation (Additional file 1: Table S6).

The MPD observed in studies that examined the criterion validity of activity monitor determined energy expenditure ranged from -56.59% to 96.84% (Fig. 3a). However, a trend was apparent for activity monitor determined energy expenditure to underestimate the criterion measure. The range of MPD between activity monitor and direct observation determined steps was smaller, with values ranging from -48.52% to 7.47%, and 96% of studies having an MPD between -10% and 10% (Fig. 3b). Activity monitors overestimated distance walked/run (0.88% to 27.5%). Activity monitors also tended to underestimate activity classification, with MPD varying between -36.67% and 2.00%.

Fig. 3 (a) Forest plot of percentage mean difference between accelerometer determined energy expenditure (TEE, PAEE, PAL) compared to criterion measure of energy expenditure (doubly labelled water). (b) Forest plot of percentage mean difference between accelerometer determined steps, distance walked and activity type compared to criterion measure of direct observation

Concurrent validity: A total of 103 articles examined the concurrent validity of activity monitor measures of PA [73, 77, 80, 119, 146, 151, 174, 192, 194, 195, 262, 271, 282, 295, 305, 316, 324,325,326,327,328,329,330,331,332,333,334,335,336,337,338,339,340,341,342,343,344,345,346,347,348,349,350,351,352,353,354,355,356,357,358,359,360,361,362,363,364,365,366,367,368,369,370,371,372,373,374,375,376,377,378,379,380,381,382,383,384,385,386,387,388,389,390,391,392,393,394,395,396,397,398,399,400,401,402,403,404,405,406,407,408,409]. Data extractions were grouped by the types of measures used (Additional file 1: Table S7).

The MPD of activity counts from two different activity monitors ranged from -40.6% to 13.2% [262, 327, 351, 389, 392, 405]. The MPD for a wide range of activity behaviours from two different activity monitors was examined: LIPA (-12.5% to 13.7%) [146, 340, 392, 405], MPA (-10.9% to 3.1%) [146, 340], VPA (-9.7% to 20.3%) [146, 352], MVPA (-57.5% to 3.3%) [344, 392, 405] and total PA (1.1%) [146]. Stepping [151, 192] and step counts [77, 119, 340, 405] were compared between 2 activity monitor devices (MPD ranged from -21.7% to 0% for step counts and -57.1% to 56% for stepping). Energy expenditure estimated by two activity monitors was compared [372, 404, 408], with MPD ranging from -21.1% to 61% (Additional file 1: figure S3c).

Energy expenditure at different PA intensities from activity monitors was compared against estimates from indirect calorimetry and whole room calorimetry. For LIPA, the MPD ranged from -79.8% to 429.1% [349, 394]. For MPA, MPD ranged from -50.4% to 454.1% [349, 395], while estimates for VPA ranged from -100% to 163.6%. Energy expenditure estimates from activity monitoring devices for total PA were compared against indirect calorimetry estimates [368, 394, 396, 398, 404], where MPD ranged from -41.4% to 115.7%. The MPD range for activity monitor determined total energy expenditure compared with whole-room calorimetry was narrower (-16.7% to -15.7%) [343, 364] (Additional file 1: figure S3d).

Activity monitor estimates of energy expenditure were compared to HRM estimates of energy expenditure for total PA (-10.4% to 22.2%) [80, 402], for LIPA (-75.4% to 72.8%) [146], for MPA (49.2% to 677.7%), for VPA (-46.2% to 46.2%) [146, 361] and for total time spent in PA (-16.1% to 34.9%) [146, 174]. Self-reported measures were used to examine the concurrent validity of activity monitors for energy expenditure [80] and total time spent in PA [174], with MPD ranging from -6.0% to 32.1% (Additional file 1: figure S3e).

Estimated energy expenditure was compared between activity monitors and indirect calorimetry (kcal over specified durations; Additional file 1: figure S3f (-68.5% to 81.1%)) [282, 328, 341, 358, 367, 369, 370, 375, 376, 380, 382, 383, 385, 387]; (METs over specified durations; Additional file 1: figure S3g (-67.3% to 48.4%)) [195, 325, 345,346,347, 349, 350, 353, 357, 362, 384, 397, 400, 407, 409]. A single study compared the estimated energy expenditure from 5 different activity monitors and indirect calorimetry at incremental speeds (54, 80, 107, 134, 161, 188 and 214 m·min⁻¹) in both men and women (MPD ranged from -60.4% to 90.8%) (Additional file 1: figure S3h) [374].

Reliability

Inter-instrument reliability: The inter-instrument reliability of activity monitoring devices (i.e. the reliability of the same device worn by the same participant over the same time period) was examined in 18 studies [301, 315, 337, 344, 370, 385, 387, 406, 409,410,411,412,413,414,415,416,417,418]. Study methodologies included the wearing of devices over the left and right hip [337, 370, 385, 387, 406, 413, 415, 417], over the hip and lower back [409], the wearing of devices side by side at the same location on the hip [301, 344, 411, 414, 416,417,418], devices worn at the 3rd intercostal space and just below the apex of the sternum [410], devices worn on both wrists [412], worn on both legs [315] and worn side by side on the same leg [315]. Coefficients of variation ranged from 3% to 10.5% for the ActiGraph device [418] and from <6% to 35% for the RT3 accelerometer [387, 416]. All reported correlation coefficients were significant and greater than 0.56 [370, 385, 387, 406, 409, 412, 415, 417]. ICC values for the majority of devices were >0.90 [301, 315, 337, 344, 411, 413], excluding those observed for the RT3 accelerometer (0.72-0.95) [417], Actitrac (0.40-0.87) and Biotrainer devices (0.60-0.71) [406] (Additional file 1: Table S8).

Test-retest reliability: Test-retest reliability of activity monitoring devices was examined in 26 studies [153, 155, 228, 234, 262, 282, 297, 314, 358, 385, 407, 411, 414, 416, 419,420,421,422,423,424,425,426,427,428,430]. For the laboratory-based studies, variables examined included distance walked [282], steps at different speeds [314, 420], resting periods [358], accelerometer counts [385, 407, 411, 414, 416, 425, 429, 430], energy expenditure [426] and postural position [297, 429]. For the free-living analyses, behaviours examined included activity behaviours [155, 419], accelerometer counts [262, 421, 422], step count [422], energy expenditure [228, 234] and the number of people achieving the recommended amount of PA [153] (Additional file 1: Table S9).

As the examination of PA over a number of days can be considered a measure of test-retest reliability, researchers have used statistical processes (i.e. generalizability theory or the Spearman Brown Prophecy formula) to determine the minimum number of days required to provide a reliability estimate of PA behaviours [431]. Studies reported that a minimum of three days of ActiGraph data are required to provide a reliable estimate of total PA [423] and time spent in MVPA [424], while a minimum of 2 days is required to provide a reliable estimate of ActiGraph determined steps per day, accelerometer counts per day and intermittent MVPA per day [427]. However, for the examination of continuous 10 minute bouts of MVPA (as suggested in the majority of international PA recommendations), a minimum of 6 days of measurement is required [427].
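To illustrate how the Spearman-Brown prophecy formula yields a minimum number of monitoring days, a minimal sketch follows. It assumes the single-day reliability is expressed as an ICC and uses a target reliability of 0.80; the single-day ICC values in the example are hypothetical and are not taken from the cited studies, and the function names are illustrative.

```python
import math


def spearman_brown(single_day_r, n_days):
    """Predicted reliability of the mean of n_days days of measurement."""
    return n_days * single_day_r / (1 + (n_days - 1) * single_day_r)


def days_needed(single_day_r, target_r=0.80):
    """Smallest whole number of days whose predicted reliability reaches target_r."""
    if not (0 < single_day_r < 1 and 0 < target_r < 1):
        raise ValueError("reliabilities must lie strictly between 0 and 1")
    k = target_r * (1 - single_day_r) / (single_day_r * (1 - target_r))
    # Subtract a small tolerance so floating-point noise on an exact
    # integer solution (e.g. 4.000000000000001) does not round up to 5.
    return max(1, math.ceil(k - 1e-9))


# Hypothetical single-day ICCs for two PA outcomes.
print(days_needed(0.5))  # lower day-to-day stability needs more days
print(days_needed(0.6))
```

Outcomes that vary more from day to day have a lower single-day reliability and therefore need more days of monitoring, which is consistent with the longer period reported for continuous bouts of MVPA.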

Sensitivity: The only study of responsiveness to change in activity monitors, using the ActiWatch, identified that this device was able to detect significant differences in activity counts accumulated between young adults and sedentary older adults and between active older adults and sedentary older adults [421]. However, no differences could be detected between the young adults and active older adults (Additional file 1: Table S10).

Pedometers

Validity

Criterion validity: A total of 30 studies were sourced that examined the criterion validity of step count in pedometer devices [283, 289, 298, 306, 307, 314, 318, 365, 391, 432,433,434,435,436,437,438,439,440,441,442,443,444,445,446,447,448,449,450,451,452], while 3 studies examined the criterion validity of pedometer determined energy expenditure compared to DLW [93, 453, 454]. Of the laboratory based studies assessing criterion validity, 30% used overground walking protocols [307, 318, 365, 391, 442, 445,446,447, 450, 451], while the remainder used treadmill-based protocols [283, 289, 298, 306, 314, 432,433,434,435,436,437,438,439,440,441, 443, 444, 448, 449] or a combination of the two [452]. In free-living studies which examined the criterion validity of pedometer determined energy expenditure, pedometers were worn for 2 [454], 7 [93] and 8 days [453] (Fig. 4; (-62.3% to 0.8%)). Pedometer determined step count was generally lower when compared to direct observation (-58.4% to 6.9%). Some studies also examined the effect of speed on pedometer output. Pedometers had relatively high levels of accuracy across all speeds, but appear to be more accurate at determining step count at higher walking speeds compared to lower walking speeds (Additional file 1: Table S11) [306, 436, 438, 439].

Concurrent validity: The concurrent validity of pedometers was examined in a total of 22 articles [77, 194, 298, 376, 391, 399, 404, 422, 432,433,434, 441, 444, 448, 449, 451, 452, 455,456,457,458,459]. Various approaches were used to examine the concurrent validity of pedometers, with 14 studies comparing pedometer step count with steps determined from other pedometers [432, 451, 458] and activity monitors [77, 298, 391, 422, 433, 434, 444, 455,456,457, 459] and 4 studies comparing pedometer determined energy expenditure with energy expenditure determined from indirect calorimetry [376, 399, 404, 441, 448, 451] and/or energy expenditure determined from other activity monitors [451]. One study compared pedometer determined distance travelled with treadmill determined distance travelled [449], while one study compared pedometer determined MVPA with activity monitors determined MVPA [452] (Additional file 1: figure S4a). Pedometers appear to underestimate time spent in MVPA and estimated energy expenditure when compared to other measures. The findings are less clear for step count determined from pedometers when compared to other pedometers or activity monitors, with devices appearing to both over and underestimate step count (Additional file 1: Table S12).

Reliability

Inter-instrument reliability: A total of 6 articles examined the inter-instrument reliability of pedometer output obtained from 18 different devices [314, 315, 447, 449, 451, 457]. Many included articles examined the inter-instrument reliability of multiple devices in the same study (e.g. 2 pedometers [315], 5 pedometers [451], 10 pedometers [446, 449]). Inter-instrument reliability was examined by comparing pedometer outputs from two of the same model devices worn on the left and right hip [315, 449, 451, 457], on the left hip, right hip and middle back [447] and on the left and right hip and repeated with two further devices of the same model [446].

Three studies (1 examining the inter-instrument reliability of a single pedometer and 2 examining the inter-instrument reliability of multiple pedometers) identified that the majority of devices had acceptable levels of inter-instrument reliability (ICC ≥ 0.80) [446, 449, 457]. In the studies which examined the inter-instrument reliability of multiple devices, 8/10 pedometers [449] and 9/10 pedometers [446] achieved ICC ≥ 0.80. Using planned contrasts, Bassett and colleagues highlighted that no significant differences were observed between devices worn on the left and right hip [451]. Two studies investigated the effect of walking speed on inter-instrument reliability, highlighting that ICC values increased as speed increased [315, 447] (Additional file 1: Table S13).
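As an illustration of how an inter-instrument ICC of this kind can be obtained, the following is a minimal sketch only. The reviewed studies do not all report which ICC form was used, so the two-way random effects, absolute agreement, single measures form (often written ICC(2,1)) is an assumption here, as are the function name `icc_2_1` and the step counts, which are hypothetical and not taken from any reviewed study.

```python
def icc_2_1(scores):
    """ICC(2,1): two-way random effects, absolute agreement, single measures.

    `scores` is a list of rows, one row per participant, with one value
    per device (e.g. [left_hip_steps, right_hip_steps]).
    The choice of ICC form is an assumption made for illustration.
    """
    n = len(scores)        # number of participants
    k = len(scores[0])     # number of devices per participant
    grand = sum(sum(row) for row in scores) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(row[j] for row in scores) / n for j in range(k)]

    # Sums of squares from a two-way ANOVA without replication.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((v - grand) ** 2 for row in scores for v in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)                 # between participants
    ms_cols = ss_cols / (k - 1)                 # between devices
    ms_error = ss_error / ((n - 1) * (k - 1))   # residual

    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


# Hypothetical step counts from two same-model pedometers (left hip, right hip).
steps = [[1010, 1002], [1540, 1561], [980, 975], [1320, 1333], [1105, 1098]]
icc = icc_2_1(steps)
acceptable = icc >= 0.80  # threshold used in the reviewed studies
```

Because this form penalises systematic offsets between devices as well as random disagreement, it is a conservative choice when devices are intended to be used interchangeably.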

Test-retest reliability: A single study using a laboratory-based treadmill protocol identified that the Yamax Digiwalker SW-200 (Tokyo, Japan) had appropriate test-retest reliability (ICC > 0.80 and significant) at 7 out of 11 treadmill speeds (non-significant speeds = 4, 20, 22 and 26 km·h-1) [314].

A total of 6 articles examined the reliability of pedometer steps obtained over a specified measurement period [423, 427, 460,461,462,463], presenting the minimum number of days of pedometer measurement to reliably estimate PA behaviours. The minimum number of days of measurement required for a reliable estimate (i.e. ICC >0.8) of pedometer steps was 2-4 days (Additional file 1: Table S14) [423, 427, 460,461,462,463].

Sensitivity: In the only study of pedometer responsiveness to change, effect size was used to examine the meaningfulness of difference between means [464]. A large effect size (>0.8) was observed, suggesting that pedometers, in this study, were sensitive to change (Additional file 1: Table S15).
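To make the effect size threshold concrete, the sketch below computes a standardised mean difference between two measurement occasions. It assumes Cohen's d with a pooled standard deviation; the cited study may have used a different effect size formulation. The function name `cohens_d` and the daily step values are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev


def cohens_d(before, after):
    """Standardised mean difference (Cohen's d) using a pooled SD.

    Assumption: pooled-SD Cohen's d; other effect size definitions exist.
    """
    n1, n2 = len(before), len(after)
    s1, s2 = stdev(before), stdev(after)
    pooled_sd = sqrt(((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / (n1 + n2 - 2))
    return (mean(after) - mean(before)) / pooled_sd


# Hypothetical daily step counts before and after an intervention.
baseline = [5000, 6000, 7000]
follow_up = [7000, 8000, 9000]
d = cohens_d(baseline, follow_up)
large_effect = d > 0.8  # threshold for a large effect quoted in the text
```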

Heart rate monitors

Validity

Criterion validity: All 12 studies that examined the criterion validity of HRMs used unstructured, free-living protocols [80, 85, 87, 96, 99, 100, 102, 123, 304, 371, 465, 466]. The duration of monitoring for HRM ranged from 24 hours [102, 465] to 14 days [96, 371]. Two studies examined the validity of HRM determined physical activity levels (PAL) compared to DLW determined PAL. All remaining articles compared estimates of energy expenditure determined by HRM techniques with DLW determined energy expenditure. The flex heart rate methodology, which distinguishes between activity intensities based on heart rate versus VO2 calibration curves, was utilised in all studies using individual calibration curves. MPDs between HRM determined energy expenditure and DLW determined energy expenditure ranged from -60.8% to 19.7% across identified studies (Fig. 5), with no clear trend for over/under estimation apparent. For PAL, a slight trend in underestimation was apparent (-11.1 to -7.6) (Additional file 1: Table S16).
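For readers unfamiliar with the MPD values quoted above, the following sketch shows one way such a value can be derived. It assumes that MPD is the mean of per-participant differences expressed as a percentage of the criterion value; individual studies may instead compute it from group means. The function name and the energy expenditure values are hypothetical.

```python
def mean_percentage_difference(measured, criterion):
    """Mean of per-participant percentage differences relative to the criterion.

    Negative values indicate underestimation by the measure under test.
    Assumption: differences are averaged per participant, not computed
    from group means.
    """
    if not measured or len(measured) != len(criterion):
        raise ValueError("inputs must be non-empty and of equal length")
    diffs = [(m - c) / c * 100.0 for m, c in zip(measured, criterion)]
    return sum(diffs) / len(diffs)


# Hypothetical total energy expenditure values (kcal per day).
hrm_ee = [2400.0, 2600.0, 2000.0]  # heart rate monitor estimates
dlw_ee = [2500.0, 2500.0, 2500.0]  # doubly labelled water criterion
mpd = mean_percentage_difference(hrm_ee, dlw_ee)
```

Note that individual over- and underestimates can cancel in the mean, so an MPD close to zero does not by itself imply good agreement at the individual level.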

Concurrent validity: The concurrent validity of HRM determined energy expenditure [80, 467,468,469,470], PAL [80] and PA intensity [146, 174] was examined using a range of measures, including direct/indirect calorimetry [467, 469, 470], activity monitoring [80, 146, 174, 401] and measures of self-reported PA [80, 174, 468] (Additional file 1: Table S17). A slight trend in overestimation of energy expenditure and PAL was observed (Additional file 1: figure S5a). For PA intensities, MPDs were larger and more variable, with MPA underestimated and VPA overestimated. The MPD between HRM determined LIPA and LIPA determined by the Tritrac and MTI activity monitors fell outside the range for the presented forest plot, with values of +306.4% and +367.2%, respectively [146] (Additional file 1: figure S5a). No articles sourced through the data extraction reported on the reliability or responsiveness to change of HRM.

Combined sensors

Validity

Criterion validity: A total of 8 articles were identified that examined the criterion validity of multiple accelerometers [471,472,473,474] or accelerometers combined with gyroscopes [475] or HRMs [371, 476, 477]. The included studies had relatively small sample sizes, ranging from 3-31 participants. Studies primarily examined the effectiveness of data synthesis methodologies (i.e. Decision Tree Classification, Artificial Neural Networks, Support Vector Machine learning etc.) to identify specific postures/activities [471,472,473,474,475,476,477] or energy expenditure [371, 477]. Time spent in specific body postures/activity types tended to be underestimated from combined sensors when compared to direct observation (-33.3% to -3.2%; Fig. 6). In contrast, energy expenditure was overestimated by combined sensors when compared to DLW in free-living settings (13.0% to 26.8%) (Additional file 1: Table S18) [371].

Concurrent validity: Eleven studies examined the validity of combined accelerometry and HRM determined energy expenditure compared to whole room calorimetry [478,479,480] or indirect calorimetry [400, 477, 481,482,483,484,485,486] determined energy expenditure. No clear trend for under/overestimation was apparent, with combined sensors appearing to be relatively accurate in estimating energy expenditure when compared to indirect calorimetry in both structured (-13.8% to 31.1%) and unstructured (0.13%) [485] settings (Additional file 1: Table S19). No articles sourced through the data extraction reported on the reliability or responsiveness to change of combined sensors.

Discussion

To the authors’ knowledge, this is the first systematic literature review of reviews to simultaneously examine the methodological effectiveness of the majority of PA measures. The greatest quantity of information was available for self-reported measures of PA (198 data extraction points), followed by activity monitors (179 data extraction points), pedometers (52 data extraction points), HRMs (19 data extraction points) and combined sensors (18 data extraction points).

The criterion validity of measures was determined through the examination of energy expenditure via DLW and by direct observation of steps and PA behaviours. For accelerometry, although variability was lower, a substantial proportion of studies (44/54) underestimated energy expenditure compared to DLW when proprietary algorithms or count-to-activity thresholds were employed. Based on the amended forest plots for the criterion validity of measures of PA, a greater level of variability was apparent for self-reported measures compared to objective measures (Figs. 2–6). Limited data on the criterion validity of HRM and combined sensor determined energy expenditure were available. HRMs tended to underestimate DLW determined energy expenditure, while combined sensors often overestimated energy expenditure. Unfortunately, due to the lack of measures of variability, resulting in the absence of meta-analysis, it was not possible to describe the extent of differences between measures statistically. For step counts, both activity monitors and pedometers achieved high levels of criterion validity. When comparing the two, pedometers appeared to be less accurate than activity monitors at estimating step count, tending to underestimate steps when compared to direct observation. Activity monitors tended to slightly overestimate distance travelled, while time spent in each activity type (or posture) determined by both activity monitors and combined sensors was slightly underestimated when compared to direct observation (Fig. 3a and Fig. 6). For concurrent validity of all measures of PA, high levels of variability were observed across a wide range of activity behaviours. In particular, high levels of variability were apparent in the estimation of PA intensities, with VPA substantially overestimated in the majority of concurrent validations across all measures.
In summary, objective measures are less variable than recall based measures across all behaviours, but high levels of variability across behaviours are still apparent.

For activity monitors and pedometers, acceptable inter-instrument reliability was observed in the majority of studies. Variability for inter-instrument reliability across different activity monitors and pedometers was apparent, with some instruments demonstrating better reliability compared to others. However, a detailed examination of study methodology, device wear locations and activities performed is necessary when interpreting the inter-instrument reliability of pedometers and activity monitors.

A wide range of values was reported for the test-retest reliability of self-reported measures, with apparent trends for reduced levels of test-retest reliability as the duration of recall increased. Researchers must be cognisant of potential differences in test-retest reliability due to duration between administrations and between PA behaviours assessed within each tool when selecting a self-reported measure of PA. Moderate to strong test-retest reliability was observed for activity monitors in free-living environments. However, the reliability of activity monitors attenuated as the duration between measurements increased. As expected, the test-retest reliability of different devices varied, while intensity of activity often had a significant effect. The test-retest reliability of pedometer determined steps in a laboratory setting was high across the majority of speeds, but the reliability appeared to weaken at higher speeds (e.g. 20, 22 and 26 km·h-1). Although moderate to strong test-retest reliability of both pedometers and activity monitors was apparent, researchers should be aware of differences between models and devices when selecting a measure for use. Furthermore, consideration should be given to the duration between test and retest and the behaviour being assessed when considering test-retest reliability, as although a measure may be reliable for one output, it may not be reliable for all outputs.

When examining PA in free-living environments, it is essential that sufficient data is gathered to ensure a reliable estimate is obtained [7, 431]. By determining the inter- and intra-individual variability across days of measurement, researchers can define the number of days of monitoring required to reliably estimate such behaviours. For activity monitors and pedometers, analysis has been conducted to estimate the minimum number of days of measurement required to provide a reliable estimate of PA behaviours. For activity monitors, 2 days of measurement are recommended for a reliable estimate of steps per day, accelerometer counts per day and intermittent MVPA per day, 3 days for a reliable estimate of total PA and time spent in MVPA, and 6 days for a reliable estimate of continuous 10 minute bouts of MVPA. For pedometers, a minimum of 2-4 days of measurement was required to provide a reliable estimate of steps in older adults, while 2-5 days of measurement was required in adults. These findings highlight the importance of knowing what behaviours are to be examined prior to collecting objective data from free-living environments, to ensure that sufficient information is recorded to provide reliable estimates of the behaviours of interest.
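The link between day-to-day variability and the number of monitoring days can be sketched with the Spearman-Brown prophecy formula, which is commonly applied for this purpose. Its use here is an assumption, since the reviewed studies did not all follow the same approach. The function names and the single-day ICC values are hypothetical.

```python
import math


def reliability_of_mean(single_day_icc, days):
    """Reliability of the mean of `days` measurement days (Spearman-Brown)."""
    return days * single_day_icc / (1 + (days - 1) * single_day_icc)


def days_needed(single_day_icc, target_icc=0.80):
    """Smallest whole number of days whose mean reaches `target_icc`.

    Assumption: the Spearman-Brown prophecy formula applies, i.e. days are
    treated as parallel measurements of the same underlying behaviour.
    """
    if not (0 < single_day_icc < 1 and 0 < target_icc < 1):
        raise ValueError("ICC values must lie strictly between 0 and 1")
    ratio = (target_icc / (1 - target_icc)) * ((1 - single_day_icc) / single_day_icc)
    # Subtract a tiny tolerance so floating point noise (e.g. 4.000000000000001)
    # does not round an exact answer up to the next whole day.
    return math.ceil(ratio - 1e-9)


# Hypothetical single-day ICC of 0.5 for steps per day.
n_days = days_needed(0.5)
achieved = reliability_of_mean(0.5, n_days)
```

Under this formula, behaviours with greater day-to-day variability (a lower single-day ICC) require more days of monitoring to reach the same target reliability, which is consistent with the longer monitoring periods reported above for continuous bouts of MVPA.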

The responsiveness of measures to detect change over time was the least reported property of measures of PA. When evaluating interventions, or indeed evaluating changes in PA behaviours in longitudinal research, it is critical to utilise measures that can detect such changes. Although validity and reliability are requirements for sensitivity/responsiveness to change [5], this does not imply that a measure is responsive to change simply because it is valid and reliable. Responsiveness to change must be evaluated, and not assumed. Currently, the research on the responsiveness to change for all types of PA measurement is at best limited. Substantial investigation into the responsiveness of PA measures to detect change is required to ensure that measures employed in future intervention and longitudinal research can detect meaningful change.

Although validity, reliability and responsiveness to change are key when selecting a measure of PA and energy expenditure, other factors including feasibility and cost should be considered. For example, wearing several sensors around the body for a short period in a laboratory setting is often quite feasible, but prolonging the wear period for several days may be uncomfortable for participants, while reattachment of sensors may require specific and detailed training. The appropriateness of the measure for use in specific populations is critical. Activity monitors or HRMs may need to be attached to body locations that are visible and may be considered “embarrassing” for certain populations in free-living environments, likely resulting in lower compliance with wear protocols. Finally, while the cost of objective measures has reduced significantly and these measures are now feasible for inclusion in large scale data collections (e.g. UK Biobank study, HELENA study), worn devices can be expensive to use in large populations, especially if recording needs to be concurrent, requiring hundreds or thousands of devices. Although these issues are often the dominant determinants for researchers when selecting a measure of PA, it is critical that researchers consider selecting the measure with the best validity, reliability and responsiveness to change available to them; a larger dataset collected with less valid measures may not always be superior to a smaller dataset collected with more valid measures.

The findings of this review have highlighted the substantial quantity of research which has focused on the validity, reliability and responsiveness to change of measures of PA. A substantial number of review articles have been conducted on the measurement of PA in adult populations. The majority of such reviews were not systematic in nature. Of the systematic review articles identified, the methodological quality (as assessed by the AMSTAR quality assessment tool) was relatively poor, with 3 reviews considered low quality, 16 articles considered medium quality and 3 articles considered high quality. An obvious increase in the quantity of research using objective measures of PA over the past number of decades is apparent. Unfortunately, with the enormous quantity of research on the methodological effectiveness of PA measures comes extreme variability in study design, data processing and statistical analysis conducted. Such variability makes comparison between measurement types and specific measurement devices/tools extremely difficult. The sometimes questionable study designs and research questions in some of the existing published literature appear to stem from the reanalysis of “suitable” data, rather than from studies designed to collect data to answer a specific research question. The authors propose that to aid researchers in making informed decisions on the best available measure of PA, the development of “best practice” protocols for study design and data collection, analysis and synthesis is required, which can be employed across all measures, providing comparable information that is easy for researchers from outside of the field to digest. The authors also propose that any future undertaking of reviews on the measurement of PA follow best practice, and ensure that the reviews conducted are of the highest possible quality. Such improvements will provide researchers with the best available evidence for making a decision on which measure of PA to employ.

Strengths and limitations

This review of reviews had limitations that should be taken into account when considering the findings presented here. As this article reviewed existing literature reviews, and due to potential methodological errors within these reviews, it is likely that some relevant literature on the methodological effectiveness for measures of PA has been overlooked. Additionally, articles that have been published since the publication of each review will also have been overlooked. Due to the quantity of identified articles, and difficulties in contacting primary authors regarding articles published over the last 60 years, the primary data from these articles was not sourced. Although prior research has systematically reviewed the literature for accuracy of measures of PA, and some narrative reviews have compared the methodological effectiveness of different measures of PA, this is the first study to comprehensively examine and collate details on the validity, reliability and responsiveness to change of a range of measures of PA in adult populations. For researchers that are selecting a measure of PA, this will enable the comparison between different measures of PA within one article, rather than having to refer to a wide range of available literature that examines each single measure. Additionally, rather than focusing solely on information presented within each existing review of the literature, the original articles referred to within each review were sought and data was extracted independently.

Conclusion

In general, objective measures of PA demonstrate less variability in properties of methodological effectiveness than self-report measures. Although no “perfect” tool for the examination of PA exists, it is suggested that researchers aim to incorporate appropriate objective measures, specific to the behaviours of interest, when examining PA in adults in free-living environments. Other criteria beyond methodological effectiveness often influence tool selection, including cost and feasibility. However, researchers must be cognisant of the value of increased methodological effectiveness of any measurement method for PA in adults. Additionally, although a wealth of research exists in relation to the methodological effectiveness of PA measures, it is clear that the development of an appropriate and consistent approach to conducting research and reporting findings in this domain is necessary to enable researchers to easily compare findings across instruments.

References

Kohl HW, Craig CL, Lambert EV, Inoue S, Alkandari JR, Leetongin G, Kahlmeier S, Lancet Physical Activity Series Working Group. The pandemic of physical inactivity: global action for public health. Lancet. 2012;380:294–305.

Haskell WL, Lee I-M, Pate RR, Powell KE, Blair SN, Franklin BA, Macera CA, Heath GW, Thompson PD, Bauman A. Physical activity and public health: updated recommendation for adults from the American College of Sports Medicine and the American Heart Association. Circulation. 2007;116:1081–93.

Owen N, Healy GN, Matthews CE, Dunstan DW. Too much sitting: the population-health science of sedentary behavior. Exerc Sport Sci Rev. 2010;38:105–13.

Shephard RJ, Aoyagi Y. Measurement of human energy expenditure, with particular reference to field studies: an historical perspective. Eur J Appl Physiol. 2012;112:2785–815.

Warren JM, Ekelund U, Besson H, Mezzani A, Geladas N, Vanhees L. Assessment of physical activity - a review of methodologies with reference to epidemiological research: a report of the exercise physiology section of the European Association of Cardiovascular Prevention and Rehabilitation. Eur J Prev Cardiol. 2010;17:127–39.

Dishman RK, Washburn RA, Schoeller DA. Measurement of physical activity. Quest. 2001;53:295–309.

Trost SG, McIver KL, Pate RR. Conducting accelerometer-based activity assessments in field-based research. Med Sci Sports Exerc. 2005;37:S531–43.

Adams SA, Matthews CE, Ebbeling CB, Moore CG, Cunningham JE, Fulton J, Hebert JR. The effect of social desirability and social approval on self-reports of physical activity. Am J Epidemiol. 2005;161:389–98.

Sallis JF, Saelens BE. Assessment of physical activity by self-report: status, limitations, and future directions. Res Q Exerc Sport. 2000;71:1–14.

Neilson HK, Robson PJ, Friedenreich CM, Csizmadi I. Estimating activity energy expenditure: how valid are physical activity questionnaires? Am J Clin Nutr. 2008;87:279–91.

van Poppel MNM, Chinapaw MJM, Mokkink LB, van Mechelen W, Terwee CB. Physical Activity Questionnaires for Adults A Systematic Review of Measurement Properties. Sports Med. 2010;40:565–600.

Ainslie PN, Reilly T, Westerterp KR. Estimating Human Energy Expenditure: A Review of Techniques with Particular Reference to Doubly Labelled Water. Sports Med. 2003;33:683–98.

Bort-Roig J, Gilson N, Puig-Ribera A, Contreras R, Trost S. Measuring and Influencing Physical Activity with Smartphone Technology: A Systematic Review. Sports Med. 2014;44:671–86.

Freedson P, Miller K. Objective monitoring of physical activity using motion sensors and heart rate. Res Q Exerc Sport. 2000;71:21–9.

Tudor-Locke CE, Myers AM. Methodological considerations for researchers and practitioners using pedometers to measure physical (ambulatory) activity. Res Q Exerc Sport. 2001;72:1–12.

Prince SA, Adamo KB, Hamel ME, Hardt J, Gorber SC, Tremblay M. A comparison of direct versus self-report measures for assessing physical activity in adults: a systematic review. Int J Behav Nutr Phys Act. 2008;5:56.

Bassett DR Jr, Mahar MT, Rowe DA, Morrow JR Jr. Walking and measurement. Med Sci Sports Exerc. 2008;40:S529–36.

Kim Y, Park I, Kang M. Convergent validity of the international physical activity questionnaire (IPAQ): meta-analysis. Public Health Nutr. 2013;16:440–52.

Kwak L, Proper KI, Hagströmer M, Sjöström M. The repeatability and validity of questionnaires assessing occupational physical activity - a systematic review. Scand J Work Environ Health. 2011;37:6–29.

Bassett DR Jr. Validity and reliability issues in objective monitoring of physical activity. Res Q Exerc Sport. 2000;71:S30–6.

Bonomi AG, Westerterp KR. Advances in physical activity monitoring and lifestyle interventions in obesity: a review. Int J Obes. 2012;36:167–77.

Washburn RA. Assessment of physical activity in older adults. Res Q Exerc Sport. 2000;71:79–88.

Pennathur A, Magham R, Contreras LR, Dowling W. Daily living activities in older adults: Part I - a review of physical activity and dietary intake assessment methods. Int J Ind Ergon. 2003;32:389–404.

Meyer K, Stolz C, Rott C, Laederach-Hofmann K. Physical activity assessment and health outcomes in old age: how valid are dose-response relationships in epidemiologic studies? Eur Rev Aging Phys Act. 2009;6:7–17.

Murphy SL. Review of physical activity measurement using accelerometers in older adults: considerations for research design and conduct. Prev Med. 2009;48:108–14.

Kowalski K, Rhodes R, Naylor PJ, Tuokko H, MacDonald S. Direct and indirect measurement of physical activity in older adults: a systematic review of the literature. Int J Behav Nutr Phys Act. 2012;9:148.

Garatachea N, Torres Luque G, Gonzalez Gallego J. Physical activity and energy expenditure measurements using accelerometers in older adults. Nutr Hosp. 2010;25:224–30.

Gorman E, Hanson HM, Yang PH, Khan KM, Liu-Ambrose T, Ashe MC. Accelerometry analysis of physical activity and sedentary behavior in older adults: a systematic review and data analysis. Eur Rev Aging Phys Act. 2014;11:35–49.

Forsen L, Loland NW, Vuillemin A, Chinapaw MJM, van Poppel MNM, Mokkink LB, van Mechelen W, Terwee CB. Self-Administered Physical Activity Questionnaires for the Elderly A Systematic Review of Measurement Properties. Sports Med. 2010;40:601–23.

Cheung VH, Gray L, Karunanithi M. Review of Accelerometry for Determining Daily Activity Among Elderly Patients. Arch Phys Med Rehabil. 2011;92:998–1014.

Helmerhorst HJ, Brage S, Warren J, Besson H, Ekelund U. A systematic review of reliability and objective criterion-related validity of physical activity questionnaires. Int J Behav Nutr Phys Act. 2012;9:103.

Kowalski K, Rhodes R, Naylor PJ, Tuokko H, MacDonald S. Direct and indirect measurement of physical activity in older adults: a systematic review of the literature. Int J Behav Nutr Phys Act. 2012;9:148.

Plasqui G, Westerterp KR. Physical activity assessment with accelerometers: an evaluation against doubly labeled water. Obesity. 2007;15:2371–9.

Reiser LM, Schlenk EA. Clinical use of physical activity measures. J Am Acad Nurse Pract. 2009;21:87–94.

Centre for Reviews and Dissemination. Systematic reviews: CRD’s guidance for undertaking reviews in health care: Centre for Reviews and Dissemination; 2009.

Melanson EL Jr, Freedson PS, Blair S. Physical activity assessment: a review of methods. Crit Rev Food Sci Nutr. 1996;36:385–96.

Duncan MJ, Badland HM, Mummery WK. Applying GPS to enhance understanding of transport-related physical activity. J Sci Med Sport. 2009;12:549–56.

Shea BJ, Grimshaw JM, Wells GA, Boers M, Andersson N, Hamel C, Porter AC, Tugwell P, Moher D, Bouter LM. Development of AMSTAR: a measurement tool to assess the methodological quality of systematic reviews. BMC Med Res Methodol. 2007;7:10.

Berlin JE, Storti KL, Brach JS. Using activity monitors to measure physical activity in free-living conditions. Phys Ther. 2006;86:1137–45.

Butte NF, Ekelund U, Westerterp KR. Assessing physical activity using wearable monitors: measures of physical activity. Med Sci Sports Exerc. 2012;44:5–12.

Chen KY, Bassett DR. The technology of accelerometry-based activity monitors: current and future. Med Sci Sports Exerc. 2005;37:S490–500.

Corder K, Brage S, Ekelund U. Accelerometers and pedometers: methodology and clinical application. Curr Opin Clin Nutr Metab Care. 2007;10:597–603.

Davidson M, de Morton N. A systematic review of the Human Activity Profile. Clin Rehabil. 2007;21:151–62.

DeLany JP. Measurement of energy expenditure. Pediatric Blood and Cancer. 2012;58:129–34.

Haskell WL, Kiernan M. Methodologic issues in measuring physical activity and physical fitness when evaluating the role of dietary supplements for physically active people. Am J Clin Nutr. 2000;72:541s–50s.

Lamonte MJ, Ainsworth BE. Quantifying energy expenditure and physical activity in the context of dose response. Med Sci Sports Exerc. 2001;33:S370–8.

Lee PH, Macfarlane DJ, Lam TH, Stewart SM. Validity of the International Physical Activity Questionnaire Short Form (IPAQ-SF): a systematic review. Int J Behav Nutr Phys Act. 2011;8:115.

Levine JA. Measurement of energy expenditure. Public Health Nutr. 2005;8:1123–32.

Liu SP, Gao RX, Freedson PS. Computational Methods for Estimating Energy Expenditure in Human Physical Activities. Med Sci Sports Exerc. 2012;44:2138–46.

Lowe SA, Ólaighin G. Monitoring human health behaviour in one's living environment: A technological review. Med Eng Phys. 2014;36:147–68.

Mathie MJ, Coster AC, Lovell NH, Celler BG. Accelerometry: providing an integrated, practical method for long-term, ambulatory monitoring of human movement. Physiol Meas. 2004;25:R1–20.

Matthews CE. Calibration of accelerometer output for adults. Med Sci Sports Exerc. 2005;37:S512–22.

Pedišić Ž, Bauman A. Accelerometer-based measures in physical activity surveillance: current practices and issues. Br J Sports Med. 2014;49:219–23.

Pierannunzi C, Hu SS, Balluz L. A systematic review of publications assessing reliability and validity of the Behavioral Risk Factor Surveillance System (BRFSS), 2004-2011. BMC Med Res Methodol. 2013;13:49.

Plasqui G, Bonomi AG, Westerterp KR. Daily physical activity assessment with accelerometers: new insights and validation studies. Obes Rev. 2013;14:451–62.

Reilly JJ, Penpraze V, Hislop J, Davies G, Grant S, Paton JY. Objective measurement of physical activity and sedentary behaviour: review with new data. Arch Dis Child. 2008;93:614–9.

Ridgers ND, Fairclough S. Assessing free-living physical activity using accelerometry: Practical issues for researchers and practitioners. Eur J Sport Sci. 2011;11:205–13.

Schutz Y, Weinsier RL, Hunter GR. Assessment of free-living physical activity in humans: an overview of currently available and proposed new measures. Obes Res. 2001;9:368–79.

Shephard RJ. Limits to the measurement of habitual physical activity by questionnaires. Br J Sports Med. 2003;37:197–206.

Strath SJ, Kaminsky LA, Ainsworth BE, Ekelund U, Freedson PS, Gary RA, Richardson CR, Smith DT, Swartz AM, Council Clinical C, Council Cardiovasc Stroke N. Guide to the Assessment of Physical Activity: Clinical and Research Applications A Scientific Statement From the American Heart Association. Circulation. 2013;128:2259–79.

Tudor-Locke C, Rowe DA. Using cadence to study free-living ambulatory behaviour. Sports Med. 2012;42:381–98.

Tudor-Locke C, Williams JE, Reis JP, Pluto D. Utility of pedometers for assessing physical activity: convergent validity. Sports Med. 2002;32:795–808.

Tudor-Locke C, Williams JE, Reis JP, Pluto D. Utility of pedometers for assessing physical activity: construct validity. Sports Med. 2004;34:281–91.

Valanou EM, Bamia C, Trichopoulou A. Methodology of physical-activity and energy-expenditure assessment: A review. J Public Health. 2006;14:58–65.

Van Remoortel H, Giavedoni S, Raste Y, Burtin C, Louvaris Z, Gimeno-Santos E, Langer D, Glendenning A, Hopkinson NS, Vogiatzis I, et al. Validity of activity monitors in health and chronic disease: a systematic review. Int J Behav Nutr Phys Act. 2012;9:84.

Vanhees L, Lefevre J, Philippaerts R, Martens M, Huygens W, Troosters T, Beunen G. How to assess physical activity? How to assess physical fitness? Eur J Prev Cardiol. 2005;12:102–14.

Washburn RA, Heath GW, Jackson AW. Reliability and validity issues concerning large-scale surveillance of physical activity. Res Q Exerc Sport. 2000;71:104–13.

Welk GJ. Principles of design and analyses for the calibration of accelerometry-based activity monitors. Med Sci Sports Exerc. 2005;37:S501–11.

Westerterp KR. Assessment of physical activity: a critical appraisal. Eur J Appl Physiol. 2009;105:823–8.

Westerterp KR, Plasqui G. Physical activity and human energy expenditure. Curr Opin Clin Nutr Metab Care. 2004;7:607–13.

Yang CC, Hsu YL. A review of accelerometry-based wearable motion detectors for physical activity monitoring. Sensors. 2010;10:7772–88.

Andrew NE, Gabbe BJ, Wolfe R, Cameron PA. Evaluation of Instruments for Measuring the Burden of Sport and Active Recreation Injury. Sports Med. 2010;40:141–61.

Adams SA, Matthews CE, Ebbeling CB, Moore CG, Cunningham JE, Fulton J, et al. The effect of social desirability and social approval on self-reports of physical activity. Am J Epidemiol. 2005;161:389–98.

Barnard J, Tapsell LC, Davies P, Brenninger V, Storlien L. Relationship of high energy expenditure and variation in dietary intake with reporting accuracy on 7 day food records and diet histories in a group of healthy adult volunteers. Eur J Clin Nutr. 2002;56:358–67.

Besson H, Brage S, Jakes RW, Ekelund U, Wareham NJ. Estimating physical activity energy expenditure, sedentary time, and physical activity intensity by self-report in adults. Am J Clin Nutr. 2010;91:106–14.

Bonnefoy M, Normand S, Pachiaudi C, Lacour JR, Laville M, Kostka T. Simultaneous validation of ten physical activity questionnaires in older men: a doubly labeled water study. J Am Geriatr Soc. 2001;49:28–35.

Colbert LH, Matthews CE, Havighurst TC, Kim K, Schoeller DA. Comparative validity of physical activity measures in older adults. Med Sci Sports Exerc. 2011;43:867–76.

Conway JM, Irwin ML, Ainsworth BE. Estimating energy expenditure from the Minnesota Leisure Time Physical Activity and Tecumseh Occupational Activity questionnaires–a doubly labeled water validation. J Clin Epidemiol. 2002;55:392–9.

Conway JM, Seale JL, Jacobs DR, Irwin ML, Ainsworth BE. Comparison of energy expenditure estimates from doubly labeled water, a physical activity questionnaire, and physical activity records. Am J Clin Nutr. 2002;75:519–25.

Fuller Z, Horgan G, O'Reilly L, Ritz P, Milne E, Stubbs R. Comparing different measures of energy expenditure in human subjects resident in a metabolic facility. Eur J Clin Nutr. 2008;62:560–9.

Goran MI, Poehlman ET. Total energy expenditure and energy requirements in healthy elderly persons. Metabolism. 1992;41:744–53.

Irwin ML, Ainsworth BE, Conway JM. Estimation of energy expenditure from physical activity measures: determinants of accuracy. Obes Res. 2001;9:517–25.

Maddison R, Mhurchu CN, Jiang Y, Vander Hoorn S, Rodgers A, Lawes CM, Rush E. International physical activity questionnaire (IPAQ) and New Zealand physical activity questionnaire (NZPAQ): A doubly labelled water validation. Int J Behav Nutr Phys Act. 2007;4:62.

Masse LC, Fulton JE, Watson KL, Mahar MT, Meyers MC, Wong WW. Influence of body composition on physical activity validation studies using doubly labeled water. J Appl Physiol. 2004;96:1357–64.

Morio B, Ritz P, Verdier E, Montaurier C, Beaufrere B, Vermorel M. Critical evaluation of the factorial and heart-rate recording methods for the determination of energy expenditure of free-living elderly people. Br J Nutr. 1997;78:709–22.

Paul DR, Rhodes DG, Kramer M, Baer DJ, Rumpler WV. Validation of a food frequency questionnaire by direct measurement of habitual ad libitum food intake. Am J Epidemiol. 2005;162:806–14.

Rothenberg E, Bosaeus I, Lernfelt B, Landahl S, Steen B. Energy intake and expenditure: validation of a diet history by heart rate monitoring, activity diary and doubly labeled water. Eur J Clin Nutr. 1998;52:832–8.

Rush E, Valencia ME, Plank LD. Validation of a 7-day physical activity diary against doubly-labelled water. Ann Hum Biol. 2008;35:416–21.

Schulz LO, Harper IT, Smith CJ, Kriska AM, Ravussin E. Energy intake and physical activity in Pima Indians: comparison with energy expenditure measured by doubly‐labeled water. Obes Res. 1994;2:541–8.

Starling RD, Toth MJ, Matthews DE, Poehlman ET. Energy requirements and physical activity of older free-living African-Americans: a doubly labeled water study. J Clin Endocrinol Metab. 1998;83:1529–34.

Staten LK, Taren DL, Howell WH, Tobar M, Poehlman ET, Hill A, Reid PM, Ritenbaugh C. Validation of the Arizona Activity Frequency Questionnaire using doubly labeled water. Med Sci Sports Exerc. 2001;33:1959–67.

Koebnick C, Wagner K, Thielecke F, Moeseneder J, Hoehne A, Franke A, Meyer H, Garcia A, Trippo U, Zunft H. Validation of a simplified physical activity record by doubly labeled water technique. Int J Obes. 2005;29:302–9.

Leenders N, Sherman WM, Nagaraja H, Kien CL. Evaluation of methods to assess physical activity in free-living conditions. Med Sci Sports Exerc. 2001;33:1233–40.

Livingstone M, Strain J, Prentice A, Coward W, Nevin G, Barker M, Hickey R, McKenna P, Whitehead R. Potential contribution of leisure activity to the energy expenditure patterns of sedentary populations. Br J Nutr. 1991;65:145–55.

Lof M, Forsum E. Validation of energy intake by dietary recall against different methods to assess energy expenditure. J Hum Nutr Diet. 2004;17:471–80.

Lof M, Hannestad U, Forsum E. Assessing physical activity of women of childbearing age. Ongoing work to develop and evaluate simple methods. Food Nutr Bull. 2002;23:30–3.

Mahabir S, Baer DJ, Giffen C, Clevidence BA, Campbell WS, Taylor PR, Hartman TJ. Comparison of energy expenditure estimates from 4 physical activity questionnaires with doubly labeled water estimates in postmenopausal women. Am J Clin Nutr. 2006;84:230–6.

Philippaerts R, Westerterp K, Lefevre J. Doubly labelled water validation of three physical activity questionnaires. Int J Sports Med. 1999;20:284–9.

Racette SB, Schoeller DA, Kushner RF. Comparison of heart rate and physical activity recall with doubly labeled water in obese women. Med Sci Sports Exerc. 1995;27:126–33.

Rafamantanantsoa HH, Ebine N, Yoshioka M, Higuchi H, Yoshitake Y, Tanaka H, Saitoh S, Jones PJH. Validation of three alternative methods to measure total energy expenditure against the doubly labeled water method for older Japanese men. J Nutr Sci Vitaminol (Tokyo). 2002;48:517–23.

Reilly J, Lord A, Bunker V, Prentice A, Coward W, Thomas A, Briggs R. Energy balance in healthy elderly women. Br J Nutr. 1993;69:21–7.

Schulz S, Westerterp KR, Brück K. Comparison of energy expenditure by the doubly labeled water technique with energy intake, heart rate, and activity recording in man. Am J Clin Nutr. 1989;49:1146–54.

Schuit AJ, Schouten EG, Westerterp KR, Saris WH. Validity of the Physical Activity Scale for the Elderly (PASE): according to energy expenditure assessed by the doubly labeled water method. J Clin Epidemiol. 1997;50:541–6.

Seale JL, Klein G, Friedmann J, Jensen GL, Mitchell DC, Smiciklas-Wright H. Energy expenditure measured by doubly labeled water, activity recall, and diet records in the rural elderly. Nutrition. 2002;18:568–73.

Starling RD, Matthews DE, Ades PA, Poehlman ET. Assessment of physical activity in older individuals: a doubly labeled water study. J Appl Physiol. 1999;86:2090–6.

Walsh MC, Hunter GR, Sirikul B, Gower BA. Comparison of self-reported with objectively assessed energy expenditure in black and white women before and after weight loss. Am J Clin Nutr. 2004;79:1013–9.

Washburn RA, Jacobsen DJ, Sonko BJ, Hill JO, Donnelly JE. The validity of the Stanford Seven-Day Physical Activity Recall in young adults. Med Sci Sports Exerc. 2003;35:1374–80.

Aadahl M, Jørgensen T. Validation of a new self-report instrument for measuring physical activity. Med Sci Sports Exerc. 2003;35:1196–202.

Ainsworth B, Bassett DR Jr, Strath SJ, Swartz AM, O'Brien WL, Thompson RW, Jones DA, Macera CA, Kimsey CD. Comparison of three methods for measuring the time spent in physical activity. Med Sci Sports Exerc. 2000;32:S457–64.

Ainsworth BE, Leon AS, Richardson MT, Jacobs DR, Paffenbarger R. Accuracy of the college alumnus physical activity questionnaire. J Clin Epidemiol. 1993;46:1403–11.

Ainsworth BE, Richardson MT, Jacobs DR, Leon AS, Sternfeld B. Accuracy of recall of occupational physical activity by questionnaire. J Clin Epidemiol. 1999;52:219–27.

Arroll B, Jackson R, Beaglehole R. Validation of a three-month physical activity recall questionnaire with a seven-day food intake and physical activity diary. Epidemiology. 1991;2:296–9.

Baranowski T, Dworkin RJ, Cieslik CJ, Hooks P, Clearman DR, Ray L, Dunn JK, Nader PR. Reliability and validity of self report of aerobic activity: Family Health Project. Res Q Exerc Sport. 1984;55:309–17.

Bassett DR Jr, Cureton AL, Ainsworth BE. Measurement of daily walking distance-questionnaire versus pedometer. Med Sci Sports Exerc. 2000;32:1018–23.

Boon RM, Hamlin MJ, Steel GD, Ross JJ. Validation of the New Zealand physical activity questionnaire (NZPAQ-LF) and the international physical activity questionnaire (IPAQ-LF) with accelerometry. Br J Sports Med. 2008;44:741–6.

Brown WJ, Burton NW, Marshall AL, Miller YD. Reliability and validity of a modified self‐administered version of the Active Australia physical activity survey in a sample of mid‐age women. Aust N Z J Public Health. 2008;32:535–41.

Bull FC, Maslin TS, Armstrong T. Global physical activity questionnaire (GPAQ): nine country reliability and validity study. J Phys Act Health. 2009;6:790–804.

Bulley C, Donaghy M, Payne A, Mutrie N. Validation and modification of the Scottish Physical Activity Questionnaire for use in a female student population. Int J Health Promot Educ. 2005;43:117–24.

Busse M, Van Deursen R, Wiles CM. Real-life step and activity measurement: reliability and validity. J Med Eng Technol. 2009;33:33–41.

Carter-Nolan PL, Adams-Campbell LL, Makambi K, Lewis S, Palmer JR, Rosenberg L. Validation of physical activity instruments: Black Women’s Health Study. Ethn Dis. 2006;16:943–7.

Cartmel B, Moon T. Comparison of two physical activity questionnaires, with a diary, for assessing physical activity in an elderly population. J Clin Epidemiol. 1992;45:877–83.

Craig CL, Marshall AL, Sjostrom M, Bauman AE, Booth ML, Ainsworth BE, Pratt M, Ekelund U, Yngve A, Sallis JF, Oja P. International physical activity questionnaire: 12-country reliability and validity. Med Sci Sports Exerc. 2003;35:1381–95.

Davidson L, McNeill G, Haggarty P, Smith JS, Franklin MF. Free-living energy expenditure of adult men assessed by continuous heart-rate monitoring and doubly-labelled water. Br J Nutr. 1997;78:695–708.

De Cocker KA, De Bourdeaudhuij IM, Cardon GM. What do pedometer counts represent? A comparison between pedometer data and data from four different questionnaires. Public Health Nutr. 2009;12:74–81.

Deng H, Macfarlane D, Thomas G, Lao X, Jiang C, Cheng K, Lam T. Reliability and validity of the IPAQ-Chinese: the Guangzhou Biobank Cohort study. Med Sci Sports Exerc. 2008;40:303–7.

Dinger MK. Reliability and convergent validity of the National College Health Risk Behavior Survey physical activity items. J Health Educ. 2003;34:162–6.

Dinger MK, Behrens TK, Han JL. Validity and reliability of the International Physical Activity Questionnaire in college students. J Health Educ. 2006;37:337–43.

Dishman R, Steinhardt M. Reliability and concurrent validity for a 7-d recall of physical activity in college students. Med Sci Sports Exerc. 1988;20:14–25.

Duncan GE, Sydeman SJ, Perri MG, Limacher MC, Martin AD. Can sedentary adults accurately recall the intensity of their physical activity? Prev Med. 2001;33:18–26.

Ekelund U, Sepp H, Brage S, Becker W, Jakes R, Hennings M, Wareham NJ. Criterion-related validity of the last 7-day, short form of the International Physical Activity Questionnaire in Swedish adults. Public Health Nutr. 2006;9:258–65.

Fjeldsoe B, Marshall A, Miller Y. Measurement properties of the Australian women's activity survey. Med Sci Sports Exerc. 2009;41:1020–33.

Friedenreich CM, Courneya KS, Neilson HK, Matthews CE, Willis G, Irwin M, Troiano R, Ballard-Barbash R. Reliability and validity of the past year total physical activity questionnaire. Am J Epidemiol. 2006;163:959–70.

Grimm EK, Swartz AM, Hart T, Miller NE, Strath SJ. Comparison of the IPAQ-Short Form and accelerometry predictions of physical activity in older adults. J Aging Phys Act. 2012;20:64–79.

Hagstromer M, Ainsworth BE, Oja P, Sjostrom M. Comparison of a subjective and an objective measure of physical activity in a population sample. J Phys Act Health. 2010;7:541–50.

Hallal PC, Simoes E, Reichert FF, Azevedo MR, Ramos LR, Pratt M, Brownson RC. Validity and reliability of the telephone-administered International Physical Activity Questionnaire in Brazil. J Phys Act Health. 2010;7:402–9.

Hallal PC, Victora CG, Wells JCK, Lima RC, Valle NJ. Comparison of short and full-length International Physical Activity Questionnaires. J Phys Act Health. 2004;1:227–34.

Hekler EB, Buman MP, Haskell WL, Conway TL, Cain KL, Sallis JF, Saelens BE, Frank LD, Kerr J, King AC. Reliability and validity of CHAMPS self-reported sedentary-to-vigorous intensity physical activity in older adults. J Phys Act Health. 2012;9:225–36.

Hopkins WG, Wilson NC, Russell DG. Validation of the physical activity instrument for the Life in New Zealand national survey. Am J Epidemiol. 1991;133:73–82.

Hurtig-Wennlöf A, Hagströmer M, Olsson LA. The International Physical Activity Questionnaire modified for the elderly: aspects of validity and feasibility. Public Health Nutr. 2010;13:1847–54.

Kurtze N, Rangul V, Hustvedt B-E. Reliability and validity of the international physical activity questionnaire in the Nord-Trøndelag health study (HUNT) population of men. BMC Med Res Methodol. 2008;8:63.

Lee PH, Yu Y, McDowell I, Leung GM, Lam T, Stewart SM. Performance of the international physical activity questionnaire (short form) in subgroups of the Hong Kong Chinese population. Int J Behav Nutr Phys Act. 2011;8:1479–5868.

Leenders NY, Sherman WM, Nagaraja H. Comparisons of four methods of estimating physical activity in adult women. Med Sci Sports Exerc. 2000;32:1320–6.

Lemmer JT, Ivey FM, Ryan AS, Martel GF, Hurlbut DE, Metter JE, Fozard JL, Fleg JL, Hurley BF. Effect of strength training on resting metabolic rate and physical activity: age and gender comparisons. Med Sci Sports Exerc. 2001;33:532–41.

Macfarlane D, Chan A, Cerin E. Examining the validity and reliability of the Chinese version of the International Physical Activity Questionnaire, long form (IPAQ-LC). Public Health Nutr. 2011;14:443–50.

Macfarlane DJ, Lee CC, Ho EY, Chan K, Chan DT. Reliability and validity of the Chinese version of IPAQ (short, last 7 days). J Sci Med Sport. 2007;10:45–51.

Macfarlane DJ, Lee CC, Ho EY, Chan K-L, Chan D. Convergent validity of six methods to assess physical activity in daily life. J Appl Physiol. 2006;101:1328–34.

Mäder U, Martin BW, Schutz Y, Marti B. Validity of four short physical activity questionnaires in middle-aged persons. Med Sci Sports Exerc. 2006;38:1255–66.

Masse LC, Eason KE, Tortolero SR, Kelder SH. Comparing participants’ rating and compendium coding to estimate physical activity intensities. Meas Phys Educ Exerc Sci. 2005;9:1–20.

Matthews CE, Ainsworth BE, Hanby C, Pate RR, Addy C, Freedson PS, Jones DA, Macera CA. Development and testing of a short physical activity recall questionnaire. Med Sci Sports Exerc. 2005;37:986–94.

Matthews CE, Freedson PS. Field trial of a three-dimensional activity monitor: comparison with self report. Med Sci Sports Exerc. 1995;27:1071–8.

Matthews CE, Keadle SK, Sampson J, Lyden K, Bowles HR, Moore SC, Libertine A, Freedson PS, Fowke JH. Validation of a previous-day recall measure of active and sedentary behaviors. Med Sci Sports Exerc. 2013;45:1629–38.

Matthews CE, Shu X-O, Yang G, Jin F, Ainsworth BE, Liu D, Gao Y-T, Zheng W. Reproducibility and validity of the Shanghai Women’s Health Study physical activity questionnaire. Am J Epidemiol. 2003;158:1114–22.