Abstract

Background

Peripheral intravenous catheters (PIVCs) account for a mean of 38% of catheter-associated bloodstream infections (CABSI) with Staphylococcus aureus, which are preventable if deficiencies in best practice are addressed. No feasible and reliable surveillance tool currently exists that assesses all important areas of PIVC quality. We therefore aimed to develop an efficient tool for assessing overall PIVC quality and to test its feasibility and reliability.

Methods

The Peripheral Intravenous Catheter mini Questionnaire (PIVC-miniQ) consists of 16 items, summed into a score of problems regarding the insertion site, the condition of the dressing and equipment, documentation, and indication for use. In addition, it records background variables such as PIVC site, size and insertion environment. Two hospitals tested the PIVC-miniQ for feasibility and inter-rater agreement. Each PIVC was assessed twice, 2–5 min apart, by two independent raters. We calculated the intraclass correlation coefficient (ICC) for each hospital and overall. For each of the 16 items, we calculated negative agreement, positive agreement, absolute agreement, and Scott's pi.

Results

Sixty-three raters evaluated 205 PIVCs in 177 patients; each PIVC was assessed twice by independent raters, giving 410 PIVC observations in total. The ICC between raters was 0.678 for hospital A, 0.577 for hospital B, and 0.604 for the pooled data. Mean time for the bedside assessment of each PIVC was 1 min 40 s (SD 0.0007). The most frequent insertion site symptom was “pain and tenderness” (14.4%), whereas the most prevalent overall problem was lack of documentation of the PIVC (26.8%). About half of the PIVCs were placed near joints (wrist or antecubital fossa) or had been inserted under suboptimal conditions, i.e., in the emergency department or ambulance.

Conclusions

Our study highlights the need for PIVC quality surveillance at ward and hospital level and shows the PIVC-miniQ to be a reliable and time-efficient tool suitable for frequent point-prevalence audits.


Background

Peripheral intravenous catheters (PIVCs) are the most commonly used intravascular devices in hospitals, as up to 80% of hospitalized patients require intravenous (IV) therapy [1]. When managed properly, PIVCs are safe devices with little risk of serious complications. However, PIVC complications such as phlebitis, extravasation, infiltration and infection are common [2], and infected PIVCs account for a mean of 38% of catheter-associated bloodstream infections (CABSI) caused by Staphylococcus aureus (S. aureus) [1]. CABSI and sepsis are preventable yet serious complications with high mortality [3,4,5,6]; CABSI is estimated to occur in 0.5 cases per 1,000 PIVC catheter days [7]. Given how widely PIVCs are used in clinical practice, even a low incidence of CABSI can have a considerable impact [4, 8,9,10,11,12].
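To make the point about scale concrete, an incidence of 0.5 CABSI cases per 1,000 catheter days can be turned into an expected count for a given exposure. The sketch below is a back-of-the-envelope calculation only; the number of PIVC days is a hypothetical input, not a figure from this study, and the function name is our own.

```python
def expected_cabsi_cases(pivc_days: float, rate_per_1000_days: float = 0.5) -> float:
    """Expected number of CABSI cases for a given PIVC exposure.

    pivc_days: total PIVC catheter days (hypothetical input).
    rate_per_1000_days: incidence per 1,000 catheter days (0.5 as cited in the text).
    """
    if pivc_days < 0:
        raise ValueError("pivc_days must be non-negative")
    return pivc_days * rate_per_1000_days / 1000


# Hypothetical hospital with 100,000 PIVC days per year.
print(expected_cabsi_cases(100_000))  # 50.0
```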

A large international multicenter study, the One Million Global Catheters (OMG) study, revealed poor practices in the insertion and management of PIVCs [13, 14]. In the OMG study, PIVCs remained in use despite signs of local infection and pain, a large number of PIVCs were kept without indication, and securement dressings were often blood-stained or loose. Documentation of these complications was often lacking in patient records [13, 14]. All these factors have been shown to increase infection risk and compromise patient safety, and they do not comply with best practice [15].

Many screening tools are currently available for assessing PIVC phlebitis, and at least 71 phlebitis scales exist [16,17,18]. Recently, large studies have measured complications and risk factors related to PIVC care [19, 20]. However, no feasible and reliable quality surveillance tool exists that assesses all important aspects of PIVC quality, including signs of local infection and pain, redundant catheters, lack of documentation of the PIVC insertion date, and poor condition of the securement dressing and the equipment connected to the PIVC [18, 21, 22]. A simple quality surveillance tool that is reliable across raters and reflects deviations in overall PIVC quality could be used by ward or hospital managers to raise front-line personnel's awareness of these risks.

Health care organizations are challenged to create systems for continuous improvement [23]. In the present study, we used questions from comprehensive point-prevalence questionnaires [13, 21] to develop a quality assessment tool suitable for repeated measurements, reporting the rates and magnitude of undesirable PIVC practice. A further objective of the tool is to measure the effect of interventions targeted at improving overall PIVC quality at the ward or hospital level. We named the tool the Peripheral Intravenous Catheter mini Questionnaire (PIVC-miniQ) (Additional file 1: Figure S1). The PIVC-miniQ is designed for repeated point-prevalence audits to monitor and improve quality. The overall aim of this study was to assess the feasibility and inter-rater agreement of the PIVC-miniQ in clinical practice.

Methods

In this study, feasibility is defined as the time needed to complete the PIVC-miniQ and the number of missing values for each item [18]. Reliability is defined as inter-rater reliability, i.e., the instrument's ability to produce agreement between two raters who assess the same PIVC blinded to each other's ratings [24].

Study sites

Two hospitals in Mid-Norway participated. Hospital A is the local hospital for 100,000 inhabitants, and Hospital B is the local hospital for 280,000 inhabitants and the referral hospital for 700,000. Both hospitals use the same documentation systems. Although both hospitals have electronic patient records, observations regarding PIVCs and medication are recorded on paper-based observation charts that are scanned into the patient record after discharge.

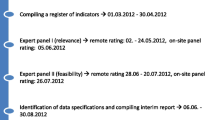

In 2015, Hospital A engaged front-line, research and education nurses to develop a short assessment tool for PIVC quality surveillance, based on their experience from participating in the OMG study [14]. The main purpose was local quality surveillance. Hospital A used the pre-version of the PIVC-miniQ in three point-prevalence audits. The poor baseline quality was presented at safety huddles on each ward, using Epidata time series to display the sum score for each PIVC and the mean number of problems across all PIVCs. These time series were also used in a 30-min in-house training session on the gap between desired and observed PIVC quality. In the second and third PIVC-miniQ audits, one and two months after these improvement actions, the measurements showed improved quality of PIVC care (Additional file 1: Figure S2).

As the PIVC-miniQ pre-version showed promise for enabling improvements in overall PIVC quality, we wanted to develop the tool further and test its feasibility and reliability in a larger setting; in 2017, a university hospital (Hospital B) was therefore included.

PIVC-miniQ

The PIVC-miniQ was revised based on Hospital A's experience, a search of the literature and guidelines for items reflecting PIVC quality [13, 16, 18, 21], and discussions with the IV team and expert nurses in Hospital B. The final items were selected by consensus in the group after thorough consideration. For a detailed overview of the development process, see Additional file 1: Table S1.

The final version of the PIVC-miniQ consists of four main assessment areas (Additional file 1: Figure S1). The first area covers phlebitis-related signs and symptoms at the insertion site (9 items, including pain or tenderness, redness, swelling, warmth, purulence and hardness of tissue) [25, 26]; signs are assessed by the rater (redness, swelling, etc.), whereas symptoms are reported by the patient (pain and tenderness). The second area covers the PIVC dressing and IV connection, which are related to PIVC failure [26] (5 items, including soiled dressing, dressing with loose or lifting edges, blood in the line and absence of the insertion date on the PIVC dressing). The remaining two areas concern the process of care: lack of documentation of PIVC insertion in the patient record (1 item), and whether there is an indication for use, defined as procedures requiring a PIVC even if it is not currently in use, for instance when the patient has an epidural for pain treatment or is on telemetry due to an irregular heart rhythm (1 item). This makes the PIVC-miniQ a hybrid of process dimensions (clinical practice and adherence to procedures) and outcome dimensions (PIVC-related signs and symptoms). Each problem present scores 1 point, and all problems are summed into an overall score (range 0–16) that can be used in point-prevalence audits of overall PIVC quality. An overall score of 0 indicates very good PIVC quality and should be the goal in clinical practice.
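The scoring rule above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the area keys, the ordering of items within each area and the use of `None` for an item that cannot be assessed (such as self-reported pain when the patient cannot respond, in which case the text later notes that the score is calculated out of 15) are our assumptions.

```python
from typing import Dict, Optional, Sequence, Tuple

# Number of yes/no items per assessment area, as described in the text (9 + 5 + 1 + 1 = 16).
AREA_SIZES = {
    "insertion_site": 9,
    "dressing_and_connection": 5,
    "documentation": 1,  # problem = insertion not documented in the patient record
    "indication": 1,     # problem = PIVC kept without an indication
}


def pivc_miniq_score(items: Dict[str, Sequence[Optional[bool]]]) -> Tuple[int, int]:
    """Return (sum score, number of items scored) for one PIVC.

    True  -> problem present (1 point)
    False -> problem absent (0 points)
    None  -> item could not be assessed; excluded from the score and the maximum
    """
    score = 0
    scored = 0
    for area, size in AREA_SIZES.items():
        answers = items[area]
        if len(answers) != size:
            raise ValueError(f"{area}: expected {size} items, got {len(answers)}")
        for answer in answers:
            if answer is None:
                continue
            scored += 1
            score += int(answer)
    return score, scored


# Hypothetical PIVC; the first insertion-site item is taken to be "pain and tenderness".
pivc = {
    "insertion_site": [True, True] + [False] * 7,
    "dressing_and_connection": [False, True, False, False, False],
    "documentation": [True],
    "indication": [False],
}
print(pivc_miniq_score(pivc))  # (4, 16)

# Patient unable to self-report: the pain item is left unassessed and the score is out of 15.
pivc["insertion_site"][0] = None
print(pivc_miniq_score(pivc))  # (3, 15)
```

Returning the number of scored items alongside the sum keeps a score "out of 15" distinguishable from a score out of 16 when results are pooled in an audit.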

In addition, two process dimensions of clinical practice were added as background variables based on clinical evidence: PIVC placement and PIVC insertion environment. PIVC insertion near joints is not recommended [27], and PIVCs inserted in emergency settings should be replaced [1]. We consider these variables important at the system level, as a high prevalence of these factors in a hospital may indicate questionable practice. These variables are not part of the PIVC-miniQ total score but are useful in both research and prevalence audits and can be analyzed separately to identify items of concern.
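A minimal sketch of how the background variables might be coded and reported separately from the sum score. It assumes the operational definitions given under Statistical analyses (antecubital fossa or wrist as near-joint sites; 14–18 G as large; prehospital or emergency department insertion as suboptimal); the class, function names and category labels are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical category labels; definitions follow the Statistical analyses section.
NEAR_JOINT_SITES = {"antecubital fossa", "wrist"}
SUBOPTIMAL_ENVIRONMENTS = {"prehospital", "emergency department"}
LARGE_GAUGE_RANGE = range(14, 19)  # 14-18 G inclusive; a lower gauge number means a larger catheter


@dataclass
class PivcBackground:
    site: str
    gauge: int
    insertion_environment: str


def background_flags(bg: PivcBackground) -> Dict[str, bool]:
    """Flag system-level concerns; these are NOT added to the PIVC-miniQ sum score."""
    return {
        "near_joint": bg.site.lower() in NEAR_JOINT_SITES,
        "large_gauge": bg.gauge in LARGE_GAUGE_RANGE,
        "suboptimal_environment": bg.insertion_environment.lower() in SUBOPTIMAL_ENVIRONMENTS,
    }


def prevalence(pivcs: List[PivcBackground]) -> Dict[str, float]:
    """Proportion of PIVCs carrying each flag, for reporting alongside the score."""
    if not pivcs:
        raise ValueError("need at least one PIVC")
    flags = [background_flags(p) for p in pivcs]
    return {key: sum(f[key] for f in flags) / len(flags) for key in flags[0]}


sample = [
    PivcBackground("wrist", 20, "ward"),
    PivcBackground("forearm", 18, "emergency department"),
]
print(prevalence(sample))
# {'near_joint': 0.5, 'large_gauge': 0.5, 'suboptimal_environment': 0.5}
```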

Given the aim of assessing inter-rater agreement, we made it mandatory to mark “yes” or “no” for each PIVC statement (the problem either exists or does not), since the proportions of positive and negative agreement between raters are important for evaluating an assessment instrument.

Selection of patients and raters

We collected PIVC characteristics from medical and surgical patients at the two hospitals who were able to provide verbal consent. A few patients in isolation because of infection were excluded, as isolation procedures are time-consuming and we wanted to measure the time spent on the questionnaire. Data were collected between September 2017 and March 2018 from a convenience sample of patients for PIVC site assessment. Every available patient with a PIVC on the wards, apart from those mentioned above, was assessed, which makes it a population-based sample.

For the inter-rater testing, we used nurse educators, bedside nurses and nursing students, and mixed the raters as far as possible. None of the raters had been involved in inserting the PIVC they assessed. This heterogeneous group was chosen purposively, as we wanted a realistic assessment of agreement between potential users of the PIVC-miniQ. Personnel received no training prior to data collection, only a brief explanation of the instrument. Raters in Hospital A did, however, have some experience from using the pre-version of the PIVC-miniQ. Hospital B had little experience with PIVC assessment but participated in the further revision of the PIVC-miniQ.

Testing procedure

Each PIVC was assessed twice, 2–5 min apart, by two independent raters in random order, i.e., each rater was sometimes the first and sometimes the second to assess a PIVC. The first rater explained the procedure to the patient, who was also given an information pamphlet about the study, and then asked for verbal consent. The assessment was then performed at the bedside while observing the PIVC site. Meanwhile, the second rater was assessing another patient's PIVC as first rater. Both raters recorded background variables to make sure the same PIVC was assessed and recorded. The time between assessments was kept as short as possible to ensure that the PIVC was in the same state at both assessments and that the patient had not been discharged or left for a procedure or diagnostic examination. After observing the PIVC at the bedside, the raters checked the paper-based record for documentation of the PIVC and for any indication for the PIVC that was not obvious at the bedside. Data were collected on paper, and the raters were instructed not to discuss or compare their ratings and, immediately after data collection, to put the data sheet in a folder in a locked cabinet pending entry into SPSS.

Statistical analyses

Descriptive statistics for raters, patients, PIVCs, scores on each item and time to complete the PIVC-miniQ are reported as frequencies (n) and proportions (%) for categorical data and as mean (SD) for continuous data. We used ANOVA to compare subgroups of raters. The distribution of the sum score for all PIVCs is shown in a histogram. Feasibility is presented as the number of missing values and the time to complete the PIVC-miniQ. Missing values on single items were singly imputed using the expectation maximization (EM) algorithm, with the 16 items of the PIVC-miniQ as predictors. Imputed values were then rounded to the nearest integer, 0 (problem does not exist) or 1 (problem exists). PIVCs located near joints, i.e., in the antecubital fossa or wrist, were defined as being in an undesirable anatomical location; large PIVCs were defined as 14–18 G; and PIVCs inserted under suboptimal conditions, i.e., prehospital or in emergency departments, were regarded as objectionable at the system level. For the sum scores, the degree of agreement was quantified as the intraclass correlation coefficient (ICC). All ICC formulas are ratios of variances [28]. We used a mixed model with the PIVC sum score as the dependent variable and PIVC and rater as crossed random effects. For the ICC, we used the correlation estimate given by Rabe-Hesketh and Skrondal (2012, pp. 437–441) [29]: ICC(rater) = var(PIVC) / [var(PIVC) + var(rater) + var(residual)], where each var(·) term is the corresponding variance component. For each of the 16 items, we calculated negative agreement, positive agreement, absolute agreement and Scott's pi. Negative agreement can be interpreted as the probability that the second rater classifies an item as “no” given that the first rater has classified it as “no” [29]. Positive agreement is interpreted correspondingly. Absolute agreement is the probability that two raters place the same item in the same category (yes/no). We also report Scott's pi, an agreement measure adjusted for chance [30].
We chose Scott's pi rather than Cohen's kappa for this purpose because, as pointed out by de Vet et al. [31], Cohen's kappa has weaknesses when there are multiple raters and is also affected by bias [30, 32]. Statistical analyses were carried out using Stata 15.1 and SPSS 25.
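The agreement statistics described above can be sketched for a single yes/no item and for the sum-score ICC. This is an illustration under stated assumptions, not the study's Stata/SPSS code: the variance components would come from the fitted mixed model (the values below are made up), and the specific-agreement formulas are the usual symmetric ones, 2a/(2a+b+c) and 2d/(2d+b+c), which we assume correspond to the definitions in the text. Scott's pi computes expected agreement from the pooled proportion of “yes” answers across both raters, whereas Cohen's kappa uses each rater's own marginal proportions.

```python
import math
from dataclasses import dataclass


def icc_rater(var_pivc: float, var_rater: float, var_residual: float) -> float:
    """ICC(rater) = var(PIVC) / [var(PIVC) + var(rater) + var(residual)].

    The variance components are assumed to come from a mixed model with
    PIVC and rater as crossed random effects.
    """
    total = var_pivc + var_rater + var_residual
    if total <= 0:
        raise ValueError("variance components must sum to a positive value")
    return var_pivc / total


@dataclass(frozen=True)
class ItemTable:
    """2x2 counts for one yes/no item rated by two raters.

    a: both "yes"; b: first "yes", second "no"; c: first "no", second "yes"; d: both "no".
    """
    a: int
    b: int
    c: int
    d: int

    @property
    def n(self) -> int:
        return self.a + self.b + self.c + self.d


def _safe_div(num: float, den: float) -> float:
    return num / den if den else math.nan  # undefined when the denominator is zero


def positive_agreement(t: ItemTable) -> float:
    return _safe_div(2 * t.a, 2 * t.a + t.b + t.c)


def negative_agreement(t: ItemTable) -> float:
    return _safe_div(2 * t.d, 2 * t.d + t.b + t.c)


def absolute_agreement(t: ItemTable) -> float:
    return _safe_div(t.a + t.d, t.n)


def scotts_pi(t: ItemTable) -> float:
    """Chance-corrected agreement using the pooled 'yes' proportion of both raters."""
    p_obs = absolute_agreement(t)
    p_yes = _safe_div(2 * t.a + t.b + t.c, 2 * t.n)
    p_exp = p_yes ** 2 + (1 - p_yes) ** 2
    return _safe_div(p_obs - p_exp, 1 - p_exp)


# Hypothetical counts for a low-prevalence item.
item = ItemTable(a=10, b=5, c=3, d=82)
print(round(positive_agreement(item), 3))  # 0.714
print(round(negative_agreement(item), 3))  # 0.953
print(round(absolute_agreement(item), 3))  # 0.92
print(round(scotts_pi(item), 3))           # 0.668
print(icc_rater(3.0, 1.0, 1.0))            # 0.6
```

With few “yes” ratings, the d cell dominates, so negative and absolute agreement stay high while positive agreement depends on a handful of cases, which matches the pattern reported for the low-prevalence insertion site items.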

Results

A total of 177 patients across two hospitals and 17 wards had their PIVCs screened by two raters using the PIVC-miniQ. The wards included orthopedic, gastroenterological, urological and thoracic surgery units as well as general medical units. Twenty-five patients had 2 PIVCs and three patients had 3 PIVCs. This resulted in 205 PIVCs, i.e., a total of 410 PIVC observations. We recruited 63 raters, ranging from experienced nurse educators to nursing students. The number of observations per rater ranged from 1 to 128 PIVCs (median 17). Subgroup analyses using ANOVA showed no significant differences between groups of raters (p = 0.289). Missing data occurred in 6.5% of the cases and in 0.4% of all item responses. The item with the most missing values was documentation, with 15 of 410 observations missing (3.6%).
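The two missing-data figures measure different things: the share of cases with at least one missing item, and the share of all individual item values that are missing. A minimal sketch of both, assuming a case corresponds to one PIVC observation stored as a list of item answers with `None` for a missing value (the data layout and function name are hypothetical):

```python
from typing import Optional, Sequence, Tuple


def missing_summary(observations: Sequence[Sequence[Optional[int]]]) -> Tuple[float, float]:
    """Return (share of observations with >= 1 missing item, share of all item values missing).

    Each observation is one rater's item answers for one PIVC; None marks a missing value.
    """
    if not observations:
        raise ValueError("no observations")
    n_values = sum(len(obs) for obs in observations)
    obs_with_missing = sum(any(v is None for v in obs) for obs in observations)
    missing_values = sum(v is None for obs in observations for v in obs)
    return obs_with_missing / len(observations), missing_values / n_values


# Hypothetical toy data (4 observations x 3 items) showing why the two shares differ.
toy = [[1, 0, None], [0, 0, 0], [None, None, 1], [1, 1, 1]]
print(missing_summary(toy))  # (0.5, 0.25)
```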

Mean time for the bedside assessment of each PIVC was 1 min 40 s (SD 0.0007; minimum 30 s, maximum 5 min), and mean time for finding the relevant information for the documentation item was 1 min 39 s (SD 0.0009; minimum 15 s, maximum 6 min). Descriptive statistics for raters, patients and PIVCs are presented in Table 1. Observations of all 410 PIVCs show that in Hospital B, 48.3% of PIVCs were inserted in undesirable anatomical locations near joints (wrist or antecubital fossa), and 46.8% of the PIVCs were larger than the recommended size, i.e., 20 G or larger. In Hospital A, 55.1% of the PIVCs remained in place despite insertion in objectionable environments. PIVC dwell time ranged from 0 to 9 days (median 1.0). The distributions of each of the 16 items are shown in Fig. 1 (data from all 410 observations). The most frequent insertion site symptom was “pain and tenderness” (14.4%), followed by “redness” (12.2%), whereas the most prevalent overall problem was lack of documentation of the PIVC (26.8%). Figure 2 shows the distribution of the PIVC-miniQ sum score for all PIVCs assessed (n = 410); the worst (highest) score recorded was 9, and the overall mean score was 2.04 (SD 1.55). As shown in Table 2, the ICC between raters was 0.678 for Hospital A, 0.577 for Hospital B and 0.604 for the pooled data.

Specific positive and negative agreement and the agreement coefficient Scott's pi for each item are presented in Table 3. “Pain and tenderness” was the insertion site item with the highest positive agreement between raters, followed by redness. Other signs of local infection had poor positive agreement, and these also had a low prevalence. Negative specific agreement (agreement on the absence of problems) was, however, consistently high for every item. The dressing and documentation items had good overall positive and negative agreement.

Discussion

We found the PIVC-miniQ to be feasible: it was quick to complete, taking only 1 min 40 s on average at the bedside, had little missing data, and was a reliable and efficient process measure for quality control. Consistency can be described as moderate to high, with an ICC of 0.604 for the sum score. Total and negative specific agreement for each item was excellent, but positive specific agreement was inconsistent, especially for items capturing problems with a low prevalence. However, as the sum score was consistent across individual raters, the PIVC-miniQ can be used to reliably measure changes in overall PIVC quality in point-prevalence audits and to improve patient safety.

Observer variation is a challenge in clinical quality assessment. Goransson et al. found that the proportion of PIVC phlebitis varied within and across measurement instruments [17]. Furthermore, most phlebitis scales consist of graded assessments, e.g., degree of redness or swelling, which makes their use in clinical settings questionable [17]. Even with “yes” and “no” answers in our study, we were surprised that items that seemed easy to assess objectively, such as “blood in line” and “PIVC insertion date in chart is lacking”, had a positive agreement of only 0.63 and 0.67, respectively.

Most studies have used a limited number of trained raters [16, 17, 21], yet high absolute agreement has remained elusive [16, 17]. Our use of multiple raters mirrored the real-life assessment situation, in which staff education and experience in PIVC assessment vary widely. Using multiple nurses most likely resulted in greater discrepancy between raters. Nevertheless, the overall PIVC-miniQ score in our study had good inter-rater reliability, and the nurses did observe problems with the PIVCs, even if they categorized them differently.

By using the PIVC-miniQ in clinical practice, we were able to show that many PIVCs are kept in place despite pain and redness around the insertion site and blood in the line, and that dressings are not changed despite being soiled with blood and fluids. This is disturbing given the short dwell time (average 1.8 days), but similar results were found in the OMG study, which assessed PIVCs worldwide [14]. Our study also revealed a high prevalence of stable patients with PIVCs inserted in emergency settings, despite the recommendation to replace such PIVCs as early as possible because of possible non-aseptic insertion [25, 33]. If the patient is still critically ill and there has been no time to replace the PIVC, such findings are inevitable, but a high prevalence at hospital level gives reason for concern. Additionally, a high proportion of PIVCs were placed near joints or were of large size, both considered undesirable practices linked to premature device failure [4, 9]. Finally, undocumented and redundant PIVCs were a problem, which seems to be a worldwide issue in need of improvement [14]. A high overall prevalence of PIVC problems is, however, a quality issue that must be addressed as a shared responsibility of all health personnel. These findings are clinically relevant: they describe quality problems that matter to patients and are well suited for quality improvement projects, which leaders can use to motivate and educate health personnel towards change and best practice [22, 34]. Frequent feedback on quality of care may contribute to improved patient safety [35]. Audits revealing low-quality PIVC care must prompt action and be discussed in safety huddles or in-house training sessions, and may contribute to improved quality over time [36], as shown in Hospital A.

Learning from deficiencies in patient care is essential for improving patient safety. Using the PIVC-miniQ in feedback loops for front-line health personnel can create a platform for learning that benefits patients. Investing in PIVC quality improvement is important, as healthcare-associated CABSI is preventable when best-practice knowledge is applied. Surveillance of the quality of ubiquitous healthcare devices such as PIVCs is therefore of utmost importance [3, 4, 9, 37,38,39,40]. Each item of the PIVC-miniQ indicates recommended practice and can therefore be used as data for training and education. The overall score can also be used to evaluate and compare results after interventions, between wards (or hospitals), and against PIVC standards. Future electronic systems should therefore allow the PIVC-miniQ to become part of patient safety surveillance programs, enabling continuous and automated PIVC quality surveillance.
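As a sketch of how repeated audits might be summarized for ward-level feedback, the function below groups sum scores by ward and audit round and reports the mean score and the share of PIVCs reaching the goal score of 0. The record layout, ward labels and function name are hypothetical; this does not describe the Epidata time series used in Hospital A.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, Tuple


def audit_summary(records: Iterable[Tuple[str, int, int]]) -> Dict[Tuple[str, int], Dict[str, float]]:
    """Summarize PIVC-miniQ sum scores per (ward, audit round).

    records: (ward, audit_round, sum_score) for each assessed PIVC.
    Returns the mean score, the share of PIVCs scoring 0 (the stated goal) and the count.
    """
    groups = defaultdict(list)
    for ward, audit_round, score in records:
        groups[(ward, audit_round)].append(score)
    return {
        key: {
            "mean_score": mean(scores),
            "share_zero": sum(s == 0 for s in scores) / len(scores),
            "n": len(scores),
        }
        for key, scores in sorted(groups.items())
    }


# Hypothetical audit data for one ward before (round 1) and after (round 2) an intervention.
data = [("Ward 1", 1, 3), ("Ward 1", 1, 1), ("Ward 1", 2, 0), ("Ward 1", 2, 1)]
for key, summary in audit_summary(data).items():
    print(key, summary)
```

Reporting the share of PIVCs with a score of 0 alongside the mean keeps the feedback tied to the goal stated for the tool, while the mean tracks the overall burden of problems.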

Some of the items required patient involvement (“Pain and tenderness” or “Where was the PIVC inserted”). The item “pain and tenderness” had the highest agreement between raters, suggesting that patients report consistently regardless of who asks them. Patient-reported outcome measures of this kind can raise awareness of, and engagement in, deviations in PIVC care and thereby break down a traditional professional culture [36]. Further, in today's healthcare, patients are discharged from hospital with intravascular devices in place [41]. Empowering patients and families to be alert to the quality of such common devices can improve patient safety. The PIVC-miniQ should therefore next be tested for inter-rater variability between nurses and patients or between nurses and relatives.

Strengths and limitations

Previous literature has described experienced raters as more consistent in their clinical judgements [42]. However, we consider our use of nurse educators, patients' primary nurses and nursing students for the inter-rater testing a strength. This heterogeneous group of nurses was chosen purposively to reflect the potential future users of the PIVC-miniQ [43]. Our use of nursing students as raters could, however, have been a weakness because of their potentially limited experience and competence, which may have lowered agreement. That said, the subgroup of nursing students was too small to detect any difference, and nursing students have been used successfully in other PIVC assessment studies [17]. Nevertheless, we found it appropriate, as neither nurses nor doctors receive systematic education in evaluating PIVCs during their training [44,45,46].

There were, however, differences between the hospitals, and Hospital A's previous experience with the pre-version of the PIVC-miniQ seems to have had a positive effect on reliability. We therefore recommend some guidance for raters before the PIVC-miniQ is used in daily practice.

Some items had low prevalence and low positive agreement. Low prevalence is known to produce lower positive agreement [47]. However, these items are extremely important for assessing PIVC quality. Moreover, the sum score was the main outcome in this study, and it showed moderate to high agreement.

Some patient groups were excluded from our study. Excluding isolated patients may have shortened the average time spent on each patient and thereby affected the feasibility estimate. In addition, because of the ethical constraints of the study, we have no information on patients unable to provide verbal consent, which may have skewed the prevalence of symptoms. However, when patients are unable to participate (e.g., because of reduced consciousness), the PIVC-miniQ can still be used without the self-reported item “pain and tenderness” by calculating the score out of 15.

Conclusion

Measuring patient safety requires validated tools with indicators that can be reported as rates [48]. This study found the PIVC-miniQ to be a reliable and feasible audit tool for measuring rates of PIVC problems. The PIVC-miniQ sum score allowed easy and efficient evaluation of the effects of interventions to improve PIVC quality. We thus provide a tool that can be used to monitor problems and measure continuous improvement in patient safety projects, easing the evaluation of progress. Further testing in other countries is needed.

Availability of data and materials

The datasets used during the current study are available from the corresponding author on reasonable request.

Change history

14 May 2020

An amendment to this paper has been published and can be accessed via the original article.

Abbreviations

- BSI: Bloodstream infection
- CABSI: Catheter-associated bloodstream infections
- EMS: Emergency Medical Services
- G: Gauge
- ICC: Intraclass correlation
- ICU: Intensive Care Unit
- IV: Intravenous
- OMG-study: One Million Global Catheters study
- PIVC: Peripheral intravenous catheter
- PIVC-miniQ: Peripheral Intravenous Catheter mini Questionnaire

References

Mermel LA. Short-term peripheral venous catheter-related bloodstream infections: a systematic review. Clin Infect Dis. 2017;65(10):1757–62.

Dychter SS, Gold DA, Carson D, Haller M. Intravenous therapy: a review of complications and economic considerations of peripheral access. J Infus Nurs. 2012;35(2):84–91.

Austin ED, Sullivan SB, Whittier S, Lowy FD, Uhlemann AC. Peripheral Intravenous Catheter Placement Is an Underrecognized Source of Staphylococcus aureus Bloodstream Infection. Open Forum Infect Dis. 2016;3(2):ofw072.

Pujol M, Hornero A, Saballs M, Argerich MJ, Verdaguer R, Cisnal M, Pena C, Ariza J, Gudiol F. Clinical epidemiology and outcomes of peripheral venous catheter-related bloodstream infections at a university-affiliated hospital. J Hosp Infect. 2007;67(1):22–9.

Sato A, Nakamura I, Fujita H, Tsukimori A, Kobayashi T, Fukushima S, Fujii T, Matsumoto T. Peripheral venous catheter-related bloodstream infection is associated with severe complications and potential death: a retrospective observational study. BMC Infect Dis. 2017;17(1):434.

Dick A, Liu H, Zwanziger J, Perencevich E, Furuya EY, Larson E, Pogorzelska-Maziarz M, Stone PW. Long-term survival and healthcare utilization outcomes attributable to sepsis and pneumonia. BMC Health Serv Res. 2012;12:432.

Maki DG, Kluger DM, Crnich CJ. The risk of bloodstream infection in adults with different intravascular devices: a systematic review of 200 published prospective studies. Mayo Clin Proc. 2006;81(9):1159–71.

Crnich CJ, Maki DG. The role of intravascular devices in Sepsis. Curr Infect Dis Rep. 2001;3(6):496–506.

Rhodes D, Cheng AC, McLellan S, Guerra P, Karanfilovska D, Aitchison S, Watson K, Bass P, Worth LJ. Reducing Staphylococcus aureus bloodstream infections associated with peripheral intravenous cannulae: successful implementation of a care bundle at a large Australian health service. J Hosp Infect. 2016;94(1):86–91.

Wallis MC, McGrail M, Webster J, Marsh N, Gowardman J, Playford EG, Rickard CM. Risk factors for peripheral intravenous catheter failure: a multivariate analysis of data from a randomized controlled trial. Infect Control Hosp Epidemiol. 2014;35(1):63–8.

Zingg W, Pittet D. Peripheral venous catheters: an under-evaluated problem. Int J Antimicrob Agents. 2009;34(Suppl 4):S38–42.

Tagalakis V, Kahn SR, Libman M, Blostein M. The epidemiology of peripheral vein infusion thrombophlebitis: a critical review. Am J Med. 2002;113(2):146–51.

Alexandrou E, Ray-Barruel G, Carr PJ, Frost S, Inwood S, Higgins N, Lin F, Alberto L, Mermel L, Rickard CM. International prevalence of the use of peripheral intravenous catheters. J Hosp Med. 2015;10(8):530–3.

Alexandrou E, Ray-Barruel G, Carr PJ, Frost SA, Inwood S, Higgins N, Lin F, Alberto L, Mermel L, Rickard CM, et al. Use of Short Peripheral Intravenous Catheters: Characteristics, Management, and Outcomes Worldwide. J Hosp Med. 2018;13(5). https://doi.org/10.12788/jhm.3039.

Zhang L, Cao S, Marsh N, Ray-Barruel G, Flynn J, Larsen E, Rickard CM. Infection risks associated with peripheral vascular catheters. J Infect Prev. 2016;17(5):207–13.

Marsh N, Mihala G, Ray-Barruel G, Webster J, Wallis MC, Rickard CM. Inter-rater agreement on PIVC-associated phlebitis signs, symptoms and scales. J Eval Clin Pract. 2015;21(5):893–9.

Goransson K, Forberg U, Johansson E, Unbeck M. Measurement of peripheral venous catheter-related phlebitis: a cross-sectional study. Lancet Haematol. 2017;4(9):e424–30.

Ray-Barruel G, Polit DF, Murfield JE, Rickard CM. Infusion phlebitis assessment measures: a systematic review. J Eval Clin Pract. 2014;20(2):191–202.

Simin D, Milutinovic D, Turkulov V, Brkic S. Incidence, severity and risk factors of peripheral intravenous cannula-induced complications: an observational prospective study. J Clin Nurs. 2018.

Aghdassi SJS, Schroder C, Gruhl D, Gastmeier P, Salm F. Point prevalence survey of peripheral venous catheter usage in a large tertiary care university hospital in Germany. Antimicrob Resist Infect Control. 2019;8:15.

Ahlqvist M, Berglund B, Nordstrom G, Klang B, Wiren M, Johansson E. A new reliable tool (PVC assess) for assessment of peripheral venous catheters. J Eval Clin Pract. 2010;16(6):1108–15.

Ray-Barruel G, Rickard CM. Helping nurses help PIVCs: decision aids for daily assessment and maintenance. Br J Nurs. 2018;27(8):S12–8.

Leape L, Berwick D, Clancy C, Conway J, Gluck P, Guest J, Lawrence D, Morath J, O'Leary D, O'Neill P, et al. Transforming healthcare: a safety imperative. Qual Saf Health Care. 2009;18(6):424–8.

Portney LG, Watkins MP. Foundations of clinical research. 3rd ed. New Jersey: Pearson Education, Inc.; 2009.

O'Grady NP, Alexander M, Burns LA, Dellinger EP, Garland J, Heard SO, Lipsett PA, Masur H, Mermel LA, Pearson ML, et al. Guidelines for the prevention of intravascular catheter-related infections. Clin Infect Dis. 2011;52(9):e162–93.

Rickard CM, Webster J, Wallis MC, Marsh N, McGrail MR, French V, Foster L, Gallagher P, Gowardman JR, Zhang L, et al. Routine versus clinically indicated replacement of peripheral intravenous catheters: a randomised controlled equivalence trial. Lancet. 2012;380(9847):1066–74.

Capdevila JA, Guembe M, Barberan J, de Alarcon A, Bouza E, Farinas MC, Galvez J, Goenaga MA, Gutierrez F, Kestler M, et al. 2016 expert consensus document on prevention, diagnosis and treatment of short-term peripheral venous catheter-related infections in adult. Rev Esp Quimioter. 2016;29(4):230–8.

De Vet HC, Terwee CB, Mokkink LB, Knol DL. Measurement in medicine: a practical guide. 8th ed. Cambridge, United Kingdom: Cambridge University Press; 2017.

Rabe-Hesketh S, Skrondal A. Multilevel and longitudinal modeling using Stata. 3rd ed. Texas: Stata Press Publication; 2012.

Feinstein AR, Cicchetti DV. High agreement but low kappa: I. The problems of two paradoxes. J Clin Epidemiol. 1990;43(6):543–9.

de Vet HC, Mokkink LB, Terwee CB, Hoekstra OS, Knol DL. Clinicians are right not to like Cohen's kappa. BMJ. 2013;346:f2125.

Byrt T, Bishop J, Carlin JB. Bias, prevalence and kappa. J Clin Epidemiol. 1993;46(5):423–9.

Guembe M, Perez-Granda MJ, Capdevila JA, Barberan J, Pinilla B, Martin-Rabadan P, Bouza E, Group NS. Nationwide study on the use of intravascular catheters in internal medicine departments. J Hosp Infect. 2015;90(2):135–41.

Ray-Barruel G, Ullman AJ, Rickard CM, Cooke M. Clinical audits to improve critical care: part 2: analyse, benchmark and feedback. Aust Crit Care. 2018;31(2):106–9.

Kohn LT, Corrigan JM, Donaldson MS. To err is human: building a safer health system. Washington, DC: National Academy Press; 2000.

Gandhi TK, Kaplan GS, Leape L, Berwick DM, Edgman-Levitan S, Edmondson A, Meyer GS, Michaels D, Morath JM, Vincent C, et al. Transforming concepts in patient safety: a progress report. BMJ Qual Saf. 2018.

Morris AK, Russell CD. Enhanced surveillance of Staphylococcus aureus bacteraemia to identify targets for infection prevention. J Hosp Infect. 2016;93(2):169–74.

Mylotte JM, McDermott C. Staphylococcus aureus bacteremia caused by infected intravenous catheters. Am J Infect Control. 1987;15(1):1–6.

Trinh TT, Chan PA, Edwards O, Hollenbeck B, Huang B, Burdick N, Jefferson JA, Mermel LA. Peripheral venous catheter-related Staphylococcus aureus bacteremia. Infect Control Hosp Epidemiol. 2011;32(6):579–83.

Thomas MG, Morris AJ. Cannula-associated Staphylococcus aureus bacteraemia: outcome in relation to treatment. Intern Med J. 2005;35(6):319–30.

Webster J, Northfield S, Larsen EN, Marsh N, Rickard CM, Chan RJ. Insertion site assessment of peripherally inserted central catheters: inter-observer agreement between nurses and inpatients. J Vasc Access. 2018;19(4):370–4.

Mokkink LB, Terwee CB, Gibbons E, Stratford PW, Alonso J, Patrick DL, Knol DL, Bouter LM, de Vet HC. Inter-rater agreement and reliability of the COSMIN (COnsensus-based standards for the selection of health status measurement instruments) checklist. BMC Med Res Methodol. 2010;10:82.

Mokkink LB, Terwee CB, Knol DL, Stratford PW, Alonso J, Patrick DL, Bouter LM, de Vet HC. The COSMIN checklist for evaluating the methodological quality of studies on measurement properties: a clarification of its content. BMC Med Res Methodol. 2010;10:22.

Carr PJ, Glynn RW, Dineen B, Devitt D, Flaherty G, Kropmans TJ, Kerin M. Interns' attitudes to IV cannulation: a KAP study. Br J Nurs. 2011;20(4):S15–20.

Cicolini G, Simonetti V, Comparcini D, Labeau S, Blot S, Pelusi G, Di Giovanni P. Nurses' knowledge of evidence-based guidelines on the prevention of peripheral venous catheter-related infections: a multicentre survey. J Clin Nurs. 2014;23(17–18):2578–88.

Simonetti V, Comparcini D, Miniscalco D, Tirabassi R, Di Giovanni P, Cicolini G. Assessing nursing students' knowledge of evidence-based guidelines on the management of peripheral venous catheters: a multicentre cross-sectional study. Nurse Educ Today. 2018;73:77–82.

de Vet HCW, Dikmans RE, Eekhout I. Specific agreement on dichotomous outcomes can be calculated for more than two raters. J Clin Epidemiol. 2017;83:85–9.

Pronovost PJ, Miller MR, Wachter RM. Tracking progress in patient safety: an elusive target. JAMA. 2006;296(6):696–9.

Acknowledgements

The authors would like to acknowledge the nurses at hospitals A and B for their help with data collection. We also thank the quality managers Frode Strømman (hospital A) and Torstein Rønningen (hospital B) for their help with the design of the PIVC-miniQ.

Funding

The project was funded by the Liaison Committee for Education, Research and Innovation in Central Norway. The funding source was not involved in the design of the study, the statistical analyses or the interpretation of the results. The researchers were independent of the funder.

Author information

Contributions

LHH, LTG and KHG developed the final version of the PIVC-miniQ. LHH and LTG were responsible for data collection and for writing the article. SL performed the statistical analyses. ES, JKD and CR mentored the writing process. BR and LTG developed the preliminary version of the PIVC-miniQ and used it in point-prevalence surveys. All authors have read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

The regional ethical committee considered this project to be a health quality research project among patients aged 18 years or older (2017/583/REK midt). The study was also approved by the Norwegian Centre for Research Data (NSD) (project number 55096). Because the study was regarded as a health quality research project and no directly identifiable patient information was collected, the NSD considered informed verbal consent adequate. The patients received verbal and written information about the study, and verbal informed consent was obtained from each patient before the PIVC was assessed. Patient consent was registered in a separate screening log.

PIVCs were not screened if the patient was unstable or in need of extra surveillance, was under isolation precautions for infection control, had cognitive impairment (inability to provide informed consent), or declined consent. We were not allowed to record any patient-identifying characteristics such as name, birthdate or hospital ID number (2017/583/REK midt, NSD project number 55096), nor to collect any data on patients who did not participate. All data are thus anonymous and cannot be traced back to the patient. The ethical approval also required rater #2 to ensure that appropriate action was taken on any PIVC-related signs and symptoms found.

Consent for publication

Not applicable.

Competing interests

None declared.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Additional file

Additional file 1:

Table S1. Overview of the development process of the PIVC-miniQ. Figure S1. PIVC-miniQ. Figure S2. Results from prevalence surveys using the preliminary version of the PIVC-miniQ. (DOCX 83 kB)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Høvik, L.H., Gjeilo, K.H., Lydersen, S. et al. Monitoring quality of care for peripheral intravenous catheters; feasibility and reliability of the peripheral intravenous catheters mini questionnaire (PIVC-miniQ). BMC Health Serv Res 19, 636 (2019). https://doi.org/10.1186/s12913-019-4497-z

DOI: https://doi.org/10.1186/s12913-019-4497-z