Abstract

Background

To develop a regression neural network that reconstructs lesion probability maps from Magnetic Resonance Fingerprinting using echo-planar imaging (MRF-EPI), in addition to \(T_1\) and \({T_2}^*\) maps and normal-appearing white matter (NAWM) and gray matter (GM) probability maps.

Methods

We performed MRF-EPI measurements in 42 patients with multiple sclerosis and 6 healthy volunteers at two sites. A U-net was trained to reconstruct denoised and distortion-corrected \(T_1\) and \({T_2}^*\) maps and, additionally, to generate NAWM, GM, and white matter (WM) lesion probability maps.

Results

WM lesions were predicted with a Dice coefficient of \(0.61\pm 0.09\) and a lesion detection rate of \(0.85\pm 0.25\) at a binarization threshold of 33%. The same network also yielded accurate \(T_1\) and \({T_2}^*\) times, with relative deviations of 5.2% and 5.1%, and average Dice coefficients of \(0.92\pm 0.04\) for NAWM and \(0.91\pm 0.03\) for GM after binarization with a threshold of 80%.

Conclusion

Deep learning (DL) is a promising tool for predicting lesion probability maps in a fraction of the time required by conventional processing. These maps might be of clinical interest for WM lesion analysis in MS patients.


Background

Assessment and segmentation of white matter (WM) lesions is an important step in analyzing and tracking diseases such as multiple sclerosis (MS). WM lesions can be graded on MRI images, and such grading has shown good correlation with symptom development and with clinical subtypes of MS. [1, 2] Lesion probability mapping is a method to differentiate between groups of WM lesions, as these groups correspond to different ischemic components and to neurodegeneration during disease progression. [3,4,5,6] Additionally, WM lesions exhibit increased \(T_1\), \(T_2\), and \({T_2}^*\) relaxation times, and multiple quantitative approaches have therefore shown advantages in lesion detection, grading, and classification. [7,8,9] In particular, Magnetic Resonance Fingerprinting (MRF) has been demonstrated in a variety of applications for simultaneously quantifying multiple relaxation times at clinically acceptable scan times. In conventional MRF, thousands of highly undersampled images are acquired to produce a unique signal evolution (fingerprint) in each voxel, and these fingerprints are compared voxel-wise with a pre-calculated dictionary. [10, 11] Rieger et al. proposed an MRF method to quantify \(T_1\) and \({T_2}^*\) with an echo-planar imaging (EPI) readout, [12] which showed promising results in renal and neural applications. [13,14,15,16] Because this method uses only conventional undersampling factors, the magnitude data are only slightly corrupted, which reduces reconstruction time and increases robustness. However, a major drawback of MRF is the tradeoff between reconstruction time and accuracy.
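The voxel-wise dictionary comparison can be illustrated with a minimal Python/NumPy sketch of matching by maximum normalized inner product. The signal model `toy_signal` is a hypothetical placeholder (saturation recovery times \({T_2}^*\) decay times the sine of the scaled flip angle), not the Bloch simulation of the MRF-EPI sequence; the parameter ranges and the 5% grid spacing follow the Methods section, while the function names and the acquisition schedule are assumptions for illustration.

```python
import numpy as np

def geometric_grid(start, stop, step=0.05):
    """Grid from start up to stop, each value `step` (here 5%) larger than the previous."""
    n = int(np.floor(np.log(stop / start) / np.log1p(step))) + 1
    return start * (1.0 + step) ** np.arange(n)

def toy_signal(t1, t2s, b1, flip_deg, te, tr):
    """HYPOTHETICAL signal model: saturation recovery x T2* decay x sin(scaled flip angle).
    A placeholder for a proper Bloch/EPG simulation of the MRF-EPI sequence."""
    alpha = np.deg2rad(flip_deg) * b1
    return np.sin(alpha) * (1.0 - np.exp(-tr / t1)) * np.exp(-te / t2s)

def build_dictionary(flip_deg, te, tr):
    """Unit-norm fingerprints for every (T1, T2*, B1 scale) combination."""
    t1 = geometric_grid(300.0, 3500.0)             # ms, 5% steps
    t2s = geometric_grid(10.0, 2500.0)             # ms, 5% steps
    b1 = np.round(np.arange(0.6, 1.41, 0.1), 1)    # flip angle scale factor 0.6-1.4
    grid = np.meshgrid(t1, t2s, b1, indexing="ij")
    params = np.stack([g.ravel() for g in grid], axis=1)   # (n_atoms, 3)
    atoms = toy_signal(params[:, :1], params[:, 1:2], params[:, 2:3], flip_deg, te, tr)
    atoms /= np.linalg.norm(atoms, axis=1, keepdims=True)
    return atoms, params

def match(signals, atoms, params, chunk=64):
    """Return (T1, T2*, B1) of the best-matching atom for each row of `signals`."""
    out = np.empty((signals.shape[0], 3))
    for i in range(0, signals.shape[0], chunk):
        # Atoms are unit-norm, so the argmax of the inner product does not depend on
        # the voxel's overall signal scale (e.g. proton density).
        scores = np.abs(signals[i:i + chunk] @ atoms.T)
        out[i:i + chunk] = params[np.argmax(scores, axis=1)]
    return out
```

Processing voxels in chunks keeps the score matrix small; with a realistic signal model, the matching step itself stays the same and only `toy_signal` would change.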

Deep learning (DL) has entered the field of MRI and achieved excellent results in data processing in terms of accuracy, precision, and speed, increasingly outperforming conventional algorithms. Previous studies suggest that convolutional neural networks (CNNs) can solve high-dimensional problems with excellent accuracy and in a short time for denoising, distortion correction, segmentation, classification, and reconstruction. [17,18,19,20,21,22,23] A promising architecture is the U-net, which is versatile and has been applied to both segmentation and regression tasks. [24,25,26] In MRF especially, the reconstruction of the large amount of acquired data can be improved and accelerated using different network architectures, such as CNNs and fully convolutional networks. [27,28,29,30,31,32] In previous work, a CNN was used for denoising, distortion correction, reconstruction, and the generation of normal-appearing white matter (NAWM) and gray matter (GM) probability maps, yielding results comparable to conventional methods in a fraction of the time. [16] The proposed architecture combined several post-processing tasks, making the application fast and easy. However, WM lesions still have to be segmented for further analysis, which is time-consuming and suffers from high intra- and inter-observer variability. [33] To overcome these limitations of manual segmentation, different DL architectures have been used, yielding Dice coefficients ranging from 0.48 to 0.95 for WM lesion segmentation. [33,34,35,36] Furthermore, a recent publication showed that regression networks that generate distance maps of the lesions might improve the WM lesion segmentation process [37]. Such maps could provide more information about lesion geometry, structure, and changes, similar to lesion probability mapping. [2, 3, 38]

In this work, we use the previously reported U-net [16] to predict WM lesion probability maps by training the CNN with manually annotated binary lesion masks, while combining several processing steps in a single network.

Methods

Data

As previously reported [16], MRF-EPI data were acquired in 6 healthy subjects and 18 patients with WM lesions on a 3T scanner (Magnetom Skyra, Siemens Healthineers; site 1) and in 24 patients with WM lesions on a 3T scanner (Magnetom Prisma, Siemens Healthineers; site 2). The sequence parameters for both scanners were: FOV = 240 \(\times\) 240 mm, in-plane resolution = \(1\times 1\,\mathrm{mm}^2\), slice thickness = 2 mm, GRAPPA factor = 3, partial Fourier = 5/8, varying flip angle \(\alpha\) (34–86\(^\circ\)), TE (16–76.5 ms), and TR (3530–6370 ms). At site 2, simultaneous multi-slice imaging was additionally used with an acceleration factor of 3.

CNN

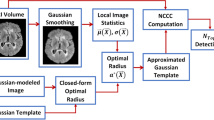

A U-net (Fig. 1a) was used for denoising, distortion correction, and reconstruction of the \(T_1\) and \({T_2}^*\) maps, and additionally for generating the NAWM, GM, and lesion probability maps. Prior to matching, the MRF-EPI data were denoised using Marchenko–Pastur Principal Component Analysis (MPPCA) [39]. The \(T_1\) and \({T_2}^*\) maps used for training the network were then reconstructed by pattern matching against a dictionary covering \(T_1\) times of 300–3500 ms and \({T_2}^*\) times of 10–2500 ms in steps of 5%, and a flip angle scale factor of 0.6–1.4 in steps of 0.1 to correct for \(B_1^+\) inhomogeneities. Rigid registration with B-spline interpolation was performed from the undistorted \(T_1\) map to a \(T_2\)-weighted image that was additionally acquired in this protocol, using the Advanced Normalization Tools (ANT). [40] The NAWM and GM maps were generated from the distortion-corrected \(T_1\) maps using SPM (Statistical Parametric Mapping) [41], with probabilities between 0 and 100%. WM lesions were segmented manually on the FLAIR data by an expert radiologist. To assess intra-observer variability, the lesions of ten patients were segmented twice, at least one week apart, and the mean Dice coefficient between the two annotations was calculated. The manually annotated binary lesion masks were used as the fifth training output of the CNN. The training input was always the 35 magnitude MRF-EPI images. Two patients from site 1 and three patients from site 2 were used as test data, and the same number as validation data. The network was trained patch-wise using 64 random patches per slice with a patch size of \(64 \times 64\) voxels, a mini-batch size of 64, 100 training epochs, and a learning rate of \(10^{-4}\). To compensate for the small overall volume of the lesions compared with the whole brain, slices containing white matter lesions with a minimum volume of 100 ml were augmented by a factor of five.
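The patch-wise training set described above can be sketched as follows. This is a minimal Python/NumPy illustration, not the authors' MATLAB code: it assumes each slice is stored as an `(H, W, channels)` array (35 input channels, 5 target channels), that the caller supplies a per-slice lesion volume, and that the five-fold augmentation is realized by drawing the 64 random patches five times for qualifying slices. The function names are hypothetical.

```python
import numpy as np

def sample_patches(inputs, targets, rng, n_patches=64, size=64):
    """Draw n_patches random size x size crops from one slice.

    inputs: (H, W, C_in), e.g. the 35 MRF-EPI magnitude images.
    targets: (H, W, C_out), e.g. the 5 output maps. Both use the same crop positions.
    """
    h, w = inputs.shape[:2]
    rows = rng.integers(0, h - size + 1, n_patches)
    cols = rng.integers(0, w - size + 1, n_patches)
    x = np.stack([inputs[r:r + size, c:c + size] for r, c in zip(rows, cols)])
    t = np.stack([targets[r:r + size, c:c + size] for r, c in zip(rows, cols)])
    return x, t

def build_training_set(slices, lesion_volume_ml, rng, min_volume_ml=100.0, factor=5):
    """Patch set in which slices with enough lesion volume are sampled `factor` times."""
    xs, ts = [], []
    for (inputs, targets), volume in zip(slices, lesion_volume_ml):
        repeats = factor if volume >= min_volume_ml else 1
        for _ in range(repeats):
            x, t = sample_patches(inputs, targets, rng)
            xs.append(x)
            ts.append(t)
    return np.concatenate(xs), np.concatenate(ts)

def minibatches(x, t, rng, batch_size=64):
    """Yield shuffled mini-batches for one training epoch."""
    order = rng.permutation(len(x))
    for i in range(0, len(x), batch_size):
        idx = order[i:i + batch_size]
        yield x[idx], t[idx]
```

Oversampling at the slice level keeps every patch's inputs and targets spatially aligned while raising the share of lesion-containing patches the network sees per epoch.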
We trained networks with three loss functions (MSE, MAE, LCL) using all five output maps (\(T_1\), \({T_2}^*\), NAWM and GM probability maps, and WM lesion masks) and, for comparison with conventional lesion segmentation, networks with only the lesion masks as output using each of these loss functions as well as a Dice loss (DICE). Additionally, as network number 8, the U-net was trained with the denoised and distortion-corrected \(T_1\) and \({T_2}^*\) maps as input and the lesion mask as output, for comparison with conventional DL processing (MSE-2-1). The loss functions, mean squared error (MSE), mean absolute error (MAE), logarithmic hyperbolic cosine loss (LCL), and Dice loss (DICE), are listed in Table 1. Networks are named by loss function and number of outputs; for example, MSE-1 and MSE-5 denote the MSE loss with one and five outputs, respectively.
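For reference, the four losses can be written as below. This NumPy sketch uses the standard textbook definitions; the soft Dice formulation with the smoothing constant `eps` is one common variant and an assumption, since the exact Dice loss used in the MATLAB implementation is not specified here.

```python
import numpy as np

def mse(pred, target):
    """Mean squared error."""
    return np.mean((pred - target) ** 2)

def mae(pred, target):
    """Mean absolute error."""
    return np.mean(np.abs(pred - target))

def log_cosh(pred, target):
    """Mean log(cosh(e)), computed stably as |e| + log1p(exp(-2|e|)) - log(2)."""
    e = np.abs(pred - target)
    return np.mean(e + np.log1p(np.exp(-2.0 * e)) - np.log(2.0))

def soft_dice_loss(prob, mask, eps=1e-6):
    """1 - soft Dice between a probability map and a binary mask (assumed variant)."""
    inter = np.sum(prob * mask)
    return 1.0 - (2.0 * inter + eps) / (np.sum(prob) + np.sum(mask) + eps)
```

For small errors, log-cosh behaves like \(e^2/2\) (MSE-like), and for large errors like \(|e| - \log 2\) (MAE-like), which is why it reacts less strongly to large residuals than MSE.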

Fig. 1. (a) Representation of the U-net with an encoder depth of three. (b) Feature maps of the second convolutional layer and the second-to-last convolutional layer. One feature per layer is marked in red and shown in (c) in coronal, sagittal, and transverse slices. The feature of the second convolutional layer is WM-like but not homogeneous in all three dimensions, whereas the feature of the second-to-last convolutional layer is WM-like and homogeneous in all three dimensions. No colorbars are shown because all features are in arbitrary units.

For the MSE, MAE, and LCL losses, the network was trained both with all five output maps and with only the lesion mask as output, to assess any loss of accuracy when using multiple outputs; in previous work, the accuracy of the network was observed to decrease with multiple outputs. [16] Agreement with the conventional methods was evaluated for MSE-5, because this was the best-performing network for the reconstruction of \(T_1\), \({T_2}^*\), NAWM, GM, and lesion probability maps.

Statistics

The Dice coefficient and the lesion detection rate were used as similarity metrics for lesion segmentation. Because both metrics require binary masks, their dependence on the threshold used to binarize the predicted lesion probability maps was analyzed. NAWM and GM probability maps were binarized with a commonly used threshold of 80% [42], and mean Dice coefficients across all subjects and slices were calculated. For the two remaining outputs (\(T_1\), \({T_2}^*\)), the mean relative difference from the conventionally reconstructed maps was calculated.
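The evaluation metrics can be sketched in Python/NumPy as follows. The Dice coefficient and the mean relative difference follow their standard definitions. The lesion detection rate is not defined in detail in the text, so we assume it is the fraction of manually annotated lesions (4-connected components in a 2D slice) that overlap at least one voxel of the binarized prediction. The small flood-fill labeller is a stand-in for a library routine such as `scipy.ndimage.label`.

```python
import numpy as np
from collections import deque

def dice(a, b):
    """Dice coefficient of two binary masks (1.0 if both are empty)."""
    a, b = np.asarray(a, dtype=bool), np.asarray(b, dtype=bool)
    denom = a.sum() + b.sum()
    return 1.0 if denom == 0 else 2.0 * np.logical_and(a, b).sum() / denom

def label_components(mask):
    """4-connected component labelling of a 2D boolean mask (0 = background)."""
    labels = np.zeros(mask.shape, dtype=int)
    n = 0
    for start in zip(*np.nonzero(mask)):
        if labels[start]:
            continue
        n += 1
        labels[start] = n
        queue = deque([start])
        while queue:
            y, x = queue.popleft()
            for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                ny, nx = y + dy, x + dx
                inside = 0 <= ny < mask.shape[0] and 0 <= nx < mask.shape[1]
                if inside and mask[ny, nx] and not labels[ny, nx]:
                    labels[ny, nx] = n
                    queue.append((ny, nx))
    return labels, n

def detection_rate(pred, truth):
    """Assumed definition: share of annotated lesions hit by at least one predicted voxel."""
    labels, n = label_components(np.asarray(truth, dtype=bool))
    if n == 0:
        return float("nan")
    pred = np.asarray(pred, dtype=bool)
    hits = sum(bool(pred[labels == k].any()) for k in range(1, n + 1))
    return hits / n

def sweep_thresholds(prob, truth, thresholds):
    """(threshold, Dice, detection rate) of the binarized probability map per threshold (0-1)."""
    return [(t, dice(prob >= t, truth), detection_rate(prob >= t, truth)) for t in thresholds]

def mean_relative_difference(est, ref, mask):
    """Mean |est - ref| / ref over the voxels in mask (e.g. the brain)."""
    return np.mean(np.abs(est[mask] - ref[mask]) / ref[mask])
```

Under this definition, lowering the threshold can only add predicted voxels, so the detection rate never decreases as the threshold drops, while the Dice coefficient peaks at an intermediate threshold.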

Results

The DL reconstruction showed good agreement with the conventional pattern matching reconstruction, with mean relative deviations of 5.2% for \(T_1\) and 5.1% for \({T_2}^*\) in the whole brain using MSE-5. After binarization with a threshold of 80%, the Dice coefficients were \(0.92\pm 0.04\) for NAWM and \(0.91\pm 0.03\) for GM using MSE-5. The reconstruction of all five outputs took around one minute per subject for the whole brain, roughly two orders of magnitude faster than the approximately three hours required for conventional processing (denoising, MRF reconstruction, distortion correction, masking, and lesion segmentation).

Figure 2 shows the \(T_1\), \({T_2}^*\), NAWM, GM, and lesion probability maps generated by the CNN (MSE-5) after different numbers of training epochs (1, 5, 15, 30, 70, 100), compared with the conventionally reconstructed maps and the segmented masks. Good visual image quality was obtained for the \(T_1\), \({T_2}^*\), NAWM, and GM probability maps after only 5 epochs. After around 15 epochs, the network starts to predict the lesion probability maps, which then slowly converge towards 100 epochs.

Fig. 3. The Dice coefficient (left) and the lesion detection rate (right) for all training data (blue) and test data (orange) as a function of the threshold used to binarize the lesion probability maps. The black lines depict the average across the test data. A maximum Dice coefficient is observed at a threshold of around 50%. The lesion detection rate decreases with increasing threshold because the background of the lesion probability map is non-zero.

The Dice coefficient depended strongly on the threshold used to binarize the probability maps (Fig. 3). A maximum Dice coefficient of 0.75 was observed at a threshold of 41% for the training data (blue), and a maximum of 0.62 at a threshold of 23% for the test data (orange). For further analysis, a threshold of 33% was used to binarize the lesion probability maps into masks as the best compromise for both training and test data. At lower thresholds, the lesion detection rate increases. Using the threshold of 33%, the Dice coefficient and the lesion detection rate were \(0.61\pm 0.09\) and \(0.85\pm 0.25\) for the test data. The average intra-observer Dice coefficient between the two manual annotations was \(0.68\pm 0.15\). After training the network with the second set of annotations, the Dice coefficient and the lesion detection rate were \(0.60\pm 0.17\) and \(0.84\pm 0.19\). The prediction of WM lesions did not depend on lesion volume, as illustrated in Additional file 1: Figure S1.

Fig. 4. Dice coefficient over the training epochs for three networks (five outputs with MSE [MSE-5], five outputs with MAE [MAE-5], and lesions only with MSE [MSE-1]) and for the reference network with the \(T_1\) and \({T_2}^*\) maps as input and lesions as output [MSE-2-1]. Smoothed curves are shown in the foreground colors. The corresponding lesion probability maps after 1, 5, 15, 50, and 100 epochs are shown below.

In Fig. 4, the Dice coefficient is plotted versus the number of training epochs for different networks. Training with only one output instead of five results in faster convergence of the Dice coefficient; however, the Dice coefficients of all three networks (MSE-5, MAE-5, MSE-1) converged to 0.61 after about 60 epochs. The reference network (MSE-2-1) reached its maximum Dice coefficient of 0.61 after around 7 epochs but then decreased towards 0.5. The mean lesion detection rate over the entire test data was 0.85 for MSE-5, 0.79 for MAE-5, 0.78 for MSE-1, and 0.73 for MSE-2-1. MAE-5 took longer to start predicting lesions. The networks MAE-1, LCL-5, LCL-1, and DICE-1 converged to a local minimum during training in which all lesion probabilities were zero, and were therefore excluded from the analysis.

Fig. 5. Reconstructed lesion probability maps, color-encoded and overlaid on the magnitude data, for all five patients from the test set. The manual annotation is depicted in blue. Below, the binarized probability map is additionally depicted in yellow. The Dice coefficient and WM lesion detection rate are shown for every patient and healthy subject at both sites. The average lesion detection rate is 0.88 and the average Dice coefficient is 0.67 for all patients. The test data are shown with larger markers and brighter colors and yield an average lesion detection rate of 0.85 and an average Dice coefficient of 0.61 using MSE-5.

For every test patient, one representative slice is shown in Fig. 5, with the lesion probability color-encoded and the manual annotation highlighted in blue. For patients 1, 2, and 5, the predicted lesions in the depicted slice agree very well with the annotation. For patient 3, the CNN predicted three lesions with low probability, which were then excluded from the mask after thresholding. Only in test patient 4 did the network fail to predict an annotated lesion, which was located near the GM. The Dice coefficient and lesion detection rate are shown for all subjects in Fig. 5, with the test data shown as larger markers in lighter blue and yellow colors. The CNN predicted no lesions in healthy subjects.

Fig. 6. Zoomed-in view of one lesion, upsampled by a factor of 10 using bilinear interpolation. The percentage increase in \(T_1\) and \({T_2}^*\) relative to the mean NAWM values is color-encoded, and the lesion probability generated by the CNN is shown on the right. The manual annotation is drawn as a blue line. Below, the voxel-wise values are plotted along one horizontal (red) and one vertical (green) cut through the lesion.

Figure 6 shows, for one WM lesion, the percentage increase in \(T_1\) and \({T_2}^*\) relative to the mean NAWM times, together with the lesion probability generated by the CNN. The manually annotated lesion is marked in blue. Good visual agreement between the lesion probability and the increase in \(T_1\) and \({T_2}^*\) is observed, as depicted below for the two cross-sections (green and red). We also observed that the lesion probability was higher and rose more steeply in lesions with increased relaxation times.
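The quantities shown in Fig. 6 can be computed with a few lines of NumPy. This minimal sketch derives the voxel-wise percentage increase of a \(T_1\) or \({T_2}^*\) map relative to the mean NAWM value and extracts a horizontal profile through a lesion. The Pearson correlation along the profile is an illustrative addition (an assumption, since the text reports only visual agreement), and the array names and the NAWM mask are hypothetical inputs.

```python
import numpy as np

def percent_increase(tmap, nawm_mask):
    """Voxel-wise increase of a T1 or T2* map relative to the mean NAWM value, in percent."""
    ref = tmap[nawm_mask].mean()
    return 100.0 * (tmap - ref) / ref

def profile_correlation(increase, prob, row, cols):
    """Pearson r between percent increase and lesion probability along a horizontal cut."""
    a = increase[row, cols]
    b = prob[row, cols]
    return np.corrcoef(a, b)[0, 1]
```

A vertical cut works the same way with the roles of row and column indices swapped, e.g. `increase[rows, col]`.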

Discussion

In this study, we have shown that the CNN is capable of predicting lesion probability maps that correlate with the increase in \(T_1\) and \({T_2}^*\) times relative to NAWM. After binarizing the probability maps, the Dice coefficient was \(0.61\pm 0.09\) for the test data, which is comparable to the intra-observer agreement of the manual annotator (\(0.68\pm 0.23\)) and to values reported in the literature (0.47–0.95). [33, 36] The CNN might be more robust than manual annotation because, unlike repeated manual annotations, repeated predictions of the trained network do not vary. We have shown that the network only predicts lesion probability maps with the MAE and MSE loss functions. This could be because outliers, such as small spherical lesions, are weighted more heavily by MSE and MAE than by LCL or the Dice loss. However, MAE-1 also failed to predict lesions, even though MAE-5 was able to. More augmentation or further optimization of the network's layer structure could improve performance. For the networks that predicted lesions, training with one or with all five outputs converged to the same Dice coefficient, demonstrating that all maps can be reconstructed within a single architecture (Fig. 4). The network MSE-2-1, which mimics the conventional approach of segmenting lesions on the \(T_1\) and \({T_2}^*\) maps, reached an average Dice coefficient of 0.5 and thus performed worse than the other proposed networks. This indicates that combining all post-processing steps in a single network does not reduce accuracy when generating lesion probability maps, enabling a DL approach that saves time and avoids further processing.

Additionally, the network was able to perform reconstruction, denoising, distortion correction, and segmentation within a single architecture with promising accuracy. The \(T_1\) and \({T_2}^*\) maps as well as the NAWM and GM probability maps showed good agreement, as previously reported [16], with mean relative errors of 5.2% for \(T_1\) and 5.1% for \({T_2}^*\) and mean Dice coefficients higher than 0.9 for NAWM and GM. A single network allows faster and simpler reconstruction than several networks for the different processing steps, although accuracy seemed to be slightly compromised. [16] We observed that the network first learns to reconstruct the \(T_1\), \({T_2}^*\), NAWM, and GM probability maps, as evidenced by the good visual image quality after only 5 epochs (Fig. 2). This could be explained by the number of non-zero voxels in these maps, which is several orders of magnitude larger than the number of lesion voxels per slice.

The lesion probability maps visually correlate well with the increase in \(T_1\) and \({T_2}^*\) relative to the mean NAWM times. This could indicate that larger or more intense lesions are also predicted as such by the CNN. These lesion probability maps could therefore be used to automatically grade and differentiate lesions based on the MRF input data, similar to the results of other lesion probability mapping methods. However, these methods rely on manual grading or on voxel-wise or locally spatially dependent models, which are time-consuming and susceptible to patient-specific covariances. [1,2,3, 5] A larger cohort is needed to confirm this assumption and to correlate this behavior with follow-up measurements and disease characteristics.

In addition, our approach could exploit information contained in the temporal evolution of the MRF signal. It has been shown that principal component analysis (PCA) of the magnitude MRF data, which are also the input of our network, allows separation of the brain into multiple components such as myelin and WM lesions [43, 44]. Fig. 1b depicts feature maps of the second and the second-to-last convolutional layers. At the beginning of the network, features are extracted from the MRF magnitude images; these are not homogeneous along all three dimensions, just as the magnitude MRF data are not. In subsequent layers, however, the feature maps are homogeneous in all three dimensions, meaning that anatomical features are extracted independently of slice position and acquisition scheme. The CNN might thus be able to learn and distinguish the underlying components, improving lesion segmentation and prediction. This would be an information gain compared with manual annotators and with lesion segmentation methods based solely on the quantitative parametric maps. [36, 37] In future work, this has to be compared with conventional methods for assessing components such as myelin or extracellular water. [43, 45, 46]
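To illustrate what PCA of the magnitude MRF data means in practice, here is a short NumPy sketch: each voxel's 35-point time course is treated as one observation, the mean time course is removed, and the principal components are obtained from a singular value decomposition. This is a generic PCA, not the specific component-separation methods of [43, 44], and the voxels-by-time-points input layout is an assumption.

```python
import numpy as np

def mrf_pca(series, n_components=3):
    """PCA of MRF magnitude time courses.

    series: (n_voxels, n_timepoints) array.
    Returns per-voxel scores (n_voxels, n_components), the component time courses
    (n_components, n_timepoints), and the fraction of variance each component explains.
    """
    centered = series - series.mean(axis=0, keepdims=True)
    u, s, vt = np.linalg.svd(centered, full_matrices=False)   # s is sorted descending
    explained = s ** 2 / np.sum(s ** 2)
    scores = u[:, :n_components] * s[:n_components]
    return scores, vt[:n_components], explained[:n_components]
```

Reshaping a column of `scores` back to the slice geometry (e.g. `scores[:, k].reshape(h, w)`) gives a component map that can be compared visually with CNN feature maps such as those in Fig. 1b.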

This study has some limitations. Because the lesions were segmented manually, there is considerable variation in the annotations, which is also evident in the relatively low intra-observer Dice coefficient (0.68). This variability could be reduced by obtaining annotations from multiple annotators, although this is very time-consuming. Reduced variability in the lesion masks could also lead to better and faster training of the network, yielding higher Dice coefficients. WM lesions are often difficult to differentiate from NAWM in the \(T_1\) and \({T_2}^*\) maps without information from adjacent slices, because WM lesions can appear similar to lobes of GM within the NAWM. The reconstruction could be improved by using a 3D CNN with 3D patches. We did try to train a 3D architecture, but the 3D CNN was not able to predict any lesions, and the accuracy of the other outputs was compromised. This could be because 3D architectures require more data and longer training than 2D CNNs. Therefore, more data need to be acquired to obtain comparable 3D results, which will be the subject of future work. Limited training data could also be the reason why some loss functions could not generate lesion probability maps.

Conclusion

In this work, we showed that a neural network trained with binary lesion masks can generate lesion probability maps, which might improve diagnostics. Additionally, a single CNN is a promising tool for the reconstruction, denoising, and distortion correction of \(T_1\) and \({T_2}^*\) maps and for generating NAWM and GM probability maps. The reconstruction of a whole brain took less than one minute, more than a 100-fold acceleration compared with conventional processing, which makes the approach of great clinical interest.

Availability of data and materials

The Matlab scripts for generating and training the U-net are available here: https://github.com/PhysIngo/Lesion-Probability-Mapping.git. The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

Abbreviations

- WM: White matter
- NAWM: Normal appearing white matter
- MS: Multiple sclerosis
- MRI: Magnetic resonance imaging
- MRF: Magnetic resonance fingerprinting
- EPI: Echo planar imaging
- DL: Deep learning
- CNN: Convolutional neural network
- GM: Gray matter
- MPPCA: Marchenko–Pastur principal component analysis
- ANT: Advanced normalization tools
- SPM: Statistical parametric mapping
- FLAIR: Fluid attenuated inversion recovery
- MAE: Mean absolute error
- MSE: Mean squared error
- LCL: Logarithmic hyperbolic cosine loss

References

Kincses Z, Ropele S, Jenkinson M, Khalil M, Petrovic K, Loitfelder M, Langkammer C, Aspeck E, Wallner-Blazek M, Fuchs S, Jehna M, Schmidt R, Vécsei L, Fazekas F, Enzinger C. Lesion probability mapping to explain clinical deficits and cognitive performance in multiple sclerosis. Mult Scler. 2011;17(6):681–9. https://doi.org/10.1177/1352458510391342.

Ge T, Müller-Lenke N, Bendfeldt K, Nichols T, Johnson T. Analysis of multiple sclerosis lesions via spatially varying coefficients. Ann Appl Stat. 2014;8(2):1095–118.

Enzinger C, Smith S, Fazekas F, Drevin G, Ropele S, Nichols T, Behrens T, Schmidt R, Matthews P. Lesion probability maps of white matter hyperintensities in elderly individuals—results of the Austrian stroke prevention study. J Neurol. 2006;253:1064–70. https://doi.org/10.1007/s00415-006-0164-5.

DeCarli C, Fletcher E, Ramey V, Harvey D, Jagust J. Anatomical mapping of white matter hyperintensities (wmh): exploring the relationships between periventricular wmh, deep wmh, and total wmh burden. Stroke. 2005;36(1):50–5. https://doi.org/10.1161/01.STR.0000150668.58689.f2.

Filli L, Hofstetter L, Kuster P, Traud S, Mueller-Lenke N, Naegelin Y, Kappos L, Gass A, Sprenger T, Nichols TE, Vrenken H, Barkhof F, Polman C, Radue E-W, Borgwardt SJ, Bendfeldt K. Spatiotemporal distribution of white matter lesions in relapsing-remitting and secondary progressive multiple sclerosis. Mult Scler. 2012;18(11):1577–84. https://doi.org/10.1177/1352458512442756.

Holland CM, Charil A, Csapo I, Liptak Z, Ichise M, Khoury SJ, Bakshi R, Weiner HL, Guttmann CRG. The relationship between normal cerebral perfusion patterns and white matter lesion distribution in 1,249 patients with multiple sclerosis. J Neuroimaging. 2012;22(2):129–36.

Bonnier G, Roche A, Romascano D, Simioni S, Meskaldji D, Rotzinger D, Lin Y-C, Menegaz G, Schluep M, Du Pasquier R, Sumpf TJ, Frahm J, Thiran J-P, Krueger G, Granziera C. Advanced mri unravels the nature of tissue alterations in early multiple sclerosis. Ann Clin Transl Neurol. 2014;1(6):423–32.

Blystad I, Håkansson I, Tisell A, Ernerudh J, Smedby Ö, Lundberg P, Larsson E-M. Quantitative mri for analysis of active multiple sclerosis lesions without gadolinium-based contrast agent. Am J Neuroradiol. 2016;37(1):94–100. https://doi.org/10.3174/ajnr.A4501.

Hernández-Torres E, Wiggermann V, Machan L, Sadovnick AD, Li DKB, Traboulsee A, Hametner S, Rauscher A. Increased mean r2* in the deep gray matter of multiple sclerosis patients: have we been measuring atrophy? J Magn Reson Imaging. 2019;50(1):201–8. https://doi.org/10.1002/jmri.26561.

Ma D, Gulani V, Seiberlich N, Liu K, Sunshine JL, Duerk JL, Griswold MA. Magnetic resonance fingerprinting. Nature. 2013;495:187–92.

Panda A, Mehta BB, Coppo S, Jiang Y, Ma D, Seiberlich N, Griswold MA, Gulani V. Magnetic resonance fingerprinting-an overview. Curr Opin Biomed Eng. 2017. https://doi.org/10.1016/j.cobme.2017.11.001.

Rieger B, Zimmer F, Zapp J, Weingartner S, Schad LR. Magnetic resonance fingerprinting using echo-planar imaging: joint quantification of T1 and T2* relaxation times. Magn Reson Med. 2017;78(5):1724–33.

Rieger B, Akçakaya M, Pariente JC, Llufriu S, Martinez-Heras E, Weingartner S, Schad LR. Time efficient whole-brain coverage with mr fingerprinting using slice-interleaved echo-planar-imaging. Sci Rep. 2018;8(1):2045–322.

Hermann I, Chacon-Caldera J, Brumer I, Rieger B, Weingartner S, Schad LR, Zöllner FG. Magnetic resonance fingerprinting for simultaneous renal t1 and t2* mapping in a single breath-hold. Magn Reson Med. 2020;83(6):1940–8. https://doi.org/10.1002/mrm.28160.

Khajehim M, Christen T, Chen JJ. Magnetic resonance fingerprinting with combined gradient- and spin-echo echo-planar imaging: simultaneous estimation of t1, t2 and t2* with integrated-b1 correction. bioRxiv. 2019. https://doi.org/10.1101/604546.

Hermann I, Martínez-Heras E, Rieger B, Schmidt R, Golla A-K, Hong J-S, Lee W-K, Yu-Te W, Nagetegaal M, Solana E, Llufriu S, Gass A, Schad LR, Weingärtner S, Zöllner FG. Accelerated white matter lesion analysis based on simultaneous t1 and t2* quantification using magnetic resonance fingerprinting and deep learning. Magn Reson Med. 2021;00:1–16. https://doi.org/10.1002/mrm.28688.

Lundervold AS, Lundervold A. An overview of deep learning in medical imaging focusing on mri. Z Med Phys. 2019;29(2):102–27. https://doi.org/10.1016/j.zemedi.2018.11.002.

Benou A, Veksler R, Friedman A, Riklin Raviv T. Ensemble of expert deep neural networks for spatio-temporal denoising of contrast-enhanced mri sequences. Med Image Anal. 2017;42:145–59. https://doi.org/10.1016/j.media.2017.07.006.

Cao X, Yang J, Zhang J, Wang Q, Yap P, Shen D. Deformable image registration using a cue-aware deep regression network. IEEE Trans Bio-Med Eng. 2018;65(9):1900–11.

Maier A, Syben C, Lasser T, Riess C. A gentle introduction to deep learning in medical image processing. Z Med Phys. 2019;29(2):86–101. https://doi.org/10.1016/j.zemedi.2018.12.003.

Akcakaya M, Moeller S, Weingärtner S, Ugurbil K. Scan-specific robust artificial-neural-networks for k-space interpolation (raki) reconstruction: database-free deep learning for fast imaging. Magn Reson Med. 2019;81(1):439–53. https://doi.org/10.1002/mrm.27420.

Hammernik K, Klatzer T, Kobler E, Recht MP, Sodickson DK, Pock T, Knoll F. Learning a variational network for reconstruction of accelerated mri data. Magn Reson Med. 2018;79(6):3055–71. https://doi.org/10.1002/mrm.26977.

Schlemper J, Caballero J, Hajnal JV, Price AN, Rueckert D. A deep cascade of convolutional neural networks for dynamic mr image reconstruction. IEEE Trans Med Imaging. 2018;37(2):491–503.

Ronneberger O, Fischer P, Brox T. U-net: convolutional networks for biomedical image segmentation. In: Medical image computing and computer-assisted intervention (MICCAI). LNCS, vol. 9351. Springer; 2015. p. 234–41. http://lmb.informatik.uni-freiburg.de/Publications/2015/RFB15a.

Yao W, Zeng Z, Lian C, Tang H. Pixel-wise regression using u-net and its application on pansharpening. Neurocomputing. 2018;312:364–71. https://doi.org/10.1016/j.neucom.2018.05.103.

Moeskops P, de Bresser J, Kuijf HJ, Mendrik AM, Biessels GJ, Pluim JPW, Isgum I. Evaluation of a deep learning approach for the segmentation of brain tissues and white matter hyperintensities of presumed vascular origin in mri. Neuroimage Clin. 2018;17:251–62. https://doi.org/10.1016/j.nicl.2017.10.007.

Hoppe E, Körzdörfer G, Würfl T, Wetzl J, Lugauer F, Pfeuffer J, Maier A. Deep learning for magnetic resonance fingerprinting: a new approach for predicting quantitative parameter values from time series. Stud Health Technol Inform. 2017;243:202–6. https://doi.org/10.1002/mrm.27198.

Fang Z, Chen Y, Liu M, Xiang L, Zhang Q, Wang Q, Lin W, Shen D. Deep learning for fast and spatially constrained tissue quantification from highly accelerated data in magnetic resonance fingerprinting. IEEE Trans Med Imaging. 2019;38(10):2364–74.

Balsiger F, Scheidegger O, Carlier PG, Marty B, Reyes M. On the spatial and temporal influence for the reconstruction of magnetic resonance fingerprinting. In: Cardoso MJ, Feragen A, Glocker B, Konukoglu E, Oguz I, Unal G, Vercauteren T, editors. Proceedings of machine learning research, vol. 102. London: PMLR; 2019. p. 27–38.

Hoppe E, Thamm F, Körzdörfer G, Syben C, Schirrmacher F, Nittka M, Pfeuffer J, Meyer H, Maier A. Magnetic resonance fingerprinting reconstruction using recurrent neural networks. Stud Health Technol Inform. 2019;267:126–33. https://doi.org/10.3233/SHTI190816.

Fang Z, Chen Y, Hung S-C, Zhang X, Lin W, Shen D. Submillimeter mr fingerprinting using deep learning-based tissue quantification. Magn Reson Med. 2020;84(2):579–91. https://doi.org/10.1002/mrm.28136.

Chen Y, Fang Z, Hung S-C, Chang W-T, Shen D, Lin W. High-resolution 3d mr fingerprinting using parallel imaging and deep learning. NeuroImage. 2020;206:116329. https://doi.org/10.1016/j.neuroimage.2019.116329.

Lladó X, Oliver A, Cabezas M, Freixenet J, Vilanova JC, Quiles A, Valls L, Ramió-Torrentà L, Rovira À. Segmentation of multiple sclerosis lesions in brain mri: a review of automated approaches. Inf Sci. 2012;186(1):164–85. https://doi.org/10.1016/j.ins.2011.10.011.

La Rosa F, Abdulkadir A, Fartaria MJ, Rahmanzadeh R, Lu P-J, Galbusera R, Barakovic M, Thiran J-P, Granziera C, Cuadra MB. Multiple sclerosis cortical and wm lesion segmentation at 3t mri: a deep learning method based on flair and mp2rage. Neuroimage Clin. 2020;27:102335. https://doi.org/10.1016/j.nicl.2020.102335.

McKinley R, Wepfer R, Grunder L, Aschwanden F, Fischer T, Friedli C, Muri R, Rummel C, Verma R, Weisstanner C, Wiestler B, Berger C, Eichinger P, Muhlau M, Reyes M, Salmen A, Chan A, Wiest R, Wagner F. Automatic detection of lesion load change in multiple sclerosis using convolutional neural networks with segmentation confidence. Neuroimage Clin. 2020;25:102104. https://doi.org/10.1016/j.nicl.2019.102104.

Zeng C, Gu L, Liu Z, Zhao S. Review of deep learning approaches for the segmentation of multiple sclerosis lesions on brain MRI. Front Neuroinform. 2020;14:55. https://doi.org/10.3389/fninf.2020.610967.

van Wijnen KMH, Dubost F, Yilmaz P, Ikram MA, Niessen WJ, Adams H, Vernooij MW, de Bruijne M. Automated lesion detection by regressing intensity-based distance with a neural network. In: Shen D, Liu T, Peters TM, Staib LH, Essert C, Zhou S, Yap P-T, Khan A, editors. Medical image computing and computer assisted intervention—MICCAI 2019. Cham: Springer; 2019. p. 234–42.

Schnurr A-K, Eisele P, Rossmanith C, Hoffmann S, Gregori J, Dabringhaus A, Kraemer M, Kern R, Gass A, Zöllner FG. Deep voxel-guided morphometry (VGM): learning regional brain changes in serial MRI. In: Machine learning in clinical neuroimaging: third international workshop, MLCN 2020, held in conjunction with MICCAI 2020, Lima, Peru. Cham: Springer; 2020. p. 159–68.

Veraart J, Novikov DS, Christiaens D, Ades-aron B, Sijbers J, Fieremans E. Denoising of diffusion MRI using random matrix theory. NeuroImage. 2016;142:394–406. https://doi.org/10.1016/j.neuroimage.2016.08.016.

Klein A, Andersson J, Ardekani BA, Ashburner J, Avants B, Chiang M-C, Christensen GE, Collins DL, Gee J, Hellier P, Song JH, Jenkinson M, Lepage C, Rueckert D, Thompson P, Vercauteren T, Woods RP, Mann JJ, Parsey RV. Evaluation of 14 nonlinear deformation algorithms applied to human brain MRI registration. NeuroImage. 2009;46(3):786–802. https://doi.org/10.1016/j.neuroimage.2008.12.037.

Ashburner J, Balbastre Y, Barnes G, Brudfors M. SPM12. 2014. https://www.fil.ion.ucl.ac.uk/spm/software/spm12/.

Tudorascu DL, Karim HT, Maronge JM, Alhilali L, Fakhran S, Aizenstein HJ, Muschelli J, Crainiceanu CM. Reproducibility and bias in healthy brain segmentation: comparison of two popular neuroimaging platforms. Front Neurosci. 2016;10:503. https://doi.org/10.3389/fnins.2016.00503.

MacKay AL, Laule C. Magnetic resonance of myelin water: an in vivo marker for myelin. Brain Plast. 2016;2(1):71–91.

Nagtegaal M, Koken P, Amthor T, de Bresser J, Mädler B, Vos F, Doneva M. Myelin water imaging from multi-echo T2 MR relaxometry data using a joint sparsity constraint. NeuroImage. 2020;219:117014. https://doi.org/10.1016/j.neuroimage.2020.117014.

Dong Z, Wang F, Chan K-S, Reese TG, Bilgic B, Marques JP, Setsompop K. Variable flip angle echo planar time-resolved imaging (vFA-EPTI) for fast high-resolution gradient echo myelin water imaging. NeuroImage. 2021:117897. https://doi.org/10.1016/j.neuroimage.2021.117897.

Lee J, Hyun J-W, Lee J, Choi E-J, Shin H-G, Min K, Nam Y, Kim HJ, Oh S-H. So you want to image myelin using MRI: an overview and practical guide for myelin water imaging. J Magn Reson Imaging. 2021;53(2):360–73. https://doi.org/10.1002/jmri.27059.

Acknowledgements

The authors are grateful to Dr Núria Bargalló, Cesar Garrido and the IDIBAPS Magnetic resonance imaging facilities for their support during the realization of the study.

Funding

Open Access funding enabled and organized by Projekt DEAL. The author(s) disclose receipt of the following financial support for the research, authorship and/or publication of this article. This work was partially funded by: a Proyecto de Investigación en Salud (FIS 2015 PI15/00587, SL, AS; FIS 2018 PI18/01030, SL, AS), integrated into the Plan Estatal de Investigación Científica y Técnica de Innovación I+D+I, and co-funded by the Instituto de Salud Carlos III-Subdirección General de Evaluación and the Fondo Europeo de Desarrollo Regional (FEDER, “Otra manera de hacer Europa”); the Red Española de Esclerosis Múltiple (REEM: RD16/0015/0002, RD16/0015/0003, RD12/0032/0002, RD12/0060/01-02); TEVA SLU; and the Fundació Cellex.

Author information

Contributions

IH conceived and designed the work. FGZ contributed through many discussions, guidance, and corrections throughout the internal revision process. AKS contributed to the network design. RS and AG annotated all lesion data. EMH, ES, and SL contributed to the final approval of the version and to clinical site 2. LS encouraged the investigation of deep learning for MRF. All authors read and approved the manuscript.

Ethics declarations

Ethics approval and consent to participate

This bicenter study was approved by the local institutional review board at both sites (2019-711N, BCB2012/7965), and written, informed consent was obtained prior to scanning.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Ethics committee, site 1 (2019-711N)

Medizinische Fakultät Mannheim der Ruprecht-Karls-Universität Heidelberg, Attn. Songhui Cao/Manja Goerner/Kathrin Heberlein, Zentrum Medizinischer Forschung, Building 42, 3rd floor, Theodor-Kutzer-Ufer 1-3, 68167 Mannheim, Germany

Ethics committee, site 2 (BCB2012/7965)

Secretary of the Clinical Research Ethics Committee (Comité Ético de Investigación Clínica) of the Hospital Clínic de Barcelona: Joaquim Forés I Vineta, Andrea Scalise, Ana Lucia Arellano Andrino, Hospital Clínic, calle Villarroel 170, 08036 Barcelona, Spain.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1

Figure S1: A) Dice coefficient per lesion plotted against lesion volume in ml, with a logarithmic x-axis. For every single lesion, the Dice coefficient was calculated between the manually annotated lesion mask and the CNN-predicted lesion mask. Training data are shown in blue and test data in orange. B) Predicted lesion volume plotted against true lesion volume in ml on a double-logarithmic scale. The relationship is linear and close to the bisector, shown in gray. Table S1: All network architectures used in this manuscript. The loss functions mean absolute error (MAE), mean squared error (MSE), logarithmic hyperbolic cosine loss (LCL), and Dice loss (DICE) are used. The number of outputs is either 5 (T1 and T2* maps and NAWM, GM, and lesion probability maps) or 1 (lesion probability map only).
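Table S1 names four loss functions, and Figure S1 and the Results report Dice coefficients after thresholding probability maps. The exact formulations used by the authors are not stated here. The following is therefore a minimal NumPy sketch of the standard textbook definitions, not the authors' implementation. All function names are hypothetical. The soft-Dice smoothing constant `eps` and the convention of returning 1.0 when both masks are empty are assumptions. The default `threshold=0.33` mirrors the 33% lesion threshold quoted in the abstract. The per-lesion Dice in Figure S1 would additionally require splitting the masks into connected components (for example with `scipy.ndimage.label`), which is not shown.

```python
import numpy as np


def mae(pred, target):
    """Mean absolute error (MAE) between a predicted and a reference map."""
    pred, target = np.asarray(pred, float), np.asarray(target, float)
    return float(np.mean(np.abs(pred - target)))


def mse(pred, target):
    """Mean squared error (MSE) between a predicted and a reference map."""
    pred, target = np.asarray(pred, float), np.asarray(target, float)
    return float(np.mean((pred - target) ** 2))


def log_cosh_loss(pred, target):
    """Logarithmic hyperbolic cosine loss (LCL), averaged over all voxels.

    Uses the identity log(cosh(x)) = |x| + log1p(exp(-2|x|)) - log(2),
    which avoids overflowing cosh() for large residuals.
    """
    x = np.abs(np.asarray(pred, float) - np.asarray(target, float))
    return float(np.mean(x + np.log1p(np.exp(-2.0 * x)) - np.log(2.0)))


def soft_dice_loss(prob, target, eps=1e-6):
    """Soft Dice loss (DICE) on probability maps in [0, 1]; 0 means perfect overlap.

    eps is an assumed smoothing constant that keeps the ratio defined for empty maps.
    """
    prob, target = np.asarray(prob, float), np.asarray(target, float)
    intersection = np.sum(prob * target)
    return float(1.0 - (2.0 * intersection + eps) / (np.sum(prob) + np.sum(target) + eps))


def dice_coefficient(prob, target, threshold=0.33):
    """Dice coefficient 2|A and B| / (|A| + |B|) after binarizing `prob` at `threshold`."""
    pred_mask = np.asarray(prob, float) >= threshold
    true_mask = np.asarray(target).astype(bool)
    denom = pred_mask.sum() + true_mask.sum()
    if denom == 0:
        return 1.0  # both masks empty: treated as perfect agreement (assumed convention)
    return float(2.0 * np.logical_and(pred_mask, true_mask).sum() / denom)


# Usage: a toy four-voxel lesion probability map against a binary manual annotation.
prob = np.array([0.9, 0.4, 0.1, 0.5])
truth = np.array([1, 1, 0, 0])
print(dice_coefficient(prob, truth))  # 0.8
```

With the 33% threshold, voxels 1, 2, and 4 are labeled as lesion. Two of them overlap the annotation, which gives 2·2 / (3 + 2) = 0.8. Passing `threshold=0.8` instead would correspond to the binarization reported for the NAWM and GM maps.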

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Hermann, I., Golla, A.K., Martínez-Heras, E. et al. Lesion probability mapping in MS patients using a regression network on MR fingerprinting. BMC Med Imaging 21, 107 (2021). https://doi.org/10.1186/s12880-021-00636-x

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s12880-021-00636-x