Abstract

Background

One major problem for cognitive neuroscience is to describe the interaction between stimulus-driven and task-driven neural modulation. We used fMRI to investigate this interaction in the human brain. Ten male subjects performed a passive listening task and a semantic categorization task in a factorial design. In both tasks, words were presented auditorily at three different rates.

Results

We found the following: (i) as word presentation rate increased, hemodynamic responses increased bilaterally in the superior temporal gyrus, including Heschl's gyrus (HG), the planum temporale (PT), and the planum polare (PP); (ii) compared to passive listening, semantic categorization produced increased bilateral activations in the ventral inferior frontal gyrus (IFG) and the middle frontal gyrus (MFG); (iii) hemodynamic responses in the left dorsal IFG increased linearly with increasing word presentation rate only during the semantic categorization task; (iv) during the semantic task, hemodynamic responses in the insula decreased bilaterally with increasing word presentation rate; and (v) in parts of HG, the hemodynamic response increased more strongly with increasing word presentation rate during passive listening.

Conclusion

The observed "rate effect" in primary and secondary auditory cortex is in accord with previous findings and suggests that these areas are driven by low-level stimulus attributes. The bilateral effect of semantic categorization is also in accord with previous studies and emphasizes the role of these areas in semantic operations. The interaction between semantic categorization and word presentation rate in the left IFG indicates that this area has linguistic functions not present in the right IFG. Finally, we speculate that the interaction between semantic categorization and word presentation rate in HG and the insula might reflect an inhibition of the transfer of unnecessary information from temporal to frontal regions of the brain.


Background

Substantial data have been collected on the neural substrates of auditory speech perception and production. Lesion data as well as imaging studies have demonstrated that auditory information is processed in a bilateral neural network located in the perisylvian and inferior frontal regions of the brain [1–3]. However, it remains to be resolved how specific language functions are segregated within this linguistic macro-network and how these functions map onto specific anatomical areas. Recent functional neuroimaging studies of speech perception have begun to specify some of these functional subdivisions by demonstrating how specific anatomical regions are modulated by different types of information (phonological, prosodic, and semantic). In particular, these studies have drawn attention to several distinct auditory processing streams that originate in primary auditory cortex. First, there is evidence for lateral neural projections within the superior temporal sulcus (STS) that are involved in the analysis of complex acoustic features [1, 4, 5]. Second, there is evidence for an anterior-posterior projection axis with two main neural nodes. One node is located within the lateral superior temporal gyrus (STG), mainly within the STS anterior to Heschl's gyrus (HG), and responds to speech-specific stimuli [6]. The other node has been found in the posterior STG and STS, primarily in the left hemisphere, and responds to the presence of auditory phonetic cues. Recent imaging studies of the inferior frontal gyrus (IFG) have also provided evidence of subsystems for word frequency, naming vs. discrimination, and syntactic difficulty [7]. Finally, neuroimaging studies have shown simultaneous activations in the IFG and in the STG/STS during semantic, phonetic, and verbal-emotional categorization and discrimination tasks, and during verbal working memory tasks. Thus, there is a close link between perisylvian and frontal brain areas during auditory speech perception.

The present study was designed to further examine the link between perisylvian and frontal brain areas during auditory speech perception. Specifically, we investigated the responses of this temporal-frontal network to a stimulus manipulation designed to modulate a bottom-up process and a task manipulation designed to modulate top-down processes. We use the term "bottom-up" to denote the information output by the early, automatic mechanisms that encode the physical properties of sensory inputs. One example of bottom-up processing is the "rate effect": when auditory stimuli are presented at different rates, several auditory areas show activations that are positively correlated with the presentation rate (for the underlying neurophysiological mechanisms, see [10]). The few studies that have examined this auditory rate effect have yielded somewhat inconsistent results. For example, three studies have reported rate effects bilaterally in primary and secondary auditory cortices [11–13], while another study found no rate effect within the left posterior STG [14]. However, methodological differences between these studies, with respect to both the tasks employed (e.g. active discrimination task vs. passive listening [14]) and the imaging techniques used (e.g. PET vs. fMRI), limit the value of cross-study comparisons (see [15] for a discussion of PET/fMRI differences). The present study was designed to re-evaluate the influence of the presentation rate of auditory stimuli while a second, top-down factor, the need to semantically categorize stimuli, was varied. Whereas effects of presentation rate have usually been found in superior temporal areas, semantic categorisation has repeatedly been shown to modulate activity in inferior frontal cortex (e.g. Fiez [2]). By varying both of these factors, we hoped to distinguish more generally how top-down and bottom-up factors interact in the human linguistic system (see Methods).

Results

Performance

Subjects reported that they could understand all of the stimulus words, and they performed the semantic categorization task well, with an average accuracy (percentage of correct detections) of 95.8%. The false alarm rate was below 1%. Accuracy did not differ significantly across presentation rates (repeated-measures ANOVA: F(2,8) = 0.327, p > 0.05).

Hemodynamic responses for the "Rate Effect"

Parametric analysis of word presentation rate revealed strong positive linear correlations between word presentation rate and hemodynamic responses bilaterally in the superior temporal gyrus (STG) (see Fig. 1 and Fig. 2). Within these clusters there were three activation peaks in each hemisphere (Table 1). One pair was located at (x,y,z): -60, -4, 4 and 56, -8, -4, anterior to Heschl's gyrus (HG) in the planum polare, a region identified by Penhune's probabilistic map [16] as probable (25–50%) auditory cortex. A second pair of peaks was found posterior to the first, at (x,y,z): -64, -16, 8 and 64, -20, 12, within the 75–100% contour of Penhune's probabilistic map for HG. The third pair of peaks was found at (x,y,z): -44, -28, 12 and 56, -28, 16, in a posterior part of the STG within the 50–75% contour of Westbury's probability map of the planum temporale (PT) [17]. In the right hemisphere, only voxels within the STG were modulated by presentation rate (Fig. 1). In the left hemisphere, the region of activated voxels was larger, encompassing the entire STG and extending into the STS, the anterior insula, and the posterior middle temporal gyrus (MTG).
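The report describes the rate analysis as parametric but does not state how the three rates were coded in the regressor. As a minimal sketch, assuming a linear regressor, the mean-centred contrast weights below can be built either from the rates in Hz or from equally spaced levels; the function names and the example effect sizes are hypothetical and are not study data.

```python
# Word presentation rates used in the study (Hz)
RATES_HZ = [0.25, 0.5, 1.0]

def linear_rate_weights(levels):
    """Mean-centred weights proportional to each level; they sum to zero."""
    mean = sum(levels) / len(levels)
    return [level - mean for level in levels]

def rate_effect(betas, weights):
    """Contrast value: weighted sum of the per-rate effect sizes of one voxel."""
    return sum(w * b for w, b in zip(weights, betas))

# Two plausible codings (which one was used is an assumption)
hz_weights = linear_rate_weights(RATES_HZ)      # proportional to the rate in Hz
rank_weights = linear_rate_weights([1, 2, 3])   # equally spaced levels

# Hypothetical effect sizes (arbitrary units) for a voxel at 0.25, 0.5, 1.0 Hz
betas = [1.0, 1.6, 2.9]
print(rate_effect(betas, hz_weights), rate_effect(betas, rank_weights))
```

A positive contrast value indicates a response that grows with rate; the two codings differ only in how strongly the 1.0 Hz condition is weighted relative to the two slower rates.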

Bar graphs showing local maxima of hemodynamic responses for brain regions significantly correlating with word presentation rate (see also Table 1). x-axis: word presentation rate in Hz; y-axis: effect size (and standard error) in arbitrary units. Abbreviations: HG = Heschl's gyrus (primary auditory area), PP = planum polare, PT = planum temporale.

Hemodynamic responses for the "Semantic Categorization"

The main effect of semantic categorisation (categorisation > passive listening) was characterized by strong bilateral activation in the IFG. In the left IFG, the peak activation was located at (x,y,z): -44, 24, -4, ventrally and medially adjacent to the anterior insula. An additional local maximum was found in the ventral part of the MFG at (x,y,z): -48, 52, -8 (see Table 2 and Figure 3). The active cluster also covered parts of the left dorsal IFG (dIFG). The IFG activation in the right hemisphere comprised two clusters, one ventral with a peak at (x,y,z): 40, 24, -8 and the other dorsal with a peak at (x,y,z): 24, 56, 20. The dorsal cluster extended into the posterior MFG, with a peak at (x,y,z): 36, 48, 16.

Effects of semantic categorization (SC) overlaid on coronal slices of the group's mean brain (neurological convention). Numbers at the bottom left of each slice denote the y-coordinate in MNI space. Encircled areas indicate the locations of local maxima (see Table 2). Anatomical locations of local maxima: 1–4 = inferior frontal gyrus (IFG); 5–7 = middle frontal gyrus (MFG).

Interactions between "Presentation Rate" and "Categorization"

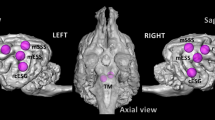

We found significant interactions between word presentation rate and semantic categorization within the right medial HG, the right anterior PT, the left PP, and bilaterally in the insula (Figure 4A). As can be seen in Figures 4B and 5, in the insular cortex this interaction resulted from a negative correlation between hemodynamic response strength and word presentation rate in the categorization condition, coupled with a positive correlation in the passive listening condition (69% of modulated voxels; green areas in Fig. 4B; post hoc test, p < 0.05, uncorrected). A further 23% of the voxels showed only a positive correlation between hemodynamic response strength and word presentation rate in the passive listening condition (Fig. 4B, red areas). These voxels were located in the right and left HG, with activation peaks at 60, -16, 8 and 52, -24, 16 (see Table 4 and Figure 5).
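The interaction and its post hoc decomposition can be expressed as contrast arithmetic over the six conditions of the 2 × 3 design. This is an illustrative sketch, not the authors' SPM99 code: the condition order, the equally spaced rate weights and the helper `classify_voxel` are our assumptions, and the colour labels merely mirror the figure legend.

```python
import numpy as np

# Assumed condition order (not stated in the report):
# [passive 0.25 Hz, passive 0.5 Hz, passive 1.0 Hz,
#  categ.  0.25 Hz, categ.  0.5 Hz, categ.  1.0 Hz]
task_weights = np.array([1.0, -1.0])        # passive listening minus categorization
rate_weights = np.array([-1.0, 0.0, 1.0])   # linear trend over equally spaced levels

# Effect 1: rate slope steeper during passive listening than during categorization
effect1 = np.kron(task_weights, rate_weights)
# Effect 2: the inverse pattern (rate slope steeper during categorization)
effect2 = -effect1

def classify_voxel(passive_up: bool, categ_down: bool) -> str:
    """Hypothetical post hoc label for an Effect 1 voxel (figure colour code).

    passive_up: response rises with rate during passive listening
    categ_down: response falls with rate during categorization
    """
    if passive_up and categ_down:
        return "green"  # both simple effects present (overlap)
    if passive_up:
        return "red"    # only the passive-listening increase
    if categ_down:
        return "blue"   # only the categorization decrease
    return "none"

# Hypothetical effect sizes for one voxel, in the assumed order (not study data)
betas = np.array([1.0, 1.5, 2.0, 1.2, 0.9, 0.6])
interaction_value = float(effect1 @ betas)
print(effect1, interaction_value, classify_voxel(True, True))
```

Building the interaction as a Kronecker product of the task contrast and the rate contrast guarantees that its weights sum to zero within each task and across rates, so it is insensitive to both main effects.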

A) Effect 1 of the interaction between presentation rate and semantic categorization overlaid on horizontal slices of the group's mean brain (neurological convention). Numbers at the bottom left of each slice denote the z-coordinate in MNI space. Statistical maps thresholded at p < 0.005, corrected for cluster size. B) Post hoc test for the interaction effect within significant clusters (see panel A). Areas of increased response with increased presentation rate during passive listening (red), areas of decreased response with increased presentation rate during semantic categorization (blue), and overlap (green) (see Table 4).

Bar graphs show the interaction effect for local maxima within each activated cluster at increasing presentation rates (local maxima > 8 mm apart; see Table 3). The blue line indicates the passive listening condition, the red line the semantic categorization condition. x-axis: rate in Hz; y-axis: effect size (and standard error) in arbitrary units. Abbreviations: INS = posterior insula, HG = Heschl's gyrus (primary auditory area), PP = planum polare, PT = planum temporale.

The inverse interaction pattern occurred in one cluster within the left dorsal IFG (Fig. 6 and Fig. 7). In 65% of the modulated voxels, this interaction was mediated by a positive correlation between hemodynamic response and word presentation rate in the categorization condition and a negative correlation in the passive listening condition (green areas, Fig. 6B). The remaining voxels showed only a positive correlation with word presentation rate in the categorization condition (Table 5). A similar but non-significant cluster was present in the left ventral IFG. Thus, we found inverse interaction patterns in temporal and frontal structures (see Tables 3, 4, and 5).

A) Main effect of categorization > listening (red) and Effect 2 of the interaction between presentation rate and word processing (blue) overlaid on coronal slices of the group's mean brain (neurological convention). Statistical maps thresholded at p < 0.05 (see Table 3). B) Post hoc test for the interaction effect within significant clusters (blue cluster in panel A). Areas of increased response with increased presentation rate during semantic categorization (red), and overlap (green) with areas that also show a decreased response with increased presentation rate during passive listening (see Table 5).

Bar graphs show the interaction effect for local maxima within each activated cluster at increasing presentation rates (> 8 mm apart; see Table 1). The blue line indicates the passive listening condition, the red line the semantic categorization condition. x-axis: rate in Hz; y-axis: effect size (and standard error) in arbitrary units. Abbreviations: IFG = inferior frontal gyrus.

Upper: region of interest (ROI) centred on the left posterior superior temporal gyrus (-60, -36, 12), superimposed on an SPM 'glass' brain. Within this spherical ROI (radius 12 mm), 84 voxels showed a word presentation rate effect at p < 0.005. Lower: bar graph showing the effect increasing with presentation rate in the ROI (x-axis: presentation rate; y-axis: mean effect size averaged across all voxels within the ROI, in arbitrary units).
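For readers reproducing the ROI analysis, the voxel centres inside a 12 mm sphere can be enumerated directly. This sketch assumes 4 mm isotropic voxels whose centres lie on multiples of 4 mm in MNI space (the ROI centre -60, -36, 12 is divisible by 4); under that assumption the sphere contains 123 voxel centres, of which the 84 reported voxels would be a subset. The grid alignment is an assumption and `sphere_voxels` is a hypothetical helper.

```python
import itertools

VOXEL_MM = 4                 # assumed isotropic voxel size after normalisation
RADIUS_MM = 12               # ROI radius given in the caption
CENTRE_MM = (-60, -36, 12)   # ROI centre given in the caption (MNI, mm)

def sphere_voxels(centre, radius, voxel):
    """Return voxel-centre coordinates (mm) within `radius` of `centre`.

    Assumes voxel centres on multiples of `voxel` mm, so that the
    ROI centre itself is a voxel centre.
    """
    r = radius // voxel  # search half-width in voxels
    coords = []
    for dx, dy, dz in itertools.product(range(-r, r + 1), repeat=3):
        if (dx * dx + dy * dy + dz * dz) * voxel * voxel <= radius * radius:
            coords.append((centre[0] + dx * voxel,
                           centre[1] + dy * voxel,
                           centre[2] + dz * voxel))
    return coords

roi = sphere_voxels(CENTRE_MM, RADIUS_MM, VOXEL_MM)
print(len(roi))
```

The ROI mean plotted in the lower panel would then be the average effect size over whichever of these voxels pass the threshold.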

Discussion

This experiment was designed to evaluate the effect of auditory word presentation rate on hemodynamic responses within speech-related brain areas. Extending previous research, we introduced a top-down variable (semantic categorization vs. passive listening) to study whether the bottom-up effects of presentation rate would interact with the top-down control of semantic processing. In accord with previous studies, we found strong rate-effects bilaterally in HG, the PT, and the PP. Also in accord with previous studies, relative to passive listening, semantic categorization evoked increased hemodynamic responses bilaterally in the ventral and dorsal IFG, extending into the ventral part of the MFG. In addition, we observed activations in a right-sided cluster in the IFG and MFG, located more dorsally than the left-sided IFG activation. Finally, we found a surprising pattern of interactions between semantic categorization and presentation rate bilaterally in the HG, the posterior insula, and the left dorsal IFG. We will discuss these effects separately.

Presentation rate and the auditory cortex

The current results are consistent with previous studies demonstrating that hemodynamic responses in primary and secondary auditory cortex increase with increasing rates of word presentation [18]. In accordance with Dhankhar et al. [12], we found rate effects bilaterally (equally strong in both hemispheres) throughout the auditory cortex, including HG, the PT, and the dorsal bank of the STS. These findings contrast with those presented by Price et al. [14]. Using PET, these investigators reported a linear increase in signal from 0 to 90 words per minute (wpm) in all regions examined except Wernicke's area (-58, -34, 12), leading them to speculate that the left PT (Wernicke's area) works in a time-invariant mode to subserve comprehension. We did not replicate this finding; in our study, the left posterior auditory cortex behaved similarly to the other auditory areas (see Figure 8). In general, our observations indicate that with each word presentation the auditory word processing nodes in HG, PT, and PP were automatically activated, irrespective of whether high-level (categorization) processing was required.
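Expressing the presentation rates of the present study in the units used by Price et al. [14] makes the comparison explicit: 0.25, 0.5 and 1.0 Hz correspond to 15, 30 and 60 wpm, all of which lie within their 0 to 90 wpm range. A trivial conversion sketch (names are illustrative):

```python
RATES_HZ = (0.25, 0.5, 1.0)   # presentation rates of the present study
PRICE_MAX_WPM = 90.0          # upper end of the range examined by Price et al. [14]

def hz_to_wpm(rate_hz: float) -> float:
    """Words per second -> words per minute."""
    return rate_hz * 60.0

study_wpm = [hz_to_wpm(r) for r in RATES_HZ]
within_price_range = all(0.0 <= w <= PRICE_MAX_WPM for w in study_wpm)
print(study_wpm, within_price_range)
```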

Semantic categorization and frontal brain areas

When semantic categorization was explicitly required, there were stronger bilateral hemodynamic responses in the IFG extending into the MFG. However, the left-sided activations were located more ventrally than those on the right, and additional right-sided activations were found in dorsal positions. Previous studies have shown that ventral parts of the left IFG are preferentially active during tasks requiring semantic as opposed to phonological processing, the latter being associated with activations in a more posterior and dorsal part of the IFG [2, 3, 19–24]. Other studies have argued that this area is responsible for response selection in the context of semantic operations [25]. Placing these findings in a more global context, Gabrieli et al. [19] concluded that "activations in left inferior prefrontal cortex reflect a domain-specific semantic working memory capacity that is invoked more for semantic than nonsemantic analyses regardless of stimulus modality, more for initial than for repeated semantic analysis of a word or picture, more when a response must be selected from among many than few legitimate alternatives, and that yields superior later explicit memory for experiences".

The findings of the present study support this conclusion by showing that the left ventral IFG is active during semantic categorization. Interestingly, hemodynamic responses in most parts of this area were independent of word presentation rate, suggesting that the left ventral IFG operates mainly in a task-dependent rather than a stimulus-dependent fashion. Only a small portion of the ventral IFG was influenced by word presentation rate during semantic categorization, and this effect reached only p = 0.07. In contrast, the dorsal part of the left IFG was significantly influenced by word presentation rate: hemodynamic responses in this area increased with increasing presentation rate only during semantic classification, whereas there was an opposite trend during passive listening. Therefore, the dorsal part of the left IFG is also involved in semantic classification, but in a different way from the ventral IFG. Following the argument of Gabrieli et al. [19] and Fiez [2] that the left dorsal IFG is involved in phonological processing, one might suggest that this dorsal part analyses the phonological features of words that later enter semantic analysis. Since each stimulus word comprises several phonological features, increasing the word presentation rate will increase the processing demands placed on this neural processor.

Although most studies found bilateral IFG activations during semantic tasks, activations in the dorsal right IFG have also been reported for other tasks, among them pattern encoding [26], processing of unusual semantic relationships [27], identification of emotional prosody [8], demanding working memory tasks [28], visually and auditorily guided finger movements [29], and learning to associate sensory cues with particular movements according to arbitrary rules [30]. It is difficult to infer from the present data whether any of these processes contributes to the right-sided IFG activation found in our study. However, a study by Rypma et al. [28] might support our findings. These authors found that IFG activations during verbal working memory tasks were left-dominant during easier tasks but right-dominant during difficult tasks, suggesting that the right IFG becomes increasingly active with increasing processing demands. Presumably, our semantic categorization task was more demanding than passive listening, which produced the observed right-sided activation in the IFG.

Interaction between "Presentation Rate" and "Semantic categorization" in temporal and frontal lobes

We found strong interactions between "Presentation Rate" and "Semantic Categorization" with peak activations located bilaterally in the posterior insula (extending into the right HG/PT and the left PP). These interactions were characterized by a strong negative rate effect in the semantic categorization condition (hemodynamic responses decreasing with increasing word presentation rate) and a positive rate effect (hemodynamic responses increasing with increasing word presentation rate) in the passive listening condition. Since the insula is strongly interconnected with temporal and frontal structures [31], this region may play a role in linking together the different neural networks involved in auditory processing. However, although there are substantial data on the anatomical connections between the insula and other brain regions in primates, relatively little is known about the precise function of the human insula in auditory processing.

Cytoarchitectonic studies of post-mortem human brains have revealed profiles for parts of the posterior insula (area PIA) that may correspond to early auditory areas at a level intermediate between primary auditory cortex (A1) and the posterior supratemporal plane (area STA) [32]. Thus, the posterior insula shares some cytoarchitectonic features with auditory cortex. However, functional and lesion studies in humans are rare.

A few lesion studies lend credence to the idea that the insula is involved in aspects of language [33, 34]. It has also been noted that injury to the insula appears to cause aphasia [35, 36]. Some brain imaging studies also demonstrate insular involvement during word generation [37], verbal memory tasks [38], auditory-vocal integration in the context of singing [39], the perception of moving sound [40], speech perception [41], and automatic word processing [42]. A recent study [43] comparing word vs. nonword repetition in literate vs. illiterate subjects reported stronger connectivity between temporal areas and the posterior insula in illiterate subjects and concluded that the posterior insula might serve phonological processing. However, other studies report insular activations to non-auditory or non-verbal stimuli [44–46].

Most of the aforementioned studies found insular activations in the context of verbal or auditory-verbal tasks. Thus, there seems to be a link between verbal-auditory processing and insular function. However, the exact function of the insula in auditory and verbal processing remains unknown. Our data lead us to speculate that the posterior insula might be involved in specific modulation processes. The negative correlation between word presentation rate and hemodynamic response during semantic categorization could be explained by a down-regulation of early auditory areas (including HG and the insula) in situations where specific targets have to be semantically segregated from a stream of auditory stimuli. This selection process might require specific tuning of the auditory networks in HG and the insula, whereas such tuning might not be necessary in the passive listening condition. Additionally, there might be a close functional connection from the insula to the dorsal IFG. In situations where the dorsal IFG is engaged in demanding processing, as during semantic categorization at high presentation rates, the inhibitory influence of the insula might decrease.

Conclusions

The bottom-up factor "word presentation rate" modulated hemodynamic responses bilaterally in the primary and secondary auditory cortices of the superior temporal lobe, suggesting that these areas operate in a stimulus-dependent fashion. The top-down factor "semantic categorization" modulated hemodynamic responses in the left and right ventral IFG and in the right dorsal IFG extending into the MFG, supporting earlier studies that also used semantic tasks. Interactions between these factors were found bilaterally in the medial HG, the adjacent posterior insula, and the left dorsal IFG. The interaction in the left dorsal IFG might indicate that phonological processing is controlled in this area, whereas the interaction effects in the insula and HG can be seen in the context of modulating functions. Overall, this study demonstrates that examining interaction effects between top-down and bottom-up factors helps to disentangle the functions of language-related neural networks.

Methods

Subjects

Ten healthy right-handed male subjects (age 21–27 years) participated. Subjects gave written informed consent according to the guidelines of the Research Center Juelich before participating in the study. Hand preference was assessed with Annett's 12-item questionnaire [47], which allowed us to select subjects who were consistently right-handed (CRH). CRH was defined as performing all 12 tasks with the right hand, with up to two "either" preferences being acceptable. Female subjects were not examined because it has been reported that women may have a more bilateral language representation than men [48].
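The CRH criterion can be written as a simple rule over the 12 questionnaire items. This is a sketch under stated assumptions: the response coding ("right", "left", "either") and the function name are our own choices for illustration.

```python
from collections import Counter

def is_consistent_right_hander(responses):
    """Apply the CRH criterion to 12 handedness-questionnaire items.

    `responses` holds one string per item: "right", "left" or "either"
    (hypothetical coding). CRH = no "left" answers and at most two
    "either" answers, i.e. every other item performed with the right hand.
    """
    if len(responses) != 12:
        raise ValueError("expected 12 item responses")
    counts = Counter(responses)
    unknown = set(counts) - {"right", "left", "either"}
    if unknown:
        raise ValueError(f"unexpected responses: {unknown}")
    return counts["left"] == 0 and counts["either"] <= 2

print(is_consistent_right_hander(["right"] * 10 + ["either"] * 2))
```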

fMRI measurements

BOLD functional magnetic resonance images were obtained using a 1.5 T Siemens Magnetom Vision system (Siemens, Erlangen) with echo-planar imaging capabilities and a radiofrequency (RF) head coil (gradient-echo EPI, TR = 6 s, TE = 66 ms, FOV = 200 × 200 mm², flip angle = 90°, matrix size = 64 × 64, in-plane resolution 3.125 × 3.125 mm², slice thickness = 3 mm, interslice gap 0.3 mm, 16 slices oriented parallel to the AC-PC line, positioned using a midsagittal scout image). Additionally, a high-resolution anatomical image was acquired (MP-RAGE, T1-weighted gradient-echo pulse sequence, TR = 11.4 ms, TE = 4.4 ms, flip angle = 15°, FOV = 250 mm, matrix size = 256 × 256, 128 sagittal slices, in-plane resolution 0.98 × 0.98 mm², slice thickness = 1.25 mm).
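The geometric parameters above are internally consistent, which can be checked with a few lines of arithmetic. The sketch assumes that the interslice gap occurs only between adjacent slices; the report does not state the total slab extent, so the coverage values are derived rather than reported, and the helper names are illustrative.

```python
def in_plane_resolution(fov_mm: float, matrix: int) -> float:
    """Pixel size = field of view / matrix size."""
    return fov_mm / matrix

def slab_extent(n_slices: int, thickness_mm: float, gap_mm: float) -> float:
    """Outer extent of a slice stack, assuming gaps only between adjacent slices."""
    return n_slices * thickness_mm + (n_slices - 1) * gap_mm

epi_pixel_mm = in_plane_resolution(200.0, 64)    # EPI: reported as 3.125 mm
t1_pixel_mm = in_plane_resolution(250.0, 256)    # MP-RAGE: reported as 0.98 mm
epi_slab_mm = slab_extent(16, 3.0, 0.3)          # derived EPI coverage
t1_slab_mm = 128 * 1.25                          # derived sagittal T1 coverage
print(epi_pixel_mm, t1_pixel_mm, epi_slab_mm, t1_slab_mm)
```

The EPI stack therefore covers roughly 52.5 mm, which is why the slices were positioned relative to the AC-PC line rather than covering the whole brain.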

Experimental set-up

After the structural scan, subjects performed six consecutive fMRI runs of 8.4 min each. A 2 × 3 factorial block design was employed, with two levels of word processing (passive and active) and three levels of word presentation rate (0.25 Hz, 0.5 Hz, 1.0 Hz). Each run began with a 0.4-min interval to allow the fMRI signal to stabilize. This was followed by alternating 1-min rest (off) and 1-min activation (on) periods; each run contained four of these 2-min off/on cycles. In the passive listening runs, subjects were asked to listen carefully to the presented stimuli, but no response was required. In the semantic categorization runs, subjects were asked to press a response key when they heard an animal word; animal words made up 20% of the presentations. Within each word processing condition, the order of the presentation-rate runs was counterbalanced across subjects. However, the three passive listening runs were always performed before the three semantic categorization runs, so that prior exposure to the categorization task would not prompt subjects to engage in semantic classification during passive listening.
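The timing parameters determine both the run length and the number of words presented. As a sketch, assuming that every 1-min activation period is filled at the nominal rate and that no word is repeated, the counts below add up to the 840 nouns listed in the stimulus description; the per-run word counts and the expected number of targets are derived, not reported, values.

```python
RATES_HZ = (0.25, 0.5, 1.0)   # word presentation rates
LEAD_IN_MIN = 0.4             # stabilisation interval at the start of each run
CYCLES_PER_RUN = 4            # off/on cycles per run
OFF_MIN = 1.0                 # rest period
ON_MIN = 1.0                  # activation period (words presented)
N_TASKS = 2                   # passive listening, semantic categorization
TR_S = 6.0
TARGET_SHARE = 0.20           # proportion of animal words in categorization runs

run_min = LEAD_IN_MIN + CYCLES_PER_RUN * (OFF_MIN + ON_MIN)   # run length in minutes
volumes_per_run = round(run_min * 60.0 / TR_S)                # one volume per TR

# Assumption: each activation period is filled at the nominal rate.
words_per_run = {r: round(r * 60.0 * ON_MIN * CYCLES_PER_RUN) for r in RATES_HZ}
words_per_task = sum(words_per_run.values())
total_words = N_TASKS * words_per_task
expected_targets = round(TARGET_SHARE * words_per_task)
print(run_min, volumes_per_run, words_per_run, total_words, expected_targets)
```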

The stimulus material consisted of 840 German one- or two-syllable concrete nouns. Word frequency did not differ significantly between target and non-target words (p = 0.21). The words were spoken by a trained reader, each lasted less than 0.9 s, and they were presented binaurally via an audio playback system with piezoelectric headphones. The words were presented in random order during the 1-min activation periods, and the presentation rate was adjusted by varying the interstimulus interval (ISI). Stimulus intensity was approximately 85 dB SPL. Scanner noise was approximately 90–100 dB, but the tightly fitting headphones attenuated this ambient noise by at least 20 dB. With a TR of 6 s and an acquisition time of less than 2 s per volume, subjects were able to hear approximately 70% of the stimulus words without any masking by scanner noise. Moreover, the noise attenuation provided by the headphones allowed subjects to understand all stimuli, as confirmed in a post-session interview.
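The relation between presentation rate, word duration and the scanner's silent period can be made explicit. In this sketch the 2 s acquisition time and the 0.9 s word duration are the upper bounds given above, so the silent gaps and the quiet fraction of each TR are lower bounds; the helper names are hypothetical.

```python
TR_S = 6.0
ACQ_MAX_S = 2.0     # acquisition time per volume (upper bound from the text)
WORD_MAX_S = 0.9    # word duration (upper bound from the text)

def soa_s(rate_hz: float) -> float:
    """Stimulus onset asynchrony: time between successive word onsets."""
    return 1.0 / rate_hz

def min_silence_between_words_s(rate_hz: float) -> float:
    """Lower bound on the silence between the end of one word and the next onset."""
    return soa_s(rate_hz) - WORD_MAX_S

# At least this fraction of every TR is free of gradient noise
quiet_fraction = (TR_S - ACQ_MAX_S) / TR_S

for rate in (0.25, 0.5, 1.0):
    print(f"{rate} Hz: SOA {soa_s(rate):.2f} s, "
          f"gap >= {min_silence_between_words_s(rate):.2f} s")
print(f"quiet fraction of each TR >= {quiet_fraction:.2f}")
```

A quiet fraction of at least two thirds per TR is of the same order as the roughly 70% of unmasked words reported above; the exact figure also depends on how word onsets fell relative to volume acquisitions, which the sketch does not model.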

Image analysis

Image analysis was performed on a PC workstation using MATLAB (Mathworks Inc., Natick, MA, USA) and SPM99 software http://www.fil.ion.ucl.ac.uk/spm. For analysis, all images were realigned to the fifth volume to correct for motion artefacts, co-registered with each subject's anatomical (T1-weighted) image, resliced and normalised into standard stereotaxic space (4 mm isotropic voxels) using the template provided by the Montreal Neurological Institute [49], and smoothed with an 8 mm full-width-at-half-maximum Gaussian kernel. The data were analyzed by statistical parametric mapping within the general linear model framework of SPM99. The effect of global differences in scan intensity was removed by scaling each scan in proportion to its global intensity.
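Two of the preprocessing steps reduce to short formulas. The sketch converts the 8 mm FWHM to the standard deviation of the Gaussian kernel and illustrates proportional scaling of each scan to a common global mean. The target value of 100 and the plain whole-volume mean are simplifying assumptions; SPM computes the global value over a thresholded set of voxels, so this is not a reimplementation of SPM99.

```python
import math
import numpy as np

def fwhm_to_sigma(fwhm: float) -> float:
    """Convert a Gaussian FWHM to its standard deviation: FWHM / (2 * sqrt(2 * ln 2))."""
    return fwhm / (2.0 * math.sqrt(2.0 * math.log(2.0)))

sigma_mm = fwhm_to_sigma(8.0)   # kernel SD in mm
sigma_vox = sigma_mm / 4.0      # kernel SD in voxels on a 4 mm grid

def proportional_scaling(scans, target=100.0):
    """Rescale each scan so that its global mean equals `target`.

    scans: array of shape (n_scans, n_voxels). The global mean here is taken over
    all voxels, a simplification of SPM's thresholded global estimate.
    """
    scans = np.asarray(scans, dtype=float)
    global_means = scans.mean(axis=1, keepdims=True)
    return scans * (target / global_means)

# Two toy "scans" that differ only by a global intensity factor of 10
scaled = proportional_scaling([[1.0, 2.0, 3.0], [10.0, 20.0, 30.0]])
print(round(sigma_mm, 2), round(sigma_vox, 2), scaled)
```

After scaling, the two toy scans become identical, which is exactly the global intensity difference this step is meant to remove.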

The statistical analysis corresponds to a random-effects analysis, which allows generalization to the population as a whole. It was implemented in a two-stage procedure: first, subject-specific contrasts of interest were estimated for each condition (semantic classification and passive listening at three presentation rates, resulting in six contrast images per subject); these contrast images were then entered into a second-level analysis to produce parametric maps of the T statistic. The first-level contrasts contain the parameter estimates for each of the six conditions, which were modelled with box-car stimulus functions convolved with a hemodynamic response function [50].
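To illustrate what one condition regressor of the first-level model looks like, the sketch below builds the box-car for a single run (0.4-min lead-in, then four 1-min off/1-min on cycles starting with rest) and convolves it with a double-gamma hemodynamic response function, sampling the result once per TR. The HRF parameters only approximate SPM's canonical function, and the fine time step `dt` is an assumption; this is not the SPM99 implementation.

```python
import math
import numpy as np

def gamma_pdf(t, shape, scale=1.0):
    """Gamma probability density on an array of non-negative times (s)."""
    t = np.asarray(t, dtype=float)
    return t ** (shape - 1) * np.exp(-t / scale) / (math.gamma(shape) * scale ** shape)

def double_gamma_hrf(dt, length_s=32.0):
    """Approximation of SPM's canonical HRF: a gamma response peaking near 5 s
    minus one sixth of a gamma undershoot peaking near 15 s, normalised to unit sum."""
    t = np.arange(0.0, length_s, dt)
    h = gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0
    return h / h.sum()

def block_regressor(tr=6.0, lead_in_s=24.0, cycles=4, off_s=60.0, on_s=60.0, dt=0.1):
    """Box-car for one run (lead-in, then rest/activation cycles starting with rest),
    convolved with the HRF on a fine grid and sampled at the start of each TR."""
    n_fine = int(round((lead_in_s + cycles * (off_s + on_s)) / dt))
    t = np.arange(n_fine) * dt
    phase = (t - lead_in_s) % (off_s + on_s)
    boxcar = ((t >= lead_in_s) & (phase >= off_s)).astype(float)
    predicted = np.convolve(boxcar, double_gamma_hrf(dt))[:n_fine]
    step = int(round(tr / dt))
    return predicted[::step]

regressor = block_regressor()   # one predicted value per acquired volume
print(len(regressor), np.round(regressor[:24], 2))
```

Because the HRF is normalised to unit sum, the regressor settles at 1 late in each activation period; in SPM the scaling of the regressor only changes the units of the parameter estimates, not the resulting T statistics.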

Significant activations were analyzed in a repeated-measures ANOVA with the main effects presentation rate (PR) and semantic categorization (SC). Voxels showing significant interaction effects (p < 0.005, uncorrected for the entire brain volume unless otherwise stated) were excluded from the analysis of main effects. The resulting voxel values for both main effects and the interaction constitute statistical parametric maps of the T statistic (SPM(T)), which were then transformed to the unit normal distribution (SPM(Z)). Activations were thresholded at t = 2.69 (p = 0.005) with a spatial extent criterion of p < 0.05, corrected for multiple comparisons. Because of the remarkably high between-subject variability in the anatomy and cytoarchitecture of frontal brain regions [51, 52], and because we had prespecified hypotheses about activations in the inferior frontal areas, we applied a lenient statistical threshold for these regions (height threshold p = 0.05 and extent criterion p < 0.05, uncorrected for multiple comparisons).
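The reported height threshold can be related to the degrees of freedom of the second-level model. The report does not state the error degrees of freedom; as an assumption, a repeated-measures model with 60 images (10 subjects × 6 conditions) and rank 15 (six condition columns plus ten subject columns, which share the constant) leaves 45 degrees of freedom, and the one-tailed critical t at p = 0.005 for 45 degrees of freedom is close to the reported 2.69. The sketch computes this quantile by numerical integration so that it needs only the standard library; the design itself is our assumption.

```python
import math

def t_pdf(x, df):
    """Student t probability density."""
    c = math.exp(math.lgamma((df + 1) / 2) - math.lgamma(df / 2)) / math.sqrt(df * math.pi)
    return c * (1 + x * x / df) ** (-(df + 1) / 2)

def upper_tail(t, df, n=2000):
    """P(T > t) for t >= 0, via Simpson's rule on [0, t] and symmetry about 0."""
    h = t / n
    s = t_pdf(0.0, df) + t_pdf(t, df)
    for i in range(1, n):
        s += (4 if i % 2 else 2) * t_pdf(i * h, df)
    return 0.5 - s * h / 3

def critical_t(p, df, lo=0.0, hi=20.0):
    """One-tailed critical value t with P(T > t) = p, found by bisection."""
    for _ in range(60):
        mid = (lo + hi) / 2
        if upper_tail(mid, df) > p:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

# Assumed second-level design: 10 subjects x 6 conditions; condition and
# subject columns both span the constant term, so the rank is 6 + 10 - 1.
n_images = 10 * 6
rank = 6 + 10 - 1
df = n_images - rank
t_crit = critical_t(0.005, df)
print(df, round(t_crit, 3))
```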

References

Binder JR, Frost JA, Hammeke TA, Bellgowan PS, Springer JA, Kaufman JN, Possing ET: Human temporal lobe activation by speech and nonspeech sounds. Cereb Cortex. 2000, 10: 512-528. 10.1093/cercor/10.5.512.

Fiez JA: Phonology, semantics, and the role of the left inferior prefrontal cortex. Hum Brain Mapp. 1997, 5: 79-83. 10.1002/(SICI)1097-0193(1997)5:2<79::AID-HBM1>3.3.CO;2-M.

Poldrack RA, Wagner AD, Prull MW, Desmond JE, Glover GH, Gabrieli JD: Functional specialization for semantic and phonological processing in the left inferior prefrontal cortex. Neuroimage. 1999, 10: 15-35. 10.1006/nimg.1999.0441.

Binder JR, Frost JA, Hammeke TA, Rao SM, Cox RW: Function of the left planum temporale in auditory and linguistic processing. Brain. 1996, 119: 1239-1247.

Belin P, Zatorre RJ, Lafaille P, Ahad P, Pike B: Voice-selective areas in human auditory cortex. Nature. 2000, 403: 309-312. 10.1038/35002078.

Scott SK, Blank CC, Rosen S, Wise RJ: Identification of a pathway for intelligible speech in the left temporal lobe. Brain. 2000, 123 (Pt 12): 2400-2406. 10.1093/brain/123.12.2400.

Fiebach CJ, Friederici AD, Muller K, von Cramon DY: fMRI evidence for dual routes to the mental lexicon in visual word recognition. J Cogn Neurosci. 2002, 14: 11-23. 10.1162/089892902317205285.

Buchanan TW, Lutz K, Mirzazade S, Specht K, Shah NJ, Zilles K, Jancke L: Recognition of emotional prosody and verbal components of spoken language: an fMRI study. Brain Res Cogn Brain Res. 2000, 9: 227-238. 10.1016/S0926-6410(99)00060-9.

Frost JA, Binder JR, Springer JA, Hammeke TA, Bellgowan PS, Rao SM, Cox RW: Language processing is strongly left lateralized in both sexes. Evidence from functional MRI. Brain. 1999, 122: 199-208. 10.1093/brain/122.2.199.

Phillips DP, Hall SE, Hollett JL: Repetition rate and signal level effects on neuronal responses to brief tone pulses in cat auditory cortex. J Acoust Soc Am. 1989, 85: 2537-2549.

Binder JR, Rao SM, Hammeke TA, Frost JA, Bandettini PA, Hyde JS: Effects of stimulus rate on signal response during functional magnetic resonance imaging of auditory cortex. Brain Res Cogn Brain Res. 1994, 2: 31-38. 10.1016/0926-6410(94)90018-3.

Dhankhar A, Wexler BE, Fulbright RK, Halwes T, Blamire AM, Shulman RG: Functional magnetic resonance imaging assessment of the human brain auditory cortex response to increasing word presentation rates. J Neurophysiol. 1997, 77: 476-483.

Mummery CJ, Ashburner J, Scott SK, Wise RJS: Functional neuroimaging of speech perception in six normal and two aphasic subjects. J Acoust Soc Am. 1999, 106: 449-456. 10.1121/1.427068.

Price C, Wise R, Ramsay S, Friston K, Howard D, Patterson K, Frackowiak R: Regional response differences within the human auditory cortex when listening to words. Neurosci Lett. 1992, 146: 179-182. 10.1016/0304-3940(92)90072-F.

Mechelli A, Friston KJ, Price CJ: The effects of presentation rate during word and pseudoword reading. J Cogn Neurosci. 2000, 12: 145-156. 10.1162/089892900564000.

Penhune VB, Zatorre RJ, MacDonald JD, Evans AC: Interhemispheric anatomical differences in human primary auditory cortex: probabilistic mapping and volume measurement from magnetic resonance scans. Cereb Cortex. 1996, 6: 661-672.

Westbury CF, Zatorre RJ, Evans AC: Quantifying variability in the planum temporale: a probability map. Cereb Cortex. 1999, 9: 392-405. 10.1093/cercor/9.4.392.

Binder JR, Rao SM, Hammeke TA, Yetkin FZ, Jesmanowicz A, Bandettini PA, Wong EC, Estkowski LD, Goldstein MD, Haughton VM, et al: Functional magnetic resonance imaging of human auditory cortex. Ann Neurol. 1994, 35: 662-672.

Gabrieli JD, Poldrack RA, Desmond JE: The role of left prefrontal cortex in language and memory. Proc Natl Acad Sci U S A. 1998, 95: 906-913. 10.1073/pnas.95.3.906.

Illes J, Francis WS, Desmond JE, Gabrieli JD, Glover GH, Poldrack R, Lee CJ, Wagner AD: Convergent cortical representation of semantic processing in bilinguals. Brain Lang. 1999, 70: 347-363. 10.1006/brln.1999.2186.

Devlin JT, Russell RP, Davis MH, Price CJ, Moss HE, Fadili MJ, Tyler LK: Is there an anatomical basis for category-specificity? Semantic memory studies in PET and fMRI. Neuropsychologia. 2002, 40: 54-75. 10.1016/S0028-3932(01)00066-5.

Desmond JE, Gabrieli JD, Glover GH: Dissociation of frontal and cerebellar activity in a cognitive task: evidence for a distinction between selection and search. Neuroimage. 1998, 7: 368-376. 10.1006/nimg.1998.0340.

Devlin JT, Russell RP, Davis MH, Price CJ, Wilson J, Moss HE, Matthews PM, Tyler LK: Susceptibility-induced loss of signal: comparing PET and fMRI on a semantic task. Neuroimage. 2000, 11: 589-600. 10.1006/nimg.2000.0595.

Thompson-Schill SL, D'Esposito M, Aguirre GK, Farah MJ: Role of left inferior prefrontal cortex in retrieval of semantic knowledge: a reevaluation. Proc Natl Acad Sci U S A. 1997, 94: 14792-14797. 10.1073/pnas.94.26.14792.

Golby AJ, Poldrack RA, Brewer JB, Spencer D, Desmond JE, Aron AP, Gabrieli JD: Material-specific lateralization in the medial temporal lobe and prefrontal cortex during memory encoding. Brain. 2001, 124: 1841-1854. 10.1093/brain/124.9.1841.

Seger CA, Desmond JE, Glover GH, Gabrieli JD: Functional magnetic resonance imaging evidence for right-hemisphere involvement in processing unusual semantic relationships. Neuropsychology. 2000, 14: 361-369. 10.1037//0894-4105.14.3.361.

Rypma B, Prabhakaran V, Desmond JE, Glover GH, Gabrieli JD: Load-dependent roles of frontal brain regions in the maintenance of working memory. Neuroimage. 1999, 9: 216-226. 10.1006/nimg.1998.0404.

Jancke L, Loose R, Lutz K, Specht K, Shah NJ: Cortical activations during paced finger-tapping applying visual and auditory pacing stimuli. Brain Res Cogn Brain Res. 2000, 10: 51-66.

Toni I, Passingham RE: Prefrontal-basal ganglia pathways are involved in the learning of arbitrary visuomotor associations: a PET study. Exp Brain Res. 1999, 127: 19-32. 10.1007/s002210050770.

Augustine JR: Circuitry and functional aspects of the insular lobe in primates including humans. Brain Res Brain Res Rev. 1996, 22: 229-244. 10.1016/S0165-0173(96)00011-2.

Rivier F, Clarke S: Cytochrome oxidase, acetylcholinesterase, and NADPH-diaphorase staining in human supratemporal and insular cortex: evidence for multiple auditory areas. Neuroimage. 1997, 6: 288-304. 10.1006/nimg.1997.0304.

Augustine JR: The insular lobe in primates including humans. Neurol Res. 1985, 7: 2-10.

Ojemann GA, Whitaker HA: Language localization and variability. Brain Lang. 1978, 6: 239-260.

Vignolo LA, Boccardi E, Caverni L: Unexpected CT-scan findings in global aphasia. Cortex. 1986, 22: 55-69.

Metter EJ, Riege WH, Hanson WR, Jackson CA, Kempler D, van Lancker D: Subcortical structures in aphasia. An analysis based on (F-18)-fluorodeoxyglucose, positron emission tomography, and computed tomography. Arch Neurol. 1988, 45: 1229-1234.

McCarthy G, Blamire AM, Rothman DL, Gruetter R, Shulman RG: Echo-planar magnetic resonance imaging studies of frontal cortex activation during word generation in humans. Proc Natl Acad Sci U S A. 1993, 90: 4952-4956.

Grasby PM, Frith CD, Friston KJ, Simpson J, Fletcher PC, Frackowiak RS, Dolan RJ: A graded task approach to the functional mapping of brain areas implicated in auditory-verbal memory. Brain. 1994, 117 (Pt 6): 1271-1282.

Perry DW, Alivisatos B, Evans AC, Meyer E, Petrides M, Zatorre R: Neural network supporting auditory-vocal integration. J Acoust Soc Am. 1993, 93: 2403-2404.

Griffiths TD, Bench CJ, Frackowiak RS: Human cortical areas selectively activated by apparent sound movement. Curr Biol. 1994, 4: 892-895.

Zatorre RJ, Evans AC, Meyer E, Gjedde A: Lateralization of phonetic and pitch discrimination in speech processing. Science. 1992, 256: 846-849.

Raichle ME, Fiez JA, Videen TO, MacLeod AM, Pardo JV, Fox PT, Petersen SE: Practice-related changes in human brain functional anatomy during nonmotor learning. Cereb Cortex. 1994, 4: 8-26.

Petersson KM, Reis A, Ingvar M: Cognitive processing in literate and illiterate subjects: a review of some recent behavioral and functional neuroimaging data. Scand J Psychol. 2001, 42: 251-267. 10.1111/1467-9450.00235.

Bottini G, Corcoran R, Sterzi R, Paulesu E, Schenone P, Scarpa P, Frackowiak RS, Frith CD: The role of the right hemisphere in the interpretation of figurative aspects of language. A positron emission tomography activation study. Brain. 1994, 117: 1241-1253.

Coghill RC, Talbot JD, Evans AC, Meyer E, Gjedde A, Bushnell MC, Duncan GH: Distributed processing of pain and vibration by the human brain. J Neurosci. 1994, 14: 4095-4108.

Annett M: The distribution of manual asymmetry. Br J Psychol. 1972, 63: 343-358.

Shaywitz BA, Shaywitz SE, Pugh KR, Skudlarski P, Fulbright RK, Bronen RA, Fletcher JM, Shankweiler DP, Katz L, Gore JC: Sex differences in the functional organization of the brain for language. Nature. 1995, 373: 607-609. 10.1038/373607a0.

Evans AC, Collins DL, Mills SR, Brown ED, Kelly RL, Peters TM: 3D statistical neuroanatomical models from 305 MRI volumes. Proc IEEE-Nuclear Science Symposium and Medical Imaging. 1993, 3: 1813-1817.

Friston KJ, Frith CD, Turner R, Frackowiak RS: Characterizing evoked hemodynamics with fMRI. Neuroimage. 1995, 2: 157-165. 10.1006/nimg.1995.1018.

Rajkowska G, Goldman-Rakic PS: Cytoarchitectonic definition of prefrontal areas in the normal human cortex: II. Variability in locations of areas 9 and 46 and relationship to the Talairach Coordinate System. Cereb Cortex. 1995, 5: 323-337.

Tomaiuolo F, MacDonald JD, Caramanos Z, Posner G, Chiavaras M, Evans AC, Petrides M: Morphology, morphometry and probability mapping of the pars opercularis of the inferior frontal gyrus: an in vivo MRI analysis. Eur J Neurosci. 1999, 11: 3033-3046. 10.1046/j.1460-9568.1999.00718.x.

Goldman-Rakic PS: The prefrontal landscape: implications of functional architecture for understanding human mentation and the central executive. Philos Trans R Soc Lond B Biol Sci. 1996, 351: 1445-1453.

Amunts K, Schleicher A, Burgel U, Mohlberg H, Uylings HB, Zilles K: Broca's region revisited: cytoarchitecture and intersubject variability. J Comp Neurol. 1999, 412: 319-341. 10.1002/(SICI)1096-9861(19990920)412:2<319::AID-CNE10>3.0.CO;2-7.

Acknowledgements

The authors would like to thank Karsten Specht for helping us to analyse these data. This study was financed by the Deutsche Forschungsgemeinschaft (DFG JA 737/5-1 and DFG JA 737/5-2).

Rights and permissions

This article is published under an open access license.

About this article

Cite this article

Noesselt, T., Shah, N.J. & Jäncke, L. Top-down and bottom-up modulation of language related areas – An fMRI Study. BMC Neurosci 4, 13 (2003). https://doi.org/10.1186/1471-2202-4-13

DOI: https://doi.org/10.1186/1471-2202-4-13