Abstract

Background

In recent theoretical developments, the information available (e.g. genotypes) divides the original population into two groups: animals with this information (selected animals) and animals without this information (excluded animals). These developments require inversion of the part of the pedigree-based numerator relationship matrix that describes the genetic covariance between selected animals (A22). Our main objective was to propose and evaluate methodology that takes advantage of any potential sparsity in the inverse of A22 in order to reduce the computing time required for its inversion. This potential sparsity is brought out by searching the pedigree for dependencies between the selected animals. Jointly, we expected distant ancestors to provide relationship ties that increase the density of matrix A22 but that their effect on

might be minor. This hypothesis was also tested.

Methods

The inverse of A22 can be computed from the inverse of the triangular factor (T-1) obtained by Cholesky root-free decomposition of A22. We propose an algorithm that sets up the sparsity pattern of T-1 using pedigree information. This algorithm provides positions of the elements of T-1 worth to be computed (i.e. different from zero). A recursive computation of is then achieved with or without information on the sparsity pattern and time required for each computation was recorded. For three numbers of selected animals (4000; 8000 and 12 000), A22 was computed using different pedigree extractions and the closeness of the resulting to the inverse computed using the fully extracted pedigree was measured by an appropriate norm.

Results

The use of prior information on the sparsity of T-1 decreased the computing time for inversion by a factor of 1.73 on average. Computational issues and practical uses of the different algorithms were discussed. Cases involving more than 12 000 selected animals were considered. Inclusion of 10 generations was determined to be sufficient when computing A22.

Conclusions

Depending on the size and structure of the selected sub-population, gains in time to compute are possible and these gains may increase as the number of selected animals increases. Given the sequential nature of most computational steps, the proposed algorithm can benefit from optimization and may be convenient for genomic evaluations.

Similar content being viewed by others

Background

For a population of n animals, the numerator relationship matrix (A), is an n-by-n matrix with the following properties:

-

(1)

a ij is the numerator relationship coefficient between two animals i and j among n, as defined by Wright [1];

-

(2)

diagonal element a ij is equal to 1 + F i , where F i is the inbreeding coefficient [1] of animal i;

-

(3)

A is non-singular and symmetric: for two animals i and j among n, a ij = a ji .

Because the numerator relationship matrix describes the additive similarity between animals, it is an important element explaining genetic (co)variances between animals and has numerous applications in the field of animal genetics, the most important one being its use in setting up the mixed model equations for estimation of breeding values [2].

In some situations, a particular type of information (genomic information, foreign genetic evaluation, phenotypes on a particular trait, etc.) is only available for some animals, which are selected for this particular purpose, while other animals are excluded. The original population can therefore be split into two sub-populations:

-

(1)

a sub-population composed of animals called “excluded” hereafter;

-

(2)

a sub-population composed of animals called “selected” hereafter.

Splitting the original population in this way leads to the following partition of A:

The four blocks include the relationships between excluded animals (A11), between excluded and selected animals (A12 and A21) and between selected animals (A22).

Recent methodological developments in animal breeding require inversion of A22, for example for genotyped animals in the context of genomic prediction using a single-step procedure [3–5]. Another example concerns external animals when integrating foreign information into a local genetic evaluation [6]. It is also noteworthy that the pedigree-based relationship matrix A22 and the genomic relationship matrix (G, [7]) show structural similarities: both matrices express polygenic/genomic similarities among animals inherited from ancestors that are not represented in these matrices. Thus, the present research on A22 can be extended to genomic relationships in G.

Based on the original work by Henderson [8] on inversion of A, a general framework for the inversion of relationship matrices follows (see Appendix 1). Henderson outlined a method that is based on the root-free factorization of A and showed the high sparsity of the inverse triangular factor of A. An efficient use of this sparsity then allows direct computation of A-1 as a sum of individual contributions based on a chronological reading of the pedigree. Applying partitioned matrix theory, van Arendonk et al. [9] gave a general expression for the sum of individual contributions outlined by Henderson [8]: an additional row/column in A leads to updating its inverse by increasing the order by 1 and by summing the square of a very sparse vector to A-1. The very sparse vector is the corresponding row (below the diagonal) of the inverse triangular factor. All details on these developments are given in Appendix 1.

When required, the inverse of A22 is currently obtained by brutal inversion algorithms (e.g. generalized inverse algorithm). In these algorithms, any potential sparsity occurring in the matrix to invert or in its inverse is brought out by matrix computations. In contrast, the main objectives of this paper were to investigate how potential sparsity in the inverse triangular factor of A22 can be characterized using only the pedigree, thus without requiring matrix computations, and then use the sparsity pattern of the inverse triangular factor of A22 in the computation of its inverse. Whereas the structure of the inverse triangular factor is known for A (positions are given by the pedigree; values are a priori known), no information is available on the structure of the inverse triangular factor of A22, neither on the positions of non-zero elements nor on the values of these elements. Moreover, the inverse triangular factor of A22 may be close to dense. Therefore, we addressed our objective in the following five steps:

-

(1)

inversion of A 22 with an algorithm that uses the inverse triangular factor;

-

(2)

development of an algorithm that uses pedigree information to find the positions of the non-zero elements (sparsity pattern) in the inverse triangular factor of A 22;

-

(3)

inversion of A 22 with the algorithm of step (1) but restricting computations to the non-zero elements identified by the algorithm in step (2);

-

(4)

assessment of the time reduction when computing the inverse as in step (3) instead of as in step (1);

-

(5)

and evaluation of the effect of the number of generations in the pedigree used to compute A 22, in order to reduce density of the inverse triangular factor.

Methods

Blockwise inversion of A22

For simplicity, we assume that we are working on the last selected animal, indexed as animal n. Similarly to inversion of A (see equation 1.6 in Appendix 1), assume that A22 is partitioned in a sub-matrix Z, of order (n-1), a (n-1)-long vector y, and a scalar m as:

Using blockwise inversion, can be recursively computed using the following equation:

where s is a scalar equal to m - y′Z- 1y.

Computing b = Z- 1y and defining α = s- 1 simplifies equation (2) as follows:

Similarly, as for A (see Appendix 1), there is a link between vector b and the root-free Cholesky factorization of A22 (A22 = TDT′), in that –b′ corresponds to the last row of the inverse triangular factor of A22 (T-1).

Equation (3) shows that can be constructed recursively by adding a vector product to the previous result (Z-1). This recursive construction of will be called “Algorithm A” and implies, from the second row to the last row, the computation of the whole vector b.

If an animal and its parents are all selected, vector b is as sparse as in the case of A, i.e. the only non-zero elements of b correspond to parents. Restricting computations to these elements, i.e. discarding computations involving elements that we know equal 0, results in saving computing time. Such a case is, however, highly trivial. In the next sections, we propose a method to deal with more complex cases.

Contribution of selected animals to relationships in A22: characterizing the sparsity pattern of T-1

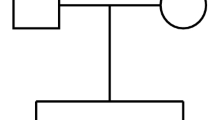

For animal n, vector b is the row of T-1 that spans from column 1 to column (n-1). By definition (b = Z-1y thus Zb = y), vector b contains the required coefficients to compute relationships (y) of animal n with the n-1 preceding animals from the relationships between those n-1 preceding animals (Z). In the case of A, only known parents of animal n are required to compute its relationships with the preceding animals. Therefore, only positions of known parents have a value different from zero in vector b. In the case of A22, some selected animals replace the parents if they are excluded: the value in b of these selected animals is different from 0, which means that they are needed to compute relationships between selected animals (y) from the relationships between all selected animals (Z). This can be illustrated by the example pedigree in Figure 1 and Tables 1 and 2, which specifyA22 and T-1 for the example pedigree. Three cases are outlined and detailed in the following:

-

(i)

animal G has two known parents, E and F. Animal E is excluded; its parent C (grandparent of G) is thus required (T - 1 GC ≠ 0) to explain the relationship between C and G (A 22;CG = 0.25).

-

(ii)

animal K has one known parent, F, that is also selected. Any relationship that K shares with other selected animals is necessarily and only explained by F ().

-

(iii)

animal L has one known parent, E, that is excluded. Its selected halfsib (G) and the selected parent of G (F, which is unrelated to L) are required, among others, to explain any relationship that L shares with other selected animals.

Animals that are required to explain relationships of a given selected animal with other selected animals will hereafter be denoted as the contributors of this selected animal. Contributors of a selected animal can be found by an exhaustive search of selected animals that replace any excluded parent of the selected animal. Their determination uses the pedigree and returns which elements of b (and thereby of T-1) are worth computing because they are expected to be non-zero. By subtraction, we obtain which elements are zeros, which is referred to as the “sparsity pattern” of T-1 in the following. In the next sub-section, we propose a heuristic algorithm that streamlines the determination of the sparsity pattern of T-1. Similar methodologies [10, 11] have been developed for the triangular factor of a symmetric-positive definite matrix rather than the inverse of the triangular factor.

An algorithm to set up the sparsity pattern

Our proposed heuristic algorithm to set up the sparsity pattern of the inverse triangular factor of A22 (see pseudo-code below) requires two inputs: the pedigree (of length n0, renumbered and ordered: parents precede progeny) and the subpopulation to which any animal belongs: excluded (population status is 1) or selected (population status is 2). The purpose of the algorithm is to complete two vectors of variable length for any animal i. The first vector (r(i)) contains references to excluded parents of animal i. The second vector (c(i)) contains selected contributors of animal i. The positions of non-zeros in the i-th row in T-1 (sparsity pattern) includes any position of the i-th row that is listed in c(i).

Initialize a vector x as the integer sequence from 1 to n0.

For any animal i in the whole population (i goes from 1 to n0),

-

(0)

initialize two vectors c (i) and r (i) as empty vectors

-

(1)

if the status of animal i is 2, then append element i to c (i); or else if the status of animal i is 1, append element i to r (i)

-

(2)

if the sire s of animal i is known and its status is 2, then append element s to c (i); or else if s is known but its status is 1, append vector r (s) to r (i)

-

(3)

if the dam d of animal i is known and its status is 2, then append element d to c (i); or else if d is known but its status is 1, append vector r (d) to r (i)

-

(4)

if the status of animal i is 2 and the vector r (i) is not empty, then:or else if the status of animal i is 1 or if the vector r (i) is empty, do nothing.

-

a.

Select all elements of x that are at positions given in r (i), remove duplicates and gather them in a temporary list t

-

b.

for any element k in list t,

-

i.

Append to c (i) the elements of vector c (k) not yet in c (i)

-

ii.

Select elements of x that are equal to k and replace them by i;

-

a.

If the whole population was selected (i.e. A22 = A, every animal has status 2), it can be easily deduced from the algorithm that only the animal itself (in step (1)) and its known sire and dam (in steps (2) and (3)) would enter vector c(i). The corresponding T-1 would be highly sparse, as it is for A. This also means that if numerous parents are selected, then this algorithm is expected to run very fast.

An example of the use of this algorithm is given in the Results section.

Use of the sparsity pattern in blockwise inversion of A22

The algorithm for blockwise inversion of A22 (Algorithm A, summarized in equation (3)) is modified to account for sparsity and will be called Algorithm B. For simplicity, we still consider the last selected animal (animal n). Algorithm B reduces computations to obtain b from y = Zb (equations 2 and 3) by three procedures, depending on the number (k) of elements in the corresponding vector c(n) and the length of b (n-1).

The first procedure (called EMPTY) is used when k = 0 (c(n) is empty). If so, only α is added to element . The value of α is just the inverse of A22,nn.

The second procedure (called PROD, for matrix PRODuct) is used when k is smaller than but relatively close to (n-1). In such a case, we perform a line-wise partition (equation (4)) of b and Z-1 between non-zeros (of subscript u) and null (subscript v) entries of b in order to avoid useless computations:

Since (n-1) is the number of elements in b and k the number of elements in b u , k dot products (of (n-1)-long vectors) would be performed instead of (n-1) dot products (of (n-1)-long vectors).

The third procedure (called LS, for Linear System of lower size) is used when k is much smaller than (n-1). In such a case, we extend the previous partition of b to a blockwise partition of Z and y (the non-zero and zero elements of b are respectively indexed by u and v):

Then, applying partitioned matrix theory to equation (5) returns the following expressions for b u and b v (with ):

Vector b u can be expressed in terms of b v () and, since b v is a vector of zeros, it comes that computing b u shrinks to compute only . In other words, the linear system Zb = y is replaced by a linear system of lower size Z uu b u = y u , and solving it is valuable only if the number of operations required to solve it is lower than the number of operations to achieve the product in procedure PROD. We chose the less expensive procedure (PROD or LS) by estimation of the number of expected floating-point multiplications.

Experimental design for tests on real populations

In order to evaluate Algorithm B in comparison with regular inversion (Algorithm A), different A22 were computed on the basis of a real pedigree provided by the Luxembourg breeders society CONVIS. This pedigree includes dairy cows from Luxembourg with their ancestors tracing back up to 24 generations and contains 387 499 animals. Statistics of the pedigree data are Table 3.

Selected sub-populations of three sizes (4000, 8000 and 12 000 animals) were designed and are identified hereafter as the three size scenarios S4k, S8k and S12k. Animals of the selected sub-populations were randomly chosen from a pool of animals born after 1999 (128 465 animals) on the assumption that only recent animals could be of interest (those being genotyped or in production).

Because a pedigree with a lower number of extracted generations is expected to provide a sparser T-1, the impact of the number of extracted generations was also evaluated for each size scenario. This enabled us to assess how many extracted generations were required in the pedigree to compute a that is a sufficient approximation to the computed using all available ancestors in the pedigree, which will be referred to as the “real inverse”. Extracting no animals other than selected animals refers to “generation 0”: the population is only made of selected animals. When extracting one generation of ancestors (“generation 1”), excluded parents enter the population. When extracting two generations of ancestors (“generation 2”), excluded grandparents also enter the population, and so on. Details on the number of animals extracted and the percentage of extraction after each generation, considered as the ratio between the number of animals in the population and the maximum number of animals available in the pedigree, are outlined in Figure 2.

Pedigree extraction facts. Generation by generation extraction of the pedigree of the selected population for three size scenarios (green: S4k; orange: S8k; blue: S12k): number of extracted animals (■) and proportion of selected animals in the extracted population (●), expressed as a percentage. Extraction went up to 23 generations for scenario S4k and up to 24 generations for scenarios S8k and S12k.

Deviations from the real inverse were measured by the following norm: , where is the inverse of A22 computed using g extracted generations and is the real inverse. This norm can be interpreted as the average difference between the value of any element of and its corresponding value in . The two matrices are equal when N is equal to 0.

Matrix A22 was computed in two steps. Inbreeding coefficients were first computed for each size scenario and number of extracted generations. The average inbreeding coefficient was never greater than 1.23 % and the greatest inbreeding coefficient was 44.53%. Matrix A22 was then computed using the method of Colleau [12].

Two test software programs

In order to evaluate potential gains in time when using Algorithm B instead of Algorithm A to invert A22, we developed two test programs in Fortran 95. The programs were neither optimized for speed, nor parallelized. Therefore, all comparisons have to be interpreted as relative figures.

The first program applies the recursive construction of the inverse, as outlined in Algorithm A (equations (2) and (3)). Potential null entries in y are checked to avoid useless computations when performing product Z-1y.

The second program restricts the same recursive construction of the inverse to non-zero elements by procedures EMPTY, PROD and LS. Potential null entries in y are also taken into account when performing the product Zuy (procedure PROD). The linear system Z uu b u = y u (procedure LS) is solved by factorization and by backward and forward substitutions.

For both programs, computing time was recorded using Fortran intrinsic subroutine CPU_TIME. For the program that uses Algorithm B, computing time includes the time required to determine the sparsity pattern. All computations and file storage were performed using double precision (15 digits). Each job was repeated 20 times on an Intel® Xeon® 64-bit processor (RAM: 8 Gb, cache size: 6 Mb, clock speed: 3 GHz).

Results

Characterizing the sparsity pattern: a numerical example

The algorithm to characterize the sparsity pattern was applied to the example pedigree of Figure 1 and specified in Table 4 (including animal status). The algorithm starts by initializing a vector x equal to [1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12]. Then, we consecutively treat each animal depending on its status and the status of its parents.

Population status of the animal is given in a 4th column: 1 for excluded, 2 for selected.

Animal 1. Status 1 and unknown parents. Thus, r(1) = [1], c(1) = [-] and x = x.

Animal 2. Status 1 and unknown parents. Thus, r(2) = [2], c(2) = [-] and x = x.

Animal 3. Status 2 and known parents (1 and 2; both status 1). Thus, c(3) = [3] and r(3) = [1, 2]. The list of elements of x that match r(3) is [1, 2]. Then, c(3) = [3, c(1), c(2)] = [3] and any element of x equal to 1 or 2 is replaced by 3, returning x = [3, 3, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12].

Animal 4. Status 1 and unknown parents. Thus, r(4) = [4], c(4) = [-] and x = x.

Animal 5. Status 1 and known parents (status 1 and 2). Thus, c(5) = [3] and r(5) = [5, r(4)] = [5, 4]. No list to set up because animal has status 1; x = x.

Animal 6. Status 2 and unknown parents. Thus, r(6) = [-], c(6) = [6] and x = x.

Animal 7. Status 2 and known parents (status 1 and 2). Thus, c(7) = [7, 6] and r(7) = [r(5)] = [5, 4]. The list of elements of x that match r(7) is [4, 5]. Then, c(7) = [7, 6, c(5), c(4)] = [7, 6, 3] and any element of x equal to 5 or 4 is replaced by 7, returning x = [3, 3, 3, 7, 7, 6, 7, 8, 9, 10, 11, 12].

Animal 8. Status 1 and one known parent (status 1). Thus, r(8) = [8, r(4)] = [8, 4], c(8) = [-] and x = x.

Animal 9. Status 2 and one known parent (status 1). Thus, c(9) = [9] and r(9) = [r(8)] = [8, 4]. The list of elements of x that match r(9) is [7, 8]. Then, c(9) = [9, c(8), c(7)] = [9, 7, 6, 3] and any element of x equal to 8 or 7 is replaced by 9, returning x = [3, 3, 3, 9, 9, 6, 9, 9, 9, 10, 11, 12]

Animal 10. Status 2 and one known parent (status 1). Thus, c(10) = [10] and r(10) = [r(1)] = [1]. The list of elements of x that match r(10) is [3]. Then, c(10) = [10, c(3)] = [10, 3] and any element of x equal to 3 is replaced by 10, returning x = [10, 10, 10, 9, 9, 6, 9, 9, 9, 10, 11, 12]

Animal 11. Animal has status 2 and has one known parent (status 2). Thus, c(11) = [11, 6] and r(11) = [-]. No list to set up because r(11) is empty; x = x

Animal 12. Status 2 and one known parent (status 1). Thus, c(12) = [12] and r(12) = [r(5)] = [5, 4]. The list of elements of x that match r(12) is [9]. Then, c(12) = [12, c(9)] = [12, 9, 7, 6, 3] and any element of x equal to 9 is replaced by 12, returning x = [10, 10, 10, 12, 12, 12, 12, 12, 12, 10, 11, 12]

Vectors c(i) of the selected animals (3, 6, 7, 9, 10, 11 and 12) contain the non-zero elements of T-1 (Table 5) and these match with T-1 in Table 2.

Effect of accounting for sparsity on CPU time for inversion of A22

Algorithms A and B were both applied to the matrices created by different pedigree extractions of the three size scenarios. The elapsed CPU time results (averaged over 20 repetitions) are shown in Figure 3. Taking sparsity into account (Algorithm B) instead of using an inversion algorithm with cubic complexity (Algorithm A) reduced the elapsed CPU time for computing the inverse. For instance, the relative gains in computing speed of Algorithm B for the fully extracted pedigree were 1.67 faster for S4k, 1.75 faster for S8k, and 1.77 faster for S12k.

CPU time required for inversion of A 22 by two algorithms. Elapsed CPU time required for inversion of A22 of three different sizes (green: 4000; orange: 8000; blue: 12000), computed using pedigrees with different numbers of extracted generations, by algorithms B (■) and A (●). Red lines show upper and lower confidence intervals (99%; 20 repetitions).

Effect of the number of extracted generations on accuracy of

For each size scenario, A22 was computed using different numbers of extracted generations and the inverses were compared (Figure 4) to computed using the fully extracted pedigree (after 23, 24 and 24 generations respectively for scenarios S4k, S8k and S12k) by computing the norm N. As shown in Figure 4, regardless of the size of the matrix, the norm stabilized after 14 generations to values less than 1E-13, which can be attributed to errors due to precision.

Discussion

Computation time required by the algorithm to characterize the sparsity pattern

Figure 5 shows the elapsed CPU time (averaged over 20 repetitions) when running the proposed algorithm to determine the sparsity pattern of T-1 on populations with different numbers of selected animals (4000; 8000; 12 000) and that were extracted from several generations. The curves of the three size scenarios (S4k, S8k and S12k) presented a similar behavior. When the population consists only of selected animals (generation 0), the elapsed time was less than 1 second (S4k: 0.03 s, S8k: 0.11 s and S12k: 0.29 s). For this case, only non-zero entries occur for selected sires or dams of selected animals, a fortiori present in the pedigree. Then, elapsed CPU time increased linearly up to the 15th extracted generation, although at a different rate for the different size scenarios. Beyond that point, adding ancestors did not affect the elapsed time. These results have to be related with pedigree extraction (Figure 2): does it make sense to spend more time for additional generations? Almost all available ancestors have entered the population after extracting 10 generations (between 95-99% of the number of animals in the last extraction round). However, elapsed CPU time continued to increase at the same rate from generations 10 to 15. For instance, in scenario S12k, adding ~3% of the final population cost an additional ~4 seconds (or ~22% of the total elapsed time). The usefulness of this small group of remote ancestors for inversion of A22 is discussed hereafter (sub-section “Number of generations to extract”).

CPU time required for determination of the sparsity pattern of T-1. Elapsed CPU time required by the proposed algorithm for determination of the sparsity pattern of T-1, by number of extracted generations, for three size scenarios (green: S4k, orange: S8k and blue: S12k). Red lines show upper and lower confidence intervals (99%; 20 repetitions).

For the fully extracted population (after 23, 24 and 24 generations for scenarios S4k, S8k and S12k, respectively), there was a close-to-linear relationship between the size of the selected population and the elapsed CPU time (approximately 6 seconds for 4000 additional animals in the selected sub-population). The effective computational complexity of this algorithm is difficult to establish, however, because it mostly depends, first, on how the population was split (for instance, a selected sub-population that includes mainly a few lines or families would not contain that many excluded parents) and, secondly, on how the population is structured (depth of the pedigree, effective size of the population, average inbreeding). The embedded loop in the algorithm (step (4b) in the pseudo-code) is the main computational bottleneck and performs k iterations. In a population of n0 animals, if k is related to the two factors mentioned above (i.e. splitting and structure of the population), then the computing time required by the algorithm would behave as n0 · k, where k would be a case-specific factor. This agrees with the observations in Figure 5.

Memory requirements of the algorithm to characterize the sparsity pattern

For a population of n0 animals with n selected animals, vectors c(i) and y(i) have the greatest RAM requirements. In our implementation, vector y(i) stores few elements (positions of excluded ancestors) for all animals (thus ~ n0 integers). For selected animals, vector c(i) stores non-zero positions and includes approximately integers, where is the average density of T-1 (number of non-zeros in the lower part of T-1 averaged by line). For excluded animals, c(i) accounts for potential selected ancestors, therefore including approximately integers, where is the average number of selected ancestors per excluded animal. Memory would thus be allocated for approximately integers. None of these integers may be declared as 3-byte integers when n0 is lower than 224 (i.e. when pedigree contains less than 16.77 millions of animals).

Use of the algorithm to characterize the sparsity pattern on greater populations

If additional animals are selected, then the proportion of selected animals in the population would likely increase. In fact these additional animals would either bring new excluded ancestors (case 1), share ancestors with already selected animals (case 2), or have no registered parents in the pedigree (case 3). The two last cases are expected to be more important as the number of selected animals increases. Therefore, matrix T-1 of such a population should get sparser. These expectations were confirmed by randomly picking animals from the pool of 128 465 animals born after 1999, simulating eight larger selected sub-populations of 16 000 up to 128 000 animals. Table 6 gives sizes and proportions of the selected sub-populations. Using a computer with higher memory resources (64 Gb of RAM), the sparsity pattern of these new situations was computed. Then, the degree of sparsity was assessed as the percentage of null entries in the lower triangular part of T-1 for these new situations, as well as for previous size scenarios. The results in Figure 6 show that the degree of sparsity remained the same for low percentages of selected animals in the population (lower than 20%), while the degree of sparsity increased linearly beyond approximately 20 k animals in these specific cases. The average degree of sparsity by number of selected animals corresponded to the average number of contributors for a given animal in a given size situation. Figure 7 shows that the average number of contributors was linearly related to the number of selected animals up to ~80 k selected animals, beyond which the average number of contributors was constant. We expected the average number of contributors to decrease as the number of selected animals increased. These new selected animals would then cover more of the relationships due to excluded animals. Note that the average number of contributors would be less than 2 if all animals were selected (i.e. A22 = A).

Computation time required by the algorithm for inversion of A22 using the sparsity pattern (Algorithm B)

When running Algorithm B, the procedure (EMPTY, PROD or LS) to compute vector b was chosen according to the estimated number of floating-point multiplications to be performed. A view of this choice along all (n-1) lines of T-1 is given in Figure 8 for each size scenario (A22 was always computed using a fully extracted pedigree). Due to prior reordering of the pedigree by generation, the first lines of T-1 correspond to founders (unrelated animals) and are thus empty. Procedure LS occurred less than procedures EMPTY and PROD but was evenly distributed among line numbers, particularly for scenario S12k.

Considering Algorithm B led to estimation of the computational complexities based on the expected number of floating-point multiplications involved in the different tasks achieved by Algorithm B, as specified in Table 7. Total complexity is detailed for treatment of one line and for treatment of one full matrix of order n in Table 7, where treatment refers to all tasks to be performed, i.e. computing b and adding bb′ to the previous inverse. If k (average number of contributors) is considered as independent of n, the most complex term is O(n2 · k), which is required when using the PROD procedure (proportion p P of the total). The PROD procedure is used less frequently for greater matrices (see Figures 8 and 9 beyond 80 k animals). Treating k as independent of n is also a more reasonable assumption for greater matrices (Figure 7), since k is undoubtedly related to n for smaller matrices. The total complexity for a matrix of order n becomes:

where represents the average density of the matrix. The most complex term is tempered by two very low coefficients: the proportion of times the LS procedure is used (p L ), which may be very low for small matrices (Figure 9), and the cube of the average density , which was lower than 0.5 in our examples (Figure 6) for matrices of order beyond 32 000. Thus, Algorithm B seems more suitable for large matrices than for small matrices, regardless of whether there is dependence between n and k or not.

The issue of numerical stability was also addressed. When using procedure PROD, the result of the previous iteration was used in the current iteration through α and b. Accumulating errors could lead to instabilities and/or divergences. However, in LS procedure, the result of the previous iteration does not affect the b that is computed. Choosing the LS procedure at regular intervals among iterations using the PROD procedure (see Figure 8) stops the accumulation of errors that could have resulted from continuously choosing the PROD procedure. Therefore, interlacing choices for both procedures is a good way to prevent numerical instability. Independence between iterations also allows procedure LS to parallelized.

Memory requirements of the algorithm for inversion of A22 using sparsity pattern (Algorithm B)

Algorithm B requires allocation of more than twice the RAM than Algorithm A because it cannot store the results of the inversion in the input matrix. This is due to procedure LS working on different parts of A22. However, since elements that are required for LS are identified when determining the sparsity pattern, they could be stored separately in order to reduce the amount of RAM required. For that reason, sparsity patterns should be established prior to computation of A22 to determine which relationships are worth being computed.

Number of generations to extract

The depth of the pedigree to be used for instance in genetic evaluations, is still a question of debate, and often moderately deep pedigrees are used, especially when only recent data is analyzed.

Results in Figure 4 suggest that pedigree from a limited number of generations (5 to 10) is sufficient to compute with reasonable accuracy. The explanation is that distant ancestors do not greatly enhance a relationship. For instance, a common ancestor to animals i and j that enters the pedigree after g extracted generations and that is older than any selected animal, can only add up to 2- 2g to the value of the relationship between i and j. In generation g, i and j can have a maximum of 2g common ancestors. Therefore, extracting an additional generation can increase the relationship between i and j by only up to δ = 2- g. Regardless of the number of animals added to the pedigree when extracting generation 10, the maximum change brought to any relationship reduces to less than 0.001, which would have a minor effect on the inverse scale, as confirmed by Figure 4.

However, computing time required for determination of the sparsity pattern increases linearly after 10 generations (Figure 5). Thus, limiting extraction of pedigree to 10 generations appears to be a good balance between taking into account relationships due to distant ancestors and computing time. Applying a similar study to pedigree extractions for routine genetic evaluations would be meaningful and may lead us to consider extracting a number of generations instead of a birth year limit, which is current common practice.

Practical use in a genomic background

For genomic evaluations, two specific situations where is needed may require the use of Algorithm B. First, as explained above and shown in equation (3), the inverse of the matrix is computed recursively by adding a block specific to the current animal to the previous inverse. At each genomic evaluation, could therefore be stored in a file and reused at the next evaluation cycle. At each evaluation, the matrix would be enhanced by adding newly genotyped animals. However, this approach has some limits:

-

(1)

Animals have to be listed by generation order and only animals younger than those already genotyped can be added because older animals may cause changes in the sparsity pattern. This could be easily implemented in a cattle breed such as Holstein, where only few animals are key ancestors of the breed.

-

(2)

The resulting file may be large but this could be reduced by sparse storage approaches.

Meyer et al. [13] recently applied a similar methodology for computation of the inverse of the genomic relationship matrix (G): their methodology also updates the previous inverse of G, necessitating its storage on disk from an evaluation to the next one.

Secondly, when using a pedigree of only one extracted generation, which contains genotyped animals and their ungenotyped parents, inversion of A22 is even faster (Figure 6) and the inverse seems to be a reasonable approximation of computed with a full extracted pedigree (see Figure 4 and Discussion here above). Such a fair approximation of may be useful as a preconditioner to solve A22x = v, for instance, as required in the iterative solution of MME of single-step genomic BLUP (best linear unbiased prediction) proposed by Legarra and Ducrocq [14].

Current limits

The algorithm to determine the sparsity pattern of the inverse triangular factor of A22 is obviously useful only in inversion algorithms that use the inverse triangular factor. For other inversion algorithms, the algorithm to determine the sparsity pattern should not be useful.

Inversion algorithms that use the inverse triangular factor are useful in certain cases (e.g., for updating an inverted matrix or for obtaining quick approximations), but they would be less efficient, in terms of computing time, for the single purpose of inversion. The time required by Algorithms A and B was compared with the time required by subroutine “dkmxhf.f90” (K. Meyer, University of New England, Australia), which is a regular and efficient inversion algorithm. For inversion of the three different orders of A22 (4000, 8000 and 12 000), computing times of dkmxhf.f90 were lower than computing times obtained with Algorithm A and similar to those obtained with Algorithm B (accounting for sparsity). For small numbers of extracted generations, computing times were slightly lower for Algorithm B than dkmxhf.f90, but were greater when greater numbers of generations were extracted. However, the computing speed of Algorithm B can benefit from several optimizations (e.g., parallelization of the LS procedure and use of specific libraries for matrix products).

For computational ease, a small population (less than 1 million animals) was used in this study. Gains in computing time have to be tested for other sizes of population. This study was also restricted to only one population by size scenario and used repetitions (20) of the algorithm on the same data. Use of a Holstein population may also be criticized because although the average computed inbreeding was never greater than 1.23%, such a population has few key ancestors. Having the key ancestors in the selected sub-population might avoid density, because they would be contributors of many other selected animals.

Conclusions

The determination of the sparsity pattern of T-1 using pedigree information is a prior step that allows gains in computing time for inversion based on the use of T-1. This allowed the computing time for inversion of matrices of three different sizes (4000, 8000 and 12 000 selected animals) to be reduced by a factor 1.73 on average. Gains in computing time are expected to be higher if the number of selected animals exceeds 80 000. Memory requirements for inversion of such a matrix would increase and the algorithm would become numerically more stable, since the LS procedure would become more important than the PROD procedure. Moreover, computation of the inverse by a recursive method may be very helpful in the case of genomic prediction, where a new batch of younger selected animals at each upcoming evaluation must be added to the previous inverse matrix already computed.

The results on the number of pedigree generations required for the selected animals suggest that no more than 14 generations should be extracted. If the working precision is less than 15 digits, this can even be reduced. A good balance between computing time for determination of the sparsity pattern and accuracy may be achieved with 10 extracted generations.

Appendix

Appendix 1: Inversion of the numerator relationship matrix using the inverse triangular factor

The numerator relationship matrix (A) can be factorized as

Henderson [8] proposed a recursion rule to compute the triangular factor T:

In equation (1.2), T(i-1) and T(i) are two matrices of respective sizes (i-1) and i. They refer T computed after, respectively, (i-1) and i recursions. Vector b(i) is a vector of parental contributions: it summarizes the linear dependency between parents and offspring. This vector is null except on positions corresponding to parents of i where it is equal to 0.5. Henderson [8] also showed that the inverse triangular factor (T-1) only contains three different values: 0, 1and -0.5, since it is obtained by triangular matrix inversion (equation 1.3). The elements of the diagonal are equal to 1 and the lower off-diagonal elements are equal to the vector corresponding to the ith animal; they contain thus only 0 and -0.5 elements.

Besides T, the diagonal matrix D is computed one element at a time according to Henderson [8] and Quaas [15]. At the ith recursion D(i) has the form:

Replacing equations (1.2) and (1.4) in (1.1) shows that the recursion rule for computation of T is actually identical to that of the tabular method (equation 1.5.3, Emik and Terril [16]; Henderson [8]), since it computes the last below-diagonal row in A(i) as a linear combination of rows in A(i-1).

Replacing in equation (1.5.3) by a ii (the equivalence can be easily shown) expresses the tabular method as in van Arendonk et al. [9]:

Applying the partitioned matrix theory to equation (1.6), van Arendonk et al. [9] structured A-1 as a sum of n updates of a null matrix (recursion rule in equation 1.7) involving multiplication of a sparse vector (-b(i)) by itself.

The sparse vector -b(i) is actually the transpose of the i-th below-diagonal row of T-1 (see equation 1.3). Such a construction of A-1 requires thus to know the following:

-

(1)

the positions and values of non-zero elements in b (i), i.e. the structure of T -1;

-

(2)

some elements of the original matrix, to compute d ii as .

After meeting these requirements (determination of the structure of the inverse triangular factor and computation of some elements of the original matrix), the same framework was extended to the inversion of other relationship matrices used in animal breeding (e.g. gametic relationship matrix [17], dominance [18] and epistasis [19] effects or covariance matrix of marked QTL effects [20]).

References

Wright S: Coefficients of inbreeding and relationship. American Nat. 1922, 56: 330-338. 10.1086/279872.

Henderson CR: Sire evaluation and genetic trends. Proceedings of the Animal Breeding and Genetics Symposium in Honour of JL Lush. Edited by: American Society of Animal Science and American Dairy Science Association Champaign. 1973, Champaign, IL: , 10-41.

Misztal I, Legarra A, Aguilar I: Computing procedures for genetic evaluation including phenotypic, full pedigree, and genomic information. J Dairy Sci. 2009, 92: 4648-4655. 10.3168/jds.2009-2064.

Christensen OF, Lund MS: Genomic prediction when some animals are not genotyped. Genet Sel Evol. 2010, 42: 2-10.1186/1297-9686-42-2.

Gengler N, Nieuwhof G, Konstantinov K, Goddard M: Alternative single-step type genomic prediction equations. Book of Abstracts of the 63rd Annual Meeting of the European Association of Animal Production: 27-31 August 2012. 2012, Bratislava Wageningen: Wageningen Academic Publishers, 131-

Vandenplas J, Gengler N: Comparisons and improvements of different Bayesian procedures to integrate external information into genetic evaluations. J Dairy Sci. 2012, 95: 1513-1526. 10.3168/jds.2011-4322.

VanRaden PM: Efficient methods to compute genomic predictions. J Dairy Sci. 2008, 91: 4414-4423. 10.3168/jds.2007-0980.

Henderson CR: A simple method for computing the inverse of a numerator relationship matrix used in prediction of breeding values. Biometrics. 1976, 32: 69-83. 10.2307/2529339.

van Arendonk JAV, Tier B, Kinghorn BP: Use of multiple genetic markers in prediction of breeding values. Genetics. 1994, 137: 319-329.

Gilbert JR: Predicting structure in sparse matrix computations. SIAM J Matrix Anal Appl. 1994, 15: 62-79. 10.1137/S0895479887139455.

George A, Liu JWH: An optimal algorithm for symbolic factorization of symmetric matrices. SIAM J Comput. 1980, 9: 583-593. 10.1137/0209044.

Colleau JJ: An indirect approach to the extensive calculation of relationship coefficients. Genet Sel Evol. 2002, 34: 409-421. 10.1186/1297-9686-34-4-409.

Meyer K, Tier B, Graser HU: Technical note: updating the inverse of the genomic relationship matrix. J Anim Sci. 2013, 91: 2583-2586. 10.2527/jas.2012-6056.

Legarra A, Ducrocq V: Computational strategies for national integration of phenotypic, genomic and pedigree data in a single-step best linear unbiased prediction. J Dairy Sci. 2012, 95: 4629-4645. 10.3168/jds.2011-4982.

Quaas RL: Computing the diagonal elements and inverse of a large numerator relationship matrix. Biometrics. 1976, 32: 949-953. 10.2307/2529279.

Emik LO, Terril CE: Systematic procedures for calculating inbreeding coefficients. J Hered. 1949, 40: 51-55.

Schaeffer LR, Kennedy BW, Gibson JP: The inverse of the gametic relationship matrix. J Dairy Sci. 1989, 72: 1266-1272. 10.3168/jds.S0022-0302(89)79231-6.

Hoeschele I, VanRaden PM: Rapid inversion of dominance relationship matrices for noninbred populations by including sire by dam subclass effects. J Dairy Sci. 1991, 74: 557-569. 10.3168/jds.S0022-0302(91)78203-9.

VanRaden PM, Hoeschele I: Rapid inversion of additive by additive relationship matrices by including sire-dam combination effects. J Dairy Sci. 1991, 74: 570-579. 10.3168/jds.S0022-0302(91)78204-0.

Fernando RL, Grossman M: Marker assisted selection using best linear unbiased prediction. Genet Sel Evol. 1989, 21: 467-477. 10.1186/1297-9686-21-4-467.

Acknowledgements

Luxembourg breeders association CONVIS is gratefully acknowledged for providing the pedigree data used in this study. PF acknowledges the following peoples and institution: Fonds National de la Recherche Luxembourg (FNR) for funding through AFR grant, I Misztal for hosting at Animal and Dairy Sciences Department of University of Georgia and for providing computing facilities, G Gorjanc for hosting in Animal Science Department of University of Ljubljana, J Vandenplas for helpful discussions, C Charles and L Lecharlier for mathematical advising, C Bastin, JFDumasy and D. TeCroney for editing help, and several members of the Numerical Genetics, Genomics and Modeling group of Gembloux Agro-Bio Tech for testing the algorithm. Comments and edits by two anonymous reviewers and by editors are gratefully acknowledged. The authors acknowledge the financial support of the Ministry of Agriculture of the Walloon Region of Belgium through the project “DairySNP” (D31-1274/S1 and D31-1274/S2) and the collaboration with Walloon Breeding Association.

Author information

Authors and Affiliations

Corresponding author

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

PF developed the method, conceived the experimental design, ran the tests and wrote the first draft. NG directed the study and made substantial contributions for the discussion. Both authors participated in writing the manuscript. All authors read and approved the final version.

Authors’ original submitted files for images

Below are the links to the authors’ original submitted files for images.

Rights and permissions

This article is published under license to BioMed Central Ltd. This is an open access article distributed under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Faux, P., Gengler, N. Inversion of a part of the numerator relationship matrix using pedigree information. Genet Sel Evol 45, 45 (2013). https://doi.org/10.1186/1297-9686-45-45

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/1297-9686-45-45