Abstract

The CERN cryogenic facilities demand a versatile, distributed, homogeneous and highly reliable control system. For this purpose, CERN conceived and developed several frameworks (JCOP, UNICOS, FESA, CMW), based on current industrial technologies and COTS equipment, such as PC, PLC and SCADA systems, that comply with these constraints. The cryogenic control system now uses these frameworks, which allow the joint development of the supervision and control layers by defining a common structure for specifications and code documentation. Another important advantage of the CERN frameworks is the possibility to integrate different control systems into a large technical system with communication capability. Such a system is capable of sharing control variables from all accelerator apparatus in order to cope with the operation scenarios. The first implementation of this control architecture started in 2000 for the Large Hadron Collider (LHC). Since then, CERN has continued developing the hardware and software components of the cryogenic control system, building on the experience gained. These developments always aim at increasing safety and improving performance. To overcome the long-term maintenance challenges, key strategies such as the use of homogeneous hardware solutions and the optimization of the maintenance procedures were set up. They ease the development of the control applications and the hardware configuration by allowing a structured and homogeneous approach. Furthermore, they reduce the manpower needed and minimize the financial impact of periodic maintenance. In that context, the standardization of technical solutions at both hardware and software level also simplifies system monitoring, operation and maintenance processes, while providing a high level of availability.

Introduction

Cryogenics has contributed widely to the recent major successes in High Energy Physics (HEP). Increasing requirements have pushed cryogenic engineering developments to a high level of technical excellence. Because of the large number of correlated variables over wide operating ranges, the control systems of large cryogenic apparatus have evolved towards fully automatic operation with minimal human intervention, through enhanced capabilities to handle the complexity of many processes. HEP laboratories have by now accumulated extensive experience in operating such large and complex facilities [1, 2].

The European Organization for Nuclear Research (CERN) has already completed two physics runs, Run 1 (first operational physics campaign, 2009-2013) and Run 2 (second operational run, 2015-2018), with its powerful and complex particle accelerator, the Large Hadron Collider (LHC). The cryogenic control system of the LHC is based on the highest technological standards and is capable of collecting information from instrumentation distributed over long distances, interacting with several process logics comprising thousands of variables and loops, and communicating in different control layers with the operator and the on-call maintenance technician (Tavian: Latest developments in cryogenics at CERN, presented at the 20th National Symposium on Cryogenics-2005 TNSC 2005, Surat, India, Feb. 24-26, 2005, unpublished) [3]. Finally, the control system must also deal with fault diagnostic scenarios and degraded modes in order to sustain the mandatory continuous operation, guaranteeing safe operation and preserving the costly liquid helium inventory [4, 5].

The full LHC (accelerator and detectors) helium inventory amounts to 136 t (130 t and 6 t of helium, respectively). In this paper, the CERN cryogenic control system is described by detailing the control ecosystem as a case study. Section 2 presents the current cryogenic control system architecture, and Section 3 describes the CERN frameworks used to build the cryogenic control system on the functionalities of the CERN accelerator control environment. Section 4 reports the case study of the LHC cryogenic control system, detailing the tunnel cryogenic control architecture and its communication protocols for large distances, and explaining how the needs of helium refrigeration process control were tackled. Finally, the evolution of the control system in view of the next CERN challenge, the High Luminosity LHC project [6], is presented.

The architecture of the cryogenic control system

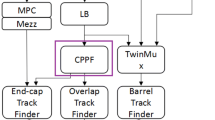

The CERN cryogenic control system operates within the CERN industrial control ecosystem (Fig. 1). It is compliant with the standard pyramidal automation organisation of IEC 62264 and is based on industrial components deployed at the control level. It covers three layers: supervision, control, and instrumentation.

2.1 Supervision layer

All cryogenic systems are supervised through clusters of Data Servers (DS) [7] running the Siemens® WinCC-OA® SCADA system (Fig. 2). These servers currently run on off-the-shelf Linux machines. The Human-Machine Interface (HMI) allows operators to monitor and act on the cryogenic facilities. It is based on Linux or Windows Operator Work Stations (OWS) deployed in the control rooms or in the cryogenic experts' offices. In addition to the classical SCADA features, several functionalities have been added in the last decade: visualization of the process hierarchy, access to the interlocks per device, control loop auto-tuning, direct access to device documentation, etc. This layer also provides an interface or a dedicated connection to the other CERN control systems, the Central Alarm system, the central long-term logging database (NXCALS [8]), layer 3 applications such as the CMMS Infor-EAM [9], and the Accelerator Fault Tracking application (AFT [10]). The Engineering Workstations (EWS) used to configure, develop and maintain the applications are also connected at this level.

2.2 Control layer

The architecture of the cryogenic control system is based on standard industrial components. The control duties are executed within PLCs, with safety interlocks either cabled in the electrical cabinet or programmed in the local protection PLC. The radiation levels expected inside the LHC tunnel depend on the location. They reach up to 1 Gy (air) and 5 × 10¹⁴ n·cm⁻² per year along the regular arcs, where the beams are steered by a dipolar magnetic field and are not subjected to head-on collisions; the arcs represent over 80% of the total LHC circumference. Around interaction or cleaning points the dose can significantly exceed 1000 Gy (air) and 5 × 10¹⁴ n·cm⁻² per year, and in such areas no electronic equipment related to the cryogenic control system is installed. The system is designed to work only with moderate radiation levels (100 Gy (air)) and is of “radiation tolerant” grade [11] (see section “The control system architecture in the LHC tunnel”). The main electronic equipment used for the LHC cryogenic control system has a survival dose of 500 Gy. For most of the LHC tunnel, combining these limits with the radiation levels implies that this equipment can be employed throughout the whole LHC lifespan. In this radiation-exposed area a radiation-tolerant field-bus is used, coupled with standard CERN Front End Computers (FEC). The long-distance integration, site to site or towards the supervision layer, relies on the CERN Ethernet Technical Network (TN), whereas the local networks internal to a cryogenic site are implemented on field-buses using both fibers and copper cables.
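The lifetime argument in this subsection (survival dose compared with yearly dose) reduces to simple arithmetic. The following is a minimal sketch using only the figures quoted in this paper: 1 Gy per year in the arcs, more than 1000 Gy per year near interaction or cleaning points, a 500 Gy survival dose, and the roughly 50-year accelerator lifetime mentioned in Section 2.4. The function and constant names are illustrative and do not come from any CERN tool.

```python
def years_to_survival_dose(survival_dose_gy: float, dose_rate_gy_per_year: float) -> float:
    """Years of exposure before the accumulated dose reaches the survival dose."""
    if dose_rate_gy_per_year <= 0:
        raise ValueError("dose rate must be positive")
    return survival_dose_gy / dose_rate_gy_per_year


SURVIVAL_DOSE_GY = 500.0       # survival dose quoted for the main electronic equipment
ARC_DOSE_RATE = 1.0            # Gy (air) per year, upper bound quoted for the regular arcs
HOT_SPOT_DOSE_RATE = 1000.0    # Gy (air) per year, lower bound quoted near interaction points
LHC_LIFESPAN_YEARS = 50.0      # approximate accelerator lifetime quoted in Section 2.4

arc_years = years_to_survival_dose(SURVIVAL_DOSE_GY, ARC_DOSE_RATE)
hot_spot_years = years_to_survival_dose(SURVIVAL_DOSE_GY, HOT_SPOT_DOSE_RATE)

print(f"Arcs: survival dose reached after {arc_years:.0f} years")
print(f"Near interaction points: survival dose reached after at most {hot_spot_years:.1f} years")
print("Arc equipment outlives the accelerator:", arc_years >= LHC_LIFESPAN_YEARS)
```

With these numbers the arc equipment has a large margin over the accelerator lifetime, whereas equipment near interaction or cleaning points would reach its survival dose within months, which is consistent with no cryogenic control electronics being installed there.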

2.3 Instrumentation layer

The control of the cryogenic system requires a large number of industrial sensors (pressure, temperature, rotation speed, etc.), electronic conditioning units and actuators. Communication with these devices relies either on direct copper cable connections or on a protected, dedicated Ethernet network and industrial field-buses. In the LHC tunnel, the instruments are radiation-resistant to withstand the hostile environment while providing reliable measurements. Their signal conditioners communicate via WorldFIP (1 Mbit/s) controllers, chosen for their radiation tolerance. In the other cases, PROFIBUS is used, interfaced to the PLC via PROFIBUS DP (Decentralized Periphery, 1.5 Mbit/s) or PROFIBUS PA (Process Automation), depending on the equipment connected to it.

2.4 CERN communication infrastructure

Several CERN technical installations, and especially the LHC cryogenic system, are in operation 24/7. They are partially situated in underground tunnels, exposed to radiation in some areas and difficult to access, and some are classified as safety systems. These constraints call for an infrastructure with high availability, on-line remote performance monitoring and control, and a high level of redundancy with automatic switch-over capabilities in case of failure. In essence, the communication services must be up 24 hours a day, 365 days a year, and downtime should be limited to planned maintenance interventions.

The global design of the LHC communication infrastructure for accelerator and detector controls [12] has been guided towards technology solutions available from industry. The communication infrastructure is divided into several communication networks: the general-purpose network, the physics detector controls network, the technical services network, and the transport network for physics data from the experiments. These networks are not necessarily physically separated and may coexist on the same wire. The technical services network, however, is physically separated from the others in order to serve as a second channel for alarm transmission and for cyber-protection.

The expected lifetime of the LHC accelerator complex is about 50 years, and several consolidation efforts and equipment upgrades are foreseen over this lifespan. When the accelerator complex is stopped, maintenance tasks are performed on all the technical infrastructure. This happens every year, for 1 to 2 months at most depending on the maintenance schedule. Optical fiber is the medium of choice for the communication backbone, and IP/Ethernet is the protocol of choice for network connections, with Gigabit capacity in the backbone. The Ethernet network is involved not only in interfacing the supervision and control layers but also in the communication within closed control loops. If reliability is defined as the ability of a system to provide its services to clients under both routine and abnormal circumstances, the reliability of the CERN infrastructure has reached 99.9% over the last two runs [13].

CERN frameworks

CERN developed several frameworks (UNICOS, FESA, CMW, and several others) based on current technologies and commercial off-the-shelf (COTS) equipment, such as industrial PC, PLC and SCADA systems. The cryogenic control system now uses these frameworks to allow the joint development of the supervision and control layers, by defining a common structure for specifications and code documentation. Another important advantage is the possibility to integrate different control systems into a large technical system with communication capability. Such a system is capable of sharing variables from all the accelerator apparatus. For example, the cryogenic control can exchange vacuum signals, beam intensity signals, magnet current signals and any other important variable, in order to cope with the operation scenarios. With a common approach, CERN pools the development efforts for the controls of the accelerator and experiments and avoids duplication of effort. The control system for the cryogenics of the LHC and its experiments adopts the formal approach for process decomposition, the modelling norms (IEC 61512-1) and the engineering standards employed by high-technology industry.

3.1 UNified COntrol System (UNICOS) & Joint COntrol Project (JCOP)

UNICOS and JCOP frameworks are complementary in their approach and employ common components in their stack of technologies. The framework JCOP [14] provides guidelines and tools to implement flexible and complex control systems with a large variety of equipment. Conversely, the UNICOS Framework (Gayet et al.: UNICOS: an open framework, unpublished) defines a strict engineering process and provides tools to develop PLC-based control systems and to generate parts through templates. The component-based approach of both frameworks allows the functionality to be composed as required by the control system developers and to be extended as necessary. JCOP is developed in a collaboration between the LHC Experiments and the CERN industrial controls group, where each of the partners develops, maintains, and supports generic components widely used across CERN.

UNICORE (Fig. 3) was developed to offer UNICOS application developers a configurable user interface that requires neither knowledge of the WinCC OA® scripting language nor the use of basic interfaces. In addition, it offers operators a homogeneous, entirely customizable user interface with features such as navigation between panels and trends (web-browser-like, contextual buttons, pop-up navigation), access to the devices without creating a panel (tree device overview), process alarms and event lists. The supervision device hierarchy is based on front-end devices containing process devices. Typical examples of front-end devices are PLCs, Front-End Computers (FEC), and OPC servers. At the front-end level the communication is performed with time stamping at the source and, optionally, an event publishing mechanism (TSPP). A developer of a UNICOS supervision application therefore only needs to import the front-end and process devices, create the panels of the process views from the catalogue of device widgets, and configure the trends.

The UNICOS framework provides developers with the means to rapidly develop control applications and provides operators with ways to interact with little effort with all the items of the process, from the simplest (e.g. I/O channels) to the high-level compound devices. In addition, UNICOS offers sophisticated tools to diagnose problems. A basic package called UNICOS Continuous Process Control (CPC) is offered to develop process control applications (such a package contains a set of generic software components combined together and configured to produce a control and/or monitoring application).

The UNICOS CPC package proposes a method to design and develop complete process control applications. It is based on the modelling of the process in a hierarchy of devices (I/Os, field and abstract control devices). These devices are used as a common language by process engineers and programmers to define the functional analysis of the process. The package is deployed both in the supervision layer and in the PLCs. Tools have been developed to automate the instantiation of the devices in the supervision and process control layers and to generate either skeletons or complete PLC programs. The production of control code with this methodology is generally data-driven and a complete model driven software production tool has been implemented [16].
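The data-driven generation idea described above can be illustrated with a small sketch. It is not the UNICOS generation tool: the device specification fields, the device names and the generated instantiation syntax are all invented for illustration. It only shows the principle of producing one code line per device from a specification table and a per-type template.

```python
from string import Template

# Hypothetical device specification rows, as a process engineer might list them.
DEVICE_SPECS = [
    {"name": "PT101", "type": "AnalogInput", "unit": "bar", "low": 0.0, "high": 25.0},
    {"name": "CV201", "type": "AnalogOutput", "unit": "%", "low": 0.0, "high": 100.0},
]

# One template per device type; the target syntax is invented for this example.
TEMPLATES = {
    "AnalogInput": Template(
        "AI_$name := AnalogInput(range_min := $low, range_max := $high); // $unit"
    ),
    "AnalogOutput": Template(
        "AO_$name := AnalogOutput(range_min := $low, range_max := $high); // $unit"
    ),
}


def generate_instances(specs):
    """Return one generated instantiation line per device specification."""
    lines = []
    for spec in specs:
        template = TEMPLATES[spec["type"]]  # KeyError flags an unsupported device type
        lines.append(template.substitute(spec))
    return lines


for line in generate_instances(DEVICE_SPECS):
    print(line)
```

Because the specification table is the single source of truth, adding a device or changing a range means editing data rather than hand-written code, which is the property the paragraph above attributes to the methodology.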

The main feature of the framework is to make it easier for operators to follow the process evolution and to understand the dynamics of a situation, the role of each physical component, and the reason for its present position. It also helps to identify the origin of a failure and to predict what may happen in the near future. To this end, operation teams have access to classical SCADA tools (process synoptics, time-stamped alarm and event lists, trend curves with long-term storage, etc.).

In addition, the control model used for UNICOS is based on the IEC 61512-1 model [15]. This model decomposes (Fig. 4) a cryogenic plant (a unit) into smaller components (equipment modules), as many times as necessary, down to the smallest possible equipment module. These equipment modules are then decomposed into control modules, representing the sensors and actuators (field objects) or regulators and alarms (control objects), connected to the process through inputs and outputs (I/O objects). To this end, the device model (Fig. 5) is used in the PLC to allow concurrent access (from the supervision or from the process hierarchy) to the various devices.

When combined with the implementation in the supervision layer, the device model gives operators access through the mechanism shown in Fig. 6.
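The decomposition of a unit into equipment modules, control modules and I/O objects can be sketched as a small tree of data classes. This is only an illustration of the hierarchy described above, not the UNICOS device model itself; all class names, fields and device names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Iterator, List


@dataclass
class ControlModule:
    name: str
    kind: str  # "field" (sensor, actuator) or "control" (regulator, alarm)
    io_channels: List[str] = field(default_factory=list)


@dataclass
class EquipmentModule:
    name: str
    control_modules: List[ControlModule] = field(default_factory=list)
    sub_modules: List["EquipmentModule"] = field(default_factory=list)

    def walk(self) -> Iterator[ControlModule]:
        """Yield every control module in this module and its sub-modules."""
        yield from self.control_modules
        for sub in self.sub_modules:
            yield from sub.walk()


@dataclass
class Unit:
    name: str
    equipment_modules: List[EquipmentModule] = field(default_factory=list)

    def all_io(self) -> List[str]:
        """Flatten the hierarchy into the list of I/O objects it uses."""
        return [
            io
            for module in self.equipment_modules
            for control in module.walk()
            for io in control.io_channels
        ]


# Hypothetical plant: a cold box containing one turbine equipment module.
turbine = EquipmentModule(
    "Turbine1",
    control_modules=[
        ControlModule("CV110", "field", ["AO_CV110", "AI_CV110_POS"]),
        ControlModule("PID110", "control"),
    ],
)
cold_box = EquipmentModule(
    "ColdBox",
    control_modules=[ControlModule("TT100", "field", ["AI_TT100"])],
    sub_modules=[turbine],
)
plant = Unit("Refrigerator", [cold_box])
print(plant.all_io())
```

The recursive `walk` method mirrors the statement that equipment modules can be decomposed "as many times as necessary"; control objects such as the regulator carry no I/O of their own and reach the process through the field objects.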

3.2 Controls middleware framework (CMW)

The CMW [17] framework provides a communication infrastructure for all CERN accelerators, enabling client applications to connect to, control and acquire data from all types of equipment. This is achieved via a common naming protocol called the Device Property Model and a set of common operations that a client application can execute on any server. Additionally, the CMW framework provides libraries for developing client and server applications in C++ and Java®, as well as several centrally managed services required to run the distributed infrastructure.
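The idea of addressing equipment by device and property names with a small set of common operations can be sketched with an in-memory stand-in. This is not the CMW API: the class, the choice of `get`, `set` and `subscribe` as the operations, and the device and property names are assumptions made only to illustrate the naming scheme.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List, Tuple

Key = Tuple[str, str]  # (device name, property name)


class InMemoryDeviceServer:
    """Toy server exposing values addressed by (device, property) pairs."""

    def __init__(self) -> None:
        self._values: Dict[Key, Any] = {}
        self._subscribers: Dict[Key, List[Callable[[Any], None]]] = defaultdict(list)

    def get(self, device: str, prop: str) -> Any:
        """Read the current value of a property (KeyError if never set)."""
        return self._values[(device, prop)]

    def set(self, device: str, prop: str, value: Any) -> None:
        """Write a property and notify every subscriber of that property."""
        self._values[(device, prop)] = value
        for callback in self._subscribers[(device, prop)]:
            callback(value)

    def subscribe(self, device: str, prop: str, callback: Callable[[Any], None]) -> None:
        """Register a callback invoked on each later update of the property."""
        self._subscribers[(device, prop)].append(callback)


server = InMemoryDeviceServer()
received: List[Any] = []
server.subscribe("CryoValve1", "Position", received.append)  # hypothetical names
server.set("CryoValve1", "Position", 42.0)
print(server.get("CryoValve1", "Position"), received)
```

The point of such a model is that a client written against the three operations works unchanged for any equipment type, since only the device and property names differ.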

3.3 Front-end software architecture (FESA)

The FESA framework [18] delivers a complete environment for the equipment specialists to design, develop, test and deploy real-time control software for front-end computers. This framework is used to develop the LHC ring and injection chain front-end equipment software. The primary objective of this framework is to standardize, simplify and optimize the task of writing front-end software. The FESA framework has overcome the diversity of the CERN accelerator front-end equipment software and has paved the way for efficient development, diagnostic and maintenance, becoming the CERN accelerator standard infrastructure for front end software [19].

The LHC cryogenics control system case study

The cryogenic infrastructures for the LHC accelerator [20, 21] and its detectors [22] (Bremer: The cryogenic system for the atlas liquid argon detector, unpublished) include large and complex facilities able to cool down equipment with liquid nitrogen (LN2 at 80 K), liquid helium (LHe at 4.5 K), and superfluid helium down to 1.9 K.

The LHC uses 1700 main superconducting magnets (36 000 t of cold mass), distributed along the 27 km circular tunnel and operating in superfluid helium. The cryogenic equipment is divided into eight subsystems (Fig. 7) operating autonomously. Each of these subsystems comprises two large cryogenic plants to produce the required refrigeration power. A Cryogenic Distribution Line (QRL), mounted in parallel with the magnets, feeds the magnets through jumper connections placed 107 m apart. Several thousand sensors and actuators are mounted either on the magnets or on the QRL.

The associated LHC physics detectors ATLAS and CMS host refrigerators to cool their superconducting magnet systems with liquid helium at 4.5 K (1275 t and 225 t of cold mass, respectively) and cryogenic detectors (calorimeters) using liquefied gases (83 000 liters of ultra-pure liquid argon). The ATLAS liquid argon detector is continuously cooled by a nitrogen refrigerator. The process automation of the cryogenic plants (Fig. 8) and of the cryogenic distribution around the LHC circumference (Fig. 10) was implemented in the year 2000. It is based on Programmable Logic Controllers, each running several hundred control loops, thousands of alarms and interlocks, and phase sequencers. Spread along 27 km and exposed to ionizing radiation, 15 000 cryogenic sensors and actuators are accessed through industrial field networks. To automate the cryogenic process, these facilities demand a homogeneous, versatile, distributed and highly reliable control system.

Since 2000, CERN has developed many additional projects to control its cryogenic systems [23] and to upgrade the hardware and software components of the existing cryogenic installations, and has largely exploited the expertise gained to increase safety and improve performance. To overcome the long-term maintenance challenges, key strategies such as the use of homogeneous hardware solutions and the optimization of the maintenance procedures have been applied, reducing the manpower needed and minimizing the financial impact. They make it possible to meet the deployment requirements in terms of time constraints and costs. The CERN in-house development of the cryogenic control system solutions facilitates system monitoring, operation and maintenance processes, while maintaining high quality and providing a high level of availability.

4.1 The control system of the cryogenic refrigerators of the LHC

In the LHC cryogenic project, the CERN strategy has always been to split the responsibility for the cryogenic plant process and the development of the process control. A tight technical synergy with the industrial partners in the field of the cryogenic process is required. This task, occasionally difficult, results in better in-house CERN knowledge of the cryogenic installation and a smoother operation scenario in the commissioning phase. Since the early days of PLC automation, CERN has gathered a group of control experts working side by side with cryogenic engineers on the analysis, conception, construction and commissioning of the final cryogenic apparatus. For the large LHC cryoplants (8 units of 18 kW @ 4.5 K and 8 units of 2.4 kW @ 1.8 K, 16 in total), control is carried out [24] by about 70 latest-generation PLCs, with I/O boards for local instrumentation or remote boards connected through either a proprietary network or Ethernet IP. For some sensitive equipment (mainly compressors and turbines) the control duties are performed by PLCs supplied by two different brands.

For both brands, the power supplies are adapted to the PLC usage. The instrumentation is connected either via 4-20 mA direct cabling or via PROFIBUS© / PROFINET© networks using DP/PA boards, twisted pair cables and PA instruments for smart configuration (Fig. 9).
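For the directly cabled 4-20 mA instruments, the acquired loop current has to be converted into an engineering value. The sketch below shows the usual linear scaling with a live-zero check; the 3.6 mA fault threshold, the function name and the example pressure range are assumptions chosen for illustration, not values taken from the LHC installation.

```python
def current_to_engineering(
    current_ma: float,
    range_low: float,
    range_high: float,
    fault_below_ma: float = 3.6,  # assumed threshold for a broken-wire diagnosis
) -> float:
    """Convert a 4-20 mA loop current into engineering units by linear scaling."""
    if current_ma < fault_below_ma:
        # A live-zero signal never drops to 0 mA in normal operation.
        raise ValueError("loop current below live zero: possible broken wire")
    span_fraction = (current_ma - 4.0) / 16.0  # 4 mA -> 0.0, 20 mA -> 1.0
    return range_low + span_fraction * (range_high - range_low)


# Hypothetical pressure transmitter ranged 0 to 25 bar.
print(current_to_engineering(12.0, 0.0, 25.0))
```

The live zero at 4 mA is what lets the controller distinguish a genuine minimum reading from a disconnected sensor, a distinction a 0-20 mA signal cannot make.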

Several optimisations were made to the process control system over the years of operation. One in particular concerned the 1.8 K cryoplants. Initially, they were installed and commissioned with control strategies proposed by the suppliers, which CERN regarded as a “black box” owing to the lack of understanding and information. During the LHC run campaign these strategies imposed several significant diagnostic limitations, making the plants difficult to operate. Moreover, one of the units was unable to reach nominal operating pressure, with no clear diagnosis possible. These constraints made it seriously difficult to meet the operational flexibility and availability requirements of the cold compressor system. For this reason, and because the electronic components were reaching the end of their life cycle, an upgrade of the process control system was implemented during the LHC long shutdown maintenance period, with several hardware and software modifications [25]. On the hardware side, the simplification of the control and electrical architecture improved the global reliability of the system. On the software side, reverse engineering and rewriting the control logic using CERN standards made it possible to better diagnose specific problems and to introduce new features and improvements. These changes improved machine protection (surge detection) and automated a number of manual operations.

4.2 The control system architecture in the LHC tunnel

In the LHC tunnel, the control duties [26] are performed by PLCs, which drive the cryogenic process through actuators (valves or heaters), and by FECs, which interface and collect the instrumentation located in the irradiated environment.

They are installed in radiation-free surface rooms and connected to the cryogenic control system via the Ethernet network. In this specific case, the Ethernet network carries the communication between PLCs and FECs (Fig. 10). There are two PLCs per sector, one for the Long Straight Section (LSS) and one for the short straight sections (ARC). A dedicated Cryogenic Instrumentation Expert Tool (CIET), based on the UNICOS framework, has been deployed and distributed within the operation supervision system. It gives the instrumentation engineers an alternative view of the process, presenting all the instrumentation data. This tool is used extensively during the commissioning phase for the set-up and diagnostics of the electronic signal conditioners. The classical instrumentation is connected to the PLC by a classical acquisition card. The presence of radiation made it necessary to move the non-radiation-tolerant electronics of such COTS equipment (Fig. 11) to the protected area, leaving the non-sensitive parts in the radiation area. These specific valve positioners were designed by industry [27, 28].

To deal with the radiation environment, the CERN standard solution based on the FIP network is used for communicating the control variables. It uses fibers for long distances, and copper cables with copper/fibre converters and repeaters for the tunnel connections. Dedicated radiation-tolerant electronic crates (Fig. 12) were developed in-house (Casas Cubillos et al.: Instrumentation, Field Network and Process Automation for the LHC Cryogenic Line Tests, unpublished).

They integrate specific boards to access the pressure, temperature, level sensors and the local protection heaters. Table 1 details some I/O and control loops.

Figure 13 describes how the process engineer has divided the LHC sector into several control modules, down to the lowest level of the cryogenic equipment. This intuitive configuration led to better control of the critical operation scenarios.

During Run 1 (2010-2012), the cryogenic control equipment suffered various radiation-induced failures during LHC operation, due to destructive or non-destructive Single Event Effects (SEE) on electronic equipment. They occur when beam is present and are driven by the integrated luminosity, by collimation losses or by beam interactions with residual gas. In 2011 a total of 45 radiation-caused failures, classified in Table 2, led to 25 beam dumps and 210 hours of cryogenic downtime [29].

On the basis of failure analysis, radiation monitoring, simulation data and radiation tests, the most critical zones with sensitive electronics were identified. To improve the availability of the cryogenic system, the most sensitive electronics were relocated into radiation protected areas, during winter stops. A dozen electrical cabinets were reviewed and adapted to new positions.

The availability of the superconducting magnet current lead cooling valves, controlled by the PLC through Profibus© remote I/O, was affected by non-destructive SEEs on the communication module. An automatic reset routine, which detects a communication failure and then executes a power-cycle reset, was successfully tested and implemented in the PLCs as a short-term solution [30]. Twelve automatic resets have been triggered since the patch was deployed, all without losing cryogenic nominal conditions or circulating beams. Relocation to the protected areas was chosen as the final solution and was carried out in the maintenance campaign of 2013.
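The logic of such a reset routine (detect a communication failure, then power-cycle the module) can be sketched as a small watchdog. The real routine runs in the PLC and its details are not given here; the Python below is only an illustration, and the class name, the three-scan threshold and the `power_cycle` callback are assumptions.

```python
from typing import Callable


class CommWatchdog:
    """Trigger a power-cycle after several consecutive failed communication scans."""

    def __init__(self, power_cycle: Callable[[], None], max_missed_scans: int = 3) -> None:
        self._power_cycle = power_cycle      # action that resets the remote I/O module
        self._max_missed = max_missed_scans  # assumed tolerance before resetting
        self._missed = 0
        self.resets_triggered = 0

    def scan(self, comm_ok: bool) -> bool:
        """Call once per controller scan; return True when a reset was triggered."""
        if comm_ok:
            self._missed = 0  # any good scan clears the failure count
            return False
        self._missed += 1
        if self._missed >= self._max_missed:
            self._power_cycle()
            self.resets_triggered += 1
            self._missed = 0  # start counting again after the reset
            return True
        return False


events = []
watchdog = CommWatchdog(lambda: events.append("power-cycle"), max_missed_scans=3)
results = [watchdog.scan(ok) for ok in (True, False, False, False, True)]
print(results, events)
```

Requiring several consecutive failed scans before resetting avoids power-cycling on a single transient glitch, while still recovering from a persistent SEE-induced lock-up without operator action.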

4.3 LHC Detectors cryogenic control system

The ATLAS and CMS experiments, placed at diametrically opposite locations on the LHC ring, have independent refrigeration plants, separate from the cryogenic system of the LHC accelerator. Their control systems are standardized and homogeneous, allowing a single operation team to supervise the cryogenic facilities of the LHC and its detectors from a single control room.

The distributed control systems reflect the architecture of the cryogenic infrastructure of the ATLAS and CMS magnets. The instrumentation not designed to operate in the radiation environment of the experimental cavern is installed in the technical cavern, which is better protected from radiation. Data are acquired from the 3500 cryogenic instrumentation channels, distributed on the surface as well as in the technical and experimental caverns, through fieldbus technologies (Fig. 14).

All the instrumentation channels are accessed by more than 300 input and output cards, controlled by ten PLCs. Each compressor station and refrigerator is supervised by a dedicated PLC. In addition, the ATLAS toroids, solenoids, and all the current leads are equipped with an individual PLC, while the CMS solenoid control is integrated into the refrigerator PLC. The two man-machine interfaces, one per experiment, are based on Supervisory Control and Data Acquisition (SCADA) and built on Siemens® WinCC Open Architecture®. All the control systems became operational for the first time in 2008, to ensure operability of the cryogenic infrastructure over the first LHC run [31].

4.4 Lifetime evolution: the LHC detectors cryogenic control upgrade case study

The control systems (together with the corresponding cryogenic installations) were designed and tested between 2000 and 2005. For most of the individual control systems, the final installation and commissioning were launched in 2005. All the systems were fully operational for the first LHC beam on 10 September 2008. Since then, the control systems have ensured the availability of the cryogenic infrastructure during the first two LHC runs, that is, until 2020. After over ten years of exploitation, the number of faults related to the control system showed a tendency to increase, mainly due to abnormal ageing of instrumentation cables and to PLC-related causes. A maintenance inspection of several instrumentation cabinets revealed that several wires had degraded insulation. It was decided to consolidate and replace these cables in an extensive cabling campaign covering 4 cabinets out of 25 in total.

In 2015 the first PLC crash was reported, causing cryogenic installation failures and a significant downtime (around 10 hours). Typically, a crash occurs when a PLC program stops functioning properly (due to PLC hardware problems) and exits the RUN mode. During the following years, three more PLC crashes were reported, for a total of 4 faults across the 16 PLC units installed in the LHC detectors and more than 30 hours of downtime. Despite the detailed fault analysis and support from Schneider, these events could not be reproduced in the laboratory and their causes could not be identified. Meanwhile, the industrial PLC entered the end-of-commercialization phase of its life cycle. The commercialization of the CPU stopped in 2018, while that of the remote I/O cards is ending in 2021. After-sales support ends in 2026 and 2029, respectively. These events, and the fact that the control systems of the ATLAS and CMS cryogenic installations must operate reliably for the next 20 years, led to a review of the long-term maintenance strategy. In a first stage, faulty equipment has been systematically replaced by spare parts from an internal stock. In a second stage, two phases of the long-term hardware upgrade plan were defined, one for the PLC upgrade and one for the remote I/O upgrade (Fig. 15). The CERN multi-phase upgrade strategy minimizes the risk of faults linked to the upgrade and maximizes the flexibility of the control team in this task [32].

4.5 Evolution

The cryogenic process control system has evolved towards fully automatic, round-the-clock operation without human intervention, through enhanced capabilities to handle the complexity of many processes, thanks to accelerating technology and the continuing profusion of smarter sensors, instruments, and integrated systems. To cope with this high level of automation, the control system should allow the few operators to obtain detailed information on all the process and control devices (control loops, sensors, actuators, alarms, interlocks) in the control hierarchy. In particular, it includes an advanced diagnostic environment allowing operators to navigate within the cryogenic hierarchy to identify the interlock associated with the root cause of a fault (mechanical, electrical, instrumentation, control hardware, control software, etc.). This makes it possible to take rapid corrective actions remotely and to operate in degraded conditions, in order to overcome problems and minimize potential downtime. The control system will include early warnings based on data mining computation and several interlocks on threshold limits, and will enhance the associated fully automatic response in different states. In case of a persistent failure, upon an alarm occurrence, the operator is warned by e-mail or cellular phone for on-site intervention. On average this automatic call occurs every 1 or 2 hours depending on the status of the system itself; the longest period with no automatic warning was reported to be approximately 1 day.

The integration of an intelligent database [33] with software production tasks and technologies using the continuous integration practice [34] allows CERN to implement periodic upgrades, resulting in continuously evolving, robust cryogenic controls software while reducing the required time and effort. For HL-LHC [35], the CERN strategy is to design and deploy software tools that help automate the production of the control system using automatically extracted data. The complete information on the cryogenic equipment to be controlled, its characteristics and parameters will be extracted from a set of views on the project database in order to generate the specifications of the integrated control system (the database models topographical organization layouts as functional positions and relationships). Finally, wireless communications are being studied in order to minimize the cabling cost in a new large-scale project with thousands of kilometers of cabling around the facility. Considering the burden (resources, planning and costs) of the electrical cabling campaigns, the use of wireless communication and powering from the alcoves to the sensors/actuators, wherever possible, is a real alternative [36].
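
The idea of generating control specifications from database views can be sketched in a few lines. The example below is a minimal illustration, assuming an in-memory SQLite database as a stand-in for the project database; the table `equipment`, the view `control_spec_view`, the column names and the two sample devices are all hypothetical and are not taken from the CERN Layout database.

```python
import sqlite3


def build_demo_db() -> sqlite3.Connection:
    """Create a tiny in-memory database with one view (hypothetical schema)."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE equipment (
            functional_position TEXT PRIMARY KEY,
            device_type TEXT NOT NULL,
            parent TEXT,
            range_min REAL,
            range_max REAL,
            unit TEXT
        );
        CREATE VIEW control_spec_view AS
            SELECT functional_position, device_type, parent,
                   range_min, range_max, unit
            FROM equipment;
        """
    )
    conn.executemany(
        "INSERT INTO equipment VALUES (?, ?, ?, ?, ?, ?)",
        [
            ("TT001", "AnalogInput", "CRYOSTAT_A", 1.5, 300.0, "K"),
            ("CV001", "AnalogOutput", "CRYOSTAT_A", 0.0, 100.0, "%"),
        ],
    )
    return conn


def generate_spec(conn: sqlite3.Connection) -> list:
    """Read the view and return one specification entry per device."""
    rows = conn.execute(
        "SELECT functional_position, device_type, parent, "
        "range_min, range_max, unit "
        "FROM control_spec_view ORDER BY functional_position"
    ).fetchall()
    return [
        {"name": name, "type": dev_type, "parent": parent,
         "range": (low, high), "unit": unit}
        for name, dev_type, parent, low, high, unit in rows
    ]


# Usage: the specification is derived entirely from the database content.
spec = generate_spec(build_demo_db())
```

Because the generator reads only the view, a change to the equipment data is picked up the next time the specification is regenerated, without editing it by hand.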

Results

The cryogenic availability, defined as the fraction of time in which the cryogenic system actually runs and is available for accelerator beam operation, is highly critical, as a failure of its sub-systems leads to an immediate stop of the accelerator beams or of the detector data taking. The CERN cryogenic installations are built up of several tens of thousands of components, and the availability of the whole system depends on the availability of large components such as compressors or the control system, as well as on that of small components such as temperature sensors or electric connectors. After a delicate start-up due to the extraordinary complexity of the LHC cryogenic system, a reference overall availability of 90% was achieved. The efforts of the cryogenic teams allowed a continuous improvement of the global availability, as can be seen in Fig. 16. The corresponding availability of the cryogenic control system is estimated to be more than 99.8%. To maintain high availability (on top of an adapted and efficient maintenance policy), failures are systematically analysed and treated in order to minimize downtime as much as technically possible.
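
As a rough illustration of the availability definition, the fraction of time a system is available can be computed from the scheduled time and the accumulated downtime, and the availabilities of components that must all work at once can be combined as a product. The Python sketch below assumes independent components in series, which is a simplification; the function names and the numbers in the usage lines are hypothetical and are not CERN data.

```python
from math import prod


def availability(scheduled_hours: float, downtime_hours: float) -> float:
    """Fraction of the scheduled time during which the system was available."""
    if scheduled_hours <= 0:
        raise ValueError("scheduled_hours must be positive")
    if not 0 <= downtime_hours <= scheduled_hours:
        raise ValueError("downtime_hours must lie within the scheduled time")
    return (scheduled_hours - downtime_hours) / scheduled_hours


def series_availability(component_availabilities) -> float:
    """Availability of a chain in which every component must be up.

    Assumes failures are independent, so the values simply multiply.
    """
    return prod(component_availabilities)


# Usage with made-up numbers: 20 hours lost out of 1000 scheduled hours,
# and a two-element chain (for example a control system and a plant).
a_single = availability(1000.0, 20.0)
a_chain = series_availability([0.998, 0.99])
```

The product form shows why a chain of many components needs each element to be considerably more available than the target for the whole system.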

Conclusion

The CERN cryogenic control systems have been operational for more than two decades. Over this period, their reliability and their capability of homogenizing the cryogenic operation have been extremely valuable for the global reliability of the cryogenic system (average availability of 98% [37–39] during the last LHC run).

After more than a decade, two problems had to be faced: continuous software optimization, requiring yearly software redeployment, and ageing of the electronic hardware, leading to system failures. Moreover, technical support of the systems started to be problematic due to the unavailability of some PLC hardware components, which had become obsolete. This led to a review [40] of the hardware architecture and its upgrade to the latest technology, ensuring a longer equipment life cycle and facilitating the implementation of modifications to the process logic. The change of hardware provided an opportunity to upgrade the process control applications using the most recent CERN frameworks and commercial engineering software, while improving the in-house software production methods and tools.

Integration of all software production tasks and technologies using the continuous integration practice allows CERN to prepare and implement more robust software, while reducing the required time and effort. The upgraded system benefits from the newest technology and the most recent versions of CERN frameworks (for developing industrial control applications) and commercial software. The upgrade of the PLC equipment extends the life cycle by another 20 years. Thanks to the continuous integration system and extensive database [41], the implementation of process logic modifications requires less time and effort, and the code produced is more robust.

Change history

04 July 2022

The License was not correctly tagged in the HTML of the original publication; this article has been updated.

Abbreviations

- CERN: Conseil Européen pour la Recherche Nucléaire
- LHC: Large Hadron Collider
- JCOP: Joint control project
- UNICOS: Unified control system
- UNICOS CPC: UNICOS continuous process control
- FESA: Front-end software architecture
- CMW: Controls middleware framework
- COTS: Commercial off the shelf
- PC: Personal computer
- PLC: Programmable logic controller
- SCADA: Supervisory control and data acquisition
- HEP: High energy physics
- IEC: International electrotechnical commission
- DS: Data servers
- HMI: Human-machine interface
- OWS: Operator work stations
- EWS: Engineering work-stations
- AFT: Accelerator fault tracker by CERN
- Infor EAM: Software by Infor Global Solutions Ⓡ
- CMMS: Computerized maintenance management systems
- NXCALS: Next CERN accelerators logging service
- FEC: Front end computer
- PROFIBUS: (Process Field Bus) standard for fieldbus communication in automation technology
- PROFIBUS DP: PROFIBUS decentralized periphery
- PROFIBUS PA: PROFIBUS process automation
- PROFINET: (PROcess FIeld NET) industry technical standard for data communication
- IP: Industrial protocol
- WinCC: SCADA software by Siemens Ⓡ
- TSPP: Time stamp push protocol
- UCPC: UNICOS CPC
- LHe: Liquid helium
- LN2: Liquid nitrogen
- ATLAS: A toroidal LHC apparatuS
- QRL: Cryogenic distribution line
- LSS: Long straight section
- ARC: LHC arc section
- CIET: Cryogenic instrumentation expert tool
- WorldFIP: FIP standardization group
- FIP: Factory instrumentation protocol
- SEE: Single event effects
- CMS: Compact muon solenoid
- CPU: Central processing unit
- IO: Input output
- HL-LHC: High luminosity LHC

References

Delikaris D., Barth K., Claudet S., Gayet P., Passardi G., Serio L., Tavian L. Cryogenic operation methodology and cryogen management at CERN over the last 15 years. Proceedings of ICEC 22-ICMC 2008.

Rode C. H., Ferry B., Fowler W. B., Makara J., Peterson T., Theilacker J., Walker R. (1985) Operation of large cryogenic system. Vancouver, British Columbia. Particle Accelerator Conference (NPS).

Brodzinski K., Bradu B., Delprat L., Ferlin G. (2020) Cryogenic operational experience from the LHC physics run2 (2015–2018 inclusive) In: IOP Conference Series: Materials Science and Engineering, 012096.

Delikaris D. Experience from large helium inventory management at CERN. ILC Cryogenics and helium inventory meeting, CERN, 18 June 2014.

Claudet S., et al (2014) Helium inventory management and losses for LHC cryogenics: strategy and results for Run 1 In: 25th International Cryogenic Engineering Conference and the International Cryogenic Materials Conference.

(2019). https://hilumilhc.web.cern.ch/content/hl-lhc-project.

Bernard F., Blanco E., Egorov A., Gayet P., Milcent H., Sicard C. H. (2005) Deploying the UNICOS Industrial Controls framework in multiple projects and architectures In: 10th ICALEPCS Int. Conf. on Accelerator & Large Expt. Physics Control Systems. Geneva, 10 - 14 Oct 2005, WE2.2-6I.

Roderick C., Wozniak J. P. (2019) NXCALS-Architecture and challenges of the next CERN accelerator logging service In: 17th Int. Conf. on Acc. and Large Exp. Physics Control Systems ICALEPCS2019, New York. ISBN: 978-3-95450-209-7, ISSN: 2226-0358.

Sapp D. (2016) Computerized Maintenance Management Systems (CMMS). https://www.wbdg.org.

Apollonio A., Schmidt R., Todd B., Roderick C., Ponce L., Wollmann D. (2016) LHC Accelerator Fault Tracker: First Experience In: 7th International Particle Accelerator Conference (IPAC 2016).

Agapito J. A., Casas-Cubillos J., Franco F. J., Palan B., Rodriguez Ruiz M. A. (2003) Rad-tol field electronics for the LHC cryogenic system:653–657.

Altaber J., Anderssen P. S., Burckhart H., Cittolin S., Faugeras P., Guerrero L., Lauckner R., Ninin P., Parker R., Sicard C. H. (2000) LHC Communication Infrastructure, Recommendations from the working group. LHC Project Report 438. CERN.

Apollonio A., Rey Orozco O., Ponce L., Schmidt R., Siemko A., Todd B., Uythoven J., Verweij A., Wollmann D., Zerlauth M. (2018) Reliability and availability of particle accelerators: concepts, lessons, strategy In: 9th International Particle Accelerator Conference IPAC2018, Vancouver, BC, Canada.

Gonzalez-Berges M., et al (2003) The Joint Controls Project Framework. Comput. High Energy Nucl. Phys. La Jolla, California.

(1997) IEC 61512-1. Standard available at link https://webstore.iec.ch/publication/5528.

Golonka P., et al (2019) Consolidation and redesign of CERN industrial controls frameworks In: Proceedings of ICALEPCS2019, New York.

Kostro K., Andersson J., Di Maio F., Jensen S., Trofimov N. (2003) The controls middleware (CMW) at CERN status and usage In: Proceedings of ICALEPCS2003, 318, Gyeongju.

Guerrero A., Gras J. -J., Nougaret J. -L., Ludwig M., Arruat M., Jackson S. (2003) CERN front-end software architecture for accelerator controls In: Proceedings of ICALEPCS2003, Gyeongju.

Topaloudis A., Rachex S. C., Grenoble P. (2017) Visualisation of real-time Front-End Software Architecture (FESA) Developments at CERN In: International Conference on Accelerator and Large Experimental Physics Control Systems, Barcelona. https://doi.org/10.18429/JACoW-ICALEPCS2017-THPHA180.

The LHC cryogenic system. https://lhc-machine-outreach.web.cern.ch.

Lebrun P. (2000) Cryogenics for the large hadron collider. IEEE Trans. Appl. Supercond. 10(1):1500–1506.

Delikaris D. (1997) The cryogenic system for the superconducting solenoid magnet of the CMS experiment.

Blanco E., Gayet P. LHC cryogenics control system: integration of the industrial controls (UNICOS) and front-end software architecture (FESA) applications. ICALEPCS07, Knoxville, Tennessee, USA.

Serio L., Bremer J., Claudet S., Delikaris D., Ferlin G., Pezzetti M., Pirotte O., Tavian L., Wagner U. (2015) CERN experience and strategy for the maintenance of cryogenic plants and distribution systems In: IOP Conference Series: Materials Science and Engineering, 012140.

Bradu B., Delprat L., Duret E., Ferlin G., Ivens B., Lefebvre V., Pezzetti M. (2020) Process control system evolution for the LHC Cold Compressors at CERN In: IOP Conference Series: Materials Science and Engineering, 012086.

Gomes P., Anastasopoulos K., Antoniotti F., Avramidou R., Balle C. H., Blanco E., Carminati C. H., Casas J., Ciechanowski M., Dragoneas A., Dubert P., Fampris X., Fluder C., Fortescue E., Gaj W., Gousiou E., Jeanmonod N., Jodlowski P., Karagiannis F., Klisch M., Lopez A., Macuda P., Malinowski P., Molina E., Paiva S., Patsouli A., Penacoba G., Sosin M., Soubiran M., Suraci A., Tovar A., Vauthier N., Wolak T., Zwalinski L. (2008) The control system for the cryogenics in the LHC tunnel. LHC Project Report 1169.

Hees W., Trant R. (1999) Evaluation of Electro Pneumatic Valve Positioners for LHC Cryogenics. LHC Project Note 190.

Casas J., Quetsch J. -M. (2019) Radiation Hardness of the Siemens SIPART intelligent valve positioner In: IOP Conference Series: Materials Science and Engineering, 012196.

Fluder C., Blanco E., Bremer J., Bremer K., Ivens B., Casas-Cubillos J., Claudet S., Gomes P., Ivens B., Perin A., Pezzetti M., Tovar-Gonzalez A., Vauthier N. (2012) Main consolidations and improvements of the control system and instrumentation for the LHC cryogenics In: 24th International Cryogenic Engineering Conference and International Cryogenic Materials Conference, Fukuoka. Preprint: Ⓒ 2013-2021 CERN (License: CC-BY-3.0).

Claudet S. (2011) Cryogenics – analysis, main problems, SEUs, beam related issues. Materials from LHC Beam Operation Workshop.

Perinic G., Claudet S., Alonso-Canella I., Balle C., Barth K., Bel J. -F., Benda V., Bremer J., Brodzinski K., Casas-Cubillos J. (2012) First assessment of reliability data for the LHC accelerator and detector cryogenic system components In: AIP Conference Proceedings, 1399–1406. https://doi.org/10.1063/1.4707066.

Fluder C., et al (2019) Status of the process control systems upgrade for the cryogenic installations of the LHC based ATLAS and CMS detectors In: Proceedings of ICALEPCS2019, New York. https://doi.org/10.18429/JACoW-ICALEPCS2019-WEPHA050.

Fortescue-Beck E., et al (2011) The integration of the LHC cryogenics control system data into the CERN Layout database In: 13th International Conference on Accelerator and Large Experimental Physics Control Systems.

Fluder C., Lefebvre V., Pezzetti M., Tovar-Gonzalez A., Plutecki P., Wolak T. (2018) Automation of software production process for multiple cryogenic control applications In: 16th International Conference on Accelerator and Large Experimental Physics Control Systems (ICALEPCS 2017), 375–379.

Tavian L. Cryogenics for HL-LHC. 3rd Joint HiLumi, LHC-LARP Annual Meeting 11-15 November 2013, Daresbury Laboratory.

Elizabeth L. Multigigabit Wireless Data Transfer for High Energy Physics Applications. FCC week 2018, Beurs van Berlage, Netherland.

Delikaris D. The LHC cryogenic operation availability results from the first physics run of three years In: 4th International Particle Accelerator Conference (IPAC 2013).

Mito T., Maekawa R., Baba T., Moriuchi S., Iwamoto A., Nishimura A., Yamada S., Takahata K., Imagawa S., Yanagi N., Tamura H., Hamaguchi S., Oba K., Sekiguchi H., Satow T., Satoh S., Motojima O. (2000) Reliable long-term operation of the cryogenic system for the Large Helical Device. Adv. Cryog. Eng.:1253–1260.

Ferlin G., Bradu B., Brodzinski K., Delprat L., Pezzetti M. Cryogenics experience during Run2 and impact of LS2 on next Run. 9th LHC Operations Evian Workshop 2019.

Delprat L., Bradu B., Brodzinski K., Ferlin G., Hafi K., Herblin L., Rogez E., Suraci A. (2017) Main improvements of LHC Cryogenics Operation during Run 2 (2015-2018) In: Cryogenic Engineering Conference and International Cryogenic Materials Conference, Madison.

Tovar A., et al (2013) Validation of the data consolidation in Layout database for the LHC tunnel cryogenics controls upgrade, San Francisco.

Acknowledgements

The CERN LHC cryogenic control system is the result of teamwork and long-running experience (more than 20 years!), in which different CERN services have collaborated to reach the goals shown in this paper. I would like to express my gratitude to the many CERN colleagues for their participation in this enterprise, with professional dedication and the strong technical and human skills shown during all these years.

Author information

Contributions

Authors’ contributions

The author read and approved the final manuscript.

Authors’ information

The author graduated in Mechanical Engineering at Politecnico di Milano (Italy), with a specialization in automation. He obtained his PhD at the Picardie Jules Verne University and ESIEELMBE, Amiens, France. He has been working at CERN since 1998 as senior staff in the control section of the cryogenics group, specializing in large-scale cryogenic control systems.

Ethics declarations

Competing interests

The author declares that he has no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Pezzetti, M. Control of large helium cryogenic systems: a case study on CERN LHC. EPJ Techn Instrum 8, 6 (2021). https://doi.org/10.1140/epjti/s40485-021-00063-w

DOI: https://doi.org/10.1140/epjti/s40485-021-00063-w