Abstract

Gaussian processes (GP) provide an elegant and model-independent method for extracting cosmological information from the observational data. In this work, we employ GP to perform a joint analysis by using the geometrical cosmological probes such as Supernova Type Ia (SN), Cosmic chronometers (CC), Baryon Acoustic Oscillations (BAO), and the H0LiCOW lenses sample to constrain the Hubble constant \(H_0\), and reconstruct some properties of dark energy (DE), viz., the equation of state parameter w, the sound speed of DE perturbations \(c^2_s\), and the ratio of DE density evolution \(X = \rho _\mathrm{de}/\rho _\mathrm{de,0}\). From the joint analysis SN+CC+BAO+H0LiCOW, we find that \(H_0\) is constrained at 1.1% precision with \(H_0 = 73.78 \pm 0.84\ \hbox {km}\ \hbox {s}^{-1}\,\hbox {Mpc}^{-1}\), which is in agreement with SH0ES and H0LiCOW estimates, but in \(\sim 6.2 \sigma \) tension with the current CMB measurements of \(H_0\). With regard to the DE parameters, we find \(c^2_s < 0\) at \(\sim 2 \sigma \) at high z, and the possibility of X to become negative for \(z > 1.5\). We compare our results with the ones obtained in the literature, and discuss the consequences of our main results on the DE theoretical framework.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Several astronomical observations indicate that our Universe is currently in an accelerated expansion stage [1,2,3,4,5]. A cosmological scenario with cold dark matter (CDM) and dark energy (DE) mimicked by a positive cosmological constant, the so-called \(\Lambda \)CDM model, is considered the standard cosmological model, which fits the observational data with great precision. But, the cosmological constant suffers from some theoretical problems [6,7,8], which motivate alternative considerations that can explain the data and have some theoretical appeal as well. In this regard, numerous cosmological models have been proposed in the literature, by introducing some new dark fluid with negative pressure or modification in the general relativity theory, where additional gravitational degree(s) can generate the accelerated stage of the Universe at late times (See [9,10,11] for a review). On the other hand, from an observational point of view, it is currently under discussion whether the \(\Lambda \)CDM model really is the best scenario to explain the observations, mainly in light of the current Hubble constant \(H_0\) tension. Assuming the \(\Lambda \)CDM scenario, Planck-CMB data analysis provides \(H_0=67.4 \pm 0.5\ \hbox {km s}^{-1}\,\hbox {Mpc}^{-1}\) [12], which is in \(4.4\sigma \) tension with a cosmological model-independent local measurement \(H_0 = 74.03 \pm 1.42\ \hbox {km s}^{-1}\,\hbox {Mpc}^{-1}\) [13] from the Hubble Space Telescope (HST) observations of 70 long-period Cepheids in the Large Magellanic Cloud. Additionally, a combination of time-delay cosmography from H0LiCOW lenses and the distance ladder measurements are in \(5.2\sigma \) tension with the Planck-CMB constraints [14] (see also [15] for an update using H0LiCOW lens based new hierarchical approach where the mass-sheet transform is only constrained by stellar kinematics). Another accurate independent measure was carried out in [16], from Tip of the Red Giant Branch, obtaining \(H_0 = 69.8 \pm 1.1\ \hbox {km s}^{-1}\,\hbox {Mpc}^{-1}\). Several other estimates of \(H_0\) have been obtained in the recent literature (see [17,18,19,20,21]). It has been widely discussed in the literature whether a new physics beyond the standard cosmological model can solve the \(H_0\) tension [22,23,24,25,26,27,28,29,30,31,32,33,34,35,36]. The so-called \(S_8\) tension is also not less important. It is present between the Planck-CMB data with respect to weak lensing measurements and redshift surveys, about the value of the matter energy density \(\Omega _m\) and the amplitude or growth rate of structures (\(\sigma _8\), \(f\sigma _8\)). We refer the reader to [37, 38] and references therein for perspectives and discussions on \(S_8\) tension. Some other recent studies/developments [39,40,41,42,43,44,45,46,47,48,49,50] also suggest that the minimal \(\Lambda \)CDM model is in crisis.

A promising approach for investigation of the cosmological parameters is to consider a model-independent analysis. In principle, this can be done via cosmographic approach [51,52,53,54,55], which consists of performing a series expansion of a cosmological observable around \(z=0\), and then using the data to constrain the kinematic parameters. Such a procedure works well for lower values of z, but can be problematic at higher values of z. An interesting and robust alternative can be to consider a Gaussian process (GP) to reconstruct cosmological parameters in a model-independent way. The GP approach is a generic method of supervised learning (tasks to be learned and/or data training in GP terminology), which is implemented in regression problems and probabilistic classification. A GP is essentially a generalisation of the simple Gaussian distribution to the probability distributions of a function into the range of independent variables. In principle, this can be any stochastic process, however, it is much simpler in a Gaussian scenario and it is also more common, specifically for regression processes, which we use in this study. The GP also provides a model independent smoothing method that can further reconstruct derivatives from data. In this sense, the GP is a non-parametric strategy because it does not depend on a set of free parameters of the particular model to be constrained, although it depends on the choice of the covariance function, which will be explained in more detail in the next section. The GP method has been used to reconstruct the dynamics of the DE, modified gravity, cosmic curvature, estimates of Hubble constant, and other perspectives in cosmology by several authors [56,57,58,59,60,61,62,63,64,65,66,67,68,69,70,71,72,73,74,75,76,77].

In this work, our main aim is to employ GP to perform a joint analysis by using the geometrical cosmological probes such as Supernova Type Ia (SN), Cosmic chronometers (CC), Baryon Acoustic Oscillations (BAO), and the H0LiCOW lenses sample to constrain the Hubble constant \(H_0\), and reconstruct some properties of DE, viz., the equation of state parameter w, the sound speed of DE perturbations \(c^2_s\), and the ratio of DE density evolution \(X=\rho _\mathrm{de}/\rho _\mathrm{de,0}\). These are the main quantities that can represent the physical characteristics of DE, and possible deviations from the standard values \(w=-1\), \(c^2_s = 1\) and \(X=1\), can be an indication of a new physics beyond the \(\Lambda \)CDM model. To our knowledge, a model-independent joint analysis from above-mentioned data sets, as will be presented here, is new and not previously investigated in the literature. Indeed, a joint analysis with several observational probes is helpful to obtain tight constraints on the cosmological parameters.

This paper is structured as follows. In Sect. 2, we present the GP methodology as well as the data sets used in this work. In Sect. 3, we describe the modelling framework providing the cosmological information, and discuss our main results in detail. In Sect. 4, we summarize main findings of this study with some future perspectives.

2 Methodology and data analysis

In this section, we summarize our methodology as well as the data sets used for obtaining our results.

2.1 Gaussian processes

The main objective in a GP approximation is to reconstruct a function \(f(x_i)\) from a set of its measured values \(f(x_i) \pm \sigma _i\), where \(x_i\) represent the training points or the positions of the observations. It assumes that the value of the function at any point \(x_i\) follows a Gaussian distribution. The value of the function at \(x_i\) is correlated with the value at other point \(x_i'\). Therefore, we may write the GP as

where \(\mu (x_i)\) and \(\text {cov}[f(x_i),f(x_i)]\) are the mean and the variance of the random variable at \(x_i\), respectively. This method has been used in many studies in the context of cosmology (e.g. see [56,57,58]). For the reconstruction of the function \(f(x_i)\), the covariance between the values of this function at different positions \(x_i\) can be modeled as

where \(k(x,x')\) is a priori assumed covariance model (or kernel in GP language), and its choice is often very crucial for obtaining good results regarding the reconstruction of the function \(f(x_i)\). The covariance model, in general, depends on the distance \(|x-x'|\) between the input points (\(x, x'\)), and the covariance function \(k(x,x')\) is expected to return large values when the input points (\(x, x'\)) are close to each other. The most popular and commonly used covariance functions in the literature are the standard Gaussian Squared-Exponential (SE) and the Matérn class of kernels (\(M_{\nu }\)). The SE kernel is defined as

where \(\sigma _f\) is the signal variance, which controls the strength of the correlation of the function, and l is the length scale that determines the ability to model the main characteristics (global and local) in the evaluation region to be predicted (or coherence length of the correlation in x). These two parameters are often called hyperparameters. They are not the parameters of the function, but of the covariance function. For convenience, in what follows, we redefine \(\tau = |x-x'|\), which is consistent with all the kernels implemented here. The SE kernel, however, is a very smooth covariance function which can very well reproduce global but not local characteristics. To avoid this, the Matérn class kernels are helpful, and the general functional form can be written as

where \(K_{\nu }\) is the modified Bessel function of second kind, \(\Gamma (\nu )\) is the standard Gamma function and \(\nu \) is strictly a positive parameter. An explicit analytic functional form for half-integer values of \(\{\nu = 1/2, 3/2, 5/2, 7/2, 9/2, \ldots \}\) is provided by modified Bessel functions, and when \(\nu \rightarrow \infty \), the \( \text {M}_{\nu } \) covariance function tends to SE kernel. Among other possibilities, \(\nu = 7/2\) and \(\nu = 9/2 \) values are of primary interest, since these correspond to smooth functions with high predictability of derivatives of higher order, although these are not very suitable for predicting rapid variations. These Matern functions for GP in cosmology were first introduced in [58]. On the other hand, the hyperparameters \({\Theta } \equiv \{\sigma _f, l\}\) are learned by optimising the log marginal likelihood, which is defined as

where \(K_{\text {y}} = K(\mathbf{x} ,\mathbf{x} ') + C\), \(K(\mathbf{x} ,\mathbf{x} ')\) is the covariance matrix with components \(k(x_i,x_j)\), y is the vector of data, C is the covariance matrix of the data for a set of n observations, assuming mean \(\mu = 0\). After optimizing for \(\sigma _f\) and l, one can predict the mean and variance of the function \(f(\mathbf{x} ^{*})\) at chosen points \(\mathbf{x} ^{*}\) through

The GP predictions can also be extended to the derivatives of the functions \(f(x_i)\), although limited by the differentiability of the chosen kernel. The derivative of a GP would also be a GP. Thus, one can obtain the covariance between the function and/or the derivatives involved by differentiating the covariance function as

Then, we can write

where \(f'(x_i)\) represent the derivatives with respect to their corresponding independent variables, which for our purpose can be the redshift z. This procedure can similarly be extended for higher derivatives (\(f'(x), f''(x), \ldots \)) in combination with f(x). The mean of the \(i^{th}\) derivative and the covariance between \(i^{th}\) and \(j^{th}\) derivatives, are given by

If \(i =j\), then we get the variance of the \(i^{th}\) derivative in Eq. (10). If the data for derivative functions are available, we can perform a joint analysis, which is the case in our study. Since one data type can be in terms of f(x) while another can be rewritten in terms of \(f'(x)\), these different data sets can be combined. In what follows, we describe the data sets that we use in this work.

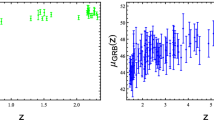

H(z) (in units of km s\(^{-1}\,\hbox {Mpc}^{-1}\)) vs z (E(z) vs z in case of SN data alone) with \(1\sigma \) and \(2\sigma \) CL regions, reconstructed from SN (top-left), BAO (top-right), CC (bottom-left) and SN+BAO+CC data (bottom-right). Data with errorbars in all the panels are the observational data as mentioned in the legend of each panel

2.2 Data sets

We summarize below the data sets used in our analysis.

Cosmic chronometers (CC): The CC approach is a powerful method to trace the history of cosmic expansion through the measurement of H(z). We consider the compilation of Hubble parameter measurements provided by [78]. This compilation consists of 30 measurements distributed over a redshift range \(0< z < 2\).

Baryon acoustic oscillations (BAO): The BAO is another important cosmological probe, which can trace expanding spherical wave of baryonic perturbations from acoustic oscillations at recombination time through the large-scale structure correlation function, which displays a peak around \(150\, {\text {h}}^{-1} \,\mathrm{Mpc}\). We use BAO measurements from Sloan Digital Sky Survey (SDSS) III DR-12 at three effective binned redshifts \(z = 0.38\), 0.51 and 0.61, reported in [3], the clustering of the SDSS-IV extended Baryon Oscillation Spectroscopic Survey DR14 quasar sample at four effective binned redshifts \(z = 0.98\), 1.23, 1,52 and 1.94, reported in [79], and the high-redshift Lyman-\(\alpha \) measurements at \(z = 2.33\) and \(z = 2.4\) reported in [80] and [81], respectively. Note that the observations are presented in terms of \(H(z) \times (r_d/r_{d,fid})\ \hbox {km s}^{-1}\,\hbox {Mpc}^{-1}\), where \(r_d\) is co-moving sound horizon and \(r_{d,fid}\) is the fiducial input value provided in the above references. In appendix A, we show that different \(r_d\) input values obtained from different data sets do not affect the GP analysis.

Supernovae Type Ia (SN): The SN traditionally have been one of the most important astrophysical tools in establishing the so-called standard cosmological model. For the present analysis, we use the Pantheon compilation, which consists of 1048 SNIa distributed in a redshift range \(0.01< z < 2.3\) [82]. Under the consideration of a spatially flat Universe, the full sample of Pantheon can be summarized into six model independent \(E(z)^{-1}\) data points [83]. We consider the six data points reported by [65] in the form of E(z), including theoretical and statistical considerations made by the authors there for its implementation.

H0LiCOW sample: The Lenses in COSMOGRAIL’s Wellspring programFootnote 1 have measured six lens systems, making use of the measurements of time-delay distances between multiple images of strong gravitational lens systems by elliptical galaxies [14]. In the analyses of this work, we implement these six systems of strongly lensed quasars reported by the H0LiCOW Collaboration. Full information is contained in the so-called time-delay distance \(D_{\Delta t}\). However, additional information can be found in the angular diameter distance to the lens \(D_l\), which offers the possibility of using four additional data points in our analysis. Thus, our total H0LiCOW sample comprises of 10 data points: 6 measurements of time-delay distances and 4 angular diameter distances to the lens for 4 specific objects in the subset information in H0LiCOW sample (see [84, 85] for the description).

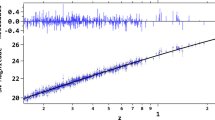

Left panel: \(\tilde{D}(z_l)\) vs \(z_l\) with \(1\sigma \) and \(2\sigma \) CL regions, reconstructed from H0LiCOW sample data plus other data (SN+CC+BAO). Right panel: H(z) vs z with \(1\sigma \) and \(2\sigma \) CL regions, enlarged in the redshift range \(z<0.3\), and reconstructed from the combined data SN + BAO + CC + H0LiCOW

3 Results and discussions

First, we verify that analyses carried out from \(k_{M_{\nu }}(\tau )\), with \(\tau = 9/2\) and \(\tau = 7/2\), and \(k_{SE}\) do not generate significantly different results, in the sense that all results are compatible with each other at \(1\sigma \) CL, and hence not generating any disagreement/tension between these input kernels. Thus, in what follows, we use GP formalism with an assumed \(M_{9/2}\) kernel in the whole analysis. For this purpose, we have used some numerical routines available in the public GaPP code [56].

Figure 1 shows the reconstructions from SN, BAO and CC data sets, using GP formalism on each data set individually. On the bottom-right panel, we show the H(z) reconstruction from all these data together. First, from the CC reconstruction, we obtain \(H_0=68.54 \pm 5.06\ \hbox {km s}^{-1}\,\hbox {Mpc}^{-1}\), which has been used in the rescaling process of SN data to carry out the joint analysis with SN+BAO+CC (bottom-right). From SN+BAO+CC analysis, we find \(H_0=67.85 \pm 1.53\ \hbox {km s}^{-1}\,\hbox {Mpc}^{-1}\). Figure 2 (left panel) shows the GP reconstruction of \(D(z_l)\) from H0LiCOW data, where \(z_l\) is the redshift to the lens. On the right panel, we show the reconstruction of H(z) function from SN+BAO+CC+H0LiCOW. In this joint analysis, we obtain \(H_0= 73.78 \pm 0.84\ \hbox {km s}^{-1}\,\hbox {Mpc}^{-1}\), which represents a \(1.1\%\) precision measurement. The strategy that we followed to obtain these results is as follows:

-

1.

The SN+BAO+CC data set is used as in the previous joint analysis, i.e., in terms of H(z) data reconstruction. Thus, now, we just need to re-scale the H0LiCOW data in some convenient way to combine all data for a joint analysis.

-

2.

The time-delay distance in H0LiCOW sample is quantified as

$$\begin{aligned} D_{\Delta t} = (1 + z_l) \frac{D_l D_s}{D_{ls}}, \end{aligned}$$(11)which is a combination of three angular diameter distances, namely \(D_l\), \(D_s\) and \(D_{ls}\), where the subscripts stand for diameter distances to the lens l, to the source s, and between the lens and the source ls.

-

3.

At this point, we can get the dimensionless co-moving distance through the relationship

$$\begin{aligned} \tilde{D}(z) = \frac{H_0}{c}(1+z)D_A, \end{aligned}$$(12)where \(D_A\) is the angular diameter distance and \(\tilde{D}(z)\) is defined as \(\tilde{D}(z) = \int ^{z}_0 \frac{dz'}{E(z')}\). In this way, we can have: 6 data points from time delay distance \(D_{\Delta t}\), which we referred to as \(\tilde{D}_{\Delta t}\), and 4 data points obtained from angular diameter distance \(D_l\), named as \(\tilde{D}_l\). Thus, we can add these 10 data points for joint analysis, and name simply the H0LiCOW sample (see left panel of Fig. 2). Note that, to get \(\tilde{D}_l\), we directly use the Eq. (12), where \(D_A = D_l\). On the other hand, to obtain \(\tilde{D}_{\Delta t}\), we have to take into account that Eq. (11) depends on the expansion rate of the Universe through \(D_s(z_s,H_0,\Omega _m)\) and \(D_{ls}(z_l, H_0,\Omega _m)\), and in this case, we use the \(H_0\) and \(\Omega _m\) best fit from our SN+BAO+CC joint analysis.

-

4.

For the joint analysis, the relation \(\tilde{D}(z) =\int ^{z}_0 \frac{dz'}{E(z')}\) can be reversed to obtain \(E(z) =\frac{1}{\tilde{D}'(z)}\). So, we can make use of this possibility that offers the reconstruction of the first derivative of the dimensionless co-moving distance \(\tilde{D}'(z)\). For this purpose, we introduce the SN + BAO + CC data set in the form of 1/E(z) and the H0LiCOW data set in the form of \(\tilde{D}(z)\), to obtain the GP reconstruction of dimensionless co-moving distance.

From the joint analysis SN+BAO+CC+H0LiCOW, we find \(H(z=0)= 73.78 \pm 0.84\ \hbox {km s}^{-1}\,\hbox {Mpc}^{-1}\). Figure 2 (right panel) shows the H(z) reconstruction from SN+BAO+CC+H0LiCOW. Figure 3 shows a comparison of our joint analysis estimates on \(H_0\) with others recently obtained in literature. We note that our constraint on \(H_0\) is in accordance with SH0ES and H0LiCOW+STRIDES estimates. On the other hand, we find \(\sim 6\sigma \) tension with current Planck-CMB measurements and \(\sim 2\sigma \) tension with CCHP best fit. We re-analyze our estimates removing BAO data (see appendix A). In this case, we find \(H_0 = 68.57 \pm 1.86\ \hbox {km s}^{-1}\,\hbox {Mpc}^{-1}\) and \(H_0 = 71.65 \pm 1.09 \ \hbox {km s}^{-1}\,\hbox {Mpc}^{-1}\) from SN+CC and SN+CC+H0LiCOW, respectively.

Compilation of \(H_0\) measurements taken from recent literature, namely, from Planck collaboration (Planck) [12], Dark Energy Survey Year 1 Results (DES+BAO+BBN) [118], the final data release of the BOSS data (BOSS Full-Shape+BAO+BBN) [117], The Carnegie-Chicago Hubble Program (CCHP) [16], H0LiCOW collaboration (H0LiCOW+STRIDES) [14], SH0ES [13], in comparison with the \(H_0\) constraints obtained in this work from the GP analysis using SN+BAO+CC+H0LiCOW

In the context of the standard framework, we can also check the \(O_m(z)\) diagnostic [86]

If the expansion history E(z) is driven by the standard \(\Lambda \)CDM model, then \(O_m(z)\) is practically constant and equal to the density of matter \(\Omega _{m}\), and so, any deviation from this constant can be used to infer the dynamical nature of DE. Figure 4 shows the reconstruction of the \(O_m(z)\) diagnostic. We find \(\Omega _m = 0.292 \pm 0.046\) and \(\Omega _m =0.289 \pm 0.012\) at \(1\sigma \) from SN+BAO+CC and SN+BAO+CC+H0LiCOW analyses, respectively. To obtain these results, we normalize H(z) with respect to \(H_0\) to obtain E(z) for the entire data set except SN, where \(H_0\) is taken from SN+BAO+CC, and SN+BAO+CC+H0LiCOW cases, respectively. The prediction from SN+BAO+CC is compatible with \(\Omega _m = 0.30\) across the analyzed range, but it is interesting to note that for \(z > 2\), we have \(\Omega _m <0.30\) at \(\sim 2\sigma \) from SN+BAO+CC+H0LiCOW. These model-independent \(\Omega _m\) estimates will be used as input values in the reconstruction of w.

The EoS of DE can be written as [87,88,89]

where \(\Omega _{m}\) and \(\Omega _k\) are the density parameters of matter (baryonic matter + dark matter) and spatial curvature, respectively. In what follows, we assume \(\Omega _k = 0\), which is a strong, though quite general assumption about spatial geometry.

Figure 5 shows the w(z) reconstruction from SN+BAO+CC and SN+BAO+CC+H0LiCOW data combinations on the left and right panels, respectively. From both analyses, we notice that w is well constrained for \(z \lesssim 0.5\) with the prediction \(w=-1\). Most of the data correspond to this range in numbers and precision. The GP mean excludes any possibility of \(w \ne -1\) in the whole range of z under consideration. We observe that the best fit prediction is on \(w= -1\) up to \(z \sim 0.5\) for both cases. The addition of the H0LiCOW data considerably improve the reconstruction of w for \(z < 1\). Beyond this range, the best fit prediction can deviate from \(w = -1\), but statistically compatible with a cosmological constant. Evaluating at the present moment, we find \(w(z=0)= -0.999 \pm 0.093\) and \(w(z=0)= -0.998 \pm 0.064\) from SN+BAO+CC and SN+BAO+CC+H0LiCOW, respectively. Note that H0LiCOW sample improves the constraints on \(w(z=0)\) up to \(\sim 2.9\%\).

From the statistical reconstruction of w(z) and its derivative \(w'(z)\), we can analyze the DE adiabatic sound speed \(c^2_s\). Given the relation \(p=w\rho \), we can find

Figure 6 shows \(c^2_s\) reconstruction from SN+BAO+CC and SN+BAO+CC+H0LiCOW data combinations on the left and right panels, respectively. We note that the DE sound speed is negative at \(\sim 1\sigma \) from SN+BAO+CC when evaluated up to \(z \simeq 2.5\). It is interesting to note that the SN+BAO+CC+H0LiCOW analysis yields \(c^2_s < 0\) at \(2\sigma \) for \(z < 1\). At the present moment, we find \(c^2_s(z=0) = -0.218 \pm 0.137\) and \(c^2_s(z=0) = -0.273 \pm 0.068\) at 1\(\sigma \) CL from SN+BAO+CC and SN+BAO+CC+H0HiCOW, respectively. Therefore, this inference on \(c^2_s\) rules out significantly the possibility for clustering DE models, and also the models with \(c^2_s > 0\) up to high z at least at 1\(\sigma \) CL. The condition \(c^2_s > 0\) is usually imposed to avoid gradient instability. However, the perturbations can still remain stable under \(c^2_s < 0\) consideration [92,93,94,95]. Thus, if the effective sound speed is negative, this would be a smoking gun signature for the existence of an anisotropic stress and possible modifications of gravity. Recently, a possible evidence for \(c^2_s < 0\) is found in [46], and also in a model-independent way from the Hubble data. Now, we look at some models which can potentially explain this result.

The Lagrangian \(L = G_2(\phi , X) + \frac{M^2_{pl}}{2}R\) describes general K-essence scenarios. Here the function \(G_2\) depends on \(\phi \) and \(X = -\frac{1}{2} \nabla ^{\mu } \phi \nabla _{\mu } \phi \), and R is the Ricci scalar curvature. In this case, the sound speed is given by

where \(G_{2,X} \equiv \partial G_2 /\partial X\). Quintessence models correspond to the particular choice \(G_2 = X - V(\phi )\), given \(c^2_s = 1\). Thus, the usual quintessence scenarios are discarded from our results, which predict negative or low values of the sound speed.

Considering the so-called dilatonic ghost condensate [96], given by the Lagrangian,

where \(\lambda \) and M are free parameters of the model, we can write \(c^2_s\) as

with \(y = \frac{\dot{\phi }^2 e^{\lambda \phi /M_{pl}}}{2M^4}\). The condition \(y < -1/2\) ensures negative sound speed values.

Another interesting possibility pertains to a unified dark energy and dark matter scenario described by \(G_2 =-b_0 + b_2(X-X_0)^2\), where \(b_0\) and \(b_2\) are free parameters of the model [97]. In this case, the sound speed is

where \(c^2_s < 0\) for \(X < X_0\).

The above mentioned cases are theoretical examples under the consideration of a minimally coupled gravity scenario, which can reproduce a possible \(c^2_s < 0\) behavior. More generally, in the Horndeski theories of gravity [98,99,100], the speed of sound can be written as

where prime denotes \(d/d\ln a\), and \(\alpha _i\) are functions expressed in a way that highlights their effects on the theory space [101], namely, kineticity (\(\alpha _K\)), braiding (\(\alpha _B\)) and Planck-mass run rate (\(\alpha _M\)). Further, we define \(\alpha = \alpha _K + 3/2 \alpha ^2_B\). Motivated for the tight constraints on the difference between the speed of gravitational waves and the speed of light to be \(\lesssim 10^{-15}\) from the GW170817 and GRB 170817A observations [102, 103], we assume \(\alpha _T = 0\) (tensor speed excess). Without loss of generality, we can consider \(\alpha > 0\) and the relation \(\alpha _B = R \times \alpha _M\), with R being a constant. For instance, for \(R=-1\), we reproduce f(R) gravity theories. Different R values can manifest the most diverse possible changes in gravity. For a qualitative example, taking \(R=-1\), the running of the Planck mass must satisfy the relationship

for generating \(c^2_s < 0\). At late cosmic time, we have \(\frac{dH}{da}\frac{1}{H} < 0\), and we can consider the theories in a good approximation where \(|\alpha _M \ll 1|\). So we see that the condition \(\alpha _M < 0\), can generate negative \(c^2_s\) values in this case.

Finally, we analyze the function

quantifying the ratio of DE energy density evolution over the cosmic time.

Figure 7 shows X(z) reconstruction from SN+BAO+CC and SN+BAO+CC+H0LiCOW data combinations on the left and right panels, respectively. We note that the evolution of X is fully compatible with the \(\Lambda \)CDM model, and with the best fit model-independent prediction around \(X= 1\) up to \(z \sim 1\), in both analyses. It is interesting to note that X can cross to negative values when \(z > 1\) and \(z > 1.5\) at \(2\sigma \) CL from SN+BAO+CC and SN+BAO+CC+H0LiCOW, respectively. It can also have some interesting theoretical consequences. First, DE with negative density values at large z came to the agenda when it turned out that, within the standard \(\Lambda \)CDM model, the Ly-\(\alpha \) forest measurement from BAO data by the BOSS collaboration [104], prefers a smaller value of the dust density parameter compared to the value preferred by the CMB data. Thus, with the possibility of a preference for negative energy density values at high z, it is argued that the Ly-\(\alpha \) data at \(z \sim 2.34\) can be described by a non-monotonic evolution in H(z) function, which is difficult to achieve in any model with non-negative DE density [105]. Note that in our analysis, we are taking into account the high z Lyman-\(\alpha \) measurements reported in [80] and [81]. It is possible to achieve \(X < 0\) at high z when the cosmological gravitational coupling strength gets weaker with increasing z [106, 107]. A range of other examples of effective sources crossing the energy density below zero also exists, including theories in which the cosmological constant relaxes from a large initial value via an adjustment mechanism [108], and also by modifying gravity theory [109,110,111]. More recently, a graduated DE model characterized by a minimal dynamical deviation from the null inertial mass density is introduced in [112] to obtain negative energy density at high z. Also, seeking inspiration from string theory, the possibility of negative energy density is investigated in [113].

The reconstruction of w(z) and X(z) are robust at low z, where the DE effects begin to be considerable, and a slow evolution of the EoS is well captured at 68% CL. However, the error estimates are larger at high z, where the data density is significantly smaller and the dynamical effects of DE are weaker. The introduction of the H0LiCOW data slightly improves the estimated errors in this range, especially for \(1.0<z<1.5\). On the other hand, the uncertainties of smooth functions may have a greater amplitude than the highly oscillating functions, and in this way the propagation of errors to their derivatives can be overestimated [114]. In our case, the variation of the starting functions is quite smooth with respect to the data and their derivatives as well, leading to the propagation of errors with a greater amplitude, as can be seen in Figs. 5 and 7 at high z. Other aspects that may influence this fact could be the strong dependence on z, as in the case of w(z), and the integrability of the functions with respect to z, as in the case of X(z) (for a brief discussion in this regard, see for example [56]).

Recently, the authors in [115] have obtained a measurement \(H_0= 69.5 \pm 1.7\ \hbox {km s}^{-1}\,\hbox {Mpc}^{-1}\), showing that it is possible to constrain \(H_0\) with an accuracy of 2% with minimal assumptions, from a combination of independent geometric datasets, namely, SN, BAO and CC. They have not used the H0LiCOW data in their analyses as we have used in the present work. They have also reconstructed the DE density parameter X(z), finding similar conclusion as obtained here in this work.

4 Final remarks

We have applied GP to constrain \(H_0\), and to reconstruct some functions that describe physical properties of DE in a model-independent way using cosmological information from SN, CC, BAO and H0LiCOW lenses data. The main results from the joint analysis, i.e., SN+CC+BAO+H0LiCOW, are summarized as follows:

-

(i)

A 1.1% accuracy measurement of \(H_0\) is obtained with the best fit value \(H_0= 73.78 \pm 0.84\ \hbox {km s}^{-1}\,\hbox {Mpc}^{-1}\) at 1\(\sigma \) CL.

-

(ii)

The EoS of DE is measured at \(\sim \) 6.5% accuracy at the present moment, with \(w(z=0)=-0.98 \pm 0.064\) at \(1\sigma \) CL.

-

(iii)

We find possible evidence for \(c^2_s < 0\) at \(\sim \) \(2\sigma \) CL from the analysis of the function behavior at high z. At the present moment, we find \(c^2_s(z=0) = -0.273 \pm 0.068\) at \(1\sigma \) CL.

-

(iv)

We find that the ratio of DE density evolution, \(\rho _\mathrm{de}/\rho _\mathrm{de,0}\), can cross to negative values at high-z. This behavior has already been observed by other authors. Here, we re-confirm this possibility for \(z > 1.5\) at \(\sim 2\sigma \).

Certainly, the GP method having the ability to perform joint analysis has a great potential in search for the accurate measurements of cosmological parameters, and analyze physical properties of the dark sector of the Universe in a minimally model-dependent way. It can shed light in the determination of the dynamics of the dark components or even rule out possible theoretical cosmological scenarios. Beyond the scope of the present work, it will be interesting to analyze/reconstruct a possible interaction in the dark sector, where DE and dark matter interact non-gravitationally in a model-independent way, through a robust joint analysis. Such scenarios have been intensively investigated recently in literature. We hope to communicate results in that direction in near future.

Data Availability Statement

This manuscript has no associated data or the data will not be deposited. [Authors’ comment: The data underlying this article will be shared on request to the corresponding author.]

Notes

References

A.G. Riess et al., Observational evidence from supernovae for an accelerating universe and a cosmological constant. Astron. J. 116, 1009 (1998). arXiv:astro-ph/9805201

S. Perlmutter et al., [Supernova Cosmology Project], Measurements of \(\Omega \) and \(\Lambda \) from 42 high-redshift supernovae. Astrophys. J. 517, 565 (1999). arXiv:astro-ph/9812133

S. Alam et al., [BOSS], The clustering of galaxies in the completed SDSS-III Baryon Oscillation Spectroscopic Survey: cosmological analysis of the DR12 galaxy sample. Mon. Not. Roy. Astron. Soc. 470, 2617 (2017). arXiv:1607.03155

T. Abbott et al., [DES], Dark Energy Survey year 1 results: Cosmological constraints from galaxy clustering and weak lensing. Phys. Rev. D 98, 043526 (2018). arXiv:1708.01530

S. Nadathur, W.J. Percival, F. Beutler, H. Winther, Testing low-redshift cosmic acceleration with large-scale structure. Phys. Rev. Lett. 124, 221301 (2020). arXiv:2001.11044

S. Weinberg, The cosmological constant problem. Rev. Mod. Phys. 61, 1 (1989)

T. Padmanabhan, Cosmological constant: the weight of the vacuum. Phys. Rept. 380, 235 (2003). arXiv:hep-th/0212290

R. Bousso, TASI lectures on the cosmological constant. Gen. Relat. Gravit. 40, 607 (2008). arXiv:0708.4231

D. Huterer, D.L. Shafer, Dark energy two decades after: observables, probes, consistency tests. Rep. Prog. Phys. 81, 016901 (2017). arXiv:1709.01091

S. Capozziello, R. D’Agostino, O. Luongo, Extended gravity cosmography. Int. J Mod. Phys. D 28, 1930016 (2019). arXiv:1904.01427

M. Ishak, Testing general relativity in cosmology. Living Rev. Rel. 1, 22 (2019). arXiv:1806.10122

N. Aghanim et al. (Planck Collaboration), Planck 2018 results. VI. Cosmological parameters. arXiv:1807.06209

A.G. Riess, S. Casertano, W. Yuan, L.M. Macri, D. Scolnic, Large magellanic cloud cepheid standards provide a 1% foundation for the determination of the hubble constant and stronger evidence for physics beyond \(\Lambda \)CDM. Astrophys. J. 876, 1 (2019). arXiv:1903.07603

K.C. Wong et al., (H0LiCOW Collaboration), H0LiCOW XIII. A 2.4% measurement of H0 from lensed quasars: \(5.3\sigma \) tension between early and late-Universe probes. arXiv:1907.04869

S. Birrer et al., TDCOSMO IV: Hierarchical time-delay cosmography – joint inference of the Hubble constant and galaxy density profiles. arXiv:2007.02941

W.L. Freedman et al., The Carnegie-Chicago Hubble Program. VIII. An independent determination of the hubble constant based on the tip of the red giant branch. arXiv:1907.05922

D. Camarena, V. Marra, Local determination of the Hubble constant and the deceleration parameter. Phys. Rev. Res. 2, 013028 (2020). arXiv:1906.11814

D. Camarena, V. Marra, A new method to build the (inverse) distance ladder. MNRAS 495, 3 (2020). arXiv:1910.14125

R.C. Nunes, A. Bernui, \(\theta _{BAO}\) estimates and the \(H_0\) tension, arXiv:2008.03259

N. Schoneberg, J. Lesgourgues, D.C. Hooper, The BAO+BBN take on the Hubble tension. J. Cosmol. Astrop. Phys. 10, 029 (2019). arXiv:1907.11594

O.H.E. Philcox, B.D. Sherwin, G.S. Farren, E.J. Baxter, Determining the Hubble Constant without the Sound Horizon: Measurements from Galaxy Surveys. arXiv:2008.08084

L. Verde, T. Treu, A.G. Riess, Tensions between the early and late Universe. Nat. Astron. 3, 891 (2019). arXiv:1907.10625

V. Poulin, T.L. Smith, T. Karwal, M. Kamionkowski, Early dark energy can resolve the hubble tension. Phys. Rev. Lett. 122, 221301 (2019). arXiv:1811.04083

E. Mörtsell, S. Dhawan, Does the Hubble constant tension call for new physics? J. Cosmol. Astrop. Phys. 09, 025 (2018). arXiv:1801.07260

R.C. Nunes, Structure formation in \(f(T)\) gravity and a solution for \(H_0\) tension. J. Cosmol. Astrop. Phys. 05, 052 (2018). arXiv:1802.02281

W. Yang et al., Tale of stable interacting dark energy, observational signatures, and the \(H_0\) tension. J. Cosmol. Astrop. Phys. 09, 019 (2018). arXiv:1805.08252

S. Pan, W. Yang, E. Di Valentino, A. Shafieloo, S. Chakraborty, Reconciling \(H_0\) tension in a six parameter space? arXiv:1907.12551

S. Kumar, R.C. Nunes, S.K. Yadav, Dark sector interaction: a remedy of the tensions between CMB and LSS data. Eur. Phys. J. C 79, 576 (2019). arXiv:1903.04865

E. Di Valentino, A. Melchiorri, O. Mena, S. Vagnozzi, Interacting dark energy after the latest Planck, DES, and \(H_0\) measurements: an excellent solution to the H0 and cosmic shear tensions. arXiv:1908.04281

S. Vagnozzi, New physics in light of the H0 tension: an alternative view. arXiv:1907.07569

R. D’Agostino, R.C. Nunes, Measurements of \(H_0\) in modified gravity theories: the role of lensed quasars in the late-time Universe. Phys. Rev. D 101, 103505 (2020). arXiv:2002.06381

S. Vagnozzi, E. Di Valentino, S. Gariazzo, A. Melchiorri, O. Mena, J. Silk, Listening to the BOSS: the galaxy power spectrum take on spatial curvature and cosmic concordance. arXiv:2010.02230

B.S. Haridasu, M. Viel, Late-time decaying dark matter: constraints and implications for the H0-tension. Mon. Not. Roy. Astron. Soc. 497, 2 (2020). arXiv:2004.07709

E. Di Valentino, A (brave) combined analysis of the \(H_0\) late time direct measurements and the impact on the Dark Energy sector. arXiv:2011.00246

E. Di Valentino, A. Melchiorri, O. Mena, S. Pan, W. Yang, Interacting Dark Energy in a closed universe. arXiv:2011.00283

S. Pan, W. Yang, C. Singha, E.N. Saridakis, Observational constraints on sign-changeable interaction models and alleviation of the \(H_0\) tension. Phys. Rev. D 100(8), 083539 (2019). arXiv:1903.10969

A. Bonilla Rivera, J. García Farieta, Exploring the dark universe: constraints on dynamical dark energy models from CMB, BAO and growth rate measurements. Int. J Mod. Phys. D 28(09), 1950118 (2019). arXiv:1605.01984

Eleonora Di Valentino et al., Cosmology Intertwined III: f\(\sigma _8\) and \(S_8\). arXiv:2008.11285

E. Di Valentino, A. Melchiorri, J Silk, Cosmic discordance: planck and luminosity distance data exclude LCDM. arXiv:2003.04935

E. Di Valentino, A. Melchiorri, J. Silk, Planck evidence for a closed Universe and a possible crisis for cosmology. Nat. Astron. 4, 196 (2020). arXiv:1911.02087

S. Kumar, R.C. Nunes, Probing the interaction between dark matter and dark energy in the presence of massive neutrinos. Phys. Rev. D 94, 123511 (2016). arXiv:1608.02454

S. Kumar, and R.C. Nunes, Echo of interactions in the dark sector. Phys. Rev. D 96, 103511 (2017). arXiv:1702.02143

G.B. Zhao et al., Dynamical dark energy in light of the latest observations. Nat. Astron. 1, 627 (2017). arXiv:1701.08165

S. Peirone, G. Benevento, N. Frusciante, S. Tsujikawa, Cosmological data favor Galileon ghost condensate over \(\Lambda \)CDM. Phys. Rev. D 100, 063540 (2019). arXiv:1905.05166

A. Chudaykin, D. Gorbunov, N. Nedelko, Combined analysis of Planck and SPTPol data favors the early dark energy models. arXiv:2004.13046

R. Arjona, S. Nesseris, Hints of dark energy anisotropic stress using Machine Learning. arXiv:2001.11420

L. Kazantzidis, L. Perivolaropoulos, Is gravity getting weaker at low z? Observational evidence and theoretical implications. arXiv:1907.03176

E. Di Valentino, A. Melchiorri, J. Silk, Cosmological hints of modified gravity? Phys. Rev. D 93, 023513 (2016). arXiv:1509.07501

S. Pan, W. Yang, A. Paliathanasis, Non-linear interacting cosmological models after Planck legacy release and the \(H_0\) tension. Mon. Not. Roy. Astron. Soc. 493(3), 3114–3131 (2018). arXiv:2002.03408

D. Benisty, Quantifying the S\(_{8}\) tension with the Redshift Space Distortion data set. Phys. Dark Univ. 31, 100766 (2021). arXiv:2005.03751

C. Cattoen, M. Visser, Cosmographic Hubble fits to the supernova data. Phys. Rev. D 78, 063501 (2008). arXiv:0809.0537

S. Capozziello, R. D’Agostino, O. Luongo, Cosmographic analysis with Chebyshev polynomials. Mon. Not. Roy. Astron. Soc. 476, 3924 (2018). arXiv:1712.04380

C. Cattoen, M. Visser, The Hubble series: Convergence properties and redshift variables. Class. Quant. Grav. 24, 5985 (2007). arXiv:0710.1887

E.M. Barboza Jr., F. Carvalho, A kinematic method to probe cosmic acceleration. Phys. Lett. B 715, 19 (2012)

C. Rodrigues Filho, E.M. Barboza, Constraints on kinematic parameters at \(z\ne 0\). J. Cosmol. Astrop. Phys. 07, 037 (2018). arXiv:1704.08089

M. Seikel, C. Clarkson, M. Smith, Reconstruction of dark energy and expansion dynamics using Gaussian processes. J. Cosmol. Astrop. Phys. 07, 036 (2012). arXiv:1204.2832

A. Shafieloo, A.G. Kim, E.V. Linder, Gaussian process cosmography. Phys. Rev. D 85, 123530 (2012). arXiv:1204.2272

M. Seikel, C. Clarkson, Optimising Gaussian processes for reconstructing dark energy dynamics from supernovae. arXiv:1311.6678

M.J. Zhang, J.Q. Xia, Test of the cosmic evolution using Gaussian processes. J. Cosmol. Astrop. Phys. 12, 005 (2016). arXiv:1606.04398

V.C. Busti, C. Clarkson, M. Seikel, Evidence for a Lower Value for \(H_0\) from Cosmic Chronometers Data? Mon. Not. Roy. Astron. Soc. 441, 11 (2014). arXiv:1402.5429

V. Sahni, A. Shafieloo, A.A. Starobinsky, Model independent evidence for dark energy evolution from Baryon Acoustic Oscillations. Astrophys. J. Lett. 793, L40 (2014). arXiv:1406.2209

E. Belgacem, S. Foffa, M. Maggiore, T. Yang, Gaussian processes reconstruction of modified gravitational wave propagation. Phys. Rev. D 101, 063505 (2020). arXiv:1911.11497

A.M. Pinho, S. Casas, L. Amendola, Model-independent reconstruction of the linear anisotropic stress \(\eta \). J. Cosmol. Astrop. Phys. 11, 027 (2018). arXiv:805.00027

R.G. Cai, N. Tamanini, T. Yang, Reconstructing the dark sector interaction with LISA. J. Cosmol. Astrop. Phys. 05, 031 (2017). arXiv:1703.07323

B.S. Haridasu, V.V. Luković, M. Moresco, N. Vittorio, An improved model-independent assessment of the late-time cosmic expansion. J. Cosmol. Astrop. Phys. 10, 015 (2018). arXiv:1805.03595

M.J. Zhang, H. Li, Gaussian processes reconstruction of dark energy from observational data. Eur. Phys. J. C 78, 460 (2018). https://doi.org/10.1140/epjc/s10052-018-5953-3. arXiv:1806.02981

D. Wang, X.H. Meng, Improved constraints on the dark energy equation of state using Gaussian processes. Phys. Rev. D 95, 023508 (2017). arXiv:1708.07750

C.A. Bengaly, C. Clarkson, R. Maartens, The Hubble constant tension with next-generation galaxy surveys. J. Cosmol. Astrop. Phys. 05, 053 (2020). arXiv:1908.04619

C.A. Bengaly, Evidence for cosmic acceleration with next-generation surveys: A model-independent approach. Mon. Not. Roy. Astron. Soc. 499, L6 (2020). arXiv:1912.05528

R. Arjona, S. Nesseris, What can Machine Learning tell us about the background expansion of the Universe? arXiv:1910.01529

R. Sharma, A. Mukherjee, H. Jassal, Reconstruction of late-time cosmology using principal component analysis. arXiv:2004.01393

R.C. Nunes, S.K. Yadav, J. Jesus, A. Bernui, Cosmological parameter analyses using transversal BAO data. arXiv:2002.09293

K. Liao, A. Shafieloo, R.E. Keeley, E.V. Linder, A model-independent determination of the Hubble constant from lensed quasars and supernovae using Gaussian process regression. Astrophys. J. Lett. 886, L23 (2019). arXiv:1908.04967

A.G. Valent, L. Amendola, H0 from cosmic chronometers and Type Ia supernovae, with Gaussian Processes and the novel Weighted Polynomial Regression methody. J. Cosmol. Astrop. Phys. 04, 051 (2018). arXiv:1802.01505

E. O Colgain and M. M. Sheikh-Jabbari, On model independent cosmic determinations of \(H_0\). arXiv:1601.01701

R. Briffa, S. Capozziello, J. Levi Said, J. Mifsud, E.N. Saridakis, Constraining Teleparallel Gravity through Gaussian Processes. arXiv:2009.14582

C. Krishnan, E. O Colgain, M. M. S. Jabbari, and T. Yang, Running Hubble tension and a \(H_0\) diagnostic. arXiv:2011.02858

M. Moresco et al., A 6% measurement of the hubble parameter at \(z\sim 0.45\): direct evidence of the epoch of cosmic re-acceleration. J. Cosmol. Astrop. Phys. 05, 014 (2016). arXiv:1601.01701

G.-B. Zhao et al., The clustering of the sdss-iv extended baryon oscillation spectroscopic survey dr14 quasar sample: a tomographic measurement of cosmic structure growth and expansion rate based on optimal redshift weights. Mon. Not. Roy. Astron. Soc. 482, 3497 (2019). arXiv:1801.03043

H. du Mas des Bourboux et al., The Completed SDSS-IV extended Baryon Oscillation Spectroscopic Survey: Baryon acoustic oscillations with Lyman-\(\alpha \) forests. arXiv:2007.08995

H. du Mas des Bourboux, Baryon acoustic oscillations from the complete sdss-iii ly\(\alpha \)-quasar cross-correlation function at \(z=2.4\). Astron. Astrophys. 608, A130 (2017). arXiv:1708.02225

D. Scolnic et al., The complete light-curve sample of spectroscopically confirmed SNe Ia from Pan-STARRS1 and Cosmological Constraints from the Combined Pantheon Sample. Astrophys. J. 859, 101 (2018). arXiv:1710.00845

A.G. Riess et al., Type Ia supernova distances at redshift \(>1.5\) from the Hubble space telescope multi-cycle Treasury Programs: The Early Expansion Rate. Astrophys. J. 853, 126 (2018). arXiv:1710.00844

S. Birrer et al., H0LiCOW - IX. Cosmographic analysis of the doubly imaged quasar SDSS 1206+4332 and a new measurement of the Hubble constant. Mon. Not. Roy. Astron. Soc. 484, 4726 (2019). arXiv:1809.01274

S. Pandey, M. Raveri, B. Jain, Model independent comparison of supernova and strong lensing cosmography: Implications for the Hubble constant tension. Phys. Rev. D 102, 023505 (2020). arXiv:1912.04325

V. Sahni, A. Shafieloo, A.A. Starobinsky, Two new diagnostics of dark energy. Phys. Rev. D 78, 103502 (2008). arXiv:0807.3548

A.A. Starobinsky, How to determine an effective potential for a variable cosmological term. JETP Lett. 68, 757 (1998). arXiv:astro-ph/9810431

T. Nakamura, T. Chiba, Determining the equation of state of the expanding universe: Inverse problem in cosmology. Mon. Not. Roy. Astron. Soc. 306, 696 (1999). arXiv:astro-ph/9810447

D. Huterer, M.S. Turner, Prospects for probing the dark energy via supernova distance measurements. Phys. Rev. D 60, 081301 (1999). arXiv:astro-ph/9808133

T. Abbott et al., Dark energy survey year 1 results: a precise H0 estimate from DES Y1, BAO, and D/H data. Mon. Not. Roy. Astron. Soc. 480, 3879 (2018). arXiv:1711.00403

B. Abbott et al. [LIGO Scientific and Virgo] A gravitational-wave measurement of the Hubble constant following the second observing run of Advanced LIGO and Virgo. arXiv:1908.06060

R. Arjona, W. Cardona, S. Nesseris, Unraveling the effective fluid approach for \(f(R)\) models in the subhorizon approximation. Phys. Rev. D 99, 043516 (2019). arXiv:1811.02469

R. Arjona, W. Cardona, S. Nesseris, Designing Horndeski and the effective fluid approach. Phys. Rev. D 100, 063526 (2019). arXiv:1904.06294

R. Arjona, J. García-Bellido, S. Nesseris, Cosmological constraints on non-adiabatic dark energy perturbations. arXiv:2006.01762

W. Cardona, L. Hollenstein, M. Kunz, The traces of anisotropic dark energy in light of Planck. J. Cosmol. Astrop. Phys. 07, 032 (2014). arXiv:1402.5993

F. Piazza, S. Tsujikawa, Dilatonic ghost condensate as dark energy. J. Cosmol. Astrop. Phys. 07, 004 (2004). arXiv:hep-th/0405054

R.J. Scherrer, Purely kinetic k-essence as unified dark matter. Phys. Rev. Lett. 93, 011301 (2004). arXiv:astro-ph/0402316

G.W. Horndeski, Second-order scalar-tensor field equations in a four-dimensional space. Int. J. Theor. Phys. 10, 363 (1974)

C. Deffayet, X. Gao, D.A. Steer, G. Zahariade, From k-essence to generalised Galileons. Phys. Rev. D 84, 064039 (2011). arXiv:1103.3260

T. Kobayashi, M. Yamaguchi, J. Yokoyama, Generalized G-inflation: Inflation with the most general second-order field equations. Prog. Theor. Phys. 126, 511 (2011). arXiv:1105.5723

E. Bellini, I. Sawicki, Maximal freedom at minimum cost: linear large-scale structure in general modifications of gravity. J. Cosmol. Astrop. Phys. 07, 050 (2014). arXiv:1404.3713

The LIGO Scientific Collaboration, the Virgo Collaboration, GW170817: observation of gravitational waves from a binary neutron star inspiral. Phys. Rev. Lett. 119, 161101 (2017). arXiv:1710.05832

LIGO Scientific Collaboration, Virgo Collaboration, Fermi Gamma-Ray Burst Monitor, INTEGRAL, Gravitational Waves and Gamma-rays from a Binary Neutron Star Merger: GW170817 and GRB 170817A. Astrophys. J. Lett. 848, L13 (2017). arXiv:1710.05834

T. Delubac et al., Baryon acoustic oscillations in the \(Ly\alpha \) forest of BOSS DR11 quasars. Astron. Astrophys. 574, A59 (2015). arXiv:1404.1801

É. Aubourg et al., [BOSS], Cosmological implications of baryon acoustic oscillation measurements. Phys. Rev. D 92, 123516 (2015). arXiv:1411.1074

B. Boisseau, G. Esposito-Farese, D. Polarski, A.A. Starobinsky, Reconstruction of a scalar tensor theory of gravity in an accelerating universe. Phys. Rev. Lett. 85, 2236 (2000). arXiv:gr-gc/0001066

V. Sahni, A. Starobinsky, Reconstructing dark energy. Int. J. Mod. Phys. D 15, 2105 (2006). arXiv:astro-ph/0610026

A.D. Dolgov, Field model with a dynamic cancellation of the cosmological constant. JETP Lett. 41, 345 (1985)

P. Brax, C. van de Bruck, Cosmology and brane worlds: a review. Class. Quant. Grav. 20, R201 (2003). arXiv:hep-th/0303095

Ö. Akarsu, N. Katırcı, S. Kumar, Cosmic acceleration in a dust only universe via energy-momentum powered gravity. Phys. Rev. D 97, 024011 (2018). arXiv:1709.02367

O. Akarsu, N. Katirci, S. Kumar, R. C. Nunes, B. Ozturk, S. Sharma, Rastall gravity extension of the standard \(\Lambda \)CDM model: theoretical features and observational constraints. arXiv:2004.04074

Ö. Akarsu, J.D. Barrow, L.A. Escamilla, J.A. Vazquez, Graduated dark energy: Observational hints of a spontaneous sign switch in the cosmological constant. Phys. Rev. D 101, 063528 (2020). arXiv:1912.08751

L. Visinelli, S. Vagnozzi, U. Danielsson, Revisiting a negative cosmological constant from low-redshift data. Symmetry 11, 1035 (2019). arXiv:1907.07953

C.E. Rasmussen, C.K.I. Williams, Gaussian processes for machine learning (MIT Press, London, 2006). ISBN 0-262-18253-X

F. Renzi, A. Silvestri, A look at the Hubble speed from first principles. arXiv:2011.10559

S. Alam , et al. (eBOSS Collaboration), The Completed SDSS-IV extended Baryon Oscillation Spectroscopic Survey: Cosmological Implications from two Decades of Spectroscopic Surveys at the Apache Point observatory. arXiv:2007.08991

O.H.E. Philcox, M.M. Ivanov, M. Simonovic, M. Zaldarriaga, Combining full-shape and BAO analyses of galaxy power spectra: a 1.6% CMB-independent constraint on H0. J. Cosmol. Astrop. Phys. 05, 032 (2020). arXiv:2002.04035

T.M.C. Abbott et al., (DES Collaboration), Dark Energy Survey Year 1 Results: A Precise H0 Measurement from DES Y1, BAO, and D/H Data. Mon. Not. Roy. Astron. Soc. 480, 3 (2018). arXiv:1711.00403

Acknowledgements

The authors thank to Sunny Vagnozzi, Valerio Marra and Chris Clarkson for a critical reading of the manuscript and useful comments. S.K. gratefully acknowledges the support from SERB-DST project No. EMR/2016/000258. R.C.N. would like to thank the agency FAPESP for financial support under the project No. 2018/18036-5.

Author information

Authors and Affiliations

Corresponding author

Appendix A: \(H_0\) without BAO data, and effects of \(r_d\)

Appendix A: \(H_0\) without BAO data, and effects of \(r_d\)

In this appendix, we derive constraints on \(H_0\) and \(O_m(z)\) diagnostic removing our BAO data set compilation as described in Sect. II. Figure 8 shows \(O_m(z)\) vs z reconstructed from SN+CC and SN+CC+H0LiCOW. For comparison, we also show the prediction with BAO. We find \(H_0 = 68.57 \pm 1.86\ \hbox {km s}^{-1}\,\hbox {Mpc}^{-1}\) and \(H_0 = 71.65 \pm 1.09\ \hbox {km s}^{-1}\,\hbox {Mpc}^{-1}\) from SN+CC and SN+CC+H0LiCOW data, respectively. Note that without BAO data these constraints are compatible with each other practically across the whole z range under consideration, where the addition of the H0LiCOW sample, significantly improves the reconstruction compared to SN+CC. It is also interesting to observe the behavior for \(z > 1.5\), where we see that \(Om < 0.31\). Combining BAO data with SN+CC+H0LiCOW, we observe a significant improvement in the reconstruction for the whole z range considered in the analysis. Predictions for \(z > 2\) disagree at \(\sim 1.5\sigma \) CL when the GP mean is compared between SN+CC+H0LiCOW and SN+CC+BAO+H0LiCOW.

On the other hand, the BAO measurements require a calibration of the sound horizon, either through BBN or the CMB. In all our analyses, we have used BAO data with the assumption \(r_d/r_{d, fid} = 1\), where \(r_{d,fid}\) is the fiducial input value. In order to quantify how much the \(r_d\) value can influence the GP reconstruction, we have analyzed Om(z) with different \(r_d\) input values. We have used \(r_d\) values obtained from Planck-CMB data [12] and eBOSS Collaboration [116]. Figure 9 shows \(O_m(z)\), reconstructed using \(r_d = 149.30\) Mpc (eBOSS estimation) and \(r_d = 147.09\) Mpc (Planck-CMB estimation). In short, we conclude that appropriate and different \(r_d\) input values do not change the results significantly in all the analyses carried out in this work. Any input value of \(r_d \in [135, 155]\) Mpc does not have statistical divergence compared to assumption \(r_d/r_{d, fid} = 1\). That is, all analyses are consistent with each other at \(< 1\sigma \). Therefore, the GP analyses here are not sensitive to \(r_d\). That is why, we have presented the results in the main text assuming \(r_d/r_{d, fid}=1\).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

Funded by SCOAP3

About this article

Cite this article

Bonilla, A., Kumar, S. & Nunes, R.C. Measurements of \(H_0\) and reconstruction of the dark energy properties from a model-independent joint analysis. Eur. Phys. J. C 81, 127 (2021). https://doi.org/10.1140/epjc/s10052-021-08925-z

Received:

Accepted:

Published:

DOI: https://doi.org/10.1140/epjc/s10052-021-08925-z