Abstract

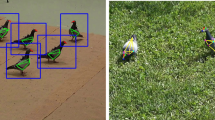

Factors such as drastic illumination variations, partial occlusion, and rotation make robust visual tracking a difficult problem. Some tracking algorithms represent the target's appearance as a linear combination of target templates built from the tracking results of previous frames. This kind of target representation is not robust to drastic appearance variations. In this paper, we propose a simple and effective tracking algorithm with a novel appearance model. A target candidate is represented by convex combinations of target templates. Measuring the similarity between a target candidate and the target templates is a key problem for robust likelihood evaluation. The distance between a target candidate and the templates is measured using the earth mover’s distance with L1 ground distance. Comprehensive experiments demonstrate the robustness and effectiveness of the proposed tracking algorithm compared with state-of-the-art tracking algorithms.
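The two ingredients named in the abstract, a convex combination of templates and an earth mover's distance with L1 ground distance, can be illustrated with a minimal sketch. It assumes that templates and candidates are 1D histograms of equal total mass, which is a simplification chosen here for illustration and is not stated in the abstract. The function names `convex_combination` and `emd_l1_1d` are hypothetical, and this is not the authors' implementation.

```python
from itertools import accumulate


def convex_combination(templates, weights):
    """Blend template histograms using non-negative weights that sum to 1.

    Assumption: each template is a list of bin values of the same length.
    """
    if any(w < 0 for w in weights) or abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must be non-negative and sum to 1")
    n_bins = len(templates[0])
    return [sum(w * t[i] for w, t in zip(weights, templates)) for i in range(n_bins)]


def emd_l1_1d(p, q):
    """Earth mover's distance between two 1D histograms of equal total mass.

    With ground distance |i - j| between bins i and j, the 1D EMD equals
    the L1 distance between the running (cumulative) sums of the histograms.
    """
    if len(p) != len(q):
        raise ValueError("histograms must have the same number of bins")
    return sum(abs(a - b) for a, b in zip(accumulate(p), accumulate(q)))


# Illustrative data only: two templates and one candidate, three bins each.
templates = [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
candidate = [0.0, 1.0, 0.0]

blend = convex_combination(templates, [0.5, 0.5])
distance = emd_l1_1d(candidate, blend)
```

In this toy case the blend places half of the mass in each outer bin, so matching the candidate requires moving half of its mass one bin in each direction. A tracker along the lines described above would presumably search over the weights to find the point in the convex hull of the templates closest to the candidate; that optimization is not shown here.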

Author information

Additional information

The article is published in the original.

Jun Wang is an Associate Professor in the School of Information Engineering at Nanchang Institute of Technology, China. He received the M.S. degree in Computer Science and Technology from Nanchang University, China, in 2007 and the Ph.D. degree in Artificial Intelligence from Xiamen University, China, in 2015. His research interests include visual tracking and pattern recognition.

Yuanyun Wang is an Assistant Professor in the School of Information Engineering at Nanchang Institute of Technology, China. She received the B.S. and M.S. degrees in Computer Science and Technology from Nanchang University, China, in 2004 and 2007, respectively. Her research interests include visual tracking and pattern recognition.

Chengzhi Deng is a Professor in the School of Information Engineering at Nanchang Institute of Technology, China. He received the M.S. degree in Communication Engineering from Jiangxi Normal University, China, in 2005 and the Ph.D. degree in Information and Communication Engineering from Huazhong University of Science and Technology, China, in 2008. His research interests include pattern recognition and image reconstruction.

Shengqian Wang is a Professor in the School of Information Engineering at Nanchang Institute of Technology, China. He received the M.S. degree in Theoretical Physics from Jiangxi Normal University, China, in 1995 and the Ph.D. degree in Communication and Information System from Shanghai Jiao Tong University, China, in 2002. His research interests include super-resolution and pattern recognition.

About this article

Cite this article

Wang, J., Wang, Y., Deng, C. et al. Robust Visual Tracking Based on Convex Hull with EMD-L1. Pattern Recognit. Image Anal. 28, 44–52 (2018). https://doi.org/10.1134/S1054661818010078

DOI: https://doi.org/10.1134/S1054661818010078