Abstract

We present an alternate method for evaluating Lagrange multipliers of probability distributions usually used in maximum entropy principle. The Lagrange multipliers are evaluated using least square method. Both the methods are used to calculate Lagrange multipliers in fitting wind speed data. Kolmogorov–Smirnov test, Chi squared goodness of fit test, and root mean square error indicate the usefulness of this alternate method.

Similar content being viewed by others

1 Introduction

Maximum entropy principle has a variety of applications in modeling of stochastic data. These applications involve many branches of natural and social sciences. Pressé et al. [1] reviewed the origins and use of Maximum Entropy Principle in Statistical Physics and beyond in fields such as Biology. Karlin et al. [2] used MEP for constructing equilibria in lattice kinetic equations. Chen and Dai [3] investigated MEP of uncertainty distributions for uncertain variables. Županović et al. [4] took an alternative path to derive Kirchhoff’s loop rule where they employed MEP to show that in network branches currents are distributed to achieve maximum entropy. Alves et al. [5] found a connection between the maximum entropy principle and the Higgs boson mass without introducing any extra assumptions into the Standard Model. Hanel etal. [6] generalized the notion of entropy to complex and non-Ergodic systems and showed that the MEP is a consistent method for such systems. Roux and Weare investigated the consistency of the MEP and restrained-ensemble simulations. They showed that the conditions of the restrained-ensemble simulations were compatible with the maximum entropy principle [7]. Aldana-Bobadilla and Kuri-Morales proposed a method of clustering based upon the MEP and showed their method was more effective than supervised ones [8]. Pontzen and Governato used MEP to derive the phase-space distribution for a halo of dark matter. They used physically motivated constraints and found a good match with three simulations of dark matter [9]. Rahmati, Pourghasemi and Melesse applied random forest and maximum entropy models to map groundwater potential in the Mehran region of Iran and found MEP provided more successful predictions [10]. Ruggeri used non-linear MEP to model a polyatomic gas under changing pressure conditions. He found the framework to be in agreement with the phenomenological Extended Thermodynamics theory [11].

2 MEP and new method

For a probability density function \( p\left( x \right) \), the entropy is defined as

We need to maximize H subject to the condition that

here \( \phi_{n} \left( x \right) \) re known functions with \( \phi_{0} \left( x \right) = 1 \) nd \( \mu_{n} \) re moments about origin with \( \mu_{0} = 1 \).The classical form of \( p\left( x \right) \) is

here \( \lambda_{n} \) are called the Lagrangian parameters and make up a vector \( \varvec{\lambda} \). For a given data set we need to find the value of N that best fits that data set, and hence we need to determine the distribution it follows. Following Algorithm is usually used to calculate the best fit distribution.

-

1.

Define the range (xmin, xmax) and step size dx.

-

2.

Use some standard functions for \( \phi_{n} \left( x \right). \)

-

3.

Start iterative procedure with some \( \varvec{\lambda}_{\varvec{o}} . \)

-

4.

Calculate following two integrals.

$$ G_{n} \left(\varvec{\lambda}\right) = \smallint \phi_{n} \left( x \right)exp\left[ { - \mathop \sum \limits_{n = 0}^{N} \lambda_{n} \phi_{n} \left( x \right)} \right]dx = \mu_{n} , n = 0, \ldots ,N $$(4)$$ g_{nk} = g_{kn} = \frac{{\partial G_{n} \left(\varvec{\lambda}\right)}}{{\partial \lambda_{k} }} = - \smallint x^{k} x^{n} exp\left[ { - \mathop \sum \limits_{m = 0}^{N} \lambda_{m} x^{m} } \right]dx = - G_{n + k} \left(\varvec{\lambda}\right) $$(5) -

5.

Solve the equation \( \varvec{G\delta } = \varvec{v} \), where \( v = \left[ {\mu_{o} - G_{o} \left( {\lambda^{o} } \right), \mu_{1} - G_{1} \left( {\lambda^{o} } \right), \ldots \ldots , \mu_{N} - G_{N} \left( {\lambda^{o} } \right)} \right]^{t} \) to find \( \varvec{\delta} \)

-

6.

Calculate \( \varvec{\lambda}=\varvec{\lambda}^{0} +\varvec{\delta} \) The process is repeated with this new value as \( \varvec{\lambda}^{0} \) ntil \( \varvec{\delta} \) becomes negligible.

In this procedure, the first \( \varvec{\lambda}_{\varvec{o}} \) is basically an initial guess for the vector \( \varvec{\lambda} \). Then the new values of \( \varvec{\lambda} \) are taken as the new \( \varvec{\lambda}_{\varvec{o}} \cdot\varvec{\delta} \) are uncertainties in \( \varvec{\lambda}_{\varvec{o}} \).

3 Least square method

In least square method, the sum of square of error (SSE) is made minimum. If y and ŷ are the observed and calculated values of a dependent variable respectively, the sum of square of error is given by

The parameters of fitting are found by minimizing SSE.

To find the values of Lagrange multipliers, we have used least square fitting with \( \phi_{n} \left( x \right) = x^{n} \). The classical form of \( p(x) \) is reduced to a linear polynomial by taking logarithm of the equation.

The values of Lagrange coefficients are found by minimizing sum of square of errors, i.e.

These are (N + 1) linear equations in terms of Lagrange coefficients. They are solved to find the values of these coefficients. The process is repeated for various degrees of polynomials. The degree of the polynomial is selected for which Chi square, Kolmogorov–Smirnov test and Mean Square errors are minimal.

4 Wind speed data

Many different statistical distributions have been used to model wind speed data. The most widely used distribution is Weibull distribution [12, 13]. MEP more accurately models wind speed data [14,15,16,17]. Djafari developed a matlab program to model wind power density using maximum entropy distributions [18]. Wind speed data for this study has been downloaded from the site [19]. This site contains wind speed data of various cities of The Netherlands and it has been made available for free for educational and research purposes. As a sample we randomly picked the city, Hansweert and used hourly wind data for the year 2000. It has a longitude of 3.998 and latitude of 51.446. Hansweert is a village in southwest Netherlands with overall pleasant weather. The average temperature in winter is 2 °C, while in summer it is 18 °C. In Hansweert winters are windier.

5 Goodness of fit tests

Three different goodness of fit tests have been used to determine how well the datasets fit on the classical distribution of maximum entropy principle using both methods.

6 Kolmogorov–Smirnov test

Kolmogorov–Smirnov (KS) test is a goodness of fit test that checks if a given sample data follows a specific distribution. KS test measures the maximum distance between cumulative curves. It does not depend on cumulative distribution function (CDF). It is given by

where ‘n’ is the number of data points, \( F\left( {x_{i} } \right) \) is the CDF of hypothetical or given distribution and \( G\left( {x_{i} } \right) \) is the CDF of specific distribution.

To check the validity of the hypothesis that the given data follows a specific distribution, a test of hypothesis with given level of significance is conducted. If \( KS < KS_{\alpha } \) then the two distributions are similar. At 99% level of significance, \( KS_{99} = \frac{1.63}{\sqrt n } \). For n = 6 and \( \alpha = 99\% \), the critical value is 0.618. The best feature of KS test is that it does not depend on sample size.

7 Chi square goodness of fit test

Chi square test is another goodness of fit test which also determines how well a given dataset follows a specific distribution. This test requires dataset of observed frequency (Oi) and corresponding expected frequency (Ei). The Chi square test statistic is given by

If \( \chi^{2} < \chi_{\alpha ,k - 1}^{2} \) the two distributions are identical, \( \alpha \) is the level of significance and k is the number of data points. For k = 6 and \( \alpha = 95\% \), the critical value is 1.63.

8 Root mean square error (RMSE)

Root mean square error is another mean to determine the goodness of fit of a given dataset on a specific distribution. It is defined as the square root of mean of square error and is given by

where \( y_{i} \) is the actual value and \( \bar{y}_{i} \) is the fitted value calculated from given distribution, and n is the number of data points. The smaller the RMSE is, the better the goodness of fit.

9 Results and discussion

The probability of a real-valued random variable is always positive in a region ‘R’. If the random variable follows the maximum entropy principle, the probability distribution is given by

where \( \lambda_{n} \) are known as Lagrange coefficients and the values of these coefficients are determined using moments as constraints. In this study instead of using the moments as constraints, the least square method was used to fit a distribution on given wind speed data, that is, coefficients \( \lambda_{n} \) are determined by minimizing square of the difference of fitted and theoretical probabilities. The approach is different from maximum entropy method, even though the same classical probability distribution is fitted. A Python program has been developed for the fitting process, the code of which is given in “Appendix A” section.

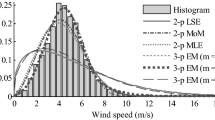

The Chi square, Kolmogorov–Smirnov and Root Mean Square Error test statistics have been calculated for fitting process for datasets of each month from January to December. All three tests of goodness of fit (Chi Square, Kolmogorov–Smirnov, and Root Mean Square Error) yield very low values, favoring the hypothesis that the datasets follow the determined distributions. The KS and RMSE are found to be very close. In Fig. 1 a comparison between MEP fit and least square fit of classical distribution of MEP is given for the wind speed data of month of January for Hansweert and Karachi, Pakistan. Both plots appear to be good fits for both cities. To compare the reliability of the fits, the values of test statistics for Chi square, KS and RMSE are given in Table 1. It is clear from the table that the values of these statistics are least in case of least square fit. The difference is not large for Karachi, but the superiority of the Least square fit is still apparent. The reason is that the main focus of MEP fitting is to determine exact values of the constraint parameters (moments) of the distribution. Hence if the statistical information of data is needed MEP is the better choice, and if fitting is the aim then least square fitting is the better choice.

In Table, values of all three test statistics for the wind speed data for January to December are given. On average the order of Chi square is 1E − 16 but in November the order is 1E − 11. The orders of KS and RMSE are almost same i.e. 1E − 08, in November however the orders are 1E − 5. The values show that the least square fitting of wind speed data on classical probability of MEP is excellent. The second column of Table 2 gives the degree of polynomial that fits in each month on the given dataset of wind speed. Mostly 6th degree polynomials fit well except for May & October where the degree of polynomial is 7. The Lagrange coefficients have been calculated and given in Table 3. These coefficients are then used to draw plots of classical probability distributions, which are overlapped on histograms generated from given wind speed data. In Figs. 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12 and 13, the plots show the least square fitted curves on the given dataset. The plots show that the fitting of the dataset of wind speed on the probability distribution is remarkable.

10 Conclusion

In this study, we evaluated Lagrange multipliers of classical probability distribution of MEP using Least Square Method on wind speed data. The hourly wind speed data of Hansweert (The Netherlands) from January to December (2015) is used in this study. The basic difference between MEP and the method used is that whereas MEP fits the distribution using moments as constraints, the alternative method minimizes the square of differences between theoretical and given probabilities. It is concluded that

-

(i)

MEP should be used if statistical information of the distribution is needed, and LSM is preferable when fitting of dataset is required.

-

(ii)

The results show the evaluation of Lagrange multipliers through LSM is better than that obtained by MEP (see Table 1).

A Python program has been developed that fits various degree polynomials as classical probability distributions and displays the degree for which the errors are least. It also displays the calculated values of Lagrange multipliers. For error calculation Chi square, Kolmogorov–Smirnov and Root Mean Square Error test statistics were used. The alternative method consists of using least square method to find the Lagrange multipliers of maximum entropy principle and has proven to provide a better fit of the dataset, as evidenced by lower values of tests of significance statistics.

References

Pressé S, Ghosh K, Lee J, Dill KA (2013) Principles of maximum entropy and maximum caliber in statistical physics. Rev Modern Phys 3(85):1115

Karlin IV, Gorban AN, Succi S, Boffi V (1998) Maximum entropy principle for lattice kinetic equations. Phys Rev Lett 81(1):6

Chen X, Dai W Maximum entropy principle for uncertain variables. Int J Fuzzy Syst 13(3):232–6 (2011)

Županović P, Juretić D, Botrić S. Kirchhoff’s loop law and the maximum entropy production principle. Phys Rev E 70(5):056108 (2004)

Alves A, Dias AG, da Silva R (2015) Maximum entropy principle and the Higgs boson mass. Phys A Stat Mech Appl 7(1):420

Hanel R, Thurner S, Gell-Mann M (2014) How multiplicity determines entropy and the derivation of the maximum entropy principle for complex systems. Proc Natl Acad Sci 111:6905–6910

Roux B (2013) Weare J On the statistical equivalence of restrained-ensemble simulations with the maximum entropy method. J Chem Phys 138(8):02B616

Aldana-Bobadilla E, Kuri-Morales A (2015) A clustering method based on the maximum entropy principle. Entropy 17(1):151–180

Pontzen A, Governato F (2013) Conserved actions, maximum entropy and dark matter haloes. Mon Notices R Astronom Soc 1(430):121–133

Rahmati O, Pourghasemi HR, Melesse AM (2016) Application of GIS-based data driven random forest and maximum entropy models for groundwater potential mapping: a case study at Mehran Region, Iran. CATENA 137:360–372

Ruggeri T (2015) Non-linear maximum entropy principle for a polyatomic gas subject to the dynamic pressure. arXiv preprint arXiv:1504.05857

Khan JK, Shoaib M, Uddin Z, Siddiqui IA, Aijaz A, Siddiqui AA, Hussain E (2015) Comparison of wind energy potential for coastal locations: Pasni and Gwadar. J Basic Appl Sci 11:211

Khan JK, Ahmed F, Uddin Z, Iqbal ST, Jilani SU, Siddiqui AA, Aijaz A (2015) Determination of Weibull parameter by four numerical methods and prediction of wind speed in Jiwani (Balochistan). J Basic Appl Sci 11:62

Zhang H, Yu YJ, Liu ZY (2014) Study on the maximum entropy principle applied to the annual wind speed probability distribution: a case study for observations of intertidal zone anemometer towers of Rudong in East China Sea. Appl Energy 114:931–938

Sobczyk K, Trcebicki J (1999) Approximate probability distributions for stochastic systems: maximum entropy method. Comput Methods Appl Mech Eng 168(1–4):91–111

Chellali F, Khellaf A, Belouchrani A, Khanniche R (2012) A comparison between wind speed distributions derived from the maximum entropy principle and Weibull distribution. Case of study; six regions of Algeria. Renew Sustain Energy Rev 16(1):379–385

Gurley KR, Tognarelli MA, Kareem A (1997) Analysis and simulation tools for wind engineering. Probab Eng Mech 12(1):9–31

Mohammad-Djafari A (1992) A Matlab program to calculate the maximum entropy distributions. In: Maximum entropy and Bayesian methods (pp. 221–233). Springer, Dordrecht

http://projects.knmi.nl/klimatologie/onderzoeksgegevens/potentiele_wind. Accessed July 2018

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix

Rights and permissions

About this article

Cite this article

Uddin, Z., Khan, M.B., Zaheer, M.H. et al. An alternate method of evaluating Lagrange multipliers of MEP. SN Appl. Sci. 1, 224 (2019). https://doi.org/10.1007/s42452-019-0211-3

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s42452-019-0211-3