Abstract

In this manuscript, a new expansion technique, the residual power series method (RPSM), is used to find the analytical solution of the fractional Black–Scholes equation with an initial condition for the European option pricing problem. The Black–Scholes formula is important for pricing European call and put options on a non-dividend-paying stock, in particular when the equation contains time-fractional derivatives. The fractional derivative is defined in the Caputo sense, and the technique is based on a fractional power series expansion. The convergence analysis of the method is also discussed. Example problems are given to examine the efficacy of the proposed method. The solutions obtained are compared with exact solutions obtained by other techniques, which demonstrates that the present method is robust and easy to implement for other fractional problems arising in science and engineering.

1 Introduction

Option pricing is a central problem in mathematical finance. In 1973, Fischer Black and Myron Scholes [1] derived the now-familiar theoretical valuation formula for options, work for which Scholes, together with Robert Merton, received the 1997 Nobel Memorial Prize in Economic Sciences. The key idea of their analysis is that one does not need to estimate the expected return of a stock in order to value an option written on that stock. The fractional Black–Scholes equation (FBSE), which is a second-order parabolic equation, deals with the valuation of financial derivatives. Such equations are also consistent with the no-arbitrage principle. Hence, the Black–Scholes (BS) equation is used for pricing call and put options on a non-dividend-paying stock [2]. In this connection, significant work on fractional calculus has been carried out by Hilfer [3], Podlubny [4], Caputo [5], Miller and Ross [6], Kilbas et al. [7], Heydari et al. [8, 9] and others.

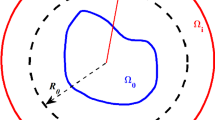

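Accordingly, this article considers the fractional Black–Scholes option pricing equation (BSOPE)

$$\frac{{\partial^{\alpha } w}}{{\partial t^{\alpha } }} + \frac{{\sigma^{2} \left( {x,t} \right)}}{2}x^{2} \frac{{\partial^{2} w}}{{\partial x^{2} }} + s\left( t \right)x\frac{\partial w}{\partial x} - s\left( t \right)w = 0,\quad \left( {x,t} \right) \in R^{ + } \times \left( {0,T} \right),\quad 0 < \alpha \le 1,$$(1)

where \(w\left( {x,t} \right)\), \(s\left( t \right)\), \(\sigma \left( {x,t} \right)\), \(T\) and \(t\) denote the European call option price, interest rate, volatility function, maturity and time, respectively. The payoff functions are

$$w_{call} \left( {x,t} \right) = \hbox{max} \left( {x - E,\,0} \right),\quad w_{put} \left( {x,t} \right) = \hbox{max} \left( {E - x,\,0} \right),$$(2)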

where \(w_{call} \left( {x,t} \right)\) and \(w_{put} \left( {x,t} \right)\) signify the values of the European call and put options, respectively, and \(E\) represents the expiration price of the option.

The fractional BSOPE has been examined with various techniques, such as the Laplace Transform Method (LTM) [10], Homotopy Perturbation Method (HPM) [11], Homotopy Analysis Method (HAM) [11], Sumudu Transform Method (STM) [12], Projected Differential Transformation Method (PDTM) [13], Adomian Decomposition Method (ADM) with conformable derivative and Modified Homotopy Perturbation Method (MHPM) [14], Multivariate Padé Approximation [15] and ADM [16]. Recently, a fractional-order European vanilla option pricing model has also been studied by Yavuz and Özdemir [17]. These methods have their own limitations: they require considerable computational work and long running times. In this regard, the residual power series method (RPSM), initially proposed by Abu Arqub [18], is found to be efficient and effective. The RPSM has been applied to the generalized Lane–Emden equation [19], the fractional KdV–Burgers equation [20] and the fractional foam drainage equation [21]. Moreover, it has also been applied effectively to the time–space-fractional Benney–Lin equation [22].

In this article, the RPSM is applied to the fractional BS European option pricing equation. The performance and precision of the method are studied by comparing the RPSM solution of the problem with solutions obtained by other analytical methods. To the best of the authors’ knowledge, the time-fractional BS equation has not previously been solved by the RPSM.

2 Preliminaries of fractional calculus and RPSM

Definition 2.1 [4, 6]

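The Abel–Riemann (A–R) fractional derivative operator \(D^{\alpha }\) of order \(\alpha\) is defined as

$$D^{\alpha } g\left( t \right) = \begin{cases} \dfrac{{d^{m} }}{{dt^{m} }}g\left( t \right), & \alpha = m, \\ \dfrac{1}{{\varGamma \left( {m - \alpha } \right)}}\dfrac{{d^{m} }}{{dt^{m} }}\displaystyle\int_{0}^{t} {\dfrac{{g\left( \xi \right)}}{{\left( {t - \xi } \right)^{\alpha - m + 1} }}d\xi } , & m - 1 < \alpha < m, \end{cases}$$(3)

where \(m \in Z^{ + } ,\) \(\alpha \in R^{ + }\).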

Definition 2.2 [4, 6]

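The integral operator \(J^{\alpha }\) in the A–R sense is defined as

$$J^{\alpha } g\left( t \right) = \frac{1}{{\varGamma \left( \alpha \right)}}\int_{0}^{t} {\left( {t - \xi } \right)^{\alpha - 1} g\left( \xi \right)d\xi } ,\quad t > 0,\;\alpha > 0.$$(4)

Following Podlubny [4] we may have

$$J^{\alpha } t^{n} = \frac{{\varGamma \left( {n + 1} \right)}}{{\varGamma \left( {n + \alpha + 1} \right)}}t^{n + \alpha } ,$$(5)

$$D^{\alpha } t^{n} = \frac{{\varGamma \left( {n + 1} \right)}}{{\varGamma \left( {n - \alpha + 1} \right)}}t^{n - \alpha } .$$(6)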

Definition 2.3 [4, 5]

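The Caputo fractional derivative operator \(D^{\alpha }\) is defined as

$$D^{\alpha } g\left( t \right) = \begin{cases} \dfrac{1}{{\varGamma \left( {m - \alpha } \right)}}\displaystyle\int_{0}^{t} {\dfrac{{g^{\left( m \right)} \left( \xi \right)}}{{\left( {t - \xi } \right)^{\alpha - m + 1} }}d\xi } , & m - 1 < \alpha < m, \\ \dfrac{{d^{m} }}{{dt^{m} }}g\left( t \right), & \alpha = m. \end{cases}$$(7)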

Definition 2.4 [4,5,6]

(a) $$D_{t}^{\alpha } J_{t}^{\alpha } g\left( t \right) = g\left( t \right),$$(8)

(b) $$J_{t}^{\alpha } D_{t}^{\alpha } g\left( t \right)\, = g\left( t \right) - \sum\limits_{k = 0}^{m - 1} {g^{\left( k \right)} \left( {0^{ + } } \right)} \frac{{t^{k} }}{k!},\quad {\text{for}}\quad m - 1 < \alpha \le m\,, \quad {\text{and}}\quad t > 0.$$(9)
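The two properties above can be checked on power functions, for which the fractional integral and the Caputo derivative have well-known closed forms. The following is a minimal sketch, assuming \(0 < \alpha \le 1\) and \(g(t) = t^{p}\) with \(p > 0\) or \(p = 0\); the function names are illustrative and not part of the original method.

```python
import math

def rl_integral_power(p: float, alpha: float, t: float) -> float:
    """J^alpha applied to t**p: Gamma(p+1)/Gamma(p+alpha+1) * t**(p+alpha)."""
    return math.gamma(p + 1) / math.gamma(p + alpha + 1) * t ** (p + alpha)

def caputo_power(p: float, alpha: float, t: float) -> float:
    """Caputo derivative of order alpha (0 < alpha <= 1) of t**p at t > 0.

    Assumes p == 0 (a constant, whose Caputo derivative vanishes) or p > 0.
    """
    if p == 0:
        return 0.0
    return math.gamma(p + 1) / math.gamma(p - alpha + 1) * t ** (p - alpha)

def derivative_of_integral(p: float, alpha: float, t: float) -> float:
    """D^alpha J^alpha t**p, which property (a) says should equal t**p."""
    scale = math.gamma(p + 1) / math.gamma(p + alpha + 1)  # J^alpha t**p = scale * t**(p+alpha)
    return scale * caputo_power(p + alpha, alpha, t)
```

For example, `derivative_of_integral(2.0, 0.5, 1.3)` returns a value that agrees with `1.3 ** 2` up to rounding, and `caputo_power(2, 1.0, 3.0)` reduces to the ordinary derivative \(2t\) at \(t = 3\).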

Definition 2.5

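A series of the form

$$\sum\limits_{k = 0}^{\infty } {a_{k} \left( {t - t_{0} } \right)^{k\alpha } } = a_{0} + a_{1} \left( {t - t_{0} } \right)^{\alpha } + a_{2} \left( {t - t_{0} } \right)^{2\alpha } + \cdots$$(10)

is called a fractional power series expansion (FPSE) about \(t = t_{0} ,\) where the \(a_{k}\) are the coefficients of the series.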

Theorem 2.1

If \(f\left( t \right) = \sum\nolimits_{k = 0}^{\infty } {a_{k} \left( {t - t_{0} } \right)^{k\alpha } }\) and \(D^{k\alpha } f\left( t \right) \in C\left( {t_{0} ,\,t_{0} + R} \right)\) for \(k = 0,\,1,\,2, \ldots\) then the value of \(a_{k}\) in Eq. (10) is given by \(a_{k} = \frac{{D^{k\alpha } f\left( {t_{0} } \right)}}{{\varGamma \left( {k\alpha + 1} \right)}}\).

Definition 2.6

An FPSE about \(t = t_{0}\) of the form \(\sum\nolimits_{k = 0}^{\infty } {b_{k} (x)\left( {t - t_{0} } \right)^{k\alpha } }\) is called a multiple FPSE about \(t = t_{0}\), where the \(b_{k} (x)\) are the coefficients of the series.

3 RPS solution for FBSE

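Let us consider the FBSE [23]

$$\frac{{\partial^{\alpha } w}}{{\partial t^{\alpha } }} = \frac{{\partial^{2} w}}{{\partial x^{2} }} + \left( {k - 1} \right)\frac{\partial w}{\partial x} - kw,\quad 0 < \alpha \le 1,$$(11)

with the initial condition (IC)

$$w\left( {x,0} \right) = \hbox{max} \left( {e^{x} - 1,\,0} \right).$$(12)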

3.1 Procedure of RPS solution

Step 1 Assume that the FPSE of the solution of Eq. (11) with IC Eq. (12) about the point \(t = t_{0}\) is written as

In order to evaluate \(w\left( {x,t} \right)\), let \(w_{m} \left( {x,t} \right)\) denote the mth truncated series of \(w\left( {x,t} \right)\), that is,

For \(m = 0\), the 0th RPS solution of \(w\left( {x,t} \right)\) may be written as

Using Eq. (15), Eq. (14) can be rewritten as

So mth RPS solution can be evaluated after obtaining all \(b_{k} \left( x \right)\), \(k = 1,\,2, \ldots ,m\).

Step 2 Let us consider the residual function (RF) of Eq. (11) as

and mth RF may be written as

Some useful results about \(res_{m} \left( {x,t} \right)\) have been included in [21, 22] which are given below

(i) $$res\left( {x,t} \right) = 0.$$

(ii) $$\mathop {\lim }\limits_{m \to \infty } \,\,res_{m} \left( {x,t} \right) = res(x,t).$$

(iii) $$D_{t}^{i\alpha } res\left( {x,0} \right) = D_{t}^{i\alpha } res_{m} \left( {x,0} \right) = 0,\quad i = 0,1,2, \ldots ,m.$$(19)

Step 3 Putting Eq. (16) into Eq. (18) and calculating \(D_{t}^{{\left( {k - 1} \right)\alpha }} res_{m} \left( {x,t} \right),\quad k = 1,2, \ldots\) at \(t = 0\), together with the above three results, we have the following algebraic systems

Step 4 By solving Eq. (20), we can get the coefficients \(b_{k} \left( x \right),\quad k = 1,2, \ldots ,m\). Thus mth RPS approximate solution is derived.
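Steps 1 to 4 can be sketched in code for a simple linear case. The sketch below assumes an equation of the form \(D_{t}^{\alpha } w = a\,w_{xx} + b\,w_{x} + c\,w\) with constant \(a, b, c\), a polynomial initial condition, \(t_{0} = 0\), and a series normalized as \(w = \sum_{k} b_{k}(x)\,t^{k\alpha }/\varGamma(k\alpha + 1)\). All of these are illustrative assumptions; a different normalization only rescales the coefficients. Under them, the residual conditions collapse to the recursion \(b_{k} = L[b_{k-1}]\), where \(L\) is the spatial operator.

```python
import math

def poly_derivative(p):
    """Derivative of a polynomial stored as coefficients [c0, c1, c2, ...]."""
    return [i * c for i, c in enumerate(p)][1:] or [0.0]

def apply_operator(p, a, b, c):
    """Return a*p'' + b*p' + c*p as a coefficient list with the same length as p."""
    d1 = poly_derivative(p)
    d2 = poly_derivative(d1)
    out = []
    for i in range(len(p)):
        value = c * p[i]
        if i < len(d1):
            value += b * d1[i]
        if i < len(d2):
            value += a * d2[i]
        out.append(value)
    return out

def rps_coefficients(w0, a, b, c, m):
    """Coefficients b_0..b_m from the recursion b_k = L[b_{k-1}] (assumed normalization)."""
    coeffs = [list(w0)]
    for _ in range(m):
        coeffs.append(apply_operator(coeffs[-1], a, b, c))
    return coeffs

def evaluate(coeffs, alpha, x, t):
    """m-th truncated series: sum of b_k(x) * t**(k*alpha) / Gamma(k*alpha + 1)."""
    total = 0.0
    for k, bk in enumerate(coeffs):
        bk_at_x = sum(cj * x ** j for j, cj in enumerate(bk))
        total += bk_at_x * t ** (k * alpha) / math.gamma(k * alpha + 1)
    return total
```

As a usage example, `rps_coefficients([0, 0, 1], 1, 0, 0, 3)` treats \(D_{t}^{\alpha } w = w_{xx}\) with \(w(x,0) = x^{2}\); the series terminates after one step, and at \(\alpha = 1\) the result \(x^{2} + 2t\) is the classical heat-equation solution.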

4 Convergence analysis

Lemma 1 [4]

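If \(f\left( x \right)\) is a continuous function and \(\alpha ,\,\beta > 0\) then

$$J^{\alpha } J^{\beta } f\left( x \right) = J^{\beta } J^{\alpha } f\left( x \right) = J^{\alpha + \beta } f\left( x \right).$$(21)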

Theorem 4.1

(a) If the FPS of the form \(\sum\nolimits_{n = 0}^{\infty } {a_{n} x^{n\alpha } } ,\quad x \ge 0\) converges at \(x = x_{1}\), then it converges absolutely for all \(x\) satisfying \(\left| x \right| < \left| {x_{1} } \right|\).

(b) If the FPS diverges at \(x = x_{1}\), then it diverges for all \(x\) such that \(\left| x \right| > \left| {x_{1} } \right|\).

Theorem 4.2 [22]

For \(0 \le n - 1 < \alpha \le n,\) suppose \(D_{t}^{r + k\alpha } ,D_{t}^{r + (k + 1)\alpha } \in C\left[ {R,t_{0} } \right] \times \left[ {R,t_{0} + R} \right]\),

where \(D_{t}^{r + k\alpha } = \mathop {\left( {D_{t} D_{t} D_{t} \ldots } \right)}\limits_{r - times} \mathop {\left( {D_{t}^{\alpha } D_{t}^{\alpha } D_{t}^{\alpha } \ldots } \right)}\limits_{k - times}\).

Proof

Using Lemma 1,

\(\square\)

Theorem 4.3 [22]

Let \(w\left( {x,t} \right),\,\,D_{t}^{k\alpha } w\left( {x,t} \right) \in C\left[ {R,t_{0} } \right] \times \left[ {R,t_{0} + R} \right]\) where \(k = 0,1,2, \ldots ,N + 1\) and \(j = 0,1,2, \ldots ,n - 1,\) and suppose that \(D_{t}^{k\alpha } w\left( {x,t} \right)\) can be differentiated \(n - 1\) times with respect to \(t\). Then

where \(W_{j + i\alpha } \left( x \right) = \frac{{D_{t}^{j + i\alpha } w\left( {x,t_{0} } \right)}}{{\varGamma \left( {j + i\alpha + 1} \right)}}.\) Also, there exists a value \(\varepsilon ,\,\,0 \le \varepsilon \le t\), such that the error term has the form

Proof

From Eq. (23),

That is,

Considering the second term of Eq. (27), we have

Now the error term is

As \(N \to \infty ,\,\,\left\| {E_{N} \left( {x,t} \right)} \right\| \to 0\); thus \(w\left( {x,t} \right)\) can be approximated as \(w\left( {x,t} \right) \cong \sum\nolimits_{j = 0}^{n - 1} {\sum\nolimits_{i = 0}^{N} {W_{j + i\alpha } \left( x \right)\left( {t - t_{0} } \right)^{j + i\alpha } } }\), with the error term in Eq. (25). \(\square\)
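The decay of the truncation error can be illustrated numerically. The sketch below uses a hypothetical sample series with coefficients \(\lambda^{i}/\varGamma(i\alpha + 1)\), that is, a Mittag–Leffler type series; this choice is an assumption made for illustration and not the general series of Theorem 4.3. At \(\alpha = 1\) the sum is \(e^{\lambda t}\), so the error of each partial sum can be measured directly.

```python
import math

def partial_sum(lam: float, alpha: float, t: float, n_terms: int) -> float:
    """Partial sum S_N = sum_{i=0}^{N} lam**i * t**(i*alpha) / Gamma(i*alpha + 1)."""
    return sum(
        lam ** i * t ** (i * alpha) / math.gamma(i * alpha + 1)
        for i in range(n_terms + 1)
    )

def truncation_errors(lam: float, t: float, orders):
    """Errors |exp(lam*t) - S_N| at alpha = 1, where the exact sum is known."""
    exact = math.exp(lam * t)
    return [abs(exact - partial_sum(lam, 1.0, t, n)) for n in orders]
```

Calling `truncation_errors(-2.0, 0.5, [2, 4, 8, 16])` returns a strictly decreasing list of errors, which is the behaviour the theorem describes as \(N\) grows.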

5 Numerical examples

Example 1

According to the RPSM, \(w_{0} \left( {x,t} \right) = \hbox{max} (0,\,e^{x} - 1)\) and the infinite series solution of Eq. (11) can be written as

mth truncated series solution of \(w\left( {x,t} \right)\) becomes

For \(m = 1\), 1st RPS solution for Eq. (11) may be written as

To determine the value of \(b_{1} \left( x \right)\), we substitute Eq. (31) in the 1st residual function of Eq. (18) \(res_{1} \left( {x,t} \right) = \frac{{\partial^{\alpha } w_{1} \left( {x,t} \right)}}{{\partial t^{\alpha } }} - \frac{{\partial^{2} w_{1} \left( {x,t} \right)}}{{\partial x^{2} }} - \left( {k - 1} \right)\frac{{\partial w_{1} \left( {x,t} \right)}}{\partial x} + kw_{1} \left( {x,t} \right)\), this gives

Using (iii) of Eq. (19) for \(i = 0\) that is \(res\left( {x,0} \right) = res_{1} \left( {x,0} \right) = 0\), we get

For \(m = 2\), 2nd RPS solution for Eq. (11) can be written as

To find the value of \(b_{2} \left( x \right)\), Eq. (34) is substituted in the 2nd residual function of Eq. (18) \(res_{2} \left( {x,t} \right) = \frac{{\partial^{\alpha } w_{2} \left( {x,t} \right)}}{{\partial t^{\alpha } }} - \frac{{\partial^{2} w_{2} \left( {x,t} \right)}}{{\partial x^{2} }} - \left( {k - 1} \right)\frac{{\partial w_{2} \left( {x,t} \right)}}{\partial x} + kw_{2} \left( {x,t} \right).\) Then we have

Using (iii) of Eq. (19) for \(i = 1\) that is \(D_{t}^{\alpha } res\left( {x,0} \right) = D_{t}^{\alpha } res_{2} \left( {x,0} \right) = 0\), we get

For \(m = 3\), 3rd RPS solution for the Eq. (11) can be written as

Putting Eq. (37) into the 3rd residual function of Eq. (18), \(res_{3} \left( {x,t} \right) = \frac{{\partial^{\alpha } w_{3} \left( {x,t} \right)}}{{\partial t^{\alpha } }} - \frac{{\partial^{2} w_{3} \left( {x,t} \right)}}{{\partial x^{2} }} - \left( {k - 1} \right)\frac{{\partial w_{3} \left( {x,t} \right)}}{\partial x} + kw_{3} \left( {x,t} \right)\), we have

Using Eq. (19) for \(i = 2\) that is \(D_{t}^{2\alpha } res\left( {x,0} \right) = D_{t}^{2\alpha } res_{3} \left( {x,0} \right) = 0\), it follows that

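Continuing in this way, one may find the values of \(b_{4} \left( x \right)\), \(b_{5} \left( x \right)\), and so on. The solution of Eq. (11) may then be written as

$$w\left( {x,t} \right) = \hbox{max} \left( {e^{x} - 1,\,0} \right)E_{\alpha } \left( { - kt^{\alpha } } \right) + e^{x} \left( {1 - E_{\alpha } \left( { - kt^{\alpha } } \right)} \right),$$(42)

where \(E_{\alpha } \left( t \right) = \sum\nolimits_{n = 0}^{\infty } {\frac{{t^{n} }}{{\varGamma \left( {1 + n\alpha } \right)}}}\) is the Mittag–Leffler function. Equation (42) is the analytical solution of Eq. (11), which agrees with [10,11,12,13].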
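The successive coefficients of Example 1 can be reproduced with a few lines of code. The sketch below works only on the branch \(x > 0\), where \(w_{0} = e^{x} - 1\) is smooth, and it assumes the series is normalized as \(w = \sum_{n} b_{n}(x)\,t^{n\alpha }/\varGamma(n\alpha + 1)\), so that each residual condition gives \(b_{n} = L[b_{n-1}]\) with \(L[u] = u_{xx} + (k - 1)u_{x} - ku\). Each coefficient is stored as a pair \((p, q)\) standing for \(p\,e^{x} + q\); this representation and the function names are illustrative choices, not taken from the paper.

```python
import math

def apply_L(u, k):
    """Apply L[u] = u_xx + (k-1) u_x - k u to u = p*exp(x) + q, stored as (p, q)."""
    p, q = u
    # exp(x) part: p + (k-1)*p - k*p cancels; constant part: -k*q
    return (p + (k - 1) * p - k * p, -k * q)

def rps_coefficients(k, m):
    """Coefficients b_0..b_m on the branch x > 0, starting from w0 = exp(x) - 1."""
    coeffs = [(1.0, -1.0)]
    for _ in range(m):
        coeffs.append(apply_L(coeffs[-1], k))
    return coeffs

def truncated_solution(x, t, k, alpha, m):
    """m-th truncated series for x > 0 under the assumed normalization."""
    total = 0.0
    for n, (p, q) in enumerate(rps_coefficients(k, m)):
        total += (p * math.exp(x) + q) * t ** (n * alpha) / math.gamma(n * alpha + 1)
    return total
```

With \(k = 2\), `rps_coefficients(2, 3)` gives constant coefficients \(2, -4, 8\) after the initial term, that is \(b_{n} = (-1)^{n+1}k^{n}\), and at \(\alpha = 1\) the truncated series approaches \(e^{x} - e^{-kt}\) for \(x > 0\).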

Case 1

Consider the vanilla call option [25] with \(\alpha = 1\), \(\sigma = 0.2\), \(r = 0.04\) and \(\tau = 0.5\) years, so that \(k = 2r/\sigma^{2} = 2\). The solution of Eq. (11) for this case is \(w\left( {x,t} \right) = \hbox{max} \left( {0,\,e^{x} - 1} \right)\,e^{ - 2t} + e^{x} \left( {1 - e^{ - 2t} } \right)\).

Case 2

For the vanilla call option [25] with parameters \(\sigma = 0.2\), \(r = 0.1\), \(\alpha = 1\) and \(\tau = 1\) year, \(k = 2r/\sigma^{2} = 5\). In this case, the solution of Eq. (11) is \(w\left( {x,t} \right) = \hbox{max} \left( {0,\,e^{x} - 1} \right)\,e^{ - 5t} + e^{x} \left( {1 - e^{ - 5t} } \right)\).
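The two cases can be evaluated with a short script. The sketch below assumes the usual dimensionless ratio \(k = 2r/\sigma^{2}\) and the Mittag–Leffler form of the solution, which reduces to the exponential expressions above at \(\alpha = 1\). The Mittag–Leffler function is computed by a naive truncated series, which is adequate for moderate arguments but not for small \(\alpha\) combined with large \(kt^{\alpha }\); all function names are illustrative.

```python
import math

def mittag_leffler(z: float, alpha: float, terms: int = 100) -> float:
    """Naive truncated series E_alpha(z) = sum z**n / Gamma(alpha*n + 1)."""
    return sum(z ** n / math.gamma(alpha * n + 1) for n in range(terms))

def k_from_market(r: float, sigma: float) -> float:
    """Dimensionless parameter k = 2r / sigma**2 (assumed definition)."""
    return 2.0 * r / sigma ** 2

def call_value(x: float, t: float, k: float, alpha: float = 1.0) -> float:
    """max(e^x - 1, 0) * E + e^x * (1 - E), with E = E_alpha(-k * t**alpha)."""
    e = mittag_leffler(-k * t ** alpha, alpha)
    return max(math.exp(x) - 1.0, 0.0) * e + math.exp(x) * (1.0 - e)
```

For instance, `call_value(0.5, 0.3, k_from_market(0.04, 0.2))` evaluates the Case 1 expression at \(x = 0.5\), \(t = 0.3\), and passing `alpha=0.8` gives the corresponding fractional-order value.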

Example 2

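Let us consider the generalized BS equation [24]

$$\frac{{\partial^{\alpha } w}}{{\partial t^{\alpha } }} + 0.08\left( {2 + \sin x} \right)^{2} x^{2} \frac{{\partial^{2} w}}{{\partial x^{2} }} + 0.06x\frac{\partial w}{\partial x} - 0.06w = 0,\quad 0 < \alpha \le 1,$$(43)

with the initial condition (IC)

$$w\left( {x,0} \right) = \hbox{max} \left( {x - 25e^{ - 0.06} ,\,0} \right).$$(44)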

According to the RPSM, \(w_{0} \left( {x,t} \right) = \hbox{max} \left( {0,\,x - 25e^{ - 0.06} } \right)\), and the FPS solution of Eq. (43) can be written as

In consideration of the present method, mth truncated series solution of \(w\left( {x,t} \right)\) becomes

and mth residual function of Eq. (43) may be written as

For \(m = 1\), 1st RPS solution for the Eq. (43) can be written as

By substituting Eq. (48) into the 1st residual function of Eq. (47) as

We get

Using (iii) of Eq. (19) for \(i = 0\) that is \(res\left( {x,0} \right) = res_{1} \left( {x,0} \right) = 0\), we get

For \(m = 2\), 2nd RPS solution for the Eq. (43) is written as

To find out the value of \(b_{2} \left( x \right)\), substitute Eq. (52) into the 2nd residual function of Eq. (47) as \(res_{2} \left( {x,t} \right) = \frac{{\partial^{\alpha } w_{2} \left( {x,t} \right)}}{{\partial t^{\alpha } }} + 0.08\left( {2 + \sin x} \right)^{2} x^{2} \frac{{\partial^{2} w_{2} \left( {x,t} \right)}}{{\partial x^{2} }} + 0.06x\frac{{\partial w_{2} \left( {x,t} \right)}}{\partial x} - 0.06w_{2} \left( {x,t} \right),\) we get

Using (iii) of Eq. (19) for \(i = 1\) that is \(D_{t}^{\alpha } res\left( {x,0} \right) = D_{t}^{\alpha } res_{2} \left( {x,0} \right) = 0\), we get

For \(m = 3\), 3rd RPS solution for the Eq. (43) is reduced as

To determine the value of \(b_{3} \left( x \right)\), putting Eq. (55) into the 3rd residual function of Eq. (47) as \(res_{3} \left( {x,t} \right) = \frac{{\partial^{\alpha } w_{3} \left( {x,t} \right)}}{{\partial t^{\alpha } }} + \left( {2 + \sin x} \right)^{2} x^{2} 0.08\frac{{\partial^{2} w_{3} \left( {x,t} \right)}}{{\partial x^{2} }} + 0.06x\frac{{\partial w_{3} \left( {x,t} \right)}}{\partial x} - 0.06w_{3} \left( {x,t} \right),\) one may get

By Eq. (19) for \(i = 2\) that is \(D_{t}^{2\alpha } res\left( {x,0} \right) = D_{t}^{2\alpha } res_{3} \left( {x,0} \right) = 0\), it follows that

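Continuing as above, one may obtain the values of \(b_{4} \left( x \right)\), \(b_{5} \left( x \right)\), and so on. The solution of Eq. (43) may then be written as

$$w\left( {x,t} \right) = \hbox{max} \left( {x - 25e^{ - 0.06} ,\,0} \right)E_{\alpha } \left( {0.06t^{\alpha } } \right) + x\left( {1 - E_{\alpha } \left( {0.06t^{\alpha } } \right)} \right).$$(58)

Equation (58) is the exact solution of Eq. (43), which is the same as that given in [11]. For \(\alpha = 1\), we have \(w\left( {x,t} \right) = \hbox{max} \left( {x - 25e^{ - 0.06} ,0} \right)\,e^{0.06t} + x\,\left( {1 - e^{0.06\,t} } \right)\). This is an analytical solution of the fractional BS Eq. (43) in the integer-order case.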
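The \(\alpha = 1\) expression can be checked against the equation with finite differences. The sketch below substitutes it into the residual used for \(res_{m}\) in this example and evaluates the result at a point above the kink of the payoff, \(x > 25e^{-0.06}\), where the initial condition is smooth. It makes no claim about the region below the kink. The step size and function names are illustrative assumptions.

```python
import math

STRIKE = 25.0 * math.exp(-0.06)  # location of the kink in the initial condition

def w_exact(x: float, t: float) -> float:
    """The alpha = 1 expression quoted in the text."""
    g = math.exp(0.06 * t)
    return max(x - STRIKE, 0.0) * g + x * (1.0 - g)

def pde_residual(x: float, t: float, h: float = 1e-3) -> float:
    """Central-difference estimate of w_t + 0.08(2+sin x)^2 x^2 w_xx + 0.06 x w_x - 0.06 w."""
    w_t = (w_exact(x, t + h) - w_exact(x, t - h)) / (2 * h)
    w_x = (w_exact(x + h, t) - w_exact(x - h, t)) / (2 * h)
    w_xx = (w_exact(x + h, t) - 2 * w_exact(x, t) + w_exact(x - h, t)) / h ** 2
    diffusion = 0.08 * (2 + math.sin(x)) ** 2 * x ** 2 * w_xx
    return w_t + diffusion + 0.06 * x * w_x - 0.06 * w_exact(x, t)
```

Evaluating `pde_residual(30.0, 0.5)` gives a value close to zero, limited only by the finite-difference and rounding error.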

6 Conclusion

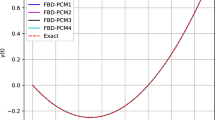

In this study, a new iterative technique, the RPSM, is applied to find the exact solution of the FBSE. The convergence analysis is also presented to support the efficacy of the technique. Solution plots of Eqs. (42) and (58) are illustrated in Figs. 1, 2, 3, 4, 5 and 6 for different values of \(\alpha\). It is clear from the figures that the European option prices increase as \(\alpha\) decreases. Moreover, at \(\alpha = 0.2\) the option is seen to be overpriced. The solutions obtained in special cases by the proposed method are in good agreement with those of the other methods described in [10,11,12,13], which shows that the method is effective and convenient and gives a closed-form solution in series form.

The plot of Eq. (42) represents the surface \(w\left( {x,t} \right)\) at (a) \(\alpha = 1\), (b) \(\alpha = 0.2\), (c) \(\alpha = 0.5\), (d) \(\alpha = 0.6\) and (e) \(\alpha = 0.8\)

The solution plots of Eq. (42) for different values of \(\alpha\) at (a) \(x = 0.2\) and (b) \(x = 0.5\)

The plot of Eq. (58) represents the surface \(w\left( {x,t} \right)\) at (a) \(\alpha = 1\), (b) \(\alpha = 0.2\), (c) \(\alpha = 0.4\), (d) \(\alpha = 0.6\) and (e) \(\alpha = 0.8\)

The solution plots of Eq. (58) for different values of \(\alpha\) at (a) \(x = 0.2\) and (b) \(x = 0.5\)

References

Black F, Scholes M (1973) The pricing of options and corporate liabilities. J Polit Econ 81:637–654

Manale JM, Mahomed FM (2000) A simple formula for valuing American and European call and put options. In: Banasiak J (ed) Proceeding of the Hanno Rund workshop on the differential equations. University of Natal, Durban, pp 210–220

Hilfer R (ed) (2000) Applications of fractional calculus in physics. World Scientific Publishing Company, Singapore, pp 87–130

Podlubny I (1999) Fractional differential equations. Academic Press, New York

Caputo M (1969) Elasticità e dissipazione. Zanichelli, Bologna

Miller KS, Ross B (1993) An introduction to the fractional calculus and fractional differential equations. Wiley, New York

Kilbas AA, Srivastava HM, Trujillo JJ (2006) Theory and applications of fractional differential equations. Elsevier Science Publishers, Amsterdam

Heydari MH, Hooshmandasl MR, Ghaini FMM, Cattani C (2016) Wavelets method for solving fractional optimal control problems. Appl Math Comput 286:139–154

Heydari MH, Hooshmandasl MR, Shakiba A, Cattani C (2016) An efficient computational method based on the hat functions for solving fractional optimal control problems. Tbilisi Math J 9:143–157

Kumar S, Yildirim A, Khan Y, Jafari H, Sayevand K, Wei L (2012) Analytical solution of fractional Black-Scholes European option pricing equation by using Laplace transform. J Fract Calc Appl 2:1–9

Kumar S, Kumar D, Singh J (2014) Numerical computation of fractional Black–Scholes equation arising in financial market. Egypt J Basic Appl Sci 1:177–183

Elbeleze AA, Kılıcman A, Taib BM (2013) Homotopy perturbation method for fractional Black–Scholes European option pricing equations using Sumudu transform. In: Mathematical problems in engineering, p 7

Edeki SO, Ugbebor OO, Owoloko EA (2015) Analytical solutions of the Black–Scholes pricing model for European option valuation via a projected differential transformation method. Entropy 17:7510–7521

Yavuz M, Özdemir N (2018) A different approach to the European option pricing model with new fractional operator. Math Model Nat Phenom 13:1–12

Özdemir N, Yavuz M (2017) Numerical solution of fractional Black–Scholes equation by using the multivariate Padé approximation. Acta Phys Pol A 132:1050–1053

Yavuz M, Özdemir N (2018) A quantitative approach to fractional option pricing problems with decomposition series. Konuralp J Math 6:102–109

Yavuz M, Özdemir N (2018) European Vanilla option pricing model of fractional order without Singular Kernel. Fractal Fract 2(1):1–11

Abu Arqub O (2013) Series solution of fuzzy differential equations under strongly generalized differentiability. J Adv Res Appl Math 5:31–52

Abu Arqub O, El-Ajou A, Bataineh A, Hashim I (2013) A representation of the exact solution of generalized Lane Emden equations using a new analytical method. In: Abstract and applied analysis, p 10

El-Ajou A, Abu Arqub O, Momani S (2015) Approximate analytical solution of the nonlinear fractional KdV-Burgers equation: a new iterative algorithm. J Comput Phys 293:81–95

Alquran M (2014) Analytical solutions of fractional foam drainage equation by residual power series method. Math Sci 8(4):153–160

Hira T, Ghazala A (2017) Residual power series method for solving time-space-fractional Benney-Lin equation arising in falling film problems. J Appl Math Comput 55:683–708

Gulkac V (2010) The homotopy perturbation method for the Black–Scholes equation. J Stat Comput Simul 80:1349–1354

Cen Z, Le A (2011) A robust and accurate finite difference method for a generalized Black–Scholes equation. J Comput Appl Math 235:3728–3733

Company R, Navarro E, Pintos JR, Ponsoda E (2008) Numerical solution of linear and nonlinear Black–Scholes option pricing. Comput Math Appl 56:813–821

Acknowledgements

The first author would like to thank Department of Science and Technology, Govt. of India for giving INSPIRE fellowship (IF170207) to carry out the present work.

Ethics declarations

Conflict of interest

All authors state that they have no conflict of interest.

About this article

Cite this article

Jena, R.M., Chakraverty, S. A new iterative method based solution for fractional Black–Scholes option pricing equations (BSOPE). SN Appl. Sci. 1, 95 (2019). https://doi.org/10.1007/s42452-018-0106-8

DOI: https://doi.org/10.1007/s42452-018-0106-8