Abstract

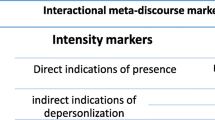

This study validates a measure, the research-abstract writing assessment (RAWA), which rates research abstracts in applied linguistics on two scales: the global move of rhetorical purpose and the local pattern of language use (each scored at levels 0 to 5). The study adopted the embedded design of mixed-methods research. Its quantitative strand was a latent regression model (LRM) that tested how far the RAWA responses of the examinees (30 EFL doctoral students and 30 EFL master's students) can be explained by three factors: examinee-group competence, the difficulty of each level on the two scales, and rater expertise (five raters). Its qualitative strand consisted of interviews on the five raters' perceptions. The LRM results revealed a scale-level difficulty effect: across the two scales, level 1 and level 5 of the global move scale were the easiest and the most difficult, respectively. The expert raters assigned lower scores. They also adopted the advanced subscales (i.e., content elements and brevity) as criteria and monitored their own judgments while rating. The findings reveal the sub-competences of research-abstract writing: the global move sub-competence comprises move and content elements, and the local pattern sub-competence comprises language use and brevity. Pedagogically, EFL graduate students should first master the basic sub-competences of move and language use and then develop those of content elements and brevity.
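The LRM described above treats each RAWA score as the joint outcome of examinee competence, the difficulty of each scale level, and rater severity. The following is a minimal sketch of that logic. It assumes a partial credit model with an additive rater term, in which the log-odds of reaching level k rather than level k-1 is θ - δ_k - ρ. It also assumes a latent regression in which a doctoral/master's indicator shifts the mean of θ. It illustrates the general model family only and is not the author's actual ConQuest specification. The function names, step difficulties, regression coefficients, and severity values are all hypothetical.

```python
import math


def category_probabilities(theta, step_difficulties, rater_severity=0.0):
    """Hypothetical partial credit model with an additive rater-severity facet.

    theta: examinee latent competence (logits)
    step_difficulties: delta_1..delta_m, the difficulty of moving from level k-1 to level k
    rater_severity: rho; a larger value makes every higher level harder to reach
    Returns [P(X = 0), ..., P(X = m)].
    """
    # Cumulative sums of (theta - delta_h - rho); level 0 has an empty sum of 0.
    logits = [0.0]
    for delta in step_difficulties:
        logits.append(logits[-1] + theta - delta - rater_severity)
    # Normalize with a softmax, subtracting the max for numerical stability.
    top = max(logits)
    weights = [math.exp(z - top) for z in logits]
    total = sum(weights)
    return [w / total for w in weights]


def expected_score(theta, step_difficulties, rater_severity=0.0):
    """Model-implied mean score on the 0..m scale."""
    probs = category_probabilities(theta, step_difficulties, rater_severity)
    return sum(k * p for k, p in enumerate(probs))


def latent_mean(is_doctoral, beta0=0.0, beta_doctoral=0.5):
    """Hypothetical latent regression: group membership shifts mean competence."""
    return beta0 + beta_doctoral * (1 if is_doctoral else 0)


# Hypothetical step difficulties for a 0-5 global move scale (NOT the study's estimates),
# ordered so that level 1 is the easiest step and level 5 the hardest.
GLOBAL_MOVE_STEPS = [-2.0, -1.0, 0.0, 1.0, 2.5]

if __name__ == "__main__":
    for is_doctoral in (False, True):
        theta = latent_mean(is_doctoral)
        # Hypothetical severities: 0.0 for a non-expert rater, 0.5 for an expert rater.
        non_expert = expected_score(theta, GLOBAL_MOVE_STEPS, rater_severity=0.0)
        expert = expected_score(theta, GLOBAL_MOVE_STEPS, rater_severity=0.5)
        label = "doctoral" if is_doctoral else "master's"
        print(f"{label}: non-expert rater {non_expert:.2f}, expert rater {expert:.2f}")
```

In this sketch, raising `rater_severity` lowers the expected score for every examinee, which mirrors the abstract's finding that expert raters scored lower. Raising any entry of `GLOBAL_MOVE_STEPS` makes that level harder to reach, which is how a scale-by-level difficulty effect enters the model.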

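Chinese Abstract

This study validates a research-abstract writing assessment that uses two rating scales (global rhetorical moves and local linguistic constructions) to assess research-abstract writing in the discipline of applied linguistics. The study uses the embedded design of mixed-methods research: the results of qualitative rater interviews (five raters) supplement the results of a quantitative latent regression model analysis. The latent regression model tested whether the examinees' (30 master's students and 30 doctoral students) responses on the writing assessment could be explained by three variables: examinee competence, the difficulty of each level of the rating scales, and rater expertise. The model results indicate that the difficulty of each scale level explains examinee responses (e.g., scores of 1 and 5 on the rhetorical-move scale were the responses with the lowest and highest difficulty, respectively). Rater expertise also has an effect: expert raters gave lower scores. Expert raters generally adopted the advanced rating subscales (content elements and brevity) as criteria for self-monitoring during the rating process. These findings reveal the sub-competences of research-abstract writing: the global rhetorical-move competence comprises move use and content elements, and the local linguistic-construction competence comprises language use and brevity. The pedagogical implication is that EFL graduate students should first develop the basic competences of language use and move use, and then develop the competences of content elements and brevity.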

Acknowledgments

This research was supported by the Ministry of Science and Technology, Taiwan, under Grant MOST 103-2410-H-656-003.

Electronic Supplementary Material

ESM 1

(DOCX 21 kb)

About this article

Cite this article

Lin, MC. Validating Research-Abstract Writing Assessment Through Latent Regression Modeling and Rater’s Lenses. English Teaching & Learning 43, 297–315 (2019). https://doi.org/10.1007/s42321-019-00030-5

Keywords

- Validation of the research-abstract writing assessment (RAWA)

- Embedded design of mixed-methods research

- Latent regression modeling (LRM)