Abstract

Study breaks and exam revision weeks are increasingly embedded in learning design on the assumption that students will use this time to catch up with their study or prepare for upcoming assessment tasks. However, there remains a paucity of empirical evidence on the extent to which study breaks, preparation weeks and exam revision weeks affect students’ engagement and academic performance. Applying learning analytics in a computer-based assessment (CBA) setting, this study investigates how study break weeks and assessment preparation weeks affected the odds of passing a module, using a mixed-effect logistic regression on 123,916 undergraduate students in 205 modules over several semesters from 2015 to 2017 at the Open University. Furthermore, we investigated the intertemporal characteristics of student engagement during the preparation weeks for a final assessment in an Introductory Business course over three semesters. A mixed-effect logistic regression was used to model the behavioural engagement of 3385 students in the virtual learning environment (VLE; i.e. click counts) during the assessment preparation weeks. Our findings indicated a positive association between study breaks and the odds of passing a course, while there was no statistically significant effect of the number of assessment preparation and revision weeks. Analysis of behavioural engagement in the VLE suggested that a higher proportion of passing students than failing students remained active during the preparation and exam revision weeks. Compared with the pass group, the fail group also exhibited a stronger pattern of procrastination. This study offers new insights that could help institutional managers and course designers to evaluate the efficacy of study breaks and exam preparation weeks for improving student retention.


1 Introduction

Assessment is a key driver for learning (Boud 2017; Coates 2016). In the last 20 years, a range of computer-supported approaches have been adopted to facilitate Assessment of Learning (AoL) (Boud 2017; Boud and Falchikov 2006; Coates 2016), as well as Assessment for Learning (AfL) (Carless 2007; Carless et al. 2011). Broadly speaking, these approaches have been bundled under the umbrella term computer-based assessment (CBA). CBA can be defined as assessment presented using digital means and submitted electronically. CBA offers many advantages over traditional forms of assessment; in particular, because it is delivered electronically, it generates potentially useful data on student engagement with assessment activities (Nguyen et al. 2017).

In the last two decades, a wide range of CBA approaches have been developed using the affordances of technology and the internet, ranging from adaptive testing and intelligent tutoring (Koedinger et al. 2013; Tempelaar et al. 2009), e-portfolios (Aguiar et al. 2014), multiple-choice testing, peer assessment (Van Zundert et al. 2010) and stealth assessment (Sharples et al. 2015), to CBA tools aiming to improve writing (Whitelock et al. 2015). For example, using an adaptive testing system in mathematics called ALEKS, Tempelaar et al. (2009) showed that novice and so-called step-wise learners, rather than deep learners, benefited most from continuous opportunities to test and develop their mathematics skills via CBA that was automatically tailored to their abilities. Similarly, in a fine-grained log-file study of 16,219 children in 44 countries working on a complex problem-solving task about climate control, Greiff, Wüstenberg, and Avvisati (2015) found substantial differences in problem-solving strategies and performance across countries.

As argued by several researchers (Greiff et al. 2017; Koedinger et al. 2013; Trevors et al. 2016), these “micro-level” CBA studies can provide a rich, integrated understanding of how learners engage with particular learning activities. For example, in a study of 1476 Finnish children completing nine complex problems, Greiff et al. (2016) found an optimal level of effort spent on these tasks in relation to subsequent performance, as well as a negative relation between the frequency of changes in learning strategy and performance. In particular, linking what students do at a fine-grained level with what teachers expect them to do may help to inform how CBA practice can be improved.

At the macro level of CBA practice, a range of studies have recently become available from the Open University UK (OU), the largest distance learning provider in Europe, that highlight a rather diverse and eclectic mix of CBA practices (Nguyen et al. 2017; Rienties and Toetenel 2016; Toetenel and Rienties 2016). For example, Toetenel and Rienties (2016) compared the learning designs of 157 modules at the OU and found that, on average, 21.50% of students’ total workload was allocated to learning activities classified as assessment. Nonetheless, there was substantial variation in how modules used assessment (SD = 14.58%, range 0–78%): some modules had no activities labelled as assessment, while in others the vast majority of activities were labelled as assessment. In a follow-up fine-grained study of 38 modules using CBA, Nguyen et al. (2017) found that up to 69% of the variance in students’ weekly engagement was explained by how teachers designed their respective modules. In particular, assessment activities significantly predicted weekly engagement and the timing of engagement. Nonetheless, follow-up fine-grained analyses of six CBA modules indicated a wide diversity of practice in the number, timing, and types of assessments used (Nguyen et al. 2017).

Although substantial progress has been made in advancing our insights into how CBA directly and indirectly drives students’ behaviour and cognition (Greiff et al. 2016; Trevors et al. 2016), relatively few empirical studies have focussed on how students make “time-investment choices” about when and how to engage with these different types of CBA. With the arrival of learning analytics and fine-grained log data, there is a wealth of opportunities to test and critically examine how and when students use CBAs, and how this relates to their learning processes and outcomes.

Indeed, recent research in the field of learning analytics has found that time is an essential but complex concept, whereby students make a range of conscious and self-regulated decisions about when, where, what and how to study (Kovanovic et al. 2015; Malmberg et al. 2017; Nguyen et al. 2017). For example, our recent research on two implementations of an online environmental management module with two CBAs and a final exam (Nguyen et al. 2018) indicated that many students made conscious decisions not to follow the module schedule, either studying well in advance or catching up. While most students completed particular assignments by the prescribed due date, many did not necessarily stick to the module schedule for other learning activities (e.g. reading, discussing, watching).

Building on a large dataset of 205 modules at the OU that has been extensively mapped regarding CBA approaches, the first main aim of this study is to provide a macro perspective of the CBA approaches used at the OU, and of how these approaches might positively or negatively influence learning outcomes. In particular, we investigate whether so-called “study break weeks” (i.e. weeks in which teachers deliberately included no learning activities so that students could take a breather) and “exam preparation weeks” affect the odds of students passing a module. The second main aim of this study is to conduct a fine-grained log-data study of one large online module that made extensive use of CBA, in order to critically examine how and when learners engaged with its CBA activities.

2 Computer-based assessment, time management and learning analytics

There is a wide body of psychological literature linking people’s time perspectives with how they make common judgements, decisions, and actions (e.g. Carelli et al. 2011; Zimbardo and Boyd 1999). Similarly, in educational research there is substantial interest in how students strategically decide when and where to study (Gelan et al. 2018; Heileman et al. 2015; Kovanovic et al. 2015; Nguyen et al. 2017, 2018; Panadero et al. 2016; Winne 2017). CBA data may provide deep insights into how students learn and solve complex assignments and tasks (Greiff et al. 2015; Tempelaar et al. 2015), and might eventually help researchers to develop a CBA-specific cognitive theory (Greiff et al. 2017; Kirschner et al. 2017). In a critical commentary on a recent special issue on CBA, Greiff et al. (2017, p. 718) argued that “there is a rather urgent need for an integrated and comprehensive theoretical foundation that drives the design and the setup of CBAs and provides guidance for the entire process of developing, employing, interpreting, and making use of computer-delivered assessment instruments”.

In this study, we argue that the impact of CBA designs on students’ learning processes may be better understood, and eventually theoretically grounded, by using learning analytics to link these designs more closely to the digital traces of learners’ actual interactions with CBA activities. As argued by Nguyen et al. (2017), learning analytics research has found that the way in which teachers design tasks and assessments can influence how students engage with CBA tasks and their academic performance, both at a micro level (within one assessment or task; see for example Greiff et al. 2015) and at a macro level (across various assessments within or across modules; see for example Koedinger et al. 2013; Nguyen et al. 2017; Rienties and Toetenel 2016; Toetenel and Rienties 2016).

For example, Agudo-Peregrina et al. (2014) found that interactions with CBA tools, interactions with peers and teachers, and active participation were significant predictors of academic performance in six online and two blended modules. Similarly, in an introductory computer programming course, Brito and de Sá-Soares (2014) found that frequent weekly CBA was one of the most effective ways of setting students on the route to success. In a flipped classroom of Business French, Gelan et al. (2018) used process-mining techniques with 285 students and found that most students organised their self-study sessions in line with the course design. In particular, most students tended to access the various reading materials in preparation for the exam, whereas failing students either started to work very late in the course or dropped out in the first 2 weeks (Gelan et al. 2018).

Weekly CBA may help to speed up the cycle of productive failure (Kapur 2008), or “fail fast to learn sooner” (Brito and de Sá-Soares 2014), because it can provide automated feedback. Indeed, a recent fine-grained study by Tempelaar et al. (2017) found that students with effective metacognitive strategies used worked examples, which provided help on a particular CBA task, at the beginning of their learning process, while students with sub-optimal learning strategies tended to use these examples only at the end. Similar findings were reported by Gelan et al. (2018) and Nguyen et al. (2018): beyond the intensity of engagement, it was primarily the timing and types of engagement that distinguished “successful” from “less successful” students.

2.1 Online learning and self-regulated learning

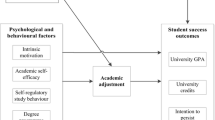

Particularly when students learn in online environments that offer a range of learning activities and choices about when and how to study, including CBA (Nguyen et al. 2017; Trevors et al. 2016), “appropriate” Self-Regulated Learning (SRL) strategies are needed to achieve individual learning goals. Zimmerman (2000) defined self-regulation as “self-generated thoughts, feelings and actions that are planned and cyclically adapted to the attainment of personal learning goals”. Indeed, a vast body of research has consistently found that self-regulation directly and indirectly affects goal setting, motivation, engagement, and academic performance (Trevors et al. 2016; Winne 2017).

For example, in a study of 788 MOOC learners, Littlejohn et al. (2016) found that learners’ motivations and goals substantially influenced how they conceptualised the learning environment and how they engaged with the learning process. Indeed, in a recent meta-review of 12 studies of SRL in online higher education, Broadbent and Poon (2015) found that metacognition, time management, effort regulation and critical thinking were significantly associated with academic achievement. However, the effect sizes were relatively small, with correlations ranging between 0.05 and 0.14, in particular in comparison to face-to-face settings (Broadbent and Poon 2015). These small effects might be explained partly by the complex nature of online learning, and partly by the fact that most of the selected studies did not specifically measure fine-grained log data of what students were doing.

Procrastination is an indicator of poor self-regulation. Academic procrastination can be viewed as leaving academic duties, such as preparing for exams and doing homework, to the last minute (Solomon and Rothblum 1984). A recent meta-analysis of the relationship between procrastination and academic performance reaffirmed the main finding of previous research, namely a negative association between procrastination and academic performance (Kim and Seo 2015). While self-report questionnaires have predominated in the literature (Kim and Seo 2015), recent research on procrastination in blended and online learning environments has started to capture behavioural engagement through log analysis to understand how and when students procrastinate (Cerezo et al. 2017; Goda et al. 2015). For example, a study of learning behaviour types among 441 students in five e-learning courses suggested that 69.16% of the students fitted a procrastination profile. Students exhibiting procrastination patterns performed significantly worse than students with a ‘learning habit’ pattern or a ‘chevron’ pattern (Goda et al. 2015). Another study of 140 undergraduate students in a blended learning setting, which tracked and analysed students’ behaviour in an LMS, confirmed the negative effect of procrastination on academic performance (Cerezo et al. 2017).

2.2 Providing flexibility in online learning

Given that studying online and at a distance is perhaps especially hard in comparison to face-to-face (f2f) education (Broadbent and Poon 2015; Nguyen et al. 2017; Rienties and Toetenel 2016; Simpson 2013; Toetenel and Rienties 2016), many distance learning providers purposefully design some form of flexibility in workload and study breaks to accommodate adult learners, most of whom also have work, family and caring responsibilities. As highlighted by a recent report on designing effective online modules by van Ameijde et al. (2016), providing a consistent and balanced workload throughout an online module, with opportunities to take a “breather” or to catch up, may be essential for online learners. Indeed, fine-grained analyses of weekly workloads in six online CBA modules by Nguyen et al. (2017) indicated that many teachers consciously or subconsciously designed non-study weeks into the module schedule. The same analyses showed that when teachers designed high workloads for a particular week, most students tried to balance this workload by working more intensively before or after that week.

In follow-up work linking predictive analytics data on excellent (grade > 75%), passing (grade > 40%), and failing students with actual engagement data and the respective learning designs, Nguyen et al. (2018) found that most excellent students studied well before the respective study week. In addition, excellent students often revisited previously engaged learning activities, whereas failing students in particular mainly lagged behind the module schedule and were primarily in catch-up mode (Nguyen et al. 2018). Gelan et al. (2018) noted a similar finding: students who successfully passed Business French studied in line with the course schedule.

In particular, when modules do not include study breaks, one obvious assumption would be that for some groups of students the lack of opportunities to catch up might eventually “force” them to stop, as they have fallen too far behind. Therefore, in many OU modules teachers design specific breaks in which no study activities are planned (Cross et al. 2016; Nguyen et al. 2017). In part, these study breaks result from cultural festivities, such as Christmas and Easter, but they are also specifically introduced to help students catch up or to allow them to take a breather before a new part of a module starts. Alternatively, one might hypothesise that for students who are progressing well and are “in the flow” of the module schedule, or who prefer strict deadlines with no opportunities to “relax a bit”, a study break might actually disrupt their flow. It is therefore important to test whether study breaks have a positive or negative effect on students’ engagement and academic performance over time.

In this study, we specifically distinguish study breaks from exam preparation: study breaks can occur at any point during an online module, whereas exam preparation is specifically linked with the final assessment at the end of the module and hence with a concrete learning activity (i.e. the exam). Indeed, Cross et al. (2016) argued that students’ behaviour while preparing and revising for exams is distinct from their engagement at other times during the module. In a study of 281 OU students’ perceptions of assessment practices, and of revision practices in particular, Cross et al. (2016) found that most students self-reported spending 20–30 h revising for the final exam. Students indicated that they benefited most from sample exams and answers, tutor support, and feedback on assignments. However, no correlations were found between (self-reported) time spent revising and module design, satisfaction, or completion of online modules (Cross et al. 2016).

2.3 Research questions

Most of the studies above have conceptualised and tested different variations of CBA in a single-module context. By aligning the designs of a range of modules that use different types and combinations of CBA with fine-grained behavioural data, researchers may obtain valuable insights into how students “react” to the design of CBA in online distance learning settings. Given the complex and flexible nature of online learning, and the perhaps more demanding task of intertwining study with the work and private lives of adult learners (Broadbent and Poon 2015; Simpson 2013), there is an urgent need to understand how online learners choose when and how to work with CBA.

Building on a large dataset of 205 modules at the OU that has been extensively mapped regarding CBA approaches, the first main aim of this study is to provide a macro perspective of the CBA approaches used at the OU. The second main aim is to conduct a fine-grained log-data study of one large online module, hereafter labelled “Introduction to Business”, that made extensive use of CBA, in order to critically examine how students used their preparation weeks before the final report. We address the following two research questions.

RQ1 How do study break weeks and exam preparation weeks influence the odds of passing a module?

RQ2 How do students engage in the VLE during exam revision weeks?

3 Method

3.1 Setting and participants

This study took place at the OU, a distance learning institution with an open-entry policy and the largest university in the UK. As previous research at the OU has found substantial differences between postgraduate and undergraduate learning designs (Li et al. 2017; Rienties and Toetenel 2016), this study included only undergraduate modules that ran between 2015 and 2017. Withdrawn students were excluded because our research question focused on the final academic outcome (pass/fail). A single 10-credit module was also excluded. In total, 205 modules and 123,916 students who completed their module were included in the final analysis.

Our dataset consists of a representative sample across levels of study: level 1 (n = 79), level 2 (n = 67), and level 3 (n = 59), corresponding to first-, second-, and third-year courses. The majority of these modules carried 60 credits (67%), with the remainder carrying 30 credits (33%). These modules related to six broad disciplines (45% Social Sciences, 11% STEM, 25% Business, 10% Law, 8% Arts, and 3% Languages).

In line with OU demographics, there were more female students (61%) than male students (39%). Most students were from the UK (96%) and declared their ethnicity as ‘white’ (85%). Students varied considerably in age: 24% were under 25 years old, 37% were aged 26–35, 22% aged 36–45, 13% aged 46–55, and 5% aged 56 and over. More than half were working full-time (53%), while 21% were working part-time, 7% were looking after the home or family, and 5% were unemployed and looking for a job. There are no formal academic entry requirements for undergraduate study at the OU. In this study, 40% of the students had A levels or equivalent (suggesting that they had two or more years of post-compulsory schooling), 28% had less than A levels (suggesting that they had not progressed beyond compulsory schooling), 24% had higher education qualifications, and 3% had a postgraduate qualification. Overall, 10% of the students had a reported disability.

To address research question 2, trace data (373,189 log activities during EMA revision weeks) were collected on 3385 students who completed the Introduction to Business module over three semesters (2015J, 2016B, 2016J), where J refers to fall semesters and B to spring semesters. This module was selected because it represents a typical learning design of level 1 modules at the OU: it carried 60 credits, lasted 32 weeks, and consisted of five CBAs and a final report. There were two study break weeks during Christmas, one study break week for Easter, and 2–4 exam preparation weeks before the final report. Most assignments required students to write a short essay of 800–1000 words, the fifth assignment required around 2000 words, and the final report was a 3000-word essay. The five assignments together and the final report each counted for 50% of the final mark. To pass the module, students needed a weighted average of at least 40% on the assignments and at least 40% on the final report. On average, 65% of enrolled students finished the module and 60% of enrolled students passed.
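The pass rule for Introduction to Business combines a compensatory component (the weighted assignment average) with a hard threshold on the final report. The minimal sketch below illustrates it under stated assumptions. The per-assignment weights are hypothetical, since the text gives only the 50/50 split between the assignments as a block and the final report. Scores are treated as percentages from 0 to 100. The example shows how a student can obtain an overall mark above 40% and still fail.

```python
from typing import Sequence, Tuple

PASS_THRESHOLD = 40.0  # 40% on the assignment average and 40% on the final report


def weighted_average(scores: Sequence[float], weights: Sequence[float]) -> float:
    """Weighted mean of assignment scores (0-100)."""
    if not scores or len(scores) != len(weights):
        raise ValueError("scores and weights must be non-empty and of equal length")
    return sum(s * w for s, w in zip(scores, weights)) / sum(weights)


def module_outcome(
    tma_scores: Sequence[float],
    tma_weights: Sequence[float],  # hypothetical relative weights of the five assignments
    report_score: float,
) -> Tuple[float, bool]:
    """Return (overall mark, passed) under the 50/50 split and the dual 40% threshold."""
    tma_avg = weighted_average(tma_scores, tma_weights)
    overall = 0.5 * tma_avg + 0.5 * report_score
    passed = tma_avg >= PASS_THRESHOLD and report_score >= PASS_THRESHOLD
    return overall, passed


# Hypothetical student: the longer fifth assignment is given double weight.
mark, passed = module_outcome([60, 55, 70, 65, 50], [1, 1, 1, 1, 2], report_score=35)
print(f"overall mark {mark:.1f}, passed: {passed}")
```

In this made-up case the overall mark is about 46.7%, but the student fails because the final report score is below 40%.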

3.2 Instruments

3.2.1 Module data

Assessment data in this study resulted from a purposeful data collection on assessment strategies in undergraduate law and business modules. The original scope was to explore whether the number of summative assessment points on a module, and whether the module had an exam or an End of Module Assessment (EMA), were related to Tutor Marked Assignment (TMA) submission rates, completion rates, and pass rates on each module. The scope was then broadened to include other assessment-related aspects of module design that might affect student success, such as the timing of summative assessment points, TMA/EMA/exam preparation weeks, and study break weeks. Within the scope of this study, we focused on three measures: the number of study break weeks, and the percentages of TMA revision weeks and of EMA revision weeks relative to the total number of study weeks.

In addition to the CBA data collected for each module, we included as controls the number of credits (as a proxy for total workload, with each credit equal to 10 h of study), the number of registered students, the course level (1 = first year, 2 = second year, 3 = third year), and the type of final assessment (i.e. final report or sit-in exam).
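To make the module-level design measures concrete, the sketch below derives them from a week-by-week schedule. The week labels and the `ModuleDesign` class are hypothetical. We assume the denominator is the total module length in weeks, which is consistent with 4.16 TMA preparation weeks making up about 14% of an average 29-week module. Workload follows the stated convention of 10 study hours per credit.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class ModuleDesign:
    """Hypothetical module description: credits plus one label per week."""
    credits: int
    week_types: List[str]  # labels: "study", "break", "tma_prep", "ema_prep"

    def predictors(self) -> Dict[str, float]:
        counts = Counter(self.week_types)
        total_weeks = len(self.week_types)  # assumed denominator: total module length
        return {
            "break_weeks": counts["break"],
            "pct_tma_revision": 100 * counts["tma_prep"] / total_weeks,
            "pct_ema_revision": 100 * counts["ema_prep"] / total_weeks,
            "workload_hours": self.credits * 10,  # 1 credit = 10 h of study
        }


# A made-up 30-week, 60-credit schedule: 19 study, 3 break, 5 TMA prep, 3 EMA prep weeks.
weeks = ["study"] * 19 + ["break"] * 3 + ["tma_prep"] * 5 + ["ema_prep"] * 3
print(ModuleDesign(credits=60, week_types=weeks).predictors())
```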

3.2.2 Student data

The main outcome variable in this study was whether students passed (or failed) the module. Demographic data on gender, age, ethnicity, and prior education level were also included as controls.

Log counts for the Introduction to Business module were gathered for each type of learning activity from week -3 until week 36 over three semesters. Using log counts on individual learning activities allowed us to explore the nature of learning tasks in finer detail. We distinguished between types of learning content such as study materials, exam materials, forum activities, and other resources. Based on these data, we examined the intensity and temporality of student engagement during exam revision weeks. In particular, we considered the following aspects:

- Type of activities:
  - Study materials from previous weeks
  - Exam materials
  - Forums (i.e. online tutorials, student online café, forums)
  - Other resources (library, additional resources)
- Duration before exam deadline: the difference in the number of days between the exam deadline date and the timestamp of each study activity
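The duration variable can be computed directly from event timestamps. The sketch below assumes durations are counted in calendar days, so an activity late in the evening ten days before a midday deadline still counts as 10 days. It also maps a few hypothetical raw resource labels onto the four activity categories listed above. The real VLE resource names are not given in the text.

```python
from datetime import datetime
from typing import Dict, Iterable, List, Tuple

# Hypothetical raw resource labels mapped to the study's four activity categories.
CATEGORY_OF: Dict[str, str] = {
    "week_page": "study materials",
    "ema_guide": "exam materials",
    "tutorial_forum": "forums",
    "cafe_forum": "forums",
    "library_link": "other resources",
}


def days_before_deadline(event_time: datetime, deadline: datetime) -> int:
    """Whole calendar days between an activity's timestamp and the exam deadline."""
    return (deadline.date() - event_time.date()).days


def annotate(
    events: Iterable[Tuple[str, str, datetime]], deadline: datetime
) -> List[dict]:
    """Attach a category and days-before-deadline to (student, resource, time) events."""
    return [
        {
            "student": student,
            # Assumption: unmapped labels fall into "other resources".
            "category": CATEGORY_OF.get(resource, "other resources"),
            "days_before": days_before_deadline(ts, deadline),
        }
        for student, resource, ts in events
    ]


deadline = datetime(2016, 5, 12, 12, 0)  # made-up submission deadline
events = [("s1", "ema_guide", datetime(2016, 5, 2, 23, 30)),
          ("s2", "cafe_forum", datetime(2016, 5, 12, 9, 15))]
for row in annotate(events, deadline):
    print(row)
```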

3.3 Data analysis

All data from the 205 modules were anonymised in line with the university’s ethics guidelines (Footnote 1). Furthermore, trace data (log counts) on 3385 students who completed Introduction to Business, from week -3 to week 36, were aggregated by type of learning material (study materials, exam materials, forums, and other resources). All personal identifiers were removed and the results were anonymised following the OU’s ethical guidelines for learning analytics (Footnote 2).

To answer RQ1, we fitted a mixed-effect logistic regression model with pass/fail as the outcome, and students’ demographics and module design characteristics as predictors. Given the hierarchical nature of the dataset (students nested within modules), a mixed-effect model allowed us to take into account random effects across modules (Bates et al. 2015). We built up the models gradually (single level, random intercept, adding level 1 predictors, adding level 2 predictors, and random slope model). Model fit was assessed using a combination of log-likelihood ratio tests, AIC, and BIC, as recommended by Goldstein (2011). We settled on a random slope model, which allows the coefficients of study breaks, TMA revision, and EMA revision to vary across modules. Graphical diagnostics of the model residuals were examined to check that the assumptions necessary for valid inference were upheld (e.g. homogeneity of residual variances, linearity, absence of multi-collinearity, and normality). Odds ratios with 95% confidence intervals are reported.
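Because the model is fitted on the log-odds scale, the reported odds ratios are exponentiated fixed-effect coefficients. One common way to obtain a 95% interval is the Wald interval, exp(β ± 1.96·SE). lme4's `confint()` also offers profile-likelihood and bootstrap intervals, and the text does not say which method was used. The coefficient and standard error below are made-up values, not estimates from Table 2.

```python
import math
from statistics import NormalDist
from typing import Tuple


def odds_ratio_ci(beta: float, se: float, level: float = 0.95) -> Tuple[float, float, float]:
    """Odds ratio and Wald confidence interval from a log-odds coefficient and its SE."""
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% interval
    return math.exp(beta), math.exp(beta - z * se), math.exp(beta + z * se)


# Hypothetical fixed-effect estimate for one predictor.
or_, lo, hi = odds_ratio_ci(beta=0.25, se=0.05)
print(f"OR = {or_:.2f}, 95% CI {lo:.2f}-{hi:.2f}")
```

Wald intervals are symmetric on the log-odds scale but not on the odds-ratio scale. This is why reported intervals such as 1.14–1.43 sit asymmetrically around their point estimate.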

To answer RQ2, we fitted a mixed-effect logistic regression model with the odds of being engaged as the outcome, and type of learning activity, duration (number of days) until the exam submission deadline, break weeks, and EMA revision weeks as predictors. We treated log counts as a binary rather than a count variable because log counts are a poor proxy for the intensity of engagement in the VLE. For example, two students can have the same number of log counts but different durations between logs (e.g. student A spends 1 h generating 20 log counts, while student B spends 20 min generating 20 log counts). At best, therefore, log counts should be used only as a proxy for whether and when students engage in the VLE, not for the intensity of their engagement. A mixed-effect model was chosen because of the nested structure of the dataset (log activities were nested within students, who were nested within semesters).
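The binary engagement outcome can be built by expanding log counts into a full student × day × category grid and recording whether each cell has at least one click. The sketch below is one plausible construction under that assumption, since the text does not specify the exact unit of observation. A student with 20 clicks and a student with a single click on the same day receive the same value. This is the intensity information the analysis deliberately discards.

```python
from itertools import product
from typing import Dict, List, Sequence, Tuple

Key = Tuple[str, int, str]  # (student, day, activity category)


def engagement_grid(
    log_counts: Dict[Key, int],
    students: Sequence[str],
    days: Sequence[int],
    categories: Sequence[str],
) -> List[Tuple[str, int, str, int]]:
    """Return (student, day, category, engaged) rows; engaged = 1 if at least one click."""
    active = {key for key, n in log_counts.items() if n > 0}
    return [
        (s, d, c, int((s, d, c) in active))
        for s, d, c in product(students, days, categories)
    ]


# Made-up counts: A clicks 20 times and B once on day 10; B's day-9 entry is zero.
counts = {("A", 10, "forums"): 20, ("B", 10, "forums"): 1, ("B", 9, "forums"): 0}
for row in engagement_grid(counts, ["A", "B"], [9, 10], ["forums"]):
    print(row)
```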

All tests were carried out in RStudio (v1.1.423) (RC Team 2016). The mixed-effect logistic models were fitted using the glmer function in the lme4 package. Given the large sample sizes for both RQ1 and RQ2, we adopted a more conservative significance threshold of 0.01 instead of 0.05 to reduce the rate of detecting significant effects due to random chance in a large dataset (Lin et al. 2013).

4 Results

4.1 How do study break weeks and exam preparation weeks influence the odds of passing a module?

The module length was on average 29 weeks, ranging from 21 to 35 weeks (Table 1). Most modules starting in the fall semester had three study break weeks due to cultural festivities, namely Christmas (2 weeks) and Easter (1 week), while modules starting in the spring semester often had two study break weeks (Easter and summer). As is common practice at the OU, there was one preparation week for each TMA. Modules had on average 4.16 TMA preparation weeks (SD = 2.66), accounting on average for 14% of the total module length. Modules had on average around three exam preparation weeks (SD = 1.49).

Firstly, students’ demographics significantly influenced the odds of passing. Compared with White students, students from Asian, Black, and Mixed ethnic backgrounds had lower odds of passing a course, with odds ratios of 0.72, 0.51, and 0.71, respectively (all p < 0.001) (Table 2). Prior educational level also had a strong influence on the odds of passing a module. Compared with students with A levels or equivalent, students with no formal qualifications and those with less than A levels had 50% and 33% lower odds of passing, respectively (both p < 0.001). There was no significant difference between students with A levels and students with a higher education qualification. Students with a postgraduate qualification had on average 44% higher odds of passing than students with A levels (p < 0.001). Male students had lower odds of passing a module than female students (OR = 0.87, p < 0.001). Age was positively related to passing: older students had higher odds of passing a module.

Secondly, module design also significantly influenced the odds of passing. Students had 42% higher odds of passing a 30-credit module than a 60-credit module (OR = 1.42, p < 0.001). Semester had no effect on the odds of passing. Students had 22% lower odds of passing a module with a final exam than one with a final report. Students also had higher odds of passing level 2 and level 3 modules than level 1 modules. This is a common finding in the educational literature, in particular in distance learning: once students have mastered the skills to study at a distance during level 1 modules, they can often successfully master subsequent modules (Rienties et al. 2017; Simpson 2013). Students who fail to adjust to the qualification or institution typically drop out or re-register for different modules (Heileman et al. 2015). In particular, when students are close to completing their undergraduate degree, one would indeed expect submission and pass rates to be at their highest.

Thirdly, the number of break weeks was positively associated with the odds of passing. Each additional study break week was associated with a 28% increase in the odds of passing (OR = 1.28, 95% CI 1.14–1.43) (Table 2). The percentage of EMA preparation weeks appeared to be negatively associated with the odds of passing (p = 0.016), but this p value is above our 0.01 significance threshold. We advise against any conclusive interpretation, as the 95% CI was rather wide (0.07–0.76) and the p value could be biased by our large sample size. The percentage of TMA preparation weeks also had no significant effect on the odds of passing. Finally, there was large variation in the odds of passing across modules (variance = 0.52, SD = 0.72).
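Odds ratios are easy to misread as changes in probability. The sketch below converts an odds ratio into a percentage change in odds and, for a chosen baseline pass probability, into the implied pass probability. The 60% baseline is purely illustrative. In a mixed model, these are conditional (module-specific) effects with the other predictors held fixed.

```python
def pct_change_in_odds(odds_ratio: float) -> float:
    """E.g. OR = 1.28 -> +28% odds; OR = 0.87 -> -13% odds."""
    return 100 * (odds_ratio - 1)


def apply_odds_ratio(baseline_prob: float, odds_ratio: float) -> float:
    """Probability implied by multiplying the baseline odds by an odds ratio."""
    odds = baseline_prob / (1 - baseline_prob) * odds_ratio
    return odds / (1 + odds)


baseline = 0.60  # hypothetical baseline pass probability
for weeks in (1, 2):
    orw = 1.28 ** weeks  # effects compound multiplicatively on the odds scale
    print(weeks, round(pct_change_in_odds(orw), 1), round(apply_odds_ratio(baseline, orw), 3))
```

With a 60% baseline, one extra break week implies a pass probability of roughly 66%, not 60% × 1.28 ≈ 77%. A 28% increase in odds is therefore a much smaller change in probability.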

To sum up, regarding Research Question 1, our results showed a significant positive effect of study break weeks on the odds of passing a module, while the effects of TMA and EMA preparation weeks were either not significant or not robust. In the next step, we explore in more detail the intertemporal characteristics of how students prepared for their assignments and exams in our Introductory Business module.

4.2 How do students engage in the VLE during exam revision weeks?

To answer RQ2, we analysed trace data from 3385 students in the Introductory Business module from week -4 to week 36 over three different semesters. Figure 1 illustrates the percentage of active students in each group (pass and fail) throughout the module. At a glance, one can observe that a higher proportion of students were active in the pass group than in the fail group. There were six peaks of engagement in both groups, which corresponded to the five TMAs in weeks 4, 8, 12, 16 and 24, and the final EMA in week 31 for Spring 2016 and week 32 for Fall 2015 and Fall 2016. A closer look at engagement during the preparation weeks for the EMA (weeks 28–32), which in this module was a final report, showed that only a small proportion of students who failed remained active on the VLE, compared to the pass group. In other words, compared to the pass group, not only was there less engagement in the fail group, but the proportion of active students in the fail group also decreased drastically over time. Nevertheless, the number of active students in each group increased as the deadline approached, with a surge in VLE activity during the last 2 weeks (31–32) in both groups.
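
A weekly percentage of active students, as plotted in Figure 1, can be computed from click logs roughly as follows. This is a sketch under stated assumptions: the record schema `(student_id, week, clicks)` is hypothetical, and "active" is taken to mean at least one VLE click in that week, which may differ from the study’s exact definition:

```python
from collections import defaultdict

def weekly_active_share(click_log, outcomes):
    """Percentage of each outcome group with at least one VLE click, per week.

    click_log: iterable of (student_id, week, clicks) records (hypothetical schema).
    outcomes: dict mapping student_id -> "pass" or "fail".
    The denominator is every student in the group, so students with no clicks
    in a given week count as inactive rather than being dropped. Weeks in which
    nobody in a group clicked are absent from the result (i.e. 0%).
    """
    group_size = defaultdict(int)
    for group in outcomes.values():
        group_size[group] += 1

    active = defaultdict(set)  # (group, week) -> ids of students active that week
    for student_id, week, clicks in click_log:
        if clicks > 0 and student_id in outcomes:
            active[(outcomes[student_id], week)].add(student_id)

    return {
        (group, week): 100.0 * len(ids) / group_size[group]
        for (group, week), ids in active.items()
    }

# Toy data, purely illustrative.
outcomes = {"s1": "pass", "s2": "pass", "s3": "fail", "s4": "fail"}
log = [("s1", 30, 12), ("s2", 30, 3), ("s3", 30, 0), ("s4", 31, 5)]
shares = weekly_active_share(log, outcomes)
print(shares)  # → {('pass', 30): 100.0, ('fail', 31): 50.0}
```

Keeping the full group size in the denominator is the key design choice: dividing by the number of students who clicked at all would hide exactly the drop-off in the fail group described above.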

After visually exploring how different groups of students made use of their exam revision weeks, we fitted a mixed-effect logistic regression model to predict the odds of being active in the VLE (Table 3). As expected, the number of days until the deadline had a negative relationship with the odds of being active in the VLE: for each day closer to the submission deadline, the odds of a student being active increased by 5%. During the exam revision weeks, the pass group had on average 64% higher odds of being active than the fail group. Compared to forum activities, students had 12% higher odds of engaging with EMA materials, whereas they had lower odds of engaging with TMA materials, study materials and other materials.
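
Because the model works on the log-odds scale, the 5% per-day and 64% pass-versus-fail effects multiply the odds rather than add to the probability, and if the effect of days is linear on the log-odds scale, the per-day effect compounds over several days. The sketch below illustrates this with a hypothetical 20% baseline probability of being active on a given day; that baseline is an assumption, not a value from the study:

```python
def apply_odds_ratio(baseline_prob, odds_ratio, steps=1):
    """Probability after multiplying the baseline odds by odds_ratio, `steps` times."""
    odds = baseline_prob / (1.0 - baseline_prob)
    new_odds = odds * odds_ratio ** steps
    return new_odds / (1.0 + new_odds)

# Hypothetical baseline: a 20% chance of being active on a given day.
p0 = 0.20

# Seven days closer to the deadline, at an odds ratio of 1.05 per day.
print(round(apply_odds_ratio(p0, 1.05, steps=7), 3))

# Pass group versus fail group, at an odds ratio of 1.64.
print(round(apply_odds_ratio(p0, 1.64), 3))
```

With this baseline, a 1.05 odds ratio compounded over a week raises the probability of being active from 0.20 to roughly 0.26, and an odds ratio of 1.64 raises it to roughly 0.29. The same odds ratios would translate into different probability changes at other baselines.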

5 Discussion

In the last two decades, a wide range of computer-based assessment (CBA) approaches have been developed using the affordances of technology and the internet. A unique contribution of our study is that we unpacked, on a large scale, the effect of study break and exam revision weeks on the odds of passing a module, across 205 modules and 123,916 students. With the arrival of learning analytics and fine-grained log data, there is a wealth of opportunities to explore and critically examine how and when students make use of their exam revision weeks. Our analysis of the log activities of 3385 students over three semesters during their exam preparation weeks revealed significantly different patterns of engagement that differentiated pass from fail students.

Our first finding indicated that study break weeks had a positive impact on academic outcomes. On average, each additional study break week was associated with a 1.28-fold increase in the odds of passing. In other words, by providing explicit and implicit opportunities for students to take a breather and recharge their batteries, or to catch up on missed learning activities, students in modules with more study break weeks had higher odds of passing. In contrast to previous research (Cross et al. 2016), we did not find specific empirical support that either TMA or EMA preparation weeks positively influenced whether students passed a module. One possible explanation for this difference might be the relatively small sample size in the study of Cross et al. (2016), as well as the self-reported nature of students’ responses about exam preparation weeks. Using a much larger and more diverse dataset from a range of disciplines, we found a small but positive effect of study break weeks on academic outcomes, while the number of exam preparation weeks did not appear to have an impact on learning outcomes.

While students’ demographics and other module design characteristics were not the focus of this study, it is worth noting that there was no significant difference in the odds of passing between semesters. This result challenged previous findings at the OU, which consistently showed that, for the same module, the Spring semester (B) version had a lower pass rate than the Fall semester (J) version. One possible explanation could be that modules starting in the Spring semester systematically had fewer study break weeks than modules starting in the Fall semester, so that once study break weeks were accounted for in the model, the semester difference disappeared.

A closer look at the behavioural engagement patterns of 3385 students on the VLE suggested that the proportion of active students was higher in the pass group than in the fail group. The proportion of active students in the fail group decreased drastically over time compared to the pass group. In other words, most students in the fail group had already stopped engaging on the VLE toward the end of the course, and only a small proportion were active during the exam preparation weeks. This finding has an important implication for institutional management and module designers. One of the common justifications for introducing exam preparation weeks is to support students who are falling behind by giving them more time to prepare. This assumes that students who are falling behind will use these revision weeks to study. However, our results implied that students who were falling behind had often already stopped engaging by the time the exam preparation weeks began. Therefore, a potential explanation for why the introduction of exam preparation weeks did not make a difference could be that high-performing students remained engaged until the end, while at-risk students had already stopped engaging.

Our results also confirmed previous findings in the academic procrastination literature. The number of active students in both the pass and fail groups increased as the deadline approached. However, the number of active students in the pass group increased faster than in the fail group as the deadline approached.

6 Conclusion and limitations

This study investigated the effect of study break weeks and exam preparation weeks on the odds of passing a module using data on 123,916 students in 205 modules from 2015 to 2017. Our results indicated a positive effect of study break weeks, while there was no clear relationship between EMA preparation weeks and the odds of passing a module. In addition, fine-grained log analysis of 3385 students in a level 1 Business module over three semesters showed significant differences in both the intensity and timing of behavioural engagement on the VLE during the exam revision weeks. Students who failed the module were more likely to have stopped engaging by the time of the exam preparation weeks. Among students who remained active, the fail group exhibited more inconsistent patterns of revising study materials and procrastinated more than the pass group.

There are some limitations to this study. Firstly, the outcome variable was simplified as pass or fail; a continuous outcome variable could be used to model both linear and non-linear effects of predictors. Furthermore, we excluded students who withdrew before the EMA. Secondly, while click counts can inform us about the timing of engagement, they are not a suitable proxy for the intensity of engagement. We recommend time-on-task as an alternative measure of the intensity of engagement. Finally, while most modules at the OU are delivered online, trace data cannot capture offline behaviours.
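
Time-on-task is usually estimated from the same timestamped logs, and Kovanovic et al. (2015) show that the result depends heavily on the estimation heuristic chosen. The sketch below shows one simple heuristic, offered as an illustration rather than a recommendation: sum the gaps between consecutive clicks and discard any gap longer than a timeout. The 30-minute timeout and the example timestamps are assumptions:

```python
from datetime import datetime, timedelta

def time_on_task(timestamps, timeout=timedelta(minutes=30)):
    """Estimate time-on-task from one student's click timestamps.

    Sums the gaps between consecutive clicks; any gap longer than `timeout`
    is treated as a break between sessions and contributes nothing.
    The default 30-minute cutoff is an illustrative assumption. The last click
    of each session adds no time, so this heuristic tends to underestimate.
    """
    ordered = sorted(timestamps)
    total = timedelta(0)
    for previous, current in zip(ordered, ordered[1:]):
        gap = current - previous
        if gap <= timeout:
            total += gap
    return total

# Hypothetical clicks for one student on one day.
clicks = [
    datetime(2016, 5, 2, 10, 0),
    datetime(2016, 5, 2, 10, 5),
    datetime(2016, 5, 2, 10, 20),
    datetime(2016, 5, 2, 14, 0),   # long gap: treated as a new session
    datetime(2016, 5, 2, 14, 10),
]
print(time_on_task(clicks))  # → 0:30:00
```

Raising the timeout to four hours would count the 3 h 40 min gap as study time and more than octuple the estimate, which illustrates why the cutoff needs justification in any analysis that uses it.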

References

Agudo-Peregrina ÁF, Iglesias-Pradas S, Conde-González MÁ, Hernández-García Á (2014) Can we predict success from log data in VLEs? Classification of interactions for learning analytics and their relation with performance in VLE-supported F2F and online learning. Comput Hum Behav 31:542–550

Aguiar E, Chawla NV, Brockman J, Ambrose GA, Goodrich V (2014) Engagement vs performance: using electronic portfolios to predict first semester engineering student retention. In: Proceedings of the fourth international conference on learning analytics and knowledge, Indianapolis, Indiana

Bates D, Mächler M, Bolker B, Walker S (2015) Fitting linear mixed-effects models using lme4. J Stat Softw 67(1):1–48

Boud D (2017) Standards-based assessment for an era of increasing transparency. In: Carless D, Bridges S, Chan C, Glofcheski R (eds) Scaling up assessment for learning in higher education. The enabling power of assessment, vol 5. Springer, Singapore

Boud D, Falchikov N (2006) Aligning assessment with long-term learning. Assess Eval High Educ 31(4):399–413

Brito MA, de Sá-Soares F (2014) Assessment frequency in introductory computer programming disciplines. Comput Hum Behav 30:623–628

Broadbent J, Poon WL (2015) Self-regulated learning strategies and academic achievement in online higher education learning environments: a systematic review. Internet High Educ 27:1–13

Carelli MG, Wiberg B, Wiberg M (2011) Development and construct validation of the Swedish Zimbardo time perspective inventory. Eur J Psychol Assess 27(4):220–227

Carless D (2007) Conceptualizing pre-emptive formative assessment. Assess Educ Princ Policy Pract 14(2):171–184

Carless D, Salter D, Yang M, Lam J (2011) Developing sustainable feedback practices. Stud High Educ 36(4):395–407

Cerezo R, Esteban M, Sánchez-Santillán M, Núñez JC (2017) Procrastinating behavior in computer-based learning environments to predict performance: a case study in Moodle. Front Psychol 8:1403

Coates H (2016) Assessing student learning outcomes internationally: insights and frontiers. Assess Eval High Educ 41(5):662–676

Cross S, Whitelock D, Mittelmeier J (2016) Does the quality and quantity of exam revision impact on student satisfaction and performance in the exam itself?: Perspectives from undergraduate distance learners. Paper presented at the 8th international conference on education and new learning technologies (EDULEARN16), Barcelona, Spain. http://oro.open.ac.uk/46937/. Accessed 1 July 2018

Gelan A, Fastré G, Verjans M, Martin N, Janssenswillen G, Creemers M et al (2018) Affordances and limitations of learning analytics for computer-assisted language learning: a case study of the VITAL project. Comput Assist Lang Learn 31(3):294–319. https://doi.org/10.1080/09588221.2017.1418382

Goda Y, Yamada M, Kato H, Matsuda T, Saito Y, Miyagawa H (2015) Procrastination and other learning behavioral types in e-learning and their relationship with learning outcomes. Learn Individ Diff 37:72–80

Goldstein H (2011) Multilevel statistical models, vol 922. John Wiley, Hoboken

Greiff S, Wüstenberg S, Avvisati F (2015) Computer-generated log-file analyses as a window into students’ minds? A showcase study based on the PISA 2012 assessment of problem solving. Comput Educ 91:92–105

Greiff S, Niepel C, Scherer R, Martin R (2016) Understanding students’ performance in a computer-based assessment of complex problem solving: an analysis of behavioral data from computer-generated log files. Comput Hum Behav 61:36–46

Greiff S, Scherer R, Kirschner PA (2017) Some critical reflections on the special issue: current innovations in computer-based assessments. Comput Hum Behav 76:715–718

Heileman GL, Babbitt TH, Abdallah CT (2015) Visualizing student flows: busting myths about student movement and success. Change Mag High Learn 47(3):30–39

Kapur M (2008) Productive failure. Cognit Instr 26(3):379–424

Kim KR, Seo EH (2015) The relationship between procrastination and academic performance: a meta-analysis. Personal Individ Differ 82:26–33

Kirschner PA, Park B, Malone S, Jarodzka H (2017) Toward a cognitive theory of multimedia assessment (CTMMA). In: Spector MJ, Lockee BB, Childress MD (eds) Learning, design, and technology: an international compendium of theory, research, practice, and policy. Springer, Cham, pp 1–23

Koedinger K, Booth JL, Klahr D (2013) Instructional complexity and the science to constrain it. Science 342(6161):935–937

Kovanovic V, Gasevic D, Dawson S, Joksimovic S, Baker RS, Hatala M (2015) Penetrating the black box of time-on-task estimation. In: Proceedings of the fifth international conference on learning analytics and knowledge, ACM, pp 184–193

Li N, Marsh V, Rienties B, Whitelock D (2017) Online learning experiences of new versus continuing learners: a large scale replication study. Assess Eval High Educ 42(4):657–672

Lin M, Lucas HC Jr, Shmueli G (2013) Research commentary—too big to fail: large samples and the p value problem. Inf Syst Res 24(4):906–917

Littlejohn A, Hood N, Milligan C, Mustain P (2016) Learning in MOOCs: motivations and self-regulated learning in MOOCs. Internet High Educ 29:40–48

Malmberg J, Järvelä S, Järvenoja H (2017) Capturing temporal and sequential patterns of self-, co-, and socially shared regulation in the context of collaborative learning. Contemp Educ Psychol 49:160–174

Nguyen Q, Rienties B, Toetenel L, Ferguson R, Whitelock D (2017) Examining the designs of computer-based assessment and its impact on student engagement, satisfaction, and pass rates. Comput Hum Behav 76:703–714

Nguyen Q, Huptych M, Rienties B (2018) Linking students’ timing of engagement to learning design and academic performance. In: Proceedings of eighth international learning analytics and knowledge conference, pp 141–150

Panadero E, Klug J, Järvelä S (2016) Third wave of measurement in the self-regulated learning field: when measurement and intervention come hand in hand. Scand J Educ Res 60(6):723–735

Rienties B, Toetenel L (2016) The impact of learning design on student behaviour, satisfaction and performance: a cross-institutional comparison across 151 modules. Comput Hum Behav 60:333–341

Rienties B, Rogaten J, Nguyen Q, Edwards C, Gaved M, Holt D et al (2017) Scholarly insight spring 2017: a data wrangler perspective. http://oro.open.ac.uk/49648/7/49648_Scholarly%20insight%20Spring%202017_short.pdf. Accessed 1 July 2018

Sharples M, Adams A, Alozie N, Ferguson R, FitzGerald E, Gaved M et al (2015) Innovating pedagogy 2015. Open University, Milton Keynes. http://proxima.iet.open.ac.uk/public/innovating_pedagogy_2015.pdf. Accessed 1 July 2018

Simpson O (2013) Supporting students for success in online and distance education. Routledge, New York

Solomon LJ, Rothblum ED (1984) Academic procrastination: frequency and cognitive-behavioral correlates. J Couns Psychol 31(4):503

R Core Team (2016) R: a language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. https://www.R-project.org/. Accessed 1 July 2018

Tempelaar DT, Rienties B, Giesbers B (2009) Who profits most from blended learning? Ind High Educ 23(4):285–292

Tempelaar DT, Rienties B, Giesbers B (2015) In search for the most informative data for feedback generation: learning analytics in a data-rich context. Comput Hum Behav 47:157–167

Tempelaar DT, Rienties B, Nguyen Q (2017) Towards actionable learning analytics using dispositions. IEEE Trans Learn Technol 10(1):6–16

Toetenel L, Rienties B (2016) Analysing 157 learning designs using learning analytic approaches as a means to evaluate the impact of pedagogical decision-making. Br J Edu Technol 47(5):981–992

Trevors G, Feyzi-Behnagh R, Azevedo R, Bouchet F (2016) Self-regulated learning processes vary as a function of epistemic beliefs and contexts: mixed method evidence from eye tracking and concurrent and retrospective reports. Learn Instr 42:31–46

van Ameijde J, Weller M, Cross S (2016) Designing for student retention: the ICEBERG model and key design tips. Open University, Milton Keynes. http://www.open.ac.uk/iet/learning-design/sites/www.open.ac.uk.iet.learning-design/files/files/ecms/web-content/2016-QE-student-retention.pdf. Accessed 1 July 2018

Van Zundert M, Sluijsmans D, van Merriënboer J (2010) Effective peer assessment processes: research findings and future directions. Learn Instr 20(4):270–279

Whitelock D, Twiner A, Richardson JTE, Field D, Pulman S (2015) OpenEssayist: a supply and demand learning analytics tool for drafting academic essays. In: Proceedings of the fifth international conference on learning analytics and knowledge, Poughkeepsie, New York

Winne PH (2017) Leveraging big data to help each learner upgrade learning and accelerate learning science. Teachers College Record 119(13):1–24

Zimbardo PG, Boyd JN (1999) Putting time in perspective: a valid, reliable individual-differences metric. J Pers Soc Psychol 77:1271–1288

Zimmerman BJ (2000) Attaining self-regulation: a social cognitive perspective. In: Handbook of self-regulation. Elsevier, New York, pp 13–39

Ethics declarations

Conflict of interest

All authors declare that they have no conflict of interest.

Additional information

Communicated by Ronny Scherer and Marie Wiberg.

Rights and permissions

This article is published under an open access license.

About this article

Cite this article

Nguyen, Q., Thorne, S. & Rienties, B. How do students engage with computer-based assessments: impact of study breaks on intertemporal engagement and pass rates. Behaviormetrika 45, 597–614 (2018). https://doi.org/10.1007/s41237-018-0060-1
