Abstract

Recently, Gimbutas et al. (J Fluid Mech, 2015. https://doi.org/10.1017/jfm.2015.302) derived an elegant representation for the Green’s functions of Stokes flow in a half-space. We present a fast summation method for sums involving these half-space Green’s functions (stokeslets, stresslets and rotlets) that consolidates and builds on the work by Klinteberg et al. (Res Math Sci 4(1):1, 2017. https://doi.org/10.1186/s40687-016-0092-7) for the corresponding free-space Green’s functions. The fast method is based on two main ingredients: the Ewald decomposition and the subsequent use of FFTs. The Ewald decomposition recasts the sum as a sum of two exponentially decaying series, one in real space (short-range interactions) and one in Fourier space (long-range interactions), with the convergence of each series controlled by a common parameter. The evaluation of short-range interactions is accelerated by restricting computations to neighbours within a specified distance, while the use of FFTs accelerates the computations in Fourier space, thereby accelerating the overall sum. We demonstrate that while the method incurs extra costs for the half-space in comparison with the free-space evaluation, greater computational savings are also achieved when compared to the respective direct sums.


1 Introduction

The determination of the motion of particles in bounded or unbounded flows is a central problem in microhydrodynamics. For a large class of industrial processes like particle filtration, sedimentation or aggregation, and deposition of pulp fibres in paper manufacturing, the fluid inertia is negligible and the governing equations are well approximated by the Stokes equations [13].

The system of Stokes equations is linear and can be reformulated as an integral equation. In a boundary integral method, once the integrals are discretized, discrete sums with fundamental solutions of Stokes flow remain. Typically, any exterior solid boundaries or interfaces between different fluids are discretized. Periodicity in one or more directions, however, is usually built into the definition of the fundamental solution, leading to an (infinite) summation over periodic images in the discrete sums. Thus one can speak of 1-periodic, 2-periodic, 3-periodic or free-space problems, indicating the periodicity built into the evaluation of the Stokes potentials. The finite free-space sums for evaluating Stokes potentials are of the form (4), or some variant thereof, i.e., each summand is a convolution of the Green’s function with a source term.

For a simple geometry like a flat plane in unbounded space, it is also possible to avoid explicit discretization of this plane, and instead modify the evaluation of the Stokes potentials to achieve a no-slip condition. This requires the introduction of sources at image locations reflected in the wall, as well as correction terms. Such an explicit representation was first derived by Blake [4] and later in a more elegant form by Gimbutas et al. [11]. The fast evaluation of these sums will be the focus of this paper, and we start by giving some background to the problem.

If the Green’s function is the harmonic kernel and the source term a scalar, the sum corresponds to the formula for the Coulomb potential of a system of point charges. Interest in the fast evaluation of such sums in fact originated with this problem: Ewald’s 1921 investigation [7] of the 3-periodic case introduced what is now known as the Ewald summation technique. Hasimoto [12] then considered the 3-periodic sum of stokeslets. In these decompositions, specific choices are made to turn a conditionally convergent sum into two rapidly converging sums, one in real space and one in Fourier space. The computational complexity, however, remains quadratic in the number of points. The survey by Deserno and Holm [5] traces the development of fast methods based on FFTs for accelerating the Fourier-space sum in the context of electrostatics and molecular dynamics. An early method called Smooth Particle Mesh Ewald (SPME) [6] was used by Saintillan et al. [20] for fast evaluation of periodic stokeslet sums. Later, Tornberg et al. developed a Spectral Ewald (SE) method for the 3-periodic sum of stokeslets [15], stresslets [2] and rotlets [1] that is spectrally accurate and, unlike traditional Particle Mesh Ewald approaches, recovers the exponentially fast convergence of the Ewald sums.

The SE method is best suited for the 3-periodic case. For every direction that is not periodic, oversampling of the FFTs becomes necessary to compute the aperiodic convolution, which increases the computational cost. The work in [16] illustrates the use of the SE method for a 2-periodic sum of stokeslets, while in [21] the SE method is adapted to 1-periodic sums in the context of electrostatics. The free-space sum of stokeslets (no periodicity) is the most challenging case for the SE method; it was solved recently by Klinteberg et al. [3] by combining two ideas. The first is the free-space solution of the harmonic and biharmonic equations by Vico et al. [23], which amounts to convolving the harmonic/biharmonic (radial) kernels with source terms by FFTs on a uniform grid. The second is that the stokeslet, stresslet and rotlet kernels, though not radial, can be expressed as linear combinations of differential operators applied to the harmonic or biharmonic kernels.

A popular method ideally suited for free-space problems is the Fast Multipole Method (FMM) [9]. In contrast to the SE method, it is best suited for problems with no periodicity. The FMM has been used successfully for harmonic and biharmonic kernels and stokeslets [8, 10, 22]. However, the SE method still compares favourably with FMM for free-space [3]. While this comparison was based on a uniform distribution of source points, for increasingly non-uniform distribution of sources, the adaptivity of the FMM will eventually come into play and be a decisive advantage. Irrespective of this observation, one of the merits of the SE method is its versatility, having been shown to work for 3-periodic, 2-periodic and free-space Stokes flow problems.

In many studies of sedimentation, it is natural to consider a half-space (\(\mathbb {R}^2 \times \mathbb {R^+}\)) domain bounded by a plane wall at the bottom. As mentioned above, this can be achieved without explicitly discretizing the wall, instead modifying the discrete sums to be evaluated [11].

This work deals with the following specific problem: the fast summation of a large number of convolutions of the half-space Green’s function with source terms. We apply the Ewald decomposition to the modified formulae and adapt the SE method to this case. The structure of the half-space Green’s function is, however, more complicated than that of the kernels investigated previously.

The outline of this paper is as follows: We introduce our notation and the free-space problem in Sect. 2. The half-space problem and its Green’s function are stated in Sect. 3. A brief explanation of the Ewald summation technique as it applies here is given in Sect. 4 before we describe the Spectral Ewald Method for the case of the half-space in Sect. 5. Some error estimates are discussed in Sect. 6 before we present computational results in Sect. 7.

2 The free-space problem

The three fundamental solutions for Stokes flow are the so-called stokeslet, stresslet and rotlet singularities; they are tensors that have an explicit representation as follows:

with the shorthand notation \(\left\| \mathbf {r}\right\| =: r\) and \(\mathbf {x}, \mathbf {y} \in \mathbb {R}^3\). Here \(\otimes \) is the standard tensor product for vectors in \(\mathbb {R}^3\), \(\varvec{I}\) is the second-order identity tensor in \(\mathbb {R}^3\) and \(\mathscr {E}\) is the alternating third-order tensor whose representation in the natural basis of \(\mathbb {R}^3\) coincides with the Levi-Civita symbol.

In the Einstein summation convention or index notation, the direct representations above reduce to

where \(\delta _{ij}, \varepsilon _{ijk}\) are the Kronecker delta and the Levi-Civita symbol, respectively. Wherever possible we use the direct notation for economy and provide the index notation as explanation.

Given that there are N point forces of intensity \(8\pi \mathbf {f}^{(m)}\) at locations \(\mathbf {y}^{(m)}, \; m = 1,\ldots , N\), the stokeslet induces a velocity field at \(\mathbf {x} \in \mathbb {R}^3\). Similarly, if there are N “double forces” of intensity \(8\pi \mathbf {g}^{(m)}\) and orientation \(\mathbf {q}^{(m)}\), the stresslet and rotlet tensors also induce a velocity as follows:

where \(\mathbf {S}^{(m)}= \mathbf {S}(\varvec{r}^{(m)})\), and \(\mathscr {T}^{(m)}\) and \(\mathbf {W}^{(m)}\) are defined similarly to \(\mathbf {S}^{(m)}\), with the shorthand notation \(\mathbf {x}- \mathbf {y}^{(m)} =: \varvec{r}^{(m)}\). The formula for the rotlet can also be written in terms of the vector cross-product by replacing the skew-symmetric tensor with its axial vector, as is done in [3], but we do not do so in order to stay consistent with the notation of Gimbutas et al. [11]. From here on we consider the case where the evaluation points coincide with the source locations, \(\mathbf {x} = \mathbf {y}^{(p)}, p = 1, 2, \ldots , N\), with the term \(m = p\) excluded from the sum; this is the case of most interest, as it arises in boundary integral methods and potential methods for the Stokes equations.

In index notation, the velocity field induced in each case is written as

The result of summation over all N terms in (4) gives the free-space velocity corresponding to a collection of stokeslets, stresslets or rotlets. Note that the operation in each case is a convolution (Footnote 1) between the kernel and the source term. In [3], a fast SE method is proposed for this evaluation.
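As a baseline for the fast method, the direct \(O(N^2)\) evaluation of the free-space stokeslet sum at the source locations can be sketched as follows. This is a minimal sketch, not the authors' code: it assumes the standard stokeslet \(\mathbf {S}(\mathbf {r}) = \varvec{I}/r + \mathbf {r}\otimes \mathbf {r}/r^3\) (the explicit formulae did not survive extraction here) and the normalisation in which a point force of intensity \(8\pi \mathbf {f}^{(m)}\) induces \(\mathbf {u} = \sum _m \mathbf {S}^{(m)}\mathbf {f}^{(m)}\). The function names are hypothetical.

```python
import math

def stokeslet(r):
    """Free-space stokeslet S(r) = I/|r| + r (x) r / |r|^3 as a 3x3 nested list."""
    rn = math.sqrt(sum(c * c for c in r))
    return [[(1.0 if i == j else 0.0) / rn + r[i] * r[j] / rn**3
             for j in range(3)] for i in range(3)]

def direct_stokeslet_sum(points, forces):
    """Velocity u(y_p) = sum_{m != p} S(y_p - y_m) f_m at every source point, in O(N^2)."""
    n = len(points)
    u = [[0.0, 0.0, 0.0] for _ in range(n)]
    for p in range(n):
        for m in range(n):
            if m == p:
                continue  # the singular self-term m = p is excluded
            r = [points[p][i] - points[m][i] for i in range(3)]
            S = stokeslet(r)
            for i in range(3):
                u[p][i] += sum(S[i][j] * forces[m][j] for j in range(3))
    return u
```

The double loop makes the quadratic cost explicit; the Ewald decomposition of Sect. 4 replaces it with a short-range sum and an FFT-based long-range part.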

3 The half-space problem

A natural extension is to ask, first, whether there exists an explicit representation for the velocity field due to discrete singularities in the half-space and, second, whether there are fast methods to evaluate it. This paper deals with the latter question. Closed-form expressions for the half-space do exist, namely those given by Blake [4] and Gimbutas et al. [11], both of which use the method of images. While the older representation of Blake requires evaluating multiple harmonic and dipole fields, the recent work by Gimbutas et al. provides a very elegant representation using correction terms expressed in terms of a single harmonic potential. Their key idea was to invoke the Papkovitch–Neuber [18, 19] representation formula to construct, from a harmonic potential, a divergence-free velocity field that satisfies the Stokes equations. The free-space formula by itself does not satisfy the boundary conditions at the wall, but combining it with its image ensures that the tangential component satisfies the boundary condition. The Papkovitch–Neuber correction term is then added to adjust the normal component of the velocity at the wall. The result is formula (5), which is divergence-free and satisfies both the Stokes equations and the no-slip boundary condition at the wall. In this paper, we show how the SE method of [3] can be applied to the half-space representation formula (5) to yield a fast method of evaluation.

First we set out our notation in order to restate the formulae for the half-space. A system of Cartesian coordinates is set up such that the \(x_3 = 0\) plane coincides with the wall.

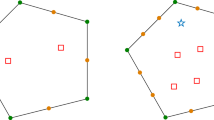

Let \(\mathbf {y}^I := (y_1, y_2, -y_3)^\mathrm{T}\) denote the reflection of \(\mathbf {y} := (y_1, y_2, y_3)^\mathrm{T}\) about the \(x_3 = 0\) plane (see Fig. 1). Furthermore, let \(\mathbf {\tilde{r}}^{(m)} := \mathbf {x} - {\mathbf {y}^{(m)}}^{I}\) and \(\mathbf {\tilde{S}}^{(m)} := \mathbf {S}(\mathbf {\tilde{r}}^{(m)})\).

Then the representation for the half-space in each case involves a harmonic function associated with each mirror image as follows:

The harmonic functions \(\phi _S, \phi _{\mathscr {T}}\) and \(\phi _W\) presented in [11] are written in terms of potentials due to point sources, dipoles and quadrupoles. Here we instead express them in terms of the harmonic potential and its gradients. Defining the harmonic potential G of a unit charge by \(4\pi G:= \dfrac{1}{r}\), we can write the potential of a dipole with orientation \(\mathbf {a}\) as \(G^D := -\nabla G \cdot \mathbf {a}\) and that of a quadrupole with intensity \(\mathbf {b}\) and orientation \(\mathbf {a}\) as \(G^Q := \nabla \nabla G \mathbf {a} \cdot \mathbf {b}\). Using these relations, the expressions for \(\phi _S, \phi _{\mathscr {T}}\) and \(\phi _W\) recorded in [11] are restated as

where \(G^{(m)} = G(\tilde{r}^{(m)})\).
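The building blocks of the correction terms, G, its gradient and Hessian, and the dipole and quadrupole potentials, have simple closed forms that follow from \(4\pi G = 1/r\) alone. The sketch below evaluates them for a single vector \(\mathbf {r}\) (in the half-space formulae this would be \(\mathbf {\tilde{r}}^{(m)}\)); the function names are hypothetical, and the finite-difference check is only a sanity test of the closed forms, not part of the method.

```python
import math

FOUR_PI = 4.0 * math.pi

def G(r):
    """Unit-charge harmonic potential, 4*pi*G = 1/|r|."""
    return 1.0 / (FOUR_PI * math.sqrt(sum(c * c for c in r)))

def grad_G(r):
    """Gradient of G: -r / (4*pi*|r|^3)."""
    rn = math.sqrt(sum(c * c for c in r))
    return [-c / (FOUR_PI * rn**3) for c in r]

def hess_G(r):
    """Hessian of G: (3 r r^T / |r|^5 - I / |r|^3) / (4*pi); its trace vanishes (G is harmonic)."""
    rn = math.sqrt(sum(c * c for c in r))
    return [[(3.0 * r[i] * r[j] / rn**5 - (1.0 if i == j else 0.0) / rn**3) / FOUR_PI
             for j in range(3)] for i in range(3)]

def dipole(r, a):
    """Dipole potential G^D = -grad G . a."""
    g = grad_G(r)
    return -sum(g[i] * a[i] for i in range(3))

def quadrupole(r, a, b):
    """Quadrupole potential G^Q = (grad grad G) a . b."""
    H = hess_G(r)
    return sum(H[i][j] * a[j] * b[i] for i in range(3) for j in range(3))
```

Writing the corrections this way means only the Ewald splits of \(G, \nabla G, \nabla \nabla G\) and \(\nabla \nabla \nabla G\) are needed, which is the route taken in Sect. 4.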

Note that formulae (5) are not translation invariant, and it is essential that the origin of the coordinate system be located on the wall or boundary of the half-space.

4 The Ewald decomposition

Here we quickly summarize the motivation behind the Ewald decomposition. The idea is to introduce a scalar (Ewald) parameter \(\xi \) and split the fundamental solution or Green’s function (for the stokeslet, say) into

where \(\widehat{\mathbf {S}_{F}}\) is the Fourier transform of \(\mathbf {S}_{F}\) and \(\mathscr {F}^{-1}\) indicates the inverse Fourier transform operator (IFT). The formula for \(\mathbf {u}(\mathbf {x})\) then becomes

The first term represents local interactions; as will be seen below, it decays exponentially fast and can hence be truncated. It is therefore evaluated directly by summation over all sources located within a chosen distance \(r_\mathrm{c}\) of the target \(\mathbf {x}\). The second term is the IFT integral; if the integrand were smooth and compactly supported, the integral could be approximated to spectral accuracy with a trapezoidal rule in each coordinate direction, allowing the use of FFTs for the evaluation. However, all the kernels and correction functions relevant to the present work have a factor (like \(\widehat{B}(k)\) in the expression for \(\widehat{\mathbf {S}_{F}}(\mathbf {k}, \xi )\) recorded below) that makes them singular at \(k=0\). How to circumvent this singularity in the quadrature is one of the key points and is discussed in detail below.

If the target location \(\mathbf {x}\) coincides with a source location, then the so-called self-interaction term must also be accounted for and removed. This is evaluated as the limit of \(\mathbf {S}_{R} - \mathbf {S}\) when \(r^{(m)} = \left\| \mathbf {x} - \mathbf {y}^{(m)}\right\| \rightarrow 0\).
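For the harmonic kernel \(1/r\), which underlies the correction terms, the split can be written in one line as \(1/r = {\text {erfc}}(\xi r)/r + {\text {erf}}(\xi r)/r\). The sketch below assumes this standard split (the stokeslet split of [12] is more involved) and illustrates two points made above: the real-space part decays like \(e^{-\xi ^2 r^2}\), and the self-interaction limit of the real-space part minus the full kernel is \(-2\xi /\sqrt{\pi }\). Function names are hypothetical.

```python
import math

def real_space_part(r, xi):
    """Short-range part erfc(xi r)/r of the harmonic kernel 1/r."""
    return math.erfc(xi * r) / r

def smooth_part(r, xi):
    """Long-range part erf(xi r)/r, extended continuously to r = 0."""
    if r == 0.0:
        return 2.0 * xi / math.sqrt(math.pi)
    return math.erf(xi * r) / r

def self_interaction(xi):
    """Limit of (real-space part - 1/r) as r -> 0, i.e. -2 xi / sqrt(pi)."""
    return -smooth_part(0.0, xi)
```

Because the smooth part is finite at \(r=0\), the self-interaction is a fixed constant per source that can be subtracted once, rather than a singular term.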

For the purpose of illustration, we explicitly show the Ewald decomposition of the stokeslet derived in [12].

where \(\mathbf {A}(\mathbf {k}, \xi ) := -\left( k^2\varvec{I} - \mathbf {k} \otimes \mathbf {k} \right) \left( 1 + \frac{k^2}{4\xi ^2} \right) , \;\; \mathbf {k} = (k_1, k_2, k_3)^\mathrm{T}\), \(k := \left\| \mathbf {k}\right\| , \hat{B}(k) := -\frac{8\pi }{k^4}\).
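Assuming the Fourier-space stokeslet has the form \(\widehat{\mathbf {S}_{F}} = \mathbf {A}(\mathbf {k}, \xi )\hat{B}(k)e^{-k^2/4\xi ^2}\) (the Gaussian factor is not visible in the extracted text, but it is what makes the Fourier series decay exponentially), the product simplifies to Hasimoto's form \(8\pi (\varvec{I} - \hat{\mathbf {k}}\otimes \hat{\mathbf {k}})(1/k^2 + 1/4\xi ^2)e^{-k^2/4\xi ^2}\). The sketch below evaluates it and makes the \(k=0\) singularity explicit; the function name is hypothetical.

```python
import math

def S_F_hat(k, xi):
    """Fourier-space stokeslet A(k, xi) * B_hat(k) * exp(-k^2 / (4 xi^2)), with
    A = -(k^2 I - k k^T)(1 + k^2/(4 xi^2)) and B_hat = -8 pi / k^4.
    The factor B_hat makes the expression singular at k = 0."""
    k2 = sum(c * c for c in k)
    if k2 == 0.0:
        raise ValueError("S_F_hat is singular at k = 0")
    B_hat = -8.0 * math.pi / k2**2
    scale = (1.0 + k2 / (4.0 * xi**2)) * math.exp(-k2 / (4.0 * xi**2))
    return [[-((k2 if i == j else 0.0) - k[i] * k[j]) * scale * B_hat
             for j in range(3)] for i in range(3)]
```

Since \((k^2\varvec{I} - \mathbf {k}\otimes \mathbf {k})\mathbf {k} = 0\), the matrix annihilates \(\mathbf {k}\), which is the Fourier-space statement that the smooth velocity field is divergence-free.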

The corresponding expressions for the stresslet and rotlet are tabulated for reference in the Appendix, Eqs. (14) and (15).

The computational complexity of the direct sum in (4) is \(O(N^2)\) for \(\mathbf {x} = \mathbf {y}^{(p)}, p = 1, 2, \ldots , N, p\ne m\), and the Ewald sum by itself does not reduce the complexity. The SE method keeps the real-space sum at O(N) by a specific choice/scaling of parameters when scaling up the system, and this combined with the use of FFTs reduces the overall complexity (see [3] for details) to \(O(N\log N)\).

One would like to use the SE method for (5) also to accelerate the process. While at first glance, the expressions involved in the sum may appear to be too cumbersome and complicated, a careful perusal will reveal that there is a structure to the formula; the summand consists of a linear combination of a stokeslet/stresslet/rotlet and its image about the \( x_3 = 0\) plane along with two other terms involving the respective harmonic functions, which could be viewed as corrections to satisfy the boundary conditions. The Ewald decomposition of the first two terms on the right-hand side of (5) follows directly from [3], so it only remains to derive an appropriate Ewald decomposition for the two correction terms involving the harmonic function and its gradient. We recognize that the Ewald decomposition of \(G, \nabla G, \nabla \nabla G\) and \(\nabla \nabla \nabla G\) is required to complete this task.

A lengthy but straightforward calculation gives the real-space components (denoted by the subscript R):

where \(r_i\) is the ith component of the vector joining the source location to the target location, and \(f(r) := \dfrac{{\text {erf}}(\xi r)}{r}\) for convenience.
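As an illustration of the real-space formulae, the sketch below evaluates the real-space part of G and its gradient, assuming the standard split \(4\pi G_R = 1/r - f(r) = {\text {erfc}}(\xi r)/r\). Differentiating gives \(4\pi \nabla G_R = -\mathbf {r}\left[ {\text {erfc}}(\xi r)/r^3 + 2\xi e^{-\xi ^2 r^2}/(\sqrt{\pi }r^2)\right] \). The higher derivatives follow the same pattern; the function names are hypothetical.

```python
import math

FOUR_PI = 4.0 * math.pi

def G_R(r_vec, xi):
    """Real-space part of G = 1/(4 pi r): (1/r - f(r))/(4 pi), with f(r) = erf(xi r)/r."""
    r = math.sqrt(sum(c * c for c in r_vec))
    return math.erfc(xi * r) / (FOUR_PI * r)

def grad_G_R(r_vec, xi):
    """Gradient of G_R: -r_vec/(4 pi) * [erfc(xi r)/r^3 + 2 xi exp(-xi^2 r^2)/(sqrt(pi) r^2)]."""
    r = math.sqrt(sum(c * c for c in r_vec))
    radial = (math.erfc(xi * r) / r**3
              + 2.0 * xi * math.exp(-(xi * r) ** 2) / (math.sqrt(math.pi) * r**2))
    return [-c * radial / FOUR_PI for c in r_vec]
```

Both expressions decay like \(e^{-\xi ^2 r^2}\), which is what allows the real-space sum to be truncated at \(r_\mathrm{c}\).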

The components evaluated in Fourier space through their Fourier transforms and denoted by the subscript F are

where \(\mathbf {k} = (k_1, k_2, k_3)^\mathrm{T}\), \(k := \left\| \mathbf {k}\right\| \), \(\hat{k}_i := \dfrac{k_i}{k}\) and \(\widehat{H}(k) := \frac{4\pi }{k^2}\). The reason for this notation will become clear later.
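A quick consistency check, under the assumption that the Fourier-space part of \(1/r\) is \(\widehat{H}(k)e^{-k^2/4\xi ^2}\): its inverse 3D Fourier transform, computed with the radial formula \(g(r) = \frac{1}{2\pi ^2 r}\int _0^\infty k \sin (kr)\,\hat{g}(k)\,dk\), should reproduce \(f(r) = {\text {erf}}(\xi r)/r\). The sketch below does this numerically with Simpson's rule; the function name and the cut-off \(k_{\max } = 12\xi \) are illustrative choices.

```python
import math

def smooth_part_via_fourier(r, xi, n=20000):
    """Inverse radial Fourier transform of H_hat(k) exp(-k^2/(4 xi^2)), H_hat = 4 pi / k^2,
    evaluated at radius r by composite Simpson's rule on [0, k_max]."""
    k_max = 12.0 * xi  # exp(-k_max^2 / (4 xi^2)) = exp(-36) is negligible
    if n % 2:
        n += 1  # Simpson's rule needs an even number of intervals
    dk = k_max / n

    def integrand(k):
        # k * sin(k r) * H_hat(k) * exp(-k^2/(4 xi^2)); the k -> 0 limit is 4 pi r
        if k == 0.0:
            return 4.0 * math.pi * r
        return 4.0 * math.pi * math.sin(k * r) / k * math.exp(-k * k / (4.0 * xi * xi))

    s = integrand(0.0) + integrand(k_max)
    for i in range(1, n):
        s += (4.0 if i % 2 else 2.0) * integrand(i * dk)
    return (s * dk / 3.0) / (2.0 * math.pi**2 * r)
```

The Gaussian factor is what makes the \(k\)-integrand decay fast enough to be truncated at a finite \(k_{\infty }\); the factor \(\widehat{H}(k)\) is what introduces the singularity at \(k=0\) discussed in Sect. 5.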

These correction terms are centred at the image locations, but the evaluation point \(\mathbf {x}\) is never an image location; hence there is no need to account for a self-interaction term.

5 The half-space Spectral Ewald method

The half-space formula described in Sect. 3 essentially transforms the problem into a new free-space problem, albeit with extra terms. Hence the computational framework used is the same as described in [3], following the recent idea introduced by Vico et al. [23] to solve free-space problems by FFTs on uniform grids. Thus, we keep our explanation of the method very general and brief, directing readers to [3] for complete details, while we highlight only the differences from it and finer points of note. A simple but useful observation is that for computations, the first two terms (kernel and its image) can be combined into a single term by absorbing the negative sign into the image source vectors, so that one can think of a kernel with 2N sources.
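The observation that the kernel and its image can be merged into one sum over 2N sources can be sketched as follows. This assumes the summand contains the kernel term minus its image term, so that the image carries the negated strength; it covers only those first two terms, since the correction terms have their own source coefficients. The function name is hypothetical.

```python
def half_space_sources(points, forces):
    """Stack the N physical sources with their mirror images y^I = (y1, y2, -y3).

    The image strengths carry the minus sign of the image term, so that
    K(x - y) f - K(x - y^I) f becomes a single sum over 2N sources."""
    images = [[y[0], y[1], -y[2]] for y in points]
    negated = [[-c for c in f] for f in forces]
    return points + images, forces + negated
```

The returned lists can then be passed unchanged to a free-space evaluator for the kernel part, which is why the first two terms of (5) reuse the machinery of [3] directly.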

5.1 The real-space component

The real-space components for the stresslet and rotlet derived in [1, 3] are reproduced in the Appendix, while those of the correction functions \(\phi _S, \phi _{\mathscr {T}}, \phi _W\) and their gradients can be written down directly by using (7) in (6).

A cell list of nearest neighbours within the cut-off radius \(r_\mathrm{c}\) is prepared for each target. The difference from [3] is that in a free-space evaluation all target locations are also source locations, whereas in the half-space the target locations lie on one side of the wall and also serve as sources, while their reflections lie on the other side of the wall and act only as sources. Otherwise, the procedure for evaluating the local interactions is unchanged. The cut-off radius \(r_\mathrm{c}\) is chosen from the desired error level using the truncation error estimates discussed in Sect. 6.
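A minimal cell-list sketch of this neighbour search is given below. It assumes cubic cells of side \(r_\mathrm{c}\), so that every neighbour of a target lies in the target's cell or one of the 26 adjacent cells; the source list is expected to contain the targets followed by their images. Zero distances are skipped, which removes the self-term; images never coincide with targets since the targets have \(x_3 > 0\). This is an illustration, not the authors' C implementation.

```python
import math
from collections import defaultdict
from itertools import product

def neighbours_within(targets, sources, rc):
    """For each target, sorted indices of sources with 0 < |x - y| <= rc (cell list, cell side rc)."""
    cells = defaultdict(list)
    for m, y in enumerate(sources):
        cells[tuple(math.floor(c / rc) for c in y)].append(m)
    result = []
    for x in targets:
        cx = tuple(math.floor(c / rc) for c in x)
        found = []
        for off in product((-1, 0, 1), repeat=3):  # own cell plus the 26 adjacent cells
            for m in cells.get(tuple(cx[i] + off[i] for i in range(3)), []):
                d2 = sum((x[i] - sources[m][i]) ** 2 for i in range(3))
                if 0.0 < d2 <= rc * rc:  # skip the self-term at zero distance
                    found.append(m)
        result.append(sorted(found))
    return result
```

A target close to the wall finds its own image as a neighbour whenever \(2x_3 \le r_\mathrm{c}\); this is the main way the half-space neighbour lists differ from the free-space ones.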

5.2 The Fourier-space component

The Fourier-space component is evaluated through the integral which always has the form

where the quantities \(\mathbf {A}(\mathbf {k}, \xi ),\hat{\mathscr {K}}(k)\) depend on the choice of kernel (see Appendix, \(\hat{\mathscr {K}}\) is either \(\hat{B}(k)\) or \(\hat{H}(k)\)), while the quantity \(\mathbf {c}^{(m)}\) is a scalar, vector or tensor that depends on the \(m^{\mathrm {th}}\) source term and location. For ease of explanation, we write out in full the expression that emerges for the stokeslet from (5),

Substituting from (6) and (8), it expands to

where we have rewritten the first integral as a sum over 2N terms by setting

While the equation above has been written out in full for the stokeslet, the method that will be discussed carries over without modification for the stresslet and rotlet as well since the expanded formula in that case too has the same structure and number of terms. The explicit presentation of the five integrals that need evaluation on the right-hand side of (11) serves a dual purpose:

1. It shows that the integrals arising from the correction terms have the same form as the integral arising from the stokeslet in free space. For a single integral of this type, Klinteberg et al. [3] illustrate and justify the sequence of operations followed in their Section 5.1. In the half-space, however, we have five integrals of that type, and we now explain how to combine them in the evaluation while following the same procedure.

2. It also makes clear that all the integrands contain the factor \(\widehat{B}(k)\) or \(\widehat{H}(k)\), which makes them singular at \(k=0\).

To circumvent this issue, we will continue to follow [3, 23] and introduce modified Green’s functions where the Fourier transforms of these functions have no singularity at \(k = 0\). The truncated Green’s functions are denoted by the superscript \(\mathscr {R}\) that stands for the radius of the support in real space, and their Fourier transforms are given by

Using these truncated Green’s functions will yield exactly the same result for the harmonic/biharmonic equation as the original ones, as long as the right-hand side has compact support within the solution domain, and \(\mathscr {R}\) is chosen sufficiently large. Specifically, if the solution domain is such that the largest point-to-point distance is \(\mathscr {R}_{\mathrm {max}}\), then we need \(\mathscr {R} \ge \mathscr {R}_{\mathrm {max}}\).
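For the harmonic kernel, the truncated transform can be written down explicitly. Assuming the standard truncation of Vico et al. [23] (the expression itself did not survive extraction above), restricting \(1/r\) to \(r \le \mathscr {R}\) gives \(\widehat{H}^\mathscr {R}(k) = \frac{4\pi }{k}\int _0^\mathscr {R}\sin (kr)\,dr = 8\pi \sin ^2(k\mathscr {R}/2)/k^2\), which tends to the finite value \(2\pi \mathscr {R}^2\) as \(k \rightarrow 0\). The sketch below compares the closed form with direct quadrature of the radial transform; the function names are hypothetical.

```python
import math

def H_R_hat(k, R):
    """Fourier transform of 1/r truncated to r <= R: 8 pi sin^2(k R/2)/k^2, equal to 2 pi R^2 at k = 0."""
    if k == 0.0:
        return 2.0 * math.pi * R * R
    return 8.0 * math.pi * math.sin(0.5 * k * R) ** 2 / (k * k)

def H_R_hat_quadrature(k, R, n=2000):
    """Reference value 4 pi * int_0^R r^2 (1/r) sin(k r)/(k r) dr by Simpson's rule (n even)."""
    def integrand(r):
        kr = k * r
        return r * (math.sin(kr) / kr if kr != 0.0 else 1.0)
    dr = R / n
    s = integrand(0.0) + integrand(R)
    for i in range(1, n):
        s += (4.0 if i % 2 else 2.0) * integrand(i * dr)
    return 4.0 * math.pi * s * dr / 3.0
```

The price of removing the singularity is that \(\widehat{H}^\mathscr {R}\) oscillates on the scale \(1/\mathscr {R}\), which is why it must be resolved on a finer k-grid than the one induced by the physical domain.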

We assume that the physical domain is a cube of side L that encloses all the sources; the computational domain is then a cuboid of dimensions \(L \times L \times 2L\) that encloses both the sources and their images.

In the Ewald decomposition, sources are convolved with Gaussians or modified Gaussians to form the right-hand side that defines the Fourier-space problem. Hence, the above assumption of a compactly supported right-hand side is violated. In the actual discretization, we interpolate point sources to the grid using a window function. In the SE method, this window function is a (suitably scaled, hence not identical) Gaussian, explicitly defined in Algorithm 1. The domain length L is extended to \(\tilde{L}\) to accommodate the support around the source locations. With the parameter choices detailed below, this extension is sufficient, and further extension does not reduce the total error. A more detailed discussion of this issue can be found in Section 5.3 of [3].

The resulting computational domain is discretized by an equi-spaced grid with spacing h containing \(\tilde{M} \times \tilde{M} \times 2\tilde{M} = 2{\tilde{M}}^3\) points. Note that such a grid induces, in its k-space counterpart, a spacing \(\varDelta k = 2\pi / \tilde{L} \).
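The need for twice oversampling comes from the fact that a product of discrete Fourier transforms computes a periodic (circular) convolution; to obtain the aperiodic convolution, the data must be zero-padded to twice the length so that the periodic wrap-around falls into the padding. The 1D toy below illustrates this with a naive DFT so that it is self-contained. Here both inputs are simply zero-padded; in the method the kernel is instead the truncated Green's function represented on the doubled grid. The function names are hypothetical.

```python
import cmath

def dft(x, inverse=False):
    """Naive O(n^2) discrete Fourier transform (normalised by 1/n when inverse=True)."""
    n = len(x)
    sign = 1.0 if inverse else -1.0
    out = [sum(x[m] * cmath.exp(sign * 2j * cmath.pi * m * q / n) for m in range(n))
           for q in range(n)]
    return [v / n for v in out] if inverse else out

def conv_via_dft(a, b):
    """Circular convolution of two equal-length sequences as a product in Fourier space."""
    A, B = dft(a), dft(b)
    return [v.real for v in dft([p * q for p, q in zip(A, B)], inverse=True)]

def linear_conv(a, b):
    """Reference aperiodic (linear) convolution by direct summation."""
    out = [0.0] * (len(a) + len(b) - 1)
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            out[i + j] += ai * bj
    return out

def aperiodic_conv_via_dft(a, b):
    """Zero-pad both inputs to twice their length so the circular result equals the linear one."""
    n = len(a)
    padded = conv_via_dft(list(a) + [0.0] * n, list(b) + [0.0] * n)
    return padded[: 2 * n - 1]
```

Without padding, `conv_via_dft` mixes contributions that wrap around the period; in 3D the same doubling applies in every direction, which is where the factor 8 in the \(16\tilde{M}^3\)-point grid comes from.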

Fundamentally, we are performing an aperiodic convolution in Fourier space, and hence need a twice oversampled representation of \(\widehat{H}^\mathscr {R}(k)\), i.e., a representation with k-space resolution \(\varDelta k/2\). Vico et al. [23] have shown that \(\widehat{H}^\mathscr {R}(k)\) and \(\widehat{B}^\mathscr {R}(k)\) can be evaluated knowing only the value of \(\mathscr {R}\), which itself is determined by the extent of the domain. Thus, for computational efficiency, we precompute \(\widehat{H}^\mathscr {R}(k)\), and \(\widehat{B}^\mathscr {R}(k)\) for the stokeslet or stresslet, on a grid with \(16{\tilde{M}}^3\) points. This cannot be done directly from values on the physical grid, since only the Fourier transform is known analytically. The computation is therefore carried out as follows:

1. Evaluate \(\widehat{H}^\mathscr {R}(k)\) and \(\widehat{B}^\mathscr {R}(k)\) on a grid of spacing \(\varDelta k/s_f\) (i.e., \(2(s_f \tilde{M})^3\) points), where the truncation radius for the domain is \(\mathscr {R} = \sqrt{6}\tilde{L}\) and the oversampling factor \(s_f \ge 1 + \sqrt{6}\) is chosen as small as possible such that \(s_f \tilde{M}\) is an even integer.
2. Compute the 3D IFFT and truncate to obtain \(H^\mathscr {R}\) on a grid of \(16{\tilde{M}}^3\) points.
3. Compute the 3D FFT to recover \(\widehat{H}^\mathscr {R}(k)\) on a grid with spacing \(\varDelta k/2\), i.e., a twice oversampled representation.

This set of values is now used in the algorithm. Note that this is different from simply sampling \(\widehat{H}^\mathscr {R}(k)\) on a \(16{\tilde{M}}^3\) grid. The reason becomes clear if we consider the formulae in Section 4.3 in [3]. It is apparent that we need to truncate the Green’s function values centred at a particular point in the physical grid. Thus, to perform the truncation, we start with sampling values of \(\widehat{H}^\mathscr {R}(k)\), perform an IFFT to obtain values in physical space, and then perform an FFT after truncation.
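For concreteness, the sizes entering steps 1 to 3 of the precomputation can be computed as in the sketch below. It follows only the rules stated above: \(\mathscr {R} = \sqrt{6}\tilde{L}\) (the length of the diagonal of the \(\tilde{L}\times \tilde{L}\times 2\tilde{L}\) box), the smallest \(s_f \ge 1+\sqrt{6}\) with \(s_f\tilde{M}\) even, a fine grid of \(2(s_f\tilde{M})^3\) points and a twice oversampled grid of \(16\tilde{M}^3\) points. The function name and dictionary keys are hypothetical.

```python
import math

def precompute_sizes(M_tilde, L_tilde):
    """Truncation radius, oversampling factor and grid shapes for the H^R / B^R precomputation."""
    R = math.sqrt(6.0) * L_tilde          # diagonal of the L~ x L~ x 2L~ box
    n = math.ceil((1.0 + math.sqrt(6.0)) * M_tilde)
    if n % 2:
        n += 1                            # s_f * M~ must be an even integer
    s_f = n / M_tilde
    return {
        "R": R,
        "s_f": s_f,
        "fine_grid": (n, n, 2 * n),                                   # 2 (s_f M~)^3 points, spacing dk/s_f
        "oversampled_grid": (2 * M_tilde, 2 * M_tilde, 4 * M_tilde),  # 16 M~^3 points, spacing dk/2
        "dk": 2.0 * math.pi / L_tilde,
    }
```

For example, \(\tilde{M} = 20\) gives \(s_f = 3.5\), a \(70\times 70\times 140\) fine grid and a \(40\times 40\times 80\) oversampled grid. Because these sizes depend only on the domain, the precomputation can be reused for every source configuration in the same box.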

The computation of \(\mathbf {u}_F(\mathbf {x}, \xi )\) has been outlined in Algorithm 1 and can be organized into the following main steps:

1. Preprocessing (Steps 2 and 3): setting up the appropriate computational domain, performing reflections, etc.
2. Spreading (Step 5): computing the data on the grid using truncated Gaussians. This is essentially the source term, or some component(s) of it, scaled in various ways. The Gaussians are assumed to have a support of \(P^3\) grid points; the explicit formula is given in Algorithm 1.
3. FFT (Step 6): computing the three-dimensional FFT, zero-padded to double the size. The factor of 2 is necessary to perform the aperiodic convolution. Note that for the stokeslet, we perform two vector FFTs and one scalar FFT.
4. Precomputation (Step 7): computing \(\widehat{H}^\mathscr {R}(k)\) or \(\widehat{B}^\mathscr {R}(k)\). This step depends only on the size of the computational domain and is actually performed after Steps 2 and 3, but is listed here to aid the flow of ideas.
5. Scaling (Steps 8 and 9): computing the product of the FFT with \(\mathbf {A}(\mathbf {k}, \xi )\), etc., using the precomputed \(\widehat{H}^\mathscr {R}(k)\) or \(\widehat{B}^\mathscr {R}(k)\). In this step, all the quantities involved have a twice oversampled representation.
6. IFFT (Step 10): applying the inverse three-dimensional FFT to the result of the scaling and truncating to obtain the result on the \(\tilde{M} \times \tilde{M} \times 2\tilde{M}\) grid.
7. Quadrature (Step 11): evaluating the resulting integral by the trapezoidal rule for each \(\mathbf {x}\).
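The spreading and quadrature steps can be illustrated in one dimension. The sketch assumes the Gaussian window used in earlier SE papers, \(\left( 2\xi ^2/(\pi \eta )\right) ^{1/2}e^{-2\xi ^2 x^2/\eta }\), normalised to unit mass (in 3D the prefactor is cubed); Algorithm 1 is not reproduced here, so the exact form and the values of \(\eta \) and P below are assumptions chosen only to make truncation negligible. Spreading puts each source on P grid points of a periodic grid, and quadrature applies the trapezoidal rule to the same window around the target. The function names are hypothetical.

```python
import math

def window(x, xi, eta):
    """Assumed 1D Gaussian window (2 xi^2/(pi eta))^(1/2) exp(-2 xi^2 x^2/eta), unit integral."""
    return math.sqrt(2.0 * xi**2 / (math.pi * eta)) * math.exp(-2.0 * xi**2 * x * x / eta)

def spread(points, strengths, n, L, P, xi, eta):
    """Spread point strengths onto a periodic grid of n points on [0, L), using P grid points per source."""
    h = L / n
    grid = [0.0] * n
    for y, f in zip(points, strengths):
        i0 = round(y / h) - P // 2           # first grid index of the truncated support
        for i in range(i0, i0 + P):
            grid[i % n] += f * window(i * h - y, xi, eta)  # unwrapped distance, wrapped index
    return grid

def gather(grid, x, L, P, xi, eta):
    """Trapezoidal-rule quadrature h * sum_j grid_j * window(x_j - x) over a P-point support."""
    n = len(grid)
    h = L / n
    i0 = round(x / h) - P // 2
    return h * sum(grid[i % n] * window(i * h - x, xi, eta) for i in range(i0, i0 + P))
```

Spreading conserves the source strength up to the truncation error, since \(h\sum _j\) of the window is a trapezoidal approximation of its unit integral. Gathering at the source location returns \(f\int w^2\,dx = f\xi /\sqrt{\pi \eta }\). The cost of each step is \(O(NP^3)\) in 3D, independent of the grid size.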

In comparison with the evaluation for free-space in [3], the number of FFTs and IFFTs increases and this information is summarized in Table 1.

The increase in the number of FFTs is consistent with the half-space formula (5): the correction terms require one vector and one scalar FFT (3 + 1) for the stokeslet, one vector FFT (3) for the rotlet, and one tensor and one vector FFT (9 + 3) for the stresslet. The increase in the number of IFFTs is less obvious: after calculating the FFTs and performing the convolution as a product in Fourier space, it might seem that all terms could be combined into a single IFFT and integration step. That is not possible, however, because the coordinate \(x_3\) multiplies the gradient term. This term must be treated separately and hence requires an extra IFFT and integration step. One might well ask whether the Ewald summation technique is still worthwhile given this increase in the complexity of the Fourier-space problem; fortunately, the answer is affirmative, as demonstrated in Sect. 7.

6 Truncation errors

The errors in the real-space calculation are caused by truncation. The errors in the Fourier-space part of the SE method, however, are not due to truncation alone; there are also approximation errors due to the quadrature rule and to the discretization and truncation of the Gaussians in the spreading and quadrature steps. Given P, the number of points across a truncated Gaussian, the parameter \(\eta = \eta (P)\) can be chosen to balance discretization and truncation errors, which leaves P as the only free parameter. Approximation errors decay exponentially with P, and by using a sufficiently large P they can be made negligible, so that the measured error is due to truncation errors only [17]; the Ewald parameter \(\xi \) itself does not introduce any error.

The truncation errors appear in the real-space part because we consider only local interactions within the radius \(r_\mathrm{c}\), while in the Fourier-space part the integral in (9) over all of \(\mathbb {R}^3\) is in practice truncated at some large but finite wave number of magnitude \(k_{\infty }\). The value of \(k_{\infty }\) is related to the grid and computational domain by \(k_{\infty } = \pi /h = \pi \tilde{M}/\tilde{L}\). The exact real-space contribution is obtained by letting \(r_\mathrm{c} \rightarrow \infty \) in the real-space sum; subtracting it from the naive direct sum yields the exact Fourier-space contribution (Footnote 2). From these, the computed truncation errors are found. In [3], the authors report truncation error estimates, based on the methodology introduced by Kolafa and Perram [14], that agree closely with the computed errors for both the real-space and Fourier-space evaluations. These are statistical estimates of the root mean square (RMS) truncation error, defined as

Implicit in these estimates are the assumptions that the sources are randomly distributed, and that the error measure has a Gaussian distribution.
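The error measures used in the comparisons can be sketched as follows. The defining formula did not survive extraction above, so this assumes the usual form \(\delta u_{\mathrm {rms}} = \big (\frac{1}{N}\sum _m |\mathbf {u}(\mathbf {x}^{(m)}) - \mathbf {u}^{*}(\mathbf {x}^{(m)})|^2\big )^{1/2}\), with \(\mathbf {u}^{*}\) the reference velocity, together with the relative RMS error used in Figs. 2 and 3 (RMS error divided by the RMS value of the reference velocity). The function names are hypothetical.

```python
import math

def rms_error(u, u_ref):
    """sqrt((1/N) * sum_m |u_m - u_ref_m|^2) over N target velocities."""
    n = len(u)
    total = sum(sum((a - b) ** 2 for a, b in zip(um, rm)) for um, rm in zip(u, u_ref))
    return math.sqrt(total / n)

def relative_rms_error(u, u_ref):
    """RMS error divided by the RMS value of the reference velocity over all targets."""
    zero = [[0.0] * len(r) for r in u_ref]
    return rms_error(u, u_ref) / rms_error(u_ref, zero)
```

Normalising by the RMS velocity makes the half-space and free-space curves comparable, even though the image sources change the magnitude of the velocity field.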

Since the derived estimates are statistical in nature, the methodology of Kolafa and Perram cannot account for possible cancellations due to symmetry in the half-space formula. Instead, this approach yields an estimate that is the sum of two contributions:

1. Truncation errors due to the stokeslet, stresslet or rotlet kernels (as given in Tables 2 and 3).
2. Truncation errors due to the correction terms.

Such an estimate is likely to be a conservative upper bound for the computed errors. Therefore, we examine the truncation error expressions to ascertain if one can neglect the error contribution made by the correction terms.

From Tables 2 and 3, it is evident that the kernel estimates have an exponential decay term (\(e^{-\xi ^2 r_\mathrm{c}^2}\) or \(e^{-k_{\infty }^2/4\xi ^2}\)) multiplied by some power of \(r_\mathrm{c}\) or \(k_{\infty }\). In case of the half-space, the correction terms are all harmonic functions and/or their derivatives, and their truncation error contribution (see [14]) decays much faster than the stokeslet or stresslet kernels and at the same rate as the rotlet kernel. Thus, the existing truncation error estimates for free-space are a good starting point for the half-space as well. Of course, in evaluating the estimate, the sum is over both sources and images, and this modifies the quantity Q and the RMS error.
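Keeping only the exponential factors of the estimates gives a rough feel for how \(r_\mathrm{c}\) and \(k_{\infty }\) scale with the tolerance \(\varepsilon \): setting \(e^{-\xi ^2 r_\mathrm{c}^2} = \varepsilon \) and \(e^{-k_{\infty }^2/4\xi ^2} = \varepsilon \) yields \(r_\mathrm{c} = \sqrt{-\ln \varepsilon }/\xi \) and \(k_{\infty } = 2\xi \sqrt{-\ln \varepsilon }\), and then \(\tilde{M}\) follows from \(k_{\infty } = \pi \tilde{M}/\tilde{L}\). This sketch ignores the polynomial prefactors and the quantity Q in Tables 2 and 3 (not reproduced here), so it only indicates scales and is not the parameter-selection procedure of [3]. The function name is hypothetical.

```python
import math

def leading_order_parameters(eps, xi, L_tilde):
    """Crude choice keeping only the exponential factors of the truncation estimates:
    exp(-xi^2 rc^2) = eps and exp(-k_inf^2 / (4 xi^2)) = eps."""
    q = math.sqrt(-math.log(eps))
    rc = q / xi
    k_inf = 2.0 * xi * q
    M_tilde = math.ceil(k_inf * L_tilde / math.pi)  # from k_inf = pi * M~ / L~
    return rc, k_inf, M_tilde
```

The product \(\xi r_\mathrm{c}\) and the ratio \(k_{\infty }/\xi \) depend only on \(\varepsilon \), which is why they are held fixed when \(\xi \) is tuned for run time in Sect. 7.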

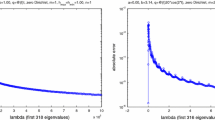

In Figs. 2 and 3, the truncation error estimate is compared with the computed error for both the real-space and Fourier-space parts, using the relative RMS error, i.e., the ratio of the RMS error to the RMS value of the velocity over all targets. For reference, the corresponding curves for the free-space problem are also plotted. For both the stokeslet and the stresslet, the computed half-space errors are lower than the half-space estimate, and also lower than the free-space computed error and estimate; this is due to cancellations induced by symmetry. For the rotlet, all these curves are much closer to each other, and closer examination reveals that the previous trend no longer holds. This is because the neglected correction terms are of the same order as the kernel. Nevertheless, the overall agreement of the computed errors with the existing estimates justifies neglecting the contribution of the correction terms to the truncation error estimate.

Relative RMS Fourier-space truncation errors for the stokeslet, stresslet and rotlet. Dots are measured values, and solid lines are estimates based on Table 2. Red dots and lines are associated with the half-space, and blue with the free-space evaluation. The system is \(N=10^4\) randomly distributed point sources in a cube with sides \(L=3\), with \(k_{\infty }=\pi \tilde{M} /\tilde{L}\), \(\xi =3.49\), \(M=1\ldots 50\). a Stokeslet, b stresslet, c rotlet

Relative RMS real-space truncation errors for the stokeslet, stresslet and rotlet. Dots are measured values, and solid lines are computed using the estimates of Table 3. Red dots and lines are associated with the half-space, and blue with the free-space evaluation. The system is \(N=2000\) randomly distributed point sources in a cube with sides \(L=3\), with \(\xi =4.67\), and \(r_\mathrm{c} \in [0, L/2]\). a Stokeslet, b stresslet, c rotlet

7 Numerical results

We consider N random point sources drawn from a uniform distribution in a box of dimensions \(L \times L \times L\). The sum (4) is evaluated with stokeslets, stresslets and rotlets at the same N locations, which also serve as targets. All components of the source strengths and source orientations are random numbers drawn from a uniform distribution on \([-\,1,1]\). All computationally intensive routines are written in C and called from MATLAB through MEX interfaces. The results are obtained on a laptop workstation with an Intel Core i7-6500U processor (2.50 GHz) and 16 GB of memory, using two cores unless stated otherwise. Errors are measured against the sum evaluated by direct summation.
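The reference values come from the direct sum. A minimal pure-Python sketch of such a test setup for the free-space stokeslet is given below. It assumes the normalisation \(\mathbf{S}(\mathbf{r}) = \mathbf{I}/|\mathbf{r}| + \mathbf{r}\mathbf{r}^T/|\mathbf{r}|^3\) (no viscosity prefactor) and excludes the self term; it is an illustration, not the authors' C/MEX implementation, and a half-space direct sum would additionally loop over the image and correction terms.

```python
import math
import random

def random_system(n, box_len, seed=0):
    """n points uniform in [0, box_len)^3, force components uniform on [-1, 1]."""
    rng = random.Random(seed)
    points = [tuple(rng.uniform(0.0, box_len) for _ in range(3)) for _ in range(n)]
    forces = [tuple(rng.uniform(-1.0, 1.0) for _ in range(3)) for _ in range(n)]
    return points, forces

def stokeslet_direct(points, forces):
    """O(N^2) direct sum u(x_n) = sum_{m != n} S(x_n - y_m) f_m, assuming
    S(r) = I/|r| + r r^T/|r|^3."""
    u = []
    for xn in points:
        acc = [0.0, 0.0, 0.0]
        for ym, fm in zip(points, forces):
            r = [xn[k] - ym[k] for k in range(3)]
            r2 = r[0] ** 2 + r[1] ** 2 + r[2] ** 2
            if r2 == 0.0:
                continue  # skip the self term (and any exactly coincident points)
            inv_r = 1.0 / math.sqrt(r2)
            r_dot_f = r[0] * fm[0] + r[1] * fm[1] + r[2] * fm[2]
            for k in range(3):
                acc[k] += fm[k] * inv_r + r[k] * r_dot_f * inv_r ** 3
        u.append(tuple(acc))
    return u

points, forces = random_system(200, 3.0)
u_ref = stokeslet_direct(points, forces)
```

Each call costs \(O(N^2)\) kernel evaluations, which is the cost the Ewald method is compared against below.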

For a given system of N sources in a box of edge length L, the required parameters for our half-space Ewald method are the Ewald parameter \(\xi \), the real-space truncation radius \(r_\mathrm{c}\), the number of grid points M covering the computational domain, and the Gaussian support width P. Other parameters such as \(\delta _L, \tilde{L}, \tilde{M}\) are then set automatically from these (see Algorithm 1 and [3] for details). For a large-scale numerical computation, \(\xi \), \(r_\mathrm{c}\), M and P should be chosen optimally. For a given value of \(\xi \) and absolute error tolerance \(\varepsilon \) for the free-space evaluation, close-to-optimal values for M and \(r_\mathrm{c}\) were computed in [3] using the truncation error estimates in Tables 2 and 3. We use the very same values as starting points for the half-space since, as shown above, the same truncation error estimates remain valid for the half-space. We then perturb \(\xi \) to achieve the smallest run-time while keeping \(\xi r_\mathrm{c}\) and \(\frac{k_{\infty }}{\xi }\) constant, where \(k_{\infty } = \frac{\pi \tilde{M}}{\tilde{L}}\). The optimal set of values found is used for larger systems by scaling \(k_{\infty }\) such that \(\frac{L}{k_{\infty }}\) is constant.
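The perturbation step can be sketched as follows. The function name and the rounding of \(\tilde{M}\) up to an integer are our assumptions (the actual rules for \(\delta _L\), \(\tilde{L}\) and \(\tilde{M}\) are those of Algorithm 1 and [3]). The sketch only shows that moving \(\xi \) while holding \(\xi r_\mathrm{c}\) and \(k_{\infty }/\xi \) fixed trades real-space work (a smaller \(r_\mathrm{c}\)) against Fourier-space work (a larger grid).

```python
import math

def perturb_xi(xi0, rc0, kinf0, xi_new, l_tilde):
    """Hypothetical helper: move the Ewald parameter from xi0 to xi_new while
    holding xi*rc and k_inf/xi fixed, then choose the smallest integer grid
    size M_tilde on an extended domain of length l_tilde such that
    pi*M_tilde/l_tilde >= k_inf."""
    rc_new = xi0 * rc0 / xi_new                        # keeps xi*rc constant
    kinf_new = kinf0 * xi_new / xi0                    # keeps k_inf/xi constant
    m_tilde = math.ceil(kinf_new * l_tilde / math.pi)  # from k_inf = pi*M_tilde/L_tilde
    return rc_new, kinf_new, m_tilde
```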

The results for free-space in [3] convincingly demonstrated the need for the Ewald decomposition by exhibiting the speed-up gained over the naive direct sum. The present work aims to make a similar case for the half-space. Before doing so, however, it is instructive to compare the computational expense of the direct sums themselves for the free-space and half-space formulae. As shown in Fig. 4, the half-space sum is on average between 3.3 and 3.7 times more expensive than the free-space sum (the rotlet being the least and the stresslet the most expensive), and this factor stays constant as the number of sources increases. The range 3.3–3.7 is reasonable, since the direct sum for the half-space involves 4N terms (kernel and correction terms), in contrast to the N terms of the free-space sum. The additional expense due to the correction terms is smallest for the rotlet and largest for the stresslet, which is not surprising in view of formulae (6).

We now address whether it is worthwhile to use the Spectral Ewald method for the half-space formulae, given the substantial increase in the number of FFTs and IFFTs required for the Fourier-space component. In Fig. 5a, the computing time for evaluating the sums is plotted against N for all three kernels, for both the half-space Ewald (HSE) method and direct summation. The relative RMS error for HSE is kept below \(0.5 \times 10^{-8}\). As N varies, we maintain a constant density \(N/L^3=2500\) by changing the size of the box. The system is thus scaled, while other parameters of the method such as \(\xi , r_\mathrm{c}\), P and the grid resolution L / M are kept constant. As both N and L increase, so does the grid size.
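The scaling used for Fig. 5 can be reproduced with a small helper. The default ratio M / L = 20 corresponds to the stokeslet values given in the next paragraph (M = 40 at L = 2); the helper name and the rounding of M to the nearest integer are our assumptions.

```python
def scaled_system(n, density=2500.0, m_over_l=20.0):
    """Hypothetical helper: box length L and grid size M for n sources at
    constant density n/L^3, with the grid resolution L/M held fixed."""
    box_len = (n / density) ** (1.0 / 3.0)
    m = round(m_over_l * box_len)
    return box_len, m

for n in (20_000, 160_000, 540_000):
    L, M = scaled_system(n)
    print(n, round(L, 6), M)
```

Since N grows like \(L^3\) while M grows like L, the number of grid points \(M^3\) grows in proportion to N, so the FFT cost grows like \(N \log N\).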

For all kernels, we set \(P = 16\), while the pair \((\xi , r_\mathrm{c})\) is (6, 0.76), (5.8, 0.76) and (7, 0.63) for the stokeslet, stresslet and rotlet, respectively. When \(L=2\), \(M=40\), 36 and 38 for the three kernels, and the ratio M / L is kept constant as the system is scaled. We exclude the precomputation cost from our run-times, since it is performed only once and is easily amortized over multiple runs (iterations or time steps) as long as the size of the domain does not change. The figure allows us to determine the break-even value of N, that is, the smallest value of N for which the Ewald summation is faster than the direct sum. A fair comparison of CPU run-times requires that the simulations use the same system and the same number of cores. Hence the CPU run-time study for free-space was repeated with the same parameters as above, except that \(\xi =7\) and \(r_\mathrm{c} = 0.63, 0.63, 0.58\) for the stokeslet, stresslet and rotlet, respectively. The results are shown in Fig. 5b.

The break-even values for all kernels (excluding the precomputation cost) obtained from Fig. 5 are presented in Table 4. Since the direct sum for the half-space is almost four times as expensive as that for free-space, the half-space sum benefits more from the Ewald decomposition and the Spectral Ewald method, and the break-even is attained much earlier than for free-space. As expected, the stresslet, with its steep increase in the number of FFTs, has the largest break-even value, but it is only slightly greater than those of the other kernels. These numbers underline the advantage of the Spectral Ewald method for evaluating formulae (5) for the half-space.
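Because the direct sum scales as \(N^2\) while Spectral Ewald methods typically scale as \(N \log N\), a break-even point can also be estimated from a handful of timings. The sketch below is one way to do this, assuming timings sampled at increasing N and curves that are close to power laws between samples (so that interpolation in log-log space is appropriate). The function is hypothetical and is not the procedure behind Table 4, whose values were obtained from Fig. 5.

```python
import math

def break_even(ns, t_fast, t_direct):
    """Estimate the smallest N at which t_fast < t_direct from timings sampled
    at increasing N, interpolating log(t_fast/t_direct) linearly in log N.
    Returns None if no crossing occurs within the sampled range."""
    logs = [math.log(f / d) for f, d in zip(t_fast, t_direct)]
    if logs[0] < 0.0:
        return ns[0]  # already faster at the smallest sampled N
    for i in range(1, len(ns)):
        if logs[i] < 0.0:
            # The crossing lies between ns[i-1] and ns[i].
            a, b = math.log(ns[i - 1]), math.log(ns[i])
            s = logs[i - 1] / (logs[i - 1] - logs[i])
            return math.exp(a + s * (b - a))
    return None
```

For exact power laws, for instance \(t_\mathrm{direct} = 10^{-6} N^2\) and \(t_\mathrm{fast} = 10^{-3} N\), the log-log interpolation recovers the crossing exactly (here N = 1000) from any two samples that bracket it.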

We next study the run-time of the different parts of the algorithm. In the left plot of Fig. 6, the run-times for the real-space and Fourier-space evaluation are presented for the stresslet (see Note 3), along with the cost of precomputing the Green’s functions. For the stresslet, the precomputation involves evaluating the Green’s function for the stresslet as well as the correction functions; including it in the total run-time would increase the cost by 25–33%. Using an optimized value of the Ewald parameter \(\xi \) balances the costs of the real-space and Fourier-space evaluations, so that they are of the same order of magnitude. The right plot of Fig. 6 further breaks down the Fourier-space cost into its main constituent steps, namely gridding, scaling and FFT. The scaling step is clearly the cheapest, and the overall results are very similar to those for free-space, despite the fact that 21 FFTs are performed here compared with only 9 for free-space.

8 Conclusion and further work

We have presented a fast summation method for the half-space Green’s functions of Stokes flow derived by Gimbutas et al. [11]. The fast summation method and its implementation follow the approach of Klinteberg et al. [3] for the free-space Green’s functions. The method is based on the Ewald decomposition, which recasts the sum into two exponentially decaying series: one in real space (short-range interactions) and one in Fourier space (long-range interactions), with the convergence of each series controlled by a common parameter.

While the evaluation of the real-space component proceeded along expected lines, the presence of extra terms complicated the task for the Fourier-space component. We followed the framework of the Spectral Ewald method for free-space Stokes flow introduced recently, and exploited the structure of the terms to optimize the number of FFTs and IFFTs that need to be performed. Furthermore, we demonstrated that with very elementary modifications the truncation error estimates for free-space Stokes flow remain valid.

The implementation for the half-space incurs extra costs compared with the free-space case, such as the gridding of a larger computational domain and a substantial increase in the number of FFTs and IFFTs to be evaluated, but the computational savings relative to the direct sum are also greater.

Future work can take one of two directions. First, it would be beneficial to use this work within a boundary integral method for Stokes flow in a half-space, motivated by a physical problem such as sedimentation. Second, a more involved and mathematically interesting question would be to consider a 2-periodic extension with periodicity in the in-plane directions, akin to the 2-periodic extension for Stokes flow considered by Lindbo et al. [16].

Notes

This is clear when the source \(\mathbf {f}^{(m)} = \mathbf {f}(\mathbf {y}^{(m)})\) is written in full as \(\mathbf {u}(\mathbf {x}) = \sum _{m=1}^{N}\mathbf {S}(\mathbf {x}- \mathbf {y}^{(m)}) \mathbf {f}^{(m)}\delta (\mathbf {y}^{(m)})\).

By exact Fourier-space contribution, we mean the integral in (9) evaluated over all of \(\mathbb {R}^3\).

We choose the stresslet because it is the most complicated.

References

af Klinteberg, L.: Ewald summation for the rotlet singularity of Stokes flow (2016). arXiv:1603.07467 [physics.flu-dyn]

af Klinteberg, L., Tornberg, A.K.: Fast Ewald summation for Stokesian particle suspensions. Int. J. Numer. Methods Fluids 76(10), 669 (2014). https://doi.org/10.1002/fld.3953

af Klinteberg, L., Shamshirgar, D.S., Tornberg, A.K.: Fast Ewald summation for free-space Stokes potentials. Res. Math. Sci. 4(1), 1 (2017). https://doi.org/10.1186/s40687-016-0092-7

Blake, J.R.: A note on the image system for a stokeslet in a no-slip boundary. Math. Proc. Camb. Philos. Soc. 70, 303–310 (1971)

Deserno, M., Holm, C.: How to mesh up Ewald sums. I. A theoretical and numerical comparison of various particle mesh routines. J. Chem. Phys. 109(18), 7678 (1998). https://doi.org/10.1063/1.477414

Essmann, U., Perera, L., Berkowitz, M.L., Darden, T., Lee, H., Pedersen, L.G.: A smooth particle mesh Ewald method. J. Chem. Phys. 103(19), 8577 (1995)

Ewald, P.P.: Die Berechnung optischer und elektrostatischer Gitterpotentiale. Ann. Phys. 369(3), 253 (1921). https://doi.org/10.1002/andp.19213690304

Fu, Y., Rodin, G.J.: Fast solution methods for three-dimensional Stokesian many-particle problems. Commun. Numer. Methods Eng. 16, 145 (2000)

Greengard, L., Rokhlin, V.: A fast algorithm for particle simulations. J. Comput. Phys. 73, 325 (1987)

Gumerov, N.A., Duraiswami, R.: Fast multipole method for the biharmonic equation in three dimensions. J. Comput. Phys. 215, 363 (2006)

Gimbutas, Z., Greengard, L., Veerapaneni, S.: Simple and efficient representations for the fundamental solutions of Stokes flow in a half-space. J. Fluid Mech. (2015). https://doi.org/10.1017/jfm.2015.302

Hasimoto, H.: On the periodic fundamental solutions of the Stokes equations and their application to viscous flow past a cubic array of spheres. J. Fluid Mech. 5(02), 317 (1959). https://doi.org/10.1017/S0022112059000222

Kim, S., Karrila, S.J.: Microhydrodynamics. Butterworth-Heinemann, Oxford (1991)

Kolafa, J., Perram, J.W.: Cutoff errors in the Ewald summation formulae for point charge systems. Mol. Simul. 9(5), 351 (1992). https://doi.org/10.1080/08927029208049126

Lindbo, D., Tornberg, A.K.: Spectrally accurate fast summation for periodic Stokes potentials. J. Comput. Phys. 229(23), 8994 (2010). https://doi.org/10.1016/j.jcp.2010.08.026

Lindbo, D., Tornberg, A.K.: Fast and spectrally accurate summation of 2-periodic Stokes potentials (2011). arXiv:1111.1815v1 [physics.flu-dyn]

Lindbo, D., Tornberg, A.K.: Spectral accuracy in fast Ewald-based methods for particle simulations. J. Comput. Phys. 230(24), 8744 (2011). https://doi.org/10.1016/j.jcp.2011.08.022

Neuber, H.: Ein neuer Ansatz zur Lösung räumlicher Probleme der Elastizitätstheorie. Der Hohlkegel unter Einzellast als Beispiel. Z. Angew. Math. Mech. 14(4), 203 (1934)

Papkovich, P.F.: Solution générale des équations différentielles fondamentales d'élasticité exprimée par trois fonctions harmoniques. Comptes Rendus de l'Académie des Sciences 195(3), 513 (1932)

Saintillan, D., Darve, E., Shaqfeh, E.: A smooth particle-mesh Ewald algorithm for Stokes suspension simulations: the sedimentation of fibers. Phys. Fluids 17(3), 033301 (2005)

Shamshirgar, D.S., Tornberg, A.K.: The Spectral Ewald method for singly periodic domains. J. Comput. Phys. 347, 341 (2017)

Tornberg, A.K., Greengard, L.: A fast multipole method for the three-dimensional Stokes equations. J. Comput. Phys. 227(3), 1613 (2008). https://doi.org/10.1016/j.jcp.2007.06.029

Vico, F., Greengard, L., Ferrando, M.: Fast convolution with free-space Green’s functions. J. Comput. Phys. 323, 191 (2016). https://doi.org/10.1016/j.jcp.2016.07.028, arXiv:1604.03155

Acknowledgements

Anna-Karin Tornberg thanks the Göran Gustafsson Foundation for Research in Natural Sciences and Medicine and the Swedish e-Science Research Centre (SeRC). Shriram Srinivasan gratefully acknowledges the financial support of Linné Flow Centre and thanks Ludvig af Klinteberg for helpful ideas and advice with the numerical implementation.

A Appendix

We record the expressions for the first 3 derivatives of \(f(r):= \dfrac{{\text {erf}}(\xi r)}{r}\) that appear in (7).
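For reference, differentiating \(f(r)\) directly gives \(f'(r) = \frac{2\xi }{\sqrt{\pi }}\frac{e^{-\xi ^2 r^2}}{r} - \frac{{\text {erf}}(\xi r)}{r^2}\), \(f''(r) = \frac{2\,{\text {erf}}(\xi r)}{r^3} - \frac{4\xi }{\sqrt{\pi }} e^{-\xi ^2 r^2}\left(\xi ^2 + \frac{1}{r^2}\right)\) and \(f'''(r) = -\frac{6\,{\text {erf}}(\xi r)}{r^4} + \frac{4\xi }{\sqrt{\pi }} e^{-\xi ^2 r^2}\left(2\xi ^4 r + \frac{2\xi ^2}{r} + \frac{3}{r^3}\right)\). The short sketch below evaluates these expressions for \(r > 0\) and checks each one against a central finite difference of the previous one; it is our own check, not code from the paper.

```python
import math

def f_and_derivatives(r, xi):
    """f(r) = erf(xi*r)/r and its first three r-derivatives, valid for r > 0."""
    c = 2.0 * xi / math.sqrt(math.pi)  # d/dr erf(xi*r) = c * exp(-xi^2 r^2)
    g = math.exp(-(xi * r) ** 2)       # Gaussian factor exp(-xi^2 r^2)
    e = math.erf(xi * r)
    f0 = e / r
    f1 = c * g / r - e / r**2
    f2 = 2.0 * e / r**3 - 2.0 * c * g * (xi**2 + 1.0 / r**2)
    f3 = -6.0 * e / r**4 + 2.0 * c * g * (2.0 * xi**4 * r + 2.0 * xi**2 / r + 3.0 / r**3)
    return f0, f1, f2, f3

def check(r=0.5, xi=2.0, h=1e-4):
    """Absolute differences between each derivative and a central
    difference of the function one order below it."""
    lo = f_and_derivatives(r - h, xi)
    hi = f_and_derivatives(r + h, xi)
    mid = f_and_derivatives(r, xi)
    return [abs((hi[k] - lo[k]) / (2.0 * h) - mid[k + 1]) for k in range(3)]

print(check())
```

For large \(\xi r\), \({\text {erf}}(\xi r) \rightarrow 1\) and the Gaussian terms vanish, so the derivatives reduce to those of 1/r (for example \(f''' \rightarrow -6/r^4\)), which is a quick consistency check on the signs.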

Next we record the real and Fourier space parts for the stokeslet, stresslet and rotlet below. Due to the complicated formulae involved, we change our notation slightly and present them directly as quoted by Klinteberg et al. [3]. The modification to our case with images and sources is straightforward and left to the reader.

where \(\hat{\mathbf {r}}=\mathbf {r}/|\mathbf {r}|\).

For the Fourier space part we have

where

The self-interaction term is nonzero for the stokeslet only, given by

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Srinivasan, S., Tornberg, AK. Fast Ewald summation for Green’s functions of Stokes flow in a half-space. Res Math Sci 5, 35 (2018). https://doi.org/10.1007/s40687-018-0153-1

DOI: https://doi.org/10.1007/s40687-018-0153-1