Abstract

We summarize the results of the most important research of the past decade on the post-transplant monitoring of heart transplant patients by gene expression profiling and the quantification of immunoactivity, donor-derived cell-free DNA and rejection-related microRNAs.


Introduction

Heart transplantation (HT) is the treatment of choice for end-stage heart disease in the absence of contraindications [1, 2]. However, despite advances in donor and recipient selection criteria and in immunosuppression, rejection is still a significant problem. Acute rejection accounts for 11 % of deaths in the first 3 years post-HT, and injury due to immune system activity (whether acute or chronic) is an important contributor to cardiac allograft vasculopathy (CAV) and unexplained chronic graft failure, the main causes of death in the long term [3]. Other major causes of post-HT morbidity and mortality are infections, malignancies and nephrotoxicity [3], all of which are related to immunosuppression. Thus, both overimmunosuppression and underimmunosuppression can have lethal consequences after HT, but tailoring immunosuppression to the individual patient is not easy: in principle, it requires knowledge of the state of the patient’s immune system, the state of the graft and the patient’s susceptibility to changes in immunosuppressive therapy.

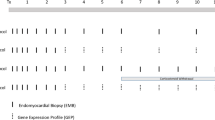

An ideal strategy for monitoring the effective level of immunosuppression, and the state and cardiac effects of the immune system, would be non-invasive, would reliably discriminate between the presence and absence of rejection and would detect states of overimmunosuppression. No such strategy currently exists. The best currently achievable approximation might combine (i) drug-level monitoring, (ii) histological and immunopathological assessment of endomyocardial biopsies (EMBs), including evaluation of markers such as C3d, C4d and CD68 [4, 5], (iii) assessment of graft function by imaging and by assays of biomarkers such as B-type natriuretic peptide [6], (iv) immune system monitoring by gene expression profiling (GEP) [7], T cell function tests [8] and measurement of donor-specific antibodies [9] and possibly (v) other promising biomarkers of rejection, such as circulating donor-derived cell-free DNA [10, 11] or microRNAs [12, 13] (Table 1).

Current Monitoring Tools and Novel Strategies

Early diagnosis of rejection is one of the still-unsolved problems in HT. The diagnostic procedure currently regarded as the “gold standard” (though performed according to different schedules at different centres) is evaluation of EMBs [4, 5], but this procedure is invasive (requiring transvenous catheterization), has poor sensitivity because only a limited amount of tissue can be sampled and suffers from significant inter-observer variability [14]. The development of a sensitive, objective, non-invasive technique for detecting allograft rejection is therefore a major goal in HT. Below, we review several approaches, proposed in recent years, that are based on the measurement of putative biomarkers and predictors of rejection.

Gene Expression Profiling

Gene expression profiling (GEP) is a non-invasive technique that assesses the risk of acute rejection by measuring, in peripheral blood mononuclear cells (PBMC), the expression of genes involved in immune system activation and leukocyte trafficking [7]. Its current commercial implementation is the AlloMap® molecular expression test (CareDx, Inc., Brisbane, CA), which was approved by the Food and Drug Administration (FDA) in 2008. This test assays the expression of 20 genes: 11 reflecting the probability of acute rejection and 9 used for normalization and quality control [7]. An algorithm that weights the contribution of each gene yields a score in the range 0–40, low scores being associated with a low likelihood of moderate or severe cardiac allograft rejection (grade ≥3A in the original ISHLT classification [15], grade ≥2R in the revised version [4]).
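The correspondence between the original and revised ISHLT grades referred to here can be written as a lookup table. The following Python sketch assumes the standard correspondence set out in the 2004 revision [4] (1990 grade 0 becomes 0R; 1A, 1B and 2 become 1R; 3A becomes 2R; 3B and 4 become 3R); the names `GRADE_1990_TO_2004` and `is_moderate_or_severe` are hypothetical and not part of any AlloMap® software.

```python
# Hypothetical lookup: original (1990) ISHLT cellular rejection grades mapped
# to the revised (2004) grades described by Stewart et al. [4].
GRADE_1990_TO_2004 = {
    "0": "0R",
    "1A": "1R", "1B": "1R", "2": "1R",
    "3A": "2R",
    "3B": "3R", "4": "3R",
}


def is_moderate_or_severe(grade_1990: str) -> bool:
    """True if a 1990 grade corresponds to revised grade >= 2R (i.e. >= 3A)."""
    return GRADE_1990_TO_2004[grade_1990.upper()] in ("2R", "3R")


if __name__ == "__main__":
    for g in ["0", "1B", "2", "3A", "4"]:
        print(g, GRADE_1990_TO_2004[g], is_moderate_or_severe(g))
```

Note that grade 1B falls into the mild 1R category under the revision, which is relevant to the reanalysis by Bernstein et al. discussed below.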

The AlloMap® test was developed in the Cardiac Allograft Rejection Gene Expression Observation (CARGO) study, a multicentre observational study of 629 HT patients at eight US centres [7]. The main objective of CARGO was to develop and evaluate a GEP test to discriminate between a quiescent state (ISHLT EMB grade 0R) and moderate or severe rejection (ISHLT EMB grade ≥2R). CARGO was carried out in three phases: (1) identification of candidate genes using a combination of focused genomic and knowledge-based approaches, (2) selection of a panel of optimally informative genes using PCR assays and statistical methods and (3) validation. Validation was performed in 32 quiescent patients and 31 with acute rejection, all monitored prospectively by both GEP and EMB evaluation at post-HT follow-up visits. All EMBs were graded by a local pathologist and by three independent core pathologists who were blind to the patients’ clinical data [7]. The rejection group had a significantly higher mean test score than the quiescent group (p = 0.0018), and a pre-defined score threshold of 20 correctly classified 84 % of the rejection group. However, scores increased with time post-HT (in association with the tapering of corticosteroid doses that generally takes place in the first year). The main strength of the test more than 1 year post-HT is claimed to be its high negative predictive value (NPV) for moderate or severe rejection: 99.6 % with a score threshold of 30. Although the test’s positive predictive value is low (6.8 %), its high NPV may allow EMB to be omitted when the test is negative and there is no other indication of possible rejection.
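Why a test can combine a very high NPV with a very low positive predictive value follows from Bayes’ theorem: when moderate or severe rejection is rare among the patients tested, most positive results are false positives, while almost all negative results are true negatives. The sketch below computes both values from sensitivity, specificity and prevalence; the function name is hypothetical and the inputs in the example are illustrative assumptions, not CARGO results.

```python
def predictive_values(sensitivity: float, specificity: float, prevalence: float):
    """Return (PPV, NPV) for a binary test via Bayes' theorem."""
    tp = sensitivity * prevalence              # true positives per unit population
    fp = (1 - specificity) * (1 - prevalence)  # false positives
    tn = specificity * (1 - prevalence)        # true negatives
    fn = (1 - sensitivity) * prevalence        # false negatives
    return tp / (tp + fp), tn / (tn + fn)


if __name__ == "__main__":
    # Hypothetical inputs: a modest test applied where rejection prevalence is 2 %.
    ppv, npv = predictive_values(sensitivity=0.8, specificity=0.5, prevalence=0.02)
    print(f"PPV = {ppv:.1%}, NPV = {npv:.1%}")
```

Even with only 50 % specificity, a low prevalence pushes the NPV above 99 % while the PPV stays in the single digits, which is the pattern reported for AlloMap®.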

Since the main CARGO report [7], relevant papers have been published by Bernstein et al. [16], Starling et al. [17] and Mehra et al. [18]. Bernstein et al. [16] reanalyzed CARGO data to investigate in greater detail the CARGO finding that test scores for grade 1B EMBs were similar to those for grades ≥3A. This was confirmed, as were the significant differences in mean score between grades ≥3A (32 ± 0.9) and grades 0 (25.3 ± 0.5), 1A (23.8 ± 2.1) and 2 (26.9 ± 1.5) (p = 0.00001, 0.001 and 0.01, respectively). The similarity between grade 1B and grades ≥3A, which was seen not only in test scores but also in gene expression profiles, is in keeping with the difficulty, noted by Stewart et al. [4], in distinguishing histologically between grade 1B and grade 3B EMBs.

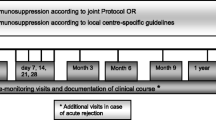

In keeping with the time dependence of AlloMap® scores noted above, and on the basis of early experience of its clinical use, Starling et al. [17] recommended that rejection surveillance with this test employ different thresholds for different follow-up periods: 20 for months 3–6, 30 for months 7–12 and 34 for >12 months post-transplant. The protocol they used at follow-up visits began with history taking, physical examination and assessment of graft function by echocardiography. If these procedures suggested rejection, heart failure or impaired left ventricular function, EMB was performed; if not, an AlloMap® test was carried out, and EMB was performed only if the appropriate score threshold was exceeded.
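The surveillance protocol described by Starling et al. can be sketched as a decision rule. This is a minimal illustration, not a clinical tool. The function names are hypothetical; non-integer months are assigned to the period in which they fall (for example, 6.5 months is treated as part of months 7–12); and “exceeded” is read as strictly greater than the threshold. Because the protocol defines no threshold before month 3, the sketch refuses to decide in that case.

```python
from typing import Optional


def allomap_threshold(months_post_ht: float) -> Optional[int]:
    """Score threshold by follow-up period, following Starling et al. [17].
    Returns None before month 3, for which no threshold is given."""
    if months_post_ht < 3:
        return None
    if months_post_ht <= 6:
        return 20
    if months_post_ht <= 12:
        return 30
    return 34


def needs_biopsy(clinical_suspicion: bool, months_post_ht: float,
                 allomap_score: Optional[float] = None) -> bool:
    """Hypothetical follow-up decision rule sketched from the protocol above."""
    if clinical_suspicion:
        # Signs of rejection, heart failure or impaired LV function -> EMB.
        return True
    threshold = allomap_threshold(months_post_ht)
    if threshold is None or allomap_score is None:
        raise ValueError("protocol not defined for this case")
    return allomap_score > threshold  # 'exceeded' read as strictly greater


if __name__ == "__main__":
    print(needs_biopsy(False, 9, allomap_score=32))   # above 30 -> biopsy
    print(needs_biopsy(False, 24, allomap_score=32))  # below 34 -> no biopsy
```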

Mehra et al. [18] carried out a nested case-control study of 104 HT patients enrolled in the CARGO study, all with a baseline EMB grade of 0 or 1A. The baseline AlloMap® scores of “controls” who remained rejection-free for the following 12 weeks were significantly lower than those of patients who suffered grade ≥3A rejection within 12 weeks (23.9 ± 7.1 vs 27.4 ± 6.3, p = 0.01), and the gap between non-rejectors and rejectors was wider in the first 6 months post-HT (22.4 ± 7.5 vs 28.4 ± 4.9, p < 0.001). In this early period, no patient who went on to reject within 12 weeks had a score below 20. Furthermore, mRNA measurements of genes not included in the AlloMap® panel suggested that pathways regulating T cell homeostasis and corticosteroid sensitivity are associated with future acute cellular rejection and are measurably active before rejection becomes histologically detectable.

Direct comparison of AlloMap-based monitoring with EMB-based monitoring among patients at low risk of rejection (clinically stable and with a left ventricular ejection fraction (LVEF) ≥45 %) was undertaken in the Invasive Monitoring Attenuation through Gene Expression (IMAGE) trial [19]. This trial randomized 602 patients who were between 6 months and 5 years post-HT at baseline to either AlloMap® or EMB monitoring (in both cases together with clinical examination and echocardiography); median follow-up was 19 months. The 2-year cumulative incidence of the composite primary endpoint (rejection with haemodynamic compromise, graft dysfunction due to other causes, death or retransplantation) was no greater among AlloMap-monitored patients than among EMB-monitored patients (14.5 vs 15.3 %), and all-cause death rates were likewise similar (6.3 and 5.6 %). EMB-monitored patients underwent an average of 3.0 EMBs per person-year, as against 0.5 for AlloMap-monitored patients, in whom EMBs were prompted by clinical or echocardiographic signs of rejection or by AlloMap® scores above a threshold initially set at 30 and later raised to 34. In 6 of the 34 episodes of rejection among AlloMap-monitored patients, the only initial indication of rejection was the AlloMap® score. The main limitation of this study was its restriction to low-risk patients, imposed not only through the stability and ejection fraction criteria but also by the exclusion of patients who had undergone HT less than 6 months previously [20]. Additionally, the change in the AlloMap® threshold during the trial prevented evaluation of a single, well-defined cut-off. A further weakness was the lack of blinding, which may have influenced clinical decisions.

Kobashigawa et al. [21] have recently published the results of an AlloMap-EMB comparison that to some extent complemented IMAGE by enrolling its 60 participants between 55 days post-HT (when GEP becomes valid) and 6 months post-HT. This Early Invasive Monitoring Attenuation through Gene Expression (EIMAGE) trial was a single-centre randomized trial assessing the safety and efficacy of GEP in comparison with EMB between 55 days and 18 months post-HT. In the GEP arm, EMB was performed if indicated by clinical or echocardiographic findings or if the GEP score was ≥30 between 2 and 6 months or ≥34 at later times. As in IMAGE, participants were required to be clinically stable and to have normal allograft function (here defined as LVEF ≥50 %). The coprimary outcome variables were (a) the composite of death, retransplantation, rejection with haemodynamic compromise or graft dysfunction at any time between baseline and 18 months post-HT and (b) the change in maximal intimal thickness (MIT), measured by intravascular ultrasound (IVUS), between baseline and 12 months post-HT. The GEP and EMB groups did not differ significantly in either of these outcome variables, nor in cardiac index at 1 year, LVEF at 18 months or overall survival (100 % in both groups). The numbers of EMBs performed in the GEP and EMB groups were 42 and 253, respectively, a ratio very similar to that in IMAGE. The main weakness of EIMAGE was its small sample size. Like IMAGE, it was also limited to low-risk patients (only 60 % of the patients evaluated for inclusion were finally included). Together, these factors meant that there were only four EMB-proven episodes of rejection and only eight primary endpoint (a) events (including five cases of haemodynamic compromise without EMB-proven rejection as the first event, a reflection of the limitations of EMB).
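The statement that the EMB ratio in EIMAGE resembles that in IMAGE can be checked with the figures quoted above. The IMAGE figures are rates per person-year, whereas the EIMAGE figures are total counts, so this comparison assumes that the two EIMAGE arms were of broadly similar size and follow-up.

```latex
\text{IMAGE: } \frac{3.0\ \text{EMBs per person-year (EMB arm)}}{0.5\ \text{EMBs per person-year (GEP arm)}} = 6.0
\qquad
\text{EIMAGE: } \frac{253\ \text{EMBs (EMB arm)}}{42\ \text{EMBs (GEP arm)}} \approx 6.0
```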

Having observed informally that the AlloMap® scores of individual patients seemed to remain quite stable, Deng and coworkers investigated score variability and the prognostic significance of its magnitude [22, 23]. Score stability was formally corroborated in a study of 1135 GEP results from 238 patients without clinical signs or symptoms of rejection, in which within-patient score variability was significantly less than between-patient variability [22]. Then, in a sub-analysis of 369 IMAGE participants [23], it was found that, after adjustment for significant non-GEP variables (younger age, shorter time since HT and race), the probability of suffering the IMAGE composite primary outcome within the next 12 months was significantly associated (hazard ratio 1.76) with the standard deviation of inverse-logit-transformed past AlloMap® scores, but not with the scores themselves or with whether they exceeded a threshold of 34.
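The two quantities involved, a patient’s score variability and the contrast between within-patient and between-patient variability, can be illustrated with the Python standard library. This is a crude descriptive sketch, not the statistical model used in [22] or [23]: the function names and example scores are hypothetical, and the exact transformation applied to the scores in [23] is not reproduced here; it can be supplied through the `transform` argument.

```python
from statistics import mean, pvariance, stdev


def score_sd(scores, transform=lambda s: s):
    """Sample SD of a patient's past scores, optionally after a transform.
    Requires at least two scores."""
    values = [transform(s) for s in scores]
    return stdev(values)


def within_vs_between(scores_by_patient):
    """Mean within-patient variance versus the variance of patient means.
    A descriptive comparison only, not a formal variance-components model."""
    within = mean(pvariance(s) for s in scores_by_patient.values())
    between = pvariance([mean(s) for s in scores_by_patient.values()])
    return within, between


if __name__ == "__main__":
    # Hypothetical serial AlloMap-like scores for three patients.
    scores = {"A": [30, 32, 31], "B": [20, 21, 22], "C": [25, 26, 24]}
    print(within_vs_between(scores))
    print(score_sd(scores["A"]))
```

In this toy data set each patient’s scores cluster tightly while patient means differ widely, which is the pattern described in [22].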

The usefulness of GEP for detecting antibody-mediated rejection (AMR) is not known. Cadeiras et al. [24] performed a single-centre pilot study of 45 HT patients, five of whom had clinical signs of AMR. They found that patients with and without AMR differed in the expression of 388 genes belonging to more than 30 gene ontology categories related to cell metabolism, protein synthesis, immune response, apoptosis and cell proliferation. These observations suggest that HT patients with AMR may have a specific gene expression profile in PBMC, but further studies are needed.

The utility of AlloMap® for detecting CAV has also been explored. In one study [25], Yamani et al. found CAV to be associated with higher AlloMap® scores among 69 HT patients with ISHLT grade 0R or 1R who were evaluated for CAV by coronary angiography a mean of 35 months post-HT. After adjustment for differences in follow-up time and other potential confounders, the CAV group had scores of 32.2 ± 3.9, as against 26.1 ± 6.5 in the non-CAV group (p < 0.001), and 70 % of patients in the CAV group had a score >30, as against 30 % in the non-CAV group. In another study of 67 patients whose AlloMap® scores were obtained a mean of 34 months after HT, higher scores were found among the 19 patients who had had evidence of early post-HT ischaemic injury [26]. These observations suggest that prior ischaemic injury may be one cause of false-positive AlloMap® tests.

Although the clinical use of GEP is not universally accepted in post-HT care, the current ISHLT Guidelines for the Care of Heart Transplant Recipients [27] consider that GEP with AlloMap® can be used to rule out the presence of acute cellular rejection of grade 2R or greater in appropriate low-risk patients between 6 months and 5 years after HT (recommendation class IIa, level of evidence C).

T Cell Function Assays

ImmuKnow

The ImmuKnow assay, also known as the Transplantation Immune Cell Function Assay (Cylex Inc., Columbia, MD, USA), is an FDA-approved commercial assay that measures ATP release by activated lymphocytes in peripheral blood as an index of immune responsiveness. In principle, the higher the assay value, the greater the risk of rejection, and the lower the value, the greater the risk of infection. ATP levels are considered high if ≥525 ng/mL, moderate if between 226 and 524 ng/mL and low if ≤225 ng/mL [28]. They have been investigated not only in relation to the incidence of infection, acute rejection, immunological quiescence and clinical stability, but also in relation to white blood cell count, absolute lymphocyte count, trough levels of cyclosporine, tacrolimus and mycophenolate mofetil, C-reactive protein levels and African-American ethnicity [26, 28].
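The three ATP bands quoted above translate directly into a classification function. In this minimal sketch the function name is hypothetical, and values falling between 225 and 226 ng/mL, which the published bands do not cover, are treated as moderate (an assumption). The example inputs are the median values reported by Israeli et al. [30] for infection, quiescence and rejection.

```python
def immuknow_category(atp_ng_per_ml: float) -> str:
    """Classify an ImmuKnow ATP value (ng/mL) with the cut-offs quoted above:
    low <= 225, moderate 226-524, high >= 525. Values strictly between 225
    and 226 are treated as moderate (an assumption)."""
    if atp_ng_per_ml <= 225:
        return "low"       # in principle, greater risk of infection
    if atp_ng_per_ml < 525:
        return "moderate"
    return "high"          # in principle, greater risk of rejection


if __name__ == "__main__":
    # Medians reported by Israeli et al. [30]: infection, quiescence, rejection.
    for atp in (129, 351, 619):
        print(atp, immuknow_category(atp))
```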

In a meta-analysis of 504 solid-organ transplant recipients attending ten US centres [29], statistical balance between the risks of infection and rejection was obtained at an ImmuKnow-measured ATP level of 280 ng/mL. This meta-analysis included only 86 HT patients, who together suffered only three rejection episodes and two infections. However, in a longitudinal study of 50 HT patients providing 327 samples, Israeli et al. [30] found that ATP levels during infection and during EMB-proven acute rejection (median values 129 and 619 ng/mL, respectively) differed significantly from those observed during clinical quiescence (median 351 ng/mL). By contrast, in a longitudinal study of 296 HT patients with a total of 864 ImmuKnow assays performed between 2 weeks and 10 years post-HT, Kobashigawa et al. [8] observed that although ATP levels were significantly lower among patients in whom infections were diagnosed within 1 month of the test than among those with no diagnosed infection (187 vs 280 ng/mL, p < 0.001), the levels recorded during rejection episodes did not differ significantly from those recorded during steady state; however, like the meta-analysis of Kowalski et al. [29], this study included only a small number of acute rejection episodes (8, as against 38 episodes of infection). In another small study (125 patients, 182 assays, three rejection episodes, eight infection episodes), Gupta et al. [31] observed no correlation between baseline ATP levels and either infection or rejection during the following 6 months, and found no useful ATP threshold for either increased risk of rejection or increased risk of infection. In a study of 56 HT patients providing 162 assays over 18 months, Heikal et al. [32] found that ATP levels were significantly lower during the 31 infection episodes occurring within 1 month of assay (p = 0.04), but observed no episodes of acute cellular rejection that would have allowed ImmuKnow to be evaluated for its detection. Furthermore, a meta-analysis of nine studies [33] found that ImmuKnow did not perform well in the diagnosis of either infection or rejection, except that its NPV for rejection (0.96) might allow it to be used, like AlloMap®, to rule out rejection.

The utility of the ImmuKnow assay for monitoring paediatric HT patients is similarly questionable. Macedo et al. [34] found a negative correlation between T cell ATP release and Epstein-Barr virus load, but Rossano et al. [35] found ImmuKnow results to be predictive of neither rejection nor infection.

With regard to outcomes other than infection or cellular rejection, Kobashigawa et al. [8] reported that ATP levels were related to AMR, but Heikal et al. [32] found that IL-5, but not ATP, was associated with AMR. Heikal et al. also reported that IL-8 and tumour necrosis factor-α, but not ATP, were related to CAV, whereas Cheng et al. [36] reported that ImmuKnow values measured 2 months post-HT were positively associated with the increases between months 2 and 12 in IVUS-measured average percent stenosis, plaque volume and MIT. However, the optimal ATP threshold for predicting an increase of ≥0.5 mm in MIT (406 ng/mL) had a sensitivity of only 66.7 %, although its specificity was 94.3 %.
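Sensitivity and specificity at a given threshold, as reported by Cheng et al., are simply the fractions of events and non-events that the threshold classifies correctly. The sketch below assumes that a value at or above the threshold counts as a positive prediction; the function name and the ATP and outcome values in the example are hypothetical and are not data from [36].

```python
def sens_spec_at_threshold(values, outcomes, threshold):
    """Sensitivity and specificity of the rule 'value >= threshold predicts the
    outcome'. `outcomes` is a sequence of booleans (True = event occurred)."""
    pairs = list(zip(values, outcomes))
    tp = sum(1 for v, o in pairs if o and v >= threshold)
    fn = sum(1 for v, o in pairs if o and v < threshold)
    tn = sum(1 for v, o in pairs if not o and v < threshold)
    fp = sum(1 for v, o in pairs if not o and v >= threshold)
    return tp / (tp + fn), tn / (tn + fp)


if __name__ == "__main__":
    # Hypothetical ATP values (ng/mL) and whether MIT increased by >= 0.5 mm.
    atp = [450, 420, 300, 380, 250, 410, 200]
    mit_increase = [True, True, True, False, False, False, False]
    sens, spec = sens_spec_at_threshold(atp, mit_increase, threshold=406)
    print(f"sensitivity {sens:.1%}, specificity {spec:.1%}")
```

Raising the threshold trades sensitivity for specificity; an “optimal” threshold is one chosen to balance the two for a given purpose.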

Enzyme-Linked Immunospot

The enzyme-linked immunospot (ELISPOT) assay detects cytokine secretion by individual antigen-reactive T cells: whereas an enzyme-linked immunosorbent assay (ELISA) for a cytokine determines the amount of cytokine produced, an ELISPOT assay determines the number of cells producing it [37, 38]. HT patients have been tested with ELISPOT assays in only a few small studies. In one [39], the activities of the direct and indirect antigen presentation pathways were quantified separately, using irradiated and fragmented donor spleen cells, respectively, in ELISPOT assays for PBMC secreting interferon-γ (IFN-γ) before, during and after acute rejection episodes. The direct presentation pathway was always present, the number of IFN-γ-producing cells increasing during rejection and decreasing after successful treatment. The indirect pathway also increased during rejection episodes when present, but was present in only 8 of the 18 episodes investigated, 5 of these 8 occurring more than 6 months post-HT. In another study [40], evaluation of the response to donor-HLA-derived peptides by IFN-γ ELISPOT assays and of alloantibodies by flow cytometry using HLA-coated beads showed a cellular or humoral response in 17 of 32 patients with CAV, as against only 4 of 33 CAV-free patients, although both pathways were active in only one patient. Further studies are needed to determine the usefulness of such assays in everyday clinical practice.

Antibody Monitoring

Anti-HLA Antibodies

The development of anti-HLA antibodies after HT has been associated with allograft injury, cellular rejection, AMR, CAV and poor survival [9, 41–44]. Solid-phase immunoassays have made it possible to quantify even low levels of antibodies against donor HLA (donor-specific antibodies, DSA) and to track changes in them, although technical issues, including assay standardization, remain to be resolved [45]. As in the case of kidney grafts [46], the ability to activate complement may be important in distinguishing clinically more relevant from less relevant DSA [45]: in a large retrospective study of 565 pre-HT sera from patients with a negative pre-HT crossmatch to donor cells, C4d-fixing DSA were even more detrimental to survival than DSA in general [47], and in a retrospective study of 18 paediatric HT patients [48], Chin et al. found that the presence of C1q-fixing DSA immediately after transplantation perfectly discriminated between patients who did and did not develop AMR.

“Non-HLA” Antibodies

Antibodies against donor antigens other than HLA can also cause injury, although information in this area is relatively scant. Non-HLA antibodies can be directed against polymorphic non-HLA antigens such as major histocompatibility complex class I chain-related proteins (MIC). These antibodies bind to endothelium and cause apoptosis but not complement-mediated injury [9]. Antibodies against endothelial cells have been associated with acute humoral rejection, CAV and poor graft survival [49]. Vimentin is the most abundant immunoreactive endothelial cell antigen, and in experimental models [50, 51] and clinical studies [52, 53], anti-vimentin antibodies have been associated with poor outcomes and CAV. Antibodies against MIC A have also been associated with poor allograft outcome in some studies [54, 55], but not in others [56]. Other antibody targets related to rejection or poor graft outcome include myosin [57, 58], the angiotensin type 1 receptor [59] and endothelial cell cytoskeletal proteins (actin, tubulin and cytokeratin) [60].

Current Consensus

In spite of the growing evidence for the importance of antibody monitoring after HT, the optimal monitoring schedule and the appropriate response to results have not yet been established [9]. Kobashigawa et al. [61], reporting on the ISHLT Consensus Conference on AMR in Heart Transplantation, stated that circulating DSA were no longer believed to be required for diagnosis of AMR (since, for example, DSA might be immobilized on the allograft), but that when AMR is suspected, blood should nevertheless be tested for DSA against HLA class I and II antigens and, possibly, if DSA are absent, for non-HLA antibodies. The conference recommended testing for DSA by solid-phase and/or cell-based assays, with quantification if DSA are detected, 2 weeks and 1, 3, 6 and 12 months after HT and annually thereafter, as well as whenever AMR is suspected on clinical grounds and after major decreases in immunosuppressive medication, to detect any increase in alloimmune activation.
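The routine part of the consensus-conference schedule can be turned into concrete test dates. In this hypothetical sketch the function names and the five-year default horizon are assumptions. Each monthly time point falls on the same day of the month as the transplant, or on the last day of a shorter month. Event-driven tests (suspected AMR, major reductions in immunosuppression) are not scheduled and must be added separately.

```python
import calendar
from datetime import date, timedelta


def add_months(d: date, months: int) -> date:
    """Same day of the month `months` later, clamped to that month's last day."""
    years, month_index = divmod(d.month - 1 + months, 12)
    year, month = d.year + years, month_index + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(d.day, last_day))


def routine_dsa_dates(ht_date: date, years: int = 5) -> list:
    """Routine DSA test dates: 2 weeks, 1, 3, 6 and 12 months, then yearly."""
    dates = [ht_date + timedelta(weeks=2)]
    dates += [add_months(ht_date, m) for m in (1, 3, 6, 12)]
    dates += [add_months(ht_date, 12 * y) for y in range(2, years + 1)]
    return dates


if __name__ == "__main__":
    for d in routine_dsa_dates(date(2015, 1, 31), years=3):
        print(d.isoformat())
```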

The antibody measurement recommendations of a recent American Heart Association scientific statement on AMR after HT are as follows [9]. Solid-phase and/or cell-based assays of DSA should be performed during the first 90 days after transplantation or when AMR is suspected (recommendation class I, level of evidence C). It is reasonable to perform solid-phase and/or cell-based assays of DSA for surveillance 3, 6 and 12 months after transplantation and annually thereafter or in accordance with the centre’s routine surveillance protocol (recommendation class IIa, level of evidence C). Determination of non-HLA antibodies against targets such as endothelial cells, vimentin, MIC A and MIC B may be considered when anti-HLA antibodies are absent and AMR is suspected (recommendation class IIb, level of evidence C).

Donor-Derived Cell-Free DNA

Cell-free DNA (cfDNA) consists of short fragments of double-stranded DNA released from tissues as a result of either normal physiological cell turnover or pathological apoptotic or necrotic cell death. It is found in circulating blood plasma, typically at levels of around 3 ng/mL. It has been used for some years for non-invasive prenatal testing [62, 63], is being intensively investigated in oncology [64] and is now being considered for use in transplantation [65–67]. Just as its use in prenatal testing depends on the fraction of cfDNA in the mother’s circulation that is of foetal origin, so its use to detect graft rejection depends on the fraction in the recipient’s circulation that originates in the graft. This donor-derived cfDNA (dd-cfDNA) was first shown to be detectable in recipients of sex-mismatched grafts [65]. Later work confirmed the hypothesis that dd-cfDNA levels correlate with graft rejection [68, 69], rising from less than 1 % to around 5 % of total cfDNA during rejection episodes, and the development of massively parallel sequencing technology has potentially made dd-cfDNA detection and measurement available for all graft recipients, not just those with grafts from donors of the opposite sex [10, 70]. Although shotgun sequencing [11, 70] and targeted amplification [71] have the drawback of requiring both recipient and donor genotypes, more recent methods using droplet digital PCR of single-nucleotide polymorphisms with high minor allele frequencies seem promising [72].
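The principle behind genotype-based dd-cfDNA quantification can be illustrated with allele counts at informative single-nucleotide polymorphisms, that is, sites at which the recipient is homozygous and the donor carries an allele the recipient lacks. This is a simplified sketch under stated assumptions, not the pipeline of any published method. Alleles are labelled A and B, both genotypes are assumed known, and sequencing error and allele-specific bias are ignored; the class and function names are hypothetical. Reads carrying the “foreign” allele can come only from donor DNA, and if the donor is heterozygous only half of the donor DNA carries that allele, so its count is doubled.

```python
from dataclasses import dataclass


@dataclass
class SnpCounts:
    recipient: str  # recipient genotype at this SNP: "AA", "AB" or "BB"
    donor: str      # donor genotype at this SNP: "AA", "AB" or "BB"
    a_reads: int    # cfDNA reads carrying allele A
    b_reads: int    # cfDNA reads carrying allele B


def donor_fraction(snps) -> float:
    """Pooled dd-cfDNA fraction estimated over informative SNPs.
    Returns NaN if no SNP is informative."""
    donor_signal = 0.0
    total_reads = 0
    for s in snps:
        if s.recipient == "AA" and s.donor in ("AB", "BB"):
            foreign = s.b_reads
        elif s.recipient == "BB" and s.donor in ("AA", "AB"):
            foreign = s.a_reads
        else:
            continue  # uninformative: recipient heterozygous or same genotype
        # A heterozygous donor carries the foreign allele on half of its DNA.
        scale = 2.0 if s.donor == "AB" else 1.0
        donor_signal += scale * foreign
        total_reads += s.a_reads + s.b_reads
    return donor_signal / total_reads if total_reads else float("nan")


if __name__ == "__main__":
    # Hypothetical counts; the third SNP is uninformative and is skipped.
    snps = [SnpCounts("AA", "BB", 990, 10), SnpCounts("AA", "AB", 995, 5),
            SnpCounts("AB", "AA", 500, 500)]
    print(f"dd-cfDNA fraction: {donor_fraction(snps):.2%}")
```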

MicroRNAs

MicroRNAs (miRNAs) are endogenous RNA molecules some 18–25 nucleotides long that, instead of encoding proteins, act as post-transcriptional regulators of gene expression. They are present not only inside cells but also, in a highly stable form, in the bloodstream, which suggests that they may act on distant tissues. Although in principle they have great potential as biomarkers of protein dysregulation [73], their interpretation is complicated. Nevertheless, in the past few years there have been reports of altered levels of a number of miRNAs during rejection of transplanted livers [74], kidneys [75] and hearts [12, 13]. In the case of heart grafts, Öhman and coworkers [12] identified seven miRNAs with higher levels during rejection than before or after it, while Van Huyen et al. [13] reported that joint consideration of the levels of miR-10a, miR-31, miR-92a and miR-155 discriminated between patients with and without rejection. It is to be hoped that these findings will not only be confirmed by subsequent studies but will also shed light on the pathophysiology of heart graft rejection.

Conclusions

The development of sensitive, objective, non-invasive immune biomarkers of allograft rejection has been a major goal in HT, and in recent years there has been great progress with several different approaches. GEP appears to be useful for ruling out moderate to severe acute cellular rejection in low-risk patients. Antibody monitoring is recommended at least during the first year after HT and whenever AMR is suspected. T cell function assays have been investigated, but their usefulness in HT remains controversial. Newer monitoring tools, such as dd-cfDNA and miRNAs, seem promising both for individualizing immunosuppressive therapy and for better understanding the mechanisms of rejection.

References

McMurray JJ, Adamopoulos S, Anker SD, Auricchio A, Bohm M, Dickstein K, et al. ESC guidelines for the diagnosis and treatment of acute and chronic heart failure 2012: the Task Force for the Diagnosis and Treatment of Acute and Chronic Heart Failure 2012 of the European Society of Cardiology. Developed in collaboration with the Heart Failure Association (HFA) of the ESC. Eur J Heart Fail. 2012;14(8):803–69.

Yancy CW, Jessup M, Bozkurt B, Butler J, Casey Jr DE, Drazner MH, et al. 2013 ACCF/AHA guideline for the management of heart failure: a report of the American College of Cardiology Foundation/American Heart Association Task Force on Practice Guidelines. J Am Coll Cardiol. 2013;62(16):e147–239.

Lund LH, Edwards LB, Kucheryavaya AY, Benden C, Christie JD, Dipchand AI, et al. The registry of the International Society for Heart and Lung Transplantation: thirty-first official adult heart transplant report—2014; focus theme: retransplantation. J Heart Lung Transplant. 2014;33(10):996–1008.

Stewart S, Winters GL, Fishbein MC, Tazelaar HD, Kobashigawa J, Abrams J, et al. Revision of the 1990 working formulation for the standardization of nomenclature in the diagnosis of heart rejection. J Heart Lung Transplant. 2005;24(11):1710–20.

Berry GJ, Angelini A, Burke MM, Bruneval P, Fishbein MC, Hammond E, et al. The ISHLT working formulation for pathologic diagnosis of antibody-mediated rejection in heart transplantation: evolution and current status (2005–2011). J Heart Lung Transplant. 2011;30(6):601–11.

Hunt SA, Haddad F. The changing face of heart transplantation. J Am Coll Cardiol. 2008;52(8):587–98.

Deng MC, Eisen HJ, Mehra MR, Billingham M, Marboe CC, Berry G, et al. Noninvasive discrimination of rejection in cardiac allograft recipients using gene expression profiling. Am J Transplant. 2006;6(1):150–60.

Kobashigawa JA, Kiyosaki KK, Patel JK, Kittleson MM, Kubak BM, Davis SN, et al. Benefit of immune monitoring in heart transplant patients using ATP production in activated lymphocytes. J Heart Lung Transplant. 2010;29(5):504–8.

Colvin MM, Cook JL, Chang P, Francis G, Hsu DT, Kiernan MS, et al. Antibody-mediated rejection in cardiac transplantation: emerging knowledge in diagnosis and management: a scientific statement from the American Heart Association. Circulation. 2015;131(18):1608–39.

Daly KP. Circulating donor-derived cell-free DNA: a true biomarker for cardiac allograft rejection? Ann Transl Med. 2015;3(4):47.

De Vlaminck I, Valantine HA, Snyder TM, Strehl C, Cohen G, Luikart H, et al. Circulating cell-free DNA enables noninvasive diagnosis of heart transplant rejection. Sci Transl Med. 2014;6(241):241ra77.

Sukma Dewi I, Torngren K, Gidlof O, Kornhall B, Ohman J. Altered serum miRNA profiles during acute rejection after heart transplantation: potential for non-invasive allograft surveillance. J Heart Lung Transplant. 2013;32(4):463–6.

Duong Van Huyen JP, Tible M, Gay A, Guillemain R, Aubert O, Varnous S, et al. MicroRNAs as non-invasive biomarkers of heart transplant rejection. Eur Heart J. 2014;35(45):3194–202.

Crespo-Leiro MG, Zuckermann A, Bara C, Mohacsi P, Schulz U, Boyle A, et al. Concordance among pathologists in the second Cardiac Allograft Rejection Gene Expression Observational Study (CARGO II). Transplantation. 2012;94(11):1172–7.

Billingham ME, Cary NR, Hammond ME, Kemnitz J, Marboe C, McCallister HA, et al. A working formulation for the standardization of nomenclature in the diagnosis of heart and lung rejection: Heart Rejection Study Group. The International Society for Heart Transplantation. J Heart Transplant. 1990;9(6):587–93.

Bernstein D, Williams GE, Eisen H, Mital S, Wohlgemuth JG, Klingler TM, et al. Gene expression profiling distinguishes a molecular signature for grade 1B mild acute cellular rejection in cardiac allograft recipients. J Heart Lung Transplant. 2007;26(12):1270–80.

Starling RC, Pham M, Valantine H, Miller L, Eisen H, Rodriguez ER, et al. Molecular testing in the management of cardiac transplant recipients: initial clinical experience. J Heart Lung Transplant. 2006;25(12):1389–95.

Mehra MR, Kobashigawa JA, Deng MC, Fang KC, Klingler TM, Lal PG, et al. Transcriptional signals of T-cell and corticosteroid-sensitive genes are associated with future acute cellular rejection in cardiac allografts. J Heart Lung Transplant. 2007;26(12):1255–63.

Pham MX, Teuteberg JJ, Kfoury AG, Starling RC, Deng MC, Cappola TP, et al. Gene-expression profiling for rejection surveillance after cardiac transplantation. N Engl J Med. 2010;362(20):1890–900.

Jarcho J. Fear of rejection, monitoring the heart-transplant recipient. N Engl J Med. 2010;362(20):1932–3.

Kobashigawa J, Patel J, Azarbal B, Kittleson M, Chang D, Czer L, et al. Randomized pilot trial of gene expression profiling versus heart biopsy in the first year after heart transplant: early invasive monitoring attenuation through gene expression trial. Circ Heart Fail. 2015;8(3):557–64.

Deng MC, Alexander G, Wolters H, Shahzad K, Cadeiras M, Hicks A, et al. Low variability of intraindividual longitudinal leukocyte gene expression profiling cardiac allograft rejection scores. Transplantation. 2010;90(4):459–61.

Deng MC, Elashoff B, Pham MX, Teuteberg JJ, Kfoury AG, Starling RC, et al. Utility of gene expression profiling score variability to predict clinical events in heart transplant recipients. Transplantation. 2014;97(6):708–14.

Cadeiras M, Burke E, Dedrick R, Gangadin A, Latif F, Shahzad K, et al. Gene expression profiles of patients with antibody-mediated rejection after cardiac transplantation. J Heart Lung Transplant. 2008;27(8):932–4.

Yamani MH, Taylor DO, Rodriguez ER, Cook DJ, Zhou L, Smedira N, et al. Transplant vasculopathy is associated with increased AlloMap gene expression score. J Heart Lung Transplant. 2007;26(4):403–6.

Yamani MH, Taylor DO, Haire C, Smedira N, Starling RC. Post-transplant ischemic injury is associated with up-regulated AlloMap gene expression. Clin Transpl. 2007;21(4):523–5.

Costanzo MR, Dipchand A, Starling R, Anderson A, Chan M, Desai S, et al. The International Society of Heart and Lung Transplantation Guidelines for the care of heart transplant recipients. J Heart Lung Transplant. 2010;29(8):914–56.

Andrikopoulou E, Mather PJ. Current insights: use of Immuknow in heart transplant recipients. Prog Transplant. 2014;24(1):44–50.

Kowalski RJ, Post DR, Mannon RB, Sebastian A, Wright HI, Sigle G, et al. Assessing relative risks of infection and rejection: a meta-analysis using an immune function assay. Transplantation. 2006;82(5):663–8.

Israeli M, Ben-Gal T, Yaari V, Valdman A, Matz I, Medalion B, et al. Individualized immune monitoring of cardiac transplant recipients by noninvasive longitudinal cellular immunity tests. Transplantation. 2010;89(8):968–76.

Gupta S, Mitchell JD, Markham DW, Mammen PP, Patel PC, Kaiser PA, et al. Utility of the Cylex assay in cardiac transplant recipients. J Heart Lung Transplant. 2008;27(8):817–22.

Heikal NM, Bader FM, Martins TB, Pavlov IY, Wilson AR, Barakat M, et al. Immune function surveillance: association with rejection, infection and cardiac allograft vasculopathy. Transplant Proc. 2012.

Ling X, Xiong J, Liang W, Schroder PM, Wu L, Ju W, et al. Can immune cell function assay identify patients at risk of infection or rejection? A meta-analysis. Transplantation. 2012;93(7):737–43.

Macedo C, Zeevi A, Bentlejewski C, Popescu I, Green M, Rowe D, et al. The impact of EBV load on T-cell immunity in pediatric thoracic transplant recipients. Transplantation. 2009;88(1):123–8.

Rossano JW, Denfield SW, Kim JJ, Price JF, Jefferies JL, Decker JA, et al. Assessment of the Cylex ImmuKnow cell function assay in pediatric heart transplant patients. J Heart Lung Transplant. 2009;28(1):26–31.

Cheng R, Azarbal B, Yung A, Chang DH, Patel JK, Kobashigawa JA. Elevated immune monitoring early after cardiac transplantation is associated with increased plaque progression by intravascular ultrasound. Clin Transpl. 2015;29(2):103–9.

Sho M, Sandner SE, Najafian N, Salama AD, Dong V, Yamada A, et al. New insights into the interactions between T-cell costimulatory blockade and conventional immunosuppressive drugs. Ann Surg. 2002;236(5):667–75.

Dinavahi R, Heeger PS. T-cell immune monitoring in organ transplantation. Curr Opin Organ Transplant. 2008;13(4):419–24.

van Besouw NM, Zuijderwijk JM, Vaessen LM, Balk AH, Maat AP, van der Meide PH, et al. The direct and indirect allogeneic presentation pathway during acute rejection after human cardiac transplantation. Clin Exp Immunol. 2005;141(3):534–40.

Poggio ED, Roddy M, Riley J, Clemente M, Hricik DE, Starling R, et al. Analysis of immune markers in human cardiac allograft recipients and association with coronary artery vasculopathy. J Heart Lung Transplant. 2005;24(10):1606–13.

Tambur AR, Pamboukian SV, Costanzo MR, Herrera ND, Dunlap S, Montpetit M, et al. The presence of HLA-directed antibodies after heart transplantation is associated with poor allograft outcome. Transplantation. 2005;80(8):1019–25.

Vasilescu ER, Ho EK, de la Torre L, Itescu S, Marboe C, Cortesini R, et al. Anti-HLA antibodies in heart transplantation. Transpl Immunol. 2004;12(2):177–83.

Zhang Q, Cecka JM, Gjertson DW, Ge P, Rose ML, Patel JK, et al. HLA and MICA: targets of antibody-mediated rejection in heart transplantation. Transplantation. 2011;91(10):1153–8.

Ho EK, Vlad G, Vasilescu ER, de la Torre L, Colovai AI, Burke E, et al. Pre- and posttransplantation allosensitization in heart allograft recipients: major impact of de novo alloantibody production on allograft survival. Hum Immunol. 2011;72(1):5–10.

Tait BD, Susal C, Gebel HM, Nickerson PW, Zachary AA, Claas FH, et al. Consensus guidelines on the testing and clinical management issues associated with HLA and non-HLA antibodies in transplantation. Transplantation. 2013;95(1):19–47.

Thammanichanond D, Mongkolsuk T, Rattanasiri S, Kantachuvesiri S, Worawichawong S, Jirasiritham S, et al. Significance of C1q-fixing donor-specific antibodies after kidney transplantation. Transplant Proc. 2014;46(2):368–71.

Smith JD, Hamour IM, Banner NR, Rose ML. C4d fixing, luminex binding antibodies—a new tool for prediction of graft failure after heart transplantation. Am J Transplant. 2007;7(12):2809–15.

Chin C, Chen G, Sequeria F, Berry G, Siehr S, Bernstein D, et al. Clinical usefulness of a novel C1q assay to detect immunoglobulin G antibodies capable of fixing complement in sensitized pediatric heart transplant patients. J Heart Lung Transplant. 2011;30(2):158–63.

Fredrich R, Toyoda M, Czer L, Galfayan K, Galera O, Trento A, et al. The clinical significance of antibodies to human vascular endothelial cells after cardiac transplantation. Transplantation. 1999;67:385–91.

Mahesh B, Leong HS, McCormack A, Sarathchandra P, Holder A, Rose ML. Autoantibodies to vimentin cause accelerated rejection of cardiac allografts. Am J Pathol. 2007;170(4):1415–27.

Leong HS, Mahesh BM, Day JR, Smith JD, McCormack AD, Ghimire G, et al. Vimentin autoantibodies induce platelet activation and formation of platelet-leukocyte conjugates via platelet-activating factor. J Leukoc Biol. 2008;83(2):263–71.

Jurcevic S, Ainsworth M, Pomerance A, Smith J, Robinson D, Dunn M, et al. Antivimentin antibodies are an independent predictor of transplant-associated coronary artery disease after cardiac transplantation. Transplantation. 2001;71:886–92.

Fhied C, Kanangat S, Borgia JA. Development of a bead-based immunoassay to routinely measure vimentin autoantibodies in the clinical setting. J Immunol Methods. 2014;407:9–14.

Kauke T, Kaczmarek I, Dick A, Schmoeckel M, Deutsch MA, Beiras-Fernandez A, et al. Anti-MICA antibodies are related to adverse outcome in heart transplant recipients. J Heart Lung Transplant. 2009;28(4):305–11.

Suarez-Alvarez B, Lopez-Vazquez A, Diaz-Pena R, Diaz-Molina B, Blanco-Garcia RM, Alvarez-Lopez MR, et al. Post-transplant soluble MICA and MICA antibodies predict subsequent heart graft outcome. Transpl Immunol. 2006;17(1):43–6.

Smith JD, Brunner VM, Jigjidsuren S, Hamour IM, McCormack AM, Banner NR, et al. Lack of effect of MICA antibodies on graft survival following heart transplantation. Am J Transplant. 2009;9(8):1912–9.

Warraich RS, Pomerance A, Stanley A, Banner NR, Dunn MJ, Yacoub MH. Cardiac myosin autoantibodies and acute rejection after heart transplantation in patients with dilated cardiomyopathy. Transplantation. 2000;69(8):1609–17.

Morgun A, Shulzhenko N, Unterkircher C, Diniz R, Pereira A, Silva M, et al. Pre- and post-transplant anti-myosin and anti-heat shock protein antibodies and cardiac transplant outcome. J Heart Lung Transplant. 2004;23:204–9.

Reinsmoen NL, Lai CH, Mirocha J, Cao K, Ong G, Naim M, et al. Increased negative impact of donor HLA-specific together with non-HLA-specific antibodies on graft outcome. Transplantation. 2014;97(5):595–601.

Alvarez-Marquez A, Aguilera I, Blanco RM, Pascual D, Encarnacion-Carrizosa M, Alvarez-Lopez MR, et al. Positive association of anticytoskeletal endothelial cell antibodies and cardiac allograft rejection. Hum Immunol. 2008;69(3):143–8.

Kobashigawa J, Crespo-Leiro MG, Ensminger SM, Reichenspurner H, Angelini A, Berry G, et al. Report from a consensus conference on antibody-mediated rejection in heart transplantation. J Heart Lung Transplant. 2011;30(3):252–69.

Chitty LS. Use of cell-free DNA to screen for Down’s syndrome. N Engl J Med. 2015;372(17):1666–7.

Norton ME, Jacobsson B, Swamy GK, Laurent LC, Ranzini AC, Brar H, et al. Cell-free DNA analysis for noninvasive examination of trisomy. N Engl J Med. 2015;372(17):1589–97.

Esposito A, Bardelli A, Criscitiello C, Colombo N, Gelao L, Fumagalli L, et al. Monitoring tumor-derived cell-free DNA in patients with solid tumors: clinical perspectives and research opportunities. Cancer Treat Rev. 2014;40(5):648–55.

Lo YM, Tein MS, Pang CC, Yeung CK, Tong KL, Hjelm NM. Presence of donor-specific DNA in plasma of kidney and liver-transplant recipients. Lancet. 1998;351(9112):1329–30.

Lo YM. Transplantation monitoring by plasma DNA sequencing. Clin Chem. 2011;57(7):941–2.

Gielis EM, Ledeganck KJ, De Winter BY, Del Favero J, Bosmans JL, Claas FH, et al. Cell-free DNA: an upcoming biomarker in transplantation. Am J Transplant. 2015;15(10):2541–51.

Gadi VK, Nelson JL, Boespflug ND, Guthrie KA, Kuhr CS. Soluble donor DNA concentrations in recipient serum correlate with pancreas-kidney rejection. Clin Chem. 2006;52(3):379–82.

Garcia Moreira V, Prieto Garcia B, Baltar Martin JM, Ortega Suarez F, Alvarez FV. Cell-free DNA as a noninvasive acute rejection marker in renal transplantation. Clin Chem. 2009;55(11):1958–66.

Snyder TM, Khush KK, Valantine HA, Quake SR. Universal noninvasive detection of solid organ transplant rejection. Proc Natl Acad Sci U S A. 2011;108(15):6229–34.

Hidestrand M, Tomita-Mitchell A, Hidestrand PM, Oliphant A, Goetsch M, Stamm K, et al. Highly sensitive noninvasive cardiac transplant rejection monitoring using targeted quantification of donor-specific cell-free deoxyribonucleic acid. J Am Coll Cardiol. 2014;63(12):1224–6.

Beck J, Bierau S, Balzer S, Andag R, Kanzow P, Schmitz J, et al. Digital droplet PCR for rapid quantification of donor DNA in the circulation of transplant recipients as a potential universal biomarker of graft injury. Clin Chem. 2013;59(12):1732–41.

Dimmeler S, Zeiher AM. Circulating microRNAs: novel biomarkers for cardiovascular diseases? Eur Heart J. 2010;31(22):2705–7.

Wei L, Gong X, Martinez OM, Krams SM. Differential expression and functions of microRNAs in liver transplantation and potential use as non-invasive biomarkers. Transpl Immunol. 2013;29(1–4):123–9.

Betts G, Shankar S, Sherston S, Friend P, Wood KJ. Examination of serum miRNA levels in kidney transplant recipients with acute rejection. Transplantation. 2014;97(4):e28–30.

Ethics declarations

Conflict of Interest

Maria G Crespo-Leiro has been a principal investigator in the CARGO II study.

Eduardo Barge-Caballero has no conflicts relevant to this disclosure.

Maria J Paniagua-Martin has no conflicts relevant to this disclosure.

Gonzalo Barge-Caballero has no conflicts relevant to this disclosure.

Natalia Suarez-Fuentetaja has no conflicts relevant to this disclosure.

Human and Animal Rights and Informed Consent

This article does not contain any studies with human or animal subjects performed by any of the authors.

Additional information

This article is part of the Topical Collection on Thoracic Transplantation

About this article

Cite this article

Crespo-Leiro, M.G., Barge-Caballero, E., Paniagua-Martin, M.J. et al. Update on Immune Monitoring in Heart Transplantation. Curr Transpl Rep 2, 329–337 (2015). https://doi.org/10.1007/s40472-015-0081-6
