Abstract

This paper investigates a portfolio optimization problem under uncertainty on the stock returns, where the manager seeks to achieve an appropriate trade-off between the expected portfolio return and the risk of loss. The uncertainty set consists of a finite set of scenarios occurring with equal probability. We introduce a new robustness criterion, called pw-robustness, which seeks to maximize the portfolio return in a proportion p of scenarios and guarantees a minimum return over all scenarios. We model this optimization problem as a mixed-integer programming problem. Through extensive numerical experiments, we identify the instances that can be solved to optimality in an acceptable time using off-the-shelf software. For the instances that cannot be solved to optimality within the time frame, we propose and test a heuristic that exhibits excellent practical performance in terms of computation time and solution quality for the problems we consider. This new criterion and our heuristic methods therefore exhibit great promise to tackle robustness problems when the uncertainty set consists of a large number of scenarios.

1 Introduction

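We consider the following portfolio optimization problem (F): a manager can invest in n assets and, for each asset \(i=1,\ldots ,n\), must determine the fraction \(x_i\) of the portfolio allocated to asset i, where \(x_i\) may not exceed a predetermined bound \(u_i\). Without uncertainty, and with \(r_i\) denoting the return of asset i, the problem is formulated as a linear programming problem:

$$\begin{aligned} \begin{array}{rrl} (F) \quad & \max & \displaystyle \sum _{i=1}^{n} r_i \, x_i \\ & {\hbox {s.t.}} & \displaystyle \sum _{i=1}^{n} x_i = 1, \\ & & 0 \le x_i \le u_i, \ \forall i. \end{array} \end{aligned}$$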

In practice, asset returns are subject to uncertainty. We consider a scenario-based approach to uncertainty, with a finite set of S possible scenarios: \(r_s=(r_{si})_{i=1, \ldots , n}\) with \(r_{si}\) representing the return of asset i for scenario \(s = 1, \ldots , S\). We will assume that scenarios are drawn from sampling or based on historical observations, and thus each scenario has equal probability 1 / S. Each feasible allocation x has then S possible portfolio returns associated with it.
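The scenario model above can be illustrated with a minimal Python sketch. The return matrix `r` and the allocation `x` are hypothetical values chosen for illustration, and `scenario_returns` is a helper name introduced here, not one used in the paper.

```python
def scenario_returns(r, x):
    """Return the S portfolio returns r_s^T x, one per scenario."""
    return [sum(r_si * x_i for r_si, x_i in zip(r_s, x)) for r_s in r]


# Hypothetical gross returns: 3 scenarios (rows) by 2 assets (columns).
r = [[1.02, 0.99],
     [0.97, 1.01],
     [1.05, 1.00]]
x = [0.5, 0.5]  # half of the portfolio in each asset

returns = scenario_returns(r, x)
# Each scenario has probability 1/S, so the expected return is a plain mean.
expected = sum(returns) / len(returns)
```

Each feasible allocation thus maps to a vector of S returns, and every criterion discussed below is a different way of summarizing that vector.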

The manager must select a solution x without knowing the future returns; he must therefore achieve a trade-off between the maximization of the expected portfolio value and the portfolio’s downside risk, i.e., the risk of the portfolio performing worse than expected. The extent of the trade-off depends on the manager’s degree of risk aversion: a highly risk-averse manager will prefer small downside risk, accepting a large performance degradation in expected value by selecting stocks with low volatility but also low expected return, while a more risk-loving manager will accept higher downside risk in order to achieve high expected performance using high-return, high-risk stocks.

The expected return and the risk criterion are two competing objectives, which can be modeled and aggregated in various ways; many variants have been proposed. Here, our goal is to propose a new criterion called pw-robustness. This criterion allows better management of risk by guaranteeing a minimum return over all scenarios while maximizing the portfolio return in the favorable (best) scenarios. We will see that this criterion has the advantage of allowing a very simple and direct dialogue with the decision maker.

In what follows, we formulate the pw-robustness criterion applied to portfolio optimization as a mixed-integer linear programming problem. In this context, our goal is to identify the instances for which this model cannot be solved exactly in an acceptable time frame. We also propose efficient approximate solution techniques.

The paper is structured as follows. In Sect. 2, we discuss existing formulations for portfolio optimization problems and provide a literature review. We present pw-robustness in Sect. 3. We discuss numerical experiments for small-size problems in Sect. 4, highlighting the performance and relevance of our mixed-integer linear problem. For large-size instances, we propose an effective heuristic in Sect. 5, which we test in new numerical experiments. Finally, Sect. 6 contains concluding remarks.

2 Classical measures

2.1 Traditional decision-making criteria in portfolio optimization

Pioneering work in portfolio optimization is due to Markowitz (1952), who investigates mean–variance models. Approaches to implementing Markowitz mean–variance models in practice, along with current trends, are presented in Kolm et al. (2014). A disadvantage of using variance as a risk measure is that it penalizes upside and downside risk equally, while the manager only wants to protect himself against downside risk (the portfolio performing worse than expected). Markowitz (1959) thus recommends using semi-variance as a more suitable risk measure. Upon determining the efficient frontier for the criteria of maximizing expected portfolio return and minimizing downside risk, the manager must select a specific solution achieving the desired compromise between the two competing criteria. Many extensions of the Markowitz model have been proposed, which differ mostly in the risk criterion. See for instance Cornuejols and Tütüncu (2007) for the corresponding optimization problems, Kandasamy (2008) for a review of the state of the art, as well as Mansini et al. (2003).

Instead of studying the set of efficient solutions, which may be very large, other approaches consist in optimizing one of the criteria while enforcing a bound on the other(s). This has led to a vast body of literature, combining various risk and return measures. We present below the criteria most commonly used, in particular VaR and CVaR. In what follows, we maximize expected return under constraints on the risk measures.

Value-at-risk (VaR) has received particular attention in the literature because it was developed by industry practitioners in the late 1990s as a way to address concerns about existing risk measures and was subsequently adopted by the Basel committee (Jorion 2006). For a given time horizon, such as a day, a week or a month, a \(100\,\alpha \%\) VaR (with \(0 \le \alpha \le 1\)) equal to \(r^{\texttt {VaR}}\) euros means that the portfolio may return less than \(r^{\texttt {VaR}}\) euros with probability \(\alpha \); in other words, a return of at least \(r^{\texttt {VaR}}\) euros is guaranteed with probability \((1-\alpha )\). A common model in the literature consists in finding the portfolio allocation that maximizes the portfolio’s expected return while guaranteeing a given VaR. If we denote by \(R_i\) the random variable representing the future return of stock i, this optimization problem can be formulated as:

$$\begin{aligned} \begin{array}{rl} \max & \displaystyle {\mathbb {E}}\left[ \sum _{i=1}^{n} R_i \, x_i \right] \\ {\hbox {s.t.}} & \displaystyle {\mathbb {P}}\left( \sum _{i=1}^{n} R_i \, x_i \ge r^{\texttt {VaR}} \right) \ge 1-\alpha , \\ & \displaystyle \sum _{i=1}^{n} x_i = 1, \\ & 0 \le x_i \le u_i, \ \forall i, \end{array} \end{aligned}$$

with \(r^{\texttt {VaR}}\) and \(\alpha \) two parameters determined by the decision maker.

Because the cumulative distribution function of a sum of random variables is typically very difficult to obtain in closed form except for special cases, data-driven models using a finite set of scenarios generated from sampling or historical data have received particular attention in the literature. Calafiore (2013) computes optimal portfolios directly from historical data by solving convex (typically linear) problems with a guaranteed shortfall probability, assuming i.i.d., stationary returns but without requiring the estimation of the distributions. This builds upon the theory of randomized convex programs (Calafiore and Campi 2006; Calafiore and Garatti 2008) and in particular random programs with violated constraints (Calafiore 2010). Cetinkaya and Thiele (2015) investigate an iterative, data-driven approximation to a problem where the investor maximizes the expected return of his portfolio subject to a quantile constraint, using historical data only. This approach only requires solving a series of linear programming problems. The authors compare this approach to a benchmark where Gaussian distributions are fitted to the returns. They extend the approach to the case where risk is measured by the inter-quartile range of the portfolio return. Feng et al. (2015) compare algorithms for solving portfolio optimization problems involving VaR, using mixed-integer programs and chance-constrained mathematical programs.

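Also in the case of a finite set of scenarios, Benati and Rizzi (2007) propose a binary formulation of this problem:

$$\begin{aligned} \begin{array}{rrl} (F_{\texttt {VaR}}) \quad & \max & \displaystyle \frac{1}{S} \sum _{s=1}^{S} \sum _{i=1}^{n} r_{si} \, x_i \\ & {\hbox {s.t.}} & \displaystyle \sum _{i=1}^{n} r_{si} \, x_i \ge r^{\texttt {min}} + (r^{\texttt {VaR}} - r^{\texttt {min}}) \, y_s, \ \forall s, \\ & & \displaystyle \sum _{s=1}^{S} y_s \ge (1-\alpha ) \, S, \\ & & \displaystyle \sum _{i=1}^{n} x_i = 1, \\ & & 0 \le x_i \le u_i, \ \forall i, \quad y_s \in \{0,1\}, \ \forall s, \end{array} \end{aligned}$$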

with \(r^{\texttt {min}}\) the smallest return. In this model, the binary variable \(y_s\) can equal 1 only if the return in scenario s is at least \(r^{\texttt {VaR}}\), so that the sum of the \(y_s\) counts the scenarios achieving that return. In a related model (Lin 2009), \(r^{\texttt {VaR}}\) is maximized while a given expected return is guaranteed.
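The counting role of the binary variables can be checked on a fixed allocation with a short Python sketch. It assumes the scenario returns of that allocation are already known; the function name `var_constraint_holds` and the sample data are illustrative and do not come from Benati and Rizzi (2007).

```python
def var_constraint_holds(returns, r_var, alpha):
    """Check that at least (1 - alpha) * S scenario returns reach r_var."""
    # y_s = 1 exactly when scenario s achieves the target return r_var.
    y = [1 if value >= r_var else 0 for value in returns]
    # A small tolerance guards against floating-point noise in (1 - alpha) * S.
    return sum(y) >= (1 - alpha) * len(returns) - 1e-9


# Hypothetical returns of one allocation over 10 equiprobable scenarios.
returns = [1.1] * 9 + [0.89]
meets_90 = var_constraint_holds(returns, r_var=1.0, alpha=0.10)
meets_95 = var_constraint_holds(returns, r_var=1.0, alpha=0.05)
```

Here nine scenarios out of ten reach the target, so the constraint holds at the 90% level but not at the 95% level.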

VaR suffers from two major drawbacks: the corresponding optimization problem is NP-hard, and the manager does not know how low his portfolio return may be in the \(100\alpha \%\) of cases for which the portfolio return falls below \(r^{\texttt {VaR}}\). The conditional value-at-risk (CVaR) risk measure was thus proposed to address those concerns. Incorporating a lower bound on the average portfolio return in the \(100\,\alpha \%\) worst cases limits the average size of portfolio losses.

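The problem where the expected return is maximized subject to a bound \(r^{\texttt {CVaR}}\) on CVaR, i.e., a lower bound on the average return over the \(T_{\alpha }\) worst scenarios, with a discrete set of scenarios can be rewritten as a linear problem and is formulated as:

$$\begin{aligned} \begin{array}{rrl} (F_{\texttt {CVaR}}) \quad & \max & \displaystyle \frac{1}{S} \sum _{s=1}^{S} \sum _{i=1}^{n} r_{si} \, x_i \\ & {\hbox {s.t.}} & \displaystyle \frac{1}{T_{\alpha }} \sum _{k=1}^{T_{\alpha }} r_{\sigma (k)}^T x \ge r^{\texttt {CVaR}}, \qquad (1) \\ & & \displaystyle \sum _{i=1}^{n} x_i = 1, \\ & & 0 \le x_i \le u_i, \ \forall i, \end{array} \end{aligned}$$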

where \(\sigma \) is a permutation of the scenarios such that \(r_{\sigma (1)}^T x \le \cdots \le r_{\sigma (S)}^T x\) and \(T_{\alpha }\) represents the number of scenarios that corresponds to a probability \(\alpha \). A related problem where \(r^{\texttt {CVaR}}\) is maximized while enforcing a lower bound on the expected return is presented in Bertsimas et al. (2004). Mansini et al. (2014) survey the state of the art in linear programming-based models of portfolio optimization. This topic is also presented in tutorial format in Mansini et al. (2015).

An advantage of CVaR is that the corresponding optimization problems are easier to solve (Rockafellar and Uryasev 2000). Further, Iyengar and Ma (2013) design an iterative gradient descent algorithm for solving scenario-based mean-CVaR portfolio selection models. Their algorithm is fast and does not require any LP solver. Romanko and Mausser (2016) use CVaR proxies to VaR objectives and obtain robust results with low computational complexity.

Recently, Benati (2015) has advocated optimizing the median rather than the expected value of the portfolio return, with risk controlled through constraints modeling various risk measures such as VaR, CVaR and a worst-case value on the returns. Benati (2015) argues that this leads to more diversified portfolios.

In spite of those advantages, because a constraint on CVaR bounds the average portfolio return for the worst cases, the portfolio may still have a return much lower than that average return over the worst cases, in some of the scenarios.

Decision making under uncertainty is the object of a vast body of work in a general optimization context (see Gabrel et al. 2014 for a recent survey on this topic). In the case of a discrete set of possible scenarios on the coefficients of the objective function, many robustness criteria have been proposed. In the following section, we discuss robustness criteria connected to VaR and CVaR.

2.2 Decision-making criteria in robust optimization

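Kouvelis and Yu (1997) were the first to consider a worst-case criterion in the context of robust scenario-based optimization. Given the portfolio problem F, this leads to the following linear programming problem denoted \((F_\mathrm{worst})\), where the auxiliary variable z represents the worst-case return:

$$\begin{aligned} \begin{array}{rrl} (F_\mathrm{worst}) \quad & \max & z \\ & {\hbox {s.t.}} & \displaystyle \sum _{i=1}^{n} r_{si} \, x_i \ge z, \ \forall s, \\ & & \displaystyle \sum _{i=1}^{n} x_i = 1, \\ & & 0 \le x_i \le u_i, \ \forall i. \end{array} \end{aligned}$$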

We denote \(\overline{w}\) the optimal objective of the worst-case problem \((F_\mathrm{worst})\).

The numerical tractability and the absolute guarantee that the portfolio return will never fall below \(\overline{w}\) are appealing properties of \((F_\mathrm{worst})\) for the decision maker. A weakness of this approach is that it reduces the set of S scenarios to a single one (the worst one), perhaps losing important information contained in the other scenarios. For instance, consider two solutions \(x^1\) and \(x^2\) evaluated over 10 scenarios such that the value of solution \(x^1\) is 0.9 in every scenario and the value of solution \(x^2\) is 1.1 for the first 9 scenarios but 0.89 for the last one. The worst-case criterion leads the manager to conclude that \(x^1\) is better, since its worst-case value is higher, ignoring that \(x^2\) is much better 9 times out of 10. A small difference between the worst-case values of two solutions can overshadow superior performance in other scenarios.

The worst-case criterion is considered in Young (1998) with a constraint on the expected return. Polak et al. (2010) study a variant where the expected return becomes the objective function and portfolio returns are constrained in each scenario.

When uncertainty is described using a finite number of scenarios, Perny et al. (2006) propose the ordered weighted averaging (OWA) operator as a robustness criterion. Using this metric, a solution is said to be robust when it performs similarly well over all scenarios: the goal is to avoid solutions that perform very well for some scenarios and very poorly for others. The OWA criterion ranks the objective values from the worst to the best one. Let \(\sigma \) be the permutation such that \(r_{\sigma (1)}x \le \cdots \le r_{\sigma (S)}x\). The value of the solution x using the OWA criterion is computed as the following weighted average:

$$\begin{aligned} {\hbox {OWA}}(x) = \sum _{k=1}^{S} w_k \, r_{\sigma (k)} x. \end{aligned}$$

When \(w_1 \ge w_2 \ge \cdots \ge w_S\), the more adverse scenarios (low ranks) receive the higher weights. The goal is then to find the solution maximizing OWA. An appealing feature of OWA is that it helps capture the sophisticated ways in which human beings process information. In general, the OWA criterion is nonlinear and hard to implement. However, when the weights satisfy the monotonicity property, as shown in Ogryczak and Sliwinski (2003), the problem becomes easy to solve since it can be formulated as a linear programming problem. Depending on the weights chosen, OWA incorporates many criteria. For instance, worst-case optimization (over the worst-case scenario) is obtained by taking \(w_1=1\) and \(w_i=0\) for \(i \ge 2\). Further, in \((F_{\texttt {CVaR}})\), the constraint (1) is equivalent to \((1/T_{\alpha }){\hbox {OWA}}(x) \ge r^{\texttt {CVaR}}\) with \(w_1=\cdots =w_a=1\) for some a representing the desired quantile and \(w_i=0\) for \(i > a\). In this case, the weights satisfy the monotonicity property, and the problem remains easy because the OWA criterion appears in a greater-than-or-equal constraint. Similarly, VaR can be formulated as a special case of OWA with \(w_a=1\) for some a representing the desired rank and \(w_i=0\) for \(i \ne a\). The weights are then no longer monotone, and the problem becomes more difficult to solve.
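The special cases listed above can be reproduced with a small Python sketch of the OWA value of a fixed solution. The function name `owa` and the return vector (the values of \(x^2\) from the earlier example) are used for illustration only, and the rank \(a=2\) is an arbitrary choice.

```python
def owa(returns, weights):
    """Weighted sum of the scenario returns sorted from worst to best."""
    ordered = sorted(returns)  # rank 1 is the worst scenario
    return sum(w_k * value for w_k, value in zip(weights, ordered))


returns = [1.1] * 9 + [0.89]  # values of x^2 over the 10 scenarios
S, a = len(returns), 2        # a is an illustrative rank

# Worst case: all the weight on rank 1.
worst_case = owa(returns, [1] + [0] * (S - 1))
# CVaR-type average: unit weights on the a worst ranks, divided by a.
worst_average = owa(returns, [1] * a + [0] * (S - a)) / a
# VaR-type value: all the weight on rank a (non-monotone weights).
rank_a_value = owa(returns, [0] * (a - 1) + [1] + [0] * (S - a))
```

The first two weight vectors are non-increasing, which is the monotone case that admits a linear programming formulation; the third is not.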

This criterion has been applied to portfolio optimization in Ogryczak (2000). Belles-Sampera et al. (2013) connect OWA operators with distortion risk measures. Laengle et al. (2017) replace mean and variance by the OWA operator and incorporate different degrees of optimism and pessimism in the decision-making process.

Recently, Roy (2010) proposed a robustness measure which he called bw-robustness. A solution is viewed as robust if it exhibits good performance in most scenarios without ever exhibiting very poor performance in any scenario. In the previous example, solution \(x^2\) can be regarded as more robust than \(x^1\) since it presents the highest value in 9 scenarios out of 10 and, in the one scenario for which it underperforms \(x^1\), its objective value remains very close to that of \(x^1\). In this model, the decision maker defines two parameters: a hard bound w he never wants his solution value to fall below, and a target value \(b \ge w\) he would like the solution value to reach or exceed as often as possible. In other words, he seeks a solution x maximizing the number of scenarios for which \(r_sx \ge b\), while ensuring \(r_sx \ge w\) for each scenario s. In this model, the manager controls the risk level by choosing the value w that he wants to guarantee for all scenarios: a value lower than \(\overline{w}\) allows for riskier solutions to be considered.

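The bw-robustness problem can be formulated mathematically using binary variables \(y=(y_s)_{s = 1, \ldots , S}\), which serve as indicator variables for \(r_sx \ge b\) (Gabrel et al. 2011). This leads to a mixed-integer problem:

$$\begin{aligned} \begin{array}{rrl} (F_{bw}) \quad & \max & \displaystyle \sum _{s=1}^{S} y_s \\ & {\hbox {s.t.}} & \displaystyle \sum _{i=1}^{n} r_{si} \, x_i \ge w \, (1-y_s) + b \, y_s, \ \forall s, \\ & & \displaystyle \sum _{i=1}^{n} x_i = 1, \\ & & 0 \le x_i \le u_i, \ \forall i, \quad y_s \in \{0,1\}, \ \forall s. \end{array} \end{aligned}$$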

If \(y_s=0\), the constraint about scenario s becomes \(r_s^T x \ge w\) and if \(y_s=1\), the constraint about scenario s becomes \(r_s^T x \ge b \ge w\).
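The switching behavior of this constraint can be verified on the two solutions of the earlier example with a minimal Python sketch. It evaluates a fixed solution rather than optimizing over x, and the helper names `bw_indicators` and `bw_constraints_hold` are introduced here for illustration.

```python
def bw_indicators(returns, b):
    """Largest admissible y: y_s = 1 exactly when the return reaches b."""
    return [1 if value >= b else 0 for value in returns]


def bw_constraints_hold(returns, y, b, w):
    """Check r_s x >= w (1 - y_s) + b y_s in every scenario."""
    return all(value >= w * (1 - y_s) + b * y_s
               for value, y_s in zip(returns, y))


w, b = 0.89, 1.1
x1 = [0.9] * 10           # values of x^1 over the 10 scenarios
x2 = [1.1] * 9 + [0.89]   # values of x^2 over the 10 scenarios

y1, y2 = bw_indicators(x1, b), bw_indicators(x2, b)
feasible1 = bw_constraints_hold(x1, y1, b, w)
feasible2 = bw_constraints_hold(x2, y2, b, w)
score1, score2 = sum(y1), sum(y2)  # bw-robustness values
```

Both solutions respect the hard bound, but \(x^2\) reaches the target in 9 scenarios while \(x^1\) never does, which reverses the ranking given by the worst-case criterion.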

Note the similarity between the first constraint for \((F_{\texttt {VaR}})\) in a scenario s, \(\sum _{i=1}^{n} r_{si} x_i \ge r^{\texttt {min}} + (r^{\texttt {VaR}} - r^{\texttt {min}})y_s\) and the constraint corresponding to scenario s in \((F_{bw})\). We observe that b is \(r^{\texttt {VaR}}\) (\((F_{bw})\) will determine the appropriate confidence level) and w is \(r^\mathrm{min}\). In \((F_{\texttt {VaR}})\), \(r^\mathrm{min}\) is an input parameter to write the constraints about \(r^{\texttt {VaR}}\), while in \((F_{bw})\), w is a parameter that controls risk. In that sense, bw-robustness extends VaR through the introduction of w, which limits the outcome in the worst scenario. Recent computational advances have led to steady increases in the size of MIPs that can be solved on a desktop computer within a reasonable amount of time, making the use of binary variables more and more tractable.

Implementing this model requires determining b and w. Regarding w, it is natural to define this parameter with respect to the worst case \(\overline{w}\): we will thus express w in terms of performance degradation the manager accepts regarding the worst-case guarantee, in exchange for a higher (or at least not worse) number of scenarios where the portfolio value is at least b.

Appropriate values for b depend on the context considered. For a portfolio problem, it is quite natural to pick b that represents a portfolio gain, i.e., \(b>1\); however, in that case the optimal objective of the bw-robustness problem (which is the number of scenarios for which the portfolio return is at least b) may be quite small. If the portfolio return exceeds a threshold only in a small number of scenarios, the corresponding allocation may not deserve to be called robust and the manager may then choose to adjust his value of b.

Another criticism that can be leveled at bw-robustness is that it does not allow the manager to sufficiently distinguish solutions. Going back to our earlier example, we consider the following 3 solutions, whose objective values over 10 scenarios are provided below (Table 1).

If we select \(w=0.89\) and \(b=1.1\), \(x^2\) and \(x^3\) have the same value of 9 (scenarios where they equal at least 1.1) for the criterion of the bw-robustness, but \(x^3\) dominates \(x^2\) since its return exceeds that of \(x^2\) in 9 of the 10 scenarios and is the same in the 10th one.
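The tie can be reproduced numerically with the Python sketch below. Since the values of Table 1 are not reproduced here, the vector used for \(x^3\) is a hypothetical one chosen only to satisfy the description above (strictly better than \(x^2\) in 9 scenarios, equal in the 10th).

```python
def bw_score(returns, b, w):
    """Number of scenarios reaching b, or None if the bound w is violated."""
    if min(returns) < w:
        return None
    return sum(value >= b for value in returns)


def kth_best(returns, k):
    """Return guaranteed in the k best scenarios (k-th largest value)."""
    return sorted(returns, reverse=True)[k - 1]


x2 = [1.1] * 9 + [0.89]
x3 = [1.2] * 9 + [0.89]  # hypothetical values, not taken from Table 1

tie = bw_score(x2, b=1.1, w=0.89) == bw_score(x3, b=1.1, w=0.89)
guaranteed_x2 = kth_best(x2, 9)  # return guaranteed in 9 scenarios out of 10
guaranteed_x3 = kth_best(x3, 9)
```

The bw score cannot separate the two solutions, whereas the return guaranteed in 9 scenarios out of 10 does.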

More generally, to illustrate this issue let us consider the (q, r) space defined as follows: the horizontal axis represents a number q of scenarios between 0 and S and the vertical axis the portfolio return r. Each point with coordinates (q, r) represents in Fig. 1 the existence of a solution x whose return is at least r on q scenarios. The maximum such return is denoted \(r_q\). Therefore, in that space, for a given q, there exists a segment of points for which the value on the vertical axis varies from 0 to \(r_q\). When we apply the criterion of bw-robustness, we only investigate solutions that guarantee the return b. Thus, for a given q, two cases must be considered: if \(b>r_q\), no solution associated with the segment \([0,r_q ]\) guarantees the return b; otherwise, only the solutions associated with the sub-segment \([b,r_q ]\) guarantee the return b (see Fig. 1). Finding the optimal solution then amounts to determining the greatest value \(q^{\star }\) of q for which the interval \([b, r_{q} ]\) is non-empty. All the solutions on that segment \([b, r_{q^{\star }} ]\) have the same value, namely \(q^{\star }\), for the bw-robustness criterion. In particular, if we denote \(x_A\) (resp. \(x_B\)) the solution associated with A (resp. B) in Fig. 1, these two solutions, which are equivalent in the framework of bw-robustness, do not yield the same guaranteed return on a number \(q^{\star }\) of scenarios: \(x_A\) guarantees \(r_{q^{\star }}\) and \(x_B\) guarantees \(b < r_{q^{\star }}\). In that space, only Point A belongs to the Pareto frontier; all the solutions on the segment \(]A,B ]\) are dominated by A. Consequently, the bw-robustness criterion can yield a dominated solution in the space (q, r).

In what follows, we investigate another criterion for robustness in portfolio optimization, which we call pw-robustness, in which the manager provides, as before, a worst-case bound w he never wants his portfolio value to fall below, for the discrete scenarios that he considers, but also a confidence level p; he will then seek to maximize the portfolio value that he can guarantee for his portfolio with probability p.

3 The pw-robustness criterion

The pw-robustness criterion, like its bw-robustness counterpart, seeks to guarantee that the portfolio value will not fall below the bound w in any scenario and will achieve the bound b in “many” scenarios. However, instead of specifying the value b and maximizing the proportion p of scenarios for which the portfolio value exceeds this value, we specify the proportion p and maximize the value of the soft bound b. Thus, the manager can specify through p the desired confidence level. This is similar to the confidence level \((1-\alpha )\) of VaR.

In summary, the pw-robustness criterion is defined using two parameters:

- w is the minimum allowable portfolio value over all the scenarios,
- p is the proportion of scenarios for which the portfolio value attains or exceeds a threshold (to be maximized).

Given p and w, the optimal solution of value \(b^*\) achieves an expected return of at least \(pb^*+(1-p)w\), because \(b^*\) is a lower bound on the return in the proportion p of best scenarios and w is a lower bound on the return in the remaining proportion \(1-p\) of worst scenarios. Since the optimal solution maximizes the value of b, it also maximizes this lower bound on the expected return.
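The bound can be spelled out as follows, writing \(k=\lceil pS \rceil \) for the number of scenarios in which the return is at least \(b^*\) (a notation introduced here for convenience) and using the fact that the scenarios are equiprobable:

$$\begin{aligned} \frac{1}{S} \sum _{s=1}^{S} r_s^T x^* \ \ge \ \frac{k \, b^* + (S-k) \, w}{S} \ = \ w + \frac{k}{S} \, (b^* - w) \ \ge \ w + p \, (b^* - w) \ = \ p \, b^* + (1-p) \, w. \end{aligned}$$

The last inequality uses \(k/S \ge p\) together with \(b^* \ge w\), which holds because every scenario return is at least w.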

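In formulation \((F_{bw})\), the constraint \(\sum _{i=1}^{n}r_{si}\,x_i \ge w (1-y_s) + b y_s \) is no longer linear once b becomes a decision variable, because of the bilinear term \(by_s\). Therefore, we propose a different formulation of the problem, with two families of constraints: one for the S explicit constraints on w and the other, for b, of the form \(\sum _{i=1}^{n}r_{si}\,x_i - b \ge -M (1-y_s)\), where M is a sufficiently large constant. If \(y_s=1\), scenario s satisfies the constraint on b, which allows scenario s to be counted; otherwise, \(y_s=0\) relaxes the constraint on b for this scenario. The pw-robustness model can then be formulated as the mixed-integer problem:

$$\begin{aligned} \begin{array}{rrl} (B_{pw}) \quad & \max & b \\ & {\hbox {s.t.}} & \displaystyle \sum _{i=1}^{n} r_{si} \, x_i \ge w, \ \forall s, \\ & & \displaystyle \sum _{i=1}^{n} r_{si} \, x_i - b \ge -M \, (1-y_s), \ \forall s, \\ & & \displaystyle \sum _{s=1}^{S} y_s \ge p \, S, \\ & & \displaystyle \sum _{i=1}^{n} x_i = 1, \\ & & 0 \le x_i \le u_i, \ \forall i, \quad y_s \in \{0,1\}, \ \forall s. \end{array} \end{aligned}$$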
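For intuition, the problem can be solved exactly without a solver when there are only two assets, because the allocation is then \(x=(t,1-t)\) and every scenario return is linear in t. The Python sketch below is not the mixed-integer formulation itself but a breakpoint enumeration valid only for this two-asset case; the function name, the argument `k` (standing for \(\lceil pS \rceil \)) and the data are assumptions made for illustration.

```python
def solve_pw_two_assets(r, k, w, u=(1.0, 1.0)):
    """Maximize the k-th largest scenario return subject to all returns >= w.

    r is a list of (r_s1, r_s2) gross returns and the allocation is (t, 1 - t).
    Returns (b, t), or None when no allocation satisfies the bound w.
    """
    lo, hi = max(0.0, 1.0 - u[1]), min(1.0, u[0])
    # Scenario s has return f_s(t) = c_s + d_s * t.
    lines = [(r2, r1 - r2) for r1, r2 in r]
    # The optimum lies at a bound, where f_s(t) = w, or where two lines cross.
    candidates = {lo, hi}
    for a, (c1, d1) in enumerate(lines):
        if d1 != 0:
            candidates.add((w - c1) / d1)
        for c2, d2 in lines[a + 1:]:
            if d1 != d2:
                candidates.add((c2 - c1) / (d1 - d2))
    best = None
    for t in candidates:
        if t < lo or t > hi:
            continue
        values = sorted((c + d * t for c, d in lines), reverse=True)
        if values[-1] < w - 1e-9:  # worst-case bound violated
            continue
        if best is None or values[k - 1] > best[0]:
            best = (values[k - 1], t)
    return best


# Hypothetical data: a risky asset and an asset that always returns 1.00.
r = [(1.10, 1.00), (1.05, 1.00), (0.80, 1.00)]
b_star, t_star = solve_pw_two_assets(r, k=2, w=0.95)
```

With these data the hard bound caps the risky asset at 25% of the portfolio, and the return guaranteed in 2 scenarios out of 3 is 1.0125; requiring the guarantee in all 3 scenarios (`k=3`) brings the solution back to the worst-case allocation.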

We now illustrate the concept of pw-robustness on a small-size example.

Example 3.1

We consider a portfolio with 6 assets and 5 scenarios. The asset returns are given in percentages in Table 2.

When we choose an upper allocation bound of \(u_i=50\%\) for all assets \(i=A, \ldots , F\), the optimal solution \(x^\mathrm{worst*}\) obtained for the worst-case criterion and problem \((F_\mathrm{worst})\) is: \(x^\mathrm{worst*}_A=2.8\%\), \(x^\mathrm{worst*}_B=14.7\%\), \(x^\mathrm{worst*}_D=50\%\), \(x^\mathrm{worst*}_F=32.5\%\). Its optimal value is \(\overline{w}=99.44\%\), which represents the guaranteed minimum return for all 5 scenarios. Table 3 provides the returns of the optimal solution in each of the 5 scenarios.

We observe that the portfolio loses value (has a gross return less than 100) in all 5 scenarios.

Now, keeping \(u_i=50\%\) for all assets \(i=A, \ldots , F\), we use the pw-criterion with \(p=80\%\), which corresponds to 4 scenarios out of 5, and \(w=99\%\overline{w}= 98.45\). Its optimal solution, denoted \(x^{80,99*}\) (since \(p=80\%\) and \(w=99\%\overline{w}\)), is: \(x^{80,99*}_A=2.1\%\), \(x^{80,99*}_B=45.9\%\), \(x^{80,99*}_D=41.4\%\), \(x^{80,99*}_F=10.6\%\). Its optimal value, \(b^{80,99*}=100.1\), represents the guaranteed return for 4 scenarios out of 5. Table 4 provides the returns of the optimal solution in each of the 5 scenarios.

Comparing those returns to those obtained for \(x^\mathrm{worst*}\), we observe a performance degradation in the worst-case scenario (98.45 instead of 99.44), but the solution \(x^{80,99*}\) performs better in all 4 other scenarios and achieves a profit (gross return higher than 100) in those cases. The main change in the optimal portfolio is a shift in allocation from stock F to the less risky stock B.

The optimal solution for \((B_{pw})\), denoted \((x^*,b^*)\), is such that:

- \(\forall s=1,\ldots ,S\), \(r_s^T x^* \ge w\),
- in \(\lceil pS \rceil \) scenarios s, we have: \(r_s ^T x^* \ge b^*\),
- in \(S - \lceil pS \rceil \) scenarios, we have: \(w \le r_s^T x^* \le b^*\).

The bigger \(b^*\), the better the performance of \(x^*\) in a proportion p of scenarios. Note that it is possible to have \(r_sx^* = b^*\) in some of the \(S - \lceil pS \rceil \) scenarios for which \(y_s=0\), making it advisable, once the optimal \(x^*\) has been found, to compute the effective proportion \(p^* \ge p\) of scenarios for which the portfolio value is at least \(b^*\). Further, if w is small enough that the \(r_sx \ge w\) constraints are never binding at optimality for any s, the problem reduces to maximizing the \(100(1-p)\)th quantile of the portfolio return distribution. In the special case where \(p=0.5\), the objective consists in maximizing the median portfolio return.
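For a fixed allocation, the guaranteed value and the effective proportion \(p^*\) can be computed directly from the scenario returns, as in the Python sketch below. The function name `pw_value` is an assumption; p is passed as an integer percentage so that \(\lceil pS \rceil \) is computed with integer arithmetic, which avoids floating-point rounding. The data are the returns reported in Example 3.2 below.

```python
def pw_value(returns, p_percent, w):
    """Return (b, p_star) for a fixed solution, or None if a return is below w.

    b is the return reached in at least ceil(p * S) scenarios and p_star is
    the proportion of scenarios whose return is at least b.
    """
    S = len(returns)
    if min(returns) < w:
        return None
    k = max(1, -(-p_percent * S // 100))  # ceil(p * S) with integers
    b = sorted(returns, reverse=True)[k - 1]
    p_star = sum(value >= b for value in returns) / S
    return b, p_star


w_bar = 99.44  # worst-case optimum reported in Example 3.1
b_80_90, p_80_90 = pw_value([100.17, 101.21, 100.17, 100.17, 96.6],
                            p_percent=80, w=0.90 * w_bar)
b_60_99, p_60_99 = pw_value([99.21, 100.63, 100.63, 100.87, 98.45],
                            p_percent=60, w=0.99 * w_bar)
```

Both calls recover the values 100.17 and 100.63 reported in the example, with effective proportions equal to the requested ones.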

For a given value of p, the set of feasible solutions grows when w decreases, including riskier solutions. This risk is controlled by the decision maker who chooses the value of w. With a given value of w, choosing a very small value for p amounts to evaluating a solution on its best scenarios only (the right tail of the distribution). By increasing p, we consider more scenarios. If p is relatively large (which brings the focus to the left tail of the return distribution, as is natural to design a robust solution), then the value of the portfolio return is smaller than \(b^*\) for a small proportion of scenarios, controlling risk.

Example 3.2

We now decrease the value of w to \(90\%\) of \(\overline{w}\). The optimal solution \(x^{80,90*}\) is such that \(x^{80,90*}_A=8.1\%\), \(x^{80,90*}_B=50\%\), \(x^{80,90*}_D=23.2\%\), \(x^{80,90*}_E=18.7\%\). Its optimal value is \(b^{80,90*}=100.17\%\), and the solution achieves the following returns:

| Returns | \(s=1\) | \(s=2\) | \(s=3\) | \(s=4\) | \(s=5\) |
|---|---|---|---|---|---|
| \(x^{80,90*}\) | 100.17 | 101.21 | 100.17 | 100.17 | 96.6 |

Decreasing w leads to a slightly riskier solution, in the sense that its return is worse for scenario 5, but its return is better in the other 4 scenarios.

Now, selecting \(p=60\%\) and \(w=99\%\overline{w}\), we decrease the confidence level, and the optimal solution \(x^{60,99*}\) is: \(x^{60,99*}_A=4.2\%\), \(x^{60,99*}_B=45.9\%\), \(x^{60,99*}_D=49.9\%\). The optimal allocation has shifted from stock F to stocks A and D. Its optimal value is \(b^{60,99*}=100.63\%\) with the following returns in each scenario:

| Returns | \(s=1\) | \(s=2\) | \(s=3\) | \(s=4\) | \(s=5\) |
|---|---|---|---|---|---|
| \(x^{60,99*}\) | 99.21 | 100.63 | 100.63 | 100.87 | 98.45 |

The optimal value of this solution, \(b^{60,99*}=100.63\), is therefore greater than that obtained for a greater proportion p, namely \(b^{80,90*}=100.17\); however, it is only guaranteed for 3 scenarios.

In the (q, r) space defined in the previous section, applying the pw-robustness criterion amounts to setting a proportion p and only considering the solutions corresponding to the segments \([0,r_q ]\) for all \(q \ge pS\). The optimal solution corresponds to the point that has the greatest \(r_q\) value over that set. For instance, if in Fig. 1 we select p corresponding to \(q^{\star }\), we obtain as unique optimal solution \(x_A\), the portfolio associated with A.

Let \(b^*(w)\) be the optimal objective value of \((B_{pw})\) as a function of w. \(b^*(w)\) is non-increasing as w increases, because the feasible set shrinks as w increases. The theorem below provides a lower bound on \(b^*(w)\), compared to the optimal objective in the VaR problem, \(b^*(0)\). (Since we use gross returns, the return of our portfolio is always nonnegative, even in the worst case, so \(w \ge 0\)). This leads to an upper bound on the performance degradation \(b^*(0)-b^*(w) \) on the \(100(1-p)\)-th quantile of the portfolio return that results from enforcing the worst-case constraint.

Theorem 1

Let \(b^*(0)\) be the optimal objective of \((B_{pw})\) with \(w=0\), i.e., the problem without the constraints on the worst-case return. Let \(y^0\) be the corresponding optimal vector of binary variables. We denote \(S_{-}\) the set of scenarios s for which \(y_s^0=0\). (Those are the scenarios with returns below the quantile b in that problem.) We have:

- (i) For all \(w \le \bar{w}\), a lower bound on \(b^*(w)\) is given by the linear programming problem:

$$\begin{aligned} \begin{array}{rrl} \tilde{b}^*(w)= &{} \min &{} \displaystyle -w \, \sum _{s \in S_{-}} \alpha _s + \beta + \sum _{i=1}^n u_i \, \gamma _i \\ &{} {\hbox {s.t.}} &{} \displaystyle - \sum _{s=1}^S r_{si} \alpha _s + \beta + \gamma _i \ge 0, \ \forall i, \\ &{} &{} \displaystyle \sum _{s \not \in S_{-}} \alpha _s = 1 , \\ &{} &{} \alpha \ge 0, \ \gamma \ge 0. \end{array} \end{aligned}$$

Further, \( \tilde{b}^*(w)\) is concave and non-increasing in w.

- (ii) Further, the optimal portfolio allocation in \((B_{pw})\) is piecewise linear in w.

Proof

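(i) The optimal objective value of \((B_{pw})\) is at least (because it is a maximization) that of the problem solved with the y equal to \(y^0\), which we rewrite as:

$$\begin{aligned} \begin{array}{rrl} &{} \max &{} b \\ &{} {\hbox {s.t.}} &{} \displaystyle \sum _{i=1}^{n} r_{si} \, x_i \ge w, \ \forall s \in S_{-}, \\ &{} &{} \displaystyle \sum _{i=1}^{n} r_{si} \, x_i - b \ge 0, \ \forall s \not \in S_{-}, \\ &{} &{} \displaystyle \sum _{i=1}^{n} x_i = 1, \\ &{} &{} 0 \le x_i \le u_i, \ \forall i. \end{array} \end{aligned}$$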

This problem is feasible for all \(w \le \bar{w}\). Further, the feasible set is bounded, so we invoke strong duality to obtain the expression of \(\tilde{b}^*(w) \). The minimization is achieved at a corner point of the feasible set in \(\alpha ,\beta ,\gamma \), so that \(\tilde{b}^*(w) \) can be written as the minimum of a finite number of functions linear in w, which establishes both concavity and monotonicity. (ii) follows from observing that, at optimality for \((B_{pw})\), x satisfies the system of equations: \( \sum _{i=1}^{n}r_{si}\,x_i =w\) for some set \(S_1\) of scenarios, \( \sum _{i=1}^{n}r_{si}\,x_i -b =0\) for some other set \(S_2\) of scenarios, \( \sum _{i=1}^{n}x_i =1 \), \(0 \le x_i \le u_i\) for all i. \(\square \)

A side benefit of this approach is that it provides a sequence of optimal scenario selections \(y^*\) as the decision maker varies w, p or both. In our numerical experiments, some scenarios are never selected (never have \(y_s=1\)), some are always or almost always selected, and a handful are selected some of the time. This gives the decision maker a better picture of which scenarios are driving his robustness approach.

Having presented the mixed-integer programming problem resulting from the pw-robustness criterion, we now investigate how to solve it through numerical experiments. In Sect. 4, we investigate computation times when we attempt to solve problems exactly, using real financial data to design our data sets. In Sect. 5, we propose an effective heuristic for problems that are too large to be solved exactly in an appropriate amount of time.

4 Numerical experiments for exact solution

The purpose of these numerical experiments is to investigate the following questions:

- Can the mixed-integer formulation be solved exactly in a reasonable amount of time?
- For a given p, what is the impact on the objective value and solution of decreasing w?
- For a given w, what is the impact on the objective value and solution of decreasing p?

We will use for our analysis daily return data for the components of the Dow Jones over the 10-year period from January 2, 2003 to December 31, 2012, which captures the run-up to the financial crisis of 2007–2008, the financial crisis itself and its aftermath, as well as the aftermath of the stock market downturn of 2002. This allows a range of scenarios broad enough to test the models in many different conditions. We will divide the time horizon as follows:

- Small-size problems: 20 non-overlapping 6-month periods (each calendar half-year in the data set), which corresponds to 125 scenarios on average,

- Medium-size problems: 10 non-overlapping 12-month periods (each calendar year in the data set), which corresponds to 250 scenarios on average,

- Large-size problems: 6 problems spanning overlapping 5-year periods, which corresponds to 1260 scenarios on average (daily return data over 5 years, starting each January of year 2003 to 2007).

In \((B_{pw})\), for the value of M we select: \(M= \max _{i = 1, \ldots , n} \max _{s=1, \ldots , S} r_{si}\).

In the following section, we identify instances of \((B_{pw})\) that can be solved within an acceptable time frame.

4.1 Computational time

Our experiments were carried out on an HP computer with an Intel(R) Core(TM) i7-3770 CPU at 3.4 GHz and 8 GB of RAM, running Windows 7, with AMPL and the CPLEX 12.7 solver. To identify the instances that can be solved in a reasonable time, we first use the small-size problems, for which we consider different values of the parameters p and w. Results, given in seconds, are summarized in Table 5. For p, we consider 4 possible values: 90, 80, 70 and 60%. We limit ourselves to \(p \ge 60\%\) since for smaller values of p the solution is not really a robust one. For w, we consider 6 possible values: 100, 98, 95, 90, 80 and \(50\%\overline{w}\). The reason for these values is twofold: first, we aim to find out whether small decreases of w lead to a significant increase in the optimal value of \((B_{pw})\); second, we seek to evaluate computational times for very small values of w. Recall that when w is very small, the set of feasible solutions of \((B_{pw})\) includes very risky solutions, with a worst-case return that is insignificant. We limit the computational time to 3600 s. In Table 5, we report the average solution time for the instances solved to optimality and, in parentheses, the number of instances (out of 20) solved to optimality in less than 3600 s.

For a given w (besides \(w=\overline{w}\)), the number of instances solved to optimality decreases significantly as p decreases (slowly initially and more quickly for \( p \le 80\%\)). For a given w, computation times increase as p decreases, as is intuitive; the increase is particularly significant between \(p=90\%\) and \(p=80\%\). Decreasing p increases the combinatorial aspect of the problem by increasing the number of feasible solutions for the variables y.

At a given p, allowing a small deterioration of w (increasing the set of feasible solutions x) leads to a significant increase in computation times as well as a significant decrease in the number of instances solved to optimality. These results then show little sensitivity to further decreases in w.

In addition, the objective values reported in Table 6 do not change for \(w \le 98 \% \overline{w}\): for a given p, the objective value obtained within 3600 s no longer increases once w falls below \(98 \% \overline{w}\). It suffices to accept a deterioration of w between 100 and \(98 \% \overline{w}\) to achieve solutions providing better guaranteed returns in a proportion p of scenarios. It is therefore not necessary to consider decreasing w beyond \(98 \% \overline{w}\) in this example.

In the following, the analysis focuses instead on values of w varying between \(\overline{w}\) and \(98 \% \overline{w}\) with a step of 0.25%. We also consider additional values for p, specifically, \(p=99.5\%\), \(p=99\%\), \(p=98\%\) and \(p=95\%\).

For small-size instances, the corresponding computation times are presented in Table 7. For such instances, when the value of p is at least equal to 95%, all small-size instances are solved in less than 3 s no matter the value of w. This is why Table 7 only contains the computational results obtained for \(p \le 90\%\).

When p is equal to 90%, all the instances are solved to optimality and we observe that the computational times increase significantly as soon as w goes from \(\overline{w}\) to \(99.75\%\overline{w}\). For the other values of w, the increase in computational time is more moderate. This trend is confirmed for \(p=80\%\), \(p=70\%\) and \(p=60\%\), since the number of instances solved to optimality decreases significantly for the first decrease in w and in a more moderate manner thereafter. It is more difficult to interpret the trend in the average computational times since they exclude the instances that are not solved to optimality within 3600 s.

In conclusion, we observe that as soon as \(p \le 70\%\) and \(w \le 99.5\%\overline{w}\), the solver is unable to find the optimal solution in over half the instances; however, the solutions of greatest interest to the decision maker in terms of risk and return are those that optimize \((B_{pw})\) for large values of p, with \(p \ge 80\%\). In that case, no matter what values of w are considered, CPLEX finds the optimal solution for all but two instances of small size. It is then natural to ask if similar results hold for larger-size instances.

For the 10 medium-size instances, computation times are reported in Table 8. When the values for p are at least equal to 98%, all medium-size instances are solved in less than 2 s no matter the value of w. This is why Table 8 only includes computation times obtained for values of \(p \le 95\%\). We observe that, as soon as \(p \le 80\%\), the solver is no longer able to find the optimal solution in less than 3600 s.

For the 6 large-size instances, computation times are reported in Table 9. When \(p \le 90\%\) and \(w<\overline{w}\), no large-size instance is solved to optimality in less than 3600 s. This is why Table 9 only shows computational times obtained for \(p >90\%\). We observe that as soon as \(p \le 98\%\), the solver is unable to find the optimal solution of the large-size instances in less than 3600 s, which is quite restrictive.

In these numerical experiments, it is not possible to solve the large-size instances to optimality in less than 3600 s. It is thus necessary to investigate heuristics in those cases, which we do in Sect. 5. First, we provide a qualitative analysis of the optimal solutions.

4.2 Qualitative analysis

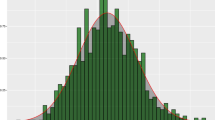

For small-size instances, for a given p we determine how the objective value decreases when w increases. In Fig. 2, each curve corresponds to a given value for p and shows the average value for \(b^*\) over the 20 instances. We observe that the curves exhibit similar trends for all p with two different trends: a stationary trend when the allowed worst-case return w is low enough compared to \(\bar{w}\), and a sharp decrease in \(b^*\) when we only tolerate a small decrease in w, i.e., only a small performance degradation in the worst-case returns. For a given p, the optimal value of \((B_{pw})\) is therefore very sensitive to small decreases in w. Hence, it is by accepting a more managed risk, corresponding to a small decrease in the guaranteed portfolio return over all scenarios, that the decision maker obtains portfolios where the guaranteed return in a proportion p of scenarios is significantly improved.

Finally, for a given w we determine how the objective value decreases when p increases. In Fig. 3, each curve corresponds to a given value for w and shows the average value for \(b^*\) over the 20 instances. We observe that here again, curves exhibit similar trends for any w considered. \(b^*\) decreases quasi-linearly in p for \(p \le 90\%\). Beyond that threshold, the decrease is more marked, indicating that \(b^*\) is more sensitive to changes in p.

Consider now a specific instance to compare the solutions obtained with the classical criteria of VaR, CVaR and worst-case value with the one obtained with pw-robustness. We randomly chose a small-size instance. We first applied the worst-case criterion and obtained the solution \(x^\mathrm{worst*}\). We set \(r^{\texttt {VaR}}\) to 100.0001 in order to find a portfolio guaranteeing gains. In this case, with \(\alpha =0.3\), we found an optimal solution denoted \(x^{\texttt {VaR}*}\). From this value of \(\alpha \), we set p to 0.7, meaning that the portfolio guarantees gains in 70% of the scenarios. In order to apply CVaR, we need to set \(r^{\texttt {CVaR}}\). For that, we considered the average value of the portfolio returns for the 30% worst scenarios of \(x^{\texttt {VaR}*}\) and sought to improve it. Empirically, we set \(r^{\texttt {CVaR}}\) to 99.30; the set of feasible solutions is empty beyond that value. We denote by \(x^{\texttt {CVaR}*}\) the optimal solution obtained with the CVaR criterion and the previous parameters. With the pw-robustness criterion, we seek a solution guaranteeing the worst-case return of the solution obtained using CVaR, namely, \(w=98.05\). The solution \(x^{pw*}\) obtained using pw-robustness with \(p=0.7\) and \(w=98.05\) is then attractive to the decision maker since it guarantees a gain over 90 scenarios while minimizing risk.

In Table 10, we present several evaluations of these solutions. The “AR” column provides the average return of a solution over the scenario set. The “Worst” (respectively, “Best”) column gives the worst-case (respectively, best-case) return of a solution. The “AR over 30% worst” (respectively, “AR over 70% best”) column gives the average return of the solution over its 30% worst scenarios (respectively, its 70% best scenarios). Finally, the “Values guaranteed” column presents the number of scenarios, among the 128 considered, for which a given return is guaranteed. Recall that \(b^*(98.05)\) is the \(b^*\) provided by the pw-robustness criterion with \(w=98.05\).
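The evaluation columns described above can be computed from the scenario returns of any allocation. The sketch below is illustrative only: the function name `evaluate`, the dictionary keys and the toy data are hypothetical, and it assumes that the "best" group contains \(\lceil pS \rceil \) scenarios and the "worst" group the remaining ones (90 and 38 scenarios, respectively, for \(p=0.7\) and 128 scenarios).

```python
import math

def evaluate(x, R, p, threshold):
    """Summary statistics of allocation x over the scenario matrix R.

    p is the proportion of scenarios in the 'best' group; threshold is the
    return level whose number of guaranteeing scenarios is counted.
    """
    # Scenario returns sorted from worst to best.
    returns = sorted(sum(r * xi for r, xi in zip(row, x)) for row in R)
    S = len(returns)
    n_best = math.ceil(p * S - 1e-9)   # tolerance guards against float noise
    n_worst = S - n_best
    worst_part, best_part = returns[:n_worst], returns[n_worst:]
    return {
        "AR": sum(returns) / S,
        "Worst": returns[0],
        "Best": returns[-1],
        "AR over worst": sum(worst_part) / n_worst if n_worst else None,
        "AR over best": sum(best_part) / n_best,
        "Values guaranteed": sum(1 for v in returns if v >= threshold),
    }

# Toy data (hypothetical): 4 scenarios, 2 assets, returns per 100 invested.
R = [[98.0, 100.0], [102.0, 104.0], [100.0, 100.0], [96.0, 100.0]]
stats = evaluate([0.5, 0.5], R, p=0.5, threshold=100.0)
print(stats)
```

With these toy data the scenario returns are 98, 99, 100 and 103, so the two worst scenarios average 98.5 and the two best average 101.5.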

We observe that the optimal solution \(x^{\texttt {VaR}*}\) is the riskiest one since it has a very low worst-case return (96.71) but provides the highest return in the best case (105.88). The solution obtained using CVaR is slightly less risky than the one obtained with VaR, but the trade-off is a rather important degradation of performance over the best-case scenarios, since we can only guarantee a gain (a portfolio return at least equal to \(r^{\texttt {VaR}}=100.0001\)) over 75 scenarios. The use of the pw-robustness criterion leads to an appealing solution for the decision maker, with both a guaranteed worst-case portfolio return and a gain on the portfolio value guaranteed in 70% of the scenarios. The solution obtained using pw-robustness is superior to that obtained using VaR because it is less risky.

In pw-robustness, we accept a degradation of worst-case performance of \((98.78-98.05)/98.78=0.7\%\) to obtain a guaranteed portfolio return of at least 100.145 in 90 scenarios rather than 66, with an improvement of \((90-66)/66=36\%\) in the number of scenarios for which we can guarantee the value of 100.145. Further, we observe that setting parameters for both pw-robustness and the VaR criterion is rather easy, but less intuitive for CVaR if other criteria have not been applied first. This further makes pw-robustness attractive to the decision maker.

Clearly, pw-robustness represents a novel, promising approach to achieve an acceptable trade-off between expected return and risk taken; however, it is presently unrealistic, given available off-the-shelf software, to solve large-scale instances to optimality within a reasonable time frame. Therefore, we now propose heuristic algorithms to apply the pw-robustness criterion. This is the focus of the next section.

5 Heuristic methods for computing pw-robustness

5.1 Description of the heuristics

In this section, we present two heuristics to quickly obtain solutions to the pw-robustness problem for large-size instances.

In \((B_{pw})\), a given y describes a subset \(\mathcal{S}\) of the scenarios, defining \(\lceil pS \rceil \) constraints. If \(y_s=1\) then \(s \in \mathcal{S}\) and \(\sum _{i=1}^{n} r_{si}x_i \ge b\). When \(y_s=0\), the constraint corresponding to that scenario is relaxed. To find the optimal solution to \((B_{pw})\), it suffices to determine the \(q = S - \lceil pS \rceil +1 \) constraints to relax.

With that goal in mind, we consider the linear programming problem (P) obtained using \((B_{pw})\) but without the variables y and including all the constraints \(r_sx \ge b\), \(\forall s = 1, \ldots , S\). The linear program (P) is:
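Written out from the description above, and assuming that \((B_{pw})\) carries the budget constraint and the bounds \(u_i\) of problem (F), (P) takes the following form (a sketch; the ordering of the constraints is arbitrary):

\[
(P)\quad
\begin{array}{lll}
\max & b & \\
\text{s.t.} & \sum _{i=1}^{n} r_{si}\,x_i \ge b, & s=1,\ldots ,S,\\
 & \sum _{i=1}^{n} r_{si}\,x_i \ge w, & s=1,\ldots ,S,\\
 & \sum _{i=1}^{n} x_i = 1, & \\
 & 0 \le x_i \le u_i, & i=1,\ldots ,n.
\end{array}
\]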

Since \(b \ge w\), the constraints on w are dominated by those on b. Problem (P) is therefore equivalent to \((F_\mathrm{worst})\). Thus, the optimal value of (P) is equal to \(\overline{w}\). We consider two methods to choose the q constraints on b to be removed by using the information contained in the dual variables.

We denote \(\lambda _s\), \(s=1,\ldots ,S\) the dual variable associated with the sth constraint of the type \(\sum _{i=1}^{n}r_{si}x_i \ge b\). From duality theory in linear programming, we know that \(\lambda _s \le 0\), and its value at optimality can be interpreted as follows: if the right-hand side of the constraint is decreased by 1, the objective value changes by \(-\lambda _s\). If \(\lambda _s <0\), decreasing the right-hand side of this constraint to make it inactive leads to an increase in the objective value. We are therefore going to choose the constraints to be removed among those having strictly negative dual variables.

We suggest two different methods to select the constraints that will be removed. In the first heuristic H1, we remove constraints one by one. At iteration k, given a set \(\mathcal{S}^k\) of constraints, we solve the following linear programming problem:

and we denote \(\lambda ^k\) the dual variables associated with the constraints of the type \(\sum _{i=1}^{n}r_{si}x_i \ge b\). We choose to remove the constraint on b corresponding to the scenario s that has the smallest, negative dual variable. (At least one such scenario s exists since one of the scenarios achieves a return equal to b.) This heuristic is described in Algorithm 1.

In the second heuristic H2, described in Algorithm 2, we remove constraints in blocks, choosing among those for which \(\lambda _s <0\): if there are at least q such constraints, we choose the constraints corresponding to the q smallest \(\lambda _s\) in one iteration; otherwise, letting \(l<q\) be the number of constraints for which \(\lambda _s <0\), we remove these l constraints, re-solve the problem with \(S-l\) constraints, and perform another iteration to choose at most \(q-l\) constraints.
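The two removal rules can be sketched as follows. This is an illustration under stated assumptions rather than a transcription of Algorithms 1 and 2: it uses SciPy's `linprog` with the HiGHS backend instead of AMPL/CPLEX, reads the dual variables from `res.ineqlin.marginals` (which, for the minimization form used here, are non-positive like \(\lambda _s\)), keeps the constraints on w for all scenarios, and takes q as an input. The function names and the toy data are hypothetical.

```python
import numpy as np
from scipy.optimize import linprog

def solve_lp(R, u, w, active):
    """Maximize b s.t. r_s.x >= b (s in active), r_s.x >= w (all s),
    sum(x) = 1 and 0 <= x <= u. Returns (b, x, duals of the b-constraints)."""
    S, n = R.shape
    act = sorted(active)
    c = np.zeros(n + 1)
    c[-1] = -1.0                                          # linprog minimizes -b
    A_b = np.hstack([-R[act], np.ones((len(act), 1))])   # b - r_s.x <= 0
    A_w = np.hstack([-R, np.zeros((S, 1))])               # -r_s.x <= -w
    A_ub = np.vstack([A_b, A_w])
    b_ub = np.concatenate([np.zeros(len(act)), np.full(S, -w)])
    A_eq = np.append(np.ones(n), 0.0).reshape(1, -1)
    bounds = [(0.0, ui) for ui in u] + [(None, None)]     # b is a free variable
    res = linprog(c, A_ub=A_ub, b_ub=b_ub, A_eq=A_eq, b_eq=[1.0],
                  bounds=bounds, method="highs")
    if res.status != 0:
        raise ValueError(res.message)
    duals = dict(zip(act, res.ineqlin.marginals[:len(act)]))
    return -res.fun, res.x[:n], duals

def heuristic_h1(R, u, w, q):
    """Remove q constraints on b one at a time (most negative dual first)."""
    if not 0 <= q < R.shape[0]:
        raise ValueError("q must satisfy 0 <= q < S")
    active = set(range(R.shape[0]))
    for _ in range(q):
        _, _, duals = solve_lp(R, u, w, active)
        active.remove(min(duals, key=duals.get))
    b, x, _ = solve_lp(R, u, w, active)
    return b, x, active

def heuristic_h2(R, u, w, q, tol=1e-9):
    """Remove constraints on b in blocks, among those with negative duals."""
    if not 0 <= q < R.shape[0]:
        raise ValueError("q must satisfy 0 <= q < S")
    active = set(range(R.shape[0]))
    remaining = q
    while remaining > 0:
        _, _, duals = solve_lp(R, u, w, active)
        negative = sorted((s for s in duals if duals[s] < -tol), key=duals.get)
        drop = negative[:remaining]       # at most `remaining` smallest duals
        if not drop:                      # safety guard; not expected to trigger
            break
        active.difference_update(drop)
        remaining -= len(drop)
    b, x, _ = solve_lp(R, u, w, active)
    return b, x, active

# Toy data (hypothetical): 4 scenarios, 2 assets, returns per 100 invested.
R = np.array([[90.0, 100.0], [110.0, 100.0], [100.0, 104.0], [104.0, 96.0]])
u = [1.0, 1.0]
b_h1, x_h1, kept_h1 = heuristic_h1(R, u, w=95.0, q=1)
b_h2, x_h2, kept_h2 = heuristic_h2(R, u, w=95.0, q=1)
print(max(b_h1, b_h2))
```

On this toy instance, the initial problem has two binding scenarios (indices 0 and 3); the constraint of scenario 3 has the most negative dual variable, so both rules remove it and reach the same value. When duals are tied or the problem is degenerate, the scenario chosen may depend on the solver.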

In the following section, we present numerical experiments to determine empirically the approximation ratio of our heuristics.

5.2 Exact versus approximate formulation: numerical experiments

We measure the quality of the heuristics H1 and H2 through the ratio \(\max (b^\mathrm{H1}, b^\mathrm{H2}) / b^*\), where \(b^\mathrm{H1}\) (resp. \(b^\mathrm{H2}\)) is the objective value found with H1 (resp. H2) and \(b^*\) is the exact (optimal) objective value. The largest-size instances for which we obtain all the optimal solutions in less than 3600 s are the medium-size instances with \(p\ge 95\%\). Table 11 contains the average values for the ratio obtained for the 10 medium-size instances.

In the worst case, the ratio is 0.99892, obtained in less than 1 s. Overall, H1 generally finds better solutions, but occasionally H2 outperforms H1. On the other hand, H2 is faster than H1, which is why we consider the best of both solutions.

For large-size instances, when \(p \ge 95\%\), the results are similar to those presented in Table 11. When \(p \le 90\%\), the approximation ratio cannot be computed since no optimal solution is found in less than 3600 s. We observe in Table 12 that the computation time of H1 and H2 is always smaller than 15 s. To quantify the performance of the heuristic, we have compared \(b^\mathrm{H1}\) to the value obtained with CPLEX under a maximum solution time of 15 s, denoted \(\hat{b}\). In 184 cases out of 192 (for the 6 large-size instances, \(p \in \{90, 80, 70, 60\% \}\) and \(w \in \{ 99.75, 99.5, 99.25, 99, 98.75, 98.50, 98.25, 98\%\overline{w}\}\)), H1 finds an approximate solution with a higher objective value. Over those 184 cases, the average ratio \(b^\mathrm{H1}/\hat{b}\) is 100.31% with a maximum of 100.99%. In the 8 cases where CPLEX finds a better solution, the average ratio is equal to 99.96%.

6 Conclusions

The concept of pw-robustness offers a novel perspective on risk management by considering both quantile optimization and the worst-case outcome. We have presented algorithms that solve the problem efficiently as MIPs. We obtain exact solutions for problems of small size and approximate solutions of high quality for problems of larger size. Possible extensions include further improving the algorithms for large-size instances, including using the solutions obtained by our heuristics as a hot start for CPLEX, and investigating how this algorithm performs in solving quantile optimization problems such as VaR or median optimization. Further, we plan to investigate in more detail the properties of this new criterion and in particular to apply it to purely combinatorial problems.

References

Belles-Sampera J, Merigo J, Guillén M, Santolino M (2013) The connection between distortion risk measures and ordered weighted averaging operators. Insur Math Econ 52:411–420

Benati S (2015) Using medians in portfolio optimization. J Oper Res Soc 66:720–731

Benati S, Rizzi R (2007) A mixed integer linear programming formulation of the optimal mean value at risk portfolio problem. Eur J Oper Res 176(1):423–434

Bertsimas D, Lauprete GJ, Samarov A (2004) Shortfall as a risk measure: properties, optimization and applications. J Econ Dyn Control 28(7):1353–1381

Calafiore GC (2010) Random convex programs. SIAM J Optim 20:3427–3464

Calafiore GC (2013) Direct data-driven portfolio optimization with guaranteed shortfall probability. Automatica 49:370–380

Calafiore GC, Campi MC (2006) The scenario approach to robust control design. IEEE Trans Autom Control 51:742–753

Calafiore GC, Garatti S (2008) The exact feasibility of randomized solutions of uncertain convex programs. SIAM J Optim 19:1211–1230

Cetinkaya E, Thiele A (2015) Data-driven portfolio management with quantile constraints. OR Spectr 37:761–786

Cornuejols G, Tütüncu R (2007) Optimization methods in finance. Cambridge University Press, New York

Feng M, Wächter A, Staum J (2015) Practical algorithms for value-at-risk portfolio optimization problems. Quant Finance Lett 3:1–9

Gabrel V, Murat C, Wu L (2011) New models for the robust shortest path problem: complexity, resolution and generalization. Ann Oper Res. https://doi.org/10.1007/s10479-011-1004-2

Gabrel Virginie, Murat Cécile, Thiele Aurélie (2014) Recent advances in robust optimization: an overview. Eur J Oper Res 235(3):471–483

Iyengar G, Ma AKC (2013) Fast gradient descent method for mean-CVaR optimization. Ann Oper Res 205:203–212

Jorion P (2006) Value at risk: the new benchmark for managing financial risk, 3rd edn. McGraw-Hill Education, New York

Kandasamy H (2008) Portfolio selection under various risk measures. Ph.D. thesis, Clemson University

Kolm PN, Tütüncü R, Fabozzi FJ (2014) 60 years of portfolio optimization: practical challenges and current trends. Eur J Oper Res 234:356–371

Kouvelis P, Yu G (1997) Robust discrete optimization and its applications. Kluwer Academic Publishers, Boston

Laengle S, Loyola G, Merigo J (2017) Mean–variance portfolio selection with the ordered weighted average. IEEE Trans Fuzzy Syst 25:350–362

Lin C (2009) Comments on a mixed integer linear programming formulation of the optimal mean value at risk portfolio problem. Eur J Oper Res 194(1):339–341

Mansini R, Ogryczak W, Speranza MG (2003) LP solvable models for portfolio optimization: a classification and computational comparison. IMA J Manag Math 14(3):187–220

Mansini R, Ogryczak W, Speranza MG (2014) Twenty years of linear programming based portfolio optimization. Eur J Oper Res 234:518–535

Mansini R, Ogryczak W, Speranza MG (2015) Linear models for portfolio optimization. Springer, Berlin, pp 19–45

Markowitz H (1952) Portfolio selection. J Finance 7(1):77–91

Markowitz H (1959) Portfolio selection: efficient diversification of investments. Number 16. Yale University Press, New Haven

Ogryczak W (2000) Multiple criteria linear programming model for portfolio selection. Ann Oper Res 97:143–162

Ogryczak W, Sliwinski T (2003) On solving linear programs with the ordered weighted averaging objective. Eur J Oper Res 148(1):80–91

Perny P, Spanjaard O, Storme L-X (2006) A decision-theoretic approach to robust optimization in multivalued graphs. Ann OR 147(1):317–341

Polak GG, Rogers DF, Sweeney DJ (2010) Risk management strategies via minimax portfolio optimization. Eur J Oper Res 207:409–419

Rockafellar RT, Uryasev S (2000) Optimization of conditional value-at-risk. J Risk 2:21–42

Romanko O, Mausser H (2016) Robust scenario-based value-at-risk optimization. Ann Oper Res 237:203–218

Roy B (2010) Robustness in operational research and decision aiding: a multi-faceted issue. Eur J Oper Res 200(3):629–638

Young MR (1998) A minimax portfolio selection rule with linear programming solution. Manag Sci 44(5):673–683

Acknowledgements

We would like to dedicate our work to Professor Bernard Roy (1934–2017) who passed away as we were revising this paper. Professor Roy, who founded the LAMSADE group (Laboratoire d’Analyse et de Modélisation des Systèmes pour l’Aide à la Décision), was instrumental in getting us started in this area of research and provided valuable feedback on our paper. We are deeply honored to have benefited from the guidance of this pioneer of operations research. We would also like to thank two anonymous referees, whose comments have significantly improved the paper.

Cite this article

Gabrel, V., Murat, C. & Thiele, A. Portfolio optimization with pw-robustness. EURO J Comput Optim 6, 267–290 (2018). https://doi.org/10.1007/s13675-018-0096-8
