Abstract

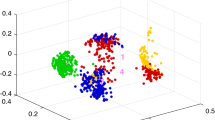

In special multi-view scenarios where the data from every view share the same features, two serious challenges may arise: (1) samples of the same class from different views can be less similar than samples of different classes from the same view, which may occur locally in the training and/or testing phases; (2) training an explicit prediction model becomes unreliable, or even infeasible, for test samples in such multi-view scenarios. In this study, we adopt the philosophy of the k nearest neighbor (KNN) method to circumvent the second challenge. Without an explicit prediction model trained directly on the multi-view data, a new multi-view local linear k nearest neighbor method (MV-LLKNN) is developed to address both challenges and predict the label of each test sample. MV-LLKNN rests on two assumptions. The first, which can be supported both theoretically and experimentally, is that any test sample can be well approximated by a linear combination of its neighbors in the multi-view training dataset. The second is that these neighbors should exhibit a clustering property under a certain commonality-based similarity measure between the multi-view test sample and its multi-view neighbors, which avoids the first challenge. MV-LLKNN predicts the label of a multi-view test sample cheaply by using the readily available fast iterative shrinkage thresholding algorithm (FISTA) together with KNN. Our theoretical analysis and experimental results on real multi-view face datasets indicate the effectiveness of MV-LLKNN.
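The role FISTA plays in approximating a test sample by a linear combination of training samples can be illustrated with a minimal single-view sketch. It is not the MV-LLKNN objective, which is not reproduced in this excerpt: the sketch assumes a generic l1-regularized least-squares problem, and the names `fista_lasso`, `classify_by_coefficients`, and the parameter `lam` are hypothetical.

```python
import numpy as np

def soft_threshold(v, tau):
    # Proximal operator of tau * ||.||_1 (element-wise shrinkage).
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)

def fista_lasso(A, x, lam, n_iter=200):
    """Approximately minimise 0.5 * ||x - A w||_2^2 + lam * ||w||_1 with FISTA.

    A holds one training sample per column; x is the test sample.
    This generic objective is an assumption, not the paper's J(w).
    """
    L = np.linalg.norm(A, 2) ** 2      # Lipschitz constant of the gradient
    w = np.zeros(A.shape[1])
    y = w.copy()
    t = 1.0
    for _ in range(n_iter):
        grad = A.T @ (A @ y - x)       # gradient of the smooth term at y
        w_next = soft_threshold(y - grad / L, lam / L)
        t_next = (1.0 + np.sqrt(1.0 + 4.0 * t * t)) / 2.0
        y = w_next + ((t - 1.0) / t_next) * (w_next - w)   # momentum step
        w, t = w_next, t_next
    return w

def classify_by_coefficients(A, labels, x, lam=0.01):
    # Assumed decision rule: pick the class whose coefficients sum highest.
    w = fista_lasso(A, x, lam)
    classes = np.unique(labels)
    scores = np.array([w[labels == c].sum() for c in classes])
    return classes[np.argmax(scores)]
```

In this sketch no model is trained in advance: each test sample is coded against the training samples at prediction time, in the spirit of KNN.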

References

Cleuziou G, Exbrayat M, Martin L, Sublemontier J-H (2009) CoFKM: a centralized method for multiple-view clustering. In: Proceedings of 9th IEEE ICDM, pp. 752–757

Huang X, Lei Z, Fan M, Wang X, Li SZ (2013) Regularized discriminative spectral regression method for heterogeneous face matching. IEEE Trans Image Process 22(1):353–362

Kan M, Shan S, Zhang H et al (2016) Multi-view discriminant analysis. IEEE Trans Pattern Anal Mach Intell 38(1):188–194

Ding Z, Fu Y (2014) Low-rank common subspace for multi-view learning. In: Proceedings of IEEE ICDM, pp. 110–119

Yu J, Rui Y, Tang YY, Tao D (2014) High-order distance-based multiview stochastic learning in image classification. IEEE Trans Cybern 44:2431–2442

Jiang Y, Chung F-L, Wang S, Deng Z, Wang J, Qian P (2015) Collaborative fuzzy clustering from multiple weighted views. IEEE Trans Cybern 45(4):688–701

Farquhar J, Hardoon D, Meng H, Shawe-Taylor J, Szedmak S (2006) Two view learning: SVM-2K, theory and practice. Adv Neural Inf Process Syst 18:355–362

Sun S (2013) Multi-view Laplacian support vector machines. Lect Notes Artif Intell 41(4):209–222

Zhu F, Shao L, Lin M (2013) Multi-view action recognition using local similarity random forests and sensor fusion. Pattern Recognit Lett 34(1):20–24

Xu Z, Sun S (2010) An algorithm on multi-view AdaBoost. In: Proceedings of 17th International conference on neural information processing, pp. 355–362

Peng J, Luo P, Guan Z, Fan J (2017) Graph-regularized multi-view semantic subspace learning. Int J Mach Learn Cybern 3(4):1–17

Xia T, Tao D, Mei T, Zhang Y (2010) Multiview spectral embedding. IEEE Trans Syst Man Cybern B Cybern 40(6):1438–1446

Tzortzis GF, Likas AC (2012) Kernel-based weighted multi-view clustering. In: Proceedings of the 2012 IEEE 12th international conference on data mining, pp. 675–684

Tzortzis G, Likas A (2009) Convex mixture models for multi-view clustering. In: Proceedings of 19th international conference artificial neural networks, pp 205–214

Zong L, Zhang X, Zhao L, Yu H, Zhao Q (2017) Multi-view clustering via multi-manifold regularized non-negative matrix factorization. Neural Netw 88:74–89

Kakade SM, Foster DP (2007) Multi-view regression via canonical correlation analysis. In: Proceedings of 20th annual conference on learning theory 2007, pp. 82–96

Merugu S, Rosset S, Perlich C (2006) A new multi-view regression approach with an application to customer wallet estimation. In: Proceedings 12th ACM SIGKDD international conference on knowledge discovery and data mining, pp. 656–661

Kusakunniran W, Wu Q, Zhang J, Li H (2010) Support vector regression for multi-view gait recognition based on local motion feature selection. In: Proceedings IEEE conference CVPR, pp. 974–981

Zhao J, Xie X, Xu X, Sun S (2017) Multi-view learning overview: recent progress and new challenges. Inform Fusion 38:43–54

Zhang Z, Xu Y, Yang J, Li X, Zhang D (2015) A survey of sparse representation: algorithms and applications. IEEE Access 3:490–530

Wright J, Yang AY, Ganesh A, Sastry S, Ma Y (2009) Robust face recognition via sparse representation. IEEE Trans Pattern Anal Mach Intell 31(2):210–227

Liu Q, Liu C (2017) A novel locally linear KNN method with applications to visual recognition. IEEE Trans Neural Netw Learn Syst 28(9):2010–2021

Zheng H, Zhu J, Yang Z, Jin Z (2017) Effective micro-expression recognition using relaxed K-SVD algorithm. Int J Mach Learn Cybern 8(6):2043–2049

Candès E, Romberg J (2007) Sparsity and incoherence in compressive sampling. Inverse Prob 23(3):969

Lu X, Wu H, Yuan Y, Yan P, Li X (2013) Manifold regularized sparse NMF for hyperspectral unmixing. IEEE Trans Geosci Remote Sens 51(5):2815–2826

Mao W, Wang J, Xue Z (2017) An ELM-based model with sparse-weighting strategy for sequential data imbalance problem. Int J Mach Learn Cybern 8(4):1333–1345

Yang J, Yu K, Gong Y, Huang T (2009) Linear spatial pyramid matching using sparse coding for image classification. In: Proceedings IEEE conference CVPR, pp. 1794–1801

Wang J, Yang J, Yu K, et al (2010) Locality-constrained linear coding for image classification. In: Proceedings of IEEE conference on CVPR, pp. 3360–3367

Gao S, Tsang IW-H, Chia L-T (2013) Laplacian sparse coding, hypergraph Laplacian sparse coding, and applications. IEEE Trans Pattern Anal Mach Intell 35(1):92–104

Deng W, Hu J, Guo J (2012) Extended SRC: undersampled face recognition via intraclass variant dictionary. IEEE Trans Pattern Anal Mach Intell 34(9):1864–1870

Deng W, Hu J, Guo J (2013) In defense of sparsity based face recognition. In: Proceedings of IEEE conference CVPR, pp 399–406

Zhang Q, Li B (2010) Discriminative K-SVD for dictionary learning in face recognition. In: Proceedings of IEEE conference on CVPR, pp. 2691–2698

Nigam K, Ghani R (2000) Analyzing the effectiveness and applicability of co-training. In: Proceedings of 9th ACM conference CIKM, pp. 86–93

Muslea I, Minton S, Knoblock C (2006) Active learning with multiple views. J Artif Intell Res 27:203–233

Sun S, Jin F (2011) Robust co-training. Int J Pattern Recognit Artif Intell 25(07):1113–1126

Huang C, Chung F-L, Wang S (2016) Multi-view L2-SVM and its multiview core vector machine. Neural Netw 75:110–125

Sun S, Chao G (2013) Multi-view maximum entropy discrimination. In: Proceedings of 23rd IJCAI, pp. 1706–1712

Chao G, Sun S (2016) Alternative multi-view maximum entropy discrimination. IEEE Trans Neural Netw Learn Syst 27(07):1445–1456

Chao G, Sun S (2016) Consensus and complementarity based maximum entropy discrimination for multi-view classification. Inf Sci 367:296–310

Xu C, Tao D, Xu C (2013) A Survey on Multi-view Learning. Computer Science

Xia T, Tao D, Mei T, Zhang Y (2010) Multiview spectral embedding. IEEE Trans Syst Man Cybern Part B 40(6):1438–1446

Xie B, Mu Y, Tao D, Huang K (2011) m-SNE: multiview stochastic neighbor embedding. IEEE Trans Syst Man Cybern Part B 41(4):1088–1096

Han Y, Wu F, Tao D et al (2012) Sparse unsupervised dimensionality reduction for multiple view data. IEEE Trans Circuits Syst Video Technol 22(10):1485

Jiang Z, Lin Z, Davis LS (2013) Label consistent K-SVD: learning a discriminative dictionary for recognition. IEEE Trans Pattern Anal Mach Intell 35(11):2651–2664

Yang M, Zhang L, Feng X, Zhang D (2014) Sparse representation based Fisher discrimination dictionary learning for image classification. Int J Comput Vis 109(3):209–232

Duda RO, Hart PE, Stork DG (2000) Pattern classification, 2nd edn. Wiley, New York

Nesterov Y (2004) Introductory lectures on convex optimization: a basic course. Springer, New York

Halldorsson GH, Benediktsson JA, Sveinsson JR (2003) Support vector machines in multisource classification. In: Proceedings IGARSS, Toulouse, France, Jul. 2003, pp. 2054–2056

Beck A, Teboulle M (2009) A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J Imaging Sci 2(1):183–202

Sim T, Baker S, Bsat M (2002) The CMU pose, illumination, and expression (PIE) database. In: Proceedings of the fifth IEEE international conference on Automatic Face Gesture Recognition. IEEE, pp 46–51

Cai D, He X, Han J (2007) Spectral regression for efficient regularized subspace learning. In: Proceedings of the 11th international conference on Computer Vision. IEEE, pp 1–8

https://www.cl.cam.ac.uk/Research/DTG/attarchive/facedatabase.html

Samaria FS, Harter AC (1994) Parameterisation of a stochastic model for human face identification. In: Proceedings of 1994 IEEE Workshop on Applications of Computer Vision, pp. 138–142

Sharmanska V, Quadrianto N, Lampert CH (2013) Learning to rank using privileged information. In: Proceedings of 14th IEEE ICCV, pp 825–832

Motiian S, Piccirilli M, Adjeroh DA, Doretto G (2016) Information bottleneck learning using privileged information for visual recognition. In: Proceedings of international conference on computer vision and pattern recognition, June 2016, pp 1496–1505

Parambath SP, Usunier N, Grandvalet Y (2014) Optimizing F-measures by cost-sensitive classification. In: Proceedings NIPS, pp 2123–2131

Jiang Y, Deng Z, Chung F-L, Wang S (2017) Realizing two-view TSK fuzzy classification system by using collaborative learning. IEEE Trans Syst Man Cybern 47(1):145–160

Jiang Y, Deng Z, Chung F-L, Wang G, Qian P, Choi K-S, Wang S (2017) Recognition of epileptic EEG signals using a novel multiview TSK fuzzy system. IEEE Trans Fuzzy Syst 25(1):3–20

Wang X, Lu S, Zhai J (2008) Fast fuzzy multicategory SVM based on support vector domain description. Int J Pattern Recognit 22(1):109–120

Turk M, Pentland A (1991) Eigenfaces for recognition. J Cognit Neurosci 3(1):71–86

Comaniciu D, Meer P (1999) Mean shift analysis and applications. In: Proceedings of 7th IEEE ICCV, pp 1197–1203

Appendices

Appendix 1

Proof of Theorem 1.

Proof:

If \({\mathbf{w}}^{k}\) is the representation obtained by MV-LLKNN on the multi-view data, then \(J\left( {{\mathbf{w}}^{k} } \right) \le J\left( {\mathbf{0}} \right)\),

Since

we know that \(\left\| {{\mathbf{w}}^{k} - \eta {\mathbf{s}}} \right\|_{2}\) is actually bounded by a small positive constant. That is to say, \({\mathbf{w}}^{k} \approx \eta {\mathbf{s}} + {\mathbf{const}}\).

The transformations in Eqs. (12) and (13) guarantee that each entry of \({\mathbf{w}}^{k}\) satisfies \(0 \le w_{i}^{k} \le 1\) and \(\sum\nolimits_{i = 1}^{m} {w_{i}^{k} } = 1\). It is worth noting that these transformations do not affect the classification results. MV-LLKNN+ and MV-LLKNN* are designed on the basis of the similarity measure between the test sample and the training samples.

For MV-LLKNN+, it can be approximated as follows:

In this study, we consider the Epanechnikov kernel [61]: \(h\left( u \right) = \frac{3}{4}\left( {1 - u^{2} } \right)\). Then

where \(\sum\nolimits_{{{\mathbf{a}}_{i}^{k} \in {\mathbf{A}}_{c}^{k} }} {\sum\nolimits_{l = 1}^{K} {h\left( {\frac{{{\mathbf{x}}^{l} - {\mathbf{a}}_{i}^{l} }}{\sigma }} \right)} }\) is the kernel density estimate of the conditional probability \(p\left( {{\mathbf{x}}^{k} \left| c \right.} \right)\left( {k = 1,2, \ldots ,K} \right)\).

Each view is assumed to be classified separately and independently. Therefore, if the prior probability \(p\left( c \right)\) is the same for all classes, then

Similarly, we have the following derivations for MV-LLKNN*:

Then, we also consider another Epanechnikov kernel

Therefore, if the prior probability \(p\left( c \right)\) is the same for all the classes, then

In summary, from the perspective of density estimation, MV-LLKNN+ and MV-LLKNN* approximate the Bayes decision rule for minimum error, and the approximation error comes mainly from \({\mathbf{w}}^{k} \approx \eta {\mathbf{s}} + {\mathbf{const}}\) and from the kernel density estimation error.
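The density-estimation view above can be illustrated with a minimal sketch of a per-class Epanechnikov kernel score summed over views, followed by an arg-max decision under equal priors. Several choices here are assumptions rather than the paper's definitions: the kernel is truncated to zero outside \(|u| \le 1\), the vector difference is reduced to a scalar by its Euclidean norm, the score is left unnormalised, and the names `class_density_score` and `predict` are hypothetical.

```python
import numpy as np

def epanechnikov(u):
    # h(u) = 3/4 * (1 - u^2) on |u| <= 1, and 0 elsewhere (assumed truncation).
    u = np.asarray(u, dtype=float)
    return np.where(np.abs(u) <= 1.0, 0.75 * (1.0 - u ** 2), 0.0)

def class_density_score(x_views, train_views, labels, c, sigma):
    """Unnormalised kernel score of class c, summed over all views.

    x_views:     list of K arrays, one test vector per view
    train_views: list of K arrays of shape (m, d_l), one row per training sample
    """
    mask = labels == c
    score = 0.0
    for x_l, A_l in zip(x_views, train_views):
        # Assumption: the kernel argument is the scaled Euclidean distance.
        dist = np.linalg.norm(A_l[mask] - x_l, axis=1)
        score += epanechnikov(dist / sigma).sum()
    return score

def predict(x_views, train_views, labels, sigma=1.0):
    # With equal priors p(c), the Bayes rule reduces to maximising the
    # class-conditional density estimate.
    classes = np.unique(labels)
    scores = [class_density_score(x_views, train_views, labels, c, sigma)
              for c in classes]
    return classes[int(np.argmax(scores))]
```

Because the score is unnormalised, it is proportional to a density estimate only when the classes have the same number of training samples; otherwise each class score would need to be divided by its class size.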

Appendix 2

Proof of Theorem 2.

Proof

Let us observe Eq. (3) which is equivalent to

Let \({\tilde{\mathbf{w}}}^{k} = \left[ {\tilde{w}_{1}^{k} ,\tilde{w}_{2}^{k} , \ldots ,\tilde{w}_{m}^{k} } \right]^{T}\) be the representation obtained by MV-LLKNN on the multi-view data. We then take the derivatives with respect to \(\tilde{w}_{i}^{k}\) and \(\tilde{w}_{j}^{k}\):

Set the above two derivatives to zero. Since \({\text{sign}}(\tilde{w}_{i}^{k} ) = {\text{sign}}(\tilde{w}_{j}^{k} )\), the difference \(\frac{\partial J}{{\partial \tilde{w}_{i}^{k} }} - \frac{\partial J}{{\partial \tilde{w}_{j}^{k} }}\) is:

By \(J\left( {{\tilde{\mathbf{w}}}^{k} } \right) \le J\left( {\mathbf{0}} \right)\) and \(\left\| {{\mathbf{x}}^{k} } \right\|^{2} = 1\), we obtain:

Then, since

so we have

where \(G = \sqrt {1 + \beta \eta^{2} \left\| {\mathbf{s}} \right\|^{2} }\).

Cite this article

Jiang, Z., Bian, Z. & Wang, S. Multi-view local linear KNN classification: theoretical and experimental studies on image classification. Int. J. Mach. Learn. & Cyber. 11, 525–543 (2020). https://doi.org/10.1007/s13042-019-00992-9

DOI: https://doi.org/10.1007/s13042-019-00992-9