Abstract

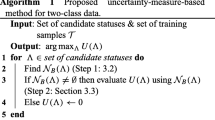

Training a support vector machine (SVM) classifier has high computational complexity, which makes it unsuitable for classifying large data sets. Since the classification hyperplane is determined by the support vectors alone and the other instances have no effect on the classifier, a method is introduced that does not use all instances for training. A data set may also include inappropriate instances, such as noisy and outlier instances. In this paper, a novel three-step method is introduced. In the first step, belief function theory is used to identify and discard uncertain instances, such as noisy and outlier instances. In the second step, a geometric method called boundary region is used to determine the boundary instances. In the last step, the SVM classifier is trained on the obtained boundary instances. The proposed method, BF–BR (Belief Function–Boundary Region), reduces the computational cost of classifier training without losing classification accuracy. Its performance has been evaluated on real-world data sets from the UCI repository using tenfold cross-validation. The experimental results, compared with those of other methods, indicate the superiority of the proposed method in terms of the number of training instances and training time, while good classification accuracy is achieved for SVM training.
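
The abstract names the three steps of BF–BR but not their exact formulas, so the following Python sketch only illustrates the data-reduction idea under explicit assumptions. Step 1 is modelled with an evidential k-NN rule in the style of Denœux's Dempster–Shafer k-nearest-neighbour classifier: each neighbour supports its own class with a mass that decays with distance, the masses are pooled with Dempster's rule, and an instance is discarded when its belief in its own label falls below a threshold. Step 2 swaps the paper's geometric boundary region for a simpler nearest-enemy heuristic (keep every instance that is the closest other-class instance of some kept instance). The function names `own_label_belief`, `filter_uncertain` and `nearest_enemy_boundary`, and the parameter values `k=5`, `alpha=0.95`, `gamma=0.5`, `threshold=0.5`, are hypothetical and not taken from the paper.

```python
import math
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, ...]


def sq_dist(a: Point, b: Point) -> float:
    """Squared Euclidean distance between two points."""
    return sum((u - v) ** 2 for u, v in zip(a, b))


def own_label_belief(X: Sequence[Point], y: Sequence[int], i: int,
                     k: int = 5, alpha: float = 0.95, gamma: float = 0.5) -> float:
    """Belief that X[i] belongs to class y[i] under an evidential k-NN rule.

    Each of the k nearest other instances j supports the singleton {y[j]} with
    mass s_j = alpha * exp(-gamma * d_ij^2) and puts 1 - s_j on the whole frame.
    The k simple mass functions are pooled with Dempster's rule.
    """
    neighbours = sorted((j for j in range(len(X)) if j != i),
                        key=lambda j: sq_dist(X[i], X[j]))[:k]
    # ignorance[q] = product of (1 - s_j) over neighbours of class q
    ignorance: Dict[int, float] = {label: 1.0 for label in set(y)}
    for j in neighbours:
        s = alpha * math.exp(-gamma * sq_dist(X[i], X[j]))
        ignorance[y[j]] *= 1.0 - s
    # Unnormalised mass on {q}: (1 - ignorance[q]) * product of the other classes' ignorance
    singleton = {q: (1.0 - ign_q) * math.prod(v for r, v in ignorance.items() if r != q)
                 for q, ign_q in ignorance.items()}
    # Normaliser of Dempster's rule (1 - conflict): singleton masses plus mass on the frame
    norm = sum(singleton.values()) + math.prod(ignorance.values())
    # For a singleton hypothesis, belief equals its normalised mass
    return singleton[y[i]] / norm


def filter_uncertain(X: Sequence[Point], y: Sequence[int],
                     threshold: float = 0.5, **kw) -> List[int]:
    """Step 1 (stand-in): keep indices whose belief in their own label reaches threshold."""
    return [i for i in range(len(X)) if own_label_belief(X, y, i, **kw) >= threshold]


def nearest_enemy_boundary(X: Sequence[Point], y: Sequence[int],
                           keep: Sequence[int]) -> List[int]:
    """Step 2 (stand-in): union of each kept instance's nearest kept other-class instance."""
    boundary = set()
    for i in keep:
        enemies = [j for j in keep if y[j] != y[i]]
        if enemies:
            boundary.add(min(enemies, key=lambda j: sq_dist(X[i], X[j])))
    return sorted(boundary)


# Toy data: two 2x3 grids, one mislabelled point (index 12) and one far outlier (index 13)
X = [(0, 0), (0, 1), (0, 2), (1, 0), (1, 1), (1, 2),
     (3, 0), (3, 1), (3, 2), (4, 0), (4, 1), (4, 2),
     (0.5, 1), (20, 20)]
y = [0] * 6 + [1] * 6 + [1, 0]

kept = filter_uncertain(X, y)
boundary = nearest_enemy_boundary(X, y, kept)
print(kept)      # [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11]
print(boundary)  # [3, 4, 5, 6, 7, 8]
```

On the toy data, the mislabelled point (index 12) gets zero belief in its label because all of its nearest neighbours belong to the other class, and the far outlier (index 13) receives essentially no support for its label from its distant neighbours; the boundary step then keeps only the two facing columns of the grids. The indices in `boundary` would form the reduced training set handed to an SVM solver such as LIBSVM or scikit-learn's `SVC`; that final step is omitted so the sketch stays dependency-free.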

About this article

Cite this article

Moslemnejad, S., Hamidzadeh, J. A hybrid method for increasing the speed of SVM training using belief function theory and boundary region. Int. J. Mach. Learn. & Cyber. 10, 3557–3574 (2019). https://doi.org/10.1007/s13042-019-00944-3