Abstract

Sound evaluation needs sound numbers. But measuring energy savings means measuring energy that is not used, which can lead to unexpected difficulties. The European Commission has made strong efforts to harmonize methods to measure energy efficiency and energy savings and to monitor the progress towards the goals of the Energy Efficiency Directive. However, in practice, multiple methods are still used, which may lead to confusion. In this paper, we use the Netherlands as a case study to analyze this phenomenon. In the Netherlands, three national indicators on energy efficiency exist, alongside a European indicator and the reported impacts of individual policy instruments. The large differences and sometimes contradictory results of the different indicators raise the question of which method is "best." This paper studies the reasons behind the differences between the methods used for industrial energy efficiency improvement in the Netherlands. It compares detailed bottom-up data from individual policy instruments with top-down national figures. We disentangle the impact of volume, efficiency, and structure effects. In this way, we visualize the differences between several methods and the impact of the choice of metrics used in those methods. This helps explain why care should be taken when comparing industrial energy efficiency results from different countries.


Introduction

A 20% reduction of primary energy consumption in 2020 is part of Europe’s Climate and Energy package (European Commission 2012). To know whether this target will be achieved, it is necessary to measure progress towards the target and gain insight into the effect of the implemented policy instruments. In 2013, the European Environment Agency reported that some progress had been made in reducing energy consumption (EEA 2013), but EEA (2015) reported a gap of 67.9 PJ (1621 ktoe) between energy use and the linear target path to 2020 if current trends continued.

Policy makers can use a range of instruments to improve energy efficiency, e.g., financial instruments, voluntary agreements, and labelling. Together, these instruments should form a “coherent policy package,” i.e., a mix of instruments that strengthen each other (Coalition for Energy Saving 2013).

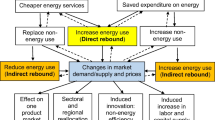

Tools such as decomposition analysis show the effect of production changes and structural changes in the economy. However, the results of such analysis do not distinguish between savings achieved as a result of policies and other factors. Instead, they reflect aggregate changes brought about by a variety of factors, such as price effects, autonomous effects, direct rebound effects, hidden structure effects, and the effects of old and new policies (Cahill and Ó Gallachoír 2010). Moreover, the outcome of a decomposition analysis depends on the method chosen. In some top-down decomposition methods, the policy effect is a residual, which remains when all other possible explanations are removed (Boonekamp et al. 2001); in other words, it is measured only indirectly. In some cases, the difference between volume, structure, autonomous, and policy effects is not obvious. In Smit et al. (2014), the difference in intensity effect between two scenarios is used as a proxy for the policy effect, even though this effect also includes the difference in autonomous intensity improvements in those scenarios. Different organizations use different methods, which is confusing for users of these data.

Theoretically, top-down effects can be further explained by bottom-up analysis, although available bottom-up data often do not match top-down national data. There have been multiple efforts to develop good methods to measure energy efficiency, and a wide range of literature on this topic exists (e.g., Phylipsen et al. 1997; Boonekamp 2005, 2006; Cahill and Ó Gallachoír 2012a; Farla and Blok 2001). However, users of this information have different requirements regarding the energy efficiency indicators that have to be produced. Some are interested in the development of energy efficiency regardless of whether it is policy-induced or not. Policy makers, on the other hand, are often more interested in the effect of policy intervention. This has led to a range of methods, each with their own characteristics. In the formulation of energy efficiency goals as well as in the monitoring of energy data, discussions arise concerning the definition and the measurement of energy efficiency (Schlomann et al. 2015). Many energy efficiency–related concepts are still misunderstood, not only by laymen but even by specialists, e.g., the difference between efficacy and efficiency or the difference between intensity and efficiency. Therefore, methodological issues are still a subject of ongoing debate (Pérez-Lombard et al. 2013). The term “energy efficiency” can have a totally different meaning in different studies.

In this paper, we show how these issues occur in practice, using the Netherlands as a case. Energy efficiency policy of the Netherlands consists of a mix of many instruments, each with different characteristics. There is a strong overlap between some of these instruments, e.g., between the voluntary agreements for improving industrial energy efficiency and subsidy schemes that support investments in energy saving equipment. The European 2020 energy target was translated into a reduction of 482 PJ of national final energy use for the Netherlands as a whole (Schoots and Hammingh 2015). Dutch progress on energy saving policy is reported in the “Nationale Energieverkenning” (Schoots and Hammingh 2015), which describes general developments, progress in implementation of policy instruments, and the expected amount of energy savings.

In the Netherlands, three national indicators on energy efficiency exist: (1) a national energy saving result (ECN 2012), (2) energy savings reported for art. 7 of the European Energy Efficiency Directive (Tweede Kamer der Staten-Generaal 2017), and (3) a saving result for the National Energy Agreement, all with a refinement towards industrial savings (Schoots and Hammingh 2015). In addition, results exist for individual policy instruments focusing on specific sectors. In the 1990s, the EU started the Odyssee project (http://www.odyssee-mure.eu/) to gather information on energy efficiency in its Member States. This project has developed the so-called ODEX indicator to compare energy efficiency in European countries, including the Netherlands (Enerdata 2010). Although one would expect the figures to be reasonably similar, large differences are observed, especially for industry. In the last official national report on energy savings (ECN 2012), a mean annual trend of 1% energy savings for the period 2000–2010 was reported for Dutch industry, while Odyssee reports a total efficiency gain of 27.5% over the period 2000–2012, equivalent to about 2.6%/year (Gerdes 2012). The differences between these numbers arise from differences in definitions and scope, and they are a cause of confusion among policy makers.
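Converting a cumulative gain into an annual rate (and back) makes such figures easier to put side by side. The sketch below assumes that the yearly rate compounds over the period; this is our assumption for illustration, and the two figures still differ in definition and scope, so the comparison stays indicative only.

```python
def annual_rate(cumulative_gain: float, years: int) -> float:
    """Constant yearly rate r such that (1 - r) ** years == 1 - cumulative_gain."""
    return 1.0 - (1.0 - cumulative_gain) ** (1.0 / years)


def cumulative_from_annual(annual: float, years: int) -> float:
    """Cumulative reduction after `years` of a constant (compounding) yearly reduction."""
    return 1.0 - (1.0 - annual) ** years


# ODEX (Gerdes 2012): 27.5% efficiency gain over 2000-2012, i.e., 12 years.
odex_annual = annual_rate(0.275, 12)
# PME (ECN 2012): about 1% savings per year over 2000-2010, i.e., 10 years.
pme_cumulative = cumulative_from_annual(0.01, 10)

print(f"ODEX, annualized: {odex_annual:.1%}")    # about 2.6% per year
print(f"PME, cumulative: {pme_cumulative:.1%}")  # about 9.6% over ten years
```

Under this assumption, the ODEX figure corresponds to roughly two and a half times the annual rate reported in the national figures.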

The aim of this paper is to investigate the reasons behind the differences between the methods used in the Netherlands. The main research question is therefore:

How can figures on energy efficiency, with a focus on industry in the Netherlands, be compared and interpreted?

To answer this main question, the following subquestions are addressed:

- What are the differences between existing energy efficiency methods?
- What is the reason behind these differences?
- How are these methods applied in the Netherlands?
- What are the (dis)advantages of these methods?

In this article, the “Methods” section describes the research approach. The “Results and discussion” section presents and discusses the results. After a description of the development of methods to calculate energy efficiency in the “What are the differences between existing methods for energy efficiency?” section, we describe the impact of the choice of metrics on the outcome of the methods that are used to report on energy efficiency in the “What are the reasons for the differences in methods?” section. In the “How are these methods applied in the Netherlands?” section, the Netherlands is used as a case to see how these methods are applied. The advantages and disadvantages of the different methods are discussed in the “What are the advantages and disadvantages of the methods?” section. The “Conclusions and recommendations” section gives conclusions and recommendations.

Methods

To answer the research questions posed in the introduction, we followed a four-step approach linked to the four subquestions provided in the “Introduction” section.

Step 1

What are the differences between existing energy efficiency methods?

The aim of this research step was to show the differences between existing energy efficiency methods. Therefore, step 1 included a review of literature on the methods to report on energy saving. We described the definitions that have been developed for specific energy use, energy intensity, useful output, and energy saving, as well as the metrics needed for decomposition analysis. We focused on literature that described methods for energy efficiency in industry.

Step 2

What is the reason behind these differences?

In order to analyze these differences, in step 2 we visualized the differences between methods to calculate energy efficiency. We used a fictitious dataset that describes all factors that influence energy consumption. A fictitious dataset allows us to show the impact of changes in individual variables. This dataset serves as a model for the chemical industry in the Netherlands, which in the last decade featured a shift towards more specialized products with a higher added value. Companies participating in the Dutch Long-Term Agreement for Industrial Energy Efficiency are obliged to report all these factors (except for added value) in annual monitoring reports (Abeelen et al. 2016). In a second analysis, we use the same table in more detail, with the sector divided into two subsectors, one of which is growing and the other not.

For step 2, we used an additive Log Mean Divisia Index (LMDI) method to decompose the change in energy use, first with a two-factor decomposition (applied to the dataset in Table 2), followed by a three-factor decomposition (applied to the dataset in Table 3). The LMDI method was used because it ensures a perfect decomposition without a residual (Ang 2004). In other words, all changes in energy use are assigned to one of the factors.
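For reference, the standard additive LMDI-I expressions (Ang 2004) for both decompositions are sketched below. The notation is ours and may differ from that of Table 1: superscripts 0 and T denote the base and end year, E is energy use, Q is total production (physical output or value added, depending on the scenario), and subscript i denotes a subsector.

$$
\begin{aligned}
L(a,b) &= \frac{a-b}{\ln a - \ln b}, \qquad L(a,a) = a \\[4pt]
\text{two-factor } (E = Q\,I,\; I = E/Q):\quad
\Delta E_{\mathrm{vol}} &= L(E^T,E^0)\,\ln\frac{Q^T}{Q^0}, \qquad
\Delta E_{\mathrm{eff}} = L(E^T,E^0)\,\ln\frac{I^T}{I^0} \\[4pt]
\text{three-factor } (E = \textstyle\sum_i Q\,S_i\,I_i,\; S_i = Q_i/Q,\; I_i = E_i/Q_i):\quad
\Delta E_{\mathrm{vol}} &= \sum_i L(E_i^T,E_i^0)\,\ln\frac{Q^T}{Q^0} \\
\Delta E_{\mathrm{str}} &= \sum_i L(E_i^T,E_i^0)\,\ln\frac{S_i^T}{S_i^0} \\
\Delta E_{\mathrm{eff}} &= \sum_i L(E_i^T,E_i^0)\,\ln\frac{I_i^T}{I_i^0}
\end{aligned}
$$

Because the logarithms of the factors add up to the logarithm of the energy ratio in each subsector, the effects sum exactly to the total change, $E^T - E^0 = \Delta E_{\mathrm{vol}} + \Delta E_{\mathrm{str}} + \Delta E_{\mathrm{eff}}$, which is the "no residual" property mentioned above.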

Table 1 shows the formulas used for calculating the volume, structure, and efficiency effects. We used two- and three-factor decompositions side by side to show the change in the efficiency effect. The advantage of the two-factor decomposition was its limited data requirements (the data are relatively easily available). The major disadvantage of two-factor decomposition is that structure effects are “hidden” in the efficiency effect. This problem was partly solved by also decomposing into three factors, although structure effects at a lower aggregation level were still hidden.

The formulas in Table 1 were applied to four scenarios in which we combined different choices for the metrics used. The four scenarios are outlined in Fig. 1.

Step 3

How are these methods applied in the Netherlands?

In step 3, we focused on the Netherlands. Based on the literature, we made an inventory of the methods that are used in the Netherlands, both top-down methods and bottom-up methods based on the implementation of energy saving projects in industry. For each selected method, we describe the main features, scope, definition, and the most relevant results. To compare these approaches, we adapted a table from Schoots and Hammingh (2015) in which the methods are compared on all the essential features identified in step 1, e.g., the choice of energy and production metrics or the type of savings that are eligible under the selected method. From the original table, we deleted the row on inclusion of CHP savings, as this distinction already follows from the distinction between final and primary energy. We added rows on the type of production data, the visibility of structural changes and other factors, and a possible residual.

Step 4

What are the advantages and disadvantages of these methods?

This step builds on the outcome of steps 1–3, using a more qualitative approach. As we will see in chapter 4, every method has its own context, and this context defines the choices that are made in terms of scope, aim, and target audience. These choices also define how the outcome of the method should and should not be used. This assessment is made for each method from steps 1 to 3, based on the literature review in step 1 and the findings of the analysis in step 2.

Results and discussion

What are the differences between existing methods for energy efficiency?

Energy efficiency is generally defined as the amount of energy used for a unit of useful output. Frequently used energy efficiency indicators are specific energy consumption (SEC) and energy intensity (EI). The SEC is a physical energy efficiency indicator, which is sometimes also called physical energy intensity (PEI) (Phylipsen et al. 1997; Farla and Blok 2000). Several authors (Worrell et al. 1994; Phylipsen et al. 1997; Boonekamp 2006; Cahill and Ó Gallachoír 2012b) reserve the term SEC for the ratio between energy and physical production (Joule/kg) and define energy intensity as the ratio of energy and monetary values (Joule/€).

The main choice in defining the reference energy use is to measure the “useful output” in physical (e.g., tons) or economic (€) terms. The choice of the indicator for an activity can have a large effect on the estimate of the energy intensity development (Worrell et al. 1997; Farla and Blok 2000). Freeman et al. (1997) already showed a low correlation between physical and economic measures of output in industry. Trends in energy intensity based on the economic value of output can diverge quite sharply from trends in energy intensity based on physical volume of output. They suggest that economic indicators may serve to exaggerate year-to-year changes in efficiency (Freeman et al. 1997). Therefore, economic indicators should not be compared to physical indicators.

Boonekamp (2005) has produced an overview of different “achievements” at different aggregation levels. Which unit is best depends on the aggregation level, unit of analysis, complexity of products, and data availability. Indicators at a macro level (both physical and monetary) can contain many structural effects that can bias the results: the more aggregated the level at which indicators are calculated, the greater the influence of external factors. Generally, indicators measured in monetary units are applied at the macro-economic level, while physical units are applied to (sub)sectoral level indicators (APERC 2000). The higher the level of industry aggregation, the more desirable is the use of market value of output relative to physical volume of output as measure of energy intensity. This is due to the difficulty of sensibly measuring the physical volume of output across very diverse products, like different chemicals with very different energy requirements (Freeman et al. 1997). Although physical indicators are preferred because of their closer relation to energy use, energy intensity is often used as a proxy for efficiency due to a lack of data.

Apart from the terms energy efficiency and intensity, the term energy savings is used as well. The fundamental difference between energy efficiency improvement and energy savings is that energy efficiency is a relative, dimension-free ratio, e.g., x% less energy consumption per unit of output or a conversion efficiency that has been raised from 80 to 90% (Abeelen et al. 2016), whereas energy savings are an absolute amount of energy. A change in energy intensity (or efficiency) can be a result of energy saving activities, but also of structural changes in the economy. Energy savings, on the other hand, are by definition a consequence of energy saving activities. Energy savings means an amount of saved energy determined by measuring and/or estimating consumption before and after implementation of an energy efficiency improvement measure, while ensuring normalization for external conditions that affect energy consumption (European Commission 2012). Energy savings are defined as the difference between a reference energy use and the actual energy use. The reference energy use is the amount of energy that would have been used in the absence of energy-saving activities (Boonekamp 2005). The (theoretical) reference use is defined by calculating the impact of changes in volume and structure and possibly other known factors, using a decomposition method. Ang (2004) and Boonekamp (2005) describe several decomposition methods. Despite the differences between these terms, in practice, the term energy efficiency is used for all of them, which may lead to confusion.
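The difference between a dimension-free efficiency ratio and an absolute saving can be made concrete with the 80 to 90% conversion example above. The sketch below is a minimal illustration with a hypothetical useful output of 72 PJ that is assumed to stay constant, so that the pre-measure energy use can serve directly as the reference energy use; real cases need the normalization for volume and other external conditions described in the text.

```python
# Hypothetical conversion step delivering 72 PJ of useful output (e.g., process heat).
useful_output = 72.0            # PJ, assumed unchanged before and after the measure
eff_before, eff_after = 0.80, 0.90

input_before = useful_output / eff_before   # 90 PJ: serves as the reference energy use
input_after = useful_output / eff_after     # 80 PJ: actual energy use

# Energy savings: an absolute amount (reference minus actual), in PJ.
savings = input_before - input_after
# Efficiency improvement: a dimension-free ratio (reduction in energy per unit of output).
relative_reduction = 1.0 - input_after / input_before

print(f"savings: {savings:.1f} PJ")                             # 10.0 PJ
print(f"energy per unit of output: -{relative_reduction:.1%}")  # -11.1%
```

The same 10 percentage point rise in conversion efficiency appears as 10 PJ of savings but as an 11.1% reduction in energy per unit of output, which shows why the two terms should not be used interchangeably.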

To know why energy consumption has changed and what impact energy policy has had on this change, all factors influencing consumption have to be known. This is especially true in economies subject to large changes like strong economic growth or recession, population changes or economies in transition. The difficulty lies in disentangling the different drivers of energy efficiency development.

Not only the metrics themselves, but also the level of detail of the source data can have a large effect on the size and distribution of the different decomposition factors. Generally, a greater level of disaggregation will give a more reliable set of results (Cahill and Ó Gallachoír 2010). For example, if there is only one number known for the production volume in a sector (e.g., tons of paper in the paper industry), a shift in production from low- to high-quality paper (a structure effect) will be hidden in the volume effect. A shift in production between companies or subsectors with different energy characteristics will be hidden if energy and production data are only known at sectoral level. The resulting estimated energy savings effect can differ significantly in the two cases. The effect of aggregation level on saving results is shown in Fisher-Vanden et al. (2002).

A special aspect of the aggregation level is the choice between final and primary energy. This choice determines whether developments in the efficiency of energy conversion will be observed or not. Looking at final energy alone, one gets a good view of efficiency developments at the level of the end user: in the case of industry, at the industrial process level. However, changes in energy conversion (in the electricity producing sector) will not be observed. A shift towards electrification might improve efficiency at the end-use level, but when this electricity is generated by inefficient power plants, the efficiency of the total system might decrease. Moreover, savings by industrial CHP are assigned to the energy-producing sector, even when these savings are induced by actions of industrial companies. The choice of final or primary energy is often made deliberately, depending on the purpose of the analysis.
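The electrification case can be checked with a few lines of arithmetic. The sketch below uses hypothetical numbers (a 90% efficient gas boiler, a 100% efficient electric heater, and an assumed 40% average efficiency of the power plants) and assumes that natural gas counts one-to-one as primary energy; network losses are ignored.

```python
# Hypothetical process needing 90 GJ of useful heat (illustrative numbers only).
heat_demand = 90.0   # GJ useful heat
boiler_eff = 0.90    # natural gas boiler
heater_eff = 1.00    # electric resistance heater
grid_eff = 0.40      # assumed average efficiency of the supplying power plants

final_gas = heat_demand / boiler_eff    # final energy: gas delivered to the site
final_elec = heat_demand / heater_eff   # final energy: electricity delivered to the site
primary_gas = final_gas                 # assumption: gas counts 1:1 as primary energy
primary_elec = final_elec / grid_eff    # fuel burned in the power plants

print(f"final:   gas {final_gas:.0f} GJ, electric {final_elec:.0f} GJ")      # 100 vs 90
print(f"primary: gas {primary_gas:.0f} GJ, electric {primary_elec:.0f} GJ")  # 100 vs 225
```

In a final-energy view the switch to electricity looks like a 10% saving, while in a primary-energy view total system energy use more than doubles under these assumptions.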

What are the reasons for the differences in methods?

Main variables

For a decomposition analysis, one has to decide which source data to use. The most important choices are between final and primary energy and between physical and monetary production data.

To illustrate the effect of these choices on decomposition results, we use the dataset in Table 2. The data in the set serve as a model for a sector like the chemical industry in the Netherlands, which in the last decade featured a shift towards more specialized products with a higher added value. This means that added value grows faster than physical production. Both added value and physical production increase faster than energy use (both final and primary). As a result, both “physical” energy intensity and “monetary” energy intensity decrease, with monetary intensity decreasing faster. A comparable scenario has been used by Cahill and Ó Gallachoír (2010). Our dataset contains 5 PJ of savings on primary energy as a result of more efficient energy conversion and 1 PJ of dissaving effects, e.g., an increase in energy use as a result of extra treatment of flue gases due to stricter air pollution regulations. Such savings and other effects are reported by companies in annual monitoring reports (see also Abeelen et al. 2016, which contains a decomposition of the change in energy use of the chemical sector between 2006 and 2011).

The following sections show the outcome of two-factor and three-factor decompositions, respectively.

Two-factor decomposition

Figure 2 shows the outcome of a two-factor decomposition in which the change in energy use is decomposed into a volume and an efficiency effect.
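The mechanics of the four scenarios can be reproduced with a short additive LMDI-I sketch. The numbers below are hypothetical and are not the data of Table 2. They only mimic its pattern: value added grows faster than physical output, and primary energy grows less than final energy, standing in for conversion (CHP) savings. Scenario labels follow the text: I = final/monetary, II = final/physical, III = primary/monetary, IV = primary/physical.

```python
import math


def log_mean(a: float, b: float) -> float:
    """Logarithmic mean L(a, b); requires a, b > 0, with L(a, a) = a."""
    if math.isclose(a, b):
        return a
    return (a - b) / (math.log(a) - math.log(b))


def lmdi_two_factor(e0: float, e1: float, q0: float, q1: float) -> tuple[float, float]:
    """Additive LMDI-I: split E1 - E0 into a volume and an efficiency (intensity) effect."""
    w = log_mean(e1, e0)
    volume = w * math.log(q1 / q0)
    efficiency = w * math.log((e1 / q1) / (e0 / q0))  # intensity I = E / Q
    return volume, efficiency


# Hypothetical base-year and end-year values (NOT the data of Table 2).
energy = {"final": (100.0, 105.0), "primary": (150.0, 152.0)}  # PJ
output = {"monetary": (5.0, 6.0), "physical": (10.0, 11.0)}    # bn EUR value added, Mt

scenarios = {
    "I": ("final", "monetary"),
    "II": ("final", "physical"),
    "III": ("primary", "monetary"),
    "IV": ("primary", "physical"),
}

results = {}
for name, (e_kind, q_kind) in scenarios.items():
    (e0, e1), (q0, q1) = energy[e_kind], output[q_kind]
    results[name] = lmdi_two_factor(e0, e1, q0, q1)
    vol, eff = results[name]
    print(f"{name:>3} ({e_kind}/{q_kind}): volume {vol:+6.2f} PJ, efficiency {eff:+6.2f} PJ")
```

With these made-up numbers, the ordering matches the text: scenario III shows the largest saving (most negative efficiency effect) and scenario II the smallest. Only the ordering is meaningful; the absolute values are arbitrary.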

Fig. 2 Two-factor decomposition for four scenarios applied to the dataset in Table 2

The four scenarios yield different efficiency effects, depending on the choice of final or primary energy and physical or economic production data. In scenarios I and II, which use final energy use, the efficiency effect is lower than in the scenarios using primary energy (III and IV). Methods focusing on final energy do not capture savings resulting from more efficient energy conversion, such as CHP. Energy use in industrial CHP installations is excluded from industrial consumption and is considered part of the energy conversion sector, even when those installations are situated on industrial sites. This means that those savings are not shown in the industrial sector, but are attributed to the energy sector. This might be understandable if one is only interested in the developments in end-use sectors. However, in many cases, this is not logical from the point of view of the end user itself. Many CHP installations are built, owned, and operated by end users. Since it is their decision to invest in more efficient energy generation, it is debatable to attribute the results of their efforts to another sector.

The second important choice is between a physical and a monetary activity indicator. In our example, the efficiency effect is higher in the monetary scenarios I and III, as value added grows faster than physical production. The efficiency effect is highest in scenario III, which uses both primary energy use and monetary production data, as this scenario captures both the CHP savings and the efficiency gain due to the growing value added. This difference between physical and monetary indicators confirms the conclusions of Freeman et al. (1997).

The downside of the scenarios that use value added as a proxy for production is the influence of price effects. If value added increases while energy consumption remains stable, this shows up as a volume effect, but also as an efficiency effect of the same size and opposite sign. This is correct in the sense that Joule/€ has decreased. However, value added can be influenced by factors that have no direct relation to production, e.g., market prices or changes in other production factors. In other words, we do not know whether production processes have become more energy-efficient.
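The price effect can be reproduced with the same additive LMDI-I formulas. In the hypothetical case below, energy use stays at 100 PJ while value added rises by 10% purely because of higher market prices; this is an assumption for illustration, with no change in physical production.

```python
import math


def log_mean(a: float, b: float) -> float:
    """Logarithmic mean L(a, b); requires a, b > 0, with L(a, a) = a."""
    return a if math.isclose(a, b) else (a - b) / (math.log(a) - math.log(b))


# Hypothetical: constant energy use, value added up 10% from prices alone.
e0 = e1 = 100.0     # PJ
va0, va1 = 5.0, 5.5  # bn EUR value added

w = log_mean(e1, e0)
volume = w * math.log(va1 / va0)                     # about +9.53 PJ
efficiency = w * math.log((e1 / va1) / (e0 / va0))  # about -9.53 PJ

print(f"volume {volume:+.2f} PJ, efficiency {efficiency:+.2f} PJ")
```

The two effects cancel exactly, so a pure price increase is reported as an apparent efficiency gain even though no production process has changed.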

Three-factor decomposition

In a real economy, changes in the structure of the economy can have a significant impact on energy intensity as well. If a sector with a relatively low energy intensity grows while a sector with high energy intensity remains stable, the energy intensity of the whole economy decreases, even though individual sectors remain at the same intensity level. This is visualized in the dataset in Table 3, in which sector A, as treated in the former section, is divided into two subsectors (A1 and A2).

When we use these data to decompose the change in energy consumption into a volume, structure, and efficiency effect, using the same four scenarios (Fig. 1), we obtain the results presented in Fig. 3.
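The way a two-factor decomposition hides structure effects can be shown with a minimal three-factor LMDI-I sketch. The subsector data below are hypothetical and are not the data of Table 3. Subsector A1 is energy-intensive and stable, A2 is less energy-intensive and doubles, and neither subsector changes its intensity, so any "efficiency" effect is in fact a structure effect.

```python
import math


def log_mean(a: float, b: float) -> float:
    """Logarithmic mean L(a, b); requires a, b > 0, with L(a, a) = a."""
    if math.isclose(a, b):
        return a
    return (a - b) / (math.log(a) - math.log(b))


def lmdi_three_factor(base: dict, end: dict) -> tuple[float, float, float]:
    """Additive LMDI-I with subsectors.

    base, end: {subsector: (energy, output)}. Returns (volume, structure, efficiency).
    """
    q0 = sum(q for _, q in base.values())
    q1 = sum(q for _, q in end.values())
    volume = structure = efficiency = 0.0
    for sub in base:
        e0, x0 = base[sub]
        e1, x1 = end[sub]
        w = log_mean(e1, e0)
        volume += w * math.log(q1 / q0)                   # total output Q
        structure += w * math.log((x1 / q1) / (x0 / q0))  # output share S_i
        efficiency += w * math.log((e1 / x1) / (e0 / x0))  # intensity I_i
    return volume, structure, efficiency


# Hypothetical subsectors (NOT the data of Table 3): (energy in PJ, output units).
base = {"A1": (80.0, 8.0), "A2": (4.0, 2.0)}
end = {"A1": (80.0, 8.0), "A2": (8.0, 4.0)}

vol3, str3, eff3 = lmdi_three_factor(base, end)

# Two-factor decomposition of the aggregated sector A, for comparison.
e0, e1 = sum(e for e, _ in base.values()), sum(e for e, _ in end.values())
q0, q1 = sum(q for _, q in base.values()), sum(q for _, q in end.values())
w = log_mean(e1, e0)
vol2 = w * math.log(q1 / q0)
eff2 = w * math.log((e1 / q1) / (e0 / q0))

print(f"three-factor: volume {vol3:+.2f}, structure {str3:+.2f}, efficiency {eff3:+.2f}")
print(f"two-factor:   volume {vol2:+.2f}, efficiency {eff2:+.2f}")
```

The two-factor run reports an "efficiency" effect of about -11.7 PJ, which the three-factor run assigns entirely to structure (efficiency effect 0). In this sketch the two volume effects differ slightly (about 15.6 versus 15.7 PJ), because the log-mean weights are computed per subsector rather than for the aggregate.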

Fig. 3 Three-factor decomposition for four scenarios applied to the dataset in Table 3

In the combination of final energy use and physical production data (scenario II), the efficiency effect is the smallest, due to the dissaving “other factors” and the fact that CHP savings do not contribute to final energy savings. The efficiency effect is highest in scenario III, as this scenario captures both the industrial CHP savings and the “efficiency” gain due to the growing value added. In the scenarios using primary energy, it should be noted that industrial companies do have control over the efficiency of their own industrial CHP installations, but not over the efficiency of power plants in the energy sector. The difference of a factor of 7 between the scenarios underlines the conclusions of Schlomann et al. (2015), who found that results based on different meaningful definitions of energy efficiency can differ by a factor of 10. This is in line with Cahill and Ó Gallachoír (2010), who concluded that energy intensity results include changes brought about by a variety of factors, such as hidden structural changes.

The volume effect in these scenarios is the same as found in the two-factor decomposition analysis. However, in all scenarios, the efficiency effect is smaller, because part of the former efficiency effect now appears as a structure effect. In the combination of final energy use and physical production data, the efficiency effect is even positive (dissaving), because CHP savings are not counted while the dissaving “other factors” cause a higher energy use. In the first scenario (final/monetary), both volume and structure effects are largest, as the two subsectors show different developments. This shows the effect of the choice of metrics for production and energy use.

We can illustrate this with an example from the asphalt industry, where more energy-efficient but also more expensive lower temperature asphalt competes with hot-temperature (normal) asphalt. Lower temperature asphalt uses considerably less energy during production than hot-temperature asphalt (Thives and Ghisi 2017). If policy aims to decrease the energy use (and hence emissions) from asphalt production, a tax on hot-temperature asphalt is a possible instrument. This instrument would increase the market share of lower temperature asphalt and contribute to the policy target. Let us first consider all lower temperature and hot-temperature asphalt as the same product (ton asphalt). After all, the two products have the same basic characteristics. We would observe a decrease in average energy use and, therefore, a savings effect. But if we consider lower temperature and hot-temperature asphalt as different products, we would not see a savings effect, but a structure effect. If the tax decreased total asphalt production (as the higher price might lower total demand), this would be visible not as a saving, but as a volume effect.
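The asphalt example can be expressed with a frozen-efficiency reference energy use, i.e., the energy that would have been used at base-year specific energy consumption (SEC). All SEC and production values below are hypothetical and are not taken from Thives and Ghisi (2017). Total production is assumed unchanged, so only the product mix shifts.

```python
# Hypothetical specific energy consumption in GJ per tonne (illustrative values only).
sec = {"hot": 1.0, "low_temp": 0.7}

# Hypothetical production in kt before and after a tax on hot asphalt:
# total output is unchanged, only the product mix shifts.
before = {"hot": 90.0, "low_temp": 10.0}
after = {"hot": 60.0, "low_temp": 40.0}


def energy_tj(production: dict) -> float:
    """Energy use in TJ (kt multiplied by GJ/t gives TJ)."""
    return sum(sec[p] * q for p, q in production.items())


# View 1: one product ("ton asphalt"); reference uses the frozen base-year average SEC.
avg_sec_before = energy_tj(before) / sum(before.values())
savings_aggregated = avg_sec_before * sum(after.values()) - energy_tj(after)

# View 2: two products; reference uses the frozen SEC per product (unchanged here).
reference_detailed = sum(sec[p] * q for p, q in after.items())
savings_detailed = reference_detailed - energy_tj(after)

print(f"one product:  savings {savings_aggregated:.1f} TJ")  # 9.0 TJ
print(f"two products: savings {savings_detailed:.1f} TJ")    # 0.0 TJ (a structure effect)
```

The same 9 TJ reduction in energy use counts as a saving in the aggregated view and as a pure structure effect in the detailed view.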

This example shows that whether an effect is accounted as a volume, structure, or a savings effect depends on the level of detail of volume and structure data being used; when data are known on a more detailed, subsectoral level, effects become visible that remain hidden when only data on a higher aggregation level are used. This has important consequences: detailed data lead to other results than aggregated data. If specific volume and structure information is not available, full decomposition is not possible.

How are these methods applied in the Netherlands?

Methods in use

The “What are the reasons for the differences in methods?” section showed the impact of the use of different metrics to calculate progress in energy efficiency. In this section, we look at how these choices were made in the different methods used in the Netherlands to calculate energy efficiency indicators for industry. Three of these are top-down methods. Three methods are used nationally, while a fourth is used for international comparison. In addition to these methods, several policy instruments have their own monitoring instruments using bottom-up data on implemented projects. The voluntary agreements LTA and LEE in the Netherlands are used as an example of the latter methods, making it possible to compare bottom-up with top-down methods. We briefly introduce these methods below.

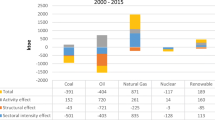

The oldest method is the protocol monitoring energy saving (PME), which dates from 2001. The PME was developed at the request of the Dutch Ministry of Economic Affairs to provide a uniform method to measure energy consumption and savings annually. An energy saving effect is calculated nationally and for every main sector, based on data from national statistics. For industry, the average annual saving in the period 2000–2010 is 1.1% (see Fig. 4) (ECN 2012). The protocol defines energy savings as the difference between actual energy use and a frozen-efficiency reference energy use. The saving effect is the residual after correction for volume and structure effects (Boonekamp et al. 2001).

Fig. 4 Final energy savings in industry (excluding the chemical industry) based on PME (ECN 2012)

Several changes have been made to the original method to align it with European reporting formats (ECN 2012).

The European Energy Efficiency Directive (EED) (European Commission 2012), in place since 2012, is a framework directive which sets overarching objectives and targets to be achieved by a coherent and mutually reinforcing set of measures covering virtually all aspects of the energy system (Coalition for Energy Saving 2013). It is the successor of the 2006 Energy Services Directive (ESD) (European Parliament and Council 2006). Under art. 7, Member States must ensure that the required amount of energy savings is achieved through national energy efficiency obligation schemes or other “policy measures.” These policy measures need to be designed to achieve “end-use energy savings” which are “among final customers” over the 2014–2020 period. Member States shall express the amount of energy savings required of each obligated party in terms of either final or primary energy consumption (European Commission 2012). In addition, under art. 3, Member States should define an indicative national energy efficiency target, based on either primary or final energy consumption, primary or final energy savings, or energy intensity (European Commission 2012).

Implementation of the EED in the Netherlands did not lead to one distinct new policy instrument, but rather to a series of adaptations of existing instruments. Art. 7 of the EED prescribes a method to calculate energy efficiency improvement. Policy measures that are primarily intended to support policy objectives other than energy efficiency or energy services, as well as policies that trigger end-use savings that are not achieved among final consumers, are excluded. Only energy savings that result from real “individual actions” triggered by the implementation of these policy measures are to be taken into account. Member States may not count actions that would have happened anyway (European Commission 2012). For Dutch industry, this means that an existing instrument like the Long-Term Agreements can only partly be counted towards EED savings (under the exception that savings can be counted against the target when they result from energy saving actions newly implemented between 31 December 2008 and the beginning of the obligation period and still have an impact in 2020), but adaptations to this instrument (extra demands on participants) can be counted as EED savings.

ODEX is the index used in the ODYSSEE-MURE project to measure energy efficiency progress by main sector (industry, transport, households) and for the whole economy (all final consumers) for all 28 countries in the EU (Enerdata 2010). The ODYSSEE database contains detailed energy efficiency and CO2 indicators, i.e., about 180 indicators on energy consumption by sector and end-use, activity drivers, and related CO2 emissions, in about 600 data series. The ODYSSEE database provides comprehensive monitoring of energy efficiency trends in all the sectors and priority areas addressed by EU policies. The database contributes to the development of a monitoring methodology based on top-down assessment of energy savings, through different types of indicators (Ademe 2015). There is no formal relation between the Directive and Odyssee. Although the Odyssee reports were meant to monitor Member States' progress towards implementation of the EED targets, the Energy Efficiency Directive itself does not mention ODEX or the Odyssee project.

Indices are calculated from changes in unit energy consumption indicators, measured in physical units. If no physical unit is available, an economic unit (joule per euro of value added) is used instead.
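To make this construction concrete, the sketch below computes a simplified, fixed-weight index of this kind: each subsector’s unit consumption (energy per unit of activity) is indexed to a base year, and these indices are averaged using the subsectors’ base-year energy shares as weights. It is an illustration under stated assumptions, not the official ODEX algorithm, which may differ in details such as the weighting scheme (Enerdata 2010). The `Subsector` class, the function name and all numbers are hypothetical. An index below 100 signals lower unit consumption, i.e., apparent efficiency progress.

```python
from dataclasses import dataclass


@dataclass
class Subsector:
    """Hypothetical subsector record: yearly energy use and activity."""
    name: str
    energy: list[float]    # energy use per year, e.g. in PJ
    activity: list[float]  # physical output (e.g. Mt) or, if unavailable, value added (e.g. bn EUR)

    def unit_consumption(self, t: int) -> float:
        """Energy per unit of activity in year index t."""
        return self.energy[t] / self.activity[t]


def simplified_odex(subsectors: list[Subsector], base: int = 0) -> list[float]:
    """Fixed-weight index of unit consumption, base year = 100.

    Simplification (assumption): weights are the base-year energy shares and
    are never updated over time.
    """
    total_base = sum(s.energy[base] for s in subsectors)
    weights = [s.energy[base] / total_base for s in subsectors]
    n_years = len(subsectors[0].energy)
    index = []
    for t in range(n_years):
        # weighted average of each subsector's unit consumption relative to the base year
        ratio = sum(w * s.unit_consumption(t) / s.unit_consumption(base)
                    for w, s in zip(weights, subsectors))
        index.append(100.0 * ratio)
    return index


# Hypothetical two-subsector example over two years.
steel = Subsector("steel", energy=[100.0, 98.0], activity=[10.0, 10.5])           # Mt
chemicals = Subsector("chemicals", energy=[200.0, 204.0], activity=[20.0, 22.0])  # bn EUR value added
odex = simplified_odex([steel, chemicals])
print([round(x, 1) for x in odex])  # [100.0, 92.9]
```

In this example the chemicals subsector is measured in value added, as ODEX does for the Dutch chemical industry, so its apparent progress mixes technical efficiency with the growth of value added relative to physical output.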

Bottom-up methods are based on monitoring or evaluation of policy instruments, like the voluntary agreements LTA and LEE. They differ from the methods described above in that they do not provide a figure at the national level but are limited to the effects of their own instrument. They are based on the savings of individual projects implemented by companies, as reported in yearly monitoring reports or subsidy request forms. These methods can help explain observed developments.

A special case of a bottom-up method is the monitoring of the Energy Agreement. It is special because it sets targets for the Netherlands as a whole and for individual sectors, but counts only those savings that can be attributed to one of the 150 actions intended to reach 1.5% efficiency improvement per year and 100 PJ of final energy savings. As such, it is a bottom-up method, although it also uses PME results to report on general progress in efficiency.

Comparison

Table 4 shows the differences between the methods described above. These criteria were selected based on an inventory of all possible differences and similarities between the numbers on energy efficiency in industry that were reported in the Netherlands. Important differences between the methods are the choice of final or primary energy use, the choice of a reference energy use, and the extent to which policy-induced savings from different instruments are distinguished from autonomous developments. PME and ODEX are independent methods not coupled to a policy target, while the others are directly coupled to policy targets. The many aspects in which the methods differ make a comparison difficult. The only aspect on which the three national top-down methods agree is that a decrease in final energy use and the effects of national policy count as savings.Footnote 2 In most other aspects, the methods differ so much that comparison is impossible (Schoots and Hammingh 2015). The decomposition in the “What are the reasons for the differences in methods?” section provides a theoretical interpretation of the differences in results.

Policy makers want to know not only whether the policy target is reached but also whether savings are induced by their policy instruments (effectiveness): have their instruments brought about the desired effect? However, not all savings are induced by policy instruments, and conversely, policy instruments influence not only savings but possibly also sector activity and structure.

The methods differ in how changes are assigned to a volume, structure, or efficiency effect. For example, PME is stricter in its definition of savings than the EA. The EA aims to lower final energy use, regardless of the cause. Therefore, instruments that result in a decrease in the activity level or in a shift towards a less energy-intensive mix of activities will be seen as a policy effect by the EA. PME classifies these not as a savings effect but as a volume or structure effect (Schoots and Hammingh 2015). In other words, the same development is assigned to a different effect depending on the method applied.
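This attribution problem can be made concrete with an additive LMDI-I decomposition (Ang 2004), which splits a change in energy use into volume, structure and efficiency effects that add up exactly to the total change. The sketch below is not claimed to be the decomposition used by PME or the EA; the function names and numbers are hypothetical. It models a case in which activity shifts towards a lighter subsector while subsector intensities stay constant: a method that counts any drop in final energy as savings (as the EA does, per the text above) reports 4 units of savings, while a method that counts only the efficiency effect (as PME does) reports none.

```python
import math


def log_mean(a: float, b: float) -> float:
    """Logarithmic mean L(a, b), the weight used in additive LMDI-I (a, b > 0)."""
    if math.isclose(a, b):
        return a
    return (a - b) / (math.log(a) - math.log(b))


def lmdi_additive(e0, e1, q0, q1):
    """Split the change in total energy use into volume, structure and efficiency effects.

    e0, e1: energy use per subsector in the base and end year (all > 0)
    q0, q1: activity per subsector in the base and end year, in ONE common unit
            (e.g. value added), because total activity is their sum.
    The three effects add up exactly to sum(e1) - sum(e0).
    """
    total0, total1 = sum(q0), sum(q1)
    volume = structure = efficiency = 0.0
    for ea, eb, qa, qb in zip(e0, e1, q0, q1):
        w = log_mean(eb, ea)
        volume += w * math.log(total1 / total0)                   # overall activity level
        structure += w * math.log((qb / total1) / (qa / total0))  # subsector share of activity
        efficiency += w * math.log((eb / qb) / (ea / qa))         # subsector energy intensity
    return volume, structure, efficiency


# Hypothetical case: total activity grows 10 % and shifts from an energy-intensive
# subsector (intensity 2.0) to a light one (intensity 0.4); intensities stay constant.
e0, q0 = [100.0, 20.0], [50.0, 50.0]
e1, q1 = [90.0, 26.0], [45.0, 65.0]
vol, struct, eff = lmdi_additive(e0, e1, q0, q1)
total_change = sum(e1) - sum(e0)   # -4.0

ea_style_savings = -total_change   # any drop in final energy counts: 4.0
pme_style_savings = -eff           # only the efficiency effect counts: 0.0
```

Here the positive volume effect is more than offset by a negative structure effect, and the efficiency effect is zero, so the two attribution rules give 4 and 0 units of savings from identical data.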

Considering that the methods have been developed for different purposes and the differences listed in Table 4, it is not surprising that they yield different results. Table 5 shows indicators on energy efficiency in Dutch industry since 2000. The results differ by more than a factor of 2. Table 5 contains no data for the EA and the EED, as no data are available for a comparable period: EED art. 7 specifically targets cumulative savings over the period 2014–2020, which are calculated according to EED art. 7 requirements and are not comparable with the usual figures, which express savings achieved in a single year relative to a base year. Schoots and Hammingh (2016) report 1 PJ of final energy savings for industry in 2016 compared to 2000 that can be attributed to the EA, and 91 PJ of energy savings for industry eligible under art. 7 of the EED. The many different outcomes resemble the results of Voswinkel (2018), who was able to calculate eight different but correct results from the same dataset.
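Simple arithmetic shows why cumulative art. 7 figures cannot be set next to annual savings figures. The sketch below assumes, purely for illustration, that the same amount of new savings is realized in each year from 2014 to 2020 and that every measure keeps its full effect until 2020. The function name is hypothetical, and the real art. 7 accounting (including exemptions and measure lifetimes) is more involved. Under these assumptions, savings counted cumulatively over the period are four times the annual savings reached in 2020.

```python
def annual_and_cumulative(new_savings: dict[int, float], end_year: int = 2020) -> tuple[float, float]:
    """Return (savings in end_year, cumulative savings summed over all years up to end_year).

    Assumption: every measure keeps its full effect until end_year (no decay, no exemptions).
    new_savings maps a year to the new annual savings from measures taken in that year.
    """
    annual_in_end_year = 0.0
    cumulative = 0.0
    for year in range(min(new_savings), end_year + 1):
        # savings active in this year: all measures taken up to and including this year
        active = sum(s for y, s in new_savings.items() if y <= year)
        cumulative += active
        if year == end_year:
            annual_in_end_year = active
    return annual_in_end_year, cumulative


# One unit of new savings in every year 2014-2020.
annual_2020, cumulative_2014_2020 = annual_and_cumulative({y: 1.0 for y in range(2014, 2021)})
print(annual_2020, cumulative_2014_2020)  # 7.0 28.0
```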

To better understand the reasons behind these differences, we examine developments in more detail. In Dutch industry, growth of value added is structurally higher than the increase in physical production (Schoots and Hammingh 2015), resulting in a decrease in energy intensity for the sector as a whole even when the production of individual products is not becoming more efficient. The industrial subsectors experienced a decrease in energy consumption (2000–2010), in line with a lower energy intensity within these sectors. This is a result of a structural change towards less energy-intensive production (Gerdes 2012). This decrease even compensated for an increase in the contribution of the (energy-intensive) chemical sector in the Netherlands between 2000 and 2008 (Ademe 2012). In the case of the Netherlands, the fact that ODEX measures the production of the chemical industry (which accounts for half of the total industrial energy use of the Netherlands) in euros results in a larger efficiency effect in ODEX (2.3%/year) than in PME (1.1%/year), because value added in this sector has increased faster than physical production (Odyssee 2012). In the PME method, the reference energy use in the chemical sector is based on the physical production of several products (via Prodcom).Footnote 3 In PME, a change in the product mix will be visible as a structure effect or, if no data on subsectors are available, as a smaller volume effect. This difference in outcome is visible in the “Two-factor decomposition” section as the difference between Figs. 1 and 4.
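The effect of the activity indicator can be illustrated with a hypothetical sector in which energy use stays flat while value added grows faster than physical output, the pattern described above for Dutch chemicals. All numbers and function names are invented; the sketch does not reproduce the ODEX or PME figures and only shows the mechanism. The same energy data yield a much larger apparent efficiency gain when intensity is expressed per euro than when it is expressed per tonne.

```python
def intensity_change(e0: float, e1: float, a0: float, a1: float) -> float:
    """Relative change in energy intensity E/A between two years (negative = decrease)."""
    return (e1 / a1) / (e0 / a0) - 1.0


def annualized(total_change: float, years: int) -> float:
    """Convert a total relative change into an average compound annual rate."""
    return (1.0 + total_change) ** (1.0 / years) - 1.0


# Hypothetical sector: energy use flat, physical output +10 %, value added +30 %.
energy = (100.0, 100.0)
tonnes = (50.0, 55.0)
value_added = (20.0, 26.0)

per_tonne = intensity_change(energy[0], energy[1], tonnes[0], tonnes[1])           # about -9.1 %
per_euro = intensity_change(energy[0], energy[1], value_added[0], value_added[1])  # about -23.1 %

# Over a hypothetical ten-year period: about -0.95 %/yr (physical) vs -2.6 %/yr (monetary).
rates = (annualized(per_tonne, 10), annualized(per_euro, 10))
```

Nothing in the sector’s technology differs between the two results; only the denominator of the intensity indicator has changed.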

LTA savings are higher than PME savings because the bottom-up reporting of savings from implemented energy-saving projects measures only savings and does not capture developments that might have adverse effects on energy use or energy efficiency.

What are the advantages and disadvantages of the methods?

The “How are these methods applied in the Netherlands?” section showed the differences that follow from the choices made in terms of scope and data used. These choices also define the possible uses of the outcome; the outcome of one method cannot be used for all possible purposes. This should always be kept in mind when interpreting results, especially when results are compared. Which method is most suitable depends on the aim of the analysis; every method has its own (dis)advantages, which are summarized in Table 6.

The PME method has been in use since 2001 and can be used to compare historical and future trends in energy efficiency in the Netherlands. However, due to the differences with the European methods ODEX and EED, the results cannot be compared with those of other countries. Another disadvantage is that the PME results are sometimes confusing to policy makers: some policy effects are counted as volume or structure effects, hiding the policy effect. Only part of the efficiency effect measured in PME counts as savings for the EED, as PME also counts the effect of EU and older policy instruments.

ODEX is designed to compare European countries, which makes it the best available method for this purpose. However, the method is largely dependent on the availability of data. The choice between physical and economic production data can have a large effect on the outcome, especially in economies in transition. As countries make different choices regarding this issue, comparability between countries is doubtful.

Cahill and Ó Gallachoír (2010) also showed that fluctuations in the dataset used to calculate the indices can significantly influence the values yielded by the indices. Both ODEX (using unit consumption) and the LMDI approach (using energy intensity based on value added) overestimated the savings for Irish industry, with ODEX showing the greater overestimation of energy efficiency achievements of around 0.3 percentage points per year.

Another difference between ODEX and PME is the use of final versus primary energy. This implies a different allocation of energy consumption to sectors, but also a different treatment of industrial CHP. Industrial CHP in the Netherlands grew rapidly in the 1990s but has been in decline since 2008 owing to unfavorable market conditions. As a result, electricity from CHP is being replaced by electricity from less efficient power plants. PME, which uses primary energy, will therefore register an increase in primary energy use, while final energy use remains the same.
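A stylized calculation shows why the decline of CHP appears in primary energy but not in final energy. It assumes that final energy is represented by the heat and electricity the site consumes, and it uses hypothetical conversion efficiencies; these choices, like the function name, are for illustration only and are not the accounting rules of PME or of the energy statistics. Crediting any CHP power surplus at the power-plant efficiency is likewise an assumption. When CHP is replaced by a boiler plus grid electricity, the site’s final energy use is unchanged while its fuel input rises by about a third.

```python
def fuel_input(heat: float, power: float, use_chp: bool,
               chp_el_eff: float = 0.35, chp_heat_eff: float = 0.45,
               boiler_eff: float = 0.90, plant_eff: float = 0.42) -> float:
    """Primary energy (fuel) needed to deliver the same heat and power to a site.

    All efficiencies are hypothetical. With CHP, the unit is sized on heat demand;
    any power shortfall is bought from (or surplus credited to) the grid at plant_eff.
    """
    if use_chp:
        chp_fuel = heat / chp_heat_eff
        grid_power = power - chp_fuel * chp_el_eff
        return chp_fuel + grid_power / plant_eff
    # separate production: heat from an on-site boiler, power from a central power plant
    return heat / boiler_eff + power / plant_eff


heat_demand, power_demand = 45.0, 35.0       # PJ of heat and electricity used on site
final_energy = heat_demand + power_demand    # 80 PJ in both cases
primary_with_chp = fuel_input(heat_demand, power_demand, use_chp=True)      # about 100 PJ
primary_without_chp = fuel_input(heat_demand, power_demand, use_chp=False)  # about 133 PJ
```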

For most sectors, ODEX is comparable with the PME method, but for reasons of comparability between Member States and lack of data for some subsectors, energy intensity is used as a measure of energy efficiency. Where the former method includes volume effects (services, physical production units, etc.), the latter does not, or at least to a lesser extent. This difference in approach needs to be kept in mind when looking at the ODEX figures. ODEX cannot discern structural changes within the chemical industry, as data on the (physical) amount of chemicals produced are not collected for ODEX. Compared to bottom-up evaluations, efficiency gains measured in Odyssee have a broader scope and include all sources of energy efficiency improvement: policy measures, price changes, autonomous technical progress, and other market forces (Odyssee 2015). Horowitz and Bertoldi (2015) have isolated the part of the changes in ODEX that is due to the effects of EU and national energy policy. They also conclude that there is a statistical relationship between bottom-up estimates of energy efficiency progress and actual energy consumption.

For the Energy Agreement, only those savings that result from the deals that are part of the EA are eligible. As the EA is a mix of new instruments and adaptations of existing European and national instruments, it is difficult to compare its results with those of other instruments or with national savings figures. As such, the results are only useful to monitor the progress of the EA itself.

The main advantage of the EED method is that it defines the gap between a country’s current position and its target as defined by the EED. However, Member States can choose from a number of exemptions in the definition of their end-use target under art. 7. For instance, Member States can choose to exclude the transport sector and/or part of the ETS sectors from their energy use. This makes targets from different countries incomparable and difficult to interpret. Not only the targets, but also the reported realized savings are difficult to interpret. This problem stems, among other things, from the following (Coalition for Energy Saving 2013):

- A vague baseline, because of the many possible exemptions
- Possible double counting (e.g., subsidy schemes and LTA/LEE both reporting the same saving)
- Not all policy measures are eligible

In general, the bottom-up results of individual policy instruments based on implemented projects give good insight into actual investments in energy efficiency projects and provide detailed insight into the implementation of new technologies, but they cannot be aggregated to a national level because the savings of different instruments overlap. Moreover, it is very difficult to distinguish between autonomous and additional savings. Efforts have been made to establish additionality, but these are associated with a high degree of uncertainty. The bottom-up methods also disregard other factors that influence energy consumption and therefore have no direct relation to the actual development of energy consumption or energy efficiency. A company can install energy-saving equipment while at the same time implementing changes in production that lead to higher energy consumption. Other choices that can have a large effect on the size of the savings effect are whether corrections are made, e.g., for weather influences, capacity utilization, free riders, rebound effects, or double counting.
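The aggregation problem can be sketched in a few lines. The example assumes, hypothetically, that projects carry an identifier shared across instruments, which in practice is often not the case; the project IDs, savings figures, function names and the 30% free-rider share are all invented. Summing instrument totals double counts a project reported under two schemes, and even after deduplication the result depends on an uncertain estimate of free riders.

```python
# Hypothetical project-level savings (PJ/year) reported under two instruments.
# Project "P2" is reported both under the LTA and under a subsidy scheme.
reports = {
    "LTA": {"P1": 0.8, "P2": 0.5, "P3": 0.3},
    "subsidy": {"P2": 0.5, "P4": 0.4},
}


def naive_total(reports: dict[str, dict[str, float]]) -> float:
    """Add up everything each instrument reports (double counts shared projects)."""
    return sum(sum(projects.values()) for projects in reports.values())


def deduplicated_total(reports: dict[str, dict[str, float]]) -> float:
    """Count each project once, keeping the largest figure reported for it."""
    per_project: dict[str, float] = {}
    for projects in reports.values():
        for project_id, saving in projects.items():
            per_project[project_id] = max(per_project.get(project_id, 0.0), saving)
    return sum(per_project.values())


def net_savings(gross: float, free_rider_share: float) -> float:
    """Remove savings that would have happened anyway (the share is an assumption)."""
    return gross * (1.0 - free_rider_share)


gross_naive = naive_total(reports)          # about 2.5
gross_unique = deduplicated_total(reports)  # about 2.0
net = net_savings(gross_unique, 0.3)        # about 1.4 with an assumed 30 % free-rider share
```

Keeping the maximum for a duplicated project is only one possible convention; preferring the monitoring of one instrument over another would be equally defensible and could give a different total.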

Conclusions and recommendations

Conclusions

The most important difference between the methods described in the “Results and discussion” section lies in the choice of metrics, which has a large effect on the outcome. The main choices for any energy efficiency method are the energy measure (final or primary energy) and the activity indicator (monetary or physical).

The choice of metrics is sometimes made deliberately, but is often a consequence of data availability. However, a choice made due to lack of data leads to misleading results. Whether an effect is shown as a volume, structure, or efficiency effect depends on the indicator selected for production and on the level of aggregation of the information available and used in the particular method. The scenarios in the “What are the reasons for the differences in methods?” section showed that a different choice of metrics can lead to a difference of a factor of 7 in the efficiency effect. Because of the large and fundamental differences between the methods, it is not possible to compare their outcomes directly. To a certain extent, it is possible to translate the results of one method into those of another, but only with a good understanding of the underlying method, definitions, and data used. Therefore, one should not translate results of one method into those of another without that understanding, and should always bear in mind the aim and background of the particular method before interpreting the result. This important conclusion holds for methods used within one country, but even more so when comparing results from different countries, where differences in structure add to the differences in methodology.

As we saw in the “Methods in use” section, the current Dutch energy policy to reduce energy use is a mix of instruments, existing and new, targeting generic or specific groups, and steering target groups to change their behavior through enforcement, seduction, and/or information. To follow this mix of policy measures, the Netherlands uses several methods to calculate energy savings. Importantly, the methods differ in aim, scope, data used, and the factors into which effects are decomposed in the calculations. It seems that every new policy instrument leads to a new evaluation method, resulting in a set of figures that cannot be compared, not only within the Netherlands but also across the EU. Another reason for the different methods is the diverging requirements of different legislative texts and their reporting. Therefore, it is unlikely, at least in the short to medium term, that harmonized methods will be used in all countries.

While comparing efficiency data within a country is difficult, comparing efficiency results of different countries is even more challenging. Art. 7 of the EED leaves countries many options to design their own method. Therefore, it is not sensible to compare EED savings to other results.

An important finding from this paper is that it is not possible to nominate one of these methods as the “methodologically most correct” method. It is only possible to tell which method is most suitable for a certain purpose. This depends on the aim of the analysis; every method has its own (dis)advantages (see Table 6). In evaluating energy savings indicators, one needs to keep in mind that indicators in some cases do not purely represent energy savings. It is important to have good insight into the method to know whether other effects, like volume or structure, are included as well. The method used should therefore be clearly reported with the results.

Recommendations

Our first recommendation is that scientists should look not only for the indicator that best represents progress in efficiency, but also for the indicator that gives the best support to policy makers. For an indicator to be useful for a policy maker, it is necessary that the indicator has a close relation to the policy target and to the policy instruments that are used to reach that target. One might question whether it is useful to know if an effect should be considered an efficiency effect or a structure effect, as long as the effect is there. The first priority for a policy maker is to know if the policy target is reached. The second priority is to know if this effect was reached as a consequence of policy instruments. The monitoring system should be designed bearing in mind what is possible in practice with reasonable resources.

Our second recommendation is that, to design a monitoring system that better suits the needs of policy makers, one should design a system that is simpler and easier to communicate, focusing either on the development of easy-to-understand indicators or on the effect of individual policy instruments, but not both.

Solutions thus far have tried to provide more detail, decomposing changes in energy consumption into ever more separate factors in an attempt to isolate the effect one is searching for. But a more detailed monitoring instrument is more expensive and, as this study shows, does not necessarily lead to better insight into the effect of policy instruments. Even a very detailed decomposition will not succeed in isolating the effect of a single policy instrument. Evaluation of individual instruments is more suited to this task.

Scientists have tried to design better monitoring systems. However, these efforts have not led to more comprehensive indicators. On the contrary, they have led to solutions with more degrees of freedom regarding the choice of metrics and applied corrections. The solutions provided thus far have focused too much on mathematical correctness, at the expense of applicability for policy makers.

Notes

LTA = Long-Term Agreement on energy efficiency. LEE = Long-Term Agreement on energy efficiency for EU ETS enterprises

Savings on final energy use automatically lead to savings on primary energy, but this is not necessarily the case the other way round.

Prodcom provides statistics on the production of manufactured goods. The term comes from the French “PRODuction COMmunautaire” (Community Production) and covers mining, quarrying, and manufacturing: sections B and C of the Statistical Classification of Economic Activities in the European Community (NACE Rev. 2). http://ec.europa.eu/eurostat/web/prodcom

References

Abeelen, C. J., Harmsen, R., & Worrell, E. (2016). Counting project savings—An alternative way to monitor the results of a voluntary agreement on industrial energy savings. Energy Efficiency, 9, 755–770.

Ademe. (2015). Energy efficiency trends and policies in industry 2015. France: Ademe.

Ademe. (2012). Energy efficiency policies in industry. Lessons learned from the ODYSSEE-MURE project. Paris: Ademe.

Ang, B. W. (2004). Decomposition analysis for policymaking in energy: Which is the preferred method? Energy Policy, 32, 1131–1139.

APERC. (2000). A study of energy efficiency indicators for industry in APEC economies. Japan: APERC.

Boonekamp, P. G. M. (2005). Improved methods to evaluate realized energy savings. PhD thesis. the Netherlands: Utrecht University.

Boonekamp, P. G. M. (2006). Evaluation of methods used to determine realized energy savings. Energy Policy, 34, 3977–3992.

Boonekamp, P. G. M., Tinbergen, W., Vreuls, H. H. J., & Wesselink, B. (2001). Protocol monitoring energy savings. Petten: ECN. ECN-C-01-129; RIVM 408137005.

Cahill, C., & Ó Gallachoír, B. P. (2010). Monitoring energy efficiency trends in European industry: Which top-down method should be used? Energy Policy, 38, 6910–6918.

Cahill, C., & Ó Gallachoír, B. P. (2012a). Quantifying the savings of an industry energy efficiency programme. Energy Efficiency, 5, 211–224.

Cahill, C., & Ó Gallachoír, B. P. (2012b). Combining physical and economic output data to analyse energy and CO2 emissions trends in industry. Energy Policy, 49, 422–429.

Coalition for Energy Saving. (2013). EU Energy Efficiency Directive (2012/27/EU). Guidebook for strong implementation.

ECN. (2012). Energiebesparing in Nederland 2000–2010. Petten: ECN.

EEA (European Environmental Agency). (2013). Trends and projections in Europe 2013 – Tracking progress towards Europe's climate and energy targets until 2020. EEA Report No 10/2013.

EEA (European Environmental Agency). (2015). Trends and projections in Europe 2015 – Tracking progress towards Europe’s climate and energy targets until 2020. EEA Report No 4/2015.

Enerdata. (2010). Definition of ODEX indicators in Odyssee database.

European Commission. (2012). Directive 2012/27/EU of the European Parliament and of the council of 25 October 2012 on energy efficiency, amending directives 2009/125/EC and 2010/30/EU and repealing directives 2004/8/EC and 2006/32/EC. OJ L 315, 14.11.2012.

European Parliament & Council. (2006). Directive 2006/32/EC of the European Parliament and of the Council of 5 April 2006 on energy end-use efficiency and energy services and repealing Council Directive 93/76/EEC. OJ L 114, 27.4.2006, p. 64–85.

Farla, J., & Blok, K. (2000). The use of physical indicators for monitoring of energy intensity developments in the Netherlands 1980-1995. Energy, 25(7), 609–638.

Farla, J., & Blok, K. (2001). The quality of energy intensity indicators for international comparison in the iron and steel industry. Energy Policy, 29, 523–543.

Fisher-Vanden, K., et al. (2002). What is driving China’s decline in energy intensity? Resource and Energy Economics, 26(1), 77–97.

Freeman, S. L., Niefer, M. J., & Roop, J. M. (1997). Measuring industrial energy intensity: Practical issues and problems. Energy Policy, 25(7–9), 703–714.

Gerdes, J. (2012). Energy efficiency policies and measures in the Netherlands. Odyssee-mure 2012. Monitoring of EU and national energy efficiency targets. ECN, Petten.

Horowitz, M. J., & Bertoldi, P. (2015). A harmonized calculation model for transforming EU bottom-up energy efficiency indicators into empirical estimates of policy impacts. Energy Economics, 51, 135–148. https://doi.org/10.1016/j.eneco.2015.05.020

Odyssee. (2012). Profiel energie-efficiëntie: Nederland. Odyssee-MURE, August 2012.

Odyssee. (2015). Synthesis: energy efficiency trends and policies in the EU. An analysis based on the ODYSSEE and MURE databases. Odyssee-MURE, September 2015.

Pérez-Lombard, L., Ortiz, J., & Velázquez, D. (2013). Revisiting energy efficiency fundamentals. Energy Efficiency, 6, 239–254.

Phylipsen, G. J. M., Blok, K., & Worrell, E. (1997). International comparisons of energy efficiency—Methodologies for the manufacturing industry. Energy Policy, 25(7–9), 715–725.

Schlomann, B., Rohde, C., & Plötz, P. (2015). Dimensions of energy efficiency in a political context. Energy Efficiency, 8, 97–115.

Schoots, K. & Hammingh, P. (2015). Nationale energieverkenning 2015. ECN-0—15-033. Petten: Energieonderzoek Centrum Nederland.

Schoots, K. & Hammingh, P. (2016). Nationale energieverkenning 2016. ECN-0—16-035. Petten: Energieonderzoek Centrum Nederland.

Smit, T. A. B., Hu, J., & Harmsen, R. (2014). Unravelling projected energy savings in 2020 of EU member states using decomposition analyses. Energy Policy, 74, 271–285.

Thives, L. P., & Ghisi, E. (2017). Asphalt mixtures emission and energy consumption: A review. Renewable and Sustainable Energy Reviews, 72, 473–484.

Tweede Kamer der Staten-Generaal. (2017). Vierde Nationale Energie Efficiëntie Actie Plan voor Nederland. kst-31209-213.

Voswinkel, F. (2018). Accounting of energy savings in policy evaluation. How to get at least 8 different (correct!) results from the same data. 2018 IEPPEC conference paper-Vienna, Austria.

Worrell, E., Cuelenaere, R. F. A., Blok, K., & Turkenburg, W. C. (1994). Energy consumption by industrial processes in the European Union. Energy, 19(11), 1113–1129.

Worrell, E., Price, L., Martin, N., Farla, J., & Schaeffer, R. (1997). Energy intensity in the iron and steel industry: A comparison of physical and economic indicators. Energy Policy, 25, 727–744.


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Abeelen, C.J., Harmsen, R. & Worrell, E. Disentangling industrial energy efficiency policy results in the Netherlands. Energy Efficiency 12, 1313–1328 (2019). https://doi.org/10.1007/s12053-019-09780-4


DOI: https://doi.org/10.1007/s12053-019-09780-4