Abstract

Due to the subjective nature of music mood, it is challenging to computationally model the affective content of the music. In this work, we propose novel features known as locally aggregated acoustic Fisher vectors based on the Fisher kernel paradigm. To preserve the temporal context, onset-detected variable-length segments of the audio songs are obtained, for which a variational Bayesian approach is used to learn the universal background Gaussian mixture model (GMM) representation of the standard acoustic features. The local Fisher vectors obtained with the soft assignment of GMM are aggregated to obtain a better performance relative to the global Fisher vector. A deep Gaussian process (DGP) regression model inspired by the deep learning architectures is proposed to learn the mapping between the proposed Fisher vector features and the mood dimensions of valence and arousal. Since the exact inference on DGP is intractable, the pseudo-data approximation is used to reduce the training complexity and the Monte Carlo sampling technique is used to solve the intractability problem during training. A detailed derivation of a 3-layer DGP is presented that can be easily generalized to an L-layer DGP. The proposed work is evaluated on the PMEmo dataset containing valence and arousal annotations of Western popular music and achieves an improvement in \(R^2\) of \(25\%\) for arousal and \(52\%\) for valence for music mood estimation and an improvement in the Gamma statistic of \(68\%\) for music mood retrieval relative to the baseline single-layer Gaussian process.

Similar content being viewed by others

References

Brinker B, Dinther R and Skowronek J 2012 Expressed music mood classification compared with valence and arousal ratings. EURASIP Journal of Audio, Speech and Music Processing 24: 1–14

Zhang K, Zhang H, Li S, Yang C and Sun L 2018 The PMEmo dataset for music emotion recognition. In: Proceedings of the\(8{th}\)International Conference on Multimedia Retrieval, ICMR 2018, pp. 135–142

Wang J, Yang Y, Wang H and Jeng S 2015 Modeling the affective content of music with a Gaussian mixture model. IEEE Transactions on Affective Computing 6: 56–68

Panda R, Malheiro R and Paiva R 2018 Novel audio features for music emotion recognition. IEEE Transactions on Affective Computing 1–14

Chin Y, Wang J, Wang J and Yang Y 2016 Predicting the probability density function of music emotion using emotion space mapping. IEEE Transactions on Affective Computing 1–10

Fukayama S and Goto M 2016 Music emotion recognition with adaptive aggregation of Gaussian process regressors. In: Proceedings of the\(41^{st}\)IEEE International Conference on Acoustics, Speech and Signal Processing, ICASSP 2016, pp. 71–75

Wang J, Wang H and Lanckriet G 2015 A histogram density modeling approach to music emotion recognition. In: Proceedings of the\(40{th}\)IEEE International Conference on Acoustics, Speech and Signal Processing, ICASSP 2015, pp. 698–702

Chen Y, Yang Y, Wang J and Chen H 2015 The AMG1608 dataset for music emotion recognition. In: Proceedings of the\(40{th}\)IEEE International Conference on Acoustics, Speech and Signal Processing, ICASSP 2015, pp. 693–697

Aljanaki A, Yang Y and Soleymani M 2017 Developing a benchmark for emotional analysis of music. PLoS ONE 12: e0173392

Schmidt E and Kim Y 2011 Modeling musical emotion dynamics with conditional random fields. In: Proceedings of the\(12{th}\)Conference International Society for Music Information Retrieval, ISMIR 2011, pp. 777–782

Zhang J, Huang X, Yang L, Xu Y and Sun S 2017 Feature selection and feature learning in arousal dimension of music emotion by using shrinkage methods. Multimedia Systems 23: 251–264

Chapaneri S and Jayaswal D 2017 Structured prediction of music mood with twin Gaussian processes. In: Shankar B, Ghosh K, Mandal D, Ray S, Zhang D and Pal S (Eds.) Pattern Recognition and Machine Intelligence, PReMI 2017, Lecture Notes in Computer Science, vol. 10597, pp. 647–654

Markov K and Matsui T 2014 Music genre and emotion recognition using Gaussian processes. IEEE Access 2: 688–697

Jaakkola T and Haussler D 1999 Exploiting generative models in discriminative classifiers. In: Proceedings of Advances in Neural Information Processing Systems, pp. 487–493

Sánchez J, Perronnin F, Mensink T and Verbeek J 2015 Image classification with the Fisher vector: theory and practice. International Journal of Computer Vision 105: 222–245

Moreno P and Rifkin R 2000 Using the Fisher kernel method for web audio classification. In: Proceedings of IEEE International Conference on Acoustics, Speech and Signal Processing, pp. 2417–2420

Mariethoz J, Grandvalet Y and Bengio S 2009 Kernel based text-independent speaker verification. In: Automatic Speech and Speaker Recognition: Large Margin and Kernel Methods, pp. 195–220

Marchesotti L, Perronnin F, Larlus D and Csurka G 2011 Assessing the aesthetic quality of photographs using generic image descriptors. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 1784–1791

Csurka G, Dance C, Fan L, Willamowski J and Bray C 2004 Visual categorization with bags of keypoints. In: Proceedings of ECCV Statistical Learning in Computer Vision Workshop, pp. 1–22

Perronnin F, Sánchez J and Mensink T 2010 Improving the Fisher kernel for large-scale image classification. In: Proceedings of ECCV Computer Vision Workshop, pp. 143–156

Liu X, Chen Q, Wu X, Liu Y and Liu Y 2017 CNN based music emotion classification. arXiv:1704.05665

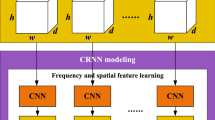

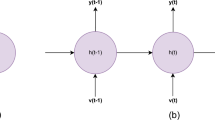

Malik M, Adavanne S, Drossos K, Virtanen T, Ticha D and Jarina R 2017 Stacked convolutional and recurrent neural networks for music emotion recognition. arXiv:1706.02292

Damianou A and Lawrence N 2013 Deep Gaussian processes. In: Proceedings of the\(16^{th}\)International Conference on Artificial Intelligence and Statistics, AISTATS 2013, pp. 207–215

Bui T, Lobato J, Lobato D, Li Y and Turner R 2016 Deep Gaussian processes for regression using approximate expectation propagation. In: Proceedings of the\(33{rd}\)International Conference on Machine Learning, ICML 2016, pp. 1472–1481

Vafa K 2016 Training deep Gaussian processes with sampling. In: Proceedings of the\(3^{rd}\)NIPS Workshop on Advances in Approximate Bayesian Inference, NIPS 2016, pp. 1–5

Chen S, Lee Y, Hsieh W and Wang J 2015 Music emotion recognition using deep Gaussian process. In: Proceedings of the\(7{th}\)Signal and Information Processing Association Annual Summit and Conference, APSIPA 2015, pp. 495–498

Liang C, Su L and Yang Y 2015 Musical onset detection using constrained linear reconstruction. IEEE Signal Processing Letters 22: 2142–2146

Schuller B, Steidl S, Batliner A, Vinciarelli A, Scherer K, Ringeval F, Chetouani M, Weninger F, Eyben F, Marchi E, Mortillaro M, Salamin H, Polychroniou A, Valente F and Kim S 2013 The INTERSPEECH 2013 computational paralinguistics challenge: social signals, conflict, emotion, autism. In: Proceedings of the\(14^{th}\)Annual Conference of the International Speech Communication Association, INTERSPEECH 2013, pp. 148–152

Eyben F, Wöllmer M and Schuller B 2010 openSMILE: the Munich versatile and fast open-source audio feature extractor. In: Proceedings of the\(18{th}\)ACM International Conference on Multimedia, pp. 1459–1462

Lartillot O and Toiviainen P 2007 A Matlab toolbox for musical feature extraction from audio. In: Proceedings of the\(10{th}\)International Conference on Digital Audio Effects, DAFx 2007, pp. 237–244

Bishop C 2006, Pattern recognition and machine learning. New York: Springer-Verlag

Perronnin F and Dance C 2007 Fisher kernels on visual vocabularies for image categorization. IEEE Computer Vision and Pattern Recognition, pp. 1–8

Jegou H, Perronnin F, Douze M, Sanchez J, Perez P and Schmid C 2012 Aggregating local image descriptors into compact codes. IEEE Transactions on Pattern Analysis and Machine Intelligence 34: 1704–1716

Rasmussen C and Williams C 2006 Gaussian processes for machine learning. MIT Press

Maclaurin D, Duvenaud D and Adams R 2015 Autograd: effortless gradients in pure Numpy. In: Proceedings of the\(32{nd}\)International Conference on Machine Learning AutoML Workshop, ICML 2015, pp. 1–3

Han J, Kamber M and Pei J 2011 Data mining: concepts and techniques, 3rd ed. San Francisco, CA, USA: Morgan Kaufmann Publishers Inc.

Chatterjee S, Mukhopadhyay A and Bhattacharyya M 2019 A review of judgment analysis algorithms for crowdsourced opinions. IEEE Transactions on Knowledge and Data Engineering

Author information

Authors and Affiliations

Corresponding author

Appendix

Appendix

(i) The expected value of the Fisher score with respect to the UBM-GMM model is zero as shown in Eq. (32):

(ii) Due to the use of ML estimation approach, the second integral of Eq. (14) is zero as shown in Eq. (33):

(iii) The FIM \(\mathbf {F}_{{\varvec{\Theta }}}\) is equivalent to the negative expected Hessian of the model’s log-likelihood as shown in Eq. (35). Since the Hessian can be written as the Jacobian of the gradient, we have

Taking the expectation of Eq. (34) with respect to the UBM-GMM model, we have

Rights and permissions

About this article

Cite this article

Chapaneri, S., Jayaswal, D. Deep Gaussian processes for music mood estimation and retrieval with locally aggregated acoustic Fisher vector. Sādhanā 45, 73 (2020). https://doi.org/10.1007/s12046-020-1313-8

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s12046-020-1313-8