Abstract

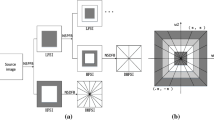

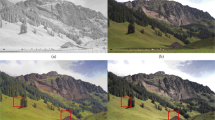

Fusing infrared and visible images is difficult because the two modalities differ. Current fusion methods, such as region-discrimination methods based on visual saliency and methods based on total variation (TV), struggle to preserve complementary information and deliver good visual quality at the same time. Saliency-based region-discrimination methods retain more complementary information but yield poor visual consistency, whereas TV-based methods yield good visual consistency but lack a proper regularization term to ensure that complementary information is sufficiently selected and fused. In this paper, an improved infrared and visible image fusion method based on visual saliency and fractional-order total variation is proposed. First, the infrared and visible images are fused through a saliency map to obtain an initial fused image; then this fused image and a selected original image are fused by fractional-order total variation to obtain the final fused image. The saliency map-based stage makes the fused image contain as much complementary information from the source images as possible, while the fractional-order total variation stage gives the fused image better visual quality. Experimental results show that, compared with state-of-the-art image fusion algorithms, the proposed method is competitive in retaining image texture details and in visual quality.
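The two-stage pipeline described in the abstract can be illustrated with a minimal NumPy sketch. Everything concrete here is an assumption made for illustration and is not taken from the paper: the global-contrast saliency measure, the choice of the visible image as the "selected original image", the smoothed energy 0.5‖x − f‖² + λ Σ √(|D_x^α(x − v)|² + |D_y^α(x − v)|² + ε), the truncated Grünwald-Letnikov differences with periodic boundaries, the plain gradient-descent solver, and all function names and parameter values.

```python
import numpy as np

def saliency_map(img):
    """Crude global-contrast saliency (assumed): distance from the image mean."""
    s = np.abs(img - img.mean())
    return s / (s.max() + 1e-12)

def saliency_fusion(ir, vis):
    """Stage 1: pixel-wise convex combination weighted by the two saliency maps."""
    s_ir, s_vis = saliency_map(ir), saliency_map(vis)
    w = (s_ir + 1e-12) / (s_ir + s_vis + 2e-12)
    return w * ir + (1.0 - w) * vis

def gl_coefficients(alpha, K):
    """Grunwald-Letnikov coefficients (-1)^k * binom(alpha, k) for k = 0..K-1."""
    c = np.empty(K)
    c[0] = 1.0
    for k in range(1, K):
        c[k] = c[k - 1] * (1.0 - (alpha + 1.0) / k)
    return c

def frac_diff(u, c, axis, adjoint=False):
    """Truncated fractional difference sum_k c[k] * u[i - k] (periodic boundary).
    With adjoint=True the shifts are reversed, giving the transposed operator."""
    sign = -1 if adjoint else 1
    return sum(ck * np.roll(u, sign * k, axis=axis) for k, ck in enumerate(c))

def energy(x, f, v, c, lam, eps):
    """Assumed objective: fidelity to f plus smoothed fractional-order TV of x - v."""
    d = x - v
    gx, gy = frac_diff(d, c, 1), frac_diff(d, c, 0)
    return 0.5 * np.sum((x - f) ** 2) + lam * np.sum(np.sqrt(gx**2 + gy**2 + eps))

def ftv_fusion(f, v, alpha=1.2, lam=0.1, K=8, eps=1e-2, iters=50):
    """Stage 2: gradient descent on the assumed energy, starting from f."""
    c = gl_coefficients(alpha, K)
    # Step 1/L, where L bounds the Lipschitz constant of the energy gradient.
    step = 1.0 / (1.0 + 2.0 * lam * np.abs(c).sum() ** 2 / np.sqrt(eps))
    x = f.copy()
    for _ in range(iters):
        d = x - v
        gx, gy = frac_diff(d, c, 1), frac_diff(d, c, 0)
        mag = np.sqrt(gx**2 + gy**2 + eps)
        grad = (x - f) + lam * (frac_diff(gx / mag, c, 1, adjoint=True)
                                + frac_diff(gy / mag, c, 0, adjoint=True))
        x = x - step * grad
    return x

# Usage on synthetic data (stand-ins for registered infrared/visible images).
rng = np.random.default_rng(0)
ir, vis = rng.random((16, 16)), rng.random((16, 16))
fused_stage1 = saliency_fusion(ir, vis)
fused_final = ftv_fusion(fused_stage1, vis)
```

In this sketch the fractional order `alpha` controls how far each difference reaches (for `alpha = 1` the coefficients reduce to the ordinary first-order difference `[1, -1, 0, ...]`), and `lam` trades fidelity to the stage-one result against agreement of fractional gradients with the selected source image.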

Acknowledgements

This work has been partially supported by the Ministry of Education Chunhui Project (Grant No. Z2016149), the Key Scientific Research Fund of Xihua University (Grant No. Z17134), the Xihua University Graduate Innovation Fund Research Project (Grant Nos. ycjj2018067, ycjj2018044), and the Sichuan Science and Technology Program (Grant No. 2019YFG0108).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Wang, Q., Gao, Z., Xie, C. et al. Fractional-order total variation for improving image fusion based on saliency map. SIViP 14, 991–999 (2020). https://doi.org/10.1007/s11760-019-01631-0

DOI: https://doi.org/10.1007/s11760-019-01631-0