Abstract

We consider the problem of minimizing a sum of clipped convex functions. Applications of this problem include clipped empirical risk minimization and clipped control. While the problem of minimizing the sum of clipped convex functions is NP-hard, we present some heuristics for approximately solving instances of these problems. These heuristics can be used to find good, if not global, solutions, and appear to work well in practice. We also describe an alternative formulation, based on the perspective transformation, that makes the problem amenable to mixed-integer convex programming and yields computationally tractable lower bounds. We illustrate our heuristic methods by applying them to various examples and use the perspective transformation to certify that the solutions are relatively close to the global optimum. This paper is accompanied by an open-source implementation.

Notes

If \(0 \not \in \mathop {{\mathbf{dom}}}f\), replace \(\gamma f_0(x / \gamma )\) with \(\gamma f_0(y + x / \gamma )\) for any \(y \in \mathop {{\mathbf{dom}}}f\). See [19, Thm. 8.3] for more details.

References

Agrawal, A., Amos, B., Barratt, S., Boyd, S., Diamond, S., Kolter, J.Z.: Differentiable convex optimization layers. In: Advances in Neural Information Processing Systems, pp. 9558–9570 (2019)

Boyd, S., Vandenberghe, L.: Convex Optimization. Cambridge University Press, Cambridge (2004)

Boyd, S., Parikh, N., Chu, E., Peleato, B., Eckstein, J.: Distributed optimization and statistical learning via the alternating direction method of multipliers. Found. Trends Mach. Learn. 3(1), 1–122 (2011)

Gabay, D., Mercier, B.: A dual algorithm for the solution of nonlinear variational problems via finite element approximation. Comput. Math. Appl. 2(1), 17–40 (1976)

Glowinski, R., Marroco, A.: Sur l’approximation, par éléments finis d’ordre un, et la résolution, par pénalisation-dualité d’une classe de problèmes de Dirichlet non linéaires. ESAIM: Math. Model. Numer. Anal. 9(R2), 41–76 (1975)

Grant, M., Boyd, S.: Graph implementations for nonsmooth convex programs. In: Recent Advances in Learning and Control, pp. 95–110. Springer, London (2008)

Günlük, O., Linderoth, J.: Perspective reformulations of mixed integer nonlinear programs with indicator variables. Math. Program. 124(1–2), 183–205 (2010)

Günlük, O., Linderoth, J.: Perspective reformulation and applications. In: Mixed Integer Nonlinear Programming, pp. 61–89. Springer, New York (2012)

Huber, P.: Robust regression: asymptotics, conjectures and Monte Carlo. Ann. Stat. 1(5), 799–821 (1973)

Huber, P., Ronchetti, E.: Robust Statistics. Wiley, London (2009)

Karp, R.: Reducibility among combinatorial problems. In: Complexity of Computer Computations. Springer, Boston (1972)

Lan, G., Hou, C., Yi, D.: Robust feature selection via simultaneous capped \(\ell _2\)-norm and \(\ell _{2,1}\)-norm minimization. In: IEEE International Conference on Big Data Analysis, pp. 1–5 (2016)

Lipp, T., Boyd, S.: Variations and extension of the convex-concave procedure. Optim. Eng. 17(2), 263–287 (2016)

Liu, D., Nocedal, J.: On the limited memory BFGS method for large scale optimization. Math. Program. 45(1–3), 503–528 (1989)

Liu, T., Jiang, H.: Minimizing sum of truncated convex functions and its applications. J. Comput. Graph. Stat. 28(1), 1–10 (2019)

Moehle, N., Boyd, S.: A perspective-based convex relaxation for switched-affine optimal control. Syst. Control Lett. 86, 34–40 (2015)

Ong, C., An, L.: Learning sparse classifiers with difference of convex functions algorithms. Optim. Methods Softw. 28(4), 830–854 (2013)

Portilla, J., Tristan-Vega, A., Selesnick, I.: Efficient and robust image restoration using multiple-feature \(\ell _2\)-relaxed sparse analysis priors. IEEE Trans. Image Process. 24(12), 5046–5059 (2015)

Rockafellar, T.: Convex Analysis. Princeton University Press, Princeton (1970)

Safari, A.: An e-E-insensitive support vector regression machine. Comput. Stat. 29(6), 1447–1468 (2014)

She, Y., Owen, A.: Outlier detection using nonconvex penalized regression. J. Am. Stat. Assoc. 106(494), 626–639 (2011)

Sun, Q., Xiang, S., Ye, J.: Robust principal component analysis via capped norms. In: Proceedings of the 19th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp. 311–319 (2013)

Suzumura, S., Ogawa, K., Sugiyama, M., Takeuchi, I.: Outlier path: A homotopy algorithm for robust SVM. In: International Conference on Machine Learning, pp. 1098–1106 (2014)

Tao, P., An, L.: Convex analysis approach to DC programming: Theory, algorithms and applications. Acta Math. Vietnam. 22(1), 289–355 (1997)

Torr, P., Zisserman, A.: Robust computation and parametrization of multiple view relations. In: Sixth International Conference on Computer Vision, pp. 727–732 (1998)

Xu, G., Hu, B.G., Principe, J.: Robust C-loss kernel classifiers. IEEE Trans. Neural Netw. Learn. Syst. 29(3), 510–522 (2016)

Yu, Y.l., Yang, M., Xu, L., White, M., Schuurmans, D.: Relaxed clipping: A global training method for robust regression and classification. In: Advances in Neural Information Processing Systems, pp. 2532–2540 (2010)

Yuille, A., Rangarajan, A.: The concave-convex procedure. Neural Comput. 15(4), 915–936 (2003)

Zhang, T.: Multi-stage convex relaxation for learning with sparse regularization. In: Advances in Neural Information Processing Systems, pp. 1929–1936 (2009)

Zhang, T.: Analysis of multi-stage convex relaxation for sparse regularization. J. Mach. Learn. Res. 11(Mar), 1081–1107 (2010)

Acknowledgements

S. Barratt is supported by the National Science Foundation Graduate Research Fellowship under Grant No. DGE-1656518.

Ethics declarations

Conflicts of interest

The authors declare that they have no conflict of interest.

Appendices

Appendix A: Difference of convex formulation

In this section we make the observation that (1) can be expressed as a difference of convex (DC) programming problem.

Let \(h_i(x) = \max (f_i(x) - \alpha _i, 0)\). This convex function measures how far \(f_i(x)\) is above \(\alpha _i\). We can express the \(i\)th term in the sum as

\[\min (f_i(x), \alpha _i) = f_i(x) - h_i(x),\]

since when \(f_i(x) \le \alpha _i\), we have \(h_i(x)=0\), and when \(f_i(x) > \alpha _i\), we have \(h_i(x)=f_i(x)-\alpha _i\). Since \(f_i\) and \(h_i\) are convex, (1) can be expressed as the DC programming problem

\[\text{minimize} \quad f_0(x) + \sum _{i=1}^m f_i(x) - \sum _{i=1}^m h_i(x) \qquad (16)\]

with variable \(x\). We can then apply well-known algorithms such as the convex-concave procedure [24, 28] to (approximately) solve (16).
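As an illustration only, the following sketch applies the convex-concave procedure to a scalar instance with \(f_0 = 0\), \(f_i(x) = (x - b_i)^2\), and a common threshold \(\alpha\). The data, the function names `clipped_objective` and `ccp_scalar`, and the closed-form update are assumptions made for this example; they are not taken from the accompanying implementation. Each iteration replaces every \(h_i\) by its linearization at the current point and minimizes the resulting convex quadratic.

```python
def clipped_objective(x, b, alpha):
    """Sum of clipped squares: sum_i min((x - b_i)^2, alpha)."""
    return sum(min((x - bi) ** 2, alpha) for bi in b)


def ccp_scalar(b, alpha, x0, iters=50):
    """Convex-concave procedure for a scalar clipped least-squares problem.

    Here f_i(x) = (x - b_i)^2 and h_i(x) = max(f_i(x) - alpha, 0).
    Each iteration linearizes every h_i at the current point and
    minimizes the resulting convex quadratic in closed form.
    """
    n = len(b)
    x = x0
    history = [clipped_objective(x, b, alpha)]
    for _ in range(iters):
        # Subgradient of h_i at x: f_i'(x) where f_i(x) > alpha, else 0.
        g = [2.0 * (x - bi) if (x - bi) ** 2 > alpha else 0.0 for bi in b]
        # Minimize sum_i (z - b_i)^2 - sum_i g_i * z over z.
        # Setting the derivative to zero gives the update below.
        x = (sum(b) + 0.5 * sum(g)) / n
        history.append(clipped_objective(x, b, alpha))
    return x, history


if __name__ == "__main__":
    data = [0.0, 0.1, -0.1, 0.2, 10.0]  # hypothetical data with one outlier
    x_hat, hist = ccp_scalar(data, alpha=4.0, x0=sum(data) / len(data))
    print(x_hat, hist[0], hist[-1])
```

On this data, starting from the sample mean, the iterates settle near 0.05, the mean of the four points that end up unclipped, and the clipped objective never increases from one iteration to the next. The procedure is a local heuristic, so a different starting point can lead to a different stationary point.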

Appendix B: Minimal convex extension

If we replace each \(f_i\) with any function \({\tilde{f}}_i\) such that \({\tilde{f}}_i(x) = f_i(x)\) when \(f_i(x) \le \alpha _i\), we get an equivalent problem. One such \({\tilde{f}}_i\) is the minimal convex extension of \(f_i\), which is given by

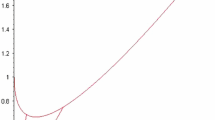

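In general, the minimal convex extension of a function is hard to compute, but it can be expressed analytically in some important special cases. For example, if \(f_i(x)=(a^T x - b)^2\), the minimal convex extension is the Huber penalty function,

\[{\tilde{f}}_i(x) = {\left\{ \begin{array}{ll} (a^T x - b)^2 &{} |a^T x - b| \le \sqrt{\alpha _i} \\ 2\sqrt{\alpha _i}\, |a^T x - b| - \alpha _i &{} \text{otherwise.} \end{array}\right. }\]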

Using the minimal convex extension leads to an equivalent problem, but, depending on the algorithm, replacing \(f_i\) with \({\tilde{f}}_i\) can lead to better numerical performance.
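The equivalence can be checked numerically in the squared-residual case. The sketch below is a minimal illustration, with hypothetical function names and an arbitrary threshold; it works directly with the residual \(r = a^T x - b\) and assumes the Huber form with threshold \(\sqrt{\alpha _i}\), that is, \(r^2\) for \(|r| \le \sqrt{\alpha _i}\) and \(2\sqrt{\alpha _i}|r| - \alpha _i\) otherwise. Clipping either function at \(\alpha _i\) gives the same value.

```python
import math


def clipped(value, alpha):
    """Clip a function value at the threshold alpha."""
    return min(value, alpha)


def square_loss(r):
    """f(r) = r^2, the original convex function of the residual r."""
    return r * r


def huber_extension(r, alpha):
    """Huber penalty that matches r^2 wherever r^2 <= alpha.

    Outside that region it continues linearly, along the tangent
    of r^2 taken at |r| = sqrt(alpha).
    """
    t = math.sqrt(alpha)
    if abs(r) <= t:
        return r * r
    return 2.0 * t * abs(r) - alpha


if __name__ == "__main__":
    alpha = 4.0  # arbitrary threshold chosen for illustration
    for r in [-5.0, -2.0, -0.5, 0.0, 1.0, 2.0, 3.5]:
        print(r,
              clipped(square_loss(r), alpha),
              clipped(huber_extension(r, alpha), alpha))
```

The two clipped columns agree for every residual, while the unclipped Huber value grows only linearly for large \(|r|\), which is one plausible reason the replacement can behave better numerically.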

Cite this article

Barratt, S., Angeris, G. & Boyd, S. Minimizing a sum of clipped convex functions. Optim Lett 14, 2443–2459 (2020). https://doi.org/10.1007/s11590-020-01565-4

DOI: https://doi.org/10.1007/s11590-020-01565-4