Abstract

Meta-reasoning refers to processes by which people monitor problem-solving activities and regulate effort investment. Solving is hypothesized to begin with an initial Judgment of Solvability (iJOS), the solver’s first impression as to whether the problem is solvable, which guides solving attempts. Meta-reasoning research has largely neglected non-verbal problems. In this study we used Raven’s matrices to examine iJOS in non-verbal problems and its predictive value for effort investment, final Judgment of Solvability (fJOS), and confidence in the final answer. We generated unsolvable matrix versions by switching locations of elements in original Raven’s matrices, thereby breaking the rules while keeping the original components. Participants provided quick (4 s) iJOSs for all matrices and then attempted to solve them without a time limit. In two experiments, iJOS predicted solving time, fJOS, and confidence. Moreover, although difficulty of the original matrices was dissociated from solvability, iJOS was misled by original matrix difficulty. Interestingly, when the unsolvable matrices were relatively similar to the originals (Experiment 2), iJOSs were reliable, discriminating between solvable and unsolvable matrices. When the unsolvable matrices involved greater disruption of the rules (Experiment 1), iJOS was not consistently predictive of solvability. This study addresses a gap in meta-reasoning research by highlighting the predictive value of iJOS for the solving processes that follow. It also points to several directions for future meta-reasoning research, both in general and for non-verbal problems in particular.

The metacognitive monitoring and control that take place in parallel to the performance of cognitive tasks have typically been studied in the context of learning and question answering (see Bjork et al. 2013, for a review). The meta-reasoning framework advanced by Ackerman and Thompson (2017a) relates to monitoring and control decisions which accompany problem-solving and reasoning tasks (see also Ackerman and Thompson 2015, 2017b). These tasks involve representing a given situation, planning, and applying a set of mental operations (Jonassen 1997; Mayer 1999). Similarly to learning processes, which are hypothesized to begin with an Ease of Learning judgment, meta-reasoning processes are hypothesized to begin with an initial Judgment of Solvability (iJOS). This judgment reflects the solver’s assessment as to whether the currently considered problem is solvable or unsolvable, prior to starting to solve it (see Ackerman and Thompson 2017a). It is now well-established that metacognitive judgments are based on heuristic cues, which are often reliable but sometimes generate biases (Koriat 1997). Therefore, iJOS, like other metacognitive judgments, is hypothesized to be susceptible to biases.

Overall, people are reluctant to invest time and effort in a task for which the probability of success is low (e.g., De Neys et al. 2013; see Stanovich 2009, for a review). According to the meta-reasoning framework, the very first decision made when encountering a problem is whether to attempt solving at all. By this theorizing, biases in iJOS are expected to mislead effort regulation, leading people to waste time on unsolvable problems and/or to abandon solvable ones (see Ackerman and Thompson 2017a).

Very little is known about how people infer their iJOS, its reliability, and its consequences. A central question when considering any metacognitive judgment is what cues underlie (and therefore potentially bias) it. In the case of iJOS, the question of interest is: How do people judge the solvability of a problem before beginning the attempt to solve it? A few studies (Bowers et al. 1995; Topolinski and Strack 2008) dealt with this question using the Remote Associates Test (RAT). In this task, participants are presented with triads of words, one at a time. In each solvable triad, all three words are commonly associated with the same fourth word (e.g., PIPE – WOOD – HOT: STOVE). An unsolvable triad is a random collection of three words without a common association. Topolinski (2014) reviewed studies dealing with factors that affect how people distinguish between coherent triads (solvable RAT items) and those which are non-coherent (unsolvable RAT items) after a brief exposure to each triad. He proposed that coherent triads are processed more fluently than non-coherent triads, and that the subjective feeling of fluency is the basis for distinguishing between coherent and non-coherent triads. This inference process resembles the way ease of processing informs judgments in the context of learning (see Bjork et al. 2013, for a review) and decision making (see Unkelbach and Greifeneder 2013, for a review). Similarly, iJOS was found to be associated with concreteness (Markovits et al. 2015), affect (Topolinski and Strack 2009), and accessibility (Ackerman and Beller 2017).

Most of what is currently known about metacognitive processes stems from empirical evidence collected with verbal tasks, like memorizing words and answering knowledge questions. Similarly, within the meta-reasoning domain, one explanation suggested by Topolinski for the coherence effect reviewed is based on unconscious spreading activation of semantic associations (Bolte and Goschke 2005; Bowers et al. 1990; Sio et al. 2013). This explanation is based on the stimuli being verbal as well. Thus, it is not clear whether the factors found to take effect with verbal stimuli generalize to non-verbal tasks. In the present study, we begin to address this gap by studying the reliability and the predictive power of iJOS in non-verbal problems.

Reber et al. (2008) provided rare evidence suggesting biased judgments in non-verbal tasks. They found that symmetry was used as a heuristic cue when participants were asked to provide quick intuitive judgments about the correctness of dot-pattern addition equations. Given that research of biases in judgments of solvability is still scarce even for verbal problems (see Ackerman and Beller 2017), the first aim of the present study is to expose conditions that affect the reliability of iJOS in non-verbal problems.

When discussing iJOS it is important to distinguish between difficulty and solvability. Difficult problems have solutions, even if these are not necessarily within the reach of all potential solvers. Respondents do not always differentiate between the questions “Can I find the solution?” and “Does the problem have a solution?” Ackerman and Beller (2017) found equivalence between these two questions, both in the judgments themselves and in their sensitivity to certain heuristic cues. We hypothesized that the information used for assessing the difficulty of a problem is also used as a cue for iJOS, although the two information sources, difficulty and solvability, are in fact separate and can be dissociated. Thus, in this study we examined whether cues which hint at the difficulty of a problem bias the solver’s iJOS.

Beyond a theoretical understanding of factors affecting iJOS, it is important to investigate the practical associations between iJOS and later stages of the problem-solving process. Specifically, we considered the predictive value of iJOS for effort regulation and for metacognitive judgments regarding the final answer.

Initial judgment of solvability (iJOS) and effort regulation

While the metacognitive literature deals extensively with strategic time allocation across items within a given task, so far this has been done mainly with memorization tasks (e.g., Son and Sethi 2010). This meta-memory line of research has shown that people generally spend more time on challenging items than on easier ones (see Koriat et al. 2014, for a review). However, there is debate over the time spent on the most difficult items. Metcalfe and Kornell (2005) found that some people can quickly skip extremely difficult items, while Undorf and Ackerman (2017) found across several conditions that people invest as much time in memorizing the most difficult items as they invest in items of intermediate difficulty. Notably, all theories that explain these findings assume reliable identification of the most challenging items, based on reliable heuristic cues which are immediately available when the item is encountered (e.g., familiarity of the words, Benjamin 2005; concrete vs. abstract meanings, Undorf et al. 2018). Moreover, these heuristic cues are, again, all semantic and thus depend on the stimuli being verbal.

The above-mentioned assumption regarding immediate availability of reliable cues cannot be transferred from memorization tasks to problem-solving tasks. A memorization task is always a possible task, at least for young and healthy people, and is mainly a matter of enough time investment. Problems, unlike items to be memorized, are not always solvable, or may be solvable only for those who have the relevant skills or knowledge. Moreover, it is unclear how people with the relevant knowledge to solve a given type of problem can assess the solvability of any individual item without engaging in the solving process, because cues such as familiarity and concreteness are not necessarily related to solvability. Thus, while available cues in memorization tasks are generally reliable, similar cues in the problem phrasing (or presentation) are not necessarily related to solvability. This distinction raises questions about the role played by judgments of solvability in the puzzle of time allocation and regulation of the problem-solving process more generally.

Theoretically, meta-reasoning research suggests that effort regulation should be predictable by iJOS, but this assumption has not yet been examined empirically. While research exists into time and effort regulation in environments containing both solvable and unsolvable problems, most of this research deals with personal traits. For instance, one study found that worriers were slower to disengage from unsolvable tasks than non-worriers, and thus performed less efficiently (Davis and Montgomery 1997). Likewise, optimists were found to be more likely than pessimists to disengage from unsolvable problems (Aspinwall and Richter 1999). With respect to effort regulation, Aspinwall and Richter (1999) found that positive affect is related to less investment in unsolvable problems, allowing more time for solvable problems. Payne and Duggan (2011) manipulated prior information about the probability that items were solvable. They found that information suggesting a high probability of solvability, relative to low probability, led to longer persistence before giving up, although this finding was significant in only one of two similar experiments. In short, there is still little evidence regarding factors that lead people to persist in finding a solution to a problem, or alternatively, the stopping rules that guide them to disengage from a given problem and move on to the next one (see Ackerman 2014).

Ongoing monitoring during the solving process is necessarily based on weighing various sources of information. In particular, factors that affect early stages in the solving process are integrated with new information collected or generated in later processing stages. This complex process may sometimes result in unjustified fixations (or anchoring). For example, Smith et al. (1993) showed that providing a pictorial example of a solution for a design problem constrains the formulation of alternative solutions (see also Wiley 1998). In line with such carryover effects, we considered the possibility of a metacognitive monitoring fixation on a certain judgment of solvability (solvable or unsolvable) over the course of the solving process. People who hold a fixed, but incorrect, judgment of a problem as solvable could be constrained from giving up attempts to find a solution despite repeated failure. Thus, our aim was to examine the extent to which the early iJOS predicts the time invested in trying to solve a problem, above and beyond actual solvability. We hypothesized that, as in other cases, a carryover effect leads people to fixate on their early impression of solvability during the later processing stages. We explored whether this was the case to a similar extent for solvable and for unsolvable problems.

Initial judgment of solvability (iJOS) and final judgment of solvability (fJOS)

Last but not least is the question of how iJOS influences later stages of the solving process beyond time allocation. In particular, we are interested in the relationship between iJOS and final JOS (fJOS)—the judgment that follows a sincere attempt at solving the problem, without time limitations. A large body of research concerned with judgments under uncertainty (Kahneman et al. 1982) has strongly challenged the efficacy of quick judgments, or what we might call intuition. According to this literature, intuitive judgments are often misguided by various irrelevant heuristics and are overturned by constructive considerations. Hence, this line of thought suggests there should be no (or little) correlation between iJOS and fJOS, which reflects an analytic and structured process. Against this literature, some researchers emphasize the productive facet of intuition, proposing that cues for coherence (patterns, meanings, structures) activate relevant mnemonic networks which guide thought to explore the nature of the coherence in question (Bowers et al. 1990). By this theory, an initial judgment tacitly directs efforts to support that judgment. If this is the case, then iJOS should be predictive of fJOS. Indeed, Ackerman and Beller (2017) found that iJOS and fJOS were similarly associated with accessibility. However, accessibility had unique predictive value only for iJOS, while other cues—the frequency of words in the language and response times—overrode its explanatory power for the variance in fJOS.

The solving process ends when the solver (a) provides a solution, (b) decides that the problem is unsolvable, or (c) gives up with the understanding that there exists a solution which was not found. This final stage is accompanied by a confidence judgment. Ackerman and Beller (2017) found that confidence was negatively correlated with accessibility—the cue they examined—only for solvable problems, while confidence and accessibility were not significantly correlated for unsolvable problems. This finding underscores the need to consider cue utilization processes separately for solvable and unsolvable problems. Hypothesizing that the metacognitive processes involved in solving non-verbal problems are similar to those involved in verbal problems in their use of cues, we expected that confidence would increase as difficulty declines, but only for solvable matrices. Thus, our fourth aim in this study was to investigate the final products of the solving process in non-verbal problems. Specifically, we looked for the associations between iJOS, on the one hand, and fJOS and confidence, on the other hand.

Overall, we expect this study to make two key contributions. First, we examined potentially biasing factors for iJOS in non-verbal problems. Second, we examined the predictive value of iJOS for the solving processes that follow, regardless of its reliability. To the best of our knowledge, such metacognitive analysis of monitoring and control processes over the course of solving non-verbal problems has never been done before.

Overview of the study

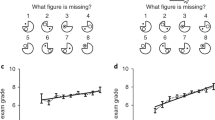

We used Raven’s matrices as our non-verbal problems. Raven’s Progressive Matrices (RPM), in both standard and advanced versions (Raven et al. 1993), are designed to test general reasoning ability. Each problem consists of shapes and patterns (elements) organized in a 3 × 3 matrix, with a blank space in the bottom right corner (see Fig. 1, panel a, for an example). The solver must identify the rules governing the arrangement of shapes and patterns in the other eight cells and then apply them to identify the missing element from among eight options given below the matrix, in a multiple-choice test format. The correlations commonly found between Raven’s test scores and other measures of intellectual achievement suggest that the underlying processes may be general rather than specific to this particular test (Ackerman et al. 2005; Carpenter et al. 1990; Raven 2000). The fact that Raven’s matrices represent general cognitive processes in a non-verbal manner makes them apposite for the present research.

Example of an easy Raven’s matrix (Raven et al. 1993) in a solvable version (a) and in an unsolvable version in which four elements of the solvable version were switched (b)

One of our hypotheses is that some of the cues used to assess the difficulty of a problem also inform iJOS. Research has suggested that the objective difficulty of Raven’s matrices originates from attributes such as the number of components in each shape (Primi 2001), the concreteness of shapes, or the ease with which they are named (i.e., all else being equal, familiar shapes like rectangles or circles indicate, either reliably or not, that the matrix is easier than when including unfamiliar shapes; Meo et al. 2007; Roberts et al. 2000). The availability of known cues which hint at an item’s difficulty is yet another advantage of using Raven’s matrices for our purposes.

For each original Raven’s matrix, we created an unsolvable version by randomly exchanging two or four of the eight elements, thereby breaking the matrix’s rules. See Fig. 1, panel b, for an example. Importantly, the factors mentioned above as affecting difficulty are attributes of the elements, rather than of the rules by which the elements are arranged. Thus, by switching elements from different locations we dissociated difficulty—or the cues normally used to assess difficulty—from solvability. This allowed us to examine the extent to which people use hints which are relevant for difficulty to assess solvability.
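The switching manipulation described above can be sketched in Python as follows. This is only an illustration under stated assumptions, not the procedure actually used to build the stimuli: the function name `make_unsolvable`, the representation of a matrix as a list of its eight given elements in reading order, and the use of a random rotation to relocate the chosen elements are all hypothetical. The sketch also assumes the chosen elements are distinct and does not check that the resulting arrangement really breaks the matrix's rules.

```python
import random


def make_unsolvable(elements, n_switch, rng=None):
    """Return a copy of `elements` in which `n_switch` elements change place.

    `elements` is assumed to hold the eight given cells of one matrix.
    Each chosen position receives the element of a different chosen
    position, so none of the selected elements stays where it was.
    """
    if not 2 <= n_switch <= len(elements):
        raise ValueError("n_switch must be between 2 and len(elements)")
    rng = rng or random.Random()
    positions = rng.sample(range(len(elements)), n_switch)
    # Rotating the chosen positions by a non-zero offset guarantees
    # that every chosen position gets an element from elsewhere.
    shift = rng.randrange(1, n_switch)
    switched = list(elements)
    for k, pos in enumerate(positions):
        switched[pos] = elements[positions[(k + shift) % n_switch]]
    return switched


# Hypothetical labels standing in for the eight elements of one matrix.
original = list("ABCDEFGH")
four_switched = make_unsolvable(original, 4, random.Random(0))
two_switched = make_unsolvable(original, 2, random.Random(1))
```

Both outputs contain exactly the same elements as the original, which is the property that dissociates element-level difficulty cues from solvability.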

Following the procedure used by Ackerman and Beller (2017) with verbal compound remote associate (CRA) problems, both experiments had a two-phase structure. In the first phase, participants looked briefly at each matrix and provided their iJOS, completing this for all matrices before moving on to the solving phase. In the second phase they attempted to solve each problem, provided an answer (either one of the eight options or “unsolvable”), and rated their confidence in their answer. No indications or reminders of the participant’s iJOS were provided in the second phase. This procedure allowed for a quick phase focused on iJOS, followed by a relaxed phase focused on solving time and the final judgments of confidence and fJOS. The problems were presented in the same order in both phases, such that any inter-problem effects would be similar in both.

The two experiments differed in only one respect, namely the number of elements switched when generating the unsolvable versions of the problems. In Experiment 1 we switched four of the eight elements, making the unsolvable matrix particularly disarranged, in order to increase the chance that participants would provide substantive iJOSs rather than random ones. In Experiment 2 we looked for boundary conditions by comparing solvable to unsolvable matrices with only two elements switched. This is the smallest difference between solvable and unsolvable problems possible while keeping the elements constant across the two versions.

Experiment 1

Experiment 1 was conducted with the more disarranged unsolvable versions, in which we switched four of the eight elements. The independent variables were the solvability of the presented versions and the difficulty of the original matrices. The difficulty scores were defined as the mean success rate achieved for each original solvable matrix by another sample from the same population (N = 26) in a preliminary experiment. The dependent variables were iJOS (solvable/unsolvable), iJOS accuracy, response time in both phases, solution accuracy, fJOS (solvable/unsolvable), and confidence.

Method

Participants

Forty-three undergraduate students from the Technion participated in the study (53% female; M_age = 25.0, SD = 2.4) in exchange for course credit. All participants provided informed consent.

Materials

We used thirty Raven’s matrices, most (25) from the Advanced Progressive Matrices (APM) Set II and the rest (5) from the Standard Progressive Matrices (SPM) (Raven et al. 1993). The matrices were chosen based on a pretest with another sample from the same population (N = 19) to generate a large range of success rates, 17%–96%. Another eight matrices were used for instruction and practice.

Each matrix had two versions, the original (solvable) version and an unsolvable version. The unsolvable versions were created by randomly choosing four of the eight elements and switching their locations, breaking the rules of the matrix. Thus, both versions contained exactly the same elements. The original matrices were divided into two sets balanced for difficulty. Each participant received the solvable matrices from one set and the unsolvable matrices from the other set, counterbalanced across participants. The matrices were presented intermixed and with their order randomized individually for each participant.
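The counterbalanced assignment described above can be illustrated with a short Python sketch. The function name `build_trial_list`, the placeholder matrix identifiers, and the rule of alternating the assignment by participant index are assumptions made for this example; the text states only that the assignment was counterbalanced across participants and that presentation order was randomized individually.

```python
import random


def build_trial_list(set_a, set_b, participant_index, rng=None):
    """Return a shuffled list of (matrix_id, is_solvable) trials.

    One set is shown in its solvable version and the other in its
    unsolvable version; which set plays which role alternates with
    the participant index (an assumed counterbalancing rule).
    """
    rng = rng or random.Random()
    if participant_index % 2 == 0:
        solvable_set, unsolvable_set = set_a, set_b
    else:
        solvable_set, unsolvable_set = set_b, set_a
    trials = [(m, True) for m in solvable_set]
    trials += [(m, False) for m in unsolvable_set]
    rng.shuffle(trials)  # individual random order, versions intermixed
    return trials


# Placeholder identifiers for two sets of fifteen matrices each.
set_a = [f"A{i}" for i in range(15)]
set_b = [f"B{i}" for i in range(15)]
trials_p0 = build_trial_list(set_a, set_b, 0, random.Random(0))
trials_p1 = build_trial_list(set_a, set_b, 1, random.Random(0))
```

Under this scheme every participant sees each of the thirty matrices once, in only one of its two versions.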

Procedure

The experiment was administered in small groups of up to seven participants in a small computer lab. At the start, participants read instructions for solving Raven’s matrices and practiced on three matrices. The instructor then explicitly informed them that half the matrices they would encounter were unsolvable.

In the first phase participants provided only an iJOS. Ackerman and Beller (2017), with the three-word CRA problems, used a time limit of two seconds for eliciting participants’ first impression of each problem. An initial trial in which we presented Raven’s matrices for two seconds to our research assistants revealed that people simply cannot take in these particular stimuli in this brief timeframe. In a pre-test, 33 participants from the same population as the main study invested 43 s on average (SD = 33 s; Minimum = 3.1 s) in solving each matrix in the set used here, and only 0.3% of the answers were given in four seconds or less. We therefore settled on a four-second limit, on the grounds that this would allow time for participants to gain a first impression of the figures but not to solve the problems.

Accordingly, in the first phase the thirty matrices were displayed one by one for up to four seconds each, without the answer options. Participants were instructed to click a button marked “yes” if they judged the matrix to be solvable and a button marked “no” otherwise. They were instructed to make their decision quickly, based on their “gut feeling,” without trying to solve the matrix. They practiced this task on four matrices, half of them unsolvable. If participants did not respond in the allotted time, a message reading “too slow” appeared on the screen, and the computer moved on to the next matrix without collecting a response for the one that was missed.

In the second phase, all thirty matrices were presented again, in the same order. Each matrix, whether solvable or unsolvable, was presented with the eight original solutions along with a button marked “unsolvable.” After choosing an answer, participants reported their confidence that their answer, whether a solution or the “unsolvable” response, was correct, on a 0 to 100% scale. There was no time limit for this phase. The entire procedure, including instruction and practice, took about 45 min.

Results

Seven participants were excluded from the analyses. Of these, four spent an unreasonably short time on the task, more than 2 SD below the sample mean. The other three missed the time limit on more than 20% of their iJOSs.
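The two exclusion criteria can be expressed as a small Python sketch. The function name `excluded_participants`, the data layout, and the example values are hypothetical. The sketch also assumes that the sample standard deviation (n − 1 denominator) is used, which the text does not specify.

```python
from statistics import mean, stdev


def excluded_participants(total_time, missed_rate,
                          sd_cutoff=2.0, max_missed=0.20):
    """Return the ids of participants meeting either exclusion criterion.

    total_time:  participant id -> total time spent on the task
    missed_rate: participant id -> proportion of iJOSs not given in time
    """
    times = list(total_time.values())
    # Assumption: sample SD; the source does not state which SD was used.
    cutoff = mean(times) - sd_cutoff * stdev(times)
    return {
        pid for pid in total_time
        if total_time[pid] < cutoff or missed_rate[pid] > max_missed
    }


# Made-up data: p0 is unusually fast, p3 missed a quarter of the iJOSs.
total_time = {f"p{i}": 100.0 for i in range(10)}
total_time["p0"] = 10.0
missed_rate = {pid: 0.0 for pid in total_time}
missed_rate["p3"] = 0.25
excluded = excluded_participants(total_time, missed_rate)
```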

Descriptive statistics for both experiments are presented in Table 1.

Missed initial judgments of solvability (iJOS)

Of all iJOSs, 5.4% were not provided within the allotted four seconds, with 48% of those relating to unsolvable matrices. Missed iJOSs were excluded from all analyses. The valid responses (i.e., responses within the time limit) were provided well before the four-second limit (M = 2.24 s, SD = 0.82 s), t(1021) = 87.77, p < .0001.

Discrimination between solvable and unsolvable problems

We started our analysis by examining whether iJOS responses were substantive, rather than random guesses. Overall, there was a bias towards positive iJOSs, with more than half the matrices being categorized as solvable (see Table 1), t(35) = 6.29, p < .0001. In contrast to our hypothesis, participants’ differentiation between solvable and unsolvable matrices did not reach significance, t(35) = 1.56, p = .13, even though the unsolvable matrices were generated by changing the locations of four elements out of eight.

Sensitivity to solvability might also be indicated by a difference in response times between solvable and unsolvable matrices. To test this, we examined differences in iJOS response times through a two-way within-participant analysis of variance (ANOVA) of iJOS (solvable/unsolvable) × Solvability (yes/no). This analysis revealed a main effect of iJOS, such that “solvable” judgments were provided faster (M = 2.21 s, SD = 0.82 s) than “unsolvable” judgments (M = 2.29 s, SD = 0.81 s), F(1, 35) = 6.73, MSE = 0.609, p = .01, ηp² = 0.16. There was no main effect of actual solvability and no interactive effect, both Fs < 1. Thus, response times also did not point to discrimination between solvable and unsolvable problems.

Confusion between problem difficulty and solvability

We hypothesized that the information used to assess the difficulty of a problem is also used as a cue for iJOS. To obtain relevant matrix difficulty scores, we conducted a preliminary experiment with 26 other participants sampled from the same population as the main experiments. They faced only the original, solvable matrix versions used in this study and solved them. Matrix difficulty scores were generated by standardizing mean success rates for each matrix. Easy matrices have high success rates and, therefore, high Z scores. The correlation between our difficulty scores and the difficulty scores published in the Raven’s manual (Raven et al. 1998) was 0.9. In this preliminary experiment the average success rate was 69% (SD = 46).
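The standardization step can be illustrated with a minimal Python sketch. The function name `difficulty_scores` and the three success rates are invented for the example, and the choice of the population standard deviation is an assumption, since the text does not say which denominator was used.

```python
from statistics import mean, pstdev


def difficulty_scores(success_rates):
    """Map each matrix id to the Z score of its mean success rate.

    Higher scores correspond to easier matrices, as in the text.
    """
    rates = list(success_rates.values())
    mu = mean(rates)
    sd = pstdev(rates)  # assumption: population SD
    if sd == 0:
        raise ValueError("all success rates are identical")
    return {m: (r - mu) / sd for m, r in success_rates.items()}


# Invented success rates for three hypothetical matrices.
scores = difficulty_scores({"m1": 0.9, "m2": 0.6, "m3": 0.3})
```

Here the easiest matrix (`m1`) receives the highest score and the scores average to zero across matrices.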

In Experiment 1 the average success rate for the solvable versions was 61% (SD = 34.8). The wrong answers were divided between judging solvable matrices to be unsolvable in the solving phase (M = 21%), and choosing a wrong answer from the eight solution options (M = 17.8%).

To examine the association between the difficulty of the original matrix and iJOS, we fitted a multilevel generalized linear mixed-effects regression model with a logit link. To account for the nested, non-independent nature of the data, two-level hierarchical linear regression models were employed (level 1: participants; level 2: matrices) using the R package lme4 (Bates et al. 2015; R Development Core Team 2008). The dependent variable was the binary iJOS and the independent variables were matrix difficulty score and solvability (yes/no). Matrix difficulty score was a predictor of iJOS, b = 0.47, SE = 0.18, p < .01, indicating that the probability of a positive iJOS was greater for matrices that were originally easier than for the more difficult ones. Solvability also had a main effect, b = 0.30, SE = 0.14, p < .05, indicating a greater probability of a positive iJOS for solvable matrices. This effect is significant despite the above-reported lack of differentiation between solvable and unsolvable matrices found by the paired t-test. The result of the multilevel mixed model, which considers the dependency both within each person and within each matrix, is more reliable than that of the t-test. Still, the reliability of iJOS was weak. The following interaction result suggested an explanation for this uncertainty. There was an interaction of difficulty score and solvability, b = 0.43, SE = 0.16, p < .01, with a larger effect of difficulty on the solvable matrices than on the unsolvable matrices. As can be seen in Fig. 2, panel a, this interaction suggests that participants could discriminate reliably in their iJOS between solvable and unsolvable matrices only when the original matrices were easy.
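One way to write down a model of this kind is given below. The notation is an assumption introduced here for illustration: $i$ indexes participants, $j$ indexes matrices, $D_j$ is the matrix difficulty score, and $S_{ij}$ codes solvability of the version shown. The random-effects structure (random intercepts only, for participants and for matrices) is likewise assumed, because the text does not report it.

```latex
\operatorname{logit}\,\Pr(\mathrm{iJOS}_{ij} = 1)
  = \beta_0 + \beta_1 D_j + \beta_2 S_{ij} + \beta_3 D_j S_{ij} + u_i + w_j,
\qquad
u_i \sim \mathcal{N}(0, \sigma_u^2), \quad
w_j \sim \mathcal{N}(0, \sigma_w^2)
```

Under this reading, the reported estimates correspond to $\beta_1 = 0.47$ (difficulty score), $\beta_2 = 0.30$ (solvability), and $\beta_3 = 0.43$ (their interaction), all on the log-odds scale.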

The mere finding that people can act in a predictable way in accordance with the difficulty of matrices in four seconds is remarkable. However, in this case cues which reflect matrix difficulty were misleadingly applied for iJOS, and iJOS’s reliability was inconsistent.

Time investment in the solving phase

An important goal of our research was to examine whether iJOS has practical implications. In particular, we were interested to know whether iJOS predicted solving response time (RT), and whether actual solvability and matrix difficulty contribute to RT. A mixed linear regression was used to estimate effects of iJOS, solvability, and difficulty score on RT. See Fig. 3. The results revealed a main effect of iJOS, b = 4.41, SE = 1.89, t(1012) = 2.33, p = .02, indicating that more time was spent on matrices which were judged to be solvable in the prior phase. Solvability had a main effect, b = -4.93, SE = 2.07, t(1012) = 2.39, p = .02, with participants investing on average less time on solvable than unsolvable matrices. There was no main effect of the matrix difficulty score, p = .8, but there was an interaction between actual solvability and matrix difficulty score, b = 7.27, SE = 1.36, t(1012) = 5.36, p < .0001, as can be seen in Fig. 3. For solvable matrices there was a significant correlation between difficulty and RT, such that more time was spent on the difficult matrices. There was no similar correlation for the unsolvable matrices, t < 1, indicating that participants spent a similar amount of time on all the unsolvable matrices, regardless of the difficulty of the matrices they were based on. Allowing for a triple interaction of iJOS, solvability, and matrix difficulty did not improve the model fit, χ² = 0.62, p = .4.

Association between initial (iJOS) and final judgments of solvability (fJOS)

The proportion of fJOSs that were correct was 79% for both solvable and unsolvable matrices. A within-participant t-test revealed a higher rate of positive fJOS when iJOS was positive (55%) than negative (44%), t(35) = 3.19, p < .01, regardless of actual solvability. We further examined whether iJOS stays predictive of fJOS even when considering matrix difficulty. We fitted a multilevel logistic regression model (level 1: participants; level 2: matrices) as before. The independent variables were iJOS, solvability, and difficulty score. Main effects were found for solvability, b = 3.14, SE = 0.19, p < .0001, and for matrix difficulty score, b = 0.80, SE = 0.13, p < .0001, but not, at this stage, for iJOS. Still, taken on its own, iJOS predicts fJOS, which may be useful when the objective difficulty of the items is unknown or when different items are equivalent in difficulty. This finding is valuable in its own right.

Confidence

The association between iJOS and confidence in the final answer is a secondary question in this study, as we focus more on judgments of solvability and RT. Nevertheless, to predict confidence, we again fitted a multilevel regression model (level 1: participants; level 2: matrices), with iJOS and solvability as independent variables. The results revealed no main effect of iJOS (p = .3), but a main effect of solvability, b = 11.05, SE = 1.82, p < .0001. Confidence was significantly higher for solvable matrices (M = 81.3, SD = 8.9) than for unsolvable matrices (M = 65.4, SD = 12.9). In addition, there was an interaction of iJOS and solvability, b = 7.78, SE = 2.35, p = .001. Delving into this interaction revealed that confidence was higher when iJOS was positive, but only for solvable matrices (p = .001), while for unsolvable matrices confidence was equivalent for positive and negative iJOS, p = .7. However, introducing matrix difficulty into the model reduced this interaction to a marginal level, p = .09, while the interaction of solvability and matrix difficulty remained significant, b = 7.3, SE = 1.4, p < .0001. As before, confidence was higher for the easier matrices than for the more difficult ones, but only for solvable matrices. Thus, confidence, like fJOS, is predicted by a combination of variables that includes iJOS.

Discussion

Although the unsolvable versions of the matrices were created to be very different from their original versions, participants could not reliably differentiate between solvable and unsolvable matrices after viewing the matrices briefly. Nevertheless, iJOS responses were clearly not arbitrary, as they were associated with matrix difficulty. Thus, it seems that factors beyond solvability underlie the predictive value of iJOS. Importantly, iJOS predicted time investment, fJOS, and confidence in provided answers after controlling for actual solvability. Experiment 2 examined the generalizability of these findings.

Experiment 2

In Experiment 1 we generated unsolvable versions of the matrices by changing the locations of half the elements and found an association between iJOS and original matrix difficulty. We also found that iJOS was predictive of response times, fJOS, and confidence. These results led us to inquire about the conditions under which iJOS has predictive value. One possibility is that there is a quantitative continuum of discriminability between solvable and unsolvable problems, with a threshold required for iJOS to be predictive. In this case, a smaller difference between solvable and unsolvable items should reduce the effect. On the other hand, it is possible that the effect is qualitative rather than quantitative, such that the effect becomes apparent when the rules are broken, regardless of the degree of difference between the original and disarranged versions. Furthermore, it is possible that broken rules are more evident when there is some regularity, while they are less evident when there is no regularity at all, as was the case in Experiment 1. Thus, in Experiment 2 we replicated Experiment 1 with the smallest difference possible between the two matrix versions—replacing only two elements rather than four when generating the unsolvable versions.

Method

Participants

Thirty-five undergraduate students from the Technion participated in the study (58% female; mean age = 24.8, SD = 2.8). All participants provided informed consent and participated in exchange for course credit.

Materials

The same thirty Raven’s matrices that were used for Experiment 1 were used in this experiment. However, this time the unsolvable version of each matrix was created by randomly switching only two of the eight elements.
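The manipulation described above can be illustrated with a minimal sketch. It assumes that a matrix is represented as a list of its eight given elements and that "switching" means moving randomly chosen elements into each other's positions. The function name, the element labels, and the seeding are hypothetical and are not taken from the study materials.

```python
import random


def make_unsolvable(elements, n_switched=2, seed=None):
    """Return a copy of `elements` in which `n_switched` randomly chosen
    positions exchange their contents (hypothetical illustration only).

    With n_switched=2 this is a plain swap of two elements; with
    n_switched=4 the four chosen elements are rotated among themselves.
    """
    rng = random.Random(seed)
    rearranged = list(elements)  # leave the original list untouched
    positions = rng.sample(range(len(rearranged)), n_switched)
    # Rotate the chosen elements by one place so that each of them moves.
    moved = [rearranged[p] for p in positions]
    moved = moved[1:] + moved[:1]
    for position, value in zip(positions, moved):
        rearranged[position] = value
    return rearranged


if __name__ == "__main__":
    original = ["A", "B", "C", "D", "E", "F", "G", "H"]
    print(make_unsolvable(original, n_switched=2, seed=1))
```

The components are preserved and only their locations change, which is the property the manipulation relies on. If two switched elements happened to be identical, the matrix would be unchanged, so a real implementation would presumably need a check that the rules are in fact broken.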

Procedure

The procedure was identical to that of Experiment 1.

Results

Four participants were excluded from the analyses because they spent an unreasonably short time on the task (more than 2 SD below the sample's mean time).
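An exclusion rule of this kind can be sketched as follows. The sketch assumes one total task time per participant and uses the sample standard deviation. The function name, the participant identifiers, and the example values are hypothetical, and the study does not report exactly how the cutoff was computed.

```python
from statistics import mean, stdev


def flag_fast_participants(total_times, n_sd=2.0):
    """Return the ids of participants whose total time lies more than
    `n_sd` sample standard deviations below the sample mean."""
    times = list(total_times.values())
    cutoff = mean(times) - n_sd * stdev(times)
    return sorted(pid for pid, t in total_times.items() if t < cutoff)


if __name__ == "__main__":
    # Hypothetical total task times, in arbitrary units.
    times = {f"p{i}": 100.0 for i in range(9)}
    times["fast"] = 10.0
    print(flag_fast_participants(times))
```

Because the cutoff is derived from the same sample that is being screened, an extreme value inflates the standard deviation and can partly mask itself. This is one reason such rules are usually fixed before the data are inspected.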

Missed initial judgments of solvability (iJOS)

All missing iJOS responses (those not provided within the time limit—4.3%) were excluded from the analyses. Of those, 40% related to unsolvable matrices. The valid iJOS responses were provided well before the four-second limit (M = 2.19 s., SD = 0.78), t(889) = 83.17, p < .0001.

Discrimination between solvable and unsolvable problems

As in Experiment 1, participants were biased toward positive iJOS responses, with more than half the matrices categorized as solvable (see Table 1), t(30) = 7.62, p < .0001. A within-participants t-test revealed a higher proportion of positive iJOS for solvable than unsolvable matrices, t(30) = 2.36, p < .05. Thus, unlike in Experiment 1, iJOS was reliable and solvable matrices were judged as solvable more often than unsolvable matrices, on top of an overall tendency towards positive judgments, which was present in Experiment 1 as well.
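The within-participants comparison reported above can be sketched as a paired t-test on per-participant proportions. With 31 participants remaining after exclusions, the test has 30 degrees of freedom, which matches the reported t(30). The function name and the toy values below are hypothetical and are not the study data.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(first, second):
    """Paired t statistic and degrees of freedom for two equally long
    lists of per-participant scores (for example, the proportion of
    positive iJOS for solvable and for unsolvable matrices)."""
    if len(first) != len(second):
        raise ValueError("both conditions need one score per participant")
    diffs = [a - b for a, b in zip(first, second)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / sqrt(n))
    return t, n - 1


if __name__ == "__main__":
    # Toy scores for three hypothetical participants.
    t, df = paired_t([2, 4, 6], [1, 2, 3])
    print(f"t({df}) = {t:.2f}")
```

The statistic depends only on the per-participant differences, so stable individual tendencies to answer "solvable" cancel out of the comparison.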

Consistent with the results of Experiment 1, an ANOVA for the effects of iJOS (solvable/unsolvable) × Solvability (yes/no) on iJOS response time revealed a main effect of iJOS, F(1, 30) = 17.59, MSE = 1.093, p < .0001, ηp² = 0.37, such that “solvable” responses were provided faster (M = 2.11 s., SD = 0.76) than “unsolvable” responses (M = 2.30 s., SD = 0.81). There was again no main effect of actual solvability and no interactive effect, both Fs < 1.

Confusion between problem difficulty and solvability

The difficulty score for each matrix was the same as in Experiment 1, as the solvable matrices were the same ones. In this experiment the average success rate for the solvable versions was 61.3% (SD = 37.7). The wrong answers, again, involved either judging a solvable matrix to be unsolvable (M = 20.6%) or choosing a wrong solution (M = 17.9%)—a similar division to that found in Experiment 1.

We examined the predictive value of difficulty score and solvability (yes/no) for iJOS using the same regression model used in Experiment 1 (level 1: participants; level 2: matrices). Replicating the findings in Experiment 1, matrix difficulty score was a predictor of iJOS regardless of actual solvability, b = 0.81, SE = 0.15, p < .0001, indicating that the easier matrices were more likely to be assessed as solvable. See Fig. 2, Panel b. Thus, again we see confusion between difficulty and solvability. Solvability had a main effect, b = 0.38, SE = 0.15, p = .01, with no interaction, p > .5. This finding further strengthens the conclusion that participants differentiated in their iJOS between solvable and unsolvable matrices. This effect was more pronounced here than in Experiment 1, where there was a weaker differentiation between the matrix versions. This finding is discussed below.
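One way to read the coefficients above is as odds ratios. This assumes that they are on the log-odds scale, as they would be in a logistic model of the binary iJOS response. The sketch below exponentiates a coefficient and adds a Wald-type interval. The helper names and the 1.96 multiplier are assumptions of this illustration, not part of the reported analysis, and the size of a one-unit change depends on how the difficulty score is scaled.

```python
from math import exp


def odds_ratio(b):
    """Convert a log-odds coefficient into an odds ratio."""
    return exp(b)


def wald_interval(b, se, z=1.96):
    """Approximate 95% interval for the odds ratio, built on the
    log-odds scale and then exponentiated."""
    return exp(b - z * se), exp(b + z * se)


if __name__ == "__main__":
    # Coefficients and standard errors as reported in the text.
    for label, b, se in [("difficulty score", 0.81, 0.15),
                         ("solvability", 0.38, 0.15)]:
        low, high = wald_interval(b, se)
        print(f"{label}: OR = {odds_ratio(b):.2f} [{low:.2f}, {high:.2f}]")
```

Under these assumptions, b = 0.81 corresponds to an odds ratio of roughly 2.25, and the interval for solvability lies above 1, in line with the reported p = .01.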

Time investment in the solving phase

We examined whether the results of Experiment 1 regarding the time invested in the solving phase replicated when the unsolvable versions were more similar to the original ones. We fitted a multilevel regression model (level 1: participants; level 2: matrices) with iJOS, solvability, and matrix difficulty score as the independent variables, and RT as the dependent variable. As in Experiment 1, iJOS showed a main effect, b = 4.95, SE = 1.95, t(880) = 2.54, p = .01, indicating that more time was spent on matrices which were judged to be solvable in the initial phase. The results did not reveal a main effect of solvability, p = .3. Thus, unlike in Experiment 1, participants invested roughly the same amount of time on solvable and unsolvable matrices. The interactive effect found in Experiment 1 between iJOS and matrix difficulty was replicated (Fig. 4), b = 3.82, SE = 1.62, t(880) = 2.36, p = .02. For matrices judged to be solvable in the initial phase (positive iJOS), there was a significant negative correlation between matrix difficulty and RT, b = −5.6, p < .001, while no correlation was found for matrices judged to be unsolvable, p = .2. In addition, two interactions not seen in Experiment 1 were found. First, solvability interacted with matrix difficulty, b = −5.64, SE = 1.42, t(880) = 3.97, p < .0001, such that a significant negative correlation between matrix difficulty score and RT was found for solvable matrices, b = −6.6, p = .0001, but not for unsolvable matrices, p = .3. This finding indicates that participants spent around the same amount of time trying to solve unsolvable matrices regardless of their original difficulty. The second additional interaction was between iJOS and solvability, b = 5.65, SE = 2.78, t(880) = 2.03, p = .04. Regardless of difficulty, participants invested more time on unsolvable matrices that were mistakenly judged to be solvable in the first phase compared with all other matrices.

When comparing Figs. 3 and 4, it is evident that the main difference between the experiments stems from the association between difficulty and time invested in unsolvable matrices that were judged to be solvable. In Experiment 1, the time allocated to these matrices was similar to that allocated to matrices judged to be unsolvable, whereas in Experiment 2 the pattern of time investment resembled that for solvable matrices. It thus seems that the misleading effect of the unsolvable matrices on time allocation was greater in Experiment 2 than in Experiment 1, as any time invested in trying to solve unsolvable matrices is essentially labor in vain.

Association between initial (iJOS) and final judgments of solvability (fJOS)

The proportion of correct fJOSs was 79.4% for solvable matrices and 66% for unsolvable matrices, which reflects a bias towards judging problems as solvable, ps < .0001. A within-participant t-test revealed a higher rate of positive fJOS responses when iJOS was positive (63%) compared with negative (46%), t(30) = 5.68, p < .0001, regardless of actual solvability. This finding is remarkable considering that fJOS was given after deep processing and iJOS was given after a brief glance. As in Experiment 1, we examined whether iJOS remains predictive even when matrix difficulty score is taken into account. We fitted a multilevel logistic regression model (level 1: participants; level 2: matrices) as before. The independent variables were iJOS, solvability, and difficulty score. Introducing the matrix difficulty score did not suppress the main effect of iJOS, b = 0.58, SE = 0.19, p < .01. Matrix difficulty also had a main effect, b = 0.57, SE = 0.19, p < .01, and there was a trivial main effect of solvability, b = 2.52, SE = 0.19, p < .0001. Thus, the notable finding of this analysis is that iJOS was predictive of fJOS above and beyond the matrices’ original difficulty and objective solvability.

Given that in this experiment iJOS was consistently predictive of solvability across all analyses, in order to better understand the joint effect of difficulty and iJOS on fJOS we used a mediation analysis. Specifically, we examined whether the effect of difficulty on fJOS was mediated by iJOS, while accounting for actual solvability in all the following regressions. First, a mediation mixed model was fitted to predict iJOS by matrix difficulty. Second, an outcome mixed model was fitted to predict the proportion of fJOS by iJOS and matrix difficulty. A mediation analysis was then performed with these two models (using the R mediation package; Tingley et al. 2014), testing whether the influence of matrix difficulty on fJOS was mediated by iJOS, conditional on solvability. The analysis revealed that of the total effect of matrix difficulty on fJOS (β = 0.10, 95% CI = [0.07, 0.13], p < .001), 11.3% was mediated by iJOS (95% CI = [0.02, 0.25], p < .01). Although only a small portion of the total effect was mediated by iJOS, this finding suggests that iJOS does indeed mediate between matrix difficulty and fJOS, thereby presumably contributing to the explanatory value of matrix difficulty. However, we acknowledge that mediation analysis is a correlational technique and therefore cannot provide categorical evidence for causality here.
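The notion of a proportion of the total effect being mediated can be illustrated with a deliberately simplified sketch. It is not the analysis reported above, which used mixed models and the simulation-based R mediation package. The sketch uses single-level ordinary least squares with one predictor x (standing in for matrix difficulty), one mediator m (iJOS), and one outcome y (fJOS), ignores solvability and the nesting of trials, and treats all variables as continuous. All names and data are hypothetical.

```python
def _cross(u, v):
    """Sum of cross-products of two lists after centering each on its mean."""
    mean_u, mean_v = sum(u) / len(u), sum(v) / len(v)
    return sum((a - mean_u) * (b - mean_v) for a, b in zip(u, v))


def simple_mediation(x, m, y):
    """Product-of-coefficients mediation with ordinary least squares.

    Mediator model: m ~ x      (slope a)
    Outcome model:  y ~ x + m  (direct effect of x, slope b for m)
    The total effect is the slope of y ~ x and equals direct + a * b.
    """
    sxx, smm = _cross(x, x), _cross(m, m)
    sxm, sxy, smy = _cross(x, m), _cross(x, y), _cross(m, y)
    det = sxx * smm - sxm ** 2  # zero when x and m are perfectly collinear
    a = sxm / sxx
    b = (smy * sxx - sxm * sxy) / det
    direct = (sxy * smm - sxm * smy) / det
    indirect = a * b
    total = sxy / sxx
    return {"a": a, "b": b, "direct": direct, "indirect": indirect,
            "total": total, "proportion_mediated": indirect / total}


if __name__ == "__main__":
    # Toy data in which y equals x + m exactly.
    result = simple_mediation([1, 2, 3, 4], [1, 0, 2, 1], [2, 2, 5, 5])
    print(result)
```

In this linear case the total effect decomposes exactly into the direct effect plus the indirect effect, so the proportion mediated is the indirect effect divided by the total effect. With binary outcomes and mixed models, as in the study, the decomposition is estimated by simulation instead, which is why the reported estimate comes with a confidence interval.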

Confidence

We fitted a multilevel regression model (level 1: participants; level 2: matrices) for predicting confidence, with iJOS and solvability as the independent variables. As in Experiment 1, there was no main effect of iJOS, p = .08. There was only a marginally significant interaction between iJOS and solvability, p = .09, whereas this interaction was significant in Experiment 1. Thus, in this experiment iJOS did not predict confidence.

Discussion

Most findings of Experiment 1 were replicated in Experiment 2, despite the smaller difference between solvable and unsolvable matrices. Of particular importance is that Experiment 2 replicated the predictive power of iJOS for time allocation and fJOS, and also its sensitivity to the objective difficulty of the original matrices. Notably, participants in this experiment accurately discriminated between solvable and unsolvable matrices in less than four seconds. In Experiment 1 this finding was not consistent across the various analysis methods, while in Experiment 2 this effect is decisive. It seems that making only a small change emphasized the violation of the rules, traces of which remained. Potential explanations for this finding are discussed below.

General discussion

This study addresses two topics which have received as yet very little research attention: a) judgments of solvability and b) metacognitive aspects of solving non-verbal problems. We developed a method to explore these topics by manipulating Raven’s matrices to generate unsolvable versions with exactly the same formative characteristics as the original ones. This method enabled us to investigate several aspects of judgments of solvability in non-verbal problems. These were, first, the reliability of these judgments; second, the use of difficulty indicators for inferring solvability; and third, the association between iJOS and two metacognitive aspects of the problem-solving process: monitoring (specifically, fJOS and confidence) and control (specifically, time allocation).

In Experiment 1, iJOS was not decisively predictive of actual solvability. In contrast, in Experiment 2, when the solvable and unsolvable versions were more similar to each other than in Experiment 1, discrimination between them was consistent across the various analyses we employed. This finding suggests that the time limit we set for iJOS allowed discrimination, at least under certain conditions. Notably, in Experiment 2 we made the smallest change possible and hence left more traces of the rules than in Experiment 1, where we replaced half the elements. Thus, the third possible explanation suggested in the introduction to Experiment 2 was supported—namely, that participants were better at recognizing violations of regularity against the background of existing regularity in the less-disturbed unsolvable matrices used in Experiment 2 than in the more-disarranged ones used in Experiment 1. This explanation was considered in an exploratory manner in this experiment and thus clearly calls for further research.

Participants’ iJOS responses, provided after a brief glance at the matrices, were clearly not arbitrary, as these judgments were consistently associated with matrix difficulty, regardless of objective solvability. This association could be regarded as a kind of simple transformation, where a different name is given to the same thing: the more difficult an individual perceives a given matrix to be, the more likely they are to conclude that the matrix is unsolvable, rather than merely challenging. But the results for time allocation refute such an interpretation, because time allocation was associated with matrix difficulty differently for instances of positive versus negative iJOS (Figs. 3 and 4). Experiment 2 demonstrated this difference in particular for the unsolvable matrices, since time investment for unsolvable matrices where iJOS was positive resembled the pattern of time investment in the solvable matrices. The extra time invested in matrices mistakenly judged to be solvable was in fact labor in vain.

The objective difficulty of Raven’s matrices reflects several factors, including the characteristics of the individual elements (Meo et al. 2007; Primi 2001; Roberts et al. 2000), the number and nature of the rules by which the elements are arranged, the degree of harmony between the rules, and the existence of perceptual grouping (Primi 2001). The identification of original difficulty even when the rules were disturbed across elements suggests that people base their iJOS on attributes of the individual elements rather than on inter-element rules. Future studies are called for to further examine what cues people use to identify the difficulty of Raven matrices so quickly.

Both experiments revealed the same phenomenon of more time spent on solving matrices that generated a first impression of solvability, especially with the unsolvable matrices. Importantly, the study does not allow inferring causality, since there is no evidence that the value of iJOS itself affected time allocation. It could be that the same factors that underlay iJOS took effect also during the solving process. Nevertheless, we demonstrated the predictive value of iJOS for time management. This is a major contribution to meta-reasoning research (Ackerman and Thompson 2015, 2017a, 2017b).

As for fJOS, the results revealed that iJOS is predictive of fJOS regardless of objective solvability. Thus, fJOS, which was given without time constraints, was not determined solely by whether or not a participant was successful in solving the problem. The mediation analysis conducted in Experiment 2 suggests that participants base their fJOS upon matrix difficulty both directly and indirectly. The indirect path is mediated by iJOS. Another direction worth examining involves time allocation: since participants spent less time trying to solve matrices that they had initially judged to be unsolvable, they were less likely to discern the rules in those cases where that original judgment was wrong. More generally, Benjamin (2005) found judgments to be particularly prone to bias when participants are forced to judge quickly. We join Ackerman and Beller (2017) in finding that iJOS is not necessarily more prone to bias than fJOS; rather, there are both shared and distinct biases for iJOS and fJOS.

Conclusion

In this study, we considered properties of initial judgments of solvability (iJOS) in non-verbal problems and their association with aspects of the broader problem-solving process. We dealt with three main questions regarding this judgment: 1) Is it reliable? 2) Is it arbitrary? 3) Is it meaningful? We found that iJOS was not decisively reliable in Experiment 1 but showed clear validity in Experiment 2, suggesting that the reliability of iJOS depends on attributes of the task. Regardless of its validity, iJOS was not arbitrary, even when it was unreliable. iJOS was revealed to be meaningful since it was predictive of time allocation and final decisions regarding solvability.

This study is clearly only a starting point, which should lead future research to delve deeper into iJOS, fJOS, and confidence across different types of problems, and into the issue of cue utilization in non-verbal problems in general. More broadly, questions concerning metacognitive processes involved in solving non-verbal problems are relevant to many real-life scenarios, such as navigation, engineering, design, and education, but so far have been almost completely neglected in metacognitive research. We call for future studies to continue to probe these and other meta-reasoning research questions (see Ackerman and Thompson 2017a).

Change history

29 August 2019

The original version of this article unfortunately contained a mistake in page 7, particularly in the second paragraph of “Procedure” section of Experiment 1: "… and only 0.3% of the answers were given in four seconds or more." The word "more" should be replaced with "less".

References

Ackerman, R. (2014). The diminishing criterion model for metacognitive regulation of time investment. Journal of Experimental Psychology: General, 143(3), 1349–1368.

Ackerman, R., & Beller, Y. (2017). Shared and distinct cue utilization for metacognitive judgements during reasoning and memorisation. Thinking & Reasoning, 23(4), 376–408.

Ackerman, R., & Thompson, V. (2015). Meta-reasoning: What can we learn from meta-memory? In A. Feeney & V. Thompson (Eds.), Reasoning as memory (pp. 164–182). Hove, UK: Psychology Press.

Ackerman, R., & Thompson, V. A. (2017a). Meta-reasoning: Monitoring and control of thinking and reasoning. Trends in Cognitive Sciences, 21, 607–617.

Ackerman, R., & Thompson, V. (2017b). Meta-reasoning: Shedding meta-cognitive light on reasoning research. In L. Ball & V. Thompson (Eds.), The Routledge International Handbook of Thinking & Reasoning (pp. 1–15). Psychology Press.

Ackerman, P. L., Beier, M. E., & Boyle, M. O. (2005). Working memory and intelligence: The same or different constructs? Psychological Bulletin, 131(1), 30–60.

Aspinwall, L. G., & Richter, L. (1999). Optimism and self-mastery predict more rapid disengagement from unsolvable tasks in the presence of alternatives. Motivation & Emotion, 23, 221–245.

Bates, D., Maechler, M., Bolker, B., & Walker, S. (2015). Fitting linear mixed-effects models using lme4. Journal of Statistical Software, 67(1), 1–48.

Benjamin, A. S. (2005). Response speeding mediates the contributions of cue familiarity and target retrievability to metamnemonic judgments. Psychonomic Bulletin & Review, 12(5), 874–879.

Bjork, R. A., Dunlosky, J., & Kornell, N. (2013). Self-regulated learning: Beliefs, techniques, and illusions. Annual Review of Psychology, 64, 417–444.

Bolte, A., & Goschke, T. (2005). On the speed of intuition: Intuitive judgments of semantic coherence under different response deadlines. Memory & Cognition, 33, 1248–1255.

Bowers, K. S., Regehr, G., Balthazard, C., & Parker, K. (1990). Intuition in the context of discovery. Cognitive Psychology, 22, 72–110.

Bowers, K. S., Farvolden, P., & Lambros, M. (1995). Intuitive antecedents of insights. In S. Smith, T. B. Ward, & R. A. Finke (Eds.), The creative cognition approach (pp. 27–51). London: MIT Press.

Carpenter, P. A., Just, M., & Shell, P. (1990). What one intelligence test measures: A theoretical account of processing in the Raven’s progressive matrices test. Psychological Review, 97, 404–431.

Davis, M., & Montgomery, I. M. (1997). Ruminations on worry: Issues related to the study of an elusive construct. Behavior Change, 14(4), 193–199.

De Neys, W., Rossi, S., & Houdé, O. (2013). Bats, balls, and substitution sensitivity: Cognitive misers are no happy fools. Psychonomic Bulletin & Review, 20(2), 269–273.

Jonassen, D. H. (1997). Instructional design model for well-structured and ill-structured problem-solving learning outcomes. Educational Technology Research and Development, 45(1), 65–95.

Kahneman, D. S., Slovic, P., & Tversky, A. (Eds.). (1982). Judgment under uncertainty: Heuristics and biases. Cambridge: Cambridge Univ. Press.

Koriat, A. (1997). Monitoring one's own knowledge during study: A cue-utilization approach to judgments of learning. Journal of Experimental Psychology: General, 126(4), 349–370.

Koriat, A., Ackerman, R., Adiv, S., Lockl, K., & Schneider, W. (2014). The effects of goal-driven and data-driven regulation on metacognitive monitoring during learning: A developmental perspective. Journal of Experimental Psychology: General, 143(1), 386–403.

Markovits, H., Thompson, V. A., & Brisson, J. (2015). Metacognition and abstract reasoning. Memory & Cognition, 43(4), 681–693.

Mayer, R. E. (1999). Problem solving. In M. A. Runco & S. R. Pritzker (Eds.), Encyclopedia of creativity (Vol. 2, pp. 437–447). San Diego, CA: Academic.

Meo, M., Roberts, M. J., & Marucci, F. S. (2007). Element salience as a predictor of item difficulty for Raven's progressive matrices. Intelligence, 35(4), 359–368.

Metcalfe, J., & Kornell, N. (2005). A region of proximal learning model of study time allocation. Journal of Memory & Language, 52, 463–477.

Payne, S. J., & Duggan, G. B. (2011). Giving up problem solving. Memory & Cognition, 39(5), 902–913.

Primi, R. (2001). Complexity of geometric inductive reasoning tasks: Contribution to the understanding of fluid intelligence. Intelligence, 30(1), 41–70.

R Development Core Team (2008). R: A language and environment for statistical computing. R Foundation for Statistical Computing.

Raven, J. (2000). The Raven's progressive matrices: Change and stability over culture and time. Cognitive Psychology, 41(1), 1–48.

Raven, J., Raven, J. C., & Court, J. H. (1993). Manual for Raven's progressive matrices and Mill Hill vocabulary scales. Oxford: Oxford Psychologists Press.

Raven, J., Raven, J. C., & Court, J. H. (1998). Manual for Raven’s Progressive Matrices and Vocabulary Scales. Section 4: The Advanced Progressive Matrices. San Antonio, TX: Harcourt Assessment.

Reber, R., Brun, M., & Mitterndorfer, K. (2008). The use of heuristics in intuitive mathematical judgment. Psychonomic Bulletin & Review, 15, 1174–1178.

Roberts, M. J., Welfare, H., Livermore, D. P., & Theadom, A. M. (2000). Context, visual salience, and inductive reasoning. Thinking & Reasoning, 6, 349–374.

Sio, U. N., Monaghan, P., & Ormerod, T. (2013). Sleep on it, but only if it is difficult: Effects of sleep on problem solving. Memory & Cognition, 41, 159–166.

Smith, S. M., Ward, T. B., & Schumacher, J. S. (1993). Constraining effects of examples in a creative generation task. Memory & Cognition, 21, 837–845.

Son, L. K., & Sethi, R. (2010). Adaptive learning and the allocation of time. Adaptive Behavior, 18, 132–140.

Stanovich, K. E. (2009). Distinguishing the reflective, algorithmic, and autonomous minds: Is it time for a tri-process theory? In J. Evans & K. Frankish (Eds.), In two minds: Dual processes and beyond (pp. 55–88). Oxford: Oxford University Press.

Tingley, D., Yamamoto, T., Hirose, K., Keele, L., & Imai, K. (2014). Mediation: R package for causal mediation analysis. Journal of Statistical Software, 59(5), 1–38.

Topolinski, S. (2014). Introducing affect into cognition. In A. Feeney & V. Thompson (Eds.), Reasoning as memory (pp. 146–163). Hove, UK: Psychology Press.

Topolinski, S., & Strack, F. (2008). Where there's a will—there's no intuition: The unintentional basis of semantic coherence judgments. Journal of Memory and Language, 58, 1032–1048.

Topolinski, S., & Strack, F. (2009). The architecture of intuition: Fluency and affect determine intuitive judgments of semantic and visual coherence and judgments of grammaticality in artificial grammar learning. Journal of Experimental Psychology: General, 138(1), 39–63.

Undorf, M., & Ackerman, R. (2017). The puzzle of study time allocation for the most challenging items. Psychonomic Bulletin & Review, 24(6), 2003–2011.

Undorf, M., Söllner, A., & Bröder, A. (2018). Simultaneous utilization of multiple cues in judgments of learning. Memory & Cognition, 46(4), 507–519.

Unkelbach, C., & Greifeneder, R. (2013). A general model of fluency effects in judgment and decision making. In The experience of thinking: How feelings from mental processes influence cognition and behavior (pp. 11–32).

Wiley, J. (1998). Expertise as mental set: The effects of domain knowledge in creative problem solving. Memory & Cognition, 26(4), 716–730.

Acknowledgments

The study was supported by a grant from the Israel Science Foundation (Grant No. 234/18). We thank Yael Sidi for valuable feedback on an early version of the paper and Meira Ben-Gad for editorial assistance.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Cite this article

Lauterman, T., Ackerman, R. Initial judgment of solvability in non-verbal problems – a predictor of solving processes. Metacognition Learning 14, 365–383 (2019). https://doi.org/10.1007/s11409-019-09194-8
