Abstract

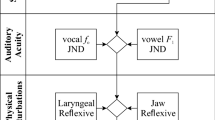

Realizing an intelligent human-machine interface requires investigating human mechanisms and learning from them. This study focuses on the communication between speech production and perception within the human brain and on realizing it in an artificial system. A physiological study based on electromyographic signals (Honda, 1996) suggested that speech communication in the human brain might be based on a topological mapping between speech production and perception, in accordance with an analogous topology between motor and sensory representations. Following this hypothesis, this study first investigated the topologies of the vowel system across the motor, kinematic, and acoustic spaces by means of a model simulation, and then examined the linkage between vowel production and perception by means of a transformed auditory feedback (TAF) experiment. The model simulation indicated that there is an invariant mapping from muscle activations (motor space) to articulations (kinematic space) via a coordinate system consisting of force-dependent equilibrium positions, and that the mapping from the motor space to the kinematic space is unique. The motor-kinematic-acoustic deduction in the model simulation showed that the topologies were compatible from one space to another. In the TAF experiment, vowel production exhibited a compensatory response to a perturbation in the feedback sound. This implies that vowel production is controlled with reference to perceptual monitoring.

References

Denes P, Pinson E. The Speech Chain. 2nd Edition, New York: W.H. Freeman and Co. 1993.

Lombard E. Le signe de l'élévation de la voix. Annales Maladies Oreilles Larynx Nez Pharynx, 1911, 37: 101–119.

Lee B S. Effects of delayed speech feedback. J. Acoust. Soc. Am., 1950, 22: 824–826.

Kawahara H. Interactions between speech production and perception under auditory feedback perturbations on fundamental frequencies. J. Acoust. Soc. Jpn., 1994, 15(3): 201–202.

Liberman A M, Cooper F S, Shankweiler D P, Studdert-Kennedy M. Perception of the speech code. Psych. Rev., 1967, 74(6): 431–461.

Liberman A M, Mattingly I G. The motor theory of speech perception revised. Cognition, 1985, 21: 1–36.

Savariaux C, Perrier P, Orliaguet J. Compensation strategies for the perturbation of the rounded vowel [u] using a lip tube: A study of the control space in speech production. J. Acoust. Soc. Am., 1995, 98(5): 2428–2442.

Honda M, Fujino A, Kaburagi T. Compensatory responses of articulators to unexpected perturbation of the palate shape. J. Phonetics, 2002, 30: 281–302.

Nota Y, Honda K. Brain regions involved in control of speech. Acoust. Sci. & Tech., 2004, 25(4): 286–289.

Sakai K L, Homae F, Hashimoto R, Suzuki K. Functional imaging of the human temporal cortex during auditory sentence processing. Am. Lab., 2002, 34: 34–40.

Honda K. Organization of tongue articulation for vowels. J. Phonetics, 1996, 24: 39–52.

Dang J, Honda K. Construction and control of a physiological articulatory model. J. Acoust. Soc. Am., 2004, 115(2): 853–870.

Baer T, Alfonso J, Honda K. Electromyography of the tongue muscle during vowels in /əpVp/ environment. Ann. Bull. R. I. L. P., Univ. Tokyo, 1988, 7: 7–18.

Maeda S. Compensatory Articulation During Speech: Evidence from the Analysis of Vocal Tract Shapes Using an Articulatory Model. Speech Production and Speech Modeling, Hardcastle W, Marchal A (eds.), Dordrecht: Kluwer Academic Publishers, 1990, pp.131–149.

Carré R, Mrayati M. Articulatory-Acoustic-Phonetic Relations and Modeling, Regions and Modes. Speech Production and Speech Modeling, Hardcastle W, Marchal A (eds.), Dordrecht: Kluwer Academic Publishers, 1990, pp.211–240.

Honda K, Kusakawa N. Compatibility between auditory and articulatory representations of vowels. Acta Otolaryngol. (Stockh), Suppl., 532: 103–105.

Niimi S, Kumada M, Niitsu M. Functions of tongue-related muscles during production of the five Japanese vowels. Ann. Bull. R. I. L. P., Univ. Tokyo, 1994, 28: 33–40.

Stone M, Davis E, Douglas A, Ness Aiver M, Gullapalli R, Levine W, Lundberg A. Modeling motion of the internal tongue from tagged cine-MRI images. J. Acoust. Soc. Am., 2001, 109(6): 2974–2982.

Dang J, Honda K. Estimation of vocal tract shape from sounds via a physiological articulatory model. J. Phonetics, 2002, 30: 511–532.

Houde J, Jordan M. Sensorimotor adaptation in speech production. Science, 1998, 279(5354): 1213–1216.

Callan E, Kent D, Guenther H, Vorperian K. An auditory-feedback-based neural network model of speech production that is robust to developmental changes in the size and shape of the articulatory system. Journal of Speech, Language, and Hearing Research, 2000, 43: 721–736.

Purcell D, Johnsrude I, Munhall K. Perception of altered formant feedback influences speech production. In Proc. ISCA Workshop on Plasticity in Speech Perception, London, UK, 2005, pp.15–17.

Masaki S, Honda K. Estimation of temporal processing unit of speech motor programming for Japanese words based on the measurement of reaction time. In Proc. ICSLP 94, Yokohama Japan, 1994, pp.663–666.

Author information

Additional information

This research has been supported in part by the National Institute of Information and Communications Technology and in part by a Grant-in-Aid for Scientific Research of Japan (Grant No. 16300053).

Jianwu Dang received his B.E. and M.S. degrees from Tsinghua Univ., China, in 1982 and 1984, respectively. He worked for Tianjin University as a lecturer from 1984 to 1988. He was awarded the Ph.D. Eng. degree from Shizuoka Univ., Japan, in 1992. Dr. Dang worked for ATR Human Information Processing Lab., Japan, from 1992 to 2001. He joined the University of Waterloo, Canada, as a visiting scholar for one year in 1998. He has been with the Japan Advanced Institute of Science and Technology (JAIST) since 2001, where he is a professor. He joined the Institut de la Communication Parlée (ICP), Centre National de la Recherche Scientifique (CNRS), France, as a first-class research scientist for one year in 2002. His research interests span all fields of speech science, especially speech production. He is a member of the Acoustical Societies of America and Japan, and also a member of the Institute of Electronics, Information and Communication Engineers.

Masato Akagi received the B.E. degree in electronic engineering from Nagoya Institute of Technology in 1979, and the M.E. and Ph.D. Eng. degrees in computer science from Tokyo Institute of Technology in 1981 and 1984, respectively. In 1984, he joined the Electrical Communication Laboratory, Nippon Telegraph and Telephone Corporation (NTT). From 1986 to 1990, he worked at the ATR Auditory and Visual Perception Research Laboratories. Since 1992, he has been with the School of Information Science, JAIST, where he is currently a professor. His research interests include human speech perception mechanisms and speech signal processing. Dr. Akagi received the IEICE Excellent Paper Award in 1987 and the Sato Prize for Outstanding Paper from the ASJ in 1998.

Kiyoshi Honda graduated from Nara Medical University in 1976 and joined the Faculty of Medicine at the University of Tokyo to work in the voice clinic and conduct speech research. He was also a visiting scholar at Haskins Laboratories, New Haven, for three years from 1981. He was awarded a Ph.D. degree in medical science in 1985. Dr. Honda moved to Kanazawa Institute of Technology in 1986 and continued speech research as an associate professor. He then moved to ATR in 1991 to be the supervisor of the Auditory and Visual Processing Research Laboratories and Human Information Processing Research Laboratories. He was also a senior scientist in the field at the University of Wisconsin for three years from 1995. He is currently the head of the Department of Biophysical Imaging (speech production group) at ATR Human Information Science Laboratories. His research focuses on speech science and physiological experimental phonetics, using MRI to investigate the form and function of the speech organs.

About this article

Cite this article

Dang, J., Akagi, M. & Honda, K. Communication Between Speech Production and Perception Within the Brain—Observation and Simulation. J Comput Sci Technol 21, 95–105 (2006). https://doi.org/10.1007/s11390-006-0095-8

DOI: https://doi.org/10.1007/s11390-006-0095-8