Abstract

Multi-modal learning has gained significant attention due to its ability to enhance machine learning algorithms. However, it brings challenges related to modality heterogeneity and domain shift. In this work, we address these challenges by proposing a new approach called Relative Norm Alignment (RNA) loss. RNA loss exploits the observation that variations in marginal distributions between modalities manifest as discrepancies in their mean feature norms, and rebalances feature norms across domains, modalities, and classes. This rebalancing improves the accuracy of models on test data from unseen (“target”) distributions. In the context of Unsupervised Domain Adaptation (UDA), we use unlabeled target data to enhance feature transferability. We achieve this by combining RNA loss with an adversarial domain loss and an Information Maximization term that regularizes predictions on target data. We present a comprehensive analysis and ablation of our method for both Domain Generalization and UDA settings, testing our approach on different modalities for tasks such as first and third person action recognition, object recognition, and fatigue detection. Experimental results show that our approach achieves competitive or state-of-the-art performance on the proposed benchmarks, showing the versatility and effectiveness of our method in a wide range of applications.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Humans have the ability to perceive the world around them through signals that come from multiple sensory systems. Our perceptual experiences can be visual, auditory, tactile, olfactory, and gustatory. Psychologists and neurologists agree that our perception does not depend on a single modality at a single time, but is fundamentally multi-modal in nature (Bertelson & Gelder, 2004; Stein et al., 2002). Moreover, the interpretation of data from one sensory channel is influenced by data from other modalities (Driver & Spence, Jul. 1998; O’Callaghan, 2012).

The same ability to effectively process and integrate information from multiple sensory channels has been shown to significantly improve the performance of current machine learning algorithms. For example, recent video understanding models use complementary audio-visual (Zhu et al., 2021) and appearance-motion information (Munro & Damen, 2020; Sevilla-Lara et al., 2019; Sun et al., 2018) to improve accuracy and generalization performance. Object recognition algorithms use depth information to extract more accurate information and classify objects more effectively (Loghmani et al., 2020; Bo et al., 2011), and so on.

Despite its potential benefits, Multi-Modal Learning (MML) also comes with some challenges, such as learning how to summarize data while retaining their complementary information (Wang et al., 2020) or understanding how to effectively combine information from multiple modalities when making a prediction (Baltrušaitis et al., 2019). The same issue is addressed in Yang et al. (2022) when data from multiple views are used. Heterogeneity between modalities is another critical issue, as the difference between their marginal distributions may prevent the model from learning equally from all of them (Razzaghi et al., 2021). Another well-known problem in the literature is the so-called “domain shift”, i.e., that a model trained on a labeled source dataset does not generalize well to an unseen target dataset. Different modalities may be affected differently by the domain shift (Lv et al., 2021). For example, when using audio-visual data for egocentric action recognition, the action “cut” in a cooking scenario may reveal differences between domains (Planamente et al., 2022), as cutting boards in different kitchens may differ in their visual and auditory impressions (e.g., wooden cutting board vs. plastic cutting board), different types of food may be cut, and so on. This highlights the need for robust models that can handle variation across modalities and domains.

To address both the cross-modal and cross-domain challenges in MML, we recently proposed in Planamente et al. (2022) a simple but effective audio-visual approach in the context of egocentric action recognition. We observed that differences in the marginal distributions of the audio and visual modalities could lead to variations in feature informativeness that do not only negatively affect the training process and lead to suboptimal performance, but also typically translate into discrepancies between the mean norms of their features. This imbalance in norms leads the network to “favor” the modality with the larger features, which prevents the model from fully exploiting the synergies and complementarities between modalities and reduces its generalization capabilities (Barbato et al., 2021).

To tackle this issue, in Planamente et al. (2022) we proposed to reduce such imbalance with a simple loss called Relative Norm Alignment (RNA) loss. In the Domain Generalization (DG) setting, i.e., when the model does not have access to the target data at training time, this loss attempts to align the average norms of the different modalities to a common value. This objective also leads to successful transfer between source and target (Zhou et al., 2020; Zheng et al., 2018; Xu et al., 2019; Barbato et al., 2021). In the Unsupervised Domain Adaptation (UDA) setting, i.e., when target data are available during training, RNA is defined as the sum of two domain-specific terms that aim to achieve a cross-modality norm balance on both source and target domains. However, in this setting, the RNA loss operated separately on the two domains (cross-modal alignment, Fig. 1), resulting in discrepancies between the mean feature norms of the two. This discrepancy can be explained by the presence of domain-specific features from the source domain that may have low activations in the target domain.

Overview of Relative Norm Alignment (RNA) loss for RGB and audio modalities. Given visual and audio input from both source and target domains, we perform an alignment at feature level by re-balancing (i) the mean feature norms of visual and audio modalities (cross-modal alignment, \(\mathcal {L}_{\textit{RNA}}^\textit{g}\)), (ii) per-class mean feature norms of visual and audio modalities (per-class alignment, \(\mathcal {L}_{\textit{RNA}}^\textit{c}\)) and (iii) mean feature norms of source and target features independently for each modality (cross-domain alignment, \(\mathcal {L}_{\textit{RNA}}^\textit{mod}\))

To improve the effectiveness of RNA, in this work we extend it to independently align feature norms for each modality across domains (cross-modal alignment, Fig. 1) so that the network can prioritize more transferable features (Xu et al., 2019). In addition, we address the problem of imbalanced feature norms between classes by introducing an intra- and inter-domain alignment component per class (per-class alignment, Fig. 1), resulting in improved overall accuracy.

Furthermore, we combine RNA with two additional components in UDA settings. First, we incorporate an adversarial loss to improve domain-invariant feature learning. Second, we observe that the original RNA loss only affects the modality embedding models and neglects the classification layers. To mitigate the prediction uncertainty in the target domain, we extend the training loss of the model with an Information Maximization term that uses pseudo-labels on target data.

The solution we propose differs from previous approaches in that it is simple, it does not require changes to the training process and, differently from recent constrastive-learning based approaches (Sahoo et al., 2021; Song et al., 2021; Kim et al., 2021), it does not require effective mining of hard negative samples. This makes our solution a desirable choice for a broader range of modalities and tasks. In particular, we extend the audio-visual loss proposed in Planamente et al. (2022) to a variety of visual and nonvisual modalities (optical flow, event data, depth, EGG, facial keypoints) and to a variety of tasks, including first- and third-person action recognition, object recognition, and fatigue detection. Despite its simplicity, experiments show that our approach performs equally well, if not better, than existing methods, with a leaner and more efficient implementation.

In summary, the main contributions of this work are as follows:

-

it updates the definition of RNA to improve the transferability of features between domains in DG and UDA settings;

-

it introduces the use of pseudo-labeling to regularize predictions in the context of transfer learning between source and target;

-

it addresses the challenges of multi-modal domain shift by extending our analysis to multiple modalities and multiple tasks;

-

it presents a comprehensive analysis and ablation of our approach in both DG and UDA settings, showing state-of-the-art or competitive performances on all benchmarks.

2 Related Work

MML has gained popularity due to its potential for better performance, robustness, and deeper understanding. Previous surveys Baltrušaitis et al. (2019), Rahate et al. (2022) have discussed the challenges and opportunities of MML. Here we focus on the problem of generalizing MML across domains, and in particular explore computer vision applications that are most relevant to the experiments in our work.

2.1 Domain Adaptation

In DG, the goal is to build a model that uses knowledge from one or multiple source domains, without having access to data from the target domain during training, to improve the generalization performance of the model to any unseen domain. In such a setting, the lack of knowledge about the target distributions prevents the possibility to estimate the domain discrepancy between source and target domains. Computer vision based DG approaches have mainly focused on image data and can be broadly classified into several categories. Feature-based methods aim to learn domain-invariant representations by aligning domain distributions with metrics such as MMD (Li et al., 2018; Gretton et al., 2012) or CORAL (Sun & Saenko, 2016), or with domain adversarial networks (Ganin et al., 2016). Data-based methods increase the amount of training data to prevent overfitting or use style transfer to reduce the domain sensitivity (Volpi et al., 2018; Zhang et al., 2022; Chen et al., 2022a, b; Wang et al., 2020; Xu et al., 2020). Meta-Learning methods simulate the shift in distributions between domains (Balaji et al., 2018; Dou et al., 2019; Li et al., 2019). Self-Supervision (Bucci et al. 2021) uses auxiliary tasks to learn generalizable representations.

In the context of video data, VideoDG (Yao et al. 2021) observes that it is important to find a balance between the ability to generalize and the ability to discriminate. To achieve this, the relationships between frames in the source domain are extended to ensure that they can generalize to potential target domains while maintaining their discriminative capabilities.

As for UDA methods (which can benefit from unlabeled target data available during training), they can be broadly divided into two categories: discrepancy-based and adversarial-based methods. Discrepancy-based methods minimize a distance metric between the source and target distributions (Xu et al., 2019; Saito et al., 2018; Long et al., 2015). Adversarial-based methods, on the other hand, use adversarial training to align source and target distributions (Deng et al., 2019; Tang & Jia, 2020). Another research direction focuses on incorporating self-supervised learning as an auxiliary task to improve feature learning, as in Bucci et al. (2021).

While the aforementioned approaches have mainly been applied to standard image classification tasks, there has also been a significant amount of research on UDA for video-related tasks, such as action detection (Agarwal et al., 2020), segmentation (Chen et al., 2020), and classification (Chen et al., 2019; Munro & Damen, 2020; Choi et al., 2020a; Jamal et al., 2018; Pan et al., 2020; Song et al., 2021).

In video classification, several methods have been proposed to align the temporal dynamics of the feature space. TA\(^3\)N (Chen et al. (2019) uses a multi-level adversarial framework with temporal relation and attention mechanisms to achieve this goal. TCoN (Pan et al. 2020) aligns feature distributions between source and target domains with a cross-domain co-attention mechanism that focuses on aligning temporal relationship features to increase robustness across domains. In Choi et al. (2020a), the network is trained to solve an auxiliary self-supervised task on source and target data. SAVA (Choi et al. 2020b) addresses the domain adaptation problem by proposing to use clip order prediction as an auxiliary task to be solved in both source and target domains. In addition, Contrastive Learning (CL) methods have also been proposed for UDA in video analysis. For example, CoMix (Sahoo et al. 2021) introduced a new framework for contrastive learning that aims to learn discriminative invariant feature representations.

2.2 Multi-modal Adaptation and Generalization

Several methods have been proposed to exploit the availability of multiple modalities for domain adaptation. They can be divided into three main categories: adversarial approaches, co-training, and contrastive learning based methods.

Adversarial-based approaches, such as MDANN (Qi et al., 2018) and AUDA (Liu et al., 2021), focus on learning discriminative and domain adaptive features under an adversarial objective, showing their effectiveness in cross-domain emotion recognition using audio-visual data and cross-media retrieval using images and text from different domains. MM-SADA (Munro and Damen 2020) is another approach that extends adversarial alignment to a self-supervised task based on modality correspondence.

Co-training methods such as DLMM (Lv et al., 2021) and XM-UDA (Jaritz et al., 2020) exploit the diverse properties of the different modalities by treating the classifiers of the various modalities as a set of teacher/student models trained with a curriculum learning approach. These methods have been applied to tasks such as event recognition using audio-visual data, fatigue detection using EEG signals and facial keypoints, and action recognition using RGB images and optical flow.

Contrastive learning based methods such as STCDA (Song et al., 2021) and the approach described in Kim et al. (2021) exploit the complementarity of different modalities to regularize both cross-modal and cross-domain feature representations. They treat each modality as a view and perform contrastive learning across modalities and domains to align representations between source and target domains in each modality. CIA (Yang et al., 2022) uses cross-modal interaction and generative modelling to align cross-domain representations.

RNA-Net (Planamente et al., 2022) addresses multi-modal video DG by using both audio and RGB features, but recognizes that the simple fusion of multi-modal information may not improve generalizability. To overcome this problem, a cross-modal audio-visual Relative Norm Alignment (RNA) loss is proposed to align the relative feature norms of audio and visual modalities from source domains, resulting in domain-invariant audio-visual features. In this work, we further extend this approach to improve feature transferability across domains in both UDA and DG settings, and address the issues of multi-modal domain shift across different tasks and datasets.

2.3 Norm Alignment

Several works highlighted the existence of a strong correlation between the mean feature norms and the amount of “valuable” information for classification (Zheng et al., 2018; Wang et al., 2017; Ranjan et al., 2017) and the negative impact of different feature norms on multiview clustering approaches (Peng et al., 2019). In particular, the cross-entropy loss has been shown to promote well-separated features with a high norm value (Wang et al., 2017). Starting from this observation, the authors of Xu et al. (2019) show that the main reason behind performance degradation on unseen data is the reduction in feature norms compared to the source domain. This stems from the fact that the supervision on the source domain causes the classifier to rely on domain-specific features that may not be present in the target domain, thus reducing the activations in the representation of the target features and consequently the norms of the target features. To address this problem, Xu et al. (2019) introduced a loss that forces the norms between the two domains to adapt to increasingly larger scalars, resulting in improved transfer between domains.

Similarly, Barbato et al. (2021) proposed a regularization objective that promotes uniform feature norms between source and target representations while also inducing progressively higher norm values. Furthermore, they introduced an inter-class norm alignment objective, based on the observation that classes with higher confidence are associated with larger feature norms, to soften distribution biases towards the most frequent classes, whose higher classification confidence is typically associated with larger feature norms.

Subsequent works have demonstrated the effectiveness of incorporating this regularization term into various approaches to learn domain-invariant features, such as the adversarial distribution adaptation network proposed in Zhou et al. (2020) and the hierarchical transfer network described in Yang et al. (2021).

In this work, we apply the concept of norm alignment to domain adaptation by extending it to a multi-modal setting, where the alignment is performed not only between domains, but also across modalities and classes. This allows us to better handle the complexity of multi-modal data and improve the transferability of features across different domains and modalities.

3 Proposed Method

In the following, we detail the proposed Relative Norm Alignment (RNA) loss, which aims to mitigate the domain shift in MML by aligning the mean feature norms from different modalities (cross-modal alignment) and from different domains (cross-domain alignment), both globally and at class level.

3.1 Intuition and Motivation

Joint training of multi-modal models may result in sub-optimal synergies between the different modalities. This observation has been theoretically demonstrated in Huang et al. (2022), showing that naive joint training prevents efficient learning from all modalities. From an optimization perspective, the modality with better performance contributes to lower joint discriminative loss and dominates the training progress, while smaller gradient updates are propagated through the other modalities, leading to an under-optimized situation in which the dominant modality learn faster than the others (Peng et al., 2022). In turn, the cross entropy loss encourages the network to learn more separable features (Xu et al., 2019), thus increasing their feature norms unevenly. This problem becomes particularly relevant in cross-domain scenarios, where the accuracy drop is further exacerbated by domain shift.

For this reason, in this work we introduce a new loss function based on the mean features norm of the different modalities. This loss promotes balanced learning and synergistic integration of modalities. By addressing the issues of modality imbalances and domain shift, RNA improves the model’s ability to effectively exploit multi-modal information and improve overall performance.

3.2 Setting

Suppose we observe data \(\mathcal {X_S} = \{(x_{s,i},y_{s,i})\}^{n_s}_{i=1}\) from a source distribution \(\mathcal {S}\), where \(n_s\) is the total number of samples, each associated with a label \(y_{s,i}\) from the label space \(\mathcal {Y}_s\). Each sample \(x_{s,i}\) contains multiple modalities, i.e., \(x_{s,i} = \{x_{s,i}^1, \ldots , x_{s,i}^M \}\), where \(x_{s,i}^m\) denotes the mth modality of the ith sample and M is the number of modalities. The target domain \(\mathcal {T}\) comprises \(n_t\) annotated target samples \(\mathcal {X_T}={\{x_{t,i}\}}^{n_t}_{i=1}\), each characterized by the same M modalities of the source samples (i.e., \(x_{t,i} = \{x_{t,i}^1, \ldots , x_{t,i}^M \}\)).

We assume that the distributions of all involved domains are different, i.e., \(\mathcal {D}_{d_1}^j \ne \mathcal {D}_{d_2}^k\), where \(d_1\) and \(d_2\) are the domains (source or target) and j and k represent different modalities on the same domain and the same or different modalities on different domains. We also assume that the label space is shared between sources and targets, i.e., \(\mathcal {Y}_{s} = \mathcal {Y}_{t}\).

3.3 RNA for Domain Adaptation

In the following, without loss of generality, we consider a single-source single-target problem in which two modalities are available. In Sect. 3.5 we show how the approach can be extended to any number of modalities.

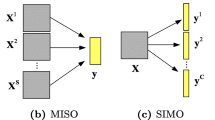

We denote each input sample i as \(x_i=(x^u_i,x^v_i)\), where u and v represent the two modalities (e.g., visual and audio modality). As shown in Fig. 2, each input modality m is fed to a separate features extractor \(F^m\). The features \(f_i^m=F^m(x^m_i)\) are then processed by a classifier \(G^m\), which outputs the score predictions for the mth modality of the ith sample. Finally, the prediction scores from all modalities are combined using a late fusion approach to obtain the final predictions. In UDA settings, the \(F^m\) feature extractors are shared between source and target.

Labeled source and unlabeled target samples from the modalities u (e.g., visual) and v (e.g., audio) are fed to the respective feature extractors. \(\mathcal {L}_\textit{RNA}\) aims to balance the relative feature norms of the two modalities, through a combination of the (domain-specific) cross-modal components (\(\mathcal {L}_{\textit{RNA}}^\textit{g}\) and \(\mathcal {L}_{\textit{RNA}}^\textit{c}\)) and the cross-domain ones (\(\mathcal {L}_{\textit{RNA}}^\textit{mod}\)) in each u and v modality. In DG, only the components computed on the source are used

As previously mentioned, in this work we extend the approach introduced in Planamente et al. (2022), which proposes to train the entire architecture by minimizing the following loss:

where \( \mathcal {L}_C \) is the standard cross-entropy loss on source data. The latter aims at globally minimizing the difference between the feature norms of the two modalities and is defined as:

where \(h(x^m_i)=({\Vert { \cdot }\Vert }_2 \circ F^m)(x^m_i)\) is the \(L_2\)-norm of mth modality features of the ith sample, \({{\,\mathrm{\mathbb {E}}\,}}[h(X^m)] = 1 / {B} \sum _{x^m_i \in \mathcal {X}^m}h(x^m_i)\) is the average norm for the mth modality of the B samples composing the batch, and \(\lambda _g\) weights \(\mathcal {L}_{\textit{RNA}}^\textit{g}\). To ensure that all features have the same dimension, we project them to a common shape using a fully connected layer when this condition is not met.

In DG, the RNA objective is defined as \(\mathcal {L}_\textit{RNA}= \mathcal {L}_{\textit{RNA}}^\textit{g}(\mathcal {S})\) while in UDA \(\mathcal {L}_\textit{RNA}= \mathcal {L}_{\textit{RNA}}^\textit{g}(\mathcal {S}) + \mathcal {L}_{\textit{RNA}}^\textit{g}(\mathcal {T})\), where \(\mathcal {L}_{\textit{RNA}}^\textit{g}(\mathcal {S})\) and \(\mathcal {L}_{\textit{RNA}}^\textit{g}(\mathcal {T})\) are the loss in Eq. 1 applied to the source and target domains, respectively.

The dividend/divisor structure of \(\mathcal {L}_{\textit{RNA}}^\textit{g}\) promotes a relative adjustment between the global norm of the two modalities aimed at achieving an optimal equilibrium between the two. The square of the difference forces the network to take larger steps when the ratio of the two modality norms is too different, leading to faster convergence. We note that Eq. 1 redefines the loss presented in Planamente et al. (2022) to ensure a symmetric form (i.e., \(\mathcal {L}_{\textit{RNA}}^\textit{g}(u,v) = \mathcal {L}_{\textit{RNA}}^\textit{g}(v,u)\)).

3.4 RNA Extensions

While the results in Planamente et al. (2022) show the effectiveness of \(\mathcal {L}_{\textit{RNA}}^\textit{g}\) in reducing domain shift, the formulation in Eq. 1 has two major limitations. First, the global cross-modal alignment performed by \(\mathcal {L}_{\textit{RNA}}^\textit{g}\) may also lead to unbalanced norms between modalities at the class level, which in turn tends to favor one modality over the others when making decisions about particular classes. Second, in UDA, the alignment is performed separately for each domain. As a result, the average feature norms may still show large differences between source and target domains. These differences can be attributed to the presence of domain-specific features that originate from training in the source domain and may have low activations in the target domain (Xu et al., 2019; Barbato et al., 2021), affecting overall accuracy.

To address both problems, we propose the following extensions to the RNA formulation. First, we introduce an intra-domain class constraint \(\mathcal {L}_{\textit{RNA}}^\textit{c}\) to address the cross-modal norm imbalance at class level, defined as follows:

where \(\lambda _c\) weights the loss, and \({{\,\mathrm{\mathbb {E}}\,}}[h(X_c^m)]\) denotes the average norm of the features of modality m for samples of class c, with C the total number of classes. We note that in computing \(\mathcal {L}_{\textit{RNA}}^\textit{c}\) for the target, the pseudo-labels are used to assign the target samples to classes.

The second extension of \(\mathcal {L}_\textit{RNA}\) addresses the problem of different norms in different domains by re-balancing the average and per-class norms of features in each modality across domains, so that the network can focus on features that are more transferable between domains (Xu et al., 2019). To this end, we include the following term in the RNA formulation:

where \(m \in \{u,v\}\). Combining the three components we have previously defined, the extended RNA formulation in DG settings becomes:

and the one for UDA setting is:

The individual contribution of the three losses is exemplified in Fig. 3. \(\mathcal {L}_{\textit{RNA}}^\textit{g}\) globally aligns the norms of modalities for each domain. \(\mathcal {L}_{\textit{RNA}}^\textit{c}\) aligns the norms of modalities per class for each domain. \(\mathcal {L}_{\textit{RNA}}^\textit{mod}\) aligns the norms between domains, separately for each modality. Taken together, the three losses act synergistically. In DG, \(\mathcal {L}_{\textit{RNA}}^\textit{c}\) supports the work of \(\mathcal {L}_{\textit{RNA}}^\textit{g}\), which in turn facilitates the alignment of norms per class to a common value. The addition of \(\mathcal {L}_{\textit{RNA}}^\textit{mod}\) in UDA helps the other two components to ensure that the average and per-class norms of the different modalities are also aligned between source and target.

Individual effects of \(\mathcal {L}_\textit{RNA}\) components on feature norms. Each diagram shows norms per class for a single modality and domain (u or v for source or target). 1st row: \(\mathcal {L}_{\textit{RNA}}^\textit{g}\) minimizes overall average norms (larger bars on the right) of u and v modalities. 2nd row: \(\mathcal {L}_{\textit{RNA}}^\textit{c}\) achieves balanced norms at class level. 3rd row: \(\mathcal {L}_{\textit{RNA}}^\textit{mod}\) balances class and average norms of the same modality across domains. The diagrams show the norms before (left) and after (right) applying the corresponding \(\mathcal {L}_\textit{RNA}\) component

3.5 Extension to Multiple Modalities

The RNA objective in Eqs. 2 and 3 can be trivially extended to more than two modalities. In DG, the loss can be rewritten as:

where i and j span the M modalities. Similarly, the UDA loss becomes:

where \(\mathcal {L}_\textit{RNA}(\mathcal {S})\) and \(\mathcal {L}_\textit{RNA}(\mathcal {T})\) are the loss in Eq. 4 for the source and target domains, respectively.

3.6 Learning Objective in UDA

In addition to the loss defined in Eq. 3, to further improve the domain invariant properties of the features (and thus reduce the divergence between domains), we apply an adversarial domain alignment (Ganin & Lempitsky, 2015; Wang et al., 2019). We follow the recipe used in other recent UDA work (Chen et al., 2019; Munro & Damen, 2020; Wei et al., 2022; Jamal et al., 2018), and introduce a classifier that predicts whether features are from the source or the target. This classifier is directly connected to the feature extractors via a Gradient Reversal Layer (GRL) (Ganin & Lempitsky, 2015). The domain classification loss \(\mathcal {L}_d\) is then multiplied by a weight \(\lambda _d\) and added to the total loss.

The loss we have introduced so far (i.e., the combination of \(\mathcal {L}_\textit{RNA}\) and \(\mathcal {L}_d\)) aims to improve the informative and domain invariant properties of the embeddings of the different modalities. However, these two loss components affect the feature extractors \(F^m\) and are not back-propagated through the classifier, which therefore only sees the source data and thus has no way to benefit from the target data. The result is that during training, the classifier focuses only on how best to integrate the multi-modal features to improve accuracy in the source domain, and completely ignores the classification uncertainty on target.

One approach commonly used in UDA to improve class discrimination in the target domain is to use a mutual information criterion (Bridle et al., 1991) applied to the target data that not only minimizes the prediction uncertainty, but also promotes a uniform distribution of samples between classes. This is achieved through an Information Maximization (IM) loss defined as the difference between the average entropy of the outputs and the entropy of the average output:

where C is the total number of classes, \(p_c\) is the posterior probability for class c, and \(\bar{p}_c\) is the mean output score for the current batch.

When we put all the pieces together, we train the model in the UDA setting to minimize the following loss:

where \(\mathcal {L}_\textit{RNA}\) is from Eq. 3 and \(\lambda _\textit{IM}\) is the IM loss weight.

4 Experiments

In this section, we aim to verify the effectiveness of our proposed approach through an empirical evaluation on different multi-modal benchmarks corresponding to a variety of datasets and tasks. These range from action classification (on EPIC-Kitchens-55 Damen et al., 2018, EPIC-Kitchens-100 Damen et al., 2022, and UCF-HMDB Chen et al., 2019) to object recognition (on ROD Lai et al., 2011) and fatigue classification (on CogBeacon Papakostas et al., 2019).

In the analysis, the results are obtained and presented as follows. When a dataset includes different domains, we optimized the models using the average accuracy over all the domain splits reported in the respective experimental protocol. Results were obtained using the same set of hyperparameters for all splits. Therefore, in the following, we excluded from evaluation the methods for which it was obvious (either from the description or from the available source code) that the hyperparameters were optimized for each split.

The rest of the section is organized as follows. We begin by introducing the experimental benchmarks used in our work (Sect. 4.1). The ablation study is given in Sect. 4.2, and the results are presented from Sects. 4.3–4.7.

4.1 Datasets

4.1.1 EPIC-Kitchens-55 (EK55)

This is a large-scale egocentric video benchmark recorded by 32 participants in their own kitchens while performing unscripted activities (Damen et al., 2018). RGB, Audio and Flow data are available in the dataset. To validate our approach, we use the experimental protocol defined in Munro and Damen (2020). According to this protocol, (i) we only use the three kitchens with the largest amount of annotated samples (hereafter referred to as D1, D2, and D3) and (ii) we consider only verb classification task and a subset of eight labels. The challenges lie not only in the large domain shift that exists between the different kitchens, but also in the unbalanced distribution of classes within and between domains.

4.1.2 EPIC-Kitchens-100 (EK100)

EK100 (Damen et al., 2022) extends EK55 to 45 kitchens, with almost 100 h of video and 89,977 annotated action segments. The UDA setting of EK100 is defined as two domains, Source (containing labeled training data from 16 participants collected in 2018) and Target (i.e., unlabeled videos from the same 16 participants in the same kitchens but collected 2 years later). The segments are annotated with 97 nouns and 300 verbs corresponding to 3369 unique action classes, largely unbalanced and characterised by a long-tailed distribution.

4.1.3 UCF-HMDB

The UCF-HMDB dataset (Chen et al., 2019) was published to study video domain adaptation in third-person action classification. The dataset consists of 3209 videos from the original UCF101 (Soomro et al., 2012) and HMDB51 (Kuehne et al., 2011) datasets, which define the source and target domains used in DG e UDA. The videos are annotated with 12 classes.

4.1.4 ROD

ROD (Lai et al., 2011) is an image-based dataset developed for object recognition tasks. ROD consists of 41,877 samples of 300 everyday objects grouped into 51 categories and captured by an RGB-D camera. ROD is coupled with SynROD (Loghmani et al., 2020), which contains photorealistic renderings from 3D models of the same categories as ROD, and N-ROD (Cannici et al., 2021), which extends both datasets to event modality by introducing real event recordings obtained from ROD samples, as well as simulated events extracted from SynROD’s synthetic images. Following the settings proposed in Loghmani et al. (2020), Cannici et al. (2021), this dataset allows the exploration of domain shift between synthetic (source domain) and real data (target domain) in a multi-modal object classification task using RGB-Depth and RGB-Event.

4.1.5 CogBeacon

CogBeacon is a multi-modal dataset collected to analyze the effects of cognitive fatigue on human performance (Papakostas et al., 2019). Volunteers completed three different computerized versions (V1, V2, and V3) of the Wisconsin Card Sorting Test, a test widely used in experimental and clinical psychology (Lange et al., 2018). Experimental sessions are divided into rounds in which subjects can signal their cognitive fatigue (i.e., sample classes are “fatigue” and “no-fatigue”, with a strong class imbalance towards fatigue in split V3). Two modalities are available: (i) EEG data, and (ii) user’s movements and facial expressions (recorded by capturing 68 facial keypoints and the face bounding box).

4.2 Ablation Studies

In this section, we present the ablation studies of our approach, all of which have been performed using EK100, as this is the largest and most diverse of all the benchmarks used in our work, thus increasing the statistical significance of these studies.

4.2.1 Experimental Settings

For the sake of clarity, we introduce here the details of the experimental settings used to obtain the results discussed in this Section and in Sect. 4.3.

Evaluation Protocol. We follow the experimental setup for UDA proposed in Damen et al. (2022), where the fine-grained nature of the dataset annotations combined with the large domain and temporal shifts between the source and target domains make the adaptation task very challenging. All the experiments in this section (and in Sect. 4.3) use all three modalities (RGB, Audio, and Flow) available in the dataset. The setting includes a validation split, for which labels are available, and a non-annotated test split. The results of this work are reported on the former, although previous work has also demonstrated the effectiveness of RNA on test data as well (Plizzari et al., 2021; Planamente et al., 2022). Performance is evaluated in terms of Top-1 and Top-5 accuracy of verb and noun predictions and on the combination of the two predictions (action).

Input. RGB, Flow and Audio are processed following Kazakos et al. (2019) by uniformly sampling 25 frames and 1.28 s audio segments along the action. During both training and inference, five of these segments are selected for each modality and fed to the network.

Verb feature norms across different modalities and settings (DG and UDA). Light ( ) and dark colors (

) and dark colors ( ) denote source and target validation domains, respectively. a In the “Source Only” setting, different modalities and domains result in unbalanced feature norms. b \(\mathcal {L}_\textit{RNA}\) in DG improves the alignment between different modalities, but leaves a gap between the source and target domains. c Finally, the contribution of \(\mathcal {L}^{mod}\) in \(\mathcal {L}_\textit{RNA}\) reduces this gap in UDA, resulting in more consistent feature norms across different modalities and domains

) denote source and target validation domains, respectively. a In the “Source Only” setting, different modalities and domains result in unbalanced feature norms. b \(\mathcal {L}_\textit{RNA}\) in DG improves the alignment between different modalities, but leaves a gap between the source and target domains. c Finally, the contribution of \(\mathcal {L}^{mod}\) in \(\mathcal {L}_\textit{RNA}\) reduces this gap in UDA, resulting in more consistent feature norms across different modalities and domains

Implementation Details. Frame-level features \(f_m \in \mathbb {R}^{25 \times 1024}\) from each modality m are extracted using a TBN architecture (Kazakos et al., 2019) pre-trained on Kinetics (Zisserman et al. 2017) and fine-tuned on the source domain, following the recipe from Damen et al. (2022). Our model is trained on pre-extracted features using this backbone. Five frame features for each segment are uniformly selected and fed to a linear layer, followed by a ReLU activation and dropout with probability 0.5. Frame features are temporally aggregated using a TRN (Zhou et al., 2018) module to obtain action-level features \(f'_m \in \mathbb {R}^{1024}\).Footnote 1 To account for the multi-task nature of this setting, we map the features into two components \(f'_{m,v} ,\; f'_{m,n} \in \mathbb {R}^{256}\) using a single linear layer, which we call verb and noun features. These are fed to two separate classifiers to obtain the modality logits for the verb (\(y_{m,v}\)) and the noun (\(y_{m,n}\)). Since this benchmark includes a single source and a single target domain, the network is trained for action recognition by applying cross-entropy loss to the sum of per-modality logits. We extend RNA to work in this multi-task context by applying the alignment losses separately to the verb and noun features, immediately before the final classifier. Applying the RNA losses to these features ensures that the alignment effect provided by RNA is as close as possible to the classifier, which is heavily influenced by the feature norm values. The network is trained for 30 epochs using a batch size of 128 samples and SGD optimizer with momentum 0.9 and weight decay \(10^{-4}\). The learning rate is initially set to 0.003 and decreased by a factor of 10 after epochs 10 and 20.

4.2.2 Effects of \(\mathcal {L}_\textit{RNA}\) on Norm Alignment

We begin by discussing the contribution of the components of the proposed \(\mathcal {L}_\textit{RNA}\) loss. Its goal is to mitigate domain shift issues by balancing the mean feature norms of the different modalities globally (\(\mathcal {L}_{\textit{RNA}}^\textit{g}\)), at the class level (\(\mathcal {L}_{\textit{RNA}}^\textit{c}\)), and across domains (\(\mathcal {L}_{\textit{RNA}}^\textit{mod}\)). In the following, we present the results of experiments in which these components are introduced incrementally.

Global alignment: a qualitative analysis. In Fig. 4 we report the mean feature norms for each modality. For simplicity, we will base our discussion on the verb feature norms, since the same observations apply to nouns. In particular, in Fig. 4 we show how the average norms of verb features for different modalities change on DG and UDA with the contribution of \(\mathcal {L}_\textit{RNA}\).

A preliminary qualitative analysis of the data presented in Fig. 4 shows that \(\mathcal {L}_\textit{RNA}\) in DG (Fig. 4b) leads to a better alignment of the average feature norms of the different modalities and to an overall increase of their values with respect to the “Source Only” (Fig. 4a). Recall that the norm formulation in Eq. 2 attempts to solve the alignment task at the batch level, and thus does not guarantee an exact alignment of all average norms. In Fig. 4b, we can also observe the increase in Flow norm in DG compared to “Source Only” (Fig. 4a). Previous studies have shown that Flow is the modality least affected by domain shift in egocentric action recognition (Munro & Damen, 2020), potentially allowing for greater generalization. This could explain why, in DG, the network pays more attention to this modality.

In addition, the availability of target data in UDA enables \(\mathcal {L}_\textit{RNA}\) to improve the balance between the norms of the different modalities, so that the model can better use the contributions of each modality to make its final decisions. This improved mutual contribution between modalities (reflected in the increased accuracy reported in Table 2) may explain the (relatively) lower norm of Flow in UDA, which is balanced by increased norms of (i.e., attention to) the other two modalities (RGB and Audio).

Global alignment: a quantitative analysis. To facilitate the assessment of the balancing effect of \(\mathcal {L}_\textit{RNA}\) between “Source Only”, DG and UDA norms, we also introduce a quantitative metric. We use the coefficient of variation (CV) as a measure of the norm imbalance, with lower CVs indicating more balanced sets of values. CV is defined as follows:

where \(\sigma \) is the standard deviation and \(\mu \) is the mean of the observed norm values. The CV values obtained are summarized in Table 1, where, for better clarity, we also report the percentage of improvement (%) with respect to the CV values of the “Source Only”.

As for the average feature norms in DG (Fig. 4b), we have a 40.4% decrease in CV compared to the “Source Only”. It is interesting to note that the application of \(\mathcal {L}_{\textit{RNA}}^\textit{g}\) alone only contributes to a 29.3% reduction of CV, highlighting the (positive) combined effect of \(\mathcal {L}_{\textit{RNA}}^\textit{g}\) and \(\mathcal {L}_{\textit{RNA}}^\textit{c}\). For the target domain in DG, we can observe that the imbalance between modalities increases (instead of decreasing) by 28.7%, which highlights the need for an alignment loss that works not only between modalities but also between domains.

In UDA, the ability to use the target data contributes to a larger reduction in CV over the “Source Only” on both source (by 61.0%) and target domains (22.7%). When we consider the total imbalance (i.e., we calculate CV considering all source and target values together), CV shows an improvement of 20.1% in DG and of 50.7% in UDA. These values are reflected in progressively greater accuracy in the DG and UDA settings compared to the “Source Only” settings (Table 2).

Class alignment. For assessing the contribution of \(\mathcal {L}_{\textit{RNA}}^\textit{c}\), we show in Fig. 5 the evolution of the verb norms of the ten most frequent and the least frequent classes in the DG settings. In the “Source Only” (Fig. 5a) the per-class mean features norms are largely unbalanced. While the exclusive use of \(\mathcal {L}_{\textit{RNA}}^\textit{g}\) contributes to a better global balance of the modality norms, it has a small effect on the balancing of the norms per-class (Fig. 5b). On the contrary, when \(\mathcal {L}_{\textit{RNA}}^\textit{c}\) is also minimized, we can observe a significant improvement of their alignment (Fig. 5c).

Feature norms of the top 10 most and least common classes from the target validation split of EPIC-Kitchens-100. While \(\mathcal {L}_{RNA}^g\) improves the alignment of different modalities, there is still an imbalance between classes. The addition of the per-class variant of RNA greatly improves this alignment, resulting in more uniform feature norms across different classes

These qualitative observations are also reflected in the CV metric computed on the class norms. Indeed, the use of \(\mathcal {L}_{\textit{RNA}}^\textit{g}\) leads to a minor improvement in “Source Only” CV (37.8% and 19.5%, respectively, for source and target features) compared to that obtained by the combination of \(\mathcal {L}_{\textit{RNA}}^\textit{g}\) and \(\mathcal {L}_{\textit{RNA}}^\textit{c}\) (62.5% and 49.1%).

Overall effect on feature norms. To give further insight into the impact of \(\mathcal {L}_\textit{RNA}\), we show in Fig. 6 a scatter plot of the validation set in DG. This diagram is obtained by plotting the RGB, Flow and Audio feature norms of each sample in a 3D space whose axes are the norms of the three modalities. To make the plot easier to read, rather than using a single 3D representation, we present it as three separate sections along the coordinate planes defined by the feature pairs. The goal of these visualizations is to illustrate the changes in the shape of the resulting manifold.

It can be seen that the “Source Only” features are widely distributed and correspond to a manifold with a largely irregular shape. This is due to misalignment between the feature norms of the different modalities. When the \(\mathcal {L}_\textit{RNA}\) loss is applied, the manifold becomes more spherical and compact, reflecting the improvement in the alignment of the modality norms. It is also possible to note an increase in the average feature norm values that moves the manifold towards the upper right region of the 2D dimensional plots.

4.2.3 Effect of Loss Components

Table 2 details the contribution of the different loss components to the final performance in both DG and UDA settings. For better evaluation, we also show the average improvement in Top-1 accuracy of verb, noun, and action with respect to “Source Only” (\(\Delta \) Acc.). The combination of global and class components in DG (\(\mathcal {L}_{\textit{RNA}}^\textit{g}+ \mathcal {L}_{\textit{RNA}}^\textit{c}\), \(\Delta \) Acc. = 2.20) improves accuracy over \(\mathcal {L}_{\textit{RNA}}^\textit{g}\) and \(\mathcal {L}_{\textit{RNA}}^\textit{c}\) alone (1.36 and 1.94, respectively), showing that the combination of the two components effectively reduces domain shift. Indeed, while \(\mathcal {L}_{\textit{RNA}}^\textit{g}\) aligns modalities globally, possibly penalizing minority classes in unbalanced distributions, \(\mathcal {L}_{\textit{RNA}}^\textit{c}\) enforces alignment for each class individually. The ability to use target data in UDA boosts the accuracy improvement to 1.78 for \(\mathcal {L}_{\textit{RNA}}^\textit{g}\) and 2.28 for \(\mathcal {L}_{\textit{RNA}}^\textit{g}\) + \(\mathcal {L}_{\textit{RNA}}^\textit{c}\)), with \(\mathcal {L}_{\textit{RNA}}^\textit{mod}\) further contributing to reach an average improvement of 2.48.

Comparison of the feature norms before (top) and after (bottom) application of \(\mathcal {L}_{RNA}^g\) and \(\mathcal {L}_{RNA}^c\). The dots represent the samples in the validation dataset. The color bar on the right represents increasing density values. The original features, i.e. “Source Only”, show a wide range of values and an irregular shape, reflecting the misalignment between the features norms of the two modalities. The RNA loss re-balances the two, as evidenced by the more globular distribution while also shifting the average norms towards higher values

As explained in Sect. 3.6, the learning objective in the UDA setting also benefits from two other losses, namely the adversarial domain loss \(\mathcal {L}_d\), which aims to improve the transferability of features across domains, and the Information Maximization loss \(\mathcal {L}_{\textit{IM}}\), which aims to minimize the classification uncertainty between target classes. \(\mathcal {L}_d\) provides a stronger improvement in this particular case (2.71), while \(\mathcal {L}_{\textit{IM}}\) has a minimal effect on the overall accuracy. However, we note that the mutual contribution of the latter two terms (\(\mathcal {L}_d\) and \(\mathcal {L}_{\textit{IM}}\)) also depends on the task and benchmark considered, as other experiments show more pronounced benefits for \(\mathcal {L}_{\textit{IM}}\).

4.2.4 Multi-modal Adaptation Capabilities

Another interesting question is whether the proposed method allows effective integration of multiple modalities in the final decision and whether the use of multiple modalities also helps to improve the domain adaptation capabilities of the model.

Table 3 summarizes the results obtained comparing experiments with modality pairs and with all three modalities. It shows that the latter not only outperforms all other modality pairs in terms of results, but also shows better generalization properties, showing an improved delta compared to its “Source only” (2.73) compared to 2.06, the best two-modality improvement obtained with Flow + Audio. These results suggest that our method is effective in combining the different modalities to improve the overall accuracy and the generalizability of the features obtained.

4.2.5 Modality Drop

In Table 4, we present an experiment to investigate the impact of modality imbalance during training. In particular, we investigate the scenario in which a modality is “unexpectedly” lost at inference time, without a training strategy accounting for this possibility. This scenario, also presented in Gong et al. (2023), is relevant because there may be constraints at inference time, such as power, computational or privacy constraints, or an anomaly of an input device that prevent the use of all modalities.

The basic idea of our approach is to help the model learn equally from the different modalities by integrating their contribution. While it is clear that the unexpected loss leads to a drop in accuracy, we can also expect that the effect of RNA is to make the model more robust to such a modality drop than the “Source Only” model, since the latter is less able to exploit the synergies between modalities and, thus, more vulnerable to dominant modalities. This expectation is confirmed by the results in Table 4, which are consistent with the observations of Gong et al. (2023), and show different but consistent effects on “Source Only” when different modalities are dropped at test time (i.e., large accuracy drops compared to the results in Table 2). At the same time, these results show that the balancing effect of RNA can potentially help the model reduce the impact of the lost modality, as it can take advantage of a better mutual contribution from the remaining ones.

4.3 Experiments on EK100

Unlike the following benchmarks, where we describe the experimental protocol, inputs, implementation details, and baselines used to evaluate the results, in this section we present only the baselines for EK100, as the previous elements were introduced in Sect. 4.2.1.

4.3.1 Baselines

We compare our method with MM-SADA (Munro & Damen, 2020), TA\(^3\)N (Chen et al., 2019), and CIA (Yang et al., 2022). As for MM-SADA, the original approach works only with RGB and Flow modalities. Therefore, to integrate the Audio modality, we use two separate branches, one for RGB-Flow and the other for RGB-Audio modalities (as in Planamente et al. (2022)). The adversarial branch is applied individually to each modality.

4.3.2 Results

Results are given as Top-1 and Top-5 accuracy for verb, noun, and action (Table 5). For each baseline, we also report the relative “Source Only”, average improvement in terms of Top-1 accuracy. For the DG setting, we compare our approach to two alternative methods. The first is MM-SADA (SS), a modified version of MM-SADA, which applies only the original self-supervised alignment task to the source domain modalities and does not consider the adversarial alignment component of the method (which requires target data). The second approach is Gradient Blending (GB), which attempts to find an optimal mixture of modalities according to their overfitting behavior. Such a mixture is achieved by combining a cross-entropy loss for each modality and a loss for their fusion with appropriate weights.Footnote 2

Analyzing the accuracy across different labels, we observe that GB performs best, while our approach ranks as the runner-up and MM-SADA (SS) lags slightly behind. However, when considering the improvements relative to the “Source Only” baseline, our method shows higher delta accuracy compared to GB. This result seems to indicate that our method makes a more significant contribution to reducing the domain shift. We also find the approach proposed in Wang et al. (2020) interesting as it shares similarities with our method in terms of improving the balance between modalities for better classification accuracy. To investigate this further, we perform additional experiments by applying our method to the “Source Only” results obtained from Gradient Blending, i.e., using multiple classification losses but without reweighting them. These additional experiments are indicated with a \(^\dagger \) symbol. The results shown in Table 5 are promising. Our method achieves the best action accuracy and is competitive with GB (and also comparable with CIA, the state-of-the-art in UDA). It is important to note that our standard solution addresses the alignment problem with an adaptive approach that, unlike GB, is independent of the model and dataset used and requires only two hyperparameters: \(\lambda _g\) and \(\lambda _c\).

In the UDA experiments, we observe that although our method ranks second in terms of action accuracy, it has better delta accuracy improvements compared to all other competitors. Furthermore, the results on other evaluation metrics are comparable to those of the other proposed baselines. It is noteworthy that a significant portion of the improvements can be observed in the DG phase, where the target domain is not accessed. This observation highlights the generalization advantage of RNA in coping with domain shifts.

4.4 Experiments on EK55

4.4.1 Evaluation Protocol

We adopt the experimental protocol of Munro and Damen (2020) and evaluate performance in a single-source setting (\(D_i \rightarrow D_j\)) on the three domains described in Sect. 4.1. Despite the small size of this setting compared to EK100, it remains a highly valued and challenging benchmark in the field of egocentric action recognition due to the large domain shift between these domains and the unbalanced label distribution. In the experiments, we restrict our analysis to the RGB+Flow and RGB+Audio modality combinations, which are the ones recent work in the literature focus on.

4.4.2 Baselines

We compare our results with several state-of-the-art UDA methods. The first group (GRL Ganin et al., 2016, MMD Long et al., 2015, AdaBN Li et al., 2018, and MCD Saito et al., 2018) includes approaches originally developed as image-based methods and later adapted to work with video inputs. The second group includes more recent methods such as MM-SADA (Munro & Damen, 2020), the contrastive-based methods proposed in Kim et al. (2021) and Song et al. (2021) (STCDA), and the recently published CIA (Yang et al., 2022). In our comparison, we use the results reported in the original paper for each baseline.

4.4.3 Input

As for the input, different sampling strategies are used to allow a fair comparison with the existing baselines. When using dense sampling, a clip of 16 consecutive frames is randomly sampled from the video. When using uniform sampling, 16 frames evenly distributed over the video are sampled. At test time, the same sampling strategy is used as in training, except that five clips are fed into the network instead of one, as suggested in Wang et al. (2016), and the predictions are averaged. Following the experimental setting from Munro and Damen (2020), during training, random clipping, scale shifts, and horizontal flipping are used for data augmentation, while in testing, only central cropping is applied. As for the aural information, we follow Kazakos et al. (2019) and convert the audio track into a \(256\times 256\) matrix representing the log spectrogram of the signal. The audio clip is first extracted from the video and sampled at 24 kHz. Then, the Short-Time Fourier Transform (STFT) is calculated with a window length of 10 ms, a skip size of 5 ms, and 256 frequency bands. For the Flow input, we use the same sampling strategy as for RGB.

4.4.4 Implementation Details

Both the RGB and Flow streams use an I3D model (Carreira & Zisserman, 2017) pretrained on Kinetics (Zisserman et al., 2017), following the experimental setting from Munro and Damen (2020). Following Kazakos et al. (2019), the audio feature extractor uses the BN-Inception model (Ioffe & Szegedy, 2015) pre-trained on ImageNet (Deng et al., 2009). The feature extraction backbones are trained end-to-end. For each modality m, features have shape \(f_m \in \mathbb {R}^{1024}\). Logits are computed separately for each modality using a linear layer and summed. We train the network for 5000 iterations using the SGD optimizer using momentum 0.9 and weight decay \(10^{-7}\). The learning rate for RGB and Flow is set to 0.001 and reduced to \(2 \times 10^{-4}\) at step 3000, while for Audio the learning rate is set to 0.001 and decremented by a factor of 10 at steps \(\{1000,2000,3000\}\). The batch size is set to 128.

4.4.5 Results

We begin by discussing the UDA results, which are summarized in Table 6. Given the relevance of sampling strategies in the video context, especially for the RGB+Flow combination (Chen et al., 2021), we divide the results into different sections based on the sampling used for each modality: dense (D) or uniform (U). Most baselines use dense sampling (D–D), while CIA is the only method that uses uniform sampling (U–U) for both modalities. In both cases, we compare the baselines to our UDA method using the same sampling strategy.

The results show that CIA with uniform sampling outperforms the dense sampling-based methods. This observation confirms the findings in Chen et al. (2021), which emphasizes that uniform sampling usually allows the network to learn more information. We also observe that our UDA approach achieves state-of-the-art results for both dense and uniform samplings.

To further confirm the importance of sampling, we conduct experiments with a mixed sampling strategy (i.e., D for RGB and U for Flow, Table 6). Since none of the baselines use this sampling, we only present our results for the “Source Only”, DG, and UDA methods. We note that the “Source Only” method already achieves remarkable results (up to 3% better than our method with uniform sampling), which are further improved in both DG and UDA (despite the smaller difference with the “Source Only” compared to other samplings). One possible explanation for the improved performance with mixed sampling is that it allows for better exploitation of the distinct properties of the two modalities. Dense sampling facilitates a more accurate characterization of static appearance information (RGB) over a short temporal range, while uniform sampling enables the use of a wider temporal range to capture the dynamic information conveyed by Flow.

When combining RGB and Audio modalities, our UDA approach consistently achieves the best results (7% improvement over “Source Only” and 1% improvement over the state-of-the-art method). This result confirms the potential of our method, even when dealing with the fusion of heterogeneous modalities.

Finally, we discuss the results we obtained in DG for both modality combinations. For RGB+Flow, we report the results obtained with the mixed sampling strategy (D–U), i.e., the sampling that yields the best performance. In the DG setting, our method improves the “Source Only” by up to 2% and 5% for RGB+Flow and RGB+Audio, respectively. Furthermore, the performance obtained in the DG setting is comparable to that of the UDA setting, with a deviation of \(-\) 1.01% and \(-\) 2.55% for RGB+Flow and RGB+Audio, respectively. Although no other DG methods are available for comparison in this context, these results show that the DG setting can compete with several existing UDA methods that benefit from target data during training.

4.5 Experiments on UCF-HMDB

4.5.1 Evaluation Protocol

We follow the same experimental setting proposed in Zhou et al. (2018), which includes the U \(\rightarrow \) H and H \(\rightarrow \) U shifts in a multi-modal setting that includes the RGB and Flow modalities available with this dataset.

4.5.2 Input

For both RGB and Flow, the training input consists of 16 consecutive frames with resolution \(224 \times 224\) pixels. In testing, we use five clips uniformly sampled across the video and average the predictions. We use the same data augmentations as described in Sect. 4.4 for EK55.

4.5.3 Baselines

We compare our approach with various multi-modal UDA approaches (MM-SADA Munro & Damen, 2020, STCDA Song et al., 2021, the method of Kim et al., 2021 and CIA Yang et al., 2022). To allow a fair comparison, all multi-modal results are based on the same backbones and the same pre-training.

4.5.4 Implementation Details

The backbone for both RGB and Flow is an I3D pre-trained on Kinetics (Zisserman et al., 2017). The learning rate is set to 0.01 and we train the model for 20 epochs with batch size of 32. We use SGD as the optimizer with a momentum of 0.9 and a weight decay of \(10^{-7}\).

4.5.5 Results

In Table 7, we present the classification accuracy of our method and several baselines. To ensure a fair comparison, we report the results of the “Source Only” model from the original paper for all baselines.

In absolute terms, our approach achieves very competitive performance under the UDA setting and is the second best of all methods in terms of accuracy, outperforming all baselines except CIA. However, the better “Source Only” result of CIA, which was difficult to reproduce in our experiments, should be emphasized. This result could be attributed to its particular architectural design choices and the integration of spatial consensus between RGB and Flow modalities. However, it should be noted that the method proposed by CIA cannot be easily extended to other modalities, as shown by their work on integrating the Audio modality in EK100 (Yang et al., 2022). In contrast, our approach provides a more versatile and adaptable solution that can be applied to different modalities without significant architectural changes.

Furthermore, our approach shows remarkable domain shift reduction capabilities. When we compare the performance gains of our method with other baselines, we observe that our approach achieves improvements over the “Source Only” baseline that are comparable to those obtained by other methods. For example, MM-SADA, STCDA, Kim et al. (2021), and CIA show gains of 0.9%, 1.3%, 2% and 1.9%, respectively, while our approach achieves a gain of 1.5%, with a maximum improvement of up to 3% in the U \(\rightarrow \) H shift. This highlights the effectiveness of our method in adapting to target domains and mitigating the negative effects of domain shift.

4.6 Experiments on ROD

4.6.1 Evaluation Protocol

We follow the experimental protocol in Loghmani et al. (2020) for RGB-depth modalities, and the one in Cannici et al. (2021) for RGB-event. The studied shift is a synthetic-to-real domain shift, with synthetic source data and real target data (SynROD \(\rightarrow \) ROD). RGB and depth modality in the synthetic domain are rendered, while events in the synthetic domain are simulated using ESIM (Rebecq et al., 2018).

4.6.2 Baselines

We compare our results with standard image-based UDA methods, namely GRL (Ganin et al., 2016), MMD (Long et al., 2015), SAFN (Xu et al., 2019) and Entropy (Wu et al., 2021), which we extend to operate on multiple modalities. We also compare with Relative Rotation (Loghmani et al., 2020), a method specifically designed to operate on multiple modalities. It consists in a self-supervised task asking the network to predict the relative rotation between two modalities of the same input sample, e.g., an RGB and a depth image.

4.6.3 Input

Event representations, depth images and RGB images are pre-processed and augmented during training following the procedure in Loghmani et al. (2020). Depth images are colorized with surface normal encoding, as in Aakerberg et al. (2017). Input images are normalized with the same mean and variance used for the ImageNet pre-training, while we kept event representations un-normalized as this provided better performance. We use voxelgrid representation for events with 9 bins as in Cannici et al. (2021).

4.6.4 Implementation Details

All backbones are implemented using ResNet-18 (He et al., 2016), pre-trained on ImageNet (Deng et al., 2009). All the parameters of the network, including the pre-trained parameters, are updated during training, as in Loghmani et al. (2020). We train all network configurations using SGD as optimizer, batch size 64 and weight decay 0.003.

4.6.5 Results

Table 8 provides a comparison of our method with different baselines for the SynROD\(\rightarrow \)ROD adaptation task using RGB, depth, and event modalities.

In UDA setting, our method shows remarkable performance gains over all baselines for both RGB+Depth and RGB+Event combinations. For RGB+Depth, our approach achieves improvements of up to 20% over the baselines. these results deomonstrate the capability of our method in reducing the domain shift and improving classification accuracy when adapting from the synthetic domain (SynROD) to the real-world domain (ROD). Our method also achieves improvements of up to 10% over the baseline values for the RGB+Event combination. This is a further evidence of the effectiveness of our method in handling the domain shift and improving classification accuracy even in the presence of event data.

However, for DG, the performance gains are relatively smaller compared to the Source Only model for both modality combinations. This can be attributed to the inherent challenges of the synthetic-to-real setting, where there is a significant gap between the feature distributions of the source (SynROD) and target (ROD) domains. The unavailability of target data during training limits the generalization capabilities of the model, resulting in modest improvements over Source Only. In contrast, in UDA setting, our method achieves better performance by effectively using the target domain information to bridge the domain gap.

Thus, we can conclude that the success of our method in mitigating the shift from synthetic to real domains highlights its potential for various fields and applications, such as robotics, autonomous driving, and augmented reality, where synthetic training data are largely used.

4.7 Experiments on CogBeacon

4.7.1 Evaluation Protocol

We follow the experimental protocol in the supplemental of Lv et al. (2021), evaluating the performance in the single-source setting (\(V_i \rightarrow V_j\)) using three different domains (V1, V2, and V3), for a total of six splits.

4.7.2 Baselines

We compare our results with those in Lv et al. (2021) (in particular with DLMM, the Differentiated Learning framework proposed in Lv et al., 2021) and with those obtained in our experiments with different UDA methods, namely SAFN (Xu et al., 2019), GRL (Ganin et al., 2016), MMD (Long et al., 2015), and MM-SADA (Munro & Damen, 2020). These two lists of results are presented separately in Table 9 because the number of samples does not match that used in Lv et al. (2021) (i.e., we have 2,240, 2,432, and 2,300 for domains V1, V2, and V3, respectively).

4.7.3 Input

The EEG signals are characterized using a total of 24 temporal and spectral features (see Papakostas et al., 2019 for details). The face data are represented as a vector combining the average values of the face data and their standard deviation, yielding a total of 280 values.

4.7.4 Implementation Details

Both backbones are implemented by three 1D convolutional blocks with kernel size three and stride one, followed by a MaxPool layer and ReLU as the activation function. The output channels are 16, 32 and 64 for EEG signals and 8, 16 and 32 for the face keypoint model. The latter ends with a fully connected layer with an output of 64 to match the output of the EEG backbone. Model weights are randomly initialized. Predictions for each modality are computed with a single FC layer followed by a LogSoftmax. We train the model for 90 epochs using Adam as the optimizer. In all experiments, the learning rate was set to \(1e-3\) and decremented by a factor of 10 after 70 epochs.

4.7.5 Results

In Table 9, we present the classification accuracy of our method and several baselines for the CogBeacon dataset.

When we compare our approach to the “Source Only” baseline, we observe a significant improvement in the UDA setting. Our UDA accuracy of 68.63% significantly outperforms the “Source Only’s” accuracy of 61.80%. On the other hand, the improvement in the DG setting is not so significant, with an accuracy of 62.64%. However, even in this setting, we observe a potential for softening the domain shift and achieve better performance.

It is worth noting that, on our settings, our method outperforms the other UDA methods used for comparison. Among the UDA baselines, MMD achieves the highest accuracy of 65.58%, which is 3.05% less than our method. We are also competitive with more complex domain matching approaches such as CBST, which involve the generation of pseudo-labels or the use of confidence-based selection strategies (Zou et al., 2019).

However, compared to the results reported in Lv et al. (2021), our method falls behind DLMM, which achieves 70.47% accuracy, significantly better than our results. Nevertheless, a more detailed analysis reveals interesting insights when considering the improvements over the “Source Only” baseline. DLMM achieves an improvement of 6.83%, while our method has an improvement of 7.03%, which can be considered equivalent. That said, it is worth mentioning that our approach is characterized by its simplicity compared to DLMM. DLMM requires multiple training stages and uses a more complex curriculum learning approach with teacher/student models for different modalities. In contrast, our method is lighter and simpler to train, making it a more practical option for real-world applications.

4.8 Limitations

The proposed approach provides interesting performance in many cases, as shown by our experiments with a variety of tasks and scenarios. While the simplicity of the method is certainly a strength, it may be viewed as less effective when compared to methods developed and tuned for a specific task and benchmark. However, we believe that this limitation does not undermine the overall effectiveness of the proposed approach, as it provides a viable alternative for addressing various tasks without requiring significant computational resources or architectural changes.

Another limitation we observed arises from the fact that in many real-world cases the data distributions are strongly unbalanced, leading to lower precision for the tail classes (Buda et al., 2018). The literature shows how this imbalance translates into unbalanced norms of classification weights per class (Guo & Zhang, 2017; Kim & Kim, 2020) as well as unbalanced norms of features per class (Wu et al., 2017; Li et al., 2022). In developing our method, we expected that balancing the norms per class could have a positive effect also in rebalancing the weights of the classifier for the tail classes. However, our experimental results show that this effect is not present. This opens up possibilities for future developments to incorporate this objective into RNA as an additional component that rebalances the weights of the classifier.

5 Conclusion

This work introduces a novel approach to address the problem of multi-modal domain adaptation. Our method is motivated by the observation that differences in the marginal distributions of modalities can significantly affect the training process, leading to suboptimal performance and imbalances in feature norms. To tackle these issues, we introduced the Relative Norm Alignment (RNA) loss, which aims to balance the norms of features extracted by the network across different domains and modalities to improve overall accuracy. This loss is combined with adversarial domain loss and Information Maximization in UDA settings to enhance feature transferability and regularization in the target domain. Our experimental results have shown that the proposed RNA approach can either outperform or compete with several state-of-the-art methods in various multi-modal classification tasks, demonstrating its effectiveness and flexibility. Most notably, our approach is characterized by its simplicity and lightweight nature, allowing it to be easily integrated into different architectures and contexts without requiring complex modifications. This inherent adaptability makes RNA a promising solution for real-world applications where multi-modal data is prevalent. Future research will further explore RNA’s capabilities and adaptability to diverse domains and modalities, addressing challenges in unbalanced data distributions, and investigating the integration with other techniques for domain shift mitigation and improved generalization.

Data Availability

The datasets generated during and/or analysed during the current study are available in the following repositories: EK55:https://data.bris.ac.uk/data/dataset/3h91syskeag572hl6tvuovwv4d EK100:http://dx.doi.org/10.5523/bris.2g1n6qdydwa9u22shpxqzp0t8m UCF: https://www.crcv.ucf.edu/data/UCF101.php HMDB:https://serre-lab.clps.brown.edu/resource/hmdb-a-large-human-motion-database/ ROD: http://tiny.cc/NRODDatasetDownload CogBeacon:https://github.com/MikeMpapa/CogBeacon-MultiModal_Dataset_for_Cognitive_Fatigue

Notes

Up to this point, the implementation closely follows the official code provided for the EK100 UDA challenge (Damen et al., 2022).

The original version of GB uses only RGB and Audio. The optimal weights for combining losses were taken from Damen et al. (2020), and the weight for the missing component, i.e., Flow, was tuned appropriately for this work.

References

Aakerberg, A., Nasrollahi, K., & Heder, T. (2017). Improving a deep learning based RGB-D object recognition model by ensemble learning. In 2017 Seventh international conference on image processing theory, tools and applications (IPTA) (pp. 1–6). IEEE.

Agarwal, N., Chen, Y.-T., Dariush, B., & Yang, M.-H. (2020). Unsupervised domain adaptation for spatio-temporal action localization. arXiv:2010.09211.

Balaji, Y., Sankaranarayanan, S., & Chellappa, R. (2018). Metareg: Towards domain generalization using meta-regularization. In NeurIPS.

Baltrušaitis, T., Ahuja, C., & Morency, L.-P. (2019). Multimodal machine learning: A survey and taxonomy. IEEE Transactions on Pattern Analysis and Machine Intelligence, 41(2), 423–443.

Barbato, F., Toldo, M., Michieli, U. & Zanuttigh, P. (2021). Latent space regularization for unsupervised domain adaptation in semantic segmentation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (CVPR) workshops (pp. 2835–2845).

Bertelson, P. & Gelder, B. D. (2004). The psychology of multimodal perception. In Crossmodal space and crossmodal attention (pp. 141–177). Oxford University Press.

Bo, L., Ren, X., & Fox, D. (2011). Depth kernel descriptors for object recognition. In 2011 IEEE/RSJ international conference on intelligent robots and systems (pp. 821–826). IEEE.

Bridle, J., Heading, A., & MacKay, D. (1991). Unsupervised classifiers, mutual information and ’phantom targets. In Advances in neural information processing systems, vol. 4. Morgan-Kaufmann.

Bucci, S., D’Innocente, A., Liao, Y., Carlucci, F. M., Caputo, B., & Tommasi, T. (2021). Self-supervised learning across domains. IEEE Transactions on Pattern Analysis and Machine Intelligence, 44(9), 5516–5528.

Buda, M., Maki, A., & Mazurowski, M. A. (2018). A systematic study of the class imbalance problem in convolutional neural networks. Neural Network, 106, 249–259.

Cannici, M., Plizzari, C., Planamente, M., Ciccone, M., Bottino, A., Caputo, B., & Matteucci, M. (2021). N-rod: A neuromorphic dataset for synthetic-to-real domain adaptation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 1342–1347).

Carreira, J., & Zisserman, A. (2017). Quo vadis, action recognition? A new model and the kinetics dataset. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 6299–6308).

Chen, M.-H., Kira, Z., AlRegib, G., Yoo, J., Chen, R., & Zheng, J. (2019). Temporal attentive alignment for large-scale video domain adaptation. In Proceedings of the IEEE international conference on computer vision (pp. 6321–6330).

Chen, M.-H., Li, B., Bao, Y., AlRegib, G., & Kira, Z. (2020). Action segmentation with joint self-supervised temporal domain adaptation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 9454–9463).

Chen, C., Li, J., Han, X., Liu, X. & Yu, Y. (2022a). Compound domain generalization via meta-knowledge encoding. In CVPR.

Chen, C.-F. R., Panda, R., Ramakrishnan, K., Feris, R., Cohn, J., Oliva, A., & Fan, Q. (2021). Deep analysis of CNN-based spatio-temporal representations for action recognition. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 6165–6175).

Chen, C., Tang, L., Liu, F., Zhao, G., Huang, Y. & Yu, Y. (2022b). Mix and reason: Reasoning over semantic topology with data mixing for domain generalization. In NeurIPS.

Choi, J., Sharma, G., Chandraker, M., & Huang, J.-B. (2020a). Unsupervised and semi-supervised domain adaptation for action recognition from drones. In The IEEE winter conference on applications of computer vision (pp. 1717–1726).

Choi, J., Sharma, G., Schulter, S., & Huang, J.-B. (2020b). Shuffle and attend: Video domain adaptation. In European conference on computer vision (pp. 678–695). Springer.