Abstract

Personalised content adaptation has great potential to increase user engagement in video games. Procedural generation of user-tailored content increases the self-motivation of players as they immerse themselves in the virtual world. An adaptive user model is needed to capture the skills of the player and enable automatic game content altering algorithms to fit the individual user. We propose an adaptive user modelling approach using a combination of unobtrusive physiological data to identify strengths and weaknesses in user performance in car racing games. Our system creates user-tailored tracks to improve driving habits and user experience, and to keep engagement at high levels. The user modelling approach adopts concepts from the Trace Theory framework; it uses machine learning to extract features from the user’s physiological data and game-related actions, and cluster them into low level primitives. These primitives are transformed and evaluated into higher level abstractions such as experience, exploration and attention. These abstractions are subsequently used to provide track alteration decisions for the player. Collection of data and feedback from 52 users allowed us to associate key model variables and outcomes to user responses, and to verify that the model provides statistically significant decisions personalised to the individual player. Tailored game content variations between users in our experiments, as well as the correlations with user satisfaction demonstrate that our algorithm is able to automatically incorporate user feedback in subsequent procedural content generation.

Similar content being viewed by others

1 Introduction

Computer games have become an integral part of modern leisure-time. There is intense competition among game companies as they are being faced with challenges to retain user engagement in a saturated market. Steels (2004), based on the work done by Csikszentmihalyi (2000), suggests that for an activity to be self-motivating or “autotelic”, there must be a balance between task challenge and the person’s skill. Estimating the skills of the player and adapting the game challenge accordingly can lead to more engaging and immersing games.

Serious games, and in particular simulators, offer a medium for training and evaluating individuals in high risk scenarios, including for example flying, driving or performing surgery. Since individuals differ in terms of skills and preferences, a variety of training methods should be adapted to maximise training outcomes. In tasks where the end goal is similar between individuals ( for example, successfully tackling a sharp turn with high speed in a car racing game), people with less experience will need more assistance to reach the desired level. As we will clarify later, we relate this amount of assistance to the user’s Zone of Proximal Development (ZPD) (Vygotsky 1978), and we use it to estimate the challenge that a game will pose to a user. If the challenge level is higher than the user skill, this might result into increased user anxiety. On the other hand, if the user skill is higher than the game challenge this might result in increased user boredom.

In this paper, we focus on learning a user (or player) model using a combination of behavioural and physiological data. The model infers the current user’s experience, attention and performance from combinations of extracted features while playing a car racing game. We monitor these abstractions, update the user model and provide decision adjustments for the alteration of the racing track according to the user. We propose an algorithm that monitors the user performance and modifies the game experience in real-time, with the purpose of maintaining high player satisfaction and enhancing the learning process.

As shown in Fig. 1, the proposed adaptive user model is constructed in sequential abstract layers following the Trace Theory framework (Settouti et al. 2009). Several machine learning techniques, such as Affinity Propagation (Frey and Dueck 2007) and Random Forests (Breiman 2001) are utilised to process the incoming data and transform them into available metrics, coupled with a weighting model (such as linear regression) that specifies their significance to the particular user. Finally, the higher layers are built up using ideas from educational theoretical frameworks such as the concept of flow (Csikszentmihalyi 1990), Zone Theory (Valsiner 1997) and the Zone of Proximal development (Vygotsky 1978); these layers provide path altering decisions for the particular user.

An overview of our personalised user modelling approach for evaluating a driver in car racing. Low level inputs are being converted to performance metrics using either the expert or the user’s “best” data. They are then transformed using Trace Theory through game related rules and significance weights into values representing variables from the concept of flow. Finally, the performance and state of the user, that is represented by these variables, are exploited to instruct how each segment path is going to be altered

Our experiments are focused on the engineering and user modelling challenges underlying the detection of the optimal level of adaptation for each individual. We validate the model’s outputs against the performance and feedback data from 52 users. We conducted a user profiling analysis in order to verify and find the patterns emerging from our user responses and determine our user types.

The purpose of this article is to build a user modelling framework that triggers the alteration of the path of the track in a way that fits the skills and weaknesses of the driver. However, the algorithm of changing the track and the real human evaluation of the new segments is outside the scope of this article. In this article we studying the feasibility of the proposed approach by correlating features of the framework to the user responses.

1.1 Background and motivation

A well-designed computer game can promote engagement and provide an effective learning environment (Whitton 2011). The perception of being good at an activity and the perception of rapid improvement both contribute positively to user engagement (Whitton 2011). Coyne (2003) analysed the design and characteristics of various existing games and found repetition as one of the main factors of engaging games, which is usually concealed through variation either in the form of difficulty levels (new opponents, track, etc.) and/or through a narrative. Such games are based on “variation across repetitive operations” where repetition lulls the user into expectations which are subsequently challenged to enhance the user’s engagement. In our car racing game, the driving task itself is repetitive with variation introduced in the form of new tracks. The challenge that arises is customising the progression of the chosen tracks to suit the abilities of each user. Several authors have called for a balance between task difficulty and skill (Steels 2004; Demiris 2009), so that the user remains in a cognitive optimal (flow) state, avoid sensory overload, and remain highly engaged (Whitton 2011; Koster 2013).

Our user model aims to adapt the game challenge—path of the track—according to three concepts adopted from the concept of flow (Csikszentmihalyi 1990): Experience, Exploration and Attention.

-

1. Experience estimates the skill level of the user through the user’s performance in the game. The value is determined from the average proximity of the user’s model characteristics to those of the expert.

-

2. Exploration quantifies the level of consistency that the user is displaying in his/her game actions, i.e. how varied, or probing, are his current set of actions. Actions can include taking different racing paths, eye fixations, operating the interface in a different manner, among others. High values for this concept indicate that the user is finding the current task challenging, and is exploring suitable game options. Low values indicate that the user does not vary his/her actions, and has settled to a low level of variation. The reasoning behind this is that the user tries to overcome the presented challenge by attempting a new action and therefore improving their skills either through positive or negative result.

-

3. Attention quantifies the continuous engagement of the user. This notion is based on the assumption that the expert’s model, from which the user features are compared, represents a fully engaged user according to physiological and non-physiological features. The attention of the user is based on the game metrics. It assumes that the user is engaged if s(he) is doing well in the game or tries to see if the physiological data are coherent to the user’s game model. To determine the attention metric, we first evaluate the average proximity (experience) of the user to the expert model using only non-physiological data. Getting high values from user input and game output metrics shows that user is performing well with respect to segment times and racing lines; therefore, attention should be high. This is based on the assumption that in order for the user to accomplish high non-physiological (game related) values, the user should be highly engaged in the game. Otherwise attention is evaluated from the current variations (exploration) of the user’s physiological data. Since the data are relative to those of the expert’s, positive physiological exploration means that the current features of the user are closer to the expert’s.

The main assumption underlying the implementation of these three concepts is that we are considering the expert model, which the user is compared with, as optimal in respect to engagement and performance levels. It is what the user should imitate and achieve, whereas any deviations from that are conveyed as lack of skill, challenge or attention. It is also important to mention that the model uses both physiological features from external sensors and behavioural data from the game and calculates the significance of each feature obtained according to the time performance of the user in a path. Merging the data from both domains gives the model more potential to explain the events that are unfolding in the game. Multimodal player models have been reported to be more accurate and match the user’s responses better than corresponding models built on unimodal data (Yannakakis 2009; Yannakakis et al. 2013). For example, an incorrect sequence of input actions can explain why the user crashed over a sharp turn and as a result segment’s time was poor. However, this might also have been a consequence of the user’s lack of experience in identifying the correct speed and position to brake and steer or lack of attention. The latter can only be interpreted through the concurrent monitoring of head pose and eye gaze. Doshi and Trivedi (2012) demonstrated the evoking of different pattern dynamics in eye gaze and head pose between sudden visual cues and task-oriented attention shifts.

These three high level concepts monitor the user experience while playing the game and can describe the state and engagement of the user with the game according to the combination of their values. Based on the theory of flow (Csikszentmihalyi 1990) there has to be balanced between challenge level and user skills, whereas attention determines how much these values are sensitive to each other. As a result, there are four main hypotheses that are possible for the particular task:

-

1.

Both Experience and Exploration are High: This is the optimal state. Since experience is high, the user is engaged with the task and begins to learn the particular path. However, exploration is also high, therefore the user has space for more self-improvement. This means that the user’s skills are above a threshold value but not as close to the expert’s; high exploration indicates that the user hasn’t reached the optimal steady values of the expert’s yet. According to the interpretation of skill development by Valsiner (1997), the user is discovering a better way to tackle a path, however, this is not yet embraced as part of his/her experience.

-

2.

High Experience, Low Exploration: The user is performing well; the low value of exploration is indicating that the user found an optimal way to handle a path and this has been adopted into the user’s skills. In order to keep the user engaged, the level of difficulty should be raised so as to challenge the user.

-

3.

Both Experience and Exploration are Low: The user is lacking the skills for the challenge faced. Therefore, the attention value will be consulted to determine if the user is getting bored and giving up (low value) or if the user is engaged with the task through self-motivation to succeed (high value).

-

4.

Low Experience, High Exploration: Challenges are much greater than the skills of the user. The user is performing poorly even when different techniques are being tried. If this state continues to persist then user’s anxiety level will increase; therefore, in order to push the user back in the engagement-training region we should drop the difficulty level of the game.

Based on the calculated values of the notions and the hypotheses, the model outputs decisions on whether a path should become easier, challenging or kept the same.

2 Related research

Player modelling in games has received a lot of interest in recent years. The primary goal of player modelling is to understand the interaction of the player experience at an individual level. This can be either cognitive, affective or/and behavioural. There have been many different approaches for the understanding of a player in games (Yannakakis et al. 2013). Research is split between two areas: player modelling and player profiling. The former tries to model complex phenomena during gameplay whereas the latter tries to categorise the players based on static information, like personality or cultural background, that does not change during gameplay. Profiling is usually performed through the use of questionnaires (e.g. Five Factor Model of personality (Costa Jr and McCrae 1995), demographics) and information collected through that method can lead to a construction of better user models.

Player modelling is further split into three approaches:

-

1.

The model-based approach: involves the mapping of user responses to game stimuli through a theory-driven model.

-

2.

The model-free approach: assumes that there is a relation between the user input and the game states, but the underlining structure function is unknown.

-

3.

The hybrid approach: which is the one we embraced, contains methods from both model approaches mentioned.

Game metrics, defined as statistical spatiotemporal features, are a significant component of player modelling. When these metrics are the only data available, they don’t provide sufficient information about individual users and can infer erroneous states when coming only from the game context. This can be avoided by getting feedback from users, either directly using user annotations or indirectly through sensors (e.g. cameras, eye trackers, etc.).

User annotations can be done through questionnaires or third-person reports. These are mainly of three types:

-

1.

Rating-based format using scalar/vector values [e.g. The Game Experience Questionnaire (IJsselsteijn et al. 2008)].

-

2.

Class-based format using Yes/No questions (Boolean).

-

3.

Preference-based format by contrasting the user experience between consecutive gaming sessions.

In addition to the user annotation methods reviewed by Yannakakis et al. (2013) there are other methods such as think-aloud protocols for continuous annotation (Wolfe 2008) that have been used in other studies. However, those might interfere with the user engagement and can potentially become obtrusive to the game experience, so we do not use them in this paper.

2.1 Physiological user modelling

Analysis of physiological patterns during game play has been a vital technique to assess the engagement and enjoyment of the player. Kivikangas et al. (2011) reviews a comprehensive list of references in the area of psychophysiological methods for game research. They emphasise that a commonly accepted theory for game experience does not currently exist with game researchers frequently using theoretical frameworks from other fields of study.

Similar to our concept, Tognetti et al. (2010a) presented a methodology for estimating the user preference of the opponent skill from physiological states of the user while playing a car racing game. They recorded different physiological data [e.g. heart rate (HR), galvanic skin response (GSR), respiration (RESP), temperature (T), blood volume pulse (BVP)] during each game scenario and then classified them, using Linear Discriminant Analysis (LDA), according to the user’s “Boolean” responses; their questionnaire consisted of a pairwise preference scheme (2-alternative forced choice answers) (Yannakakis and Hallam 2011). They concluded that HR and T gave poor performance on classifying the users’ emotional state where the rest (mostly GSR) achieved high correlations against user feedback. An interesting side result was that most of the users preferred an opponent of similar skills, whereas the rest were not consistent with their responses. This shows that levels of difficulty in the game are hard to pre-set for each user, and a more adaptive approach should be explored.

Similarly, Yannakakis and Hallam (2008) investigated the relationship of physiological signals (e.g. HR, BVP, GSR) with children’s entertainment preferences in various physical activity games, by utilising artificial neural networks (ANN). Through the accuracy of their ANNs on specific features (e.g. maximum, minimum, average) of the recorded signals, they demonstrated that there was a correlation between the children’s responses and the signals. They concluded that when children were having “fun”, they were more engaged displaying increased physical activity and mental effort. In addition, Yannakakis et al. (2010) investigated the effect of camera viewpoints (distance, height, frame coherence) in a PacMan-like game using physiological signals (e.g. HR, BVP, GSR) and questionnaires about the affective states of the user (fun, challenge, boredom, frustration, excitement, anxiety, relaxation). Statistical analysis of the data obtained showed that camera viewpoint parameters directly affected player performance. Emotions were correlated with HR activity (e.g high significant effects between average and minimum HR versus fun; time of minimum HR versus frustration).

Defining the level of immersion and affective state of the user in a game has been approached through different techniques. Brown and Cairns (2004) carried out a qualitative research for understanding the concept of immersion in games, by interviewing their subjects. Respectively, Jennett et al. (2008) performed three experiments on quantifying the immersion of the users in games subjectively and objectively. In the first experiment, the subject switched from a “real-world” physical task to an immersive computer game or simple mouse click activity (control group) and then back to the task. They concluded that the group playing the immersive game improved less on the time taken on carrying out the “real-world” task when compared with the control group. The explanation given by the authors was this was due to the fact that the game decreases one’s ability to re-engage in reality. The second experiment involved the investigation of eye fixation with immersion from users completing either the non-immersive task and the immersive one from the previous experiment. Self-reports revealed that there was a significant difference between the immersion level results of the two tasks, therefore, the questionnaires were a good indicator of immersion. In addition, eye gaze fixations per second increased over time for the non-immersive task group where it decreased over time on the immersive one. This shows that the control (non-immersive task) group was getting distracted more easily in irrelevant areas whereas the experiment group increased their attention in areas more relevant to the game. As we will show later in Sect. 3.4.2, the eye gaze fixations, blinking rate as well as main eye gaze positions are being utilised as features by the user model. The third experiment explored the user’s interaction and emotional state through different modes of the simple mouse clicking task (non-immersive). Through these modes the pace of the appearing box to be clicked was changing. The results showed that emotional involvement is correlated to immersion where sometimes emotion can be negative as well (e.g. anxiety in the increasing pace mode).

2.2 User generated adaptive content and training

According to Yannakakis et al. (2013) “future games are expected to have less manual and more user-generated or procedurally-generated content”. This allows the potential for novel and enjoyable game content that is personalised to the player. Charles et al. (2005) reviews the current approaches towards player-oriented game design, examines the research conducted for better understanding and effective modelling of players and proposes a general framework that can adaptively and continuously alter the game to fit the model of the player. In order to personalise you must first understand the player through a particular game. This involves knowing the game Mechanics (the components and rules of the game), that give rise to game Dynamics (how mechanics behave on user inputs) and fuses to game Aesthetics (user experiences invoked by the game). Hunicke et al. (2004) refer to this as the MDA framework and show how the seamless attributes of each property relate to each other in a variety of games.

According to Malone (1980), the characteristics of an enjoyable game are: challenge, fantasy and curiosity. In an extension of the work, there was the addition of the control factor, empowered either by the actions available to the user, actions already taken or the potential of a great effect of the user’s decisions (Malone and Lepper 1987). In racing games the generation of content mainly targets the track’s path. Pushing the car to the limits and handling tight turns at high speeds is what engages the users in that category of games. Loiacono et al. (2011) derived an algorithm for generating new tracks in a car simulator using single and multi-objective genetic algorithms. Through certain constraints (e.g. path curvature radius, closed tracks, etc.) and the use of polar coordinates, their algorithm fills the path through particular “control” points that the road needs to cross. Cardamone et al. (2011) proposed a framework for advancing the Loiacono et al. (2011) algorithm through a human-assisted generation of tracks. Subjects voted for each generated track using scoring interfaces (5 Likert scale or Boolean type) that were influencing the algorithm over the next generations of tracks. They showed that there was an improvement of user satisfaction in younger generations.

A similar approach for user-oriented track generation was proposed by Togelius et al. (2006, 2007). They used a neural-network based controller (Togelius and Lucas 2006), that was trained on human driver behaviour, in order to test if a generated track is challenging enough for a particular driver. Fitness metrics were used to evaluate the suitability of a new track for the controller. However, the research was focused on the methodology and creativity of the generated tracks instead of their evaluation with the real drivers.

Apart for just being entertaining and stimulating, games can be used for training and educating as well. Zyda (2005) refers to this as “Serious Games”; a medium to enhance education in a more entertaining way. Based on the notion of teaching through games, Backlund et al. (2006) conducted research on driver behaviour between people that play racing, action, sport games (RAS-gamers) and non-gamers. People were categorised into two groups through a questionnaire, whereas their driving skills (attention, decision making, risk assessment, etc.) and attitude (respect speed limits, speed margins and fellow drivers) were rated by driving school instructors, using 7-point Likert scale. Their findings show a positive correlation between gaming and skill oriented aspects of driving, although there was no statistically significant effect of attitude from the two groups. An important concluding statement suggested that games and more specifically driving simulators are able to provide positive effects on driving behaviour and user skill enhancement thus motivating further research.

3 Methods and materials

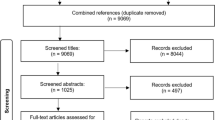

Our approach of the user model implementation, described in Sect. 3.4, utilises a sequential hybrid modelling approach and integrates several algorithms, techniques and theories from literature. Figure 1 encapsulates the model processes and identifies the backbones of each layer. In this section we give an overall view of the methods adopted in our model and explain why and where we apply them.

3.1 Theoretical background

In order to achieve high levels of engagement and training quality we employ ideas from educational theoretical frameworks such as Concept of Flow, Zone of Proximal Development and Zone Theory. The following section describes the various theoretical as well as psychological frameworks that inspired our user model implementation.

3.1.1 Trace Theory

As shown in Fig. 1, Trace Theory (TT) is responsible for bridging the low levels of our model to the higher ones through the transformation rules. TT is a popular and efficient framework for collecting, analysing and transforming users’ low level interactions, with a particular system, into more contextual and meaningful high-level traces. These low level interactions can be the inputs of the user to the system as mentioned by Clauzel et al. (2011). We define them as low level primitives. A formalisation of the trace framework found in Settouti et al. (2009) suggests that a particular combination of several low level primitives set by the system designer creates a primary trace.

As in Bouvier et al. (2014), primitives were set to be mouse and keyboard entries whereas a collection of them on specific timestamps and specific places in their user interface were generating the primary traces. However, a primary trace is still low level and not meaningful enough. The introduction of a rule based system that is determined by a system expert aims to transform the primary traces into higher level abstract entities that can remark on user’s profile and model. As a result of the rules being set by an expert, they are human explainable and therefore more specific about their underlying assumptions.

Our approach encapsulates the ideology of TT and makes use of the low level primitives, primary traces and transformed traces in order to create the model of the driver, while the player is operating the simulator. In Sect. 3.4.6 we provide a detailed description on how we adopt this framework into our model.

3.1.2 The concept of “flow”

The concept of Flow has been introduced by Csikszentmihalyi (1990), whereas being in Flow has been identified by Nakamura and Csikszentmihalyi (2002) as having certain perceptions. Flow represents the feeling of being completely immersed and engaged in an activity and also experiencing high levels of enjoyment and fulfilment (Csikszentmihalyi 1990). These kinds of activities were identified as self-motivating or “autotelic” and for an activity to be autotelic there has to be a balance between the challenge and the personal skill (Csikszentmihalyi 2000). In the case of challenge being higher than the skill then anxiety builds up whereas if the skill is higher than the challenge then boredom sets in. However, among different individuals the balance between those two entities are not of the same quantity.

Steels (2004) discusses the main components of an autotelic artificial intelligence agent that utilises the concept: (1) the user should feel in control and be able to alter the level challenge and (2) the agent should be capable of generating new experiences and challenges within the environment. Similarly, Chen (2007) mentioned the concept of Flow as a mechanism of keeping the game challenges and players skills in balance through the adaptation of game’s challenges in response to player’s actions.

Through our model, the aim is to create an autotelic activity by monitoring the skills and challenges of the user so as to adjust the difficulty of the game. As shown in Sect. 3.4.7, the main principles of the concept (Experience and Challenges) are evaluated through our model, at the highest level (see Fig. 1), to describe the current state of the user.

3.1.3 Zone of proximal development

Developmental research in children by Vygotsky (1978) addressed the general relation between learning and development and the specific features of this relationship through the approach of “Zone of Proximal Development” (ZPD). In ZPD we determine at least two developmental levels. The first is the Actual Developmental Level (ADL), which defines the mental functions that have been established by the individual (completed the developmental cycles). The second is the Potential Development (PD), which is the development level that an individual can reach under guidance, assistance or collaboration. ZPD is the distance between ADL and PD and defines functions that are not yet matured but are in the process of maturation.

Demiris (2009) operationalised the ZPD concept through the creation of an hierarchical user model, which was used in order to infer the amount of assistance that should be given to a user driving a wheelchair.

In the racing experiments of this paper, each individual has different expertise and rate of learning, therefore a variety of training methods should be adapted to fit each of the users. In a task where the goal is similar (e.g. in a racing game: tackling a sharp turn with high speed), people with lower experience would have a larger ZPD and will need more assistance to reach the desired level. As shown in Sect. 4, through our data collection process we observed how the notion of ZPD operates on users with variant set of skills in driving. This was taken into account so that the model would create personalised decisions for each user.

3.1.4 Zone theory

Valsiner (1997)’s developed the Zone Theory to explain human development in educational setting, describing the process that establishes the development of a skill in an individual. Valsiner (1997)’s theoretical framework includes three zones:

-

The Zone of Free Movement (ZFM) represents a cognitive structure of the relationship between a person and the environment in terms of constraints that limit the freedom of these actions and thoughts.

-

The Zone of Promoted Action (ZPA)—defines the set of activities, objects or areas within the environment from which the tutor is promoting to the tutee. With the tutee having no obligation to accept it.

-

The Zone of Proximal Development (ZPD) from Vygotsky (1978)—defines the amount of assistance the tutee needs to reach his/her potential development (PD).

These zones constitute an interdependent system between the constraints enforced on the environment of the learner and the actions being promoted for the learner where both constraints and actions are imposed by external stimulus.

The development is optimised when the relationship between ZPA and ZPD is such that what is promoted (ZPA) lies within the individual’s ability to achieve (ZPD). The ZPD cannot be fully incorporated in the ZFM. Since ZFM is determined by the tutor and a tutee can only develop aspects of the ZPD that have been advertised (ZPA) and not restricted (ZFM). When all zones merge it emerges “a family of possible novel forms of change” where ZPD is dependent on the state of the ZFM/ZPA complex (Galligan 2008).

In our case, the theory of zones serves as an interesting approach to explain the relationship between our users and our adaptive training framework. The ZFM describes the practical space that the user (tutee) can operate. This is the car simulator, the hardware utilised and the various tracks that are available. The ZPA represents the constraints that are promoted by the framework. The model tries to reach the engagement and learning goals set on development, and through the feedback that it receives from the user’s actions, it generates new tailored tracks that advertise new spaces (tracks) where learning and development are most likely to be realised for a particular user. An optimised relationship between ZPA and user’s ZPD is realised through the new track, however, the user has to willingly co-operate, with their engagement and attention, for the learning and development to take place, as suggested by Blanton et al. (2005) through the concept of self-scaffolding (Valsiner 2005).

3.2 Methodological background

In this section we describe the algorithms adopted to perform feature extraction from the user’s data.

3.2.1 Affinity propagation

The first phase of the data collection process consists of storing streams of sequential data and clustering them into Low Level Primitives (Fig. 1). Ideally the clustering method should conform to the following requirements:

-

Automatic determination of optimal number of clusters. The number of clusters is one of the extracted features of the user model.

-

Flexibility on the choice of similarity measure to make the clusters more relative to the feature they describe.

-

Simplicity and fast performance while maintaining low error levels. Our user modelling is performed in real-time.

As a result, the Affinity Propagation (AP) algorithm was chosen. AP (Frey and Dueck 2007) is an unsupervised clustering based approach. The method groups the data by finding data’s center points, called exemplars, through data specific similarity. The difference in approach with respect to other methods [e.g. k-centers (MacQueen et al. 1967)] is that the initial conditions suspect all data points as exemplars. Therefore, the number of clusters is not pre-defined but it can be influenced (if a prior information is known) by values in the similarity measure matrix. The novelty of the method is that it recursively transmits real-value messages throughout the data network of the suitability of each point being an exemplar, until a good set of clusters is found. In addition, the similarity measure matrix is a user defined function (usually Euclidean distance) whereas through its diagonal, the user can increase the values of some data points so that they are more likely to be used as exemplars. The diagonal is usually set to the median or the minimum of input similarities.

3.2.2 Random forests for real time head pose estimation

Apart from eye gaze, the other main physiological feature of our model is the real-time estimation of the head pose of the user. There are several reasons for combining eye gaze and head pose together. Our main considerations were to keep the costs low when providing sensors for our model and provide an unobtrusive experience. Our particular eye-tracker has a limited angle visibility. This means that it can no longer track the eyes of the user when head angles are large. Head position aids to fill in this gap of information when there are not any gaze data available.

Fanelli et al. (2011) used Random Forests (RFs) (Breiman 2001) to estimate, in real time, the 3D coordinates of the nose tip and the angles of rotation of the head through data obtained from a depth camera. In order to accomplish high prediction accuracy, they trained their algorithm using a generated large database of synthetic depth images of faces by rendering a 3D morphable model in different poses. Their method was proven to be fast and reliable even when there was a percentage of occlusion on the nose. This algorithm is suitable for our experiment since it can operate in real time, it does not need any manual initialisation to find the head pose and also does not require utilisation of GPU power like similar methods.

3.3 Implementation

This section discusses the simulator used, the experimental protocol as well as the Framework’s configuration chosen for the data collection process, that aided in the design of our user model.

a Our custom car simulator setup consisting of Vision Racer Seat with a force feedback steering wheel, pedals and three monitors to enhance the user’s immersion to the game. Two RGB-D cameras as well as an eye tracker was installed to capture the user’s physiological signals. b Screenshot from our customised track. c Track used for the experiments split manually into six segments

3.3.1 The simulator

Data is collected through a racing car simulator software, rFactor,Footnote 1 recommended for its excellent graphics and realistic vehicle dynamics by many users and racing teams. As shown in Fig. 2a, we are using a custom set-up of the Vision Racer VR3Footnote 2 seat with Logitech G27 Force Feedback Steering Wheel and a combination of three monitors to enhance the user’s experience. We have installed a Tobii EyeX eye tracker and 2 RGB-D cameras (Kinect) which are positioned in such a way that one of them captures the player’s face and the other captures both the player’s actions and the output from the monitors. Real-time performance metrics, 3D-car position and user actions are being displayed on the wide screen monitor on the wall. For the experiment, the users were asked to complete 20 laps on a particular track that we developed and to fill a questionnaire with demographic and user profiling questions before and after the experiment.

3.3.2 Car and track selection

The car, selected from the ones available in the game, was a sport version of the Renault Megane. The requirements were to have a car that was easy to drive by an amateur driver, quite stable on the road, with a decent acceleration and top speed.

The experiments are loaded with a custom designed track called BrandHatch Indy, which is a short (\(\approx \)1.93 km) but still challenging track with various types of segments (e.g. chicanes, straights, sharp turns). As seen in Fig. 2c, the track was split into six segments which we use to group the data in the user model. Segments were defined in such a way that they will consist only of a single path type (e.g straight, turn, chicane, etc.). In the track’s implementation, each side of the road was fenced (after 20 m of grass), so the user cannot skip a segment by taking shortcuts.

3.3.3 Data collection

We collected data from 52 users, where each user was asked to drive our customised track once for 20 laps. The user answered a set questions before and after the experiment. Prior to starting the experiment, each user was informed about the controls, the visual information of the simulator and the fastest lap of the particular track as a form of motivation.

It was important not to restrain the users with any unnecessary game-specific constraints or tasks. Thus users were advised to play and enjoy the game in their own way. This is because in a similar set up, Backlund et al. (2006) reported that users who were not assigned any task during a driving experiment enjoyed the game more than the ones who were given a task. Each subject was given the opportunity to get to know the simulator through a single practice lap at the beginning of the experiment which was discarded from further analysis. It is important to note that none of the users had previous experience on the particular game or track. At the end of the trial and after the completion of the questionnaire, the users were informed about the aims of the experiment and a short informal interview was carried out.

3.3.4 Questionnaire

Through questionnaires, we collected demographic as well as subjective metrics from our users. Subjective metrics were designed to aid the evaluation of the user model. As will be shown in Sect. 4, we based the validation of our user model’s outcomes and high level variables on the reports obtained by the users.

Before starting the experiment, the users were asked about their age, gender and if they had a driving license or not as well as how long they have been driving. Furthermore, they were asked questions (Likert-scale) shown in Table 1.

3.4 User modelling

Our approach to the user model implementation utilises a sequential hybrid modelling approach and integrates several algorithms, techniques and theories from literature. A top down view of the user model shown in Fig. 1 encapsulates the model processes and identifies the backbones of each layer.

Real time raw data from the user input, game, eye tracker and head pose (Low Level Primitives) are being converted into Performance Metrics by comparing them against the user’s previous “best” data or the expert’s data. Each metric has been given a certain weight through a linear model (Weighting Model), forecasting against the time taken for a certain path to be completed. Through the utilisation of Trace Theory, the performance primitives—along with their weights—are transformed via game specific rules, into variables of the concept of flow (Csikszentmihalyi 1990) (Theoretical Frameworks). This concept can evaluate the challenges that the user is facing (Exploration), the skills that the user attained (Experience) and the Attention that the user is paying to the task. From then on, the algorithm tries to balance those abstract concepts according to the individual by giving certain instructions to alter the track.

In this section we break down each of the layers of the user model—shown in Fig. 1—and explain the processes carried out to reach to a decision for each segment.

3.4.1 Low level primitives

Our focus was to use data from unobtrusive sensors, so that we can avoid the attachment of sensors to body parts, as this “might affect the experience of our user” (Yannakakis et al. 2013). Data from the game (e.g. position, speed, number of collisions), user’s actions, eye gaze and RGB-D data are collected during the task and timestamped through a common clock. One of the novelties of our method is that the data is grouped and analysed according to predefined split segments of the track (see Fig. 2c).

Segments can either be a turn, a straight line or a chicane. The advantage of this method is that the user is being monitored more frequently than once every lap and also our metrics reflect on the performance of more primitive paths where it is easier to identify the specific weaknesses of the driver. As seen in the bottom part of Fig. 1, the low level primitives of the user model consist of data from two main categories (physiological and non-physiological data), extensively listed in Table 2.

3.4.2 Analysis of low primitives

The raw data of Table 2 cannot be directly used as a comparison measure for creating a performance metric. It has to be transformed into a value or sequence of values that can exert a significant performance result when contrasted with ones of the same kind. Over the next paragraphs we are going to describe the process of converting the variables of Table 2 into the primitives of Table 3.

User inputs These are the principal links between the game and the user. The user has three main inputs to the car simulator: the steering wheel, the throttle and the brake pedals. For each of the three series of data we compute the mean value for a particular path to create the corresponding primitives.

We also perform the Affinity Propagation (AP) clustering method, described in Sect. 3.2.1 over all three inputs to find the number and position of their dominant values along the path. Clustering results point to several combinations of inputs that act as the centres of all data. The number and values of these centres are both used as a primitive in the user model. AP clustering facilitates the use of a user specified similarity measure to group the data into clusters. The similarity measure used to cluster the three inputs is their Euclidean distance.

Game outputs The simulator we are using provides real time output data of the car and the environment’s states with a high sampling rate (100 Hz). By using the lap number, time and path position we are able to create indices at the points at which each segment starts and ends for each user, so we can split and group the data together. As game output primitives, we extract the number of collisions per second for each segment. We also store primitives of the user’s path as well as the user’s path accompanied with the car speed.

Eye tracker From the eye tracker we obtain four kinds of information; a parameterised real world position of the eyes, the eye gaze on the screen, the eye gaze fixations and the presence of a user. Through a combination of this information, we calculate and define as the user’s primitives (see Table 3): the number of blinks, the number of fixations per second for a particular segment, as well as the screen concentration time normalised to the segment’s time.

We also perform AP clustering on the eye gaze to determine the position and the number of important targets on which the user was fixating. Instead of clustering only on the eye gaze, we determine the number and important centres of user’s gaze according to the orientation of the car in the virtual space for a particular segment. This addition encapsulates the relationship between the user’s gaze and the orientation (in radians) of the virtual world in the game. The similarity measure used for both clustering approaches is the Euclidean distance of the 2 and 3 dimensions respectively.

RGB-D and head pose Apart from using the cameras to record the experiments, we also use the depth information to find the head pose of the user. Incorporating the algorithm, described in Sect. 3.2.2, we determine the head position and orientation of the user at any given time. As with the eye gaze, we use the orientation data as well as the virtual orientation of the game to create two sets of clusters with the AP algorithm. This results in four different primitives regarding the head pose: number of spots and the location of the centres for each of the approaches. The orientation of the head pose is given as a quaternion. Therefore, for a similarity measure in the AP algorithm we use a dot product to relate the angles between different quaternions. In addition, in the approach with the added virtual orientation, the extra dimension is related with the Euclidean distance.

After the primitives are computed, they are stored into the lowest level of the user model. The next step is to convert them into performance metrics so that we can assess the efforts of the user towards the task.

3.4.3 Performance metrics

An interesting study by Hong and Liu (2003) analysed the thinking strategies between different users in a game of “Klotski”.Footnote 3 They concluded that expert users, determined by the fewer number of steps and operation time, were using more analogical thinking towards a solution (e.g. devising a series of next moves) whereas novice players’ movements were mostly random. Having in mind that the solving ability for a problem is content oriented, they suggested that by understanding the ways the experts operate, we can determine the type of training required for a novice learner to acquire the experts’ skills faster. The concept of our performance metrics employ this idea by assessing the user’s actions with the expert’s.

In the user model structure (see Fig. 1), the layer above the low level primitives involves their conversion to performance metrics. From each category in Table 3 several performance metrics are obtained comparing either the current user’s values to an “expert driver” (if they exist) or to a previous user “best”. Therefore, there are metrics appraising the performance of the user at a global level and at a personal level.

A collection of data for a segment is defined to be more optimal than others according to the amount of time the segment took to complete. As a result, we would expect that the “expert driver” would have the fastest possible time on that segment. In our experiments, the expert driver for each segment was defined at the end of the data collection process by selecting the user with the fastest segment times.

For each of the primitives in Table 3 we use different methods as comparison against the “optimal” values. Most of the primitives (Nos. 1–4, 6, 10–12, 14, 16, 17, 19) create the metric by finding the absolute difference from the optimal values, however, time performance (No. 9) is being divided by the optimal one for each segment. Cluster centres (Nos. 5, 13, 15, 18, 20) are being matched with their closest optimal centre and the average value of their Euclidean distance is obtained.

For Nos. 7 and 8 we use a different approach, since they consist of a sequence of data. In general, to find the dissimilarity of the path covered between two different sequences of car positions, it would be wrong to compare the points one-to-one since there is a high probability that the sequences are not of the same size (since the path has a very low probability of being identical but the sampling rate is constant). A solution is to use a form of dynamic time warping between the two sequences so that the temporal domain is abstracted out and we are only comparing the spatial domain which is the path. In our case, we simply find the two closest points of the optimal path to each of the user’s points in the path. We then calculate the point that is perpendicular to the vector joining the two optimal points and the user’s point.

For example, Fig. 3a shows, in blue, the trajectory of the expert driver and in red is the recorded user’s trajectory. If we zoom in on the points, as shown in Fig. 3b, we have A and B, which are two consecutive points of the optimal path and C. C is a point created by the path of the user in the track. Our algorithm tries to find the closest points from the optimal path, A and B, for every user point, C. It then finds a point D on the vector  that forms a perpendicular vector

that forms a perpendicular vector  . This calculates the difference of point C to its closest point on the optimal path. However, the path consists of discrete points and therefore, a perpendicular point on the path might not exist. D is found by optimising the solution for the 3 dot product equations shown in (1). The summation of all the absolute distances of the points in the user’s path, denoted by the vector

. This calculates the difference of point C to its closest point on the optimal path. However, the path consists of discrete points and therefore, a perpendicular point on the path might not exist. D is found by optimising the solution for the 3 dot product equations shown in (1). The summation of all the absolute distances of the points in the user’s path, denoted by the vector  creates the path performance metric (No. 7 in Table 3) of the user.

creates the path performance metric (No. 7 in Table 3) of the user.

For calculating the path with speed metric (No. 8 in Table 3) we use a similar method as with the path metric. This time we have to find the speed of the new Point D as well. Therefore, speed is found by interpolating the speeds of points A and B using their distances as a weight as shown by (2). Then the sum of their squared differences (SSD) of all matched speeds is noted as a metric, as shown by (3).

The presumption made to create those two metrics is that the former analyses the user performance only on the closeness to the optimal path, whereas the latter comments on the speed as well. As we notice later in Sect. 3.4.4, the latter is more correlated to the segment time since time is indirectly involved in the speed-path points.

The performance metrics in the model are converted to represent percentages, through exponential functions, so that (1) they are comparable against each other, without the need of rescaling, and also (2) outliers are brought closer together. Regarding the latter, when two users perform poorly in a metric, their values are expected to be well beyond and below the mean of an average user. However, the two values could still be far from each other. This doesn’t provide any more information rather than a poor performance from both on the particular metric.

The use of exponential functions helps to decrease that gap and keep the linearity of the rest, up to the point where the original value is significant. For example, if two users’ paths have a huge difference from the expert’s then we know that both users’ time and path performance would be low. However, the time versus path performance proportionality is not as linear as the ones coming from average-high metric performances.

The exponential function used to convert the metrics to percentages is shown in (4). Each performance metric is using a modified exponential function according to the metric value, X, of the expert to expert comparison. The Constant, C, for each different metric is determined by solving (4). This is by assigning the Metric variable to the median value of all the metrics collected (from all users for the particular metric), and the %Metric variable to 50%.

3.4.4 Performance metrics weights

In racing, “time” is the most general and important metric that can reflect on the performance, skills and engagement of the user to the task. However, we have to get deeper into the data in order to understand whether the driver actually achieved a good time. In our user model we try to justify and break down the time taken by the user, through a particular segment, using several descriptive metrics. Therefore for finding the best “weight” of each metric towards the user’s segment time performance, we used various modelling approaches; whereas the best weights describing the correlation (5) from our collected data were adopted.

The metrics are split into three groups: All, Non-Physio, Physio. All includes all metrics in Table 3, Non-Physio consists of all metrics obtained from the user inputs and game outputs and Physio includes metrics associated with the user’s physiological data, such as those obtained from eye tracker and head pose. Time performance is not included in any groups since it is used as reference variable in (5).

where

-

\(\rho \) is the correlation coefficient

-

\({\textit{TimeMetric}}_{s}^l\) is the time performance metric of a segment s and user lap l (No. 9 in Table 3)

-

\(W^s_k\) is the weight for a particular segment s of a particular metric k

-

m is the number of metrics defined in each of their group: All, Non-Physio, Physio

-

\(M^{sl}_k\) is the Performance Metric k of segment s and lap l.

We evaluated the weights through various models, using different versions of Spearman correlation and linear regression:

-

Spearman correlation The first approach to determine the relation and significance of each metric to time is to perform non-parametric Spearman’s correlation (Spearman 1904) between each performance metric and their respective performance segment time. The statistical significance of the correlation values is defined by the p values (\(p <0.05\)).

The correlation results are shown in Table 4. As previously mentioned, metrics are either created from comparisons with the expert’s or the user’s “best” data. All metrics’ p values are well below 0.001, indicating that the results are statistically significant. It is also noticeable that most of the correlations coming from the comparisons of user’s “best” metrics are larger than those of the expert. This is expected since each user will behave according to their own knowledge and skills, therefore a small change towards improvement will be more noticeable. Evaluating (5) , the weights \(W^s_k\) are set to be the normalised value of the correlations found from each metric k. Also, since the Spearman correlation is performed from all data collected including all segments, the weights of each segment s are the same.

-

Spearman segment correlation By grouping the data according to their segments, we performed Spearman correlation within each segment. This approach revealed that the significance of each performance metric also depends on the segment’s path as well (see Table 5). Therefore, for this second method we set the weights \(W^s_k\) to be the normalised correlations found for each individual segment s. Thus, the difference of this method to the previous is that the weight of each metric k is varying across the segments. Segment 1, which is the straight line in the path, was not included in the model since it has to remain unaltered due to track design issues.

-

Linear regression (LR) The main issue with both of the previous approaches is that the Spearman correlation calculates the significance of each metric without considering the correlations between them as well. If two metrics are highly correlated to each other then one of them can be removed since this makes the whole model simpler and more robust to small changes. In order to reduce those redundancies and find the best weights that describe (5), we perform a linear regression for each group of segment data, according to (6).

$$\begin{aligned} TimeMetric_{s}^l = C + \sum _{k=1}^{m} X_{k}^s M_{k}^{sl} \end{aligned}$$(6)

The linear regression model calculates the coefficients \(X_{k}^s\) of each metric k for a particular segment s accompanied with an interception coefficient C. Coefficients are found using the least squares problem method by minimising the sum of the squared error (\(\min \left||Ax - b\right||_{2}^{2}\)) where in our case A represents a matrix of our metrics observations and b is their corresponding time metrics (Mead and Renaut 2010; Lawson and Hanson 1974). We experimented with six different approaches using this model (The name in the brackets specifies the id given to each of the approaches):

-

1.

(LM Perc) The linear model was forced to provide either positive or zero valued coefficients \(X_{k}^s\) (C was unconstrained) for solving (6), where the data were all expressed as percentages. Then the coefficients for each segment \(X_{k}^s\) were normalised and set as the weights \(W^s_k\). The reasoning for the positive bounds is because metrics were designed to have positive correlations to time and also the conversion of the metrics to percentages was one sided with 100% being the expert.

-

2.

(LM Pos Raw) The linear model was bound to calculate positive coefficients but metrics were not converted into percentages. The coefficients found for each segment \(X_{k}^s\) were then set directly as the weights \(W^s_k\).

-

3.

(LM Raw) In order to check that our reasoning for positive bounds was valid, we used the same approach as 2 but with no positive bounds. Therefore, coefficients could be negative as well.

-

4.

(LM * OPT) All of the above linear models are set with the maximum number of metrics available. However, this might impact on fitness of the model since they could provide coefficients that are overfitting the data due to the high complexity of the model and the degrees of freedom introduced. Therefore, using all of the three methods mentioned (LM Perc, LM Pos Raw, LM Raw), we found the optimal combination and number of metrics of each method that gave the best linear model by finding the lowest calculated root mean square error (RMSE)—all combinations were tested using exhaustive search.

3.4.5 Weight model selection

For choosing the right weights that fit the data into (5), we evaluated the users’ data from the weights obtained from each model and then performed a Spearman correlation test between the Time Metric (right side) and the summation of the left side of (5), for each group. The Spearman correlation of all models and groups are shown in Table 6 whereas the plots in Figs. 4, 5, and 6 show only the best of each model type for clarity.

The most correlated model, among all groups of metrics, was the LM Perc where the weights were bound to be positive and data were converted to percentages. All versions of the optimal LM models (LM * Opt) didn’t substantially improve their correlation result but through the plots we can spot that the optimal models corrected some peaks in the values. Each dot in the figures represents a segment trial from a particular user. Therefore, each dot is a set of metrics collected from a respective segment (multiplied by their respective weights), plotted against the time metric that is normalised so we can compare all the data from all segments together.

The model selected for our Framework is the LM Percentage (LM Perc) since it gives the highest Spearman correlation and also the steeper linear curve in all the results. The weights used for each metric generated from expert comparisons are shown in Tables 7, 8, and 9. The metrics that haven’t been found to be significant in the user model or they are already described by other metrics, have a very low weight value.

Outcomes of different models for group All. Between the models tested, LM Perc provides the best weights for a linear correlation between the combined metrics and the time performance. LM Perc and LM Perc Opt have identical correlations (\(\rho = 0.95\)) since the latter only corrected some peaks in the values

Outcomes of different models for group Physio. Between the models tested, LM Perc provides the best weights for a linear correlation between the combined physio metrics and the time performance. LM Perc and LM Perc Opt have identical correlations (\(\rho = 0.58\)) since the latter only corrected some peaks in the values

Outcomes of different models for group Non-Physio. Between the models tested, LM Perc provides the best weights for a linear correlation between the combined non-physio metrics and the time performance. LM Perc and LM Perc Opt have identical correlations (\(\rho = 0.94\)) since the latter only corrected some peaks in the values

3.4.6 Transformation rules

The metric-weight pairs described in the previous sections specify the significance of each metric to the good time performance of a particular path. They are the fundamental information that transformation rules are using to define the higher level concepts.

Following the theoretical frameworks of behavioural analysis like the Concept of Flow (Steels 2004; Csikszentmihalyi 1990), the Zone of Proximal Development (Vygotsky 1978), the Zone Theory (Valsiner 1997) and the Trace-Based System theory described in Settouti et al. (2009) and Bouvier et al. (2014), we further analysed the metrics using game specific assumptions into three classes that make up the high level in the driver model (called Transformed Modelled Traces in Trace Theory): Experience, Exploration and Attention The value of each class is determined by a combination of the performance metrics analysed according to certain game-specific rules:

Experience The skills of the user, is determined by the proximity of the user’s primitives to the expert’s. Since the metrics are now expressed as percentages, the higher the value the better the user is performing on that particular metric. Although the significance of this metric to the user’s skill is determined by the weight calculated using the chosen linear regression method (LM Perc). Skill cannot be determined by a single group of values. It has to be an overall value of several trials. Therefore, it is calculated by the weighted sum of the mean of each performance metric over a certain number of laps, as shown by (7). It can be argued that Experience could also be determined by the average lap time of the user, as (7) is similar to (6). However, with (7) we are able to comment on the specific aspects (through the weight-metric pairs) that derive a high/low value. This feature broadens the use of our Framework, as we will discuss later in Sect. 5.1.

where

-

s is the id of a particular segment of segment’s set S

-

l is the lap number we are interested in

-

n defines the number of laps the value is defined from

-

\(W_{k}^s\) is the normalised weight of a metric k and segment s in the All group

-

m is the number of all metrics defined in the All group

-

\(M^{i,s}_k\) is the Performance Metric k of lap i and segment s

-

\(Experience_{n}^{l,s}\) is the experience value of lap number l and segment s over n laps.

Exploration As defined in the introduction, is a metric of how varied (explorative) is the range of actions that the user is using in the current task. Through the metrics we define this as the weighted sum, of a metric’s absolute shift to a proportion of its mean (defined as “jump”). If the difference between two consecutive metrics passes a fixed percentage value of the current experience then the value is positive, otherwise it is negative. This is expressed by (8).

where

-

\(J^s_k\) defines the proportion of “jump” of the experience value of that metric

-

\(Exploration_{n}^{l,s}\) is the exploration value of lap number l and segment s from which current experience value for each metric k was calculated over n laps.

Attention keeps a record of the continuous attention of the user along consecutive segments. This is calculated by first evaluating the experience of the user only from non-physiological data through consecutive segments. If the value is above a threshold, then the user’s attention is high and positive and the value is kept as the Attention. Otherwise, we calculate the exploration value only from the physiological data and use that for Attention. This is expressed by (9).

where

-

np and p prescripts define the Non-Physio and the Physio group metrics respectively

-

I is the index number representing segment s in lap l. Function f transforms s and l to I so that \(I-1\) defines the previous consecutive segment of index I

-

n defines the number of consecutive segments the value is defined from

-

\(W_k\) is the normalised weight of a metric k defined in the respective group

-

m is the number of metrics defined in each group

-

\(M^{i}_k\) is the Performance Metric k of segment-lap index i

-

\(J_k\) defines the proportion of “jump” of the Experience value of that metric

-

\(^{np}T\) defines the threshold set for separating low and high values in the Non-Physio group np.

To sum up, we described the way the performance metrics are created, how their weights are evaluated and defined the values of our high level variables through game specific rules. The next step is to utilise these values to determine the current state and performance of the user and provide instructions to change the segments of the track.

3.4.7 Exploiting the flow

The high level variables mentioned in Sect. 3.4.6 monitor the user experience while playing the game and control the segment altering decision algorithm according to the combination of their values. The balance between the level of challenge and the user’s skill is considered as the optimal operating region (Flow Region) whereas attention defines the range they are operating in, as shown by Fig. 7. When attention is low then the flow region is much narrower, therefore, experience and exploration values should be treated with more care than when attention is high. Our approach is that thresholds define low/high experience/exploration values in high attention, whereas in low attention they are compared with each other.

The two figures show how the area of the region of flow reduces between high and low Attention. The flow region defines the optimal region for user engagement. In the low attention case, the region of flow becomes narrower and the user model’s variables are compared between themselves than to the threshold parameters

a The outcome of the Segment Altering Decision algorithm depends on the values of Exploration, Experience and Attention and their assigned thresholds. b 3D Lattice of User Model showing the implicit space of the high level variables instructing the algorithm to change a particular segment. Colour coding of the decisions is respected between the two figures. (Color figure online)

Giving instructions to change the track at this point is straightforward and is shown in Fig. 8a. In more detail, when there is a high Attention, defined through a threshold, and:

-

1.

Both Experience and Exploration are High: The user is in the optimal region so the segment should be kept the same.

-

2.

Both Experience and Exploration are Low: The user is engaged since Attention is high, therefore we should allow more time for the user to adapt and build up skill so the segment should still stay the same.

-

3.

High Experience, Low Exploration: A more challenging track should be provided (e.g. one with more or sharper curves) since the user’s skills are high and challenges drop below the threshold.

-

4.

Low Experience, High Exploration: An easier segment should be created (e.g. one that imitates a previous user path, or with less curvature) since the user’s skills cannot cope with the current challenge of the path.

When the algorithm detects low Attention, Experience and Exploration values are compared to themselves and not to a threshold, therefore:

-

5.

Experience larger than Exploration: Skills are greater than the challenges so the path should become more challenging.

-

6.

Experience lower than Exploration: Skills are lower than the challenges of that path so an easier path should be provided.

-

7.

Experience same as Exploration (both low): Both values are low and Attention of the user is also low therefore an easier segment should be provided.

Using the above information and Fig. 8a we constructed a 3D lattice, shown in Fig. 8b, that covers the implicit space of those three variables. Each region in the lattice (indicated by the different colours/shapes) instructs the algorithm to either leave the segment untouched (blue—diamond) or change the segment to either an easier (green—square) or harder (red—circle) path according to the user model.

In order to plot the lattice, we used a 50% threshold for each class in order to define high and low values. Our user model consists of various parameters and thresholds (e.g. proportion jump—J) that can be altered as user spends more time with the game. In this article we are not focusing on how these parameters are changing the outcome of the algorithm, however, the reported values (see Table 10) are the ones used to derive the results of our experiment. Their values were chosen to mid-percentage or common values regarding the variability of the subjects collected, although there might be more optimal ones.

Specifically, the two variables representing the “jump” (\(J^s_k\), \(^{p}J^s_k\))—amount of improvement of the user in respect to their average experience—were set at 20% as this was found empirically to be a good proportion of improvement. The thresholds relating to the experience (\(^{np}T\), \(Experience_T\)), were set at the median of the reported experience of our participants, so that the algorithm would create a good distribution of all the outcomes. The thresholds deciding for high/low exploration (\(Exploration_T\)) and attention (\(Attention_T\)) were set at 50% (middle range) as both of their definitions (Transformation Rules) were designed to give values equally in the negative and positive region.

4 Results

The simulator has been driven by 52 users and all of the data were recorded and analysed offline. In the following section we conducted a user profiling analysis in order to verify and find the patterns emerging from our user responses to determine our user types. We also validated our Framework’s outcomes using the data collected from the users through their responses.

4.1 User profiling

An outstanding user model needs to be compatible with the user’s game play experience. The latter depends on the type of the player as well. Type can be subcategorised into multiple groups, like regular gamers versus non-gamers or/and racing enthusiasts or not, etc. As we noticed from our experiments, the users who were involved in racing communities were more willing to do the experiment, they were better engaged and they have more constructive feedback than novice. A successful user model is to detect these kinds of patterns and act according to the individual’s conceptions.

For better understanding of the different categories of our subjects, we performed user profiling on the questionnaire of our experiment. As shown in Table 11, we collected data from subjects of various groups (e.g. age, gender, racing gamers and real driving experience) so that we can implement a user model that can adapt to diverse player types.

From analytics Nos. 9 and 10 of Table 11, which reports the score that the users gave to themselves before and after the experiment, we noticed (as expected) a higher population in the lower scores since most of our subjects were not frequent racing game players (No. 5). From data obtained from analytic No. 8, we can also infer that most of the users enjoyed the game and didn’t feel tired through the process. We acknowledged from the user’s feedback that the low score on tiredness was either because 20 laps were not enough for them to become fatigued or the subjects were very excited to try a racing simulator. Likewise, the improvement (No. 7), was mostly minor since large sample of the users were either beginners or intermediates, therefore they couldn’t improve much in such a short time, considering that any pre-training was not provided.

Analytic No. 6 is the score of the track on the factor of difficulty. Our aim was to provide a starting track that was in general not very challenging but also not particularly easy. Most of the scores lie in the middle range therefore, our objective was accomplished. However, user feedback disclosed that the particular question might not have been answered as initially envisaged. Subjects were supposed to respond with a level of difficulty regarding their performance. Instead, some responded by taking into account only the appearance of the track’s path and length.

Some users commented on the fact that there should have been more categories to rate themselves, especially towards the higher range. Also, we notice that only 37% of the users changed their skills rating by a level, as shown by Fig. 9a. Specifically, subjects that lowered their scores commented that they didn’t perform as well as they expected. Since the change was only by a level and most of the users haven’t altered their score, we decided to combine the two (pre and post) responses. By adding the ordinal values of the two Self-Rate (Pre and Post) responses we created a much elaborated description of our users (see Fig. 9b). However, since eight groups are far too many for the number of our subjects we decided to re-evaluate them into three (see Fig. 9c). People who changed their mind were re-evaluated to their lower choice (self-assigned skill).

Kruskal–Wallis test between the two Self-Rate and Re-evaluate skill groups (Pre versus Re-evaluate, Post versus Re-evaluate) gave very low p values (\(p < 0.01\)). Therefore we can state that the assigned groups are still dependent to the users’ self-reported skill. For the rest of our results section we are using the Re-evaluate skill groups (see Fig. 9c) to correlate the characteristics of the Framework and user responses.

The next step is to find particular patterns in the users’ responses and further understand their profile type. The game specific questions labelled as: Frequency, Fatigue, Difficulty and User Self-Rate (Re-evaluate) were tested with each other using Kruskal–Wallis test.

Low p value (\(p < 0.01\)) was observed between the Self-Rate response with game play frequency response. As anticipated, people who tend to play racing games frequently are expected to be Intermediate or Advanced whereas those that don’t, will rate themselves as Beginners. Under a pairwise test, there is no distinct separation in the middle-upper classes (e.g. Frequently versus Occasionally, Intermediate versus Advanced). Responses from each self-rate group towards game play frequency response are shown in Fig. 10a.

An interesting correlation was also detected between Self-Rate and Improved responses with \(p < 0.05\). Beginners tend to respond that their improvement was little to nothing. Whereas most of the Intermediate and Advanced users responded with high improvement as show in Fig. 10b. This can be explained using the ZPD. Players with the required skills and experience could learn on every lap repetition and improve themselves up to their ADL, which is in turn much higher than the player’s marked as Beginners. Therefore, assuming all users start at scoring their improvement from the same point then more experienced users will improve more than the rest.

Apart from those mentioned, there were not any other statistically significant results between the responses. Therefore, we can assume from the responses that are not mentioned [track difficulty (\(\chi ^2(3) = 2.565\), \(p = 0.46\)) and fatigue (\(\chi ^2(3) = 6.772\), \(p = 0.0795\))] that they cannot be directly associated with the user’s Self-Rate response and the user profile in general, however, they can be retrieved during game play. In fact as we will show later, responses concerning fatigue are correlated to the user attention calculated by our Framework.

4.2 Algorithm’s validation

It is important to mention that the algorithm for finding the metrics’ significance (weights) for the expert’s model used data from only after the fifth lap, since feedback from the users suggested that familiarity of the track and simulator in general happened after 5–8 laps.

What follows in the rest of this section is the validation of the algorithm’s high level variables and outcomes through the user responses: