Abstract

In the current UK Research Excellence Framework (REF) and the Excellence in Research for Australia (ERA), societal impact measurements are inherent parts of the national evaluation systems. In this study, we deal with a relatively new form of societal impact measurements. Recently, Altmetric—a start-up providing publication level metrics—started to make data for publications available which have been mentioned in policy documents. We regard this data source as an interesting possibility to specifically measure the (societal) impact of research. Using a comprehensive dataset with publications on climate change as an example, we study the usefulness of the new data source for impact measurement. Only 1.2 % (n = 2341) out of 191,276 publications on climate change in the dataset have at least one policy mention. We further reveal that papers published in Nature and Science as well as from the areas “Earth and related environmental sciences” and “Social and economic geography” are especially relevant in the policy context. Given the low coverage of the climate change literature in policy documents, this study can be only a first attempt to study this new source of altmetrics data. Further empirical studies are necessary, because mentions in policy documents are of special interest in the use of altmetrics data for measuring target-oriented the broader impact of research.

Similar content being viewed by others

Introduction

Academic science emerged at the beginning of the 19th century (Ziman, 1996). During academic science, the evaluation of scientific results focuses on their excellence and originality in a self-regulated process—the peer review process (Bornmann 2011; Petit 2004). The post-academic science which begins in the 1980s (Ziman 2000) is characterized by an increasing competition for research funds (national and international) which are mostly project-dependent distributed. Applicants of project proposals are more and more forced to be specific about the expected outcome and its wider economic and societal impact (Ziman 1998): The context of application becomes the interesting topic which decides on funding (besides excellence and originality). The objective of post-academic science “is not scientific excellence and theory-building as such but rather the production of a result that is relevant and applicable for the users of the research; in other words, the result should be socially relevant, socially robust and innovative” (Erno-Kjolhede and Hansson 2011, p. 134). The new objective also leads to changes in the ex-post evaluation of science. During the 1990s researchers started to develop evaluation systems with possible indicators in order to measure the societal impact of research (Miettinen et al. 2015). Also, governments and intermediaries (government-funded granting agencies) started requiring that applicants delineate their broader impact plans (Dance 2013). In the current UK Research Excellence Framework (REF), and the Excellence in Research for Australia (ERA) societal impact measurements are inherent parts of the national evaluation systems.

How is societal impact defined? A pointedly definition has been published by Wilsdon et al. (2015): “Research has a societal impact when auditable or recorded influence is achieved upon non-academic organisation(s) or actor(s) in a sector outside the university sector itself—for instance, by being used by one or more business corporations, government bodies, civil society organisations, media or specialist/professional media organisations or in public debate. As is the case with academic impacts, societal impacts need to be demonstrated rather than assumed. Evidence of external impacts can take the form of references to, citations of or discussion of a person, their work or research results” (p. 6). Samuel and Derrick (2015) interviewed evaluators working in the REF and asked for their definition of societal impact. The interviewees defined societal impact as ‘outcome’ from research which makes a change or difference (e.g. a change to clinical practice or patient care) or which creates economic benefits (e.g. creating jobs).

In this study, we deal with a relatively new form of societal impact measurements. Recently, Altmetric—a start-up providing publication level metrics (www.altmetric.com)—started to make data for academic publications available which have been mentioned in policy documents (Liu 2014) in order to uncover the interaction between science and politics. On the one side, governments allocate very large amounts of public money to various units (e.g. researchers or institutions) for various forms of research. In many countries, the public money is distributed by the soft money system in which researchers formulate proposals for projects and funding bodies decide on their acceptance or rejection. For governments, academic science is one section of a vague defined Research & Development system which ranges from basic science to near-market technological development (Ziman 2000). On the other side, independent and still active scientists advice stakeholders in the policy area: “The scientific community is increasingly being called upon to provide evidence and advice to government policy-makers across a range of issues, from short-term public health emergencies through to longer-term challenges, such as population ageing or climate change” (OECD 2015, p. 5). The scientific advice can be made in direct face-to-face interactions or in indirect interactions whereby papers written by scientists (actually written for their colleagues) are read by political actors and mentioned in policy-related documents. The latter type of interaction can possibly be measured by the new data source offered by Altmetric.

We regard the new data source as an interesting possibility to specifically measure the (societal) impact of research on policy-related areas which should be empirically investigated. Using a comprehensive dataset with publications on climate change as an example, we study the usefulness of the new data source for impact measurement. As publication set in this study, we used climate change literature because (1) corresponding policy sites are continuously analyzed by Altmetric. (2) We expect to observe many references to the scientific literature in the policy documents (Liu 2014) because climate change is a policy relevant topic since many years. We are especially interested in the characteristics of the papers which are mentioned in the policy documents: Are these papers published in certain journals (e.g. popular journals like Nature and Science), in certain publication years (e.g. more recent years), or with certain document types (e.g. reviews)?

Literature overview

Current literature overviews on societal impact studies have been published by Bornmann (2012, 2013). Thus, we concentrate in this chapter on more recently published studies which are especially relevant for this study.

Societal impact measurements are mostly commissioned by governments which argue that measuring the impact on science says little about real-world benefits of research (Cohen et al. 2015). Nightingale and Scott (2007) summarize this argumentation in the following pointedly sentence: “Research that is highly cited or published in top journals may be good for the academic discipline but not for society” (p. 547). Governments are interested to know the importance of publicly-funded research (1) for the private and public sectors (e.g. health care), (2) to tackle societal challenges (e.g. climate change), and (3) for education and training of the next generations (ERiC 2010; Grimson 2014). The impact model of Cleary et al. (2013) additionally highlights the policy enactment of research, in which the impact on policies, laws, and regulations is of special interest. The current study seizes upon this additional issue by investigating a possible source for measuring policy enactment of research.

The early studies which targeted societal impact of research focused on the economic dimension. Researchers were interested in the impact of Research and Development (R & D) on economic growth and productivity as well as the rate of return to investments in R & D (Godin and Dore 2005). The relationships had been quantitatively-statistically investigated. In the current frameworks of national evaluation systems (especially the REF), impact is broadly measured (i.e. one focuses not only on the impact of research on economy) and qualitative approaches of measuring impact are rated higher than quantitative approaches. REF evaluation panels review case studies (documents of four pages) which explain how particular research (conducted in the last 15 years and recognized internationally in terms of originality and significance) had influenced the wider, non-academic society (Derrick et al. 2014; Samuel and Derrick 2015). According to Miettinen et al. (2015) “in the recent Research Excellence Framework, 6975 impact case studies were conducted and evaluated by more than 1000 assessment panel members from academic and societal interest groups”.

The use of the case study approach for measuring societal impact is positively as well as negatively assessed in the scientometric literature. The preparation of case studies means a high effort for an institution. David Price, the vice-provost for research at the University College London, said that the university alone “wrote 300 case studies that took around 15 person-years of work, and hired four full-time staff members to help” (Van Noorden 2015, p. 150). According to Wilsdon et al. (2015) case studies “offer the potential to present complex information and warn against more focus on quantitative metrics for impact case studies. Others however see case studies as ‘fairy tales of influence’ and argue for a more consistent toolkit of impact metrics that can be more easily compared across and between cases” (p. 49, see also Atkinson 2014). For Cohen et al. (2015) “the holy grail is to find short term indicators that can be measured before, during or immediately after the research is completed and that are robust predictors of the longer term impact … from the research”. Ovseiko et al. (2012) think that important areas of impact can only be captured qualitatively. “However, the emphasis on qualitative indicators would stretch traditional peer review further and concentrate on the most prominent examples of impact, overlooking more modest contributions”.

Although the literature overview on societal impact studies of Bornmann (2012, 2013) reveal many proposals to measure societal impact quantitatively, Wilsdon et al. (2015) highlight only two metrics which might reflect wider societal impact: (1) Google patent citations and (2) clinical guideline citations. (Ad 1) The analysis of the reference list in patents can be used to assess the contribution of publicly funded research to innovations in industry (Kousha & Thelwall, in press; Ovseiko et al. 2012). (Ad 2) Grant (1999) proposed to use citations to publications in clinical guidelines to “quantify the progress of knowledge from biomedical research into clinical practice” (p. 33). The analysis of 43 UK guidelines shows, i.e., that “within the United Kingdom, Edinburgh and Glasgow stood out for their unexpectedly high contributions to the guidelines’ scientific base” (Lewison and Sullivan 2008, p. 1944). The results of Andersen (2013) point out that the quality of clinical guidelines correlates positively with the citation impact of the cited literature. Thus, quality in research and practice seems to be (closely) related.

In the current discussion around the quantitative measurement of societal impact, alternative metrics (altmetrics) play an important role (Bornmann 2014). Altmetrics count views, downloads, clicks, notes, saves, tweets, shares, likes, recommends, tags, posts, trackbacks, discussions, bookmarks, and comments to measure the impact of scholarly publications. Altmetrics are seen as an interesting possibility to measure quantitatively the broad impact of publications: “Funders also often see tremendous value in the general public understanding of publicly funded research projects and the scientific process. Use of alternative assessment metrics may help support needs in these areas” (NISO Alternative Assessment Metrics Project 2014).

There are many universal problems with societal impact measurements (Bornmann 2012, 2013). Milat et al. (2015) emphasized that “research impacts are complex, non-linear, and unpredictable in nature and there is a propensity to ‘count what can be easily measured’, rather than measuring what ‘counts’ in terms of significant, enduring changes”. In order to tackle the complexity of societal impact measurements, proposed models of impact measurements tended to be complexly designed. These models might be able to measure impact multi-dimensionally, but do not have the right balance between comprehensiveness and feasibility (Milat et al. 2015). Another problematic part of societal impact measurement is the long time lag before impact manifests in practice. One can expect around 15 years until research evidence is implemented into practice (Balas and Boren 2000). The US National Research Council (2014) lists the following additional “barriers to assessing research impacts: research can have both positive and negative effects (e.g., the creation of chlorofluorocarbons reduced stratospheric ozone); the adoption of research findings depends on sociocultural factors; transformative innovations often depend on previous research; it is extremely difficult to assess the individual and collective impacts of multiple researchers who are tackling the same problem; and finally, it is difficult to assess the transferability of research findings to other, unintended problems” (p. 75).

Although mentions of papers in policy documents are not able to solve all these problems, they are an interesting source of data to measure the broader impact of research target-oriented (on policy-related areas in society). Also, the data can possibly serve to shed light on the relationship between academic research and policy making. Decision processes in policy making are “deeply permeated” by active scientists (Lasswell 1971, p. 125). Scientists participate in different positions and roles in the policy process, which are combined with different strategies and goals. Typologies of the positions and roles are described by Weiss (2003, 2006) and Pielke (2007). Pielke (2007) suggests that the role for scientists in political debate is to “help us to understand the associations between different choices and their outcomes” (p. 139).

Weiss (2003, 2006) developed a typology with five positions which an advising scientist can take when addressing uncertainties. “Each position represents an attitude that results from a given level of uncertainty in combination with differences in the perceived necessity to take measures and in the willingness to do so based on associated (societal) costs” (Spruijt et al. 2016, p. 45). The typology of Pielke (2007) consists of four roles: The pure scientist is not interested on the interaction with political actors; she is only oriented towards facts. The science arbiter consents to answer specific questions from political actors (which should be factually oriented). The issue advocate promotes one solution in the range of options available to political actors. The honest broker of policy alternatives tries to explain and/or to expand the range of options available to political actors.

There is a wide body of literature available investigating the relationship between academic research and policy making, especially concerning climate research. For example, Ford et al. (2013) assess the ‘usability’ of climate change research for decision-making. Using a case study of the Canadian International Polar Year, they introduce a novel approach to quantitatively evaluate the usability of climate change research for informing decision-making. Lemos and Morehouse (2005) examine the use of interactive models of research in the regional integrated scientific assessments (RISAS) in the US. They show that highly interactive models increase the possibilities of societal impact. The recent study of Hermann et al. (2015) focusses on the expert advices in the field of Austrian climate policy. The authors map the different actors and advisory forms, assess the relevance of scientific knowledge, and identify patterns of science-policy interactions.

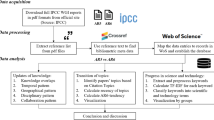

Methods

Analyses of policy documents at Altmetric

Altmetric has developed a text-mining solution (the Altmetric Policy Miner, APM) to discover mentions of publications in policy documents (Liu 2014; Liu et al. 2015). The following list shows some sources which are analyzed by Altmetric:

-

European Food Safety Authority (EFSA)

-

GOV.UK–Policy papers, Research & Analysis

-

Intergovernmental Panel on Climate Change (IPCC)

-

International Committee of the Red Cross (ICRC).

-

World Health Organization (WHO)

-

International Monetary Fund (IMF)

-

Médicins sans Frontières (MSF)

-

NICE Evidence

-

Oxfam Policy & Practice

-

UNESCO

-

World Bank

According to Liu et al. (2015) one limitation of the current policy tracking is that the sources are limited to major organizations from North America and Europe. Altmetric plans to curate and add sources, particularly those from regions such as Asia and Africa.

Climate change publication set

In 2015, we have constructed a set of 222,060 papers (articles and reviews only) on climate change (also referred to as climate change papers, CCP, in the following) published between 1980 and 2014 via a sophisticated search method called “interactive query formulation”. A detailed explanation of the procedure can be found in Haunschild, Bornmann, and Marx (2016). We used the dataset to study (1) the growth of the overall publication output on climate change as well as (2) of the major subfields, and (3) the contributing journals and countries as well as their citation impact. (4) Furthermore, we illustrated the time evolution and relative importance of specific research topics on the basis of a title word analysis.

Our Web of Science (WoS) in-house database—derived from the Science Citation Index Expanded (SCI-E), Social Sciences Citation Index (SSCI), and Arts and Humanities Citation Index (AHCI) provided by Thomson Reuters (Philadelphia, USA)—has DOIs for 149,657 of the 222,060 papers. We tried to obtain the DOIs for the remaining 72,403 papers from CrossRef using the Perl module Bib::CrossRef.Footnote 1 The API returned a DOI for each paper. Therefore, we checked if the publication year, journal title, and issue agree with the information in our in-house database. For 42.5 % (n = 30,784) of the cases, the bibliographic information disagreed and the papers were discarded. Using this procedure, we obtained 41,619 additional DOIs from the climate change publication set.

The DOIs (n = 191,276, 86.1 % of the full publication set) from our in-house database and the DOIs from CrossRef were used to retrieve information about policy mentions from Altmetric via their APIFootnote 2 on 16 November, 2015. For 1.2 % (n = 2341) of the papers, we found at least one policy mention.

Table 1 shows the policy sources which mention CCP. The most mentions of climate change papers originate from two organizations: “Food and Agriculture Organization of the United Nations” and “Intergovernmental Panel on Climate Change”.

Results

Climate change papers mentioned in policy documents

Table 2 shows the number of CCP with one or more mentions in policy documents. As the results in the table show, the papers received up to 18 mentions. However, most of the papers (92 %) received only one (78.7 %) or two (13.3 %) mentions. This means that not only few papers are ever mentioned in policy documents; nearly all mentioned papers received only one or two mentions. In the following we will reveal some characteristics of the CCP which have been mentioned in policy documents. Since the number of mentions per CCP is very low (\({\bar{\text{X}}}\) = 1.4), the following tables in this section do not consider the number of mentions, but analyze the characteristics of the CCP mentioned at least once in policy documents (CCP_P). Section 4.2 discusses the few CCP which were mentioned very often in policy documents.

Table 3 shows the distribution of the CCP and CCP_P across the publication years. The table focuses on the years 2000 to 2014 in which 2321 CCP_P have been mentioned. Since only 20 CCP_P have been mentioned between 1981 and 1999, these years are not considered in the table. Table 3 compares the distribution of the annual number of CCP with the distribution of the annual number of CCP_P. In this study, the annual percentages of CCP are used as the expected values of which the annual percentages of CCP_P more or less differ (see the column “Difference between percentages”). A positive (negative) percentage means that the authors of the policy documents are more (less) interested in the CCP of a year than one can expect.

The time-curve of the citations referenced by scientific papers usually shows a distinct peak between 2 and 4 years after publication of the cited paper. However, many highly cited papers show the phenomenon of delayed recognition: their citation rate peaks many years after publication (Redner 2005). As the results in Table 3 show, it also needs more time to be mentioned in a policy document, because the period of origin of the policy documents is much longer than that of a typical scientific paper (see e.g. the IPCC Reports which appear only every 6–7 years, whereas a typical scientific paper arises within 1 year). This corresponds to the maximum of the difference between the percentages around 2005–2006 in the right column of Table 3.

Table 4 shows the number of CCP and number of CCP_P broken down by the journals in which the papers had appeared. Not surprisingly, the prominent journals Nature and Science are comparatively often mentioned by policy documents. Their multidisciplinary orientation and the more general character of many papers published by them is a decisive advantage for being considered and mentioned comparatively frequently. In contrast, the journal Geophysical Research Letters at the top of Table 4 is far more subject-specific (concerning climate change). The journal publishes many more policy-relevant papers in general (19.3 % among CCP_P) than one can expect (9.1 % among CCP). This corresponds to the publication output in terms of the total number of climate change related papers published and the large proportion of highly cited papers (Haunschild et al. 2016). Both facts may increase the probability of being mentioned in a policy document, too.

In agreement with the relatively high percentages of Nature and Science papers (i.e. papers with a more general character and thereby review-like) among CCP_P shown in Table 4, review papers are mentioned comparatively more frequently in CCP_P (12.1 %) than one can expect from the distribution among CCP (5.5 %)—as the results in Table 5 reveal. The opposite is true for research articles (87.9 vs. 94.5 %). Reviews summarize research results of a topic spread out in a variety of specific papers and are therefore more useful for the authors of policy documents. Possibly, the authors (and potential readers) of such documents are unable to cope with more specific research results and to assess their importance and significance for the different questions around climate change.

Based on the journals in which the CCP have been published the CCP (and the CCP_P) were assigned to broader subject categories as defined by the OECD (Paris, France).Footnote 3 Table 6 shows the number of CCP and number of CPP_P broken down by these subject categories. Climate change research is hardly comparable with classical research topics (for example like photovoltaics or even nanoscience as a broader field). Due to the fact that nearly all systems on this planet are affected by the impacts of a changing climate, a multitude of quite different disciplines all over science is interacting and cooperating in this demanding field of science. Therefore, it is reasonable to differentiate the impact of climate change research on policy documents with regard to the various disciplines and sub-disciplines involved. Their influence on policy documents can be assumed to be quite different.

The overrepresentation of the “Earth and related environmental sciences” papers among the CCP_P (see the positive difference between the CPP and CPP_P percentages at the top of Table 6) corresponds to the overrepresentation of the associated journals of this category (in particular the Geophysical Research Letters) presented in Table 4. The large positive difference between the CPP and CPP_P percentages for the category Social and economic geography may initially be surprising, because the category is far outside the “classical” climate change related categories, like earth sciences. However, in view of the rapid growth of the publication output dealing with adaptation, impacts and vulnerability of climate change and the emergence of related title words within climate change literature (see the results in Haunschild et al. 2016), the overrepresentation of Social and economic geography is understandable.

Climate change papers mentioned in policy documents most frequently

As Table 7 shows, papers published in Nature and Science benefit from these prestigious and most visible journals and the more general character of their papers: Five of the nine CCP_P with the most mentions (at least ten) in policy documents have been published in these journals. The topics of the papers most frequently mentioned in policy documents are more notable: Eight out of nine papers are about adaptation and vulnerability of climate change and deal with agriculture (crop production, food security) and fishery (one paper discusses emission scenarios of greenhouse gases). Thus, the papers have the more practical consequences of climate change for society as a topic. Since the results of Haunschild et al. (2016) show that the share of the publication output of climate change papers assigned to agriculture is rather low (about 9 % of the overall climate change literature), policy documents seem to focus on a part of the climate change literature which is especially interesting in the political context—the welfare of the population.

Discussion

In recent years, societal impact measurements of academic research have become more and more important. This trend is not only visible by their consideration in national evaluation systems (e.g. the REF), but also in the commercial success of providers delivering altmetrics data (e.g. Altmetric). Currently, the most important and most frequently used method of societal impact measurement is the case study approach in which cases of research are described leading successfully to a specific form of societal impact (King’s College London and Digital Science 2015). However, case studies have the disadvantages that they are expensive, the results are biased towards success stories, and the results for different entities (e.g. universities) are not comparable. Based on the results of case studies, it is not possible to say that one entity is more successful in generating societal impact than another entity. Such comparisons are only possible by using quantitative indicators with a large coverage of research entities: “The advantage of using quantitative indicators is that they can be standardized and aggregated, allowing universities to use them on a continuous basis to track their impact, compare it with other universities, and recognise the contribution of every faculty member, of whatever scale” (Ovseiko et al. 2012).

Whereas bibliometric indicators have emerged as the most important metrics to measure the recursive impact of research, the development of metrics for the measurement of societal impact is challenging. According to the US National Research Council (2014) “no high-quality metrics for measuring societal impact currently exist that are adequate for evaluating the impacts of federally funded research on a national scale … Each metric describes but a part of the larger picture, and even collectively, they fail to reveal the larger picture. Moreover, few if any metrics can accurately measure important intangibles, such as the knowledge generated by research and research training” (p. 70). In Sect. 2, we refer to Wilsdon et al. (2015) who highlight only two quantitative indicators which can be used for the societal impact measurement: Google patent citations (for measuring innovation in industry) and clinical guideline citations (for measuring the impact of biomedical research on clinical practice). However, further indicators are necessary which allow targeting the three institutional foundations of impact: (1) epistemological (better understanding of phenomena behind different kinds of societal problems), (2) artefactual (development of technological artefacts and instruments), and (3) interactional (organizational forms of partnerships between researchers and different kinds of societal actors) (Miettinen et al. 2015).

This study focusses on a relatively new form of impact measurement (provided by Altmetric), which could complement Google patent citations and clinical guideline citations: mentions of publications in policy documents. It is an interesting form of impact measurement compared to other altmetrics (e.g. mentions in tweets and blogs) because (1) it is target-oriented (i.e. it measures the impact on a specific sector of society) and (2) it focusses on a relevant part of society for research—the policy area. Many research topics are policy-relevant (e.g. health care or labor market research) and it is interesting to know in the context of wider impact evaluations which (kind of) publications have more or less impact. Altmetric and Scholastica (2015) exemplify that policy document mentions cannot only be used on the institutional level to demonstrate impact, but also on the level of single researchers. They describe the case of a university professor who would like to show the broader impact of her research to the National Institutes of Health (Bethesda, MD; USA) and the Medical Research Council’s (London UK) program officers: “Altmetrics were able to show her that her work had been referenced in policy documents published by two major organizations—evidence she considered ‘bona fide data demonstrating that practitioners—not researchers—but folks who can affect lives through legislation, health care, and education, are using my research to better their work’” (p. 26).

In this study, we used a comprehensive dataset of papers on climate change to investigate mentions of papers in policy documents. Climate change is particularly useful in this respect because the topic is very policy-relevant since many years. Thus, we expected to find a large number of papers mentioned in policy documents in comparison with other research fields—especially because corresponding policy sites are continuously evaluated by Altmetric. However, the results of the analyses could not validate our expectation: Of n = 191,276 publications on climate change in the dataset, only 1.2 % (n = 2341) have at least one policy mention. The rate of 1.2 % is also small in comparison with the result of Kousha and Thelwall (in press) who show that “within Biomedical Engineering, Biotechnology, and Pharmacology & Pharmaceutics, 7–10 % of Scopus articles had at least one patent citation”. The result of this study contradicts the claim of Khazragui and Hudson (2015) that “it is rare that a single piece of research has a decisive influence on policy. Rather policy tends to be based upon a large body of work constituting ‘the commons’” (p. 55). The low percentage of 1.2 % which we found in this study might be due to the fact that Altmetric quite recently started to analyze policy documents and the coverage of the literature is still low (but will be extended). However, the low percentage might also reflect that only a small part of the literature is really policy-relevant and most of the papers are only relevant for researchers studying climate change. Two other reasons for the low percentage might be that (1) policy documents may not mention every important paper on which a policy document is based on. (2) There are possible barriers and low interaction levels between researchers and policy makers.

The low number of mentions in policy documents further raises the question what mentions in policy-related documents really measure: Is it relevance of academic papers? Do the mentions reflect the effort of researchers to interact with policy makers, an ongoing relationship between researchers and policy makers, or the effort of the policy organization to include (climate change) research in policy documents? Future studies should try to find an answer to these questions by undertaking (1) analyses of the context of paper mentions in policy-related documents or (2) surveys of the authors of these documents asking for the motivations for the paper mentions. Independent of these and other possible results of forthcoming studies, one should keep in mind that non-mentioned papers are not necessarily less relevant than mentioned papers; they may simply be unknown to policy makers.

In order to find out which kind of papers are more or less interesting in the policy context (e.g. articles or reviews), we compared the distribution of papers among CCP and CCP_P. The results show that the policy literature tends to cite research which has been published a longer time ago than researchers do in their papers. Thus, research papers seem to need more time to produce impact on politics than on research itself. As expected, reviews are overrepresented among CCP_P: the observed CCP_P value is higher than the expected value delivered by the CCP distribution. Reviews summarize the results of many primary research papers and connect research lines from different research groups. Good reviews save the labor of reviewing the literature on one’s own responsibility. In this study, we further revealed that papers published in Nature and Science as well from the areas “Earth and related environmental sciences” and “Social and economic geography” are especially relevant in the policy context.

This study is a first attempt to analyze mentions of scientific publications in policy documents. We encourage that further empirical studies follow because the data source is of special interest in the use of altmetrics data for measuring the broader impact of research. It will be interesting to see whether more papers are used in policy documents in upcoming years (because of the wider coverage of the policy literature by Altmetric). Furthermore, it would be interesting to generate results from other areas of research (which are also particularly interesting for the policy area) in order to compare the results for climate change with the results for other areas. For example, it will be interesting to see whether there are higher or lower percentages than 1.2 % of publications mentioned in policy documents (see above). Do policy documents from other areas focus on more recent literature than policy documents on climate change do? When the coverage of research papers in policy documents will be on a significantly higher level, future studies should also try to normalize policy document mentions. This study has already demonstrated that mentions in policy documents are time-dependent and we can expect that policy documents will differently focus on certain areas of research. Thus, time- and area-specific forms of normalization will be necessary if entities (e.g. research groups or institutions) with broad outputs in times and areas are evaluated.

In conclusion, we would like to mention limitations of this study. The first one refers to the documents analyzed by Altmetric: Altmetric does not only analyze documents from governments, but also documents from researchers who summarize the status of research on a certain topic for politicians as potential readers. Bornmann and Marx (2014) proposed that the former document type can be named as assessment report: Assessment reports summarize the status of research on a certain subject in form of narrative reviews or meta-analyses. However, assessment reports are preliminary stages of impact on politics, because they summarize the literature with the goal of increasing the impact of research on politics. Real impact on politics can only be measured by analyzing documents from governments. A second limitation of this study is the problem of disambiguating policy documents in different and same languages. Some policy documents have the same content while only the language differs. In these cases, the mentions are counted multiple times.

Conclusions

Bibliometrics is particularly successful in measuring impact, because the target of impact measurement is clearly defined: the publishing researcher who is working in the science system. Thus, citation counts provide target-oriented metrics (Lähteenmäki-Smith et al. 2006). However, many societal impact measurement studies are intended to measure impact in a broad sense whereas broad means the impact on all areas of society (or at least as many as possible). This is especially the case for studies which are based on various sources of altmetrics. Furthermore, there is the tendency in altmetrics to use different altmetrics sources for the calculation of a composite indicator. Composite indicators are calculated because many altmetrics sources are characterized by low counts. For example, Altmetric has proposed the Altmetric Attention Score, which summarizes the impact of a piece of research over different altmetrics sources (e.g. blog posts and tweets) (https://help.altmetric.com/support/solutions/articles/6000059309-about-altmetric-and-the-altmetric-attention-score).

We deem appropriate that the measurement of impact should always be target-oriented. Altmetric and Scholastica (2015) give some examples here: “For example, someone publishing a study on water use in Africa may be particularly keen to see that many of those tweeting and sharing the work are based in that region, whereas economics scholars might want to keep track of where their work is being referenced in public policy or by leading think-tanks” (p. 24). Thus, we appreciate those quantitative approaches which analyse scholarly paper citations, clinical guideline citations, policy document mentions, or patent citations. Without the restriction of impact to specific target groups (identifiable recipients) it is not clear which kind of impact is actually measured. The general uncertainty in scientometrics about the meaning of altmetrics is probably based on the tendency to use composite indicators or counts without target-restrictions when altmetrics are applied.

Policy documents are one of the few altmetrics sources which can be used for the target-oriented impact measurement. As source for the measurement of impact on politics, policy documents are one of the most interesting altmetrics sources which should be studied in more detail in future studies. For the use of a metric based on policy document mentions in these studies, we deem it as very necessary that Altmetric publishes an up-to-date list of the sources with policy-related documents which they continuously analyze. Without this information it is scarcely possible to interpret the results of a study which is based on mentions in policy-related documents. On the current homepage, Altmetric only lists some examples of the type of organizations included in the database (Liu 2014). Many national sources of policy documents, such as ministries or regional governments, are not mentioned in the list. Are these sources not included in the Altmetric database? We suggest to list in detail the types of policy making bodies included in the database and the number of organizations per type (perhaps with examples). This gives the user an idea of the inclusiveness of the database and thereby allows them to make a more informed assessment of the results of the analysis. If the typology would also contain the total number of papers mentioned, it could show which type of policy making bodies already cooperate closely with researchers.

Taken as a whole, many questions should be answered until one can decide whether this new altmetrics source can be used in practice, i.e. in the evaluation practice of the REF, ERA or others. Currently, there are too many open questions which disregard the new source as a metric for a prompt consideration. The user of the new data source and recipient of corresponding results should always keep in mind that the analysis is of quantitative nature; counting the number of mentions of a paper set in policy documents. It is not a qualitative analysis of how the research described in the paper set is being discussed in policy documents. This information could only be retrieved when the context of mentions in policy documents is analyzed (Bornmann and Daniel 2008). Furthermore, the analysis of mentions in policy-related documents cannot uncover the different forms of interactions between science and policy. In Sect. 2, we described two typologies which have been developed to describe these forms. The quantitative analysis of mentions in policy-related documents treats the mentioned scientist as a pure scientist who is oriented towards facts and is actually not interested on the interaction with political actors (Pielke, 2007). The interaction is reduced to the reading of papers (which are oriented to academic audiences) by political actors who deemed the papers as so important that they mention them in the policy-related document.

References

Altmetric and Scholastica. (2015). The evolution of impact indicators: From bibliometrics to altmetrics. London: Altmetric and Scholastica.

Andersen, J. P. (2013). Association between quality of clinical practice guidelines and citations given to their references. Paper presented at the proceedings of ISSI 2013—14th international society of scientometrics and informetrics conference.

Atkinson, P. M. (2014). Assess the real cost of research assessment. Nature, 516(7530), 145.

Balas, E. A., & Boren, S. A. (2000). Managing clinical knowledge for health care improvement. In J. Bemmel & A. T. McCray (Eds.), Yearbook of medical informatics 2000: Patient-centered systems (pp. 65–70). Stuttgart: Schattauer Verlagsgesellschaft mbH.

Bornmann, L. (2011). Scientific peer review. Annual Review of Information Science and Technology, 45, 199–245.

Bornmann, L. (2012). Measuring the societal impact of research. EMBO Reports, 13(8), 673–676.

Bornmann, L. (2013). What is societal impact of research and how can it be assessed? A literature survey. Journal of the American Society of Information Science and Technology, 64(2), 217–233.

Bornmann, L. (2014). Do altmetrics point to the broader impact of research? An overview of benefits and disadvantages of altmetrics. Journal of Informetrics, 8(4), 895–903. doi:10.1016/j.joi.2014.09.005.

Bornmann, L., & Daniel, H.-D. (2008). What do citation counts measure? a review of studies on citing behavior. Journal of Documentation, 64(1), 45–80. doi:10.1108/00220410810844150.

Bornmann, L., & Marx, W. (2014). How should the societal impact of research be generated and measured? A proposal for a simple and practicable approach to allow interdisciplinary comparisons. Scientometrics, 98(1), 211–219.

Cleary, M., Siegfried, N., Jackson, D., & Hunt, G. E. (2013). Making a difference with research: Measuring the impact of mental health research. International Journal of Mental Health Nursing, 22(2), 103–105. doi:10.1111/Inm.12016.

Cohen, G., Schroeder, J., Newson, R., King, L., Rychetnik, L., Milat, A. J., et al. (2015). Does health intervention research have real world policy and practice impacts: testing a new impact assessment tool. Health Research Policy and Systems, 13, 12. doi:10.1186/1478-4505-13-3.

Dance, A. (2013). Impact: Pack a punch. Nature, 502, 397–398. doi:10.1038/nj7471-397a.

Derrick, G. E., Meijer, I., & van Wijk, E. (2014). Unwrapping “impact” for evaluation: A co-word analysis of the UK REF2014 policy documents using VOSviewer. In P. Wouters (Ed.), Proceedings of the science and technology indicators conference 2014 Leiden “context counts: Pathways to master big and little data” (pp. 145–154). Leiden: University of Leiden.

ERiC. (2010). Evaluating the societal relevance of academic research: A guide. Delft: Delft University of Technology.

Erno-Kjolhede, E., & Hansson, F. (2011). Measuring research performance during a changing relationship between science and society. Research Evaluation, 20(2), 131–143. doi:10.3152/095820211x12941371876544.

Ford, J. D., Knight, M., & Pearce, T. (2013). Assessing the ‘usability’ of climate change research for decision-making: A case study of the canadian international polar year. Global Environmental Change, 23(5), 1317–1326. doi:10.1016/j.gloenvcha.2013.06.001.

Godin, B., & Dore, C. (2005). Measuring the impacts of science; beyond the economic dimension, INRS Urbanisation, Culture et Société. Paper presented at the HIST Lecture, Helsinki Institute for Science and Technology Studies, Helsinki, Finland. http://www.csiic.ca/PDF/Godin_Dore_Impacts.pdf.

Grant, J. (1999). Evaluating the outcomes of biomedical research on healthcare. Research Evaluation, 8(1), 33–38.

Grimson, J. (2014). Measuring research impact: not everything that can be counted counts, and not everything that counts can be counted. In W. Blockmans, L. Engwall, & D. Weaire (Eds.), Bibliometrics: Use and abuse in the review of research performance (pp. 29–41). London: Portland Press.

Haunschild, R., Bornmann, L., & Marx, W. (2016). Climate change research in view of bibliometrics. PloS One, 11(7), e0160393. doi:10.1371/journal.pone.0160393.

Hermann, A. T., Pregernig, M., Hogl, K., & Bauer, A. (2015). Cultural imprints on scientific policy advice: Climate science-policy interactions within Austrian neo-corporatism. Environmental Policy and Governance, 25(5), 343–355. doi:10.1002/eet.1674.

Khazragui, H., & Hudson, J. (2015). Measuring the benefits of university research: impact and the REF in the UK. Research Evaluation, 24(1), 51–62. doi:10.1093/reseval/rvu028.

King’s College London and Digital Science. (2015). The nature, scale and beneficiaries of research impact: An initial analysis of research excellence framework (REF) 2014 impact case studies. London: King’s College London.

Kousha, K., & Thelwall, M. (in press). Patent citation analysis with Google. Journal of the Association for Information Science and Technology. doi: 10.1002/asi.23608.

Lähteenmäki-Smith, K., Hyytinen, K., Kutinlahti, P., & Konttinen, J. (2006). Research with an impact evaluation practises in public research organisations. Kemistintie: VTT Technical Research Centre of Finland.

Lasswell, H. D. (1971). A pre-view of policy sciences. New York: Elsevier.

Lemos, M. C., & Morehouse, B. J. (2005). The co-production of science and policy in integrated climate assessments. Global Environmental Change-Human and Policy Dimensions, 15(1), 57–68. doi:10.1016/j.gloenvcha.2004.09.004.

Lewison, G., & Sullivan, R. (2008). The impact of cancer research: How publications influence UK cancer clinical guidelines. British Journal of Cancer, 98(12), 1944–1950.

Liu, J. (2014). New source alert: Policy documents. Retrieved September 10, 2014, from http://www.altmetric.com/blog/new-source-alert-policy-documents/.

Liu, J., Konkiel, S., & Williams, C. (2015, 2015/06/17). Understanding the impact of research on policy using Altmetric data. Retrieved November 4, 2015, from http://figshare.com/articles/Understanding_the_impact_of_research_on_policy_using_Altmetric_data/1439723.

Miettinen, R., Tuunainen, J., & Esko, T. (2015). Epistemological, artefactual and interactional–institutional foundations of social impact of academic research. Minerva,. doi:10.1007/s11024-015-9278-1.

Milat, A. J., Bauman, A. E., & Redman, S. (2015). A narrative review of research impact assessment models and methods. Health Research Policy and Systems,. doi:10.1186/s12961-015-0003-1.

National Research Council. (2014). Furthering america’s research enterprise. Washington: The National Academies Press.

Nightingale, P., & Scott, A. (2007). Peer review and the relevance gap: Ten suggestions for policy-makers. Science and Public Policy, 34(8), 543–553. doi:10.3152/030234207x254396.

NISO Alternative Assessment Metrics Project. (2014). NISO Altmetrics Standards Project White Paper. Retrieved July 8, 2014, from http://www.niso.org/apps/group_public/document.php?document_id=13295&wg_abbrev=altmetrics.

OECD. (2015). Scientific Advice for Policy Making. Paris: OECD Publishing.

Ovseiko, P. V., Oancea, A., & Buchan, A. M. (2012). Assessing research impact in academic clinical medicine: A study using research excellence framework pilot impact indicators. Bmc Health Services Research,. doi:10.1186/1472-6963-12-478.

Petit, J. C. (2004). Why do we need fundamental research? European Review, 12(2), 191–207.

Pielke, R. A. (2007). The honest broker: Making sense of science in policy and politics. Cambridge: Cambridge University Press.

Redner, S. (2005). Citation statistics from 110 years of physical review. Physics Today, 58(6), 49–54. doi:10.1063/1.1996475.

Samuel, G. N., & Derrick, G. E. (2015). Societal impact evaluation: Exploring evaluator perceptions of the characterization of impact under the REF2014. Research Evaluation,. doi:10.1093/reseval/rvv007.

Spruijt, P., Knol, A. B., Petersen, A. C., & Lebret, E. (2016). Differences in views of experts about their role in particulate matter policy advice: Empirical evidence from an international expert consultation. Environmental Science and Policy, 59, 44–52. doi:10.1016/j.envsci.2016.02.003.

Van Noorden, R. (2015). Seven thousand stories capture impact of science. Nature, 518(7538), 150–151.

Weiss, C. (2003). Scientific uncertainty and science-based precaution. International Environmental Agreements, 3(2), 137–166. doi:10.1023/a:1024847807590.

Weiss, C. (2006) Precaution: The willingness to accept costs to avert uncertain danger. In:1. Lecture notes in economics and mathematical systems (vol. 581, pp. 315–330).

Wilsdon, J., Allen, L., Belfiore, E., Campbell, P., Curry, S., Hill, S., et al. (2015). The metric tide: Report of the independent review of the role of metrics in research assessment and management. Bristol: Higher Education Funding Council for England (HEFCE).

Ziman, J. (1996). “Postacademic Science”: Constructing knowledge with networks and norms. Science Studies, 9(1), 67–80.

Ziman, J. (1998). Why must scientists become more ethically sensitive than they used to be? Science, 282(5395), 1813–1814.

Ziman, J. (2000). Real science. What it is, and what it means. Cambridge: Cambridge University Press.

Acknowledgments

The bibliometric data used in this paper are from CrossRef and an in-house database developed and maintained by the Max Planck Digital Library (MPDL, Munich) and derived from the Science Citation Index Expanded (SCI-E), Social Sciences Citation Index (SSCI), Arts and Humanities Citation Index (AHCI) prepared by Thomson Reuters (Philadelphia, Pennsylvania, USA). The policy document information was retried via the API of Altmetric. Open access funding provided by Max Planck Society.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Bornmann, L., Haunschild, R. & Marx, W. Policy documents as sources for measuring societal impact: how often is climate change research mentioned in policy-related documents?. Scientometrics 109, 1477–1495 (2016). https://doi.org/10.1007/s11192-016-2115-y

Received:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11192-016-2115-y