Abstract

Student questioning is an important self-regulative strategy and has multiple benefits for teaching and learning science. Teachers, however, need support to align student questioning with curricular goals. This study tests a prototype of a principle-based scenario that supports teachers in guiding effective student questioning. In the scenario, mind mapping is used to provide both curricular structure and support for student questioning. Structure fidelity and process fidelity of implementation were assessed using interviews, video data and a collection of classroom products. Results show that the scenario was relevant for teachers, practical in use and effective for guiding student questioning. Results also suggest that shared responsibility for classroom mind maps contributed to more intensive collective knowledge construction.


Introduction

Asking questions is a powerful heuristic for students to acquire knowledge about the world (Chouinard et al. 2007). Student questioning, in this study defined as the process in which students generate, formulate and answer questions to seek knowledge or to resolve cognitive conflicts, seems to have multiple benefits for teaching and learning science (Biddulph 1989; Van der Meij 1994). Research shows that student questioning is an important self-regulative strategy that enhances intrinsic motivation, fosters feelings of competence and autonomy and supports both knowledge construction and the development of meta-cognitive strategies (Chin and Osborne 2008).

Unfortunately, teachers dominate questioning and student questions seem to be rare in classrooms (Dillon 1988; Reinsvold and Cochran 2012). Although many teachers acknowledge the importance of student questioning, its implementation seems limited for several reasons. A major obstacle seems to be that teachers feel pressured to cover the curriculum, the curriculum being a set of predetermined learning goals established by National Standards, school systems, syllabi and/or teachers (Wells 2001). Rop (2002) shows that teachers prefer direct instruction in order to achieve curriculum goals and sometimes discourage spontaneous student questioning to prevent disruption of planned lessons. On the other hand, Zeegers (2002) finds that the teachers who are most effective in promoting student questioning help students pursue questions of personal interest. Self-formulated student questions, however, do not necessarily address curriculum goals, an issue that worries teachers. In addition to concerns about attaining curricular goals, teachers encounter two major practical challenges: (a) organising high-quality guidance for a wide variety of questions and (b) facilitating the exchange of learning outcomes to prevent fragmented knowledge construction among students (Keys 1998).

Facing these concerns and challenges, teachers seek a balance between providing structure to attain curricular goals and allowing autonomy to support student questioning (Brown 1992; Van Loon et al. 2012). In short, teachers need to guide effective student questioning, defined in this study as the degree to which student questions contribute to attaining curriculum goals. The aim of this study is to design and evaluate a prototype of a scenario that supports teachers in guiding effective student questioning. To address this aim, we answer research questions about the relevance, practicality and effectiveness of the scenario. Relevance concerns teachers’ perceptions that mind mapping addresses important challenges in guiding student questioning (Nieveen 1999). Practicality consists of teachers’ perceptions that working with mind mapping is possible within the practical limitations of time, means and knowledge (Nieveen 2009). Effectiveness refers to the perceived support of mind mapping for realising effective student questioning (Doyle and Ponder 1977).

Theoretical Framework

Asking questions about phenomena in the world is at the heart of scientific inquiry (Chin and Osborne 2008). Therefore, one might expect that teaching students to ask questions would play a pivotal role in science education. The reforms in science education in the USA and Europe that began in the mid-1990s do indeed prioritise asking questions as one of the essential components of inquiry-based science teaching (e.g. National Research Council 1996). However, even in the most inquiry-based pedagogical approaches, which intend to support students in learning how to research natural phenomena, teachers still seem to ask the questions (Osborne and Dillon 2008). Only in the most open form of inquiry-based learning, referred to as “Open inquiry”, are students encouraged to raise their own questions (Bianchi and Bell 2008). Although many science teachers acknowledge the importance of student questioning for knowledge construction, fostering discussion, self-evaluation and arousing epistemic curiosity, student questions in the classroom are not only rare but also rarely welcomed by teachers and fellow students (e.g. Reinsvold and Cochran 2012; Rop 2003). Teachers therefore seem to require support to teach science in a “student question-driven classroom” (Shodell 1995, p.278). In order to design appropriate support for teachers, we first examine the process of student questioning, the challenges it poses for teachers, the design principles that support teacher guidance and the support that visual tools might offer. In the next section, we describe the scenario that was developed on the basis of these findings from the literature.

Challenges in Teacher Guidance of Student Questioning

In general, questioning can be described as a process that consists of three successive phases: (a) generating, (b) formulating and (c) answering questions (cf. Van der Meij 1994). In the generating phase, students become aware of a need or possibility to ask a question, caused, for example, by an experience of perplexity or cognitive disequilibrium, and then brainstorm about possible questions to ask. In the formulating phase, students specify their need for information, reformulate their questions when necessary and decide which questions to pursue. In the third phase, that of answering the question, students consult available resources and/or conduct inquiry activities. Although students are the questioners, teachers can support students at each phase.

In the generating phase, teachers can support student questioning by activating and extending students’ prior knowledge and allowing them to ask questions that arise from personal interest (Stokhof et al. 2017). Zeegers (2002) finds that a supportive classroom culture is a prerequisite for question generation. Teachers can enhance this culture by modelling an open stance of inquiry (Commeyras 1995). Additionally, Keys (1998) shows that when students perceive topics to be relevant to their personal lives, they are motivated to raise questions. Furthermore, group work seems to support question generation by facilitating the exchange of ideas and providing a sense of security, especially in small group interactions (Baumfield and Mroz 2002). Finally, prompts and visual tools are effective when they (a) evoke cognitive conflict or a sense of wonderment, (b) offer students the opportunity to think freely, and (c) visually support the exchange of ideas and questions (Hakkarainen 2003).

From a curricular perspective, the challenge at this phase is to align question generation to curricular goals (Stokhof et al. 2017). Spontaneous student questioning is generally unfocussed and does not necessarily address the key issues in the domain or contribute to extending students’ conceptual structures (De Vries et al. 2008). Although textbook curricula offer conceptual structure, they do not allow for much student questioning (Rop 2002). Presenting a core curriculum that consists of a limited number of interrelated key concepts, which represent the essential characteristics of the subject, might offer the conceptual focus to align question generation with the curriculum (cf. Scardamalia and Bereiter 2006).

In the formulating phase, teachers usually need to mediate initially unclear and un-investigable questions into effective student questions (Stokhof et al. 2017). Van Tassel (2001) finds that question mediation seems to require question clarification, modelling and feedback. Question formulation is fostered by a classroom culture of shared responsibility where students raise and discuss their questions collectively (Chin and Kayalvizhi 2002). Zhang et al. (2007) show that student collaboration in formulating questions increases diversity and supports the mutual adoption of questions. From a curricular perspective, all questions should be evaluated and mediated for their potential to attain curriculum goals. Beck (1998) observes that when properly valued and guided, all student questions can become valuable contributions to the curriculum.

With regard to the answering phase, Hakkarainen (2003) suggests that teachers should be aware of the progressive nature of student questioning, because fact-seeking questions appear to evolve towards more profound questioning over time. Progressive inquiry emerges when answers to questions evoke new follow-up questions and thus start threads of inquiry (Zhang et al. 2007). Teachers can support progressive inquiry by activating and extending prior knowledge, pointing out important ideas and seeking questions (Martinello 1998). The most effective approach to sustaining progressive inquiry seems to be a collective effort of teachers and students, who share and discuss questions together and build upon each other’s questions and answers, as shown by Lehrer et al. (2000). These authors found that a Grade 1 class that was willing and able to explore the process of decomposition in compost columns over a whole year sustained progressive inquiry by exchanging observations, ideas, questions and answers. Visual tools can support the answering phase by providing a collaborative common workspace for sharing and elaborating on questions and answers (Zhang et al. 2007). From a curricular perspective, such a collaborative workspace visualises how progressive inquiry can cover the core curriculum. To realise effective student questioning, educational design should support teachers in balancing student autonomy with curricular goals.

Design Principles to Support Teacher Guidance

Four general design principles emerged from an extensive literature review on guiding effective student questioning: (1) define conceptual focus in a core curriculum, (2) support question generation by acknowledging potential in all questions, (3) establish a sense of shared responsibility to collectively cover a core curriculum, and (4) visualise inquiry and its relation to the curriculum (Stokhof et al. 2017). First, guiding effective student questioning is likely to require a clear but flexible conceptual focus. A core curriculum supports teachers in setting curricular goals and in making an inventory of students’ prior knowledge, while simultaneously leaving room for diversity in student questions. Second, supportive teachers are needed who welcome all questions and recognise their potential. Third, peer collaboration and shared responsibility enhance the generation, formulation and answering of questions. Peer guidance can help students exchange prior knowledge, compare and improve questions, and share and discuss answers. Fourth, visualisation seems to support all phases of the questioning process. By creating a shared point of reference, visual tools can help students become aware of their prior knowledge and interests, relate questions to each other and to the curriculum, and exchange their answers. Moreover, visualising and discussing learning outcomes can evoke new questions that lead to progressive inquiry.

Building on the four design principles, we developed a principle-based scenario for teachers to guide effective student questioning. Given the differences in context and content between schools and their curricula, teachers should be able to adapt this scenario to their own specific classroom needs. Therefore, our principle-based scenario aims to offer flexible support by providing a lesson plan that structures the process of student questioning but leaves the exact content open (cf. Zhang et al. 2011). The principle-based scenario was expected to provide freedom for student questioning while offering a structure for attaining curricular goals.

Visual Support for Teacher Guidance

An essential component of the scenario is the visual support for guiding the questioning process. Specific requirements for such a visual tool were identified in the literature (Stokhof et al. 2017). Simple visual tools, such as posters or bulletin boards, merely visualise the listing, exchange and categorisation of questions. These simple tools help students remember, share and compare their questions, and they can help to identify subtopics and act as a stimulus for further questioning (e.g. Van Tassel 2001). More advanced visual tools also support the refinement of questions. For example, when teachers used a T-bone chart to visualise which student questions met the required criteria and discussed their quality, students began to ask higher-level questions (Di Teodoro et al. 2011). Moreover, advanced visual tools also visualise the exchange of findings and the transformation of individual answers into collective knowledge. For example, the driving question board (DQB) supported students not only in categorising their questions into specific subcategories but also in visualising the relations between all findings, which helped them to learn about the whole topic under study (Weizman et al. 2008). Complex visual tools offer even more opportunities to support student questioning. They are not only platforms for recording and sharing questions and findings but also offer a flexible, adaptable structure for emergent ideas and new lines of inquiry (Stokhof et al. 2017). Moreover, complex visual tools support both a sense of student autonomy, by offering opportunities to raise and answer questions of personal interest, and a sense of collective responsibility, by visualising and monitoring collective knowledge development. An example of such a complex visual tool is Knowledge Forum (Zhang et al. 2007). This digital platform is based on the knowledge building principles of Scardamalia and Bereiter (2006) and visually supports the exchange, discussion and elaboration of ideas. Knowledge Forum consists of a digital database in which students post their ideas as “notes”, with the aim of stimulating their peers to respond with questions, suggestions, comments or answers (Zhang et al. 2007). Although this platform supports student collaboration and collective knowledge construction, it was not specifically designed to support teachers in guiding effective student questioning.

A complex visual tool seemed most appropriate for the scenario because teachers needed a flexible, adaptable tool that supported them in guiding both individual student questioning and collective knowledge building. However, the visual tool also had to be easy for teachers and students in primary education to use; otherwise, it would most likely not be adopted (Rogers 2003).

After careful consideration, digital mind mapping was selected as the visual tool for the scenario. A mind map is a radial, branch-like visual organiser in which concepts are structured hierarchically or associatively (Buzan and Buzan 2006). Research has shown that mind maps have the features of a complex visual tool and are suitable for students in primary education. Furthermore, mind maps have five specific characteristics that make them particularly suitable for this scenario. First, Näykki and Järvelä (2008) have shown that mind maps support recording, exchanging and comparing information. Second, Eppler (2006) reported that mind maps have a flexible structure in which relations between concepts are easily visualised. Third, digital mind maps in particular support quick elaborations and allow for continuous alterations in their conceptual structure (Eppler 2006). Fourth, Tergan (2005) reported that digital mind maps can be used as data repositories in which new information can be stored and exchanged. Finally, only a limited set of rules is required for constructing a mind map: branch out from a central theme, use one word on each branch, split branches at the end, place text on top and use colour consistently (Buzan and Buzan 2006). Merchie and Van Keer (2012) have shown that primary school students can learn and apply these rules with relative ease.

Because digital mind mapping has the features of a complex visual tool, it was hypothesised that it would support the generating, formulating and answering of student questions. Further, it was assumed that recording, sharing and comparing students’ prior knowledge in a mind map would support question generation. When students become aware of the conceptual structure of their knowledge, new wonderments might be elicited and new interests raised (Hakkarainen 2003). Mind maps were also expected to support question formulation by visualising and discussing criteria such as the relevance of questions and their contribution to the curriculum. The relevance of questions and their contribution to expanding knowledge of the topic could be discussed by locating them in the conceptual structure of the mind map. Less relevant questions are more likely to be placed on the outer branches of the mind map and might only add new information or examples on minor details. Highly relevant questions often address the relations between key concepts and might refine the conceptual structure of the mind map. Finally, mind maps were also expected to support answering questions because knowledge development can be made visible by adding answers and elaborating the mind map. Students might thus become aware of the contributions of their questions to the collective knowledge, which could foster a shared sense of responsibility for answering the questions and potentially even raise new questions (e.g. Zhang et al. 2007).

Design of the Scenario

Based on the four design principles, a scenario to guide effective student questioning was developed. It consisted of a teacher preparation phase, three phases of questioning and an evaluation phase. This sequence of phases is similar to that of “an interactive approach to science”, as developed by Biddulph and Osborne (1984). In each phase, mind mapping was used to visualise the core curriculum and the collective process of questioning and answering.

In phase 1, the teachers prepare a core curriculum around a chosen central topic. The intended output is a visualised core curriculum represented as an expert mind map. The expert mind map serves primarily as a point of reference for teachers to guide student questioning. To allow for optimal student autonomy, teachers therefore use the expert mind map only implicitly to structure and support student input in later phases. Teachers also prepare an introductory activity that raises students’ interest in the topic and activates their prior knowledge about important concepts and issues.

The aim of phase 2 is to activate and record students’ prior knowledge and to prompt students to generate questions. First, the topic is introduced to the whole class by means of an activity that raises interest and activates prior knowledge, for example by demonstrating an experiment or discussing an ambiguous claim. Students are then asked to individually note all the concepts they associate with the topic. They subsequently exchange their notes in small groups before sharing them with the whole class by making a collaborative inventory of concepts in an unstructured field of words. Before structuring the collective prior knowledge, students are requested to record their individual prior knowledge in an individual mind map. Teachers then support students in structuring the field of words into clusters and, subsequently, into mind map branches, alternating between small group work and whole class discussion. Together, all mind map branches form a classroom mind map that visualises collective conceptual prior knowledge as a structure of key concepts, examples, details and their mutual relations. Finally, students are presented with a question-focus, which is a prompt in the form of a statement or visual aid that attracts and focuses student attention and stimulates questioning (Rothstein and Santana 2011). Prompted by a question-focus, students brainstorm in small groups about potential questions. Every student is invited to generate as many questions as they can think of, and all input is recorded.

In phase 3, student questions are exchanged, evaluated, selected and reformulated. First, students in various groupings discuss the relevance and learning potential of the questions, using their classroom mind map as a shared point of reference. The most relevant and promising questions are selected during classroom discussion and, when necessary, further clarified and reformulated by students with support from the teacher. Finally, the selected questions are visualised in the classroom mind map and each student adopts one question for further inquiry.

In phase 4, the selected and adopted student questions are answered. Students investigate questions individually or in dyads. Some questions are investigated using primary sources, such as performing an experiment, making observations, collecting data on a fieldtrip or interviewing an expert. Other questions are explored with secondary sources such as dictionaries, encyclopaedias, books, websites or video. Students use question worksheets to record: their question; which concept in the classroom mind map it addresses; a prediction for an answer; which resources might be supportive; and what (preliminary) answers have been found. Students present their answers to their peers, and these are subsequently discussed with the whole class with the aim of exchanging learning outcomes and evoking possible follow-up questions. To visualise collective knowledge construction, answers are also integrated in the classroom mind map by either elaborating or restructuring it. Ideally, new follow-up questions emerge when discussing the answers, and students can adopt these questions by starting a new cycle of inquiry.

Finally, in phase 5, learning outcomes are evaluated. By comparing the expert mind map with the final classroom mind map, teachers and students can evaluate the degree to which the core curriculum has been covered. Furthermore, students construct a post-test individual mind map; they are provided with pencil and paper and allowed 45 min to visualise their knowledge. By comparing the pre- and post-test individual mind maps with the expert mind map, teachers and students can assess individual learning outcomes and determine the extent to which all students have attained the curriculum goals.

Testing the Scenario

To assess the value of the scenario for guiding effective student questioning, both structure fidelity and process fidelity of implementation were measured (cf. O’Donnell 2008). Structure fidelity describes the degree to which teachers worked with the scenario. It is operationalised as adherence, the extent to which teachers perform the suggested activities in the scenario as intended, and duration, the number, length or frequency of the performed activities (Mowbray et al. 2003). Process fidelity describes how teachers perceived the support of mind mapping in the scenario for guiding effective student questioning; it is operationalised in the variables relevance, practicality and effectiveness. Relevance refers to the teachers’ perceptions that mind mapping addressed important challenges in guiding student questioning (Nieveen 1999). Practicality consists of the teachers’ perceptions that working with mind mapping was possible within the practical limitations of time, means and knowledge (Nieveen 2009). Effectiveness refers to the perceived support of mind mapping for realising effective student questioning (Doyle and Ponder 1977).

Although process fidelity is the focus of this study, the degree of structure fidelity is taken into account with the aim of relating the teacher’s performance to his or her perceptions and to make comparisons between cases. Taken together, the three process variables assess the quality of the scenario and serve to answer the following research question: What is the relevance, practicality and effectiveness of digital mind mapping in a principle-based scenario for guiding effective student questioning?

Method

The research was set up as a multiple case design study in which a prototype of a scenario to support guidance of effective student questioning was developed, implemented and evaluated in close collaboration with practitioners in primary education (McKenney and Reeves 2012). The study aims to evaluate the process of implementation of the prototype in order to improve it.

Participants

The study participants comprised 12 teachers and their 268 students from grades 3–6, distributed over nine classrooms in two primary schools in a suburban district in the Netherlands. The teachers, five men and seven women, were aged 28 to 56 years. All were experienced teachers with between 10 and 32 years of teaching experience. Most teachers worked full-time, but five worked part-time, 2 to 4 days a week. Each classroom was regarded as a separate case, giving nine cases in total. Cases 1–9 were selected, first, because their teachers had expressed a need for support in guiding effective student questioning and, second, because they were able and willing to test the scenario from the perspective of end-users (McKenney and Reeves 2012).

The scenario was tested in the social science curriculum, which is mandatory in Dutch primary education and comprises subjects such as history, geography, physics and biology. Teachers in both schools taught project-based social science in periods of 6 to 8 weeks but had no previous experience with student questioning. Teachers in school A had some experience in using mind maps to visualise learning content. All cases were equipped with the I-Mind Map 6™ software and an interactive whiteboard (IWB) to project and manipulate computer images on a large touchscreen for the whole class.

Training

All teachers were trained in two preparatory sessions. In the first 2-h session, the teachers were informed about the general steps in the scenario; they practised and discussed the phases of generating, formulating and evaluating questions and explored how the scenario could be implemented in their specific classrooms. In the second 2-h session, the teachers collectively designed an expert mind map and introductory activities. The topics chosen by school A were “health” for a combined grade 3–4 class and “the river” for grades 5 and 6. School B selected the topic “my body” for six combined grade 4–5–6 classes.

Data Collection and Analyses

Data were collected during a 6-week period in the spring of 2014. In each case, all classroom activities from phases 2 to 5 of the scenario were video-recorded. All participating teachers took part in the collective design sessions in phase 1, which were audio-recorded. After phase 5 was completed, individual semistructured interviews were held with all participating teachers. The interviews focused on teachers’ perceptions of the relevance, practicality and effectiveness of the five phases of the scenario. For example, teachers were asked about their perceptions of the effectiveness of phase 2: “To what extent do you consider making a classroom mind map to be effective as an introduction to the topic?” An overview of all interview questions can be found in Appendix 1. To triangulate the video and audio data, classroom products were collected, such as the individual and classroom mind maps produced in the various phases of the scenario. In addition, we collected pupils’ question worksheets, which recorded the questions they posed and the answers they found.

The analysis took account of several variables for structure fidelity and process fidelity (Table 1). Structure fidelity was determined first. Adherence was analysed by observing the video data using a checklist of suggested activities for each phase (Appendix 2). To assess interrater reliability, approximately 20% of the video recordings were independently coded by two researchers, yielding an intercoder agreement of κ = 0.90 for the sample. After the differences had been discussed, the first author coded the remainder of the video data. For most activities, the video data on adherence could also be triangulated with the product collection. For example, multiple versions of the classroom mind map showing increasing elaboration confirm its use in phase 4. Duration was measured by logging the minutes spent on the various activities in the videos. The total time spent on the scenario in each case for each phase was then calculated, with totals rounded up to the nearest 5 min for easy comparison.
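The κ values reported here are agreement statistics between two coders, but the text does not name the exact statistic. Assuming unweighted Cohen's kappa, the usual choice for two raters coding the same items, the sketch below shows how κ = (p_o − p_e) / (1 − p_e) corrects the raw proportion of agreement p_o for the agreement p_e expected by chance. The function name and the ten checklist codes are hypothetical illustrations, not the study's data or software.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two raters who coded the same items.

    kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed proportion of
    agreement and p_e is the agreement expected by chance from each rater's
    marginal category frequencies.
    """
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must code the same non-empty list of items")
    n = len(rater_a)
    # Observed agreement: share of items given the same code by both raters.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: sum over categories of the product of marginal shares.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a.keys() | freq_b.keys()) / n**2
    if p_e == 1:
        # Both raters used one identical category throughout; kappa is
        # undefined here, so this sketch returns 1.0 by convention.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


# Hypothetical adherence codes for ten checklist items ("y" = activity observed).
rater_1 = ["y", "y", "y", "y", "y", "y", "y", "n", "n", "n"]
rater_2 = ["y", "y", "y", "y", "y", "y", "n", "n", "n", "n"]
kappa = cohens_kappa(rater_1, rater_2)
print(round(kappa, 3))  # p_o = 0.9 and p_e = 0.54, so kappa = 0.36 / 0.46
```

With these made-up codes the raters agree on 9 of 10 items, yet κ is only about 0.78 because both raters mostly chose "y" and would often agree by chance. This chance correction is why κ, not raw agreement, is reported. scikit-learn's `sklearn.metrics.cohen_kappa_score(y1, y2)` computes the same unweighted statistic.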

Process fidelity was mainly determined by coding the transcripts of the teacher interviews and the design sessions. The variables relevance, practicality and effectiveness (Table 1) were operationalised as coding categories in an analysis matrix, which was used to determine for each transcript segment the phase to which it referred, the variable addressed and whether the perceived value was positive, negative or mixed (Appendix 3). To assess the interrater reliability of this matrix, two raters independently used MAXQDA11™ software to score 20% of the interview transcripts. The average κ across all coding categories was 0.83, indicating strong agreement between raters. The first author then coded the remainder of the transcripts using MAXQDA11™. The coded data were then analysed qualitatively to identify trends, similarities, differences and peculiarities for each coding category.
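The text does not say how coded segments were condensed into the single positive, negative or mixed rating per case, phase and variable that the results tables report. The sketch below assumes one plausible rule, consistent with how mixed ratings are described in the Results: a cell is mixed when a teacher's segments for that phase and variable include both positive and negative valences, or when any segment was itself coded as mixed. The `Segment` record, the `summarise` function and the example segments are hypothetical; ASCII `-` and `+/-` stand in for the tables' symbols.

```python
from collections import defaultdict
from typing import NamedTuple


class Segment(NamedTuple):
    """One coded transcript segment (hypothetical structure)."""
    case: int
    phase: int
    variable: str  # "relevance", "practicality" or "effectiveness"
    valence: str   # "+", "-" or "+/-"


def summarise(segments):
    """Collapse coded segments into one rating per (case, phase, variable)."""
    found = defaultdict(set)
    for seg in segments:
        found[(seg.case, seg.phase, seg.variable)].add(seg.valence)

    summary = {}
    for key, valences in found.items():
        if valences == {"+"}:
            summary[key] = "+"
        elif valences == {"-"}:
            summary[key] = "-"
        else:
            # Positive and negative segments together, or any segment coded mixed.
            summary[key] = "+/-"
    return summary


# Hypothetical coded segments, not data from the study.
coded = [
    Segment(1, 2, "relevance", "+"),
    Segment(1, 2, "relevance", "-"),
    Segment(1, 1, "relevance", "+"),
    Segment(4, 4, "effectiveness", "+"),
    Segment(4, 4, "effectiveness", "+"),
]
table = summarise(coded)
for (case, phase, variable), rating in sorted(table.items()):
    print(f"case {case}, phase {phase}, {variable}: {rating}")
```

Keeping the per-segment codes and deriving the summary cell afterwards means that the qualitative analysis can still return to the individual segments behind any mixed rating.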

Classroom products and video data were used to triangulate findings for the variables practicality and effectiveness. Classroom products such as question worksheets provided additional data on individual student questioning in phase 4. The development of classroom mind maps was analysed by comparing successive versions in terms of similarity of content and structure. In preparation for the interviews, teachers were asked to compare pre- and post-test student mind maps with their expert mind map and to determine the degree to which their curricular goals had been achieved. Teachers’ perceptions of student learning outcomes were discussed during the interviews. When the video data revealed that suggested activities were absent, this was also discussed during the interviews in relation to the teachers’ perceived practicality.

Results

The following discussion first considers the structure fidelity of the scenario, in terms of adherence and duration, before presenting findings on process fidelity, operationalised as relevance, practicality and effectiveness.

Structure Fidelity of Implementation

Table 2 shows observed adherence to all suggested activities of the scenario for each case. Phase 1 is not included because these preparatory meetings of the teachers were chaired by the first author and were therefore executed as intended. For phase 2, the data show that all teachers organised their students to collect and cluster prior knowledge in order to co-construct a classroom mind map. Furthermore, a question brainstorm was held in all cases, and students were asked to construct a pre-test individual mind map. All activities of phase 3 were observed in every case except case 5: owing to a malfunctioning camera, all video recordings for case 5 in phase 3 were lost, although the product collection confirms that this phase was executed. In phase 4, differences in adherence between cases became apparent. The question worksheet was not used in case 1. In cases 2 and 3, data on predicting answers were missing. The most remarkable difference in phase 4, however, was that the classroom mind map was not adapted or elaborated in cases 5 and 7, as confirmed by analysis of the classroom mind maps. Another remarkable finding was the relatively small number of follow-up questions in most cases, cases 4 and 9 being the exceptions. In phase 5, only three teachers evaluated the development of the classroom mind map with their students (cases 1, 3 and 4). Individual mind maps were not evaluated with the students as suggested, although almost all students made pre- and post-test mind maps. We conclude that, in general, the teachers adhered to the structure of the scenario, but adherence decreased in its later phases.

Duration, operationalised as the amount of time each case spent working on the scenario, is presented in Table 3. Over a 6-week period, teachers were scheduled to work on the scenario for approximately 3 h each week. Most time was spent on phase 4, in which students had to find or construct answers to their questions and subsequently present and discuss them in class. Although teachers discussed the development of the classroom mind map with their class in only three cases, all teachers allotted time for students to construct their individual mind maps as pre- and post-test in phase 5. When comparing cases, a significant difference was only observed for phase 4 in case 1.

Process Fidelity

How teachers perceived the relevance, practicality and effectiveness of mind mapping for guiding effective student questioning is summarised in Tables 4, 5, and 6. In many cases, teachers perceived a variable as either positive (+) or negative (−). However, for some variables in certain phases, teachers described both positive and negative aspects, which is indicated as mixed (+/−). For example, the teacher in case 1 considered it relevant for most pupils to make an inventory of their own prior knowledge in phase 2 but had some reservations about whether this would be suitable for certain pupils. Qualitative details and examples for each variable are presented below, phase by phase.

Perceived Relevance

All teachers perceived the preparation of an expert mind map in phase 1 as relevant because it addressed their need to acquire a conceptual overview of the topic (Table 4). Previously, teachers had mainly followed instructions from the manual for these projects, regarding the prescribed educational activities as the stepping stones for the curriculum. In so doing, however, the teachers had lacked an overview of what knowledge students were supposed to acquire from these activities. By exploring and discussing the topic, and selecting a core curriculum, teachers felt they could conceptually rise above a mere sequence of activities. As one teacher said, “I used to look several times a day [in the manual] to keep an overview [on which activities I am supposed to offer to the students], but since we made the expert mind map, I haven’t looked once”.

In phase 2, all but two teachers perceived making an inventory of students’ prior knowledge by means of a classroom mind map as relevant. Seven teachers mentioned that the classroom mind map addressed their need for an overview of students’ prior knowledge and offered a conceptual focus to elicit student questions. The other two teachers felt somewhat constrained in their teaching because they felt too much time was spent on “what was already known” when they would have liked to introduce new knowledge.

In phase 3, the teachers felt the need for an efficient method to guide student questioning towards curricular topics. In the past, most teachers had experienced guiding question formulation as both time-consuming and not always effective. All but two teachers perceived that question brainstorming produced a valuable reservoir of questions, from which many relevant questions for learning the curriculum could be selected.

With regard to phase 4, teachers expressed two needs: first, to support and monitor student progress in answering their questions and, second, to guide an effective exchange of learning outcomes. Teachers perceived their classroom mind maps as providing an overview of which questions were addressed by whom, but the mind maps did not specifically allow for monitoring students’ individual progress. To address this need to visualise the progress of individual students, one of the teachers invented a “monitor board”. On this board, every student placed his or her name card on the specific step in the questioning process he or she was working on: formulating questions, searching for information, processing information, preparing presentations or giving presentations. Four of the five colleagues in her school readily adopted this monitor board.

Phase 5 of the scenario was designed to support teachers in evaluating the individual and collective learning outcomes with their students. Teachers were encouraged to discuss the development of collective knowledge as visualised by versions of the classroom mind map or individual knowledge development as visualised in pre- and post-test student mind maps. In the interviews, all teachers stated that they perceived evaluating learning outcomes with mind maps to be relevant (Table 4).

Perceived Practicality

Phase 1 was perceived as practical because teachers managed to determine the core curriculum in an expert mind map in a single 2-h session. Some teachers indicated that they sometimes found it difficult to let go of their personal interpretations of the topic and to allow alternative perspectives on its conceptual structure, but all agreed the resulting discussion had been beneficial for their understanding (Table 5).

Constructing the classroom mind map in phase 2 was generally perceived as practical, especially when teachers found a balance between alternating whole class and small group work to keep students active and engaged. Teachers appreciated the possibility in the principle-based scenario to make “short-cut” decisions that could speed up the construction process. For example, as one teacher explained, “You can discuss for hours how to structure concepts in clusters, but you can also suggest [the names of] the clusters [in other words, give students the key concepts on the head branches of the mind map], and let the students figure out how to structure their concepts accordingly”.

Although most students needed teacher support when evaluating the quality of questions in phase 3, teachers perceived the classroom mind map as practical visual support for this discussion. The classroom mind map helped to visualise the relevance of a question for the curriculum and to estimate its potential learning outcome.

For the exchange of answers in phase 4, the classroom mind map was used in seven cases, although perceptions of its practicality differed among these teachers (Table 5). The four teachers who took it upon themselves to expand the classroom mind map struggled to find time to integrate the findings of the students. A complicating factor in these cases was that many students produced their answers and presentations only in the final weeks, so elaboration of the classroom mind map was delayed until the last moment. In cases 2, 4, and 6, the teachers made weekly alternating groups of students responsible for elaborating the classroom mind map. In cases 5 and 7, classroom mind maps were only used to relate questions to the curriculum and were not expanded. In case 5, this was a result of the prolonged absence of the regular teacher. In case 7, the teacher chose to organise an alternative exchange of findings by means of a “mini-conference”.

In contrast to the unanimously expressed need for evaluation in phase 5, only in cases 1, 3, and 4 did the teachers discuss the collective knowledge development with their students, as visible in versions of the classroom mind map. The individual knowledge development of students, which might become apparent by comparing pre- and post-test personal mind maps, was not discussed in any of the cases. Teachers explained that this was primarily due to time concerns, because they were still busy wrapping up the projects in the last week.

Perceived Effectiveness

Phase 1 was perceived as effective by all teachers because constructing an expert mind map not only deepened their understanding of the topic and enhanced their self-confidence in guiding student questions that addressed the topic but also provided practical experience for the upcoming process of constructing a classroom mind map together with students (Table 6).

The classroom mind map was considered by all teachers to be effective for visualising students’ collective prior knowledge in phase 2. In seven cases, the classroom mind map was perceived to be effective as a question focus for the students’ question brainstorm. In two cases, teachers chose objects and photomontages as alternative question foci. However, in these cases, teachers were somewhat dissatisfied with the resulting question output, classifying many questions as insufficiently focused on the topic.

In phase 3, teachers felt that generating, selecting and reformulating questions with the whole class was more effective than a one-to-one teacher-student approach. Moreover, by allowing students to adopt each other’s questions, all students were able to work on relevant questions of their own interest, even when they had difficulty formulating questions. The two teachers who had perceived their question brainstorm as less successful indicated that they struggled to support students in reformulating their questions, but that they had eventually succeeded in obtaining a sufficient number of relevant questions for students to choose from (Tables 7 and 8).

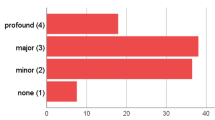

Although some teachers struggled to organise collective knowledge construction in the classroom mind map, all teachers generally regarded phase 4 as effective because all student questions were answered, exchanged and discussed. In cases 2, 3, 4, and 6, where the students’ collective responsibility for the knowledge construction was well organised, the classroom mind maps were elaborated more continuously and the numbers of added concepts were the highest among the cases, as shown in Fig. 1. The mean number of questions under investigation in each classroom was about 16, with outliers of 10 and 28 questions in cases 1 and 7 respectively (Fig. 1). Remarkably, only two teachers, from case 4 and case 6, expressed some concerns about the low number of follow-up questions and wondered why students seldom raised them.

In phase 5, the three teachers who did evaluate the development of collective knowledge discovered that many students were able to explain the contribution of specific questions to elaborating the classroom mind map. However, students also mentioned that without the example mind map in sight, it was sometimes hard to recollect all the specific concepts in the classroom mind map beyond the head branches.

The student learning outcomes of phase 5 were therefore primarily evaluated with the teachers during interviews to determine the teachers’ perception of effectiveness. In preparation for these interviews, the teachers were asked to compare student pre- and post-test mind maps with the expert mind map. To help teachers with this comparison, some indicators of mind map quality were suggested. As quantitative measures, teachers could compare the number of head branches, the number of concepts and the number of layers in branches; as qualitative measures, they could compare the use of key concepts and specific terminology from the expert mind map. During the interviews, teachers used examples to illustrate their perceptions of students’ learning progress. One of these examples is shown in Fig. 2. When carrying out the comparison, the teacher noticed that the number of concepts had doubled and that more terminology and key concepts from the expert mind map were embedded in the post-test mind map. For example, for the key concept “diseases”, the student added terms such as “hereditary”, “contagious” and “remedy”. In addition, the mind map structure became more refined and elaborated, as is visible in the increase in the number of layers per branch, from 2–3 levels to 3–6 levels.
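The quantitative indicators suggested to teachers (number of head branches, number of concepts, number of layers per branch) and the qualitative check on expert terminology can be made concrete with a small sketch. It assumes that a mind map is stored as a tree whose root is the central topic; the `Node` class, the function names and the example maps (including the topic “health”) are hypothetical illustrations, not the instrument used in this study, and terminology use is simplified to exact, case-insensitive label matches.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Node:
    """Hypothetical mind map node: a concept label plus its child branches."""
    label: str
    children: list[Node] = field(default_factory=list)


def count_head_branches(root: Node) -> int:
    # Head branches are the direct children of the central topic.
    return len(root.children)


def count_concepts(root: Node) -> int:
    # Every node below the central topic counts as one concept.
    return sum(1 + count_concepts(child) for child in root.children)


def branch_depths(root: Node) -> dict[str, int]:
    # Number of layers under each head branch, counting the head branch as layer 1.
    def depth(node: Node) -> int:
        return 1 + max((depth(child) for child in node.children), default=0)
    return {head.label: depth(head) for head in root.children}


def expert_terms_used(student: Node, expert: Node) -> set[str]:
    # Simplified qualitative check: expert-map labels that also occur in the
    # student's map (exact, case-insensitive), excluding the central topic.
    def labels(node: Node) -> set[str]:
        found = {node.label.lower()}
        for child in node.children:
            found |= labels(child)
        return found
    return (labels(student) & labels(expert)) - {student.label.lower()}


# Hypothetical example maps, loosely modelled on the "diseases" branch above.
expert = Node("health", [
    Node("diseases", [Node("hereditary"), Node("contagious"), Node("remedy")]),
    Node("nutrition"),
])
pre = Node("health", [
    Node("diseases", [Node("flu")]),
    Node("food", [Node("fruit")]),
])
post = Node("health", [
    Node("diseases", [Node("flu"), Node("hereditary"),
                      Node("contagious", [Node("virus")]), Node("remedy")]),
    Node("food", [Node("fruit"), Node("vitamins")]),
])

for name, student_map in [("pre", pre), ("post", post)]:
    print(name,
          count_head_branches(student_map),
          count_concepts(student_map),
          branch_depths(student_map),
          sorted(expert_terms_used(student_map, expert)))
```

Counting the root separately from its branches keeps the concept count aligned with what teachers would tally by hand, since the central topic is given rather than produced by the student.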

With the exception of case 1, teachers were generally satisfied with the progress students had made in their mind maps. Teachers frequently presented examples showing that students had embedded more key concepts in the post-test mind maps and that the structure of the mind map was often elaborated and refined. However, teachers expressed concerns that mind maps might not always represent the actual knowledge students possessed. In most cases, teachers identified one or two students who had great difficulty constructing mind maps but who had nonetheless shown profound knowledge of the topic during their presentations. Teachers therefore suggested that, although they considered mind mapping a useful method for assessing conceptual knowledge, it might not be a valid instrument for summative assessment of all students. In case 1, the teacher was dissatisfied with the learning outcomes of her students and was disappointed because many students failed to use some of the specific key concepts she had added to the classroom mind map. However, the results on duration showed that students in case 1 spent considerably less time researching their questions and exchanging answers than students in other cases.

Discussion

The aim of this study was to answer the following research question: What is the relevance, practicality and effectiveness of digital mind mapping in a principle-based scenario for guiding effective student questioning? Results show that teachers adhered to most of the suggested activities of the scenario, with the exception of evaluating learning outcomes with students, and managed to finish the project within the time available. Moreover, most teachers perceived mind mapping as relevant, practical and effective for guiding effective student questioning, although two teachers were critical of the practicality and effectiveness of mind mapping for all phases. We therefore conclude that mind mapping can support teachers in guiding student questions to contribute to curricular goals.

Although this study set out to test the functionality of mind mapping in a principle-based scenario, some more general observations can also be made about teacher guidance of effective student questioning. First, a thorough preparation in which teachers explore, discuss and determine a conceptual focus for student questioning was effective in boosting teachers’ self-confidence about guiding student questioning to contribute to curricular goals. This is in keeping with the findings of Zeegers (2002) and Diaz (2011), who reported that teachers’ self-efficacy to guide student questioning was correlated with their domain knowledge. Second, in this study, a visualised inventory of students’ prior knowledge was the most effective question focus for generating relevant student questions. To our knowledge, however, this finding has not been reported in previous literature, and more thorough research is required to validate it. Third, the use of question brainstorms, as suggested by Rothstein and Santana (2011), was highly effective for generating many student questions. Bringing students temporarily into a “question mode”, in which their only focus is on generating questions, seemed to elicit creativity and wonderment in student questioning. Question brainstorms might thus counteract the tendency, reported by Scardamalia and Bereiter (1992), for students to restrict themselves to fact-seeking questions that can easily be answered because they are concerned about how to conduct subsequent inquiries. Instead, the reservoir of questions produced in the question brainstorm allowed many students to adopt questions that interested them and challenged their answering skills. Fourth, making students mutually responsible for each other’s questions and answers was found in this study to be the most effective strategy to establish a continuous process of collective knowledge construction. This is congruent with the findings of Zhang et al. (2007), who reported that shared responsibility is an important precondition for effective collective knowledge construction. Fifth, although a collective visual platform, such as a classroom mind map, might support a mutual feeling of responsibility for knowledge construction, it is not sufficient in itself. Our results suggest that a culture of mutual responsibility also requires that teachers transfer some of their classroom control to the students. Hume (2001) and Harris et al. (2011) have reported similar observations. Finally, the evaluation of learning outcomes in mind maps was primarily carried out by the teachers with the aim of “assessment of learning”. Although this generally supported teachers in evaluating students’ learning outcomes, students themselves missed out on the opportunity to evaluate their own mind maps. Our finding that most teachers did not provide their pupils with feedback on the task is not uncommon, as Hattie and Timperley (2007) have shown. However, this is unfortunate, because emphasising student self-evaluation of learning activities in inquiry-based science units has been reported to raise students’ overall results by 17% (Bybee et al. 2006). Moreover, from the perspective of “assessment for learning”, mind maps may have great potential to make students aware of their evolving knowledge structures (cf. Black et al. 2004).

To correctly interpret the findings presented here, we would like to point out some methodological limitations of our study. First, participating teachers were willing and able to try out the scenario, which might have influenced their objectivity. On the other hand, evaluation by voluntary practitioners is recommended when testing educational designs in the prototyping phase, because non-voluntary participants might be unwilling to stretch the design to its full potential and thus to expose both its strengths and its flaws (Nieveen 2009). Second, the quality of the scenario was primarily measured by teachers’ perceptions. This is ecologically valid for evaluating teachers’ experiences, but teacher perception is a subjective measure of the quality of student learning outcomes, even though findings were triangulated with video recordings and product collection. Therefore, future research should also seek objective measures to determine the success of the scenario for student learning outcomes.

Another limitation, with regard to the aims of the study, was that none of the cases demonstrated progressive inquiry, the self-perpetuating process of questioning and answering. There are several possible explanations for this finding. First, the duration of the intervention might have been a factor. The projects in this study lasted only 6 weeks, whereas most studies that report progressive inquiry lasted for a semester or longer (Hakkarainen 2003; Lehrer et al. 2000). Second, questioning might have been perceived as a task rather than a stance. Students might have regarded asking questions as just another school assignment: once the answer was found, they might have thought that “the job was done”. In contrast, progressive inquiry requires that students perceive answers as stepping stones to new questions. Therefore, merely allowing students to raise their own questions might be insufficient for them to develop “questioning as a stance” (Cochran-Smith and Lytle 2009, p. 3). Third, the scenario contained no specific instructions for teachers to guide progressive inquiry. More research therefore seems necessary to establish how teachers can foster progressive inquiry during collective knowledge construction. Possible strategies include critical peer evaluation of answers, teacher modelling of progressive inquiry, and challenging students to present both answers and follow-up questions during the answering phase.

References

Baumfield, V., & Mroz, M. (2002). Investigating pupils’ questions in the primary classroom. Educational Research. doi:10.1080/00131880110107388.

Beck, T. A. (1998). Are there any questions? One teacher’s view of students and their questions in a fourth-grade classroom. Teaching and Teacher Education. doi:10.1016/S0742-051X(98)00035-3.

Bianchi, H., & Bell, R. (2008). The many levels of inquiry. Science and Children, 46(2), 26–29.

Biddulph, F. G. M. (1989). Children’s questions: their place in primary science education. Doctoral dissertation, University of Waikato, New Zealand. http://www.nzcer.org.nz/pdfs/T01219.pdf. Accessed 29 December 2013.

Biddulph, F., & Osborne, R. (1984). Making sense of our world: an interactive teaching approach. Hamilton, New Zealand: University of Waikato, Science Education Research Unit.

Black, P., Harrison, C., Lee, C., Marshall, B., & Wiliam, D. (2004). Working inside the black box: assessment for learning in the classroom. Phi Delta Kappan, 86(1), 8–21.

Brown, A. L. (1992). Design experiments: theoretical and methodological challenges in creating complex interventions in classroom settings. The Journal of the Learning Sciences. doi:10.1207/s15327809jls0202_2.

Buzan, T., & Buzan, B. (2006). The mind map book. Harlow: Pearson Education.

Bybee, R. W., Taylor, J. A., Gardner, A., Van Scotter, P., Powell, J. C., Westbrook, A., & Landes, N. (2006). The BSCS 5E instructional model: origins and effectiveness. Colorado Springs, CO: BSCS.

Chin, C., & Kayalvizhi, G. (2002). Posing problems for open investigations: what questions do pupils ask? Research in Science and Technological Education. doi:10.1080/0263514022000030499.

Chin, C., & Osborne, J. (2008). Students’ questions: a potential resource for teaching and learning science. Studies in Science Education. doi:10.1080/03057260701828101.

Chouinard, M. M., Harris, P. L., & Maratsos, M. P. (2007). Children’s questions: a mechanism for cognitive development. Monographs of the Society for Research in Child Development, 72(1), 1–129.

Cochran-Smith, M., & Lytle, S. L. (2009). Inquiry as stance: practitioner research for the next generation. New York, NY: Teachers College Press.

Commeyras, M. (1995). What can we learn from students’ questions? Theory Into Practice. doi:10.1080/00405849509543666.

De Vries, B., van der Meij, H., & Lazonder, A. W. (2008). Supporting reflective web searching in elementary schools. Computers in Human Behavior. doi:10.1016/j.chb.2007.01.021.

Diaz Jr., J. F. (2011). Examining student-generated questions in an elementary science classroom. Doctoral dissertation, University of Iowa. http://ir.uiowa.edu/cgi/viewcontent.cgi?article=2331&context=etd. Accessed 29 December 2013.

Dillon, J. T. (1988). The remedial status of student questioning. Journal of Curriculum Studies. doi:10.1080/0022027880200301.

Di Teodoro, S., Donders, S., Kemp-Davidson, J., Robertson, P., & Schuyler, L. (2011). Asking good questions: promoting greater understanding of mathematics through purposeful teacher and student questioning. The Canadian Journal of Action Research, 12(2), 18–29.

Doyle, W., & Ponder, G. (1977). The practicality ethic in teacher decision making. Interchange. doi:10.1007/BF01189290.

Eppler, M. J. (2006). A comparison between concept maps, mind maps, conceptual diagrams, and visual metaphors as complementary tools for knowledge construction and sharing. Information Visualization. doi:10.1057/palgrave.ivs.9500131.

Hakkarainen, K. (2003). Progressive inquiry in a computer-supported biology class. Journal of Research in Science Teaching. doi:10.1002/tea.10121.

Harris, C. J., Phillips, R. S., & Penuel, W. R. (2011). Examining teachers’ instructional moves aimed at developing students’ ideas and questions in learner-centered science classrooms. Journal of Science Teacher Education. doi:10.1007/s10972-011-9237-0.

Hattie, J., & Timperley, H. (2007). The power of feedback. Review of Educational Research. doi:10.3102/003465430298487.

Hume, K. (2001). Seeing shades of gray: developing a knowledge community through science. In G. Wells (Ed.), Action, talk, and text: Learning and teaching through inquiry (pp. 171–194). New York, NY: Teachers College Press.

Keys, C. W. (1998). A study of grade six students generating questions and plans for open-ended science investigations. Research in Science Education. doi:10.1007/BF02461565.

Lehrer, R., Carpenter, S., Schauble, L., & Putz, A. (2000). Designing classrooms that support inquiry. In J. Minstrell & E. van Zee (Eds.), Inquiring into inquiry learning and teaching in science (pp. 80–99). Washington, DC: American Association for the Advancement of Science.

Martinello, M. L. (1998). Learning to question for inquiry. The Educational Forum, 62(2), 164–171.

McKenney, S., & Reeves, T. (2012). Conducting educational design research. London: Routledge.

Merchie, E., & Van Keer, H. (2012). Spontaneous mind map use and learning from texts: the role of instruction and student characteristics. Procedia - Social and Behavioral Sciences. doi:10.1016/j.sbspro.2012.12.077.

Mowbray, C., Holter, M. C., Teague, G. B., & Bybee, D. (2003). Fidelity criteria: development, measurement, and validation. American Journal of Evaluation, 24, 315–340.

National Research Council. (1996). National science education standards. Washington, DC: National Academy Press.

Näykki, P., & Järvelä, S. (2008). How pictorial knowledge representations mediate collaborative knowledge construction in groups. Journal of Research on Technology in Education. doi:10.1080/15391523.2008.10782512.

Nieveen, N. (1999). Prototyping to reach product quality. In J. van den Akker, R. M. Branch, K. Gustafson, N. Nieveen, & T. Plomp (Eds.), Design approaches and tools in education and training (pp. 125–136). Dordrecht: Kluwer.

Nieveen, N. (2009). Formative evaluation in educational design research. In T. Plomp & N. Nieveen (Eds.), An introduction to educational design research (pp. 89–102). Enschede: SLO.

O’Donnell, C. L. (2008). Defining, conceptualizing, and measuring fidelity of implementation and its relationship to outcomes in K–12 curriculum intervention research. Review of Educational Research. doi:10.3102/0034654307313793.

Osborne, J., & Dillon, J. (2008). Science education in Europe: critical reflections. London: Nuffield Foundation.

Reinsvold, L. A., & Cochran, K. F. (2012). Power dynamics and questioning in elementary science classrooms. Journal of Science Teacher Education. doi:10.1007/s10972-011-9235-2.

Rogers, E. M. (2003). Diffusion of innovations (5th rev. ed.). New York, NY: Free Press.

Rop, C. J. (2002). The meaning of student inquiry questions: a teacher’s beliefs and responses. International Journal of Science Education. doi:10.1080/09500690110095294.

Rop, C. J. (2003). Spontaneous inquiry questions in high school chemistry classrooms: perceptions of a group of motivated learners. International Journal of Science Education. doi:10.1080/09500690210126496.

Rothstein, D., & Santana, L. (2011). Make just one change: teach students to ask their own questions. Cambridge, MA: Harvard Education Press.

Scardamalia, M., & Bereiter, C. (2006). Knowledge building: theory, pedagogy, and technology. In K. Sawyer (Ed.), Cambridge handbook of the learning sciences (pp. 97–118). New York, NY: Cambridge University Press.

Scardamalia, M., & Bereiter, C. (1992). Text-based and knowledge-based questioning by children. Cognition and Instruction, 9(3), 177–199.

Shodell, M. (1995). The question-driven classroom. American Biology Teacher, 57(5), 278–282.

Stokhof, H. J. M., De Vries, B., Martens, R., & Bastiaens, T. (2017). How to guide effective student questioning: a review of teacher guidance in primary education. Review of Education. doi:10.1002/rev3.3089.

Tergan, S. O. (2005). Digital concept maps for managing knowledge and information. Knowledge and Information Visualization. Lecture Notes in Computer Science. doi:10.1007/11510154_10.

Van der Meij, H. (1994). Student questioning: a componential analysis. Learning and Individual Differences. doi:10.1016/1041-6080(94)90007-8.

Van Loon, A. M., Ros, A., & Martens, R. (2012). Motivated learning with digital learning tasks: what about autonomy and structure? Educational Technology Research and Development. doi:10.1007/s11423-012-9267-0.

Van Tassel, M. A. (2001). Student inquiry in science: asking questions, building foundations and making connections. In G. Wells (Ed.), Action, talk, and text: learning and teaching through inquiry (pp. 41–59). New York, NY: Teachers College Press.

Weizman, A., Shwartz, Y., & Fortus, D. (2008). The driving question board. The Science Teacher, 75(8), 33–37.

Wells, G. (2001). The case for dialogic inquiry. In G. Wells (Ed.), Action, talk, and text: Learning and teaching through inquiry (pp. 71–194). New York, NY: Teachers College Press.

Zeegers, Y. (2002). Teacher praxis in the generation of students’ questions in primary science. Doctoral dissertation, Deakin University, Australia.

Zhang, J., Hong, H. Y., Scardamalia, M., Teo, C. L., & Morley, E. A. (2011). Sustaining knowledge building as a principle-based innovation at an elementary school. The Journal of the Learning Sciences. doi:10.1080/10508406.2011.528317.

Zhang, J., Scardamalia, M., Lamon, M., Messina, R., & Reeve, R. (2007). Socio-cognitive dynamics of knowledge building in the work of 9- and 10-year-olds. Educational Technology Research and Development. doi:10.1007/s11423-006-9019-0.


Appendices

Appendix 1: Questions for semi-structured teacher interviews

Phase 1

- What is for you the relevance of preparing an expert mind map?
- Do you consider making an expert mind map as practical? Please explain.
- To what extent do you consider making an expert mind map as effective for guiding student questioning? Please explain.

Phase 2

- What is for you the relevance of making an inventory of prior knowledge in a classroom mind map as an introduction to the topic?
- Do you consider making a classroom mind map as practical? Please explain.
- To what extent do you consider making a classroom mind map effective as an introduction to the topic? Please explain.

Phase 3

- What is for you the relevance of the components for phase 3 of the scenario?
  - The question brainstorm?
  - (Pre-)selecting questions?
  - Discussing the relevance, feasibility and learning potential of questions?
  - Reformulating questions?
  - Adopting questions?
- To what extent do you consider these components as practical? Please explain.
- Do you consider the components as effective for guiding student questioning? Please explain.

Phase 4

- What is for you the relevance of collective knowledge building for guiding student questioning? Do you consider the classroom mind map as suitable for this purpose?
- To what extent do you perceive mind mapping as practical for guiding collective knowledge building? Please explain.
- To what extent do you perceive mind mapping as effective for guiding collective knowledge building? Please explain.

Phase 5

- What is for you the relevance of evaluating collective and individual knowledge development? Do you consider the mind maps as suitable instruments for these purposes?
- To what extent do you perceive mind mapping as practical for evaluating collective and individual knowledge development? Please explain.
- To what extent do you perceive mind mapping as effective for evaluating collective and individual knowledge development? Please explain.

Appendix 2

Appendix 3

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Stokhof, H., de Vries, B., Bastiaens, T. et al. Mind Map Our Way into Effective Student Questioning: a Principle-Based Scenario. Res Sci Educ 49, 347–369 (2019). https://doi.org/10.1007/s11165-017-9625-3


DOI: https://doi.org/10.1007/s11165-017-9625-3